1. Introduction

Technology evolves rapidly, but the basic principles of human–computer interaction remain consistent. Software that neglects fundamental usability concerns, however technically sophisticated, risks frustrating users and ultimately losing market share. When developers incorporate usability principles into their designs, they are better equipped to create systems that align naturally with users’ thought processes and workflows, resulting in products that are both more appealing and more effective. Despite the well-known importance of usability, a considerable gap remains in the research [1]. While previous studies have examined usability evaluation from the perspective of users and their reviews [2,3], and recent advances have introduced AI-powered tools for UX evaluation [4], the developer community’s engagement with usability topics, the evolution of these discussions, and their prominence relative to other software development concerns remain unexplored. Recent work has highlighted ongoing challenges in developer usability practices [1] and collaboration issues [5], yet a systematic analysis of how developers discuss and prioritize usability within their communities is still lacking.

In this paper, our goal is to fill this gap by exploring usability discussions on Stack Overflow, one of the largest online Q&A platforms where developers find answers to their technical questions [6]. While developers engage across multiple platforms, including GitHub for collaborative development [7], Reddit for conversational discussions [8], and Discord for real-time community interaction [9], Stack Overflow remains the predominant platform for technical Q&A and problem-solving [10]. Prior research shows that Stack Overflow is the primary platform for developers seeking answers about programming languages, libraries, and technical implementation challenges [11], making it the most representative platform for analyzing developer engagement with usability topics. Unlike GitHub’s code-centric discussions or Reddit’s general programming discourse, Stack Overflow’s structured Q&A format and comprehensive tagging system are well suited to the systematic analysis of specific technical concerns such as usability [12].

Stack Overflow data have already provided valuable insights into how different software quality concerns are discussed and prioritized [13]. We extracted 894 Stack Overflow posts tagged with usability, examined temporal changes in discussion volume and engagement, and compared these patterns with those of other non-functional requirements (NFRs). We also investigated how usability challenges vary across programming languages and platforms. Specifically, we address the following research questions:

RQ1: How have usability discussions on Stack Overflow changed over the years?

This question examines the evolution of usability posts on Stack Overflow from 2008 to 2024. The goal is to identify patterns in engagement, including post frequency, view counts, and acceptance rates, to understand how developers’ interactions with usability topics have changed over time. We also consider external factors that may have influenced these patterns, such as the introduction of new technologies and the impact of other platforms.

RQ2: To what extent do engagement patterns differ between usability discussions and other non-functional requirements on Stack Overflow?

This question examines the role of usability among the non-functional requirements discussed on Stack Overflow. We use engagement metrics and thematic analysis to compare usability with other NFRs from ISO/IEC 25010, in order to understand how developers prioritize usability in their design considerations and to identify possible trade-offs with other quality attributes, along with their potential effect on user experience.

RQ3: What usability issues are most frequently discussed in relation to specific programming languages and platforms on Stack Overflow?

This question aims to identify the usability problems most frequently mentioned in connection with various platforms (e.g., web, mobile, desktop) and programming languages (e.g., Java, Python, JavaScript) on Stack Overflow. We also examine which languages are most commonly associated with usability issues and which types of challenges appear unique to specific contexts, providing a better understanding of practical usability difficulties across development environments.

The main contributions of this study are summarized as follows:

We perform the first comprehensive longitudinal analysis (2008–2024) of usability discussions on Stack Overflow, detailing their evolution over time.

We provide novel insights into how usability engagement and prioritization compare to other key non-functional requirements within developer discourse.

We apply a mixed-methods approach, yielding quantitative trends in usability discussions and qualitative insights into common usability issues associated with specific programming languages and platforms.

Our findings offer actionable implications for software developers, UX/UI designers, platform communities, and educators seeking to better integrate usability considerations throughout the development lifecycle.

This paper is structured as follows: Section 2 provides background on usability and NFRs in the context of Stack Overflow.

Section 3 reviews the related work on mining discussions in Stack Overflow and empirical studies on usability.

Section 4 describes the research methodology, including data collection and analysis techniques.

Section 5 presents the study results, detailing the findings for each research question.

Section 6 discusses the key takeaways and their broader implications.

Section 7 addresses potential threats to validity.

Finally, Section 8 summarizes the main findings and concludes the paper.

4. Study Design

In this study, we used a mixed-methods research methodology that combines quantitative analysis of engagement metrics with qualitative content analysis of posts, as described by Tashakkori et al. [64].

Figure 1 shows an overview of our methodology, which consists of three main steps: (1) extracting the Stack Overflow dataset, (2) cleaning and manually labeling the dataset, and (3) validating the coding results; we then use the resulting data to address each research question. We assessed the statistical significance of temporal trends and group differences using non-parametric tests (Spearman correlation, Mann–Whitney U test), which account for the non-normal distribution of engagement metrics, and we report effect sizes (Cohen’s d) [65].
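As a minimal illustration of the first of these tests, the sketch below computes Spearman’s rank correlation coefficient in plain Python: values are converted to ranks (tied values receive the mean of their positions), and the Pearson correlation of the ranks is taken. It is a sketch for exposition only; the paper does not state which software was used, and the p-values reported in Section 5.1 would in practice come from a library routine such as `scipy.stats.spearmanr`, which this sketch does not reproduce. The function names are hypothetical.

```python
from __future__ import annotations

from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j correspond to ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)
```

For example, `spearman_rho(years, view_counts)` over per-post values yields a coefficient of the kind reported for RQ1; a negative value means engagement tends to fall as the year increases.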

4.1. Data Collection

We searched Stack Overflow to collect all posts tagged with usability and, to answer RQ2, all posts tagged with seven other non-functional requirements from ISO/IEC 25010: accessibility, performance, compatibility, reliability, security, maintainability, and portability. We selected Stack Overflow as our primary data source for several methodological and practical reasons. Unlike conversational platforms such as Reddit or Discord, Stack Overflow’s structured question–answer format enables the systematic extraction and analysis of specific technical discussions [66], facilitating the reliable identification of usability-related content and engagement metrics. Its extensive tagging system allows usability discussions to be identified precisely through validated tags, which is more accurate than the keyword-based approaches required on unstructured platforms [67]. With over 22 million questions spanning 16 years (2008–2024), Stack Overflow provides the largest longitudinal dataset of developer technical discussions available [9]. In contrast, alternative platforms either lack sufficient historical data or focus on different types of interaction, such as GitHub’s code-centric discussions [7]. The 2024 Stack Overflow Developer Survey, with over 65,000 responses from developers worldwide, indicates broad representation across experience levels [9]. Extensive academic research supports Stack Overflow as a reliable source for understanding developer behavior and technical challenges [10,12].

We used the Stack Exchange Data Explorer tool (https://data.stackexchange.com/stackoverflow/query/edit/1914805, accessed on 17 July 2025) to query Stack Overflow for posts carrying these tags, without restricting their creation date. In the query, we used SQL wildcard pattern matching, applying the LIKE operator with the pattern “Usabilit%” to ensure that we collected all relevant posts. This allowed us to gather all variations of usability-related tags, such as “usability,” “usability-testing,” and other potential derivatives, in a single query. However, this method also collected some false-positive tags, such as “reusability” and “focusability,” which we manually removed from our dataset to keep the focus on genuine usability discussions. Stack Overflow uses a curated tagging system in which tags are standardized, so users typically select from existing tags rather than creating arbitrary variations such as “usabilitytesting” or “usability-testings.” This standardization made our data collection more consistent. Nevertheless, the matched tags still had to be verified manually to exclude irrelevant tags sharing the same word root.
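The wildcard matching described above can be emulated in a few lines of Python, which makes it easy to check which tags a given LIKE pattern would capture. The sketch below assumes a case-insensitive comparison (we assume the Data Explorer database uses a case-insensitive collation) and handles only the `%` and `_` wildcards. Note that a prefix-only pattern such as `Usabilit%` cannot match tags like “reusability” or “focusability”; those false positives arise only when the pattern also has a leading wildcard, as in `%usabilit%`, so the sketch contrasts both. The tag list is illustrative, not the collected data, and the function names are hypothetical.

```python
from __future__ import annotations

import re


def like_to_regex(pattern: str) -> re.Pattern[str]:
    """Translate a SQL LIKE pattern into a compiled regular expression.

    Only the standard % and _ wildcards are handled; every other character is
    matched literally. IGNORECASE mimics an assumed case-insensitive collation.
    """
    parts = []
    for ch in pattern:
        if ch == "%":
            parts.append(".*")  # % matches any sequence, including an empty one
        elif ch == "_":
            parts.append(".")  # _ matches exactly one character
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts), re.IGNORECASE | re.DOTALL)


def matching_tags(tags: list[str], pattern: str) -> list[str]:
    """Return the tags that a LIKE pattern would match (whole-string match)."""
    regex = like_to_regex(pattern)
    return [tag for tag in tags if regex.fullmatch(tag)]


# Illustrative tag names taken from the text, not the full tag inventory.
TAGS = ["usability", "usability-testing", "reusability", "focusability", "accessibility"]
FALSE_POSITIVES = {"reusability", "focusability"}  # removed manually after the query

prefix_only = matching_tags(TAGS, "Usabilit%")
substring = matching_tags(TAGS, "%usabilit%")
cleaned = [tag for tag in substring if tag not in FALSE_POSITIVES]
print(prefix_only)  # ['usability', 'usability-testing']
print(substring)    # also includes 'reusability' and 'focusability'
print(cleaned)      # ['usability', 'usability-testing']
```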

We also observed that some candidate tags, such as “usability-evaluation,” “heuristic-evaluation,” and “interface-evaluation,” did not have any posts.

Table 3 presents the collected posts from September 2008, when Stack Overflow was founded, to December 2024, the date the data were gathered.

4.2. Data Preprocessing

Following our initial data collection, we needed to verify the relevance of the collected posts, since keyword-based searching alone is insufficient for identifying genuine usability discussions. The textual nature of usability posts adds a further difficulty: semantic ambiguity. To illustrate this problem, consider the examples in Box 1 and Box 2.

In the first example (Box 1), the user is seeking a library or framework that can effectively explain the interface to new users. The post concerns presenting information in a user-friendly way: it is related to usability, yet the term “usability” is never used. In contrast, the post in Box 2 contains the keyword “usability” but is not about usability in the context of software design; it is a coding question about the performance of different ways of storing matrices when applying transformations. These examples show that relying entirely on keywords to identify usability-related posts can be misleading because of the semantic ambiguity of natural-language descriptions: a post that addresses usability issues may not contain the keyword “usability,” whereas a post that contains it may not concern usability in the context of software design. Identifying such posts therefore requires approaches that go beyond keyword-based analysis.

Two researchers with complementary expertise conducted the preprocessing. Each independently analyzed the 894 usability posts to identify and eliminate false positives and posts that mentioned usability only superficially. They read each post to determine whether it was genuinely related to software usability and contained substantive discussion, considering its context, the language used, and specific references to usability concepts. Through discussion and consensus, they eliminated 222 posts, including those incorrectly labeled as usability (such as posts about code “usability” in the sense of ease of programming) and those containing only brief or tangential references to usability without meaningful content for analysis. This brought our final dataset to 672 usability posts.

4.3. Data Labeling

After preprocessing, we labeled the 672 usability posts along the dimensions needed for our analysis. We extracted temporal information, including creation dates, and engagement metrics such as view counts, scores, answer rates, and accepted answer percentages. We classified each post by platform type as web development, desktop applications, mobile development, or platform-agnostic. We identified the programming languages mentioned in each post, including JavaScript, HTML, CSS, C#, Java, Python, and others. We also categorized the types of usability issues discussed, such as layout and responsiveness problems, form design challenges, navigation difficulties, and performance perception concerns.

Additional Validation. To ensure the validity and reliability of our preprocessing and labeling process, we implemented additional validation procedures. Following Aljedaani et al. [68,69], we randomly selected 9% of our dataset (81 of 894 posts) for analysis by an auxiliary expert: a Master’s student in Computer Science with more than one year of experience in software engineering and HCI research who is familiar with usability and accessibility evaluation methodologies. The auxiliary expert independently reviewed and labeled the selected posts to validate the consistency of our classification. This sample size corresponds to a 95% confidence level with a 6% margin of error. We measured inter-rater agreement using Cohen’s Kappa coefficient [70] and obtained 0.87, which indicates almost perfect agreement [71]. We also cross-verified our findings by comparing post metadata with textual content to ensure consistency and accuracy across our labeling dimensions.
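Both quantities in the paragraph above can be computed directly. The sketch below implements Cohen’s kappa for two raters (observed agreement corrected for the agreement expected from each rater’s label frequencies) and the margin of error for a proportion estimated from a sample of n items drawn from a finite population, using the normal approximation with a finite population correction. The margin depends on the assumed proportion p: with the conservative p = 0.5, 81 of 894 posts gives roughly ±10.4% at 95% confidence, while an assumed p of about 0.9 gives roughly ±6.2%. The text does not state which assumption underlies the reported figure, so both are shown; the function names and labels are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from math import sqrt


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal label frequencies.
    p_expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (p_observed - p_expected) / (1 - p_expected)


def margin_of_error(n: int, population: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation CI for a proportion (z = 1.96 for 95%),
    with the finite population correction sqrt((N - n) / (N - 1))."""
    fpc = sqrt((population - n) / (population - 1))
    return z * sqrt(p * (1 - p) / n) * fpc


# Hypothetical labels for four posts: "rel" = usability-related, "irr" = irrelevant.
researchers = ["rel", "rel", "irr", "irr"]
auxiliary_expert = ["rel", "irr", "irr", "irr"]
print(cohens_kappa(researchers, auxiliary_expert))  # 0.5
print(round(margin_of_error(81, 894), 3))           # 0.104
print(round(margin_of_error(81, 894, p=0.9), 3))    # 0.062
```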

5. Study Results

This section presents our findings for each research question.

5.1. RQ1: How Have Usability Discussions on Stack Overflow Changed over the Years?

We examined 672 usability posts from 2008 to 2024 to understand their temporal patterns. We analyzed creation dates, post frequencies, and engagement metrics, including view counts, scores, and accepted answer rates, to understand how community interaction changed. To put these findings in context, we also related the number of usability posts to overall activity on Stack Overflow.

Our examination of usability-related posts on Stack Overflow, from its inception in 2008 to 2024, reveals that developers’ usability discussions have changed substantially.

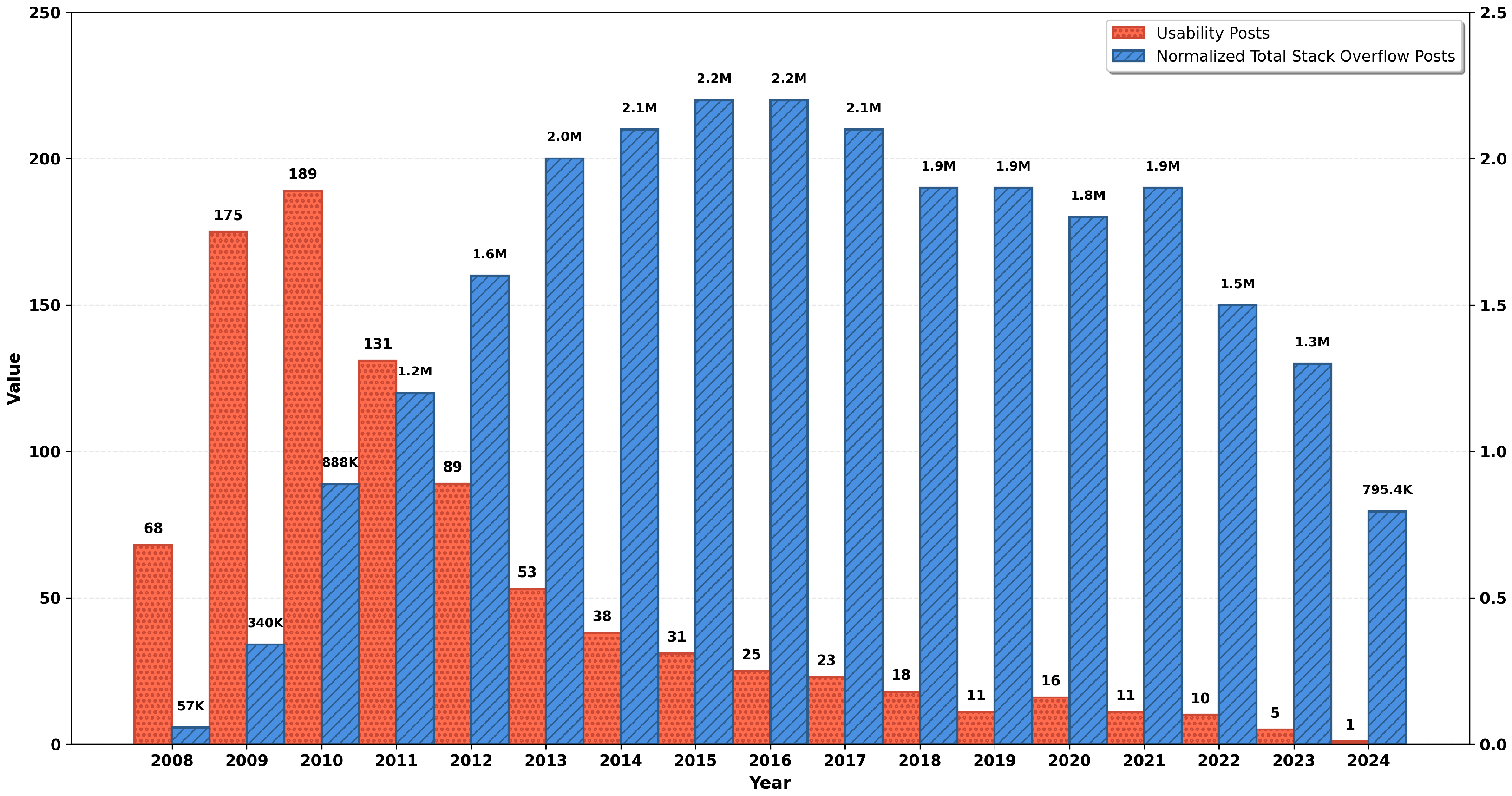

Figure 2 shows that usability discussions peaked in 2010 with 189 posts, preceded by substantial activity in 2009 (175 posts) and followed by 131 posts in 2011. From 2012 onward, usability discussions declined sharply, to 91 posts in 2012 and 54 posts in 2013, and continued to decline steadily thereafter. By 2014, the number of posts per year had fallen below 40, and activity in recent years has been minimal, with only 5 posts in 2023 and 1 post in 2024.

To determine whether this decline is specific to usability or reflects general Stack Overflow activity, we examined usability posts in relation to overall platform activity. As shown in Figure 2, Stack Overflow’s total post volume grew from 57.2 K posts in 2008 to a peak of approximately 2.2 M posts during 2015–2016. By contrast, the decline in usability posts began in 2012, while overall platform activity was still increasing. This difference in timing, together with the uneven rate of decline, suggests that usability discussions were shaped by factors beyond general platform trends.

We also analyzed engagement metrics for usability posts across this period.

Table 4 presents the average view count, score, and accepted answer rate of usability posts by year. The average view count declined by approximately 87% from 2008 to 2024. The average score, which reflects the community’s perceived value of a post, decreased from 6.4 in 2008 to 0.7 in 2024. The accepted answer rate also declined from the high rates of the initial years (consistently above 80% in 2008–2009) and became much more variable, and often lower, in the second half of the study period. (Note: data from 2022–2024 should be interpreted with caution because of the small number of posts in these years (n < 15).)
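A per-year table such as Table 4 amounts to grouping posts by creation year and averaging each metric. The sketch below assumes each post is a dictionary with hypothetical keys (`year`, `views`, `score`, `accepted`) and uses made-up posts; it also shows the relative-decline arithmetic behind percentages quoted in this study, e.g. a drop in average score from 6.4 to 0.7 is (6.4 − 0.7)/6.4 ≈ 89%.

```python
from collections import defaultdict
from statistics import mean


def yearly_engagement(posts):
    """Average engagement metrics per creation year.

    Each post is a dict with hypothetical keys: 'year', 'views', 'score', and
    'accepted' (True if the question has an accepted answer).
    """
    by_year = defaultdict(list)
    for post in posts:
        by_year[post["year"]].append(post)
    table = {}
    for year in sorted(by_year):
        group = by_year[year]
        table[year] = {
            "n": len(group),
            "avg_views": mean(p["views"] for p in group),
            "avg_score": mean(p["score"] for p in group),
            "accepted_pct": 100 * sum(p["accepted"] for p in group) / len(group),
        }
    return table


def percent_decline(start, end):
    """Relative decrease from start to end, in percent."""
    return 100 * (start - end) / start


# Made-up posts for illustration only.
posts = [
    {"year": 2008, "views": 1000, "score": 6, "accepted": True},
    {"year": 2008, "views": 500, "score": 3, "accepted": False},
    {"year": 2024, "views": 100, "score": 1, "accepted": False},
]
print(yearly_engagement(posts)[2008])
print(round(percent_decline(6.4, 0.7), 1))  # 89.1
```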

Content analysis shows a change in the focus of usability discussions. Early posts (2008–2012) frequently addressed fundamental interface design principles, while later posts tended to focus more on specific implementation challenges. We also observed an increasing overlap with accessibility discussions over time, with more recent usability posts often addressing both concerns simultaneously.

Statistical tests confirm that these temporal trends are significant. Spearman correlation analysis reveals significant negative correlations between year and engagement metrics: view count (ρ = −0.356, p < 0.001) and score (ρ = −0.410, p < 0.001). To quantify the magnitude of change, we compared the early period (2008–2011, n = 563) with the recent period (2020–2024, n = 42) using the Mann–Whitney U test. Early-period posts exhibited significantly higher engagement on all metrics: the median view count declined from 742 to 166 (U = 5447, p < 0.001), and the median score declined from 2.0 to 0.0 (U = 5828, p < 0.001). Cohen’s d indicated small but meaningful effects (d = 0.29 for view count, d = 0.42 for score), supporting the practical significance of the observed decline. Additionally, a chi-square test revealed a significant decrease in accepted answer rates, from 79.4% in the early period to 44.2% in the recent period (χ² = 27.88, df = 1, p < 0.001).
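The three tests reported above can be sketched with the Python standard library alone. The code below is an illustrative implementation, not the analysis script used for this study: the Mann–Whitney U test uses the tie-corrected normal approximation without a continuity correction and reports the smaller U; Cohen’s d uses the pooled sample standard deviation; and the chi-square test is Pearson’s statistic for a 2 × 2 table without Yates’s correction. Library implementations differ in these defaults (for example, `scipy.stats.mannwhitneyu` applies a continuity correction by default, and `scipy.stats.chi2_contingency` applies Yates’s correction to 2 × 2 tables), so p-values can differ slightly. All function names are hypothetical.

```python
from math import erfc, sqrt
from statistics import mean, variance


def tied_ranks(values):
    """1-based ranks with ties averaged, plus the size of each tie group."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks, tie_sizes = [0.0] * len(values), []
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U, p), where U is the smaller of the two U statistics.
    """
    n1, n2 = len(x), len(y)
    n = n1 + n2
    ranks, tie_sizes = tied_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
    sigma = sqrt(n1 * n2 / 12 * ((n + 1) - tie_term))
    z = (u - n1 * n2 / 2) / sigma
    return u, erfc(abs(z) / sqrt(2))


def cohens_d(x, y):
    """Mean difference divided by the pooled sample standard deviation."""
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    return (mean(x) - mean(y)) / sqrt(pooled_var)


def chi_square_2x2(table):
    """Pearson chi-square statistic for a 2x2 table (df = 1), no Yates correction."""
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    # With one degree of freedom chi2 = z**2, so the upper-tail p = erfc(sqrt(chi2 / 2)).
    return chi2, erfc(sqrt(chi2 / 2))
```

Here `x` would hold early-period values (e.g., per-post view counts) and `y` recent-period values, while the 2 × 2 table would hold, for each period, the counts of posts with and without an accepted answer.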

To address the potential bias that newer posts have had less time to accumulate views and engagement, we structured our temporal analysis to compare periods rather than individual years. As of our data collection in January 2025, posts in the early period (2008–2011) had accumulated engagement for at least 13 years, and the oldest posts in the recent period (2020–2024) for four to five years. Furthermore, the consistent decline observed across the intermediate years (2012–2019) indicates that the pattern is not merely an artifact of differential accumulation time but reflects a genuine shift in how developers engage with usability discussions on Stack Overflow.

5.2. RQ2: To What Extent Do Engagement Patterns Differ Between Usability Discussions and Other Non-Functional Requirements on Stack Overflow?

To investigate how usability discussions differ from those on other non-functional requirements (NFRs), we collected and compared questions tagged usability, accessibility, performance, security, reliability, compatibility, maintainability, and portability. We focused on multiple engagement metrics: total post volume, mean question score, fraction of questions answered, fraction of questions with an accepted answer, average number of answers, mean view count, comment count, and time to first response. For this research question, we used the unfiltered usability set (894 posts) so that the inclusion criteria were identical across all NFR categories, which were likewise unfiltered. Using the filtered usability subset would have changed the denominator for usability alone, yielding base rates that are not comparable.
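The engagement metrics listed above are simple aggregates over each tag’s questions. The sketch below assumes each question is a dictionary whose hypothetical keys mirror columns of the Data Explorer `Posts` table (`Score`, `ViewCount`, `AnswerCount`, `CommentCount`, `AcceptedAnswerId`); the questions are made up. Applying the same function to every tag, each with unfiltered posts, keeps the denominators comparable in the way this paragraph describes.

```python
from statistics import mean


def engagement_summary(questions):
    """Aggregate engagement metrics over one tag's questions.

    Each question is a dict with hypothetical keys mirroring Data Explorer
    Posts columns: 'score' (Score), 'views' (ViewCount), 'answers'
    (AnswerCount), 'comments' (CommentCount) and 'accepted_id'
    (AcceptedAnswerId, None when no answer was accepted).
    """
    if not questions:
        raise ValueError("no questions to summarize")
    n = len(questions)
    return {
        "posts": n,
        "mean_score": mean(q["score"] for q in questions),
        "answered_pct": 100 * sum(q["answers"] > 0 for q in questions) / n,
        "accepted_pct": 100 * sum(q["accepted_id"] is not None for q in questions) / n,
        "mean_answers": mean(q["answers"] for q in questions),
        "mean_views": mean(q["views"] for q in questions),
        "mean_comments": mean(q["comments"] for q in questions),
    }


# Two made-up questions for one tag.
usability_questions = [
    {"score": 4, "views": 300, "answers": 2, "comments": 1, "accepted_id": 11},
    {"score": 0, "views": 100, "answers": 0, "comments": 3, "accepted_id": None},
]
print(engagement_summary(usability_questions))
```

Calling the function once per tag yields one row per tag, analogous to a row of Table 5.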

Table 5 summarizes the basic engagement metrics across the eight NFR tags.

The data reveal several notable patterns. Performance (102,821 posts) and security (56,443 posts) dominate the discussion space, while usability (894 posts) and reliability (294 posts) have far fewer posts. Usability posts show the highest answer rate (94.5%), the highest acceptance rate (68.6%), and the highest average number of answers (3.3). Portability questions receive the highest average score (8.27) and the highest average view count (6,263).

We further analyzed the time to first answer for questions in each category, as shown in Table 6. These data show wide variation in community response times across topics: questions related to maintainability receive the fastest answers on average (8.1 days), whereas questions related to reliability take the longest (48.3 days).
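Time to first answer can be derived from question and answer creation timestamps. The sketch below (hypothetical function names, made-up timestamps) takes the earliest answer for each answered question, excludes unanswered questions, and reports both the mean and the median delay in days. The median is included here only as an illustration, not because the text reports it: a handful of very late first answers can pull the mean well above the typical delay, as in the example, where the mean is 10.5 days but the median is 1 day.

```python
from datetime import datetime
from statistics import mean, median


def days_to_first_answer(question_created, answer_times):
    """Days from question creation to its earliest answer, or None if unanswered."""
    if not answer_times:
        return None
    return (min(answer_times) - question_created).total_seconds() / 86400


def summarize_response_times(questions):
    """Mean and median days to first answer over answered questions only.

    `questions` is a list of (question_created, [answer_created, ...]) pairs.
    """
    delays = []
    for created, answers in questions:
        delay = days_to_first_answer(created, answers)
        if delay is not None:
            delays.append(delay)
    return {"answered": len(delays), "mean_days": mean(delays), "median_days": median(delays)}


questions = [
    (datetime(2020, 1, 1), [datetime(2020, 1, 2), datetime(2020, 1, 1, 12)]),  # 0.5 days
    (datetime(2020, 1, 1), [datetime(2020, 1, 31)]),                            # 30 days
    (datetime(2020, 1, 1), [datetime(2020, 1, 2)]),                             # 1 day
    (datetime(2020, 1, 1), []),                                                 # unanswered
]
print(summarize_response_times(questions))
# {'answered': 3, 'mean_days': 10.5, 'median_days': 1.0}
```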

5.3. RQ3: What Usability Issues Are Most Frequently Discussed in Relation to Specific Programming Languages and Platforms on Stack Overflow?

To identify the most frequently discussed usability issues related to specific programming languages and platforms, we conducted a detailed analysis of the usability posts in our dataset. We first categorized each post based on the programming languages and platforms mentioned. We then analyzed the content of these posts to identify common usability challenges within each programming context. This analysis helps us understand how usability concerns vary across different development environments and technologies.

We begin by presenting a comprehensive taxonomy of usability issue types identified across all posts.

Table 7 shows the distribution of primary usability issue categories in our dataset.

Interactive elements and controls dominate usability discussions, representing nearly one-quarter of all posts (24.0%). This category encompasses questions about custom widgets, dropdown menus, button design, and modal dialogs. Navigation and information architecture (12.8%) addresses how users find and access content, while form design and input (11.2%) and error handling and validation (9.7%) together account for over one-fifth of discussions. Accessibility and inclusion (7.9%) suggests a growing awareness of inclusive design needs. Mobile and touch interactions constitute 3.1% of posts.

Our analysis revealed significant variations in the frequency and types of usability issues discussed across different programming languages and platforms.

Table 8 presents the distribution of programming languages mentioned in usability posts. JavaScript emerged as the most frequently mentioned programming language in usability discussions, with 166 posts (24.7% of all usability posts). This was closely followed by HTML with 160 posts (23.8%) and CSS with 88 posts (13.1%). Other commonly mentioned languages included C# (48 posts, 7.1%), PHP (37 posts, 5.5%), Java (29 posts, 4.3%), and Python (24 posts, 3.6%).

When examining the platforms discussed in usability posts, we found that web development dominated the discussions, as shown in Table 9. Web platforms accounted for 303 posts (45.1% of all usability posts), followed by desktop applications with 148 posts (22.0%) and mobile platforms with 83 posts (12.4%). Interestingly, 138 posts (20.5%) were platform-agnostic, discussing general usability principles applicable across multiple environments.
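The percentages in Tables 8 and 9 are post counts divided by the 672 posts in the labeled dataset. The sketch below reproduces the platform shares from the counts reported above (the four platform counts sum to 672). It also shows, with made-up posts, why language shares need not sum to 100% if a post that mentions several languages is counted under each of them, as the multi-language posts discussed in this section suggest. The function names are hypothetical.

```python
from collections import Counter

TOTAL_POSTS = 672  # labeled usability posts after preprocessing

# Platform counts as reported in Table 9.
PLATFORMS = {"web": 303, "desktop": 148, "mobile": 83, "platform-agnostic": 138}


def shares(counts, total_posts):
    """Percentage of posts per label, rounded to one decimal place."""
    return {label: round(100 * count / total_posts, 1) for label, count in counts.items()}


def label_counts(post_labels):
    """Count posts per label when a post may carry several labels."""
    counts = Counter()
    for labels in post_labels:
        counts.update(set(labels))  # set(): a label counts at most once per post
    return counts


print(shares(PLATFORMS, TOTAL_POSTS))
# {'web': 45.1, 'desktop': 22.0, 'mobile': 12.4, 'platform-agnostic': 20.5}

# Made-up multi-language posts: three posts, five language mentions.
example = [["JavaScript", "HTML", "CSS"], ["HTML"], ["C#"]]
print(label_counts(example))
```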

Our analysis also identified distinct patterns in the types of usability issues discussed for different programming languages and platforms:

5.3.1. Web Development

Among the 303 web platform posts, layout and responsiveness issues featured prominently. These posts focused on creating adaptable interfaces across various screen sizes and devices. Common questions included handling complex layouts, implementing responsive design patterns, and solving CSS layout challenges. One representative example asked: “How to maintain usability of complex data tables on mobile screens without horizontal scrolling?”

Form design and validation represented another major theme in web usability discussions. These focused on creating intuitive forms, providing appropriate feedback during validation, and striking a balance between security and usability in form submissions. An illustrative post asked: “What’s the most user-friendly way to indicate required fields in a complex form without cluttering the interface?”

Navigation and information architecture also emerged as a significant concern, addressing menu design, navigation patterns, and organizing content to facilitate intuitive user journeys. A typical post asked: “Best practices for implementing breadcrumb navigation for deep website hierarchies?”

Performance perception discussions featured regularly in web usability posts. These questions focused on loading indicators, perceived performance, and techniques to make interfaces feel responsive even when processing is occurring. An example post asked: “How to implement skeleton screens in React to improve perceived loading performance?”

Accessibility and usability integration comprised another important area of web usability posts. These discussions explored the intersection of accessibility requirements and usability best practices, particularly in complex interactive components. An example post asked about “Making custom JavaScript dropdown components both accessible and usable for all users.”

5.3.2. Desktop Applications

Among the 148 desktop platform posts, controls and widgets dominated the discussions. These questions addressed designing and implementing custom controls, modifying standard controls for better usability, and creating consistent control behaviors. A representative post asked about “Creating a more usable multi-selection tree view in WPF.”

Window management and layout represented another major theme in desktop usability posts. These discussions explored the differences between MDI (Multiple Document Interface) and SDI (Single Document Interface), managing multiple windows, and organizing complex interfaces. An example post asked about the “Best approach for organizing multiple document editing in a Java Swing application.”

Keyboard navigation and shortcuts also played a prominent role in desktop usability discussions. These focused on implementing intuitive keyboard shortcuts, handling key conflicts, and designing for power users. A typical post asked about “Implementing customizable keyboard shortcuts in a C# desktop application.”

Application feedback and status discussions comprised another important area of desktop usability posts. These questions addressed progress indication, status bars, and communicating system state to users. An example post asked about “How to effectively communicate background task progress in a WinForms application.”

Installation and configuration usability represented an additional concern in desktop usability posts. These addressed first-run experiences, configuration interfaces, and simplified setup procedures. A typical post asked about “Designing a user-friendly configuration interface for a complex Java application.”

5.3.3. Mobile Development

Among the 83 mobile platform posts, several key themes emerged. Touch target size and spacing dominated these discussions, with questions addressing appropriate button sizes, handling small targets, and designing for different finger sizes. A representative post asked about “Best practices for touch target sizes in a space-constrained Android interface.”

Gesture recognition and feedback represented another significant area of discussion, centered on implementing intuitive gestures, providing appropriate feedback for gesture interactions, and handling gesture conflicts. An example post asked, “How to indicate the availability of swipe gestures in a mobile app?”

On-screen keyboards and input methods also featured prominently, addressing challenges with text input, keyboard appearances, and optimizing forms for mobile input. A typical post inquired about “Optimizing form input fields to work well with iOS predictive keyboard.”

Navigation patterns for small screens comprised another major theme, with questions focused on implementing hamburger menus, tab bars, and other navigation paradigms effectively. An example post asked about “Bottom tab bar vs hamburger menu for a content-heavy Android application.”

Performance and battery considerations rounded out the mobile discussions, exploring how to balance interactive features with performance and battery life. A representative post asked about “Implementing smooth animations in React Native without impacting battery life.”

The evolution of usability discussions within specific programming contexts also reveals shifting priorities over time. Early discussions (2008–2012) often focused on fundamental implementation challenges, such as creating responsive layouts and custom controls. More recent discussions increasingly address higher-level usability concerns, including information architecture, interaction design patterns, and the integration of usability with other quality attributes, like accessibility and performance.

Additionally, we observed that certain programming languages were more strongly associated with particular usability concerns. JavaScript discussions frequently addressed dynamic interface updates and interactive behaviors, whereas C# posts often focused on enterprise application usability patterns and complex form design. The substantial number of posts that mention multiple languages (particularly JavaScript/HTML/CSS combinations) highlights the interconnected nature of modern development and the need for usability solutions that work across technology stacks.

6. Discussion

This section interprets our findings in the context of the existing literature, explains the theoretical and practical significance of our results, and discusses the broader implications for software development practice and research.

6.1. Decline and Transformation of Usability Discussions

The 87% decline in average view counts and the 89% decline in average scores from 2008 to 2024 suggest that the broader developer community has become less inclined to seek out or engage with usability discussions on this platform. Statistical tests confirm that these patterns are significant (Spearman correlation, p < 0.001; Mann–Whitney U tests, p < 0.001) and therefore unlikely to be due to random variation. Our period-based temporal comparison is intended to mitigate potential accumulation bias, as detailed in Section 5.1. The decrease in scores indicates that newer usability questions are either considered less valuable or attract less positive interaction from users. The declining and increasingly variable accepted answer rates may suggest that more recent usability questions are harder to resolve to the asker’s satisfaction or that the expertise needed to answer them is less active on the platform.

This transformation in usability discussions may be attributed to several factors that mirror the broader evolution of software development. The early peak (2009–2011) overlaps with Stack Overflow’s initial growth phase and the widespread adoption of web technologies, suggesting that fundamental usability questions may have been comprehensively addressed during this formative period.

The subsequent decline may reflect the maturation of development tools and frameworks that have increasingly integrated usability considerations into their core functionality. Modern UI frameworks and design systems have standardized many interface patterns that previously required explicit discussion, potentially reducing the need for developers to seek guidance on basic usability implementation [72,73]. Additionally, other communities, such as Reddit and specialized user experience communities like User Experience Stack Exchange, may have provided alternative venues for usability discussions, leading to a migration of expertise and questions away from Stack Overflow.

The dramatic decline in usability discussions on Stack Overflow coincides with the rise of specialized UX communities, suggesting a redistribution rather than a disappearance of usability knowledge exchange. This pattern reflects broader specialization trends in software development, in which cross-cutting concerns increasingly find dedicated platforms [32]. As design systems and component libraries have standardized common patterns, the focus has shifted from basic implementation questions to more nuanced, context-specific challenges.

The temporal pattern also suggests a change in how usability knowledge is acquired and applied within development workflows. The integration of usability principles into development education, the availability of comprehensive design resources, and the establishment of organizational design systems may have reduced reliance on ad hoc community support for usability challenges. This shift is consistent with the increasing professionalization and specialization of software development, in which fundamental usability knowledge has been codified into development frameworks and may no longer require frequent discussion. Educators and practitioners should recognize that usability knowledge now flows through multiple channels (formal design systems, specialized communities such as User Experience Stack Exchange, and integrated documentation) rather than primarily through general programming forums.

The arrival of Large Language Models (LLMs) such as OpenAI’s ChatGPT, which became publicly available in late 2022, may influence future patterns of developer knowledge-seeking behavior [74]. However, the timing of our data is important for interpreting this potential influence. The pronounced decline in usability discussions began in 2012, a full decade before conversational AI systems became widely available, and continued steadily through 2022. Therefore, while LLMs may affect how developers seek usability guidance in the future, they cannot account for the historical decline observed in our analysis. The impact of LLMs on developer communities and traditional Q&A platforms warrants investigation in future studies that extend beyond our analysis period.

6.2. Usability as a Specialized Knowledge Domain

These findings reveal a complex picture of usability discussions on Stack Overflow. Although usability is a niche topic in terms of question volume, it stands out for its high resolution rates: the high answer and acceptance percentages suggest that when usability questions are asked, the community is exceptionally effective at providing satisfactory answers. Other niche topics follow a different pattern; portability, for example, is even less frequent yet garners the highest average scores and view counts. This indicates that the community values different types of specialized questions in different ways.

The varying response times across NFRs also provide insight into the platform’s knowledge landscape. The long delays in answering reliability questions may indicate a scarcity of active experts in that domain, whereas the quicker responses for topics such as usability and maintainability point to a more responsive and available community of specialists. Together, these patterns suggest that usability discussions on Stack Overflow form a highly efficient, albeit small, corner of the platform where specialized questions are resolved effectively.

Although the volume of usability questions is low compared with areas such as performance, the community’s engagement with them is distinctive: usability posts receive the highest answer rates and the highest acceptance rates, and they attract more answers per question than other non-functional requirements. This aligns with findings from Ahmad et al. [19], who noted that specialized knowledge in non-functional requirements often clusters within distinct subcommunities.
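The engagement measures compared across non-functional requirements (answer rate, acceptance rate, and answers per question) can be computed from post records along the lines of the minimal Python sketch below. It assumes the common definitions, with the answer rate as the share of questions that have at least one answer and the acceptance rate as the share that have an accepted answer; the study's own computation may differ in detail. The `Question` type and `engagement_rates` function are hypothetical; only the column names `AnswerCount` and `AcceptedAnswerId` come from the Stack Exchange data schema.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass
class Question:
    # Hypothetical record; fields mirror the Stack Exchange "Posts" columns
    # AnswerCount and AcceptedAnswerId.
    answer_count: int
    accepted_answer_id: Optional[int]  # None when no answer has been accepted


def engagement_rates(questions: Sequence[Question]) -> Dict[str, float]:
    """Answer rate, acceptance rate, and mean answers per question.

    Assumed definitions (not taken from the paper):
      answer rate     = share of questions with at least one answer
      acceptance rate = share of questions with an accepted answer
    """
    n = len(questions)
    if n == 0:
        raise ValueError("need at least one question")
    answered = sum(1 for q in questions if q.answer_count > 0)
    accepted = sum(1 for q in questions if q.accepted_answer_id is not None)
    total_answers = sum(q.answer_count for q in questions)
    return {
        "answer_rate": answered / n,
        "acceptance_rate": accepted / n,
        "answers_per_question": total_answers / n,
    }


# Toy data for four questions (not from the study's dataset).
sample = [Question(2, 101), Question(1, None), Question(0, None), Question(3, 205)]
print(engagement_rates(sample))
```

For the toy data, three of four questions are answered and two have an accepted answer, so the sketch reports an answer rate of 0.75, an acceptance rate of 0.5, and 1.5 answers per question.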

This phenomenon presents both challenges and opportunities. While the small number of questions may suggest a knowledge silo, the high-quality engagement and relatively fast response times indicate that the available expertise is highly responsive. Organizations seeking to build usability expertise might benefit from cultivating connections to these specialized communities rather than expecting such knowledge to naturally develop within general development teams.

6.3. Platform-Specific Usability Patterns

Our analysis reveals usability concerns across web, desktop, and mobile contexts that differ fundamentally, not merely in implementation details. Each platform has evolved distinct interaction paradigms: web developers prioritize responsive layouts and progressive enhancement; desktop application developers focus on workflow efficiency and keyboard control; and mobile developers emphasize touch targets and gesture recognition. The dominance of web technologies (JavaScript, HTML, CSS) in usability discussions reflects both the prevalence of web development and the distinctive usability challenges of web interfaces. Web developers must ensure that an interface works well on everything from a small smartphone to a large desktop monitor and accommodates both touch and mouse input, a challenge that developers working in more controlled environments face to a lesser degree. Additionally, web interfaces are often customer-facing, so their usability is directly tied to business success.

The different focus areas across platforms highlight how usability concerns are contextual and environment-specific. These different environments effectively represent distinct design languages and constraints. Desktop applications emphasize efficient interaction for professional users, with significant attention to keyboard shortcuts and complex controls. Mobile applications focus on touch interaction, space constraints, and simplified navigation patterns. Web applications must strike a balance between responsiveness across devices and rich interaction capabilities. These fundamental differences in interaction models and user expectations result in distinct usability challenges that necessitate specialized solutions.

The evolution of usability discussions within specific programming contexts mirrors the maturation of web and mobile technologies, as developers have transitioned from implementing basic interfaces to refining and optimizing more sophisticated user experiences. Different development ecosystems may cultivate distinct usability perspectives and priorities, influenced by both the technical capabilities of the language and the typical application domains where it is used.

Overall, usability discussions on Stack Overflow are highly contextualized by programming language and platform. The differences in usability concerns across these contexts suggest that usability knowledge and best practices must be tailored to specific development ecosystems rather than applied uniformly. This finding is consistent with research by Alexandrini et al. [62], who observed significant differences in usability requirements across development environments. This pattern challenges the notion of universal usability principles, suggesting instead that effective usability guidance must be contextualized within specific technological environments. Developers transitioning between platforms may therefore require explicit education in the different usability paradigms rather than assuming that their knowledge will transfer.

This finding has important implications for usability education and documentation. Rather than teaching general usability principles in isolation, more effective approaches might integrate usability guidance directly into programming language and platform documentation, addressing the specific challenges and patterns relevant to each context. Similarly, usability tools and evaluation methods might be more effective when tailored to specific development environments.

6.4. The Value of Longitudinal Perspective

Our longitudinal findings reveal patterns that cross-sectional studies cannot capture. All the studies summarized in Table 2 employ cross-sectional designs, analyzing usability issues at single time points or across a collection of applications without examining temporal evolution. While these studies provide valuable insights into prevalent usability problems at specific moments, they cannot reveal how developer engagement with usability has changed over time, how usability discussions compare to other non-functional requirements across different periods, or how the nature of usability challenges has evolved alongside technological advances.

For example, Hedegaard et al. [51] quantified usability information in online reviews from 2013, and Diniz et al. [2] identified usability heuristic violations in 200 mobile reviews from 2022. However, neither study could observe the temporal shift we document, from fundamental design principles (2008–2012) to framework-specific implementation challenges (2013–2024). Similarly, while studies such as Morgan et al. [3] and Alsanousi et al. [57] provide snapshots of usability concerns in specific application domains, our work shows how these concerns have evolved over 16 years and how they differ across development platforms.

The cross-sectional studies in Table 2 analyze user reviews to identify usability problems from the end-user perspective, whereas our study examines developer discussions seeking solutions to usability implementation challenges. This difference in perspective is significant: user reviews capture what users find problematic after release, while developer discussions on Stack Overflow reveal what challenges developers face during implementation. Our longitudinal approach to developer discussions thus provides a complementary and previously missing perspective on how usability knowledge is sought, shared, and applied within the development community over time.

6.5. Research-Practice Gap: The Absent User Voice

Our analysis reveals a striking disconnect between developers asking usability questions and actual user research practices. Among the 672 usability posts, 619 posts (92.1%) made no mention of any form of user research or testing. Only 21 posts (3.1%) cited user feedback, 18 posts (2.7%) mentioned user testing, and merely 9 posts (1.3%) referenced analytics or data to support their usability concerns. A negligible number mentioned A/B testing (2 posts, 0.3%) or accessibility testing (3 posts, 0.4%).
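The reported shares can be checked with a few lines of arithmetic. The Python sketch below recomputes each percentage from the counts given above, rounded to one decimal place as in the text. The counts sum exactly to 672, which is consistent with each post having been assigned to a single category; that is an inference from the numbers rather than a statement about the coding scheme.

```python
# Counts reported in this section for the 672 validated usability posts.
TOTAL_POSTS = 672
mentions = {
    "no user research or testing": 619,
    "user feedback": 21,
    "user testing": 18,
    "analytics or data": 9,
    "accessibility testing": 3,
    "A/B testing": 2,
}


def share(count: int, total: int = TOTAL_POSTS) -> float:
    """Percentage of posts, rounded to one decimal place."""
    return round(100 * count / total, 1)


# The reported counts add up to the full set of validated posts.
assert sum(mentions.values()) == TOTAL_POSTS

for label, count in mentions.items():
    print(f"{label}: {count} posts ({share(count)}%)")
```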

This finding suggests that developers are seeking usability guidance largely in the absence of empirical user validation—relying instead on community expertise, established heuristics, or personal judgment. While usability literature strongly emphasizes user-centered design and empirical validation as fundamental to effective usability practice, our findings indicate these practices remain largely disconnected from day-to-day development questions posted on Stack Overflow.

The high acceptance rate (68.6%) of usability answers on Stack Overflow, despite the overwhelming absence of user research mentions, suggests that community consensus and expert opinion may be substituting for empirical validation. This raises important questions about how usability knowledge is validated and propagated within developer communities. Are developers making usability decisions based primarily on peer validation rather than user testing? Does the community’s effectiveness at resolving usability questions (94.5% answer rate) indicate that experiential knowledge can effectively substitute for formal user research in many contexts, or does it suggest a systemic gap in connecting usability decisions to actual user needs?

This research-practice gap has significant implications for both usability education and tool development. It suggests that while developers recognize usability as important enough to ask questions about it, the integration of user research methods into their workflow remains limited. Educational efforts may need to focus not only on usability principles, but also on practical, lightweight methods for incorporating user feedback into development processes. Development tools and platforms could better support this integration by making user research methods more accessible and integrated into existing workflows.

6.6. Novel Contributions to Usability Research

This study makes several novel contributions to understanding usability in software development practice that distinguish it from prior work:

First Longitudinal Analysis of Developer Usability Discussions. Unlike previous studies that analyze usability at single time points, our 16-year temporal analysis reveals a significant decline (from 189 posts in 2010 to 1 post in 2024) that changes our understanding of how developers engage with usability knowledge. The difference is qualitative as well as quantitative: cross-sectional studies can identify which usability issues exist, but only longitudinal analysis can reveal how these issues, and the community’s engagement with them, evolve over time. The sustained decline, which began in 2012, a full decade before the emergence of conversational AI, and persisted through 2022, indicates that this transformation in usability knowledge sharing reflects structural changes in software development practice rather than recent technological disruptions.

Cross-NFR Comparative Framework. We are the first to systematically compare usability engagement with seven other ISO/IEC 25010 non-functional requirements over an extended timeframe. This comparison reveals that usability’s decline is unique: performance and security discussions remained stable or grew during the same period, indicating domain-specific factors rather than general platform trends. The comparative framework positions usability not in isolation but within the broader landscape of software quality concerns, showing that while usability questions are infrequent (894 posts vs. 102,821 for performance), they exhibit exceptional resolution efficiency (94.5% answer rate, 68.6% acceptance rate) unmatched by other NFRs. This finding suggests that usability represents a specialized knowledge domain with a highly effective subcommunity rather than a topic that is simply undervalued or overlooked.

Granular Taxonomy with Temporal Dimension. Our detailed classification (10 primary categories), combined with 16-year evolution tracking, provides fine-grained insight into how specific usability concerns have shifted over time. Previous studies identified usability issue types but did not track their evolution. We show that interactive elements and controls consistently dominate discussions (24.0% of posts), while mobile/touch interactions account for only 3.1%, possibly because mobile usability patterns have become well established through platform guidelines. Validated through systematic coding with high inter-rater reliability (Cohen’s Kappa = 0.87), this categorization offers breadth and depth rather than volume alone and gives future researchers a standardized framework for analyzing usability discussions across platforms and time periods.

6.7. Future Directions and Practical Implications

The positioning of usability discussions on Stack Overflow represents a significant intersection between the development and design disciplines. The complexity of these discussions, as evidenced by the nuanced and platform-specific challenges identified in our analysis, suggests that integrating technical and design considerations creates particularly challenging problems that resist straightforward solutions.

Educational approaches that integrate usability principles directly into programming courses and documentation may prove more effective than treating them as separate domains. Development tools and frameworks could better support this integration by providing not just implementation patterns but also contextual guidance on when and why particular approaches improve usability. This integration would help address the gap between usability theory and practical implementation that our study identified, similar to challenges noted by Diniz et al. [2] in their analysis of usability issues in mobile applications.

The patterns we identified point to fertile ground for future research on how usability knowledge is transferred and applied within development communities. As usability discussions have declined on Stack Overflow, understanding where and how this knowledge now flows would provide valuable insights into effective knowledge transfer mechanisms. This could build upon the work by Zou et al. [20], who analyzed how different non-functional requirements are prioritized and discussed in technical communities.

Additionally, the context-specific nature of usability challenges suggests opportunities for research on how development platforms might better support appropriate usability patterns through specialized guidance, templates, and evaluation tools tailored to specific technological contexts.


The research-practice gap we uncovered—with over 90% of usability discussions proceeding without any mention of user research—suggests that efforts to promote user-centered design may need to focus on making user research methods more accessible and integrated into existing developer workflows, rather than simply advocating for their importance. The success of community-based usability problem-solving on Stack Overflow, despite the absence of user research mentions, raises important questions about when and how peer expertise can effectively substitute for empirical validation and when it cannot.

These takeaways collectively suggest that usability knowledge is undergoing a transformation in how it is shared, structured, and applied within software development—evolving from general forum discussions to more specialized, contextualized, and integrated approaches that reflect the maturing nature of both software development and usability as interrelated fields.

7. Threats to Validity

This section examines potential threats that could limit the validity of our findings and discusses our mitigation strategies. We classify these threats into construct, internal, conclusion, external, and reliability validity categories.

7.1. Construct Validity

Construct validity concerns the accuracy with which our operational measures represent the concepts under study. Our dataset construction methodology presents a key threat, as we primarily identified posts through tags such as “usability” and “usability-testing.” Although we used pattern matching with the “Usabilit%” wildcard to capture variations, we may have missed relevant discussions that use alternative terminology. Similarly, the inherent semantic ambiguity of natural language complicated post classification. As described in our methodology section, some posts containing “usability” addressed unrelated topics, while others discussed usability concepts without using the term. Through manual validation, we identified and removed 222 of the initial 894 posts (24.8%) as false positives, including posts where “usability” referred to code reusability or API usability rather than the usability of a software user interface. Conversely, our keyword-based approach may have missed relevant usability discussions that used alternative terminology such as “user experience,” “UI/UX,” or “user-friendly”; we cannot fully quantify these potential false negatives. Our manual analysis with multiple inspectors and the auxiliary expert validation (yielding a Cohen’s Kappa of 0.87) helped mitigate these limitations, though perfect classification remains challenging. Future research could employ advanced natural language processing techniques, such as semantic embeddings (e.g., BERT [75]), topic modeling [76], or transformer-based classification models [77], to improve relevance detection and reduce both false positives and false negatives in identifying usability discussions.
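The trade-off between false positives and false negatives can be illustrated with a small Python sketch of SQL `LIKE` matching. It assumes a case-insensitive collation and handles only the standard `%` (any run of characters) and `_` (exactly one character) wildcards; the function names and tag list are hypothetical, and the study's actual query is not reproduced here. A prefix pattern such as `Usabilit%` captures tag variants like `usability-testing` but cannot reach `user-experience` or `user-friendly`, whereas a looser contains-pattern (`%usabilit%`) would also pull in `reusability`.

```python
import re


def like_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a SQL LIKE pattern into an anchored, case-insensitive regex.

    Only '%' and '_' are handled; bracket classes and ESCAPE clauses,
    which some SQL dialects support, are ignored in this sketch.
    """
    parts = []
    for ch in pattern:
        if ch == "%":
            parts.append(".*")  # any run of characters, including none
        elif ch == "_":
            parts.append(".")   # exactly one character
        else:
            parts.append(re.escape(ch))
    # IGNORECASE mimics an assumed case-insensitive collation.
    return re.compile("".join(parts), re.IGNORECASE | re.DOTALL)


def like(text: str, pattern: str) -> bool:
    """True if the whole text matches the LIKE pattern."""
    return like_to_regex(pattern).fullmatch(text) is not None


# Hypothetical tags: the first three match the prefix pattern, the rest do not.
tags = ["usability", "usability-testing", "Usability",
        "user-experience", "user-friendly", "reusability"]
matched = [t for t in tags if like(t, "Usabilit%")]
print(matched)
```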

The selection of engagement metrics (view counts, scores, and answer rates) as proxies for community interest and question complexity represents another construct validity concern. To strengthen our analysis, we employed multiple complementary metrics rather than relying on any single measure, providing a more holistic view of engagement patterns across non-functional requirements.

7.2. Internal Validity

Regarding internal validity, the temporal trends we observed might be influenced by external factors beyond those explicitly identified. Changes in Stack Overflow’s platform mechanics, algorithm adjustments affecting post visibility, shifts in user demographics, or broader trends in software development practices could all contribute to the patterns we observed. While we contextualized our findings within industry trends, definitively attributing causality remains challenging. To partially mitigate this, we compared the usability trend against the overall platform activity trend, which helped isolate topic-specific factors from general platform dynamics.

Although view counts and scores naturally accumulate over time, potentially biasing results in favor of older posts, several factors indicate that our observed trends reflect genuine changes rather than mere temporal artifacts. First, the pronounced decline began in 2012, giving even middle-period posts more than a decade to accumulate engagement metrics before our 2025 data collection. Second, our statistical analysis in RQ1 explicitly addressed this concern through a period-based comparison of early-period posts (2008–2011, n = 563) with recent-period posts (2020–2024, n = 42). Mann–Whitney U tests revealed significantly higher engagement in the early period across all metrics (p < 0.001), and the effect sizes (Cohen’s d = 0.29 for view count and d = 0.42 for score, small to medium by conventional thresholds) indicate that these differences are of practical as well as statistical relevance. These results suggest that the decline reflects a genuine shift in community engagement rather than insufficient maturation time for recent posts. Additionally, our content analysis shows that 628 of 672 posts (93.5%) address basic implementation questions rather than advanced or framework-specific challenges, and 362 posts (53.9%) involve best-practice inquiries seeking established usability standards. This predominance of questions about fundamental usability principles is consistent with a genuine shift in community interest and knowledge-seeking behavior, rather than an artifact of recent posts having had less time to accumulate engagement. The decline also coincides with documented trends in framework maturation and the rise of specialized UX communities [32], providing a plausible mechanism for the observed pattern beyond simple post-age effects.
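The period comparison and effect sizes above can be sketched with the Python standard library as follows. This is a minimal illustration, not the study's analysis script: it computes the Mann–Whitney U statistic with average ranks for ties and a two-sided p-value from the tie-corrected normal approximation (library routines such as `scipy.stats.mannwhitneyu` also offer exact methods for small samples), and Cohen's d with a pooled standard deviation, which is one common variant; the paper does not state which variant it used. The function names are hypothetical, and the inputs would be per-post engagement values (for example, view counts) for the early and recent periods.

```python
import math
from statistics import mean, variance
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> Tuple[List[float], int]:
    """1-based ranks (ties share their average rank) and the tie term sum(t^3 - t)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_term = 0
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    return ranks, tie_term


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """U statistic for x and a two-sided p-value (tie-corrected normal approximation)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    ranks, tie_term = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    if sigma == 0:
        return u1, 1.0  # all values identical: no evidence of a difference
    z = (u1 - mu) / sigma
    return u1, math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def cohens_d(x: Sequence[float], y: Sequence[float]) -> float:
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(x), len(y)
    pooled_var = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2)
    return (mean(x) - mean(y)) / math.sqrt(pooled_var)
```

A positive d means the first group (here, the early period) has the higher mean; the normal approximation is reasonable for groups of the sizes reported above but is only approximate for very small samples.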

Our manual classification process inevitably involves subjective judgment when categorizing posts by programming language, platform, or issue type. To address this threat, we implemented a systematic iterative approach with multiple validation steps and calculated an inter-rater reliability (Cohen’s Kappa) score of 0.87, indicating “almost perfect agreement” according to Fleiss et al. [71]. This rigorous process helps ensure consistency and reliability in our manual classifications.
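Cohen's Kappa corrects raw agreement between two coders for the agreement expected by chance. The minimal Python sketch below implements the standard two-rater formula, κ = (p_o − p_e) / (1 − p_e); the function name and the platform labels in the example are hypothetical and are not the study's coding data.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labelled the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each rater's label frequencies.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Labels used by only one rater contribute zero to chance agreement.
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical platform labels for four posts (not data from the study).
rater_1 = ["web", "web", "desktop", "mobile"]
rater_2 = ["web", "desktop", "desktop", "mobile"]
print(cohens_kappa(rater_1, rater_2))
```

In this toy example the raters agree on three of four posts (p_o = 0.75), but chance agreement is 5/16, so κ falls to 7/11 (about 0.64), which illustrates why κ is lower than raw percentage agreement.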

The final dataset of 672 usability posts (reduced from the initial 894 posts with usability-related tags) introduces potential selection bias where certain types of usability discussions might be systematically excluded. While necessary for ensuring dataset quality, this filtering process could affect our understanding of the full spectrum of usability concerns on Stack Overflow.

7.3. Conclusion Validity

Conclusion validity concerns whether our statistical inferences are reasonable given the data and methods employed. Our use of non-parametric statistical tests (Spearman’s rank correlation and the Mann–Whitney U test) is appropriate for the non-normal distribution of Stack Overflow engagement metrics. The observed correlations between time and engagement (Spearman’s ρ = −0.356 for view count and ρ = −0.410 for score, both p < 0.001) represent moderate effect sizes. Although these correlations are statistically significant, they establish association rather than causation, and factors other than the passage of time may contribute to the observed patterns.
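Spearman's ρ is the Pearson correlation computed on ranks, which makes it robust to the heavy skew of view counts and scores. The Python sketch below computes ρ with average ranks for ties; it omits the p-value (which `scipy.stats.spearmanr` would also report), and the function names and the year/view pairs in the example are hypothetical illustrations rather than the study's data or its exact time variable.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks in which tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rank correlation: the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    ssx = sum((a - mx) ** 2 for a in rx)
    ssy = sum((b - my) ** 2 for b in ry)
    if ssx == 0 or ssy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / math.sqrt(ssx * ssy)


# Hypothetical example: post creation year vs. view count for five posts.
years = [2009, 2010, 2012, 2016, 2021]
views = [15400, 9800, 12000, 2100, 450]
print(spearman_rho(years, views))
```

A negative ρ, as reported above, means that later posts tend to rank lower on the engagement metric; because only ranks enter the formula, a single very popular early post cannot dominate the coefficient the way it would dominate a Pearson correlation on raw counts.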

Comparing usability (894 posts) with other NFRs that have substantially larger volumes (e.g., performance with 102,821 posts) presents challenges for statistical comparison. We addressed this by focusing on relative engagement metrics (percentages, answer rates, acceptance rates) rather than absolute counts, and by employing rank-based statistical tests that are robust to scale differences. Our sample size provides adequate statistical power for detecting medium to large effects in our primary analyses, though the power is limited for analyzing rare subcategories (e.g., 21 mobile/touch interaction posts).

7.4. External Validity

Our exclusive focus on Stack Overflow limits the generalizability of our findings to other developer communities and platforms. Usability discussions may occur differently on collaborative platforms like GitHub, conversational platforms like Reddit, or real-time communities like Discord, as developer communities can develop platform-specific cultural norms that influence discussion patterns [11]. However, Stack Overflow’s role as the predominant platform for developers’ technical discussions [10] and its structured Q&A format make it well suited to systematic longitudinal analysis.

Our study covers posts from 2008 to 2024, but the software development landscape continues to evolve rapidly, so current findings may not predict future trends, particularly given the accelerating pace of change in development tools, frameworks, and practices. Additionally, while we analyzed the most frequently mentioned programming languages and platforms, our findings may not generalize equally well to less common languages or specialized domains that are underrepresented in our dataset. Future work should explore cross-platform analysis to validate our findings across different developer community contexts. Finally, because our study focuses on non-functional requirements (specifically usability), the findings and methodology may have limited applicability to discussions of functional requirements, which have different characteristics and discussion patterns on Stack Overflow.

7.5. Reliability Validity

Our reliance on manual content analysis introduces challenges for reproducibility despite detailed documentation of our methodology and strong inter-rater agreement. This limitation is inherent to qualitative content analysis in natural language contexts [78]. We mitigated this threat through methodological transparency, multiple validation procedures, and a representative sampling approach that ensured a sufficiently large sample (9% of the dataset) with a 95% confidence level and a 6% confidence interval, following guidelines from Aljedaani et al. [68].
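Sample sizes for a given confidence level and interval are commonly derived with Cochran's formula for estimating a proportion, n₀ = z²·p(1 − p)/e², optionally reduced by the finite-population correction n = n₀ / (1 + (n₀ − 1)/N). The Python sketch below implements that standard calculation as an illustration only. Reading the “6% confidence interval” as a ±6 percentage-point margin of error, choosing p = 0.5, and the function name are assumptions, and the population size behind the 9% figure is not restated in this section, so the example uses an illustrative N = 1000.

```python
import math
from typing import Optional


def cochran_sample_size(margin: float, z: float = 1.96, p: float = 0.5,
                        population: Optional[int] = None) -> int:
    """Minimum sample size for estimating a proportion (Cochran's formula).

    n0 = z^2 * p * (1 - p) / margin^2; if a finite population size N is
    given, apply the correction n = n0 / (1 + (n0 - 1) / N).
    p = 0.5 is the most conservative choice because it maximizes p * (1 - p).
    z = 1.96 corresponds to a 95% confidence level.
    """
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)  # round up so the target precision is still met


print(cochran_sample_size(0.06))                   # → 267 (very large population)
print(cochran_sample_size(0.06, population=1000))  # → 211 (illustrative N)
```

The finite-population correction matters only when the sample is a sizeable fraction of the population; for large N the result approaches the uncorrected value of 267.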

Stack Overflow’s content volatility presents another reliability concern, as posts can be edited, deleted, or re-tagged over time. Our analysis represents a snapshot taken at the time of data collection (January 2025), and future replication attempts may encounter slightly different data even when using identical query parameters. We addressed this by providing detailed documentation of our data collection timeframe and methodology to contextualize our findings appropriately.

Throughout our study, we maintained appropriate scientific caution about the generalizability and scope of our findings while providing valuable insights into the evolution and characteristics of usability discussions in developer communities.

8. Conclusions

This study presents the first comprehensive longitudinal analysis of usability discussions on Stack Overflow, spanning from 2008 to 2024, and reveals significant insights into how developers engage with usability concerns in technical communities. Our findings demonstrate a pronounced and sustained decline in usability discussion frequency since its peak in 2010, contrasting sharply with more stable patterns observed in other non-functional requirements such as performance and security. This decline, however, does not necessarily indicate diminishing importance of usability in software development. Rather, it suggests a transformation in how usability knowledge is shared and acquired, likely reflecting the maturation of the field, integration of usability patterns into development frameworks, and migration of specialized discussions to dedicated UX/UI communities.

Despite their declining frequency, usability discussions on Stack Overflow exhibit unique engagement patterns. These posts achieve the highest answer and acceptance rates of all non-functional requirements, indicating that while the topic is niche, the community resolves these questions with exceptional efficiency. This contrasts with other specialized topics, such as portability, which garner the highest average scores and view counts, suggesting that different types of niche questions are valued by the community in varying ways. This highlights a complex dynamic where usability discussions are defined not by broad popularity but by the effectiveness of a specialized subcommunity in resolving its distinct challenges.

Our analysis of programming language and platform contexts reveals that usability challenges are highly contextualized by development environment, with fundamentally different concerns emerging across web, desktop, and mobile platforms. Web development discussions predominantly address responsive layouts and form design, desktop application posts focus on keyboard navigation and complex controls, while mobile development conversations center around touch interactions and small-screen constraints. This context specificity challenges the notion of universal usability principles, suggesting instead that effective usability guidance must be tailored to specific technological environments and incorporated directly into technical documentation and education.

These findings have important implications for multiple stakeholders in the software development ecosystem. For educators, this highlights the need to integrate usability principles into technical curricula in ways that acknowledge platform-specific interaction paradigms, rather than treating usability as a separate context-independent domain. For development platform creators, our results suggest opportunities to better support appropriate usability patterns through specialized guidance, templates, and evaluation tools tailored to specific technological contexts. For research communities, the observed transformation in how usability knowledge is shared points to fertile ground for investigating knowledge transfer mechanisms across increasingly specialized development domains.

Future research should investigate where usability discussions have evolved to and how usability knowledge is now disseminated within development teams. Additional work is needed to develop and validate platform-specific usability guidelines that bridge technical implementation considerations with human-centered design principles. As software continues to permeate all aspects of society, the effective integration of usability considerations into development processes remains critically important, even as the forums for these discussions evolve. Our work contributes to understanding this evolution, offering insights into how the software development community approaches the essential challenge of creating not just functional but truly usable technologies.

Body: “Recently you can find this in lots of apps: When the app starts the first time, it explains the interface with nifty arrows and handwriting letters. Does anyone know of a library or framework which can draw these arrows and handwriting labels in an easy way?…”


Body: “I need to create a 4 × 4 matrix class for a 3D engine in C#. I have seen some other engines storing the matrix values in single float variables…What is the fastest way of storing the matrix for applying transformations? Which option would you choose for usability?”
