Abstract

Software testing ensures the quality and reliability of software products, but manual test case creation is labor-intensive. With the rise of Large Language Models (LLMs), there is growing interest in using LLMs to create unit tests. However, effective assessment of LLM-generated test cases is limited by the lack of standardized benchmarks that comprehensively cover diverse programming scenarios. To assess an LLM’s test case generation ability in the absence of an evaluation dataset, we propose the Generated Benchmark from Control-Flow Structure and Variable Usage Composition (GBCV) approach, which systematically generates programs used for evaluating LLMs’ test generation capabilities. By leveraging basic control-flow structures and variable usage, GBCV provides a flexible framework to create a spectrum of programs ranging from simple to complex. Because GPT-4o and GPT-3.5-Turbo are publicly accessible models that reflect how regular users work with LLMs in practice, we use GBCV to assess their performance. Our findings indicate that GPT-4o performs better on composite program structures, while all models effectively detect boundary values in simple conditions but face challenges with arithmetic computations. This study highlights the strengths and limitations of LLMs in test generation, provides a benchmark framework, and suggests directions for future improvement.

1. Introduction

Software testing is an important component of the software development lifecycle because it ensures the quality and functionality of software products [1]. It is among the most resource-intensive aspects of software development, playing a critical role in validating that software systems meet established requirements and operate without faults [2,3]. As a result, developers employ various types of rigorous testing to identify bugs and thereby significantly reduce the risk of costly defects in production environments [2,3,4]. Among the kinds of tests used in software development, such as integration, acceptance, and regression tests, the unit test can be regarded as the most essential and widely used practice, where test cases are written to validate specific methods or components of the source code in isolation. This focused approach facilitates the early identification of defects at their source, minimizing their potential impact on the broader software system [5,6].

Since manual unit test case creation is a time-consuming process, consuming over 15% of a developer’s time on average [7], automating test case generation from code or requirements has become an area of extensive research. Various generation approaches have been suggested, as outlined in review and survey studies [8,9]. Practical applications of automatic test case generation were also explored in past research [10]. With the emergence of Large Language Models (LLMs), many scholars and industrial practitioners see significant potential for LLMs in automating software development tasks. LLMs can learn from extensive datasets and produce contextually relevant code in the form of functions or programs. Therefore, research on and applications of traditionally automated coding-related tasks, such as code generation, test case creation, test execution, and code recommendation, are shifting toward possible integration with LLMs [11,12,13,14]. That is, given the remarkable performance of LLMs in generation and inference tasks, researchers can explore new applications of LLMs for software testing. In particular, unit test case generation has emerged as a promising area of research.

Generating unit test cases with LLMs introduces unique challenges. To generate effective and accurate test cases, training and evaluation require diverse inputs and comprehensive coverage so that the generated tests can validate functional correctness and ensure robust software components. Overcoming this challenge depends heavily on the availability of a benchmark dataset, which serves as a means for evaluating task performance and can also be utilized for further training. An analogy can be drawn from text-to-SQL research, where datasets like Spider [15] and BIRD [16] are commonly used both to assess the performance of generating SQL queries from text and to support text-to-SQL training. While previous research, such as TESTEVAL [17], proposed a benchmark by collecting Python programs from LeetCode to evaluate test case generation with LLMs, there is still no widely accepted, generic, and extendable benchmark dataset for assessing the quality, coverage, and effectiveness of tests generated by LLMs, or for training test case generation. This absence of a benchmarking dataset for LLM-based test generation limits the ability to reliably and objectively assess the performance and effectiveness of LLMs in generating accurate and functional test cases, especially when the LLM has been updated through new or different training or tuning methods. Hence, remedying this limitation requires the development of a benchmark dataset for systematic evaluation of an LLM’s test case generation ability.

Instead of directly creating a benchmarking dataset, which typically involves collecting data from practices or online sources, as performed for other domains, we propose an approach called Generated Benchmark from Control-Flow Structure and Variable Usage Composition (GBCV), a systematic, bottom-up method which is designed to target any programming language for assessing test case generation. To maintain its flexibility, GBCV enables users to develop various control-flow structures and define desired variable usages, which are then used to generate programs for testing. These programs are subsequently incorporated into prompts to guide the LLM in creating unit test cases. The test cases are then applied to the programs for assessing the LLM’s test case capability. In addition, GBCV suggests generating code by starting with basic control-flow structures, which are used to systematically produce more complex programs without relying on retrieving data from existing code databases. It also provides users with a way to create a comprehensive spectrum of programs, ranging from simple and small programs to large and complex ones.

This paper marks an important first step toward our ambitious goal of advancing LLMs’ capabilities, through continuous improvements, in generating high-quality test cases. In this paper, our scope of study focuses on introducing the GBCV method, illustrating its use, and evaluating existing LLMs with this approach. Through these assessments, we not only highlight the utility of GBCV but also uncover key insights that pave the way for further research and refinement. Ultimately, our work lays the groundwork for developing more effective and reliable LLMs capable of producing robust test cases. The remainder of this paper is structured as follows: Section 2 discusses related work, while Section 3 details the GBCV approach and the evaluation metrics. Section 4 shows and analyzes the investigation results. Finally, Section 5 concludes this study and outlines the directions for future research.

2. Related Work

Our research aligns with two closely related areas: the utilization of Large Language Models (LLMs) or natural language processing (NLP) techniques for automated test case generation and the development of benchmark datasets to evaluate LLM performance in generating computing artifacts.

2.1. Test Cases Generation via LLMs

Test cases must be generated from certain software artifacts, which specify the inputs or expected outputs of the test cases [8]. Traditionally, according to the types of artifacts used, test case generation techniques fall into several categories, such as symbolic execution [18], search-based, and evolutionary approaches [19,20]. These approaches rely on static or dynamic analysis techniques to explore a program’s control and data-flow paths. They typically result in test cases that are less readable and understandable than manually written tests [21] and may either lack assertions or include only generic ones [22]. With the advancement of LLMs, researchers have explored their potential to automatically produce software artifacts such as code, which has sparked interest in integrating LLMs into software testing, particularly for test case generation. The growing attention on LLM-based test case generation aims to improve efficiency, enable self-learning in test creation, or produce more natural-looking tests [23].

One way to use LLMs in test case generation is by emphasizing prompt creation. Yuan et al. [24] proposed a framework, ChatTester, which iteratively refines test cases by asking ChatGPT-3.5-Turbo to generate follow-up prompts. This method resulted in a 34.3% increase in compilable tests and an 18.7% improvement in tests with correct assertions, demonstrating the potential of LLMs in enhancing test generation. Schäfer et al. [23] introduced TestPilot, a tool that automates unit test generation using a JavaScript framework without additional training. TestPilot leverages OpenAI’s Codex to generate unit tests by constructing prompts from the signature, usage snippets, and documentation of the function under analysis, then refining the prompts to improve generation outcomes. Similarly, ChatUniTest, developed by Xie et al. [25], extracts raw information from Java code and converts it into an Abstract Syntax Tree (AST). It utilizes static analysis during the preparation stage, applies an adaptive focal context mechanism to capture relevant contextual information within prompts, and follows a generation–validation–repair mechanism to refine and correct errors in generated unit tests.

Another area of focus is to use LLMs for property-based or specialized testing. Vikram et al. [26] used LLMs to generate property-based tests with API documentation. Koziolek et al. [27] studied the use of LLMs to automate test case generation for Programmable Logic Controller (PLC) and Distributed Control System (DCS) control logic. Plein et al. [28] investigated the use of LLMs, including ChatGPT and CodeGPT, for creating executable test cases from natural language bug reports. Their approach showed potential for enhancing tasks like fault localization and patch validation, with test cases successfully generated for up to 50% of examined bugs. Wang et al. [29] proposed UMTG, an NLP-based method to automate the generation of acceptance test cases from natural language use case specifications.

2.2. Benchmark for Evaluating LLMs

Research on creating benchmark datasets for LLMs to generate test cases is limited. One recent proposal, TESTEVAL [17], collected 210 Python programs from the online coding site LeetCode. However, the benchmark is limited to the collected Python programs, which restricts its generalization or extension to other programming languages or use patterns. To better understand how to create benchmarks for an LLM’s capabilities in generating code-related artifacts, we explored two closely related fields: text-to-SQL translation and code generation.

In 2023, Li et al. [16] introduced the BIRD dataset, a large-scale benchmark designed to assess LLMs’ ability to parse text-to-SQL queries across diverse domains. Similarly, in 2022, Wang et al. [30] developed the UNITE benchmark, which consolidates 18 publicly available text-to-SQL datasets into a unified resource for evaluation. UNITE comprises over 120,000 examples from more than 12 domains, 29,000 databases, and 3900 SQL query patterns.

For benchmarking code generation and evaluation, HumanEval, proposed by Chen et al. in 2021 [31], was a carefully crafted benchmark consisting of 164 programming challenges. Each challenge includes a function signature, docstring, body, and multiple unit tests. Liu et al. [32] addressed the limitations of existing benchmarks in evaluating the functional correctness of code generated by LLMs, such as ChatGPT. They identified that many benchmarks rely on insufficient and low-quality test cases, leading to an overestimation of LLM performance. To tackle this, they introduced EvalPlus, a framework that augments a significant number of additional test cases using both LLM-based and mutation-based strategies. Yan et al. [33] presented CodeScope, a comprehensive benchmark for evaluating LLMs in code understanding and generation across multilingual, multitask, and multidimensional scenarios. CodeScope covered 43 programming languages and eight tasks, including code summarization, repair, and optimization.

2.3. Summary

Past research highlights the importance of benchmark datasets for training and evaluating an LLM’s abilities to generate computing artifacts. However, the existing benchmark dataset for test case generation is highly limited, lacking flexibility for extension to other programming languages or use cases. Most existing LLM-based test generation techniques focus on prompt crafting and rely on additional documents or data for generating tests. They are also domain-specific and lack generalizable evaluation methods.

This raises three key questions:

- Can we develop a flexible benchmark dataset?

- Can users use only a single prompt with source codes given to an LLM to generate test cases?

- How well can LLMs generate test cases while solely inputting source codes?

To address these gaps, there is a need for a new, more adaptable dataset to simulate real-world scenarios where users enter codes directly, without supplementary data like documentation, and request an LLM’s test case generation through a single prompt. To achieve this, we propose GBCV, an approach designed to create an extendable benchmark dataset and facilitate studies on LLMs’ test case generation ability with minimal input.

3. Method

The GBCV approach begins by using fundamental Control-Flow Graph (CFG) structures, integrated with data-flow analysis techniques, specifically focusing on predicate uses (p-uses) and computation uses (c-uses) for variable usage. By combining CFG with data-flow analysis, the user can generate diverse program variants that contain different structures and variable integrations. This systematic process enables the user to create a comprehensive dataset of generated programs designed to cover multiple execution paths, value conditions, and comparisons, which serves as a robust benchmark for evaluating LLM-generated test cases.

3.1. Overall Process

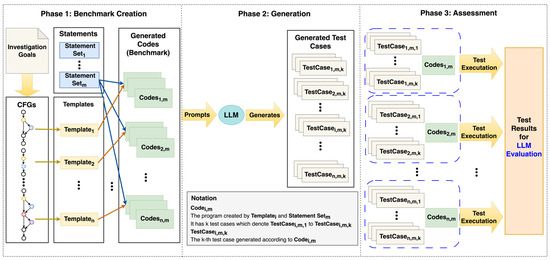

The systematic process of the GBCV approach consists of three main phases (see Figure 1). The first phase focuses on benchmark creation, where programs (codes) are generated to form a dataset used to prompt an LLM to generate test cases. The second phase is test case generation: each prompt that includes a generated program is used to ask the LLM to generate test cases. In the last phase, the generated test cases (e.g., $TestCase_{i,m,k}$ in Figure 1) are used to test the corresponding programs (e.g., $Codes_{i,m}$ in Figure 1), with the expectation that the program will pass all the test cases. In this way, we can investigate whether the LLM can produce valid and correct test cases.

Figure 1.

The process of the GBCV approach.

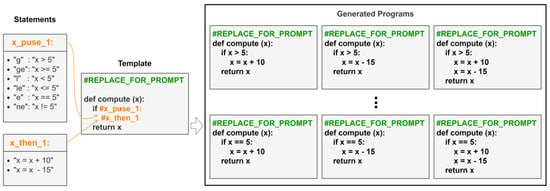

The CFG specifies the template, which is filled with partial or complete statements during code generation. In the initial step of the GBCV approach, basic CFGs, such as branches and sequences, serve as building blocks to form a program. These basic structures can be incrementally combined into more complex structures until the desired level of investigation is reached, which serves as the stopping point for structure creation.

Within the templates, placeholders are replaced with actual statements (e.g., c-use) or partial statements (e.g., p-use) to generate a complete program (see Figure 2). The p-use and c-use statements are designed based on several factors: the number of computations, the types of computations, and the specific decisions in a branch the evaluators plan to assess. They help in understanding how variables are utilized within the program’s logic. One can use a complicated combination of conditions to test an LLM’s logical reasoning capabilities. Hence, adding statements also increases the overall complexity of the programs. Additionally, by specifying different statements and templates, the combination can be manually controlled to make every generated program unique. Therefore, the benchmark dataset will not contain duplicated programs for investigation.
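The placeholder-filling step can be illustrated with a minimal Python sketch. The template text, the placeholder names (`X_PUSE`, `X_CUSE`), and the helper `generate_programs` are hypothetical and chosen only for illustration; the candidate statements are taken from the examples in this paper. This is a sketch under those assumptions, not the implementation used in the study.

```python
from itertools import product

# Hypothetical branch template: {X_PUSE} takes a partial statement (a predicate)
# and {X_CUSE} takes a complete statement (a computation).
BRANCH_TEMPLATE = (
    "def program(x):\n"
    "    if {X_PUSE}:\n"
    "        {X_CUSE}\n"
    "    return x\n"
)

# Candidate sets for each placeholder (examples taken from the paper's lists).
CANDIDATES = {
    "X_PUSE": ["x > 5", "x < 5", "x == 5"],
    "X_CUSE": ["x = x + 10", "x = x * 7", "x = x - 7"],
}


def generate_programs(template, candidates):
    """Fill every placeholder combination and keep each program only once."""
    names = list(candidates)
    programs, seen = [], set()
    for combo in product(*(candidates[name] for name in names)):
        source = template.format(**dict(zip(names, combo)))
        if source not in seen:  # guard against duplicated programs
            seen.add(source)
            programs.append(source)
    return programs


programs = generate_programs(BRANCH_TEMPLATE, CANDIDATES)
print(len(programs))  # 3 predicates x 3 computations
print(programs[0])
```

Adding a candidate to either set, or a placeholder to the template, enlarges the generated set multiplicatively, which is how the number of programs follows from the chosen combinations.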

Figure 2.

An example to describe the steps of the Python program generation. The green color texts are the placeholder for prompts. The orange color texts are predicate nodes.

When specifying templates and statements, language-specific details must be considered. For example, Java and Python use different syntax for combining predicates. Java employs x > 4 || x < 10, while Python uses x > 4 or x < 10. Therefore, to apply GBCV across different languages, the same abstractions (i.e., CFG) and placeholder locations can be reused, but language-specific details are added while formatting the template and statements.
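As a small illustration of keeping the abstraction fixed while swapping language-specific details, the sketch below renders the same compound predicate and branch for Python and Java. The mapping table and the function names `render_compound_predicate` and `render_branch` are assumptions introduced for this example only.

```python
# Hypothetical mapping from abstract connectives to language-specific syntax.
LOGICAL_OPERATORS = {
    "python": {"and": "and", "or": "or"},
    "java": {"and": "&&", "or": "||"},
}


def render_compound_predicate(left, connective, right, language):
    """Join two predicates with the connective spelled for the target language."""
    operator_text = LOGICAL_OPERATORS[language][connective]
    return f"{left} {operator_text} {right}"


def render_branch(predicate, body, language):
    """Format one branch node; only the surface syntax differs per language."""
    if language == "python":
        return f"if {predicate}:\n    {body}"
    if language == "java":
        return f"if ({predicate}) {{\n    {body};\n}}"
    raise ValueError(f"unsupported language: {language}")


for lang in ("python", "java"):
    predicate = render_compound_predicate("x > 4", "or", "x < 10", lang)
    print(render_branch(predicate, "x = x + 10", lang))
```

The CFG and placeholder positions stay identical across the two calls; only the formatting functions know about the target language.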

Finally, to assess the LLM’s test case generation, the user-defined metrics are calculated against the test outputs across different types of structures and computations. This gives users a comprehensive understanding of the model’s capabilities and limitations. In Section 3.4, we discuss the metrics defined according to the investigation goals described in Section 3.2.

3.2. Guiding Principles of Benchmark Creation

The GBCV is a bottom-up approach to generate programs for assessing the LLM. Several factors determine the final generated programs. We describe them in Table 1.

Table 1.

Details of guiding principles of benchmark creation. The examples contain Python codes or datatypes.

The complexity levels leverage a combination of cyclomatic complexity [34], cognitive complexity [35,36], and the reasoning behaviors of the LLM. Both cyclomatic and cognitive complexity account for the code structure (e.g., CFG) and decision points. Specifically, cyclomatic complexity is categorized into four levels: low (1–4), moderate (5–7), high (8–10), and very high (above 10, typically recommended for refactoring). Unlike cyclomatic complexity, cognitive complexity differentiates predicates and program structures and considers logical operators in evaluation. When assessing an LLM’s reasoning behaviors, we consider factors such as the number of statements, predicates, and datatypes. The considerations about the number of statements and predicates are drawn from prior research on chain-of-thought reasoning [37]. The datatype selection leverages the insights from previous studies on type-aware reasoning [32,38].
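The four cyclomatic complexity levels above can be applied to a generated program with a short sketch. The estimator below is a simplification assumed for illustration: it counts `if`, `for`, and `while` nodes plus the extra conditions in compound predicates, which is only one common way to approximate cyclomatic complexity and is not necessarily how the values in this study were obtained. The function names are hypothetical.

```python
import ast


def estimate_cyclomatic(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + decision points (simplified)."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.If, ast.For, ast.While)):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" contributes two additional conditions.
            complexity += len(node.values) - 1
    return complexity


def cyclomatic_level(value: int) -> str:
    """Map a cyclomatic complexity value to the four levels named in the text."""
    if value < 1:
        raise ValueError("cyclomatic complexity is at least 1")
    if value <= 4:
        return "low"
    if value <= 7:
        return "moderate"
    if value <= 10:
        return "high"
    return "very high"


SAMPLE = (
    "def program(x, y):\n"
    "    if x > 5 and y > 10:\n"
    "        x = x + y\n"
    "    return x\n"
)
value = estimate_cyclomatic(SAMPLE)
print(value, cyclomatic_level(value))
```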

Once the complexity level and the specific LLM behaviors of interest are identified, the appropriate CFG and statements can be selected accordingly. The selection also depends on the intended coverage range. For instance, if the objective is to assess whether an LLM can recognize a sequence of computations, incorporating multiple c-use nodes is necessary. Likewise, if datatype awareness is important, incorporating diverse or complex datatypes should be considered.

3.3. Dataset Creation

Since the programs in the benchmark dataset for evaluating LLMs’ test case generation ability are created based on the guiding principles of the GBCV approach, the number and types of generated programs dynamically adjust according to the user’s specified assessment goals. Given that the primary objective of this study is to introduce the GBCV approach and gain an initial understanding of LLMs’ test case generation capabilities as a foundation for future research on more complex or real-world programs, our assessments focus on relatively simple structures, datatypes, and arithmetic computations. Additionally, we think LLMs should first demonstrate proficiency in generating test cases for simple programs, such as sequential or branch structures, before progressing to more realistic and complex programs. Our decisions on CFG constructions and variable selections are not made based on existing real-world software snippets but rather on the need to evaluate the LLMs’ capabilities. Therefore, according to the guiding principles, we intentionally select structures, datatypes, and computation levels between low and middle complexity.

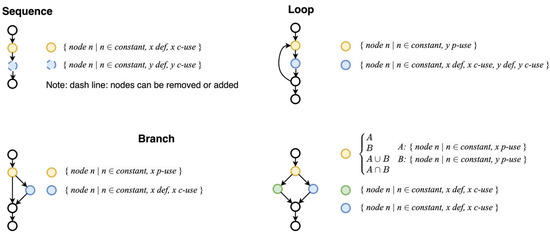

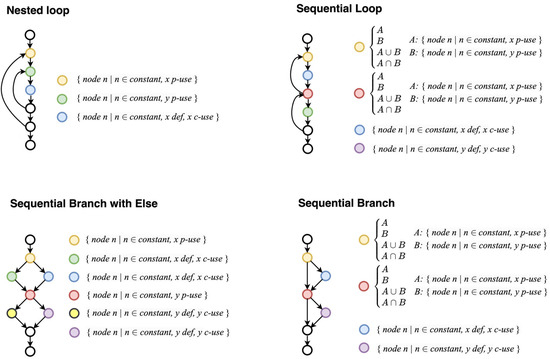

The following discusses the investigation goals in this study. Figure 3 and Figure 4 illustrate the control-flow structures and set of nodes that we defined based on our investigation goals.

Figure 3.

Simple program structure for code generation. The colors point to placeholder nodes that are replaced with the statements from corresponding sets of statement candidates. Examples include x def (x = 15), x def with x c-use (x = x + 10), y def (y = 7), and y-def with y c-use (y = y + 7) in sequence. These example statements are used to replace the respective nodes.

Figure 4.

Composite program structure for code generation. The color nodes indicate placeholders that are replaced with statements selected from the corresponding candidate sets. In addition to x def, y def, x c-use, and y c-use described earlier, examples of x p-use and y p-use include x < 5 and y > 10, respectively. Compound predicates consider union (or) and intersection (and) in the logical operations.

- Boundary and Comparison

This evaluation assesses the LLM’s ability to perform logical operations and comparisons. The p-use of a variable in a conditional statement, such as “x > 10”, checks whether the LLM can correctly identify the boundary value (i.e., 10), resulting in true/false branches when the comparison clause contains constant values. Successful boundary value detection has two key characteristics: First, the input value chosen in a test case matches the boundary value (e.g., an input value of 10 for “x > 10”). Second, the chosen input values can lead to both true and false execution paths, depending on the comparison.

Therefore, in keeping with the low- to middle-complexity levels chosen for the type and number of predicates and the low complexity chosen for datatypes, we use two variables to form compound predicates. The datatypes and values for comparison are integers.

In the branch structure (see Figure 3), the value we chose for the variable x in the comparison predicate is 5; to maintain consistency for investigation, the integer value 5 is also used in the compound predicate in other branch-like structures (see Figure 4). We want to examine if an integer value (boundary) can be successfully detected given various structures, comparisons, and logical operations. Therefore, the following list shows some examples of the x p-use and y p-use nodes we used.

- Branch, sequential branch, sequential branch with else:
  - x p-use set 1: x > 5, x < 5 …, x == 5.
  - x p-use set 2: x > 15, x < 15 …, x == 15.
  - y p-use: y > 10, y < 10 …, y == 10.
  - Compound predicate for x and y: x > 5 and y > 10, … x == 5 or y == 10.
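The two characteristics of successful boundary value detection described above can be checked mechanically for a single-variable predicate. The sketch below is one possible formulation; the function name `detects_boundary` and the representation of a predicate as an operator string plus a constant are assumptions made for this example.

```python
import operator

# Comparison operators that may appear in a p-use predicate.
COMPARATORS = {
    ">": operator.gt,
    "<": operator.lt,
    "==": operator.eq,
    ">=": operator.ge,
    "<=": operator.le,
}


def detects_boundary(inputs, op, boundary):
    """Return True if the test inputs satisfy both boundary-detection criteria.

    1. At least one input equals the boundary value.
    2. The inputs drive the predicate to both the true and the false path.
    """
    compare = COMPARATORS[op]
    hits_boundary = boundary in inputs
    outcomes = {compare(value, boundary) for value in inputs}
    return hits_boundary and outcomes == {True, False}


# Predicate "x > 10": inputs 10 and 11 hit the boundary and cover both paths.
print(detects_boundary([10, 11], ">", 10))
# Inputs 3 and 20 cover both paths but never use the boundary value itself.
print(detects_boundary([3, 20], ">", 10))
```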

- Computation

This evaluation examines how well the LLM can perform computations involving variables. The c-use nodes in a sequence test the LLM’s ability to compute results, particularly when operations involve multiple variables, such as “x” and “y”. This helps assess the LLM’s effectiveness in handling simple arithmetic operations.

Therefore, the def and c-use nodes in all the structures represent the computation. Note that not all programs contain computation. For example, a program in the branch category may simply redefine a variable. The following lists some examples of computation used in program generation.

- Sequence, loop, branch, sequential branch, sequential branch with else:
  - x def with x c-use: x = x + 10, … x = x*7, x = x − 7.
  - y def with y c-use: y = y + 7, …, y = y − 7.
  - x def with x c-use and y c-use: x = x + y + 10, …, x = x + y − 7.

When examining test case generation for computations combined with a branch in a branch-like structure, we further want to investigate whether the LLM can reason about both the computation and the boundary correctly without one interfering with the other. Similarly, computations within loops test whether the LLM can keep track of the temporary results across iterations.

- Iteration

Simple loops are used to evaluate the LLM’s ability to determine the correct number of iterations and accurately iterate through a portion of the code. This scenario is more complex than branches or sequences, as it may require handling a combination of computations and predicate uses. The following lists the iteration conditions we used in the loop-like structures. By comparing the constant number of loops and dynamic number of loops (i.e., the number of iterations depending on the input), whether the LLM detects the differences can be investigated.

- Loop, sequential loop, nested loop:
  - Constant value: 3.
  - Dynamic value: y p-use.
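The difference between the two iteration conditions can be seen in a pair of small programs. Both functions below are hypothetical examples written for this illustration, not programs from the benchmark: the first iterates a constant three times, while the second uses a y p-use in the loop condition (the specific condition `y > 0` and the decrement are assumed), so its iteration count depends on the input.

```python
def constant_loop(x, y):
    """Loop with a constant iteration count of 3; y does not affect the result."""
    for _ in range(3):
        x = x + 10  # x def with x c-use
    return x


def dynamic_loop(x, y):
    """Loop whose iteration count depends on the input through a y p-use."""
    while y > 0:  # y p-use decides whether another iteration runs
        x = x + 10  # x def with x c-use
        y = y - 1  # y def with y c-use
    return x


# The expected output of a correct test case must track the iteration count.
print(constant_loop(1, 0))  # three iterations regardless of y
print(dynamic_loop(1, 2))   # two iterations because y starts at 2
print(dynamic_loop(1, 0))   # loop body never runs
```

A test case for `dynamic_loop` is only correct if the expected output accounts for how many times the body runs for the chosen y, which is the distinction this investigation goal probes.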

Complex control-flow structures are composed of multiple basic structures. They are used to evaluate how well the LLM can manage combined operations during test case generation. For example, evaluating two branches can reveal whether the LLM can correctly handle multiple branching conditions. In theory, the depth of branches (or even loops) is not constrained. However, guided by the creation principles and investigation goals, we add only one additional branch to the basic branch structure, which lets us assess whether boundaries can be correctly detected while keeping the program structure relatively simple; likewise, we add Sequential Loop structures. By assessing these combinations, we can also gain a deeper understanding of the LLM’s ability to manage boundary detection, computations, and iterations effectively, as well as identify the impact of new combinational effects within complex structures.

Two variables are used for examining test case generation under multivariable conditions in our study. However, if further exploration is needed on how LLMs handle additional variables, our approach allows the definition of more variables in the control-flow structure to generate additional programs for testing. The number of created programs depends on the combination of candidates used to replace the placeholders. Comparing programs that share a control-flow structure but differ in predicates and computations helps us understand which types of usage or structures are potential weaknesses in the LLM’s test case generation abilities.

To understand whether boundaries can be correctly identified across various predicates or conditions, the comparisons under a single variable use the comparison operators greater than (>), less than (<), equal to (==), greater than or equal to (>=), and less than or equal to (<=). For predicates involving two variables, we employ both union (or) and intersection (and) operators to combine logical expressions, enabling an assessment of the LLM’s capability of handling compound conditions.
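A candidate set of predicates built from these operators can be enumerated as follows. This is a sketch under an assumption: it takes the full cross product of the five comparison operators and the two connectives, whereas the exact candidate sets used in this study are only given by example. The function names are hypothetical; the boundary constants 5 and 10 are the ones used in the examples above.

```python
from itertools import product

COMPARISON_OPERATORS = [">", "<", "==", ">=", "<="]
CONNECTIVES = ["and", "or"]  # intersection and union


def single_predicates(variable, boundary):
    """One p-use predicate per comparison operator for a single variable."""
    return [f"{variable} {op} {boundary}" for op in COMPARISON_OPERATORS]


def compound_predicates(left_predicates, right_predicates):
    """Combine predicates of two variables with every connective."""
    return [
        f"{left} {connective} {right}"
        for left, connective, right in product(
            left_predicates, CONNECTIVES, right_predicates
        )
    ]


x_predicates = single_predicates("x", 5)
y_predicates = single_predicates("y", 10)
compound = compound_predicates(x_predicates, y_predicates)

print(x_predicates)
print(len(compound))  # 5 x-predicates * 2 connectives * 5 y-predicates
```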

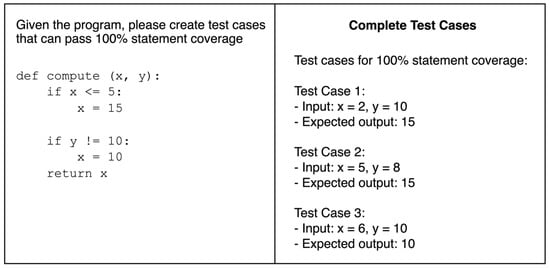

To facilitate prompting, we utilize a placeholder, #REPLACE_FOR_PROMPT, in the template. In our study, the prompting placeholder is replaced with the following instruction: “Given the program, please create test cases that can pass 100% statement coverage”. While achieving 100% statement coverage is not the primary objective of this study, this prompt encourages the LLM to generate a diverse set of test cases rather than a single one. Moreover, our aim is to evaluate the LLM’s ability to generate correct test cases on its first attempt without iterative refinements on prompts or follow-up questions. Other qualities of the test cases, such as branch coverage or coverage percentage, should be evaluated under the assumption that the LLM can generate correct test cases. These metrics are meaningful only if the generated test cases are valid and correctly test the program’s behavior. Therefore, ensuring the correctness of the test cases is fundamental before assessing their coverage effectiveness. Thus, the request for full statement coverage serves merely as a mechanism to elicit comprehensive output and does not impact the LLM’s inherent capability of generating accurate test cases.
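Replacing the prompting placeholder is a plain string substitution, sketched below. The placeholder token and the instruction text are quoted from this section; the position of the placeholder in the sample template (on its own line above the program) and the function name `build_prompt` are assumptions for illustration.

```python
PROMPT_PLACEHOLDER = "#REPLACE_FOR_PROMPT"
INSTRUCTION = (
    "Given the program, please create test cases that can pass "
    "100% statement coverage"
)


def build_prompt(program_with_placeholder: str, instruction: str = INSTRUCTION) -> str:
    """Swap the prompting placeholder for the instruction sent to the LLM."""
    if PROMPT_PLACEHOLDER not in program_with_placeholder:
        raise ValueError("template does not contain the prompt placeholder")
    return program_with_placeholder.replace(PROMPT_PLACEHOLDER, instruction)


# Hypothetical generated program; the placeholder location is assumed.
generated = (
    "#REPLACE_FOR_PROMPT\n"
    "\n"
    "def program(x):\n"
    "    if x > 5:\n"
    "        x = x + 10\n"
    "    return x\n"
)

prompt = build_prompt(generated)
print(prompt)
```

Because the instruction is a parameter, the same generated programs can be paired with a different single-prompt request without regenerating the benchmark.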

3.4. Metrics

To define our metrics, we first need to introduce the following terminologies, which describe several characteristics of the test cases generated by LLMs.

- Complete (valid) and incomplete (invalid) test case.

For a test case in the LLM’s response to be valid, it must be complete, meaning it includes both inputs and expected results. If a generated test case does not contain expected results, it is considered incomplete and, therefore, invalid. The following presentations describe complete (valid) and incomplete (invalid) test cases:

- Complete (valid) test cases: (input values, output values).

- Incomplete (invalid) test cases: (input values).

- Correct test case.

A correct test case must meet two conditions. First, it must be a complete test case, meaning it includes inputs and expected outputs. Second, when applied to test the generated program, the actual output must match the expected output. If an LLM cannot produce correct test cases, it indicates poor test case generation capability.

- Untestable program (codes).

The term untestable program in this paper does not mean that the program itself cannot be tested. Rather, it refers to a generated program for which no valid test cases were generated. Let $T = \{T_1, T_2, \ldots, T_n\}$ be the set of all LLM-generated test cases for a generated program P after one prompt request. If all elements in T are invalid test cases, the program P cannot be tested further. However, if at least one valid test case $T_i$ exists, the program P remains testable, as it can still be tested by $T_i$. In this case, we check whether the valid test cases are correct and classify invalid test cases as incorrect test cases. The untestable program is a special case when we investigate the LLM’s test case generation ability: it indicates that the LLM cannot even produce a valid test case that can be used to test the program.
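The three definitions above (complete, correct, untestable) can be expressed as small predicates over a parsed LLM response. The data structure `GeneratedCase`, the `MISSING` sentinel, and the sample program are hypothetical and introduced only for this sketch; how responses are parsed into inputs and expected outputs is outside its scope.

```python
from dataclasses import dataclass
from typing import Any, Callable, Sequence, Tuple

MISSING = object()  # sentinel: the response gave no expected result


@dataclass
class GeneratedCase:
    inputs: Tuple[Any, ...]
    expected: Any = MISSING


def is_complete(case: GeneratedCase) -> bool:
    """A complete (valid) test case has both inputs and an expected result."""
    return case.expected is not MISSING


def is_correct(case: GeneratedCase, program: Callable[..., Any]) -> bool:
    """Correct = complete, and the actual output matches the expected output."""
    if not is_complete(case):
        return False  # invalid test cases are classified as incorrect
    try:
        return program(*case.inputs) == case.expected
    except Exception:
        return False


def is_untestable(cases: Sequence[GeneratedCase]) -> bool:
    """A program is untestable when none of its generated cases is valid."""
    return not any(is_complete(case) for case in cases)


def program(x):  # hypothetical generated program
    if x > 10:
        x = x + 7
    return x


cases = [
    GeneratedCase((10,), 10),  # correct: boundary input, false path
    GeneratedCase((11,), 18),  # correct: true path
    GeneratedCase((12,)),      # incomplete: no expected result
    GeneratedCase((20,), 20),  # complete but incorrect: actual output is 27
]
print(sum(is_correct(case, program) for case in cases))
print(is_untestable(cases))
```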

The LLM generates multiple test cases in response to the prompt request associated with the program P. Hence, the error rate $E_p$ of the LLM test case generation associated with program P is calculated as in Equation (1), where $N_{t,p}$ represents the total number of test cases generated for the program and $N_{s,p}$ is the number of correct test cases. The error rate helps identify weaknesses in the LLM’s ability to handle specific programs related to their structure, datatype, predicate, or computations.

$$E_p = \frac{N_{t,p} - N_{s,p}}{N_{t,p}} \tag{1}$$

Therefore, the average error rate $AvgE_i$ is calculated by dividing the sum of the error rates per program by the total number of programs $TP_i$ in category i, as shown in Equation (2).

$$AvgE_i = \frac{1}{TP_i} \sum_{p=1}^{TP_i} E_p \tag{2}$$

The untestable program rate can be determined within a category. Equation (3) calculates $R_i$, the percentage of untestable programs within each program structure category. In this equation, $W_i$ represents the total number of untestable programs in code structure category i, and $TP_i$ is the total number of programs in that category i.

$$R_i = \frac{W_i}{TP_i} \times 100\% \tag{3}$$

The incomplete test case rate of an LLM also indicates how well the LLM understands the definition of a complete (valid) test case. Equation (4) shows how to calculate this rate, where $TR_i$ is the incomplete test case rate, $IT_i$ represents the number of all the incomplete test cases generated by the LLM model i, $CT_i$ is the number of all the complete test cases generated by the LLM model i, and $TT_i$ is the number of all the test cases generated by the model i (i.e., the sum of the total test cases generated per category by the model i):

$$TR_i = \frac{IT_i}{TT_i} = \frac{IT_i}{IT_i + CT_i} \tag{4}$$

The programs do not all have the same number of test cases; therefore, the average test case number $AvgTN_i$ within category i is calculated by dividing the total generated test cases $TN_i$ in category i by the total number of programs $TP_i$ in category i, as shown in Equation (5).

$$AvgTN_i = \frac{TN_i}{TP_i} \tag{5}$$
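The metrics of Equations (1) to (5) can be computed with a few small functions, sketched below as one reading of the definitions in this section. The function names are hypothetical, the rates are returned as fractions rather than percentages, and the numbers in the usage lines are made up purely to exercise the code; they are not results from this study.

```python
def error_rate(total_cases: int, correct_cases: int) -> float:
    """Equation (1): share of generated test cases that are not correct."""
    if total_cases <= 0:
        raise ValueError("a program needs at least one generated test case")
    return (total_cases - correct_cases) / total_cases


def average_error_rate(error_rates: list) -> float:
    """Equation (2): mean per-program error rate within one category."""
    return sum(error_rates) / len(error_rates)


def untestable_rate(untestable_programs: int, total_programs: int) -> float:
    """Equation (3): share of programs in a category without any valid test case."""
    return untestable_programs / total_programs


def incomplete_rate(incomplete_cases: int, complete_cases: int) -> float:
    """Equation (4): share of incomplete test cases among all cases of a model."""
    return incomplete_cases / (incomplete_cases + complete_cases)


def average_test_count(total_cases: int, total_programs: int) -> float:
    """Equation (5): average number of generated test cases per program."""
    return total_cases / total_programs


# Illustrative numbers only.
rates = [error_rate(4, 3), error_rate(4, 1)]
print(rates)
print(average_error_rate(rates))
print(untestable_rate(1, 4))
print(incomplete_rate(1, 3))
print(average_test_count(10, 4))
```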

4. Results

An automated program developed in this study executed the LLM-generated test cases on their associated benchmark programs. It ensures that each generated test case runs on its corresponding benchmark program and verifies whether the test passes (i.e., whether the assertion holds true). The underlying assumption is that correctly generated test cases should produce assertions that evaluate to true, rather than being arbitrary cases that fail. The number of passed tests is collected after the automatic execution of the test cases. Notably, the dataset (i.e., the generated programs) is not extracted from any existing repository and is not modeled on any real-world program. The generated programs also do not contain any predefined test cases.
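The execution step described above can be illustrated with a minimal sketch. It is not the authors' tool: the function `count_passed`, the stand-in program `sample_branch`, and the test data are hypothetical, and test cases are simplified to pairs of input arguments and an expected output.

```python
from typing import Callable, Iterable, Tuple

TestCase = Tuple[tuple, int]  # (input arguments, expected output)


def count_passed(program: Callable[..., int], tests: Iterable[TestCase]) -> int:
    """Run each test case against its program and count passing assertions."""
    passed = 0
    for args, expected in tests:
        try:
            if program(*args) == expected:
                passed += 1
        except Exception:
            # A test case that crashes the program counts as not passed.
            pass
    return passed


def sample_branch(x: int) -> int:
    # Hypothetical stand-in for a generated "branch" program.
    if x > 5:
        x = x + 10
    return x


# Hypothetical LLM-generated test cases; the last expected value is wrong.
generated_tests = [((6,), 16), ((5,), 5), ((0,), 10)]
passed = count_passed(sample_branch, generated_tests)
```

Here two of the three test cases pass, so the per-program error rate for this hypothetical example would be one third.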

Under the selected guiding principles, a total of 786 Python programs were generated using seven specified types of control-flow structures: branch, loop, nested loop, sequence, sequential branch, sequential branch with else, and sequential loop. Table 2 presents the average complexity of the generated programs within each category, measured by the average source lines of code (SLOC), and shows the coverage of each program type as a percentage of the total generated programs. Programs that include at most two variables can be used to assess the models’ performance in handling multiple predicates or computations. The categories “sequential branch” and “sequential branch with else” contain various combinations of c-use nodes and compound predicates; therefore, their coverage is higher than that of the other categories. As an initial investigation with our generated benchmark, and to simulate how regular users prompt an LLM for test cases for a program, we used the generated programs to evaluate three language models, GPT-3.5-Turbo, GPT-4o-mini, and GPT-4o, rather than LLMs specifically trained for coding.

Table 2.

Average complexity and coverage across categories.

Table 3 shows the evaluation results, including the total number of test cases generated by each model, the number of complete test cases, and the percentage of incomplete test cases calculated by Equation (4). Among the models, GPT-3.5-Turbo had the highest rate of incomplete test cases at 32.74%, making it the least effective. GPT-4o-mini performed the best, with an incomplete test case rate of 6.1%, while GPT-4o had a similar performance, with an incomplete rate of 7.5%.

Table 3.

Incomplete test case rates across three models.

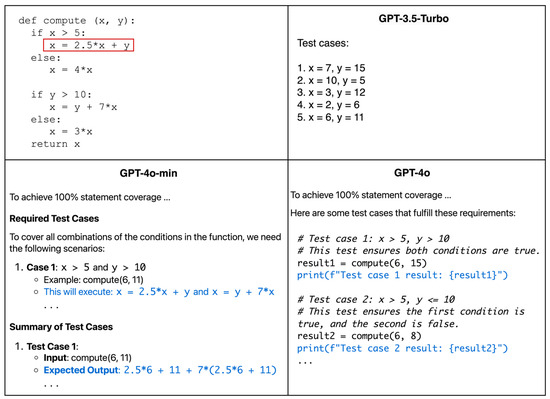

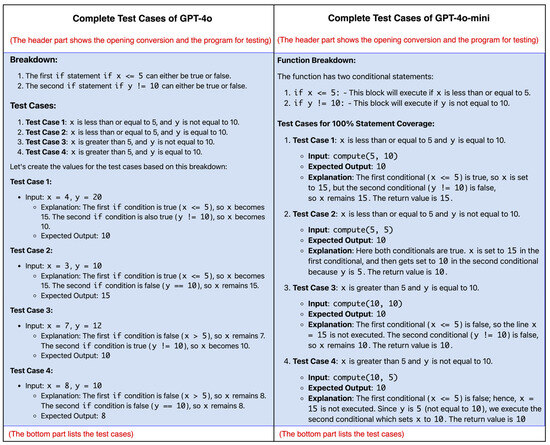

Figure 5 illustrates examples of incomplete test cases, while Figures 6 and 7 present examples of complete test cases. The responses generated by GPT-3.5-Turbo often lacked the detailed explanations included in the outputs of GPT-4o and GPT-4o-mini. These explanations are step-by-step descriptions of how the expected outputs are derived (see Figure 7). Specifically, GPT-4o and GPT-4o-mini presented expected test results either by repeating the function inputs or by elaborating on the computations involving those inputs (see the sentence in blue in Figure 5). However, these elaborations were often inaccurate, highlighting the need for further improvement in the models’ ability to provide reliable and precise explanations alongside the generated test cases.

Figure 5.

GPT-3.5-Turbo produces incomplete (invalid) test cases (i.e., no expected output values in the response) for the program (upper-left corner). In contrast, models from the GPT-4o family generate complete (valid) test cases for the same program.

Figure 6.

An example of complete test cases generated by GPT-3.5-Turbo, consisting solely of inputs and expected outputs, without additional explanations or descriptions.

Figure 7.

Example of complete test cases with detailed explanations, generated by the GPT-4o family for the same program shown in Figure 6.

Table 4 summarizes the performance of three LLMs, GPT-3.5-Turbo, GPT-4o-mini, and GPT-4o, across various categories of control-flow structures, showing their average error rates and number of test cases. They are calculated by Equations (2) and (5), respectively. It is important to note that the evaluation only considers complete test cases generated by each model. As expected, GPT-4o and GPT-4o-mini outperformed GPT-3.5-Turbo in most categories except for the “loop” category. GPT-3.5-Turbo struggled significantly, with error rates exceeding 80%, in the “nested loop,” “sequential branch with else,” and “sequential loop” categories. GPT-4o-mini had the lowest performance in the “nested loop” category but achieved its best results in the “sequential” category. Interestingly, GPT-4o faced challenges in the “loop” category while excelling in the “branch” category, matching the top performance of GPT-4o-mini in this area.

Table 4.

Average test cases and error rate across three models.

Table 5 shows the percentage of untestable programs in each category. GPT-3.5-Turbo had a high percentage of untestable programs in every category (all above 14.29%). GPT-4o-mini performed best overall, with GPT-4o performing similarly. A lower percentage of untestable programs indicates that the LLMs were able to provide complete test cases for those programs; however, this does not guarantee that the generated test cases are all correct. Some complete test cases may still have been generated inaccurately because of additional improper reasoning.

Table 5.

Percentage of untestable programs in every category.

5. Discussion

Our approach automatically evaluates an LLM’s test case generation capabilities based on defined metrics, while providing the flexibility to extend program structures from simple to complex. This flexibility allows us to discover common behaviors, such as iteration, across different program types, providing deeper insights into how LLMs handle similar operations or usage among programs of varying complexity. The automated evaluation identifies areas where the models excel and where they perform poorly, while a qualitative assessment, performed manually, provides a detailed analysis of the models’ outputs. This combined method ensures that we can assess both the accuracy of and the reasoning behind the generated test cases, offering a comprehensive understanding of the strengths and weaknesses of different LLMs. The following sections present our findings from analyzing the responses of the different language models in detail.

5.1. Simple vs. Composite Structure

The following two points highlight the differences among GPT-4o, GPT-4o-mini, and GPT-3.5-Turbo:

- GPT-4o performs better with composite structures, whereas GPT-4o-mini excels with simpler ones (as seen in the average error rate in Table 4).

GPT-4o appears to have an enhanced ability to understand composite programs but tends to generate more erroneous test cases when handling simple structures. This is evident from the higher rate of incorrect test cases produced by GPT-4o. GPT-4o is more likely to provide detailed explanations in its responses, which can also introduce inaccuracies if the reasoning is flawed. This suggests that the level of detail in GPT-4o’s responses may contribute to its higher error rate in simpler programs. The high rates of untestable programs suggest that GPT-4o has difficulty interpreting certain programs, resulting in responses where expected outcomes are absent.

- Both the GPT-4o and GPT-4o-mini models produce more complete test cases than GPT-3.5-Turbo, particularly when dealing with composite program structures.

In the “sequential branch” category (see Table 5), the performance gap between the GPT-4o family models and GPT-3.5-Turbo is particularly evident, highlighting the enhanced ability of the GPT-4o family in managing complex control flows. This indicates that while GPT-4o has a strong capacity for dealing with complexity, there is still room for improvement in handling simpler scenarios and reducing the rates of untestable cases.

5.2. Computation

The following two observations summarize our findings regarding computation:

- Computation tasks continue to be a challenge in test case generation.

Understanding computational tasks and accurately calculating their outcomes remains a challenge for test case generation, even though later GPT versions [39] have been reported to improve on the poor interpretation of arithmetic computation seen in earlier GPT models. Our evaluation found that similar computational difficulties persist in the test case results for the computation nodes (c-use) within the generated programs. Because errors are frequently observed even in our dataset, which consists primarily of relatively simple structures, arithmetic-related inaccuracies are evidently still prevalent. Further refinement is therefore needed in generating test cases for programs involving arithmetic computations.

- Computations involving two c-use variables produce incorrect results more easily.

Specifically, programs involving the c-use of two variables, such as “x = x + y + 5”, often lead to incorrect expected results in the generated test cases regardless of the overall program complexity. Furthermore, scenarios that include multiple c-use nodes within composite structures, such as nested loops or sequential loops, can further amplify these computational challenges during test case generation. In summary, computational challenges are common across all program types. Addressing these issues will be crucial to enhancing the overall robustness of LLM-generated test cases, particularly for programs with heavy arithmetic operations or composite structures.
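To make the two-variable case concrete, the sketch below embeds the statement `x = x + y + 5` in a small branch program and derives the expected outputs by executing the program rather than by hand. The function `two_cuse_branch` and the chosen inputs are hypothetical and are not taken from the benchmark.

```python
def two_cuse_branch(x: int, y: int) -> int:
    # Hypothetical benchmark-style program: one predicate and one computation
    # node that uses two variables (c-use of x and y).
    if x > 5:
        x = x + y + 5
    return x


def derive_expected(inputs):
    # Ground-truth expected outputs come from running the program itself.
    return {args: two_cuse_branch(*args) for args in inputs}


expected = derive_expected([(6, 2), (5, 2), (10, -3)])
```

A generated test case whose expected value differs from the corresponding entry in `expected` would count as incorrect under the evaluation described earlier.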

5.3. Boundary and Comparison

We observed that all language models perform relatively well in detecting the boundary values of a variable within simple conditional statements (e.g., x > 5 or y < 10). The LLMs were able to select appropriate integer values to identify boundaries even when handling more complex structures. For instance, Table 6 presents an example of test cases generated by all models in the sequential branch category. It is worth noting that many successful boundary detections occurred in conditional statements that do not involve any computation, in contrast to predicates such as x + y > 5.

Table 6.

Inputs for a program with sequential branch structure.
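As a small illustration of boundary detection for a computation-free predicate such as x > 5, the sketch below picks the integers just below, at, and just above the threshold and records the program's behavior for each. The helper `boundary_candidates` and the program `simple_branch` are hypothetical and do not reproduce the contents of Table 6.

```python
def boundary_candidates(threshold: int):
    # Integers just below, at, and just above the threshold of `x > threshold`.
    return [threshold - 1, threshold, threshold + 1]


def simple_branch(x: int) -> int:
    # Hypothetical program with a computation-free predicate.
    if x > 5:
        return x + 1
    return x


outcomes = {x: simple_branch(x) for x in boundary_candidates(5)}
```

Only the input 6 takes the true branch, so a test suite containing 5 and 6 exercises both sides of the boundary.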

5.4. Iteration

All the language models demonstrate relatively high error rates and high untestable program rates in all the loop-like structures. These outcomes indicate that LLMs face challenges in handling iterations, particularly in programs that require continuous computation. Given that arithmetic and computation are already challenging for these models, it is not surprising to see poor performance when loops are introduced. Iterations inherently require repeated computations and maintaining the state over multiple cycles, which complicates the task for language models. This issue can be examined from two perspectives, as follows:

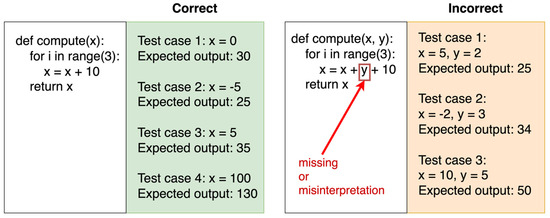

- Adding a c-use variable leads to incorrect results in computations.

Figure 8 illustrates that GPT-3.5-Turbo correctly determines that three iterations should result in an increase of 30 at the end of the loop. However, after the variable “y” is added to the statement, GPT-3.5-Turbo fails to interpret “y” within the computation statement, and the expected output becomes incorrect. This suggests a difficulty in consistently integrating new variables into iterative logic.

Figure 8.

Correct and incorrect test cases generated by GPT-3.5-Turbo.
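The kind of program pair discussed for Figure 8 can be approximated with the following sketch. The exact programs in the figure are not reproduced here; `loop_constant` and `loop_with_y` are hypothetical stand-ins in which three iterations each add 10, and the second variant additionally adds a second variable y in every iteration.

```python
def loop_constant(x: int) -> int:
    # Three iterations, each adding 10: the total increase is 30.
    for _ in range(3):
        x = x + 10
    return x


def loop_with_y(x: int, y: int) -> int:
    # Same loop with an added c-use variable y in the computation statement.
    for _ in range(3):
        x = x + y + 10
    return x


increase_constant = loop_constant(0)
increase_with_y = loop_with_y(0, 2)
```

In this sketch the correct expected output grows by 3 * (y + 10) rather than by 30 once y enters the statement, which is the adjustment a generated test case has to make.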

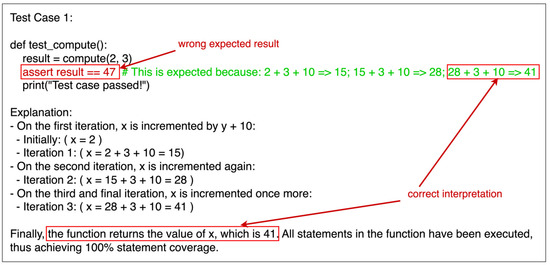

- The GPT-4o family shows partial success in handling iterations.

Figure 9 shows the test case generated by GPT-4o for the same program shown on the right-hand side of Figure 8. GPT-4o performs the correct computation at each iteration, but the expected result (i.e., the asserted result value) is wrong. This discrepancy indicates that GPT-4o may rely on separate generative mechanisms: one for performing arithmetic operations (i.e., addition) and another for generating the expected output. While it handles the arithmetic correctly, there is a disconnect when it comes to aligning the output with what is actually computed. This inconsistency suggests that the model’s internal representation for computation and output generation may not be fully integrated.

Figure 9.

The test case and its explanations generated by GPT-4o.
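A mismatch between a step-by-step explanation and the asserted value can be detected mechanically. The sketch below is one hypothetical way to do so: it replays a loop of the form `x = x + y + 10` (an assumed stand-in, not the program in Figure 9), records the state after each iteration, and compares the final state with the value a test case asserts.

```python
from typing import List


def trace_loop(x: int, y: int, iterations: int = 3) -> List[int]:
    # Record the value of x after every iteration of the hypothetical loop.
    states = []
    for _ in range(iterations):
        x = x + y + 10
        states.append(x)
    return states


def assertion_matches_trace(states: List[int], asserted: int) -> bool:
    # The asserted result should equal the last value in the step-by-step trace.
    return bool(states) and states[-1] == asserted


states = trace_loop(0, 2)
```

A test case that explains the per-iteration values correctly but asserts any value other than the last traced state would fail this consistency check.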

GPT-4o-mini, on the other hand, shows mixed performance: only some of its generated test cases are correct. This suggests that GPT-4o-mini can correctly handle some aspects of iteration but may fail when multiple variables and computations are involved. The inconsistency in its output highlights the ongoing challenges in handling iterations effectively, particularly in programs with more complex looping and variable interactions.

These findings suggest that despite improvements, iterations involving repeated computations remain a significant obstacle for LLMs. Improving how these models handle state changes, variable usages, and multistep calculations within loops will be crucial for advancing their test case generation capabilities.

5.5. Limitations

Although users of the GBCV approach can rely on guiding principles to make decisions on various aspects, such as variable selection or Control-Flow Graph (CFG) construction, the influence of human judgment introduces potential bias. These biases originate from individual perspectives and subjective interpretations of the guiding principles. However, they can be mitigated through collaborative discussions among multiple users, ensuring a more balanced and objective approach. By aligning on investigation goals and critically evaluating different viewpoints, users can refine their decisions, enhance consistency, and improve the overall reliability of the analysis. In addition, the behaviors we discovered are limited to the GPT model family, so the conclusions might not hold universally across existing LLMs.

6. Conclusions

Our GBCV approach addresses the critical lack of a benchmark dataset for evaluating the test case generation capabilities of LLMs. By systematically assembling basic control-flow blocks combined with variable usage, we were able to use a wide range of programs to comprehensively evaluate LLMs’ performance in four key areas: computation, boundary detection, comparison, and iteration. This approach provides a flexible, automated method to assess the correctness and effectiveness of LLMs when generating test cases for a diverse set of program structures.

In summary, GPT-4o and GPT-4o-mini outperformed GPT-3.5-Turbo, especially in handling more complex program structures. However, significant challenges remain, particularly in generating correct test cases for programs involving arithmetic computations and iterations. Handling computation is still one of the primary obstacles across all models. Similarly, high error rates and untestable programs were observed for loop-based structures, showing the ongoing difficulty LLMs face in maintaining a consistent state during repeated iterations. While GPT-4o and GPT-4o-mini have demonstrated some level of success in boundary detection and iteration interpretation, there is still considerable progress to be made before these models can be considered robust tools for automatic test case generation.

7. Future Work

Our goal is to improve the ability of LLMs to generate correct and comprehensive test cases, thereby making them more useful for software testing tasks in increasingly complex scenarios. For future work, we plan to enhance LLM test case generation capabilities through several strategies. First, we will explore prompt engineering to optimize how prompts are structured and to better guide LLMs in generating more accurate test cases. Second, because producing no valid test cases for a program (i.e., an untestable program) also reflects on an LLM’s generation ability, we will further investigate why such cases occur. Additionally, step-wise refinement will be added to incrementally improve test case generation, allowing LLMs to progressively enhance their understanding and output.

Another focus will be on creating more sophisticated metrics and a broader benchmark dataset. Regarding metrics, we can add branch and statement coverage to examine various execution paths. Further test case quality attributes, such as robustness or execution efficiency, should also be measured to understand the quality of an LLM’s generated tests. To create a benchmark with wider coverage, we will extend GBCV to higher-complexity choices, including covering multiple control-flow structures, integrating diverse comparisons, adding further logical operations, and using more than three variables. To generalize our findings, we will apply GBCV to other LLMs; specifically, code generation models such as CodeT5 or Codex will be investigated to determine whether our findings reflect common behaviors.

Author Contributions

Conceptualization, H.-F.C.; methodology, H.-F.C.; software, H.-F.C.; investigation, H.-F.C. and M.S.S.; writing—original draft, H.-F.C.; writing—review and editing, H.-F.C. and M.S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tassey, G. The Economic Impacts of Inadequate Infrastructure for Software Testing; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2002.

- Grano, G.; Scalabrino, S.; Gall, H.C.; Oliveto, R. An Empirical Investigation on the Readability of Manual and Generated Test Cases. In Proceedings of the 26th International Conference on Program Comprehension, ICPC, Gothenburg, Sweden, 27 May–3 June 2018; Zurich Open Repository and Archive (University of Zurich): Zürich, Switzerland, 2018.

- Tufano, M.; Drain, D.; Svyatkovskiy, A.; Deng, S.K.; Sundaresan, N. Unit Test Case Generation with Transformers and Focal Context. arXiv 2020, arXiv:2009.05617. Available online: http://arxiv.org/abs/2009.05617 (accessed on 17 November 2024).

- Winkler, D.; Urbanke, P.; Ramler, R. Investigating the Readability of Test Code. Empir. Softw. Eng. 2024, 29, 53.

- Chen, Y.; Hu, Z.; Zhi, C.; Han, J.; Deng, S.; Yin, J. ChatUniTest: A Framework for LLM-Based Test Generation. arXiv 2023, arXiv:2305.04764. Available online: http://arxiv.org/abs/2305.04764 (accessed on 17 November 2024).

- Siddiq, M.L.; Da Silva Santos, J.C.; Tanvir, R.H.; Ulfat, N.; Al Rifat, F.; Carvalho Lopes, V. Using Large Language Models to Generate JUnit Tests: An Empirical Study. In Proceedings of the 28th International Conference on Evaluation and Assessment in Software Engineering, Salerno, Italy, 18–21 June 2024; pp. 313–322.

- Daka, E.; Fraser, G. A Survey on Unit Testing Practices and Problems. In Proceedings of the International Symposium on Software Reliability Engineering, Naples, Italy, 3–6 November 2014; pp. 201–211.

- Anand, S.; Burke, E.K.; Chen, T.Y.; Clark, J.; Cohen, M.B.; Grieskamp, W.; Harman, M.; Harrold, M.J.; McMinn, P.; Bertolino, A.; et al. An Orchestrated Survey of Methodologies for Automated Software Test Case Generation. J. Syst. Softw. 2013, 86, 1978–2001.

- Kaur, A.; Vig, V. Systematic Review of Automatic Test Case Generation by UML Diagrams. Int. J. Eng. Res. Technol. 2012, 1, 6.

- Brunetto, M.; Denaro, G.; Mariani, L.; Pezzè, M. On Introducing Automatic Test Case Generation in Practice: A Success Story and Lessons Learned. J. Syst. Softw. 2021, 176, 110933.

- Li, J.; Li, G.; Li, Y.; Jin, Z. Structured Chain-of-Thought Prompting for Code Generation. ACM Trans. Softw. Eng. Methodol. 2024, 34, 1–23.

- Li, J.; Li, Y.; Li, G.; Jin, Z.; Hao, Y.; Hu, X. SKCODER: A Sketch-Based Approach for Automatic Code Generation. In Proceedings of the 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE), Melbourne, VIC, Australia, 14–20 May 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 2124–2135.

- Li, J.; Zhao, Y.; Li, Y.; Li, G.; Jin, Z. AceCoder: Utilizing Existing Code to Enhance Code Generation. Available online: https://api.semanticscholar.org/CorpusID:257901190 (accessed on 17 November 2024).

- Dong, Y.; Jiang, X.; Jin, Z.; Li, G. Self-Collaboration Code Generation via ChatGPT. arXiv 2023, arXiv:2304.07590.

- Chen, Y.; Zhang, R.; Yang, K.; Yasunaga, M.; Wang, D.; Li, Z.; Metcalfe, J.; Li, I.; Yao, Q.; Roman, S.; et al. Spider: A Large-Scale Human-Labeled Dataset for Complex and Cross-Domain Semantic Parsing and Text-To-SQL Task. arXiv 2018, arXiv:1809.08887.

- Li, J.; Hui, B.; Qu, G.; Li, B.; Yang, J.; Li, B.; Wang, B.; Qin, B.; Cao, R.; Geng, R.; et al. Can LLM Already Serve as a Database Interface? A BIg Bench for Large-Scale Database Grounded Text-To-SQLs. arXiv 2023, arXiv:2305.03111.

- Wang, W.; Yang, C.; Wang, Z.; Huang, Y.; Chu, Z.; Song, D.; Zhang, L.; Chen, A.R.; Ma, L. TESTEVAL: Benchmarking Large Language Models for Test Case Generation. arXiv 2024, arXiv:2406.04531.

- Anand, S.; Harrold, M.J. Heap Cloning: Enabling Dynamic Symbolic Execution of Java Programs. In Proceedings of the 2011 26th IEEE/ACM International Conference on Automated Software Engineering (ASE 2011), Lawrence, KS, USA, 6–10 November 2011.

- Fraser, G.; Arcuri, A. Evolutionary Generation of Whole Test Suites. In Proceedings of the 2011 11th International Conference on Quality Software, Madrid, Spain, 13–14 July 2011.

- Fraser, G.; Arcuri, A. EvoSuite. In Proceedings of the 19th ACM SIGSOFT Symposium and the 13th European Conference on Foundations of Software Engineering, SIGSOFT/FSE ’11, Szeged, Hungary, 5–9 September 2011.

- Almasi, M.M.; Hemmati, H.; Fraser, G.; Arcuri, A.; Benefelds, J. An Industrial Evaluation of Unit Test Generation: Finding Real Faults in a Financial Application. In Proceedings of the 2017 IEEE/ACM 39th International Conference on Software Engineering: Software Engineering in Practice Track (ICSE-SEIP), Buenos Aires, Argentina, 20–28 May 2017.

- Panichella, A.; Panichella, S.; Fraser, G.; Sawant, A.A.; Hellendoorn, V.J. Replication Package of “Revisiting Test Smells in Automatically Generated Tests: Limitations, Pitfalls, and Opportunities”; Zenodo (CERN European Organization for Nuclear Research): Geneva, Switzerland, 2020.

- Schäfer, M.; Nadi, S.; Eghbali, A.; Tip, F. An Empirical Evaluation of Using Large Language Models for Automated Unit Test Generation. arXiv 2023, arXiv:2302.06527. Available online: https://arxiv.org/abs/2302.06527 (accessed on 10 December 2023).

- Yuan, Z.; Lou, Y.; Liu, M.; Ding, S.; Wang, K.; Chen, Y.; Peng, X. No More Manual Tests? Evaluating and Improving ChatGPT for Unit Test Generation. arXiv 2023.

- Xie, Z.; Chen, Y.; Chen, Z.; Deng, S.; Yin, J. ChatUniTest: A ChatGPT-Based Automated Unit Test Generation Tool. arXiv 2023, arXiv:2305.04764.

- Vikram, V.; Lemieux, C.; Padhye, R. Can Large Language Models Write Good Property-Based Tests? arXiv 2023, arXiv:2307.04346.

- Koziolek, H.; Ashiwal, V.; Bandyopadhyay, S.; Chandrika, K.R. Automated Control Logic Test Case Generation Using Large Language Models. arXiv 2024, arXiv:2405.01874.

- Plein, L.; Ouédraogo, W.C.; Klein, J.; Bissyandé, T.F. Automatic Generation of Test Cases Based on Bug Reports: A Feasibility Study with Large Language Models. arXiv 2024, arXiv:2310.06320.

- Wang, C.; Pastore, F.; Göknil, A.; Briand, L.C. Automatic Generation of Acceptance Test Cases from Use Case Specifications: An NLP-Based Approach. arXiv 2019, arXiv:1907.08490.

- Lan, W.; Wang, Z.; Chauhan, A.; Zhu, H.; Li, A.; Guo, J.; Zhang, S.; Hang, C.-W.; Lilien, J.; Hu, Y.; et al. UNITE: A Unified Benchmark for Text-To-SQL Evaluation. arXiv 2023, arXiv:2305.16265.

- Chen, M.I.-C.; Tworek, J.; Jun, H.; Yuan, Q.; Henrique; Kaplan, J.; Edwards, H.; Burda, Y.; Joseph, N.; Brockman, G.; et al. Evaluating Large Language Models Trained on Code. arXiv 2021, arXiv:2107.03374.

- Liu, J.; Xia, C.S.; Wang, Y.; Zhang, L. Is Your Code Generated by ChatGPT Really Correct? Rigorous Evaluation of Large Language Models for Code Generation. Available online: https://openreview.net/forum?id=1qvx610Cu7 (accessed on 10 May 2024).

- Yan, W.; Liu, H.; Wang, Y.; Li, Y.; Chen, Q.; Wang, W.; Lin, T.; Zhao, W.; Zhu, L.; Deng, S.; et al. CodeScope: An Execution-Based Multilingual Multitask Multidimensional Benchmark for Evaluating LLMs on Code Understanding and Generation. arXiv 2023, arXiv:2311.08588.

- Watson, A.H.; Wallace, D.R.; McCabe, T.J. Structured Testing: A Testing Methodology Using the Cyclomatic Complexity Metric; NIST Special Publication: Gaithersburg, MD, USA, 1996; p. 500.

- Shao, J.; Wang, Y. A New Measure of Software Complexity Based on Cognitive Weights. Can. J. Electr. Comput. Eng. 2003, 28, 69–74.

- Misra, S. A Complexity Measure Based on Cognitive Weights. Int. J. Theor. Appl. Comput. Sci. 2006, 1, 1–10.

- Wei, J.S.; Wang, X.; Schuurmans, D.; Bosma, M.; Chi, E.H.; Le, Q.V.; Zhou, D. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. arXiv 2022, arXiv:2201.11903.

- Winterer, D.; Zhang, C.; Su, Z. On the Unusual Effectiveness of Type-Aware Operator Mutations for Testing SMT Solvers. Proc. ACM Program. Lang. 2020, 4, 1–25.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).