Abstract

It has become increasingly preferable to construct bespoke software development processes according to the specifications of the project at hand; however, defining a separate process for each project is time consuming and costly. One solution is to use a Software Process Line (SPrL), a specialized Software Product Line (SPL) in the context of process definition. However, instantiating an SPrL is a slow and error-prone task if performed manually; an adequate degree of automation is therefore essential, which can be achieved by using a Model-Driven Development (MDD) approach. Furthermore, we have identified specific shortcomings in existing approaches for SPrL Engineering (SPrLE). To address the identified shortcomings, we propose a novel MDD approach specifically intended for SPrLE; this approach can be used by method engineers and project managers to first define an SPrL, and then construct custom processes by instantiating it. The proposed approach uses a modeling framework for modeling an SPrL, and applies transformations to provide a high degree of automation when instantiating the SPrL. The proposed approach addresses the shortcomings by providing an adequate coverage of four activities, including Feasibility analysis, Enhancing the core process, Managing configuration complexity, and Post-derivation enhancement. The proposed approach has been validated through an industrial case study and an experiment; the results have shown that the proposed approach can improve the processes being used in organizations, and is rated highly as to usefulness and ease of use.

1. Introduction

The software process (also referred to as software development methodology/method) is a critical factor in developing quality software systems, as it helps transform user needs into a software product that satisfies those needs [1]. There is no software process suitable for all development situations [2], as the suitability of a process depends on various product, project, and organizational characteristics [3]. Thus, building custom processes has become critical for software development organizations [4]; however, defining a specific process for each and every project is a time-consuming and costly endeavor [5]. Method tailoring is an effective approach for building bespoke software processes [6].

Software Process Line (SPrL) is a relatively new method tailoring approach that aims to tackle the deficiencies of traditional tailoring approaches (e.g., deficiency in supporting certain process modifications such as replacing or adding new elements) [7]. In the context of software process engineering, an SPrL is a special Software Product Line (SPL) [8,9] that increases reuse opportunities and decreases the adaptation effort [10]. According to [11], an SPrL is “a set of software development processes that share a common, managed set of features satisfying the specific needs of an organization and that are developed from a common set of core assets in a prescribed way”. Based on this definition, SPrLs focus on identifying the variabilities among a set of processes and modeling them as a core process; the core process is then instantiated to produce a bespoke process. Although the SPrL approach can potentially help define specific processes faster and with less effort [12], its successful implementation requires that organizations first examine its suitability for their specific needs [13]. However, existing SPrL approaches fail to dedicate proper attention to this issue.

If done manually, instantiating an SPrL for constructing project-specific processes is time consuming and error prone [14]; therefore, it is important that a certain degree of automation be provided. This can be achieved by using the Model-Driven Development (MDD) approach for SPrL Engineering (SPrLE): the tacit tailoring knowledge of experts is captured in the form of transformations, which are automatically applied to the model of the SPrL to produce custom processes [12]. MDD has many advantages, such as increasing productivity, managing complexity, and enhancing portability [4]. Due to its potential benefits, MDD has already been used in SPrLE approaches [12]; however, as discussed in Section 4.3, existing approaches are deficient in that many of them lack adequate support for the features that are considered essential in MDD, such as multi-level modeling [15,16,17]. Multi-level modeling has been used for multi-stage configuration of software product lines and can help manage configuration complexity [18,19,20]; providing adequate support for multi-level modeling is also desirable in the context of software processes, as the process model can be gradually refined from a fully abstract specification to a fully concrete one and the output of each level can be polished, as needed, at the process engineer’s discretion.

The core process is a key part of an SPrL [14]. In most SPrLE approaches, a bottom-up approach is applied in which the existing processes of the organization are analyzed to identify and model their commonalities and variabilities in the form of a core process. Since existing processes can be afflicted with shortcomings, the bottom-up approach can result in a less-than-appropriate core process. Therefore, it is required that the core process be improved by adding additional process elements. Furthermore, the process instantiated from the core process may not satisfy all organizational/project needs, resulting in delta (remaining) requirements. It is therefore essential to define specific mechanisms for enhancing the configured process to meet these delta requirements.

Through analyzing existing model-driven SPrLE approaches (as reported in Section 2), we have identified the following four activities as the main areas that require improvement, thus providing the motivation for this research:

- Feasibility analysis: Examining the suitability and feasibility of the SPrLE approach in the target organization.

- Enhancing the core process: Extension and improvement of the produced core process.

- Managing configuration complexity: Managing the complexities involved in variability resolution.

- Post-derivation enhancement: Enhancing the configured process to meet the remaining requirements.

The main contribution of this paper is a novel MDD approach for SPrLE that can be used by process engineers for defining the SPrL, and also by project managers for developing custom processes. A high-level, preliminary version of this approach was introduced in [14]; the version introduced herein is the complete and detailed approach, refined and validated through empirical evaluation. The approach consists of the following: (1) a framework for modeling SPrLs, in which the chain of models created throughout the proposed approach are represented, and (2) a process for applying the framework to create an SPrL and develop specific processes by instantiating the SPrL. The proposed approach is performed in two phases: Domain Engineering (DE) and Application Engineering (AE). During DE, the commonalities and variabilities among existing processes are analyzed to create a core process (also referred to as the common architecture or the reference architecture). During AE, bespoke processes are automatically built by executing transformations for resolving the variabilities defined in the core process (based on specific project contexts). The framework and the process are defined with the specific aim of providing adequate coverage of the four activities mentioned above (as further discussed in Section 4.3).

The proposed approach has been evaluated through a case study and an experiment. Through the case study, we have evaluated the proposed approach as to the challenges of using it for developing an SPrL, and its potential for improving the processes currently in use. Through the experiment, we have compared process instantiation by the proposed approach (AE phase) with the ad hoc, manual approach that is commonly used to construct bespoke processes. The results have shown that the proposed approach can indeed result in improvements in the processes currently in use, and is also useful and easy to use in real situations. In addition to the case study and the experiment, we have also compared our approach to existing ones to show its superiorities in addressing the four activities mentioned above.

The rest of this paper is structured as follows: Section 2 provides a brief analytical survey of the related research; Section 3 introduces the proposed framework and proposes a process for applying it; Section 4 presents the validation of the approach in three parts, including a case study (Section 4.1), an experiment (Section 4.2), and a comparative analysis (Section 4.3); Section 5 provides a discussion on the benefits and limitations of the proposed approach; and Section 6 presents the concluding remarks and suggests ways for furthering this research.

2. Related Research

Existing approaches for model-driven engineering of SPrLs are briefly introduced in this section. Their shortcomings are then listed, forming the main motivation for this research. Furthermore, further explanations about these approaches are provided in Section 4.3, where we compare them with the proposed approach based on the support that they provide for the four activities identified as the main areas that require improvement.

In [10], a meta-process called CASPER and a set of process practices for creating adaptable process models are proposed. As expected, there are two main subprocesses in CASPER: Domain Engineering and Application Engineering. DE is an iterative process focused on capturing software process domain knowledge and developing reusable core assets, and includes five activities: process context analysis, process feature analysis, scoping analysis, reference process model design, and production strategy implementation. In AE, a specific process is automatically produced according to the requirements of the project at hand by using a reference process model and a set of transformation rules.

In [12], an approach is proposed in which the variabilities of process models are represented via feature models, and the software process is modeled in eSPEM; MDD is used for supporting automatic execution of transformation rules. In [6], an approach is proposed for developing an architecture for the target SPrL and then deriving a specific process from this architecture; this approach provides extensions to the SPEM Metamodel for clearly representing the commonalities and variabilities in the SPrL.

In [21], an approach is proposed for use by Software Process Consulting Organizations (SPCOs) to define reusable processes; SPCOs can use these processes to provide support for other organizations by helping them define, deploy, and improve their software processes.

In [22], the use of variability operations (such as RenameElement and RemoveElement) is examined for constructing SPrLs in the context of VM-XT; VM-XT is a flexible software process model that is considered as the de facto standard for public-sector IT projects in Germany. In [23], the results of [22] are used for developing an approach for constructing flexible SPrLs; this approach has been validated by application to VM-XT. In this approach, a variability metamodel is created and embedded in the VM-XT metamodel. This adapted metamodel includes a set of variability operations that are applied to the root variant (the reference model) to create specific process variants.

In [24], an algorithm called the V-algorithm is proposed for discovering SPrLs from process logs; in this approach, the log is split into three clusters that are used for identifying the common and variable parts of processes. The approach proposed in [25] supports variability management in software processes, automatic derivation of software processes, and also automatic transformation of the derived processes into workflow specifications that can be executed by workflow engines.

In [26], a mega-model (a model whose elements represent models and transformations) is proposed for modeling and evolution of SPrLs in small software organizations. There are certain complexities involved in defining and applying tailoring transformations in current transformation languages; hence, process engineers and project managers find it too difficult to define the transformations. To address this issue, an MDE-based tool is proposed in [27] that uses the Mega-model introduced in [26] to hide the complexities involved in defining the transformations.

In [28], a learning approach called Odyssey-ProcessCase is proposed, which uses Case-Based Reasoning (CBR) and a rule-based system to resolve SPrL variabilities. In [29], a systematic software process reuse approach is proposed that combines SPrLE and Component Based Process Definition (CBPD) techniques to manage process-domain variability and modularity. In [30], the Odyssey tool is proposed for supporting SPrLE activities; the usefulness and ease of use of the tool has been evaluated through an experiment.

In [31], an approach called Meduse is proposed for tailoring development processes according to project needs; the approach is based on SPLE and method engineering techniques. Process variabilities are specified as a feature model during the domain analysis phase; Pure Delta-Oriented Programming is then used to deal with process variabilities during the process domain implementation phase. In [32], integration of process engineering and variability management is investigated for tailoring safety-oriented processes; to this end, an Eclipse plugin is proposed by integrating Eclipse Process Framework Composer (EPFC) tool with Base Variability Resolution (BVR). The tool thus produced has been validated through applying it for engineering a safety-oriented process line for space projects.

A relatively small number of approaches focus on proposing an SPrL for a specific domain; in other words, these approaches do not deal with how to define and configure SPrLs. In [33], a canonical software process family based on the Unified Process (UP) is proposed to support software process tailoring; the UP-process line is constructed based on the CASPER meta-process [10]. In [34], an SPrL is proposed for engineering service-oriented products; an environment is also developed to support the proposed SPrL.

The main motivation for this research is the observation that existing SPrLE approaches need to be improved in several aspects:

- None of the approaches provides mechanisms for examining the suitability and feasibility of SPrLs for the target organization; they suppose that SPrLs have already been deemed as suitable for the target organization. Therefore, they begin by identifying the commonalities and variabilities among the processes; however, adopting the SPrL approach without prior assessment of its suitability can lead to failure [13].

- The bottom-up approach is the predominant approach used for creating a core process: In this approach, processes that already exist in the problem domain are used for identifying the commonalities and variabilities. However, this approach can result in an inappropriate core process, as existing processes may not be ideal. The top-down approach attempts to define the commonalities and variabilities in a particular domain from scratch (based on general process frameworks), thus raising the chances of missing important details [6]. The bottom-up and top-down approaches can complement each other; however, a synergistic combination of the two has never been attempted in SPrLE approaches.

- In most of the approaches, configuration complexity is solely managed via implementing a set of transformations for automatic resolution of variabilities (e.g., [10,12]). As demonstrated in SPLE approaches, multi-stage configuration can be used effectively as a mechanism for managing configuration complexity; however, existing SPrLE approaches lack adequate support for this sort of configuration.

- The configured process may not satisfy all organizational/project needs. Therefore, specific mechanisms should be defined for enhancing the configured process to meet the remaining requirements; a feature that is completely ignored by existing SPrLE approaches.

Research on software product lines is abundant, and SPL Engineering (SPLE) approaches can indeed help ameliorate some of the deficiencies found in SPrLE approaches. For instance, multi-stage configuration is currently employed in SPLE for managing configuration complexity, and it can benefit existing SPrLE approaches as well [35,36,37]; furthermore, some of the tools used in SPLE, such as FeatureIDE [38], have been successfully employed in some SPrLE approaches (e.g., [39]). However, existing model-driven SPLE approaches (such as [40,41,42,43]) are not mature yet, and the problems listed above are observed in these approaches as well. Therefore, even though SPLE techniques can be used for improving existing SPrLE approaches (or for proposing new ones), migrating the currently available model-driven SPLE approaches to the process domain (thereby producing model-driven SPrLE versions) is not likely to improve the status quo.

3. Proposed Model-Driven Approach for SPrLE

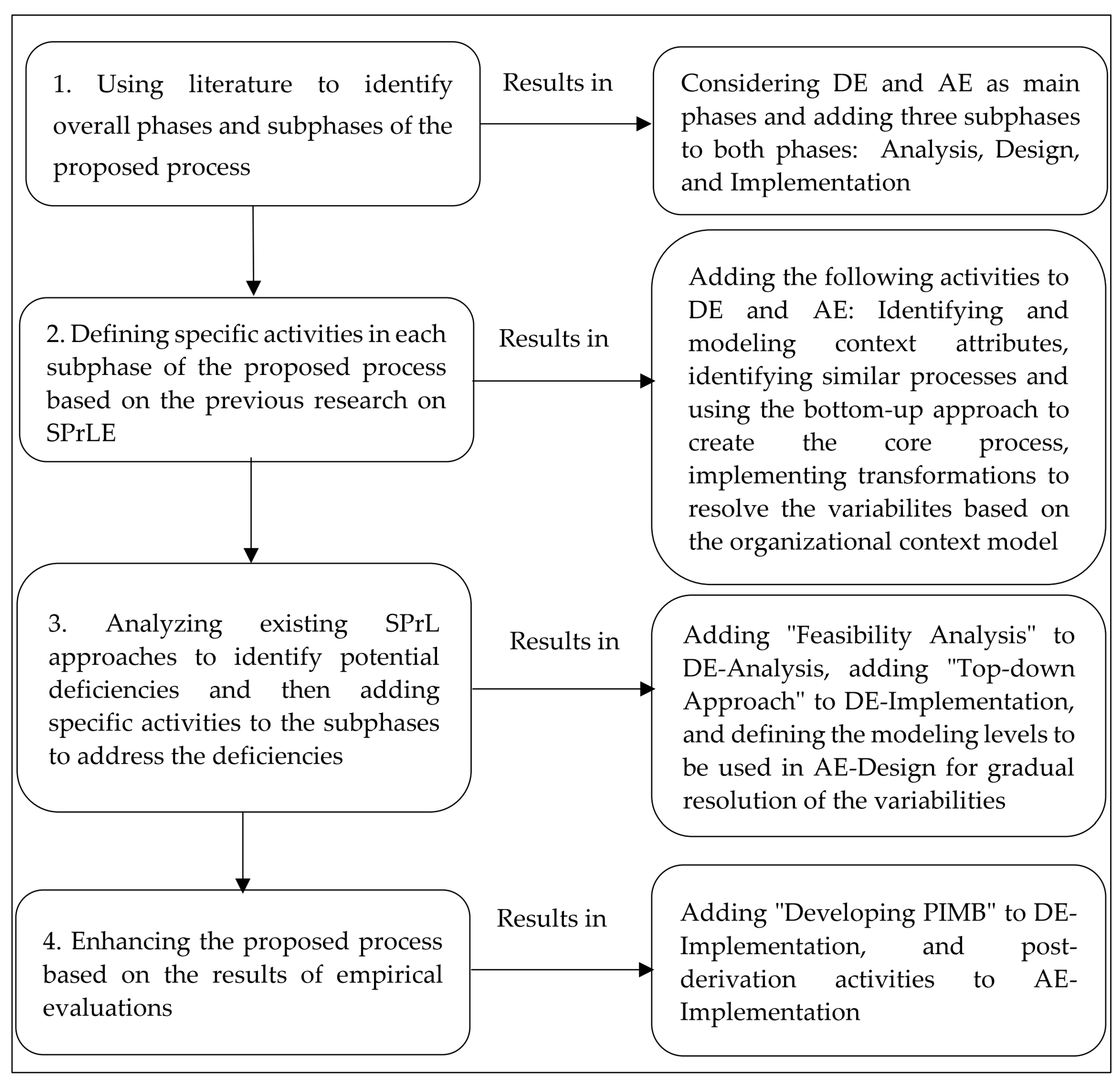

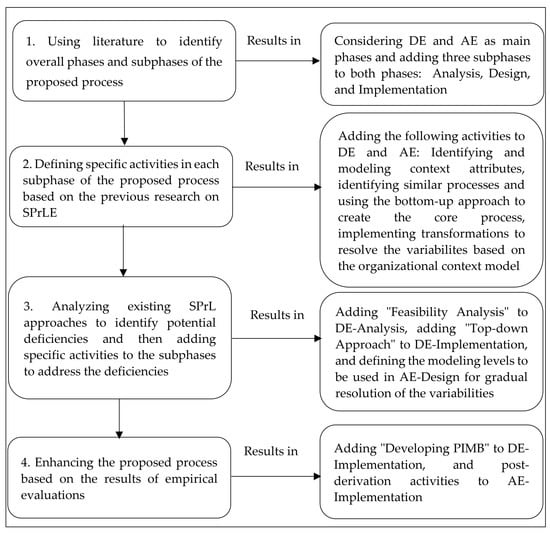

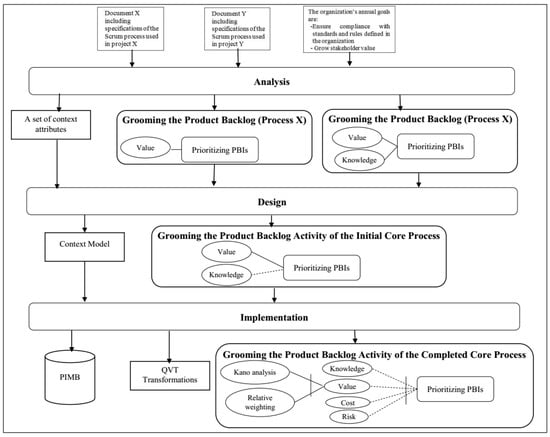

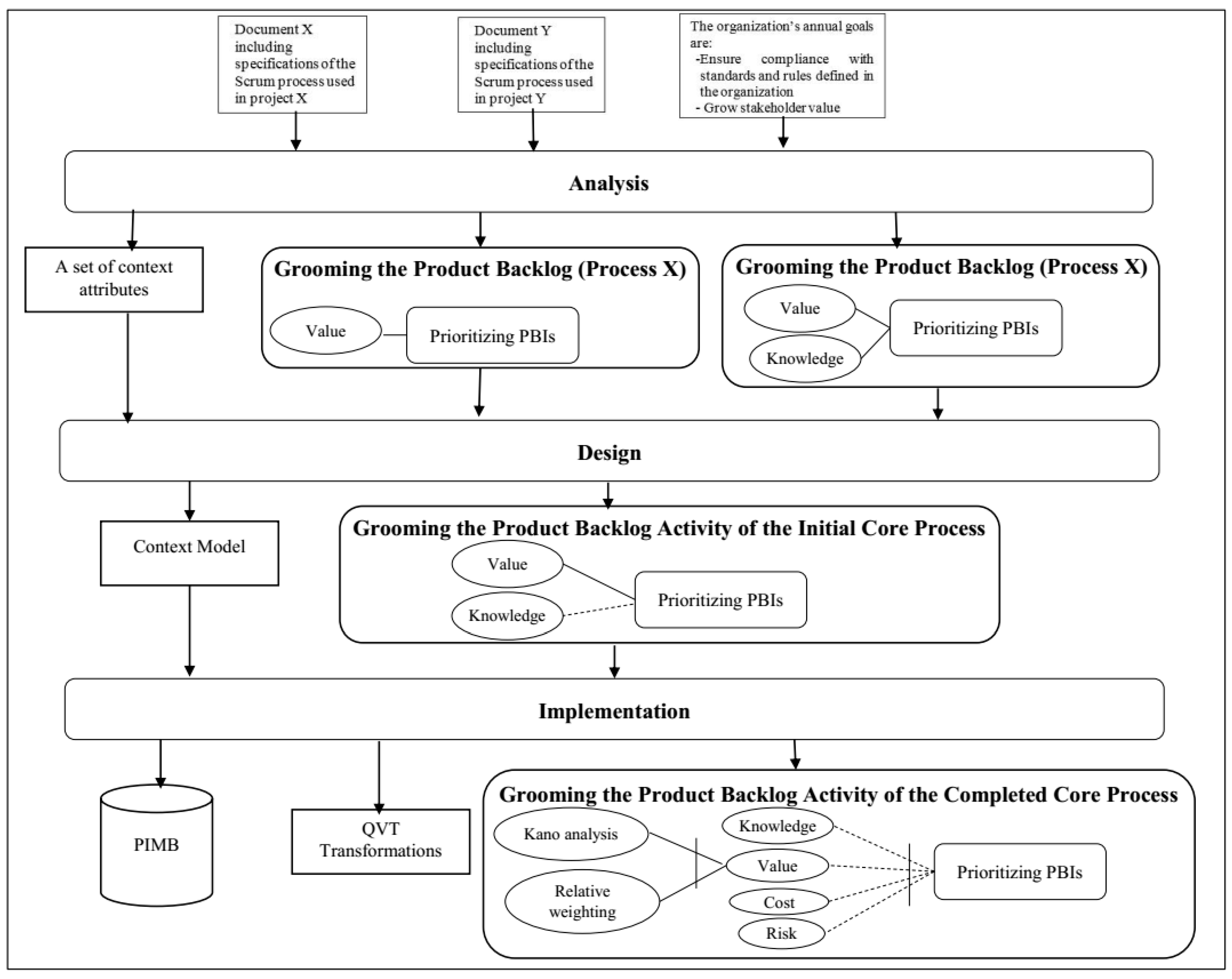

Developing an MDD approach for SPrLE requires that a modeling framework, consisting of modeling levels, be defined for modeling SPrLs. In addition, a process should be developed for applying the framework; an overview of the research methodology used to build the SPrLE process is shown in Figure 1. The phases and subphases of the proposed process were constructed based on existing literature on SPLE and SPrLE. Engineering a process line is usually performed in two generic phases: Domain Engineering (DE) and Application Engineering (AE) [44]. Therefore, our proposed approach is also performed in these two phases. In SPLE, DE encompasses three main subphases: Analysis, Design, and Implementation/Realization [45,46]. The main purpose of Domain Analysis is to identify a set of reusable requirements for the systems in the domain. Domain Design is focused on establishing a common architecture for the systems in the domain whereas the purpose of Domain Implementation is to implement the reusable assets (e.g., reusable components) [45]. Similarly, there are three main subphases in AE: Analysis, Design, and Implementation/Realization [46]. Application Analysis is focused on identifying product-specific requirements by resolving the variabilities defined at the requirements level. The purpose of Application Design is to derive an instance of the reference architecture, which conforms to the requirements identified in Application Analysis. In Application Implementation, the final implementation of the product is developed by reusing and configuring existing components and also by building new components to satisfy product-specific functionality [46].

Figure 1.

The research methodology used to build the SPrLE process.

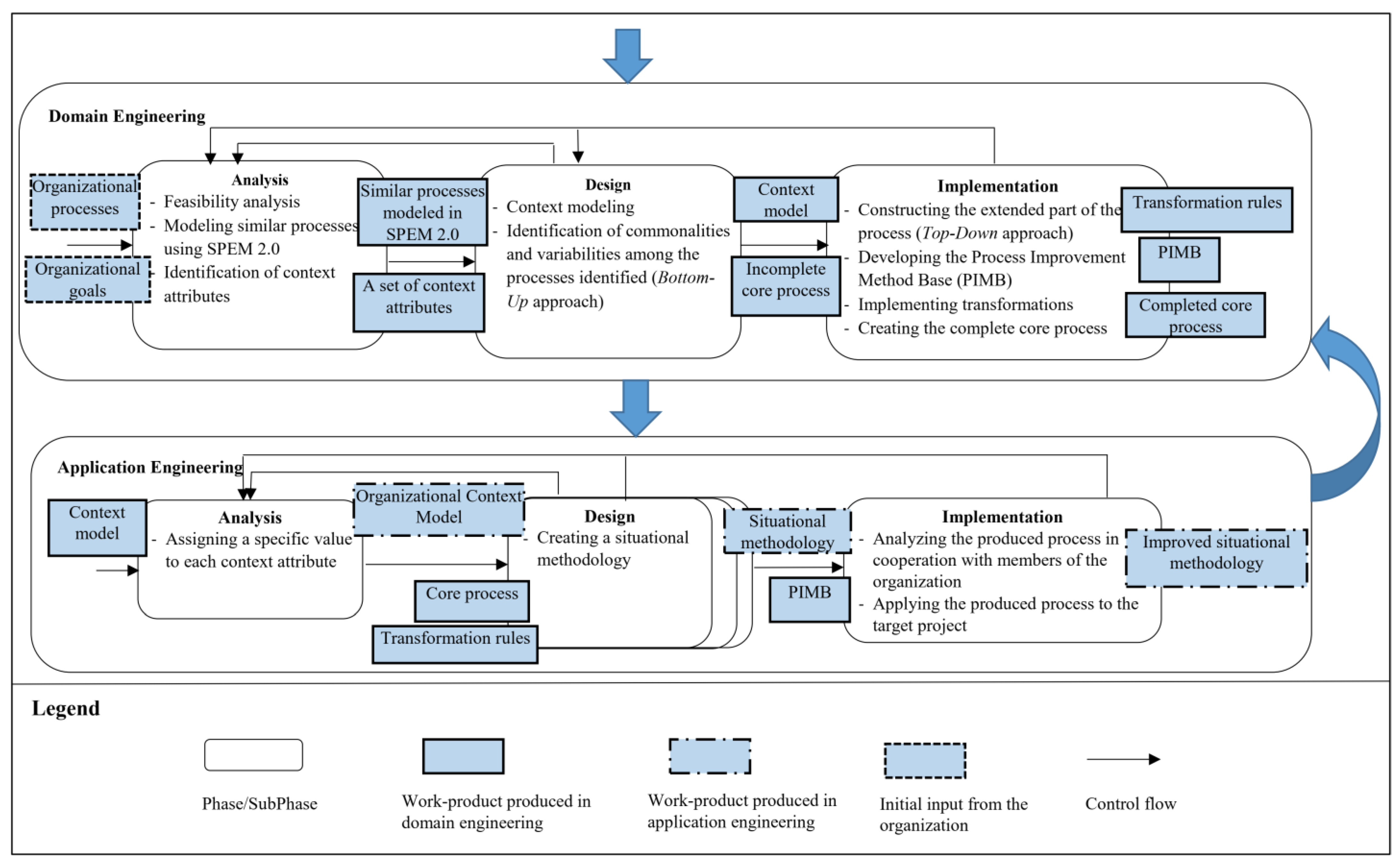

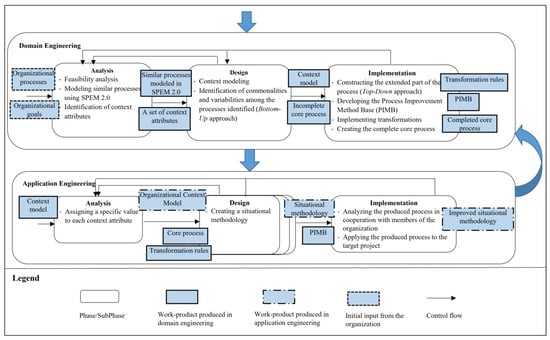

As SPrLE is a specific version of SPLE, we decided to include the three subphases explained earlier into the DE and AE phases of our proposed process. The proposed process is shown in Figure 2. As shown in Figure 2, returning from each subphase to the previous one(s) is possible, depicted in Figure 2 by feedback loops. Moreover, we can return from each subphase of AE to DE for applying changes to the models; this makes it possible to apply changes to the SPrL while constructing or using it.

Figure 2.

Proposed process for SPrLE.

The activities performed in each subphase were defined in such a way as to tackle the deficiencies detected in previous approaches, as explained throughout the rest of this section.

The subphases of DE are performed as follows:

- Analysis: This subphase is focused on specifying the domain scope by identifying a subset of existing processes and a set of attributes to describe different project situations. However, we first need to analyze the current situation of the target organization to examine whether the SPrL approach is suitable for its specific needs (Feasibility analysis). If the SPrL approach is suitable for the organization, the current processes and organizational goals should first be identified to then be used for identifying the variabilities and context attributes. To this end, the processes currently used in the organization are fed to this subphase to be modeled in Software & System Process Engineering Metamodel (SPEM) 2.0 notation [47]. Organizational goals are also used here to help identify the context attributes that are important to the organization. The processes (modeled in SPEM 2.0) and a set of context attributes are the outputs of the analysis subphase.

- Design: The aim of this subphase is to build an initial version of the SPrL by identifying variabilities among existing processes; this activity is commonly performed in SPrL approaches (e.g., [10,12]). To this end, the outputs of the analysis subphase are fed to the design phase as input models. Context attributes are modeled by instantiating the context metamodel defined for this purpose. The similarities and variabilities among existing processes are also identified via the bottom-up approach; the output of this approach is an incomplete core process (also referred to as the reference architecture). The context model and the initial core process are the outputs of the design subphase.

- Implementation: This subphase is mainly focused on the following: improving the initial core process, building the required foundations for improving the processes after instantiation (post-derivation enhancement), and managing the complexity of process instantiation by providing a certain degree of automation. To this end, the outputs of the design subphase are fed to the implementation subphase as input models. During implementation, the top-down approach is applied to produce a metaprocess that includes all the variabilities identified in the target domain; the initial core process is then completed by enriching it with the results of the top-down approach. Transformations are also produced in this subphase, to be used in AE for automatic derivation of processes from the SPrL. As the process generated in AE may not satisfy all organizational/project needs (resulting in delta requirements), a process improvement method base (PIMB) is also produced; the PIMB contains process elements extracted from other processes, such as XP [48] and DSDM [49], which can be added to the generated process to address the delta requirements.

The subphases of AE are performed as follows:

- Analysis: The aim of this subphase is to specify the context in which a specific process needs to be built; these attributes are like requirements specific to a product in SPLE. To this end, context attributes are given specific values based on the situation at hand, and the organizational context model is produced.

- Design: This subphase is focused on producing a specific process while managing the configuration complexity by gradually resolving variabilities; the modeling levels comprising our proposed modeling framework are used in this subphase for gradual resolution of the variabilities. The organizational context model, core process and transformation rules are fed to the design subphase as input models. During design, the variabilities defined in the core process are gradually resolved by executing the pre-defined transformations in a multi-level fashion (based on the values of context attributes). The bespoke methodology thus generated is the output of the design subphase.

- Implementation: The aim of this subphase is to improve the constructed process by satisfying the remaining organizational/project needs (post-derivation enhancement); the need to conduct this activity has been identified throughout evaluating the proposed approach via a case study. The bespoke methodology is fed to the implementation subphase as the input model. The methodology is first analyzed, and if delta requirements are identified, the PIMB is used to address the shortcomings. The enhanced methodology is then applied to the target project.

The framework and the process are defined so that the proposed approach addresses the shortcomings observed in previous model-driven SPrLE approaches. To be specific, the four activities that we had identified as the main areas requiring improvement are properly addressed, as explained below:

- Feasibility analysis: The feasibility of using an SPrL approach for the organization is examined during DE by a set of requirements specifically defined for this purpose [13].

- Enhancing the core process: During DE, the top-down approach is applied to produce a metaprocess for the target domain. The core process created by analyzing existing processes (via the bottom-up approach) is combined with the metaprocess, resulting in an enhanced core process.

- Managing configuration complexity: During AE, configuration complexity is managed by the following: (1) providing semi-automation in resolving the variabilities, and (2) support for staged configuration by providing multi-level resolution of variabilities.

- Post-derivation enhancement: During the implementation subphase in AE, the process instantiated from the SPrL is analyzed to identify the remaining/delta requirements; the PIMB is then used to address the delta requirements.

A more thorough analysis of how the proposed approach compares to previous approaches with regard to supporting the above activities will be provided in Section 4.3.

3.1. Domain Engineering

The SPrL is built during DE’s three subphases. The subphases are explained in the following subsections.

3.1.1. Analysis

The following activities are performed in this subphase:

- Feasibility Analysis: Organizations should first examine whether the SPrL approach is suitable for their specific needs. In [13], we have identified a comprehensive set of requirements for adopting the SPrL approach. These requirements have been presented in the form of a questionnaire, which has been designed for determining the suitability of organizations for creating SPrLs; the questionnaire has been explained in [13]. Organizations that intend to develop an SPrL can consider this questionnaire as a suitability filter. The more an organization satisfies the requirements, the more justified it is to adopt the SPrL approach. As pointed out in [13], the level of importance of the requirements may not be the same in different contexts. Furthermore, it is required that a threshold be determined when applying the requirements to a specific context; this threshold is the minimum score that should be satisfied by the organization in order to be suitable for creating the SPrL. An example of the application of requirements for feasibility analysis has been provided in Appendix A.

- Modeling similar processes using SPEM 2.0: Of the requirements defined in [13], two are related to all the (potential) processes that can be instantiated from the core process (the process line portfolio): Structural similarity, and Process type. These two requirements are used for identifying the software processes in an organization that will be used for constructing the core process of the SPrL. Structural similarity refers to the degree of similarity in the structure of processes selected for creating the core process; there is no rigid threshold, but the degree of variabilities defined in the core process should be justifiable. Process type refers to the type of processes that will be part of the SPrL (such as agile or plan-driven). As the identified processes may have been modeled in different notations (or not documented at all), all of them should first be modeled/re-modeled in SPEM 2.0 [47] in order to unify their representations and thereby facilitate the identification of their commonalities and variabilities. SPEM 2.0 has been selected because it is the OMG standard for software process modeling.

- Identification of context attributes: Context attributes (also referred to as situational factors [50]) are used for describing the situation in which a specific process should be built. These attributes are the specific characteristics of the organization (e.g., maturity level), or the project at hand (e.g., degree of risk), or the individuals involved in the project (e.g., skill and knowledge). There are many research works on specifying situational factors (e.g., [51,52,53,54]). These works can also be used as sources for identifying context attributes; however, the tacit knowledge of subject matter experts should also be explored to refine these context attributes.

3.1.2. Design

The following activities are performed in this subphase:

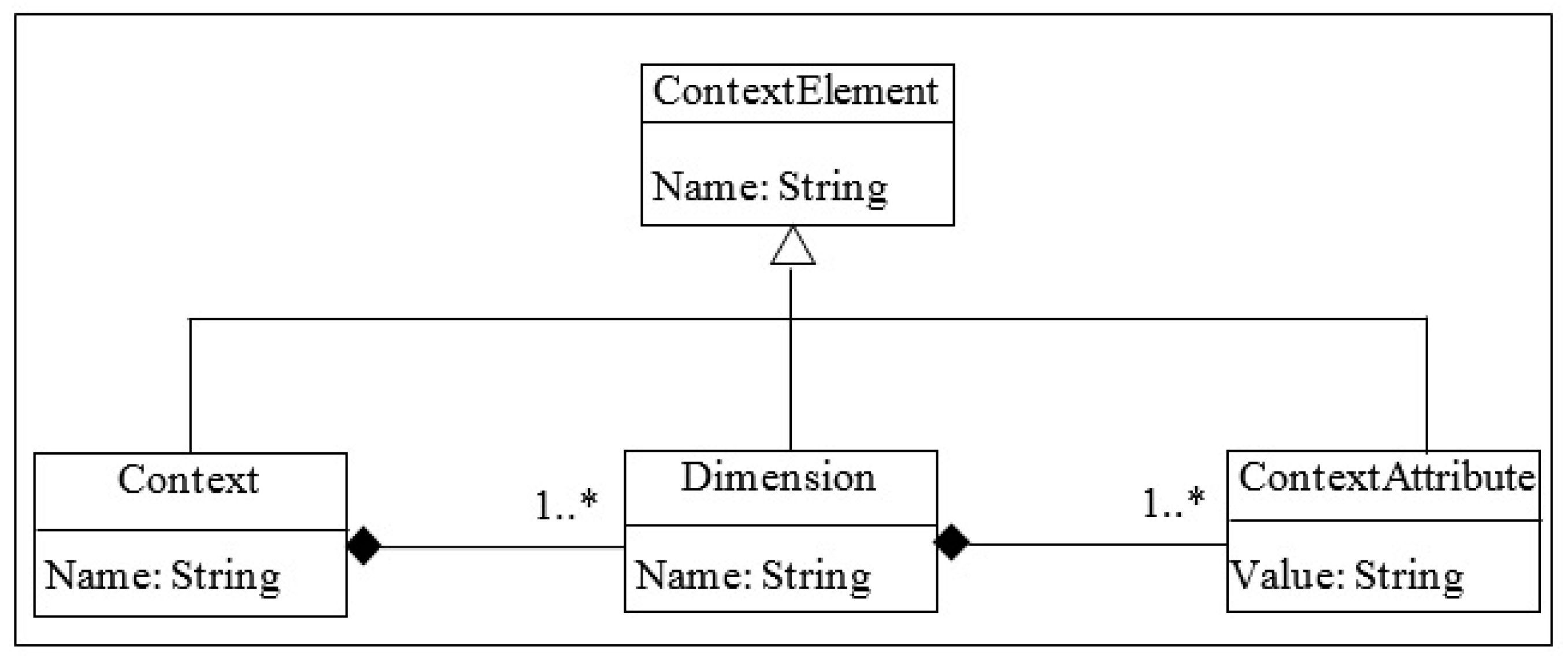

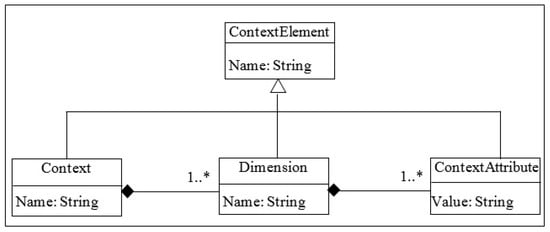

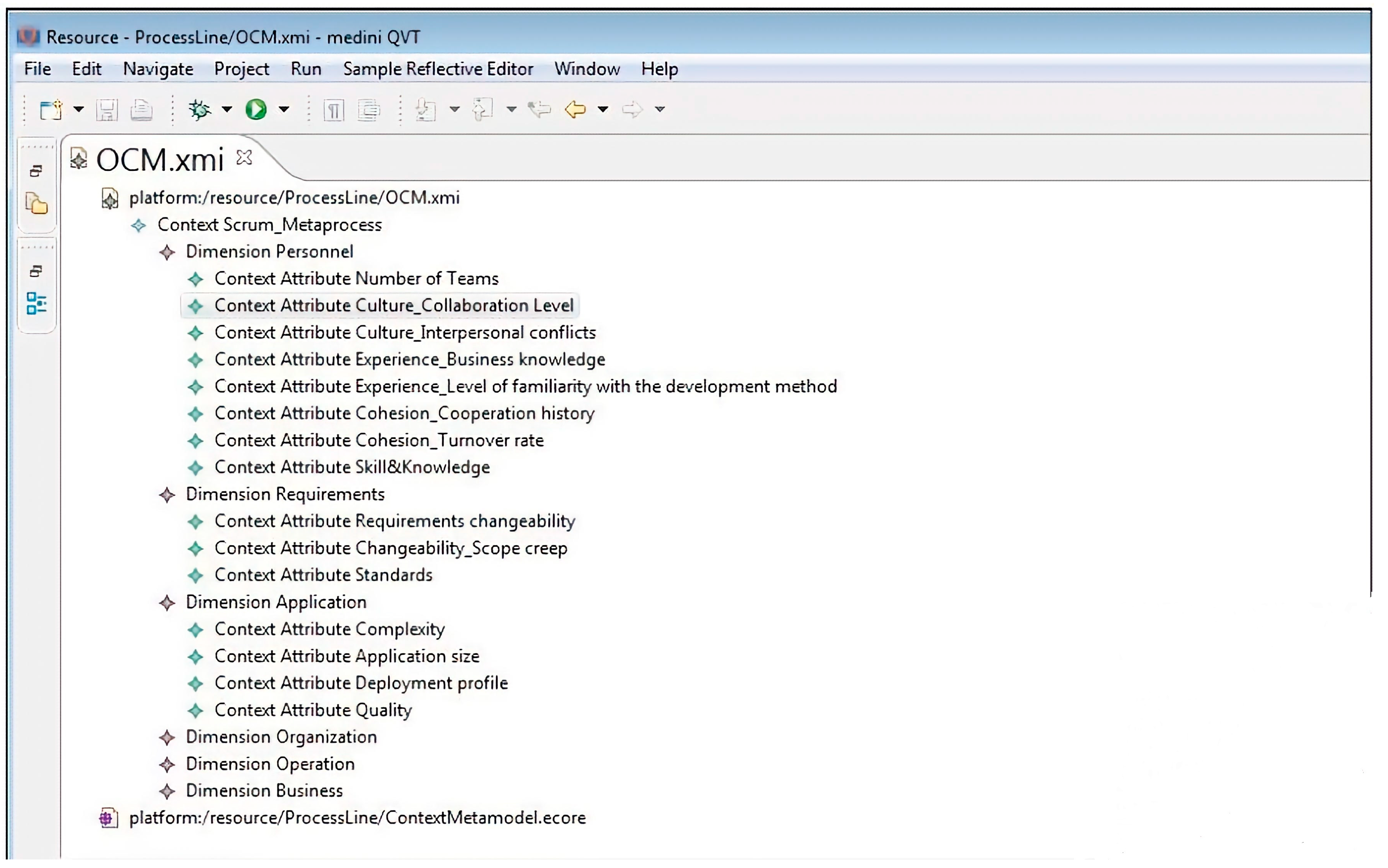

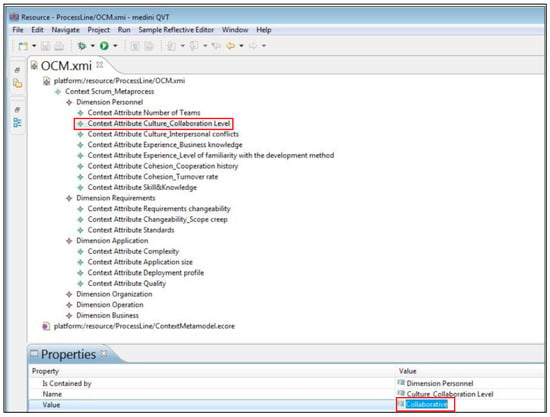

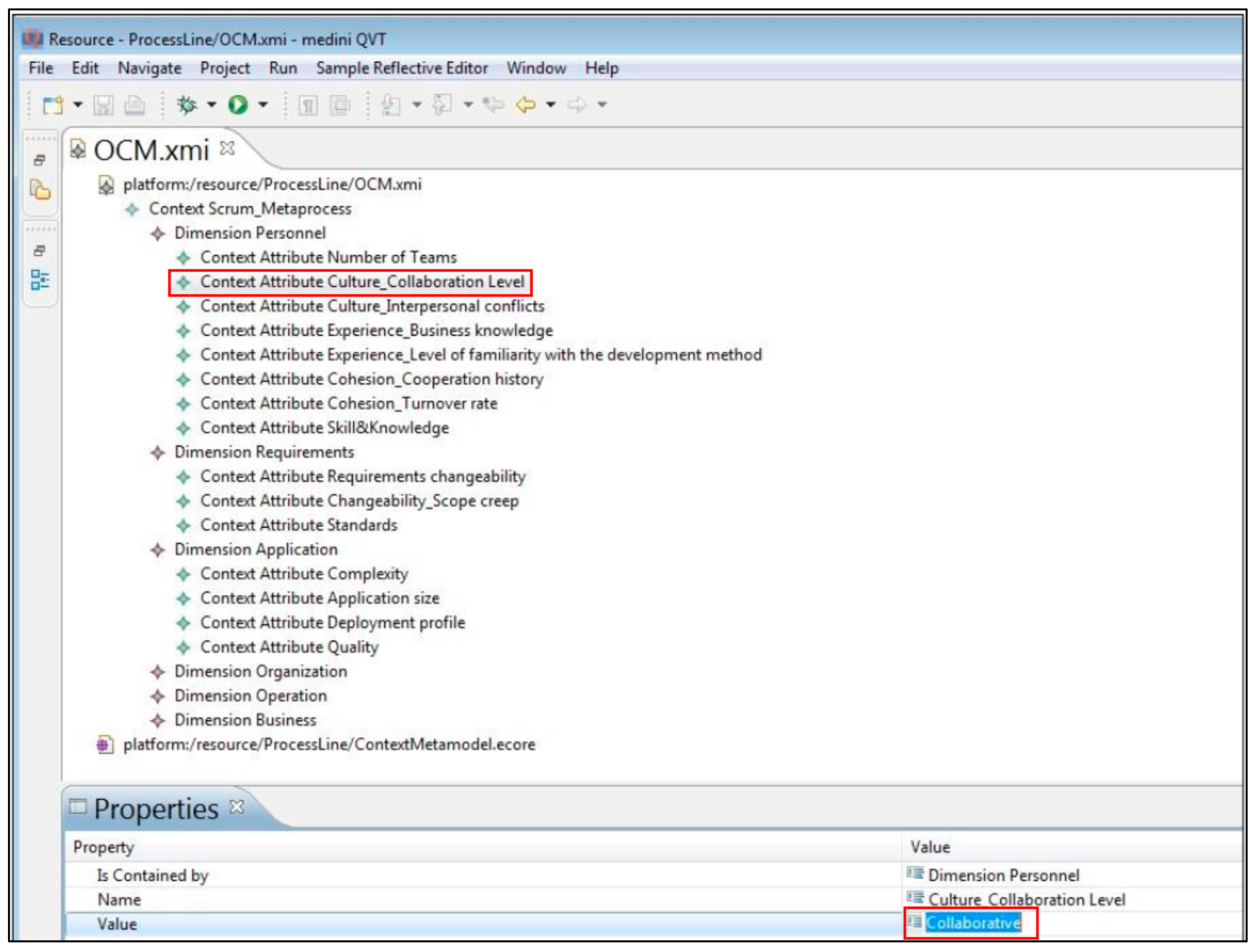

- Context Modeling: The context attributes identified in the analysis subphase are fed to this activity as input. These context attributes are modeled using the metamodel shown in Figure 3. The metamodel has been implemented in Medini-QVT [55]; in this tool, metamodels are defined as Ecore metamodels and transformations are implemented as QVT rules. An example of modeling context attributes in this tool has been shown in Appendix B.

Figure 3. Context Metamodel.

Figure 3. Context Metamodel. - Identification of commonalities and variabilities among the processes identified (Bottom-Up approach): The bottom-up approach can result in an inappropriate core process, as existing processes may not be ideal. The top-down approach is also error-prone, as it attempts to define the commonalities and variabilities in a particular domain from scratch. We have therefore decided to combine the two approaches. The bottom-up approach is used in the design subphase of DE for building an initial core process, and the top-down approach is used for improving the quality of the produced core process in the implementation subphase of DE. Separating the bottom-up and top-down approaches has specific benefits, including the following:

- −

- The organization can use an existing metaprocess to improve the core process instead of expending time and effort on improving existing processes. For example, the Scrum metaprocess [56] can be used in organizations that use different versions of Scrum.

- −

- The organization may not have enough knowledge to improve existing processes. Creating the metaprocess through the application of the top-down approach by experts can address this issue.

- −

- The metaprocess can be used to train organizational staff, and also to document the software processes currently used in the organization.

Variability management is a key activity in SPrLEs and is used in both bottom-up and top-down approaches; hence, we will first explain our proposed variability management approach, and will then elaborate on the proposed bottom-up approach.

Variability Management in the Proposed Approach

Several approaches have been proposed for variability management in SPrLE approaches. In [57], a criteria-based evaluation has been conucted on four such variability management methods: SPEM, vSPEM [58], Feature Model [59], and OVM [59]. The results show that none of the approaches satisfies the requirements identified in [57] for adoption in the industry. Furthermore, the authors have concluded that SPEM 2.0 is the best approach, since it allows the specification of the process and its variabilities using only one notation and one tool (Eclipse Process Framework Composer—EPFC). On the other hand, SPEM’s variability mechanisms are rather limited; for instance, it has no mechanisms for representing the relationships and constraints of variabilities. Even vSPEM [58], an extended version of SPEM 2.0, lacks mechanisms for defining multilevel variabilities.

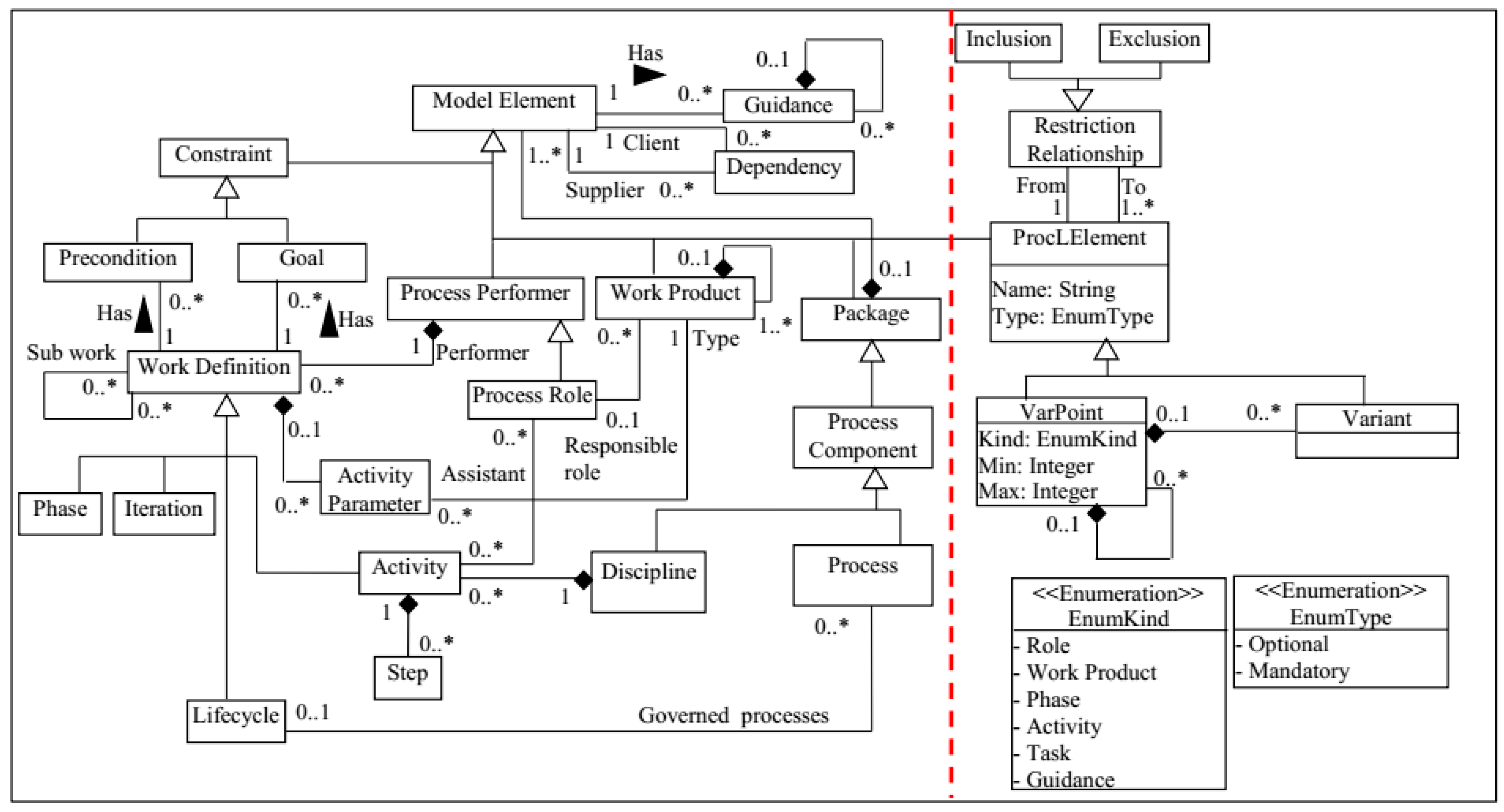

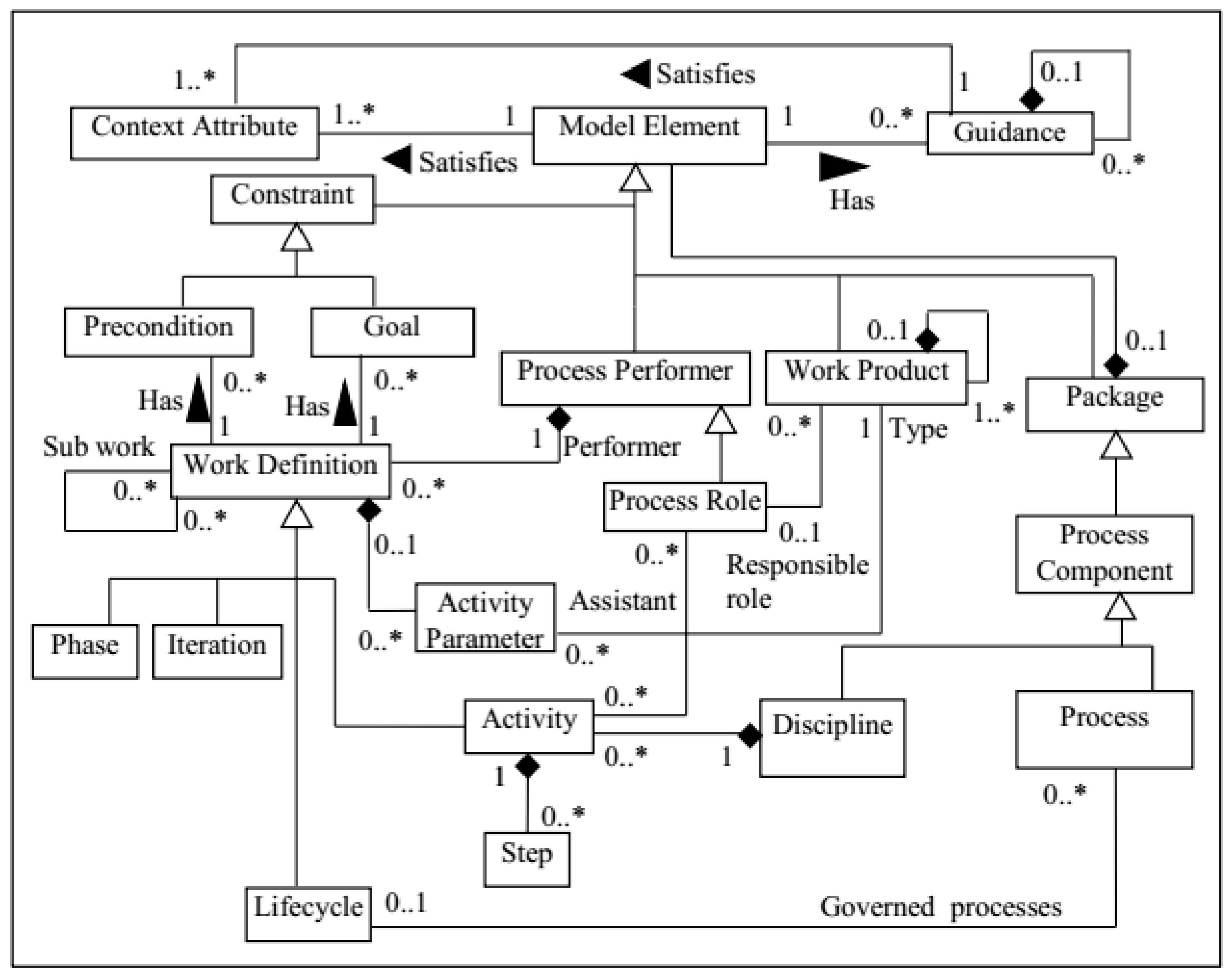

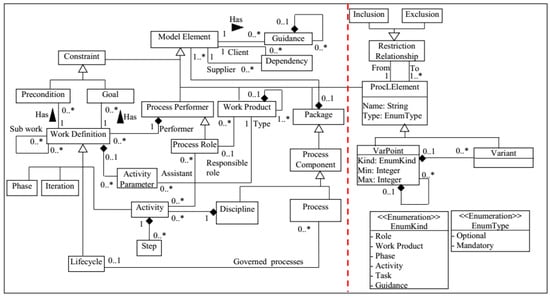

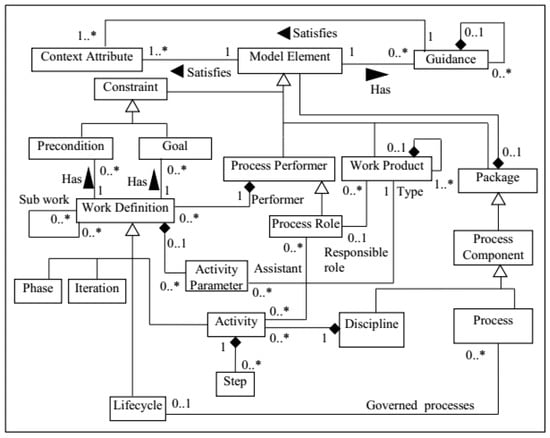

To address the above problems, we modeled the software process, along with its variation points, via the metamodel shown in Figure 4. The problems have been resolved as follows: the left part of the metamodel is inspired by SPEM 2.0, since it has been recognized as an effective metamodel (even considered by some researchers as the best [57]); the semantics of some of the concepts defined in the ProcessStructure and MethodContent packages of SPEM 2.0 have been changed to fit our requirements. The right part of the metamodel shows new elements that focus on software process variability. These additions have been defined to overcome the limitations of SPEM’s variability mechanisms, and include the following elements:

Figure 4.

The variability metamodel used in the proposed SPrLE approach.

- ProcLElement: This abstract class represents SPrL elements and has two subclasses: Varpoint, and Variant. As shown in Figure 4, ProcLElement is a subclass of the Model Element class; therefore, each instance of ProcLElement subclasses can be connected to instances of the Model Element subclasses via the Dependency class.

- VarPoint: Each variation point in the core process is an instance of this class; it can be of different types and granularities, namely the following: Phase, Activity, Task, Role, Work Product, and Guidance. Each variation point can be either Mandatory or Optional. It can include one or more variants that show possible alternatives for resolving the variability [1,60]. Furthermore, multi-level variabilities can be defined via the composition relationship drawn from the VarPoint class to itself, so that each variation point can include one or more other variation points. We have replaced or, xor, and and relations with UML-style multiplicities. Multiplicities consist of two integers: a lower bound and an upper bound, which are specified by two attributes in the VarPoint class: Min and Max.

- Variant: The variants related to variation points are instances of this class, and can be either Mandatory or Optional.

- Restriction Relationship: The relationships and constraints of variabilities can be represented via the Restriction Relationship class. Both variation points and variants can affect other parts of the process structure, as a variation point may exclude another one. These dependencies are represented by the Inclusion and Exclusion classes, which are subclasses of the abstract class Restriction Relationship.

The variability metamodel has been defined in Medini QVT; an example of the variability modeling performed in the tool has been presented in Appendix C.

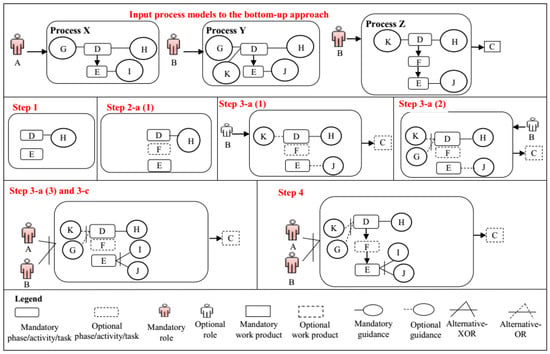

Bottom-Up Approach

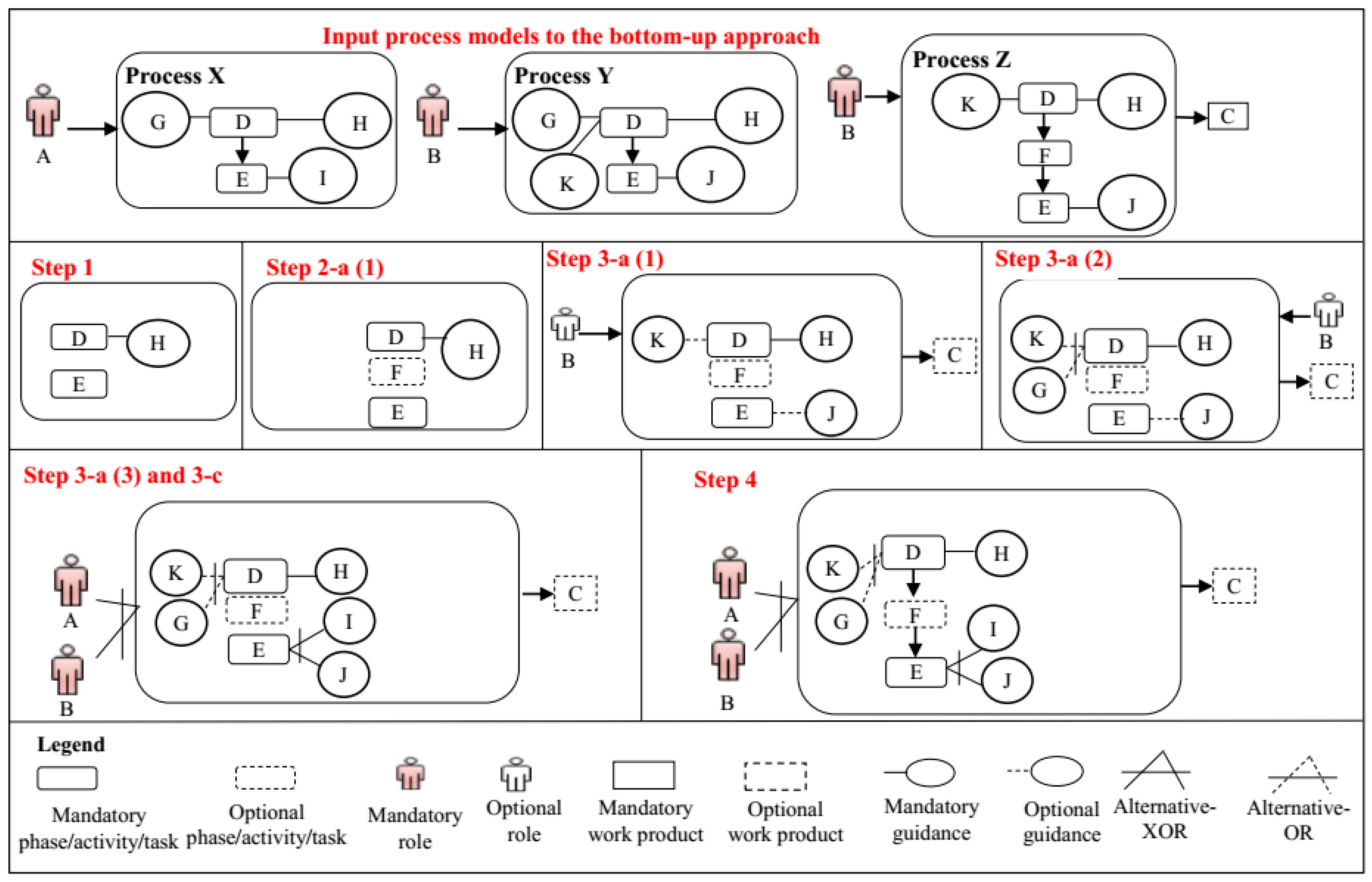

The Software Product Line domain offers many approaches for migrating existing systems to SPLs (e.g., [61,62]); these approaches analyze existing systems to identify code similarities and variabilities and then migrate a family of legacy software products to an SPL. Similarly, as the core process is an integral part of an SPrL, it is required that the similarities and variabilities of a set of process variants be analyzed to construct the core process. However, existing SPrLE approaches lack a precise method for this purpose. We therefore propose a multistep bottom-up approach for building an initial core process. Although the overall idea is similar to what has already been used in Software Product Lines, our approach focuses on identifying the similarities and variabilities of processes (rather than code), which makes the context quite different. Our proposed approach is currently performed manually, but it has the potential to be automated via model transformation tools. An example of applying the proposed bottom-up approach is shown in Figure 5, depicting how the commonalities and variabilities of three hypothetical processes (X, Y, and Z) are identified. The notation used in Figure 5 (shown in the legend) will be used throughout this paper for representing the commonalities and variabilities of processes. The steps of the proposed bottom-up approach are as follows:

Figure 5.

Example of applying the bottom-up approach on three hypothetical processes.

- Elements that are common to the processes are identified. These elements are of these types: Phase, Activity, Task, Role, Input/Output, Work Product, Guidance, and Dependency relationships. A process element is common to a set of processes if its name and description is identical in all of them. A dependency relationship is included in the core process if the elements on both sides have already been identified as common elements. An example of the output of this step is shown in Figure 5 (Step 1).

- Variation points and related variants are identified through the following substages by analyzing each of the phases, activities, and task elements that are not already included in the core process (we will call it e1):

- a.

- If the predecessor (e1-pre) or the successor (e1-succ) of e1 is already identified as a common element, two outcomes are possible: (1) an optional variation point is added to the core process, either after e1-pre or before e1-succ, and e1 is added as its optional variant; an example of the output of this step is shown in Figure 5 (Step 2-a (1)). (2) If a variation point after e1-pre or before e1-succ is already defined, no variation point is added to the process model, and e1 is just added to the core process as an optional variant under the previously defined variation point.

- b.

- If the predecessor (e1-pre) and successor (e1-succ) of e1 are not common elements, an optional variation point is added to the core process after or before the variation point related to e1-pre or e1-succ, respectively, and e1 is added as its optional variant.

- c.

- If at least one element of each process is added under a common variation point, the variation point is converted to a mandatory variation point.

- d.

- If no predecessor/successor elements exist before/after e1, an optional variation point is added to the core process under the process element with the higher granularity, and e1 is added as its optional variant. For example, the variation point related to a task is added under the related activity.

If any elements of types phase or activity are added to the core process in this step, steps 1 and 2 are repeated for them in order to identify their common and variable internal elements. - For each role, work product, and guidance that is not already included in the core process (we will call it e2), its variation points and variants are specified through these substages:

- a.

- If the phase/activity/task related to e2 is already added to the core process as a common element, three outcomes are possible: (1) An optional variation point is added to the core process and a dependency is established between the phase/activity/task and the variation point, and e2 is added as its optional variant; an example of the output of this step is shown in Figure 5 (Step 3-a (1)). (2) If another element of the process under investigation with the same type as e2 has already been added as a variant under a variation point, e2 is only added to the core process as an optional variant under the variation point, and the variation point is converted to Alternative OR, which is specified in our approach by assigning 1 to Min, and n (the number of variants) to Max; an example of the output of this step is shown in Figure 5 (Step 3-a (2)). (3) If another element of another process with the same type as e2 has already been added as a variant under a variation point, e2 is only added to the core process as an optional variant under the variation point, and the variation point is converted to Alternative XOR, which is specified in our approach by assigning 1 to Min and 1 to Max; an example of the output of this step is shown in Figure 5 (Step 3-a (3)).

- b.

- If the phase/activity/task related to e2 is not already specified as a common element, three outcomes, similar to those explained in the previous step, are possible. However, a dependency is established between the variation point related to the phase/activity/task and the variation point added to the core process.

- c.

- If at least one element of each process is added under a common variation point, the variation point is converted to a mandatory variation point. As the result of this step, the variation points related to the roles and techniques shown in Figure 5 are converted to mandatory (Step 3-c). Due to space limitations, the variation points are not shown in Figure 5, and variants are directly connected to the related process elements.

- For each dependency relationship that is not already added to the core process, the elements or variation points related to the elements on both sides of the dependency are found in the core process, and a dependency relationship is added between them; an example of the output of this step is shown in Figure 5 (Step 4).

3.1.3. Implementation

The following activities are performed in this subphase:

- Constructing the extended part of the core process (Top-Down approach): In the bottom-up approach, the initial core process is created by analyzing existing processes. To address the problems in existing processes, and thereby enhance the initial core process constructed, we use the top-down approach to create a metaprocess that includes all the variabilities in the target domain. Existing process frameworks, such as DAD [63], can also be used for this purpose. The top-down approach has already been used for constructing SPrLs (e.g., in [21,23]); however, it has been used in previous research to identify the variabilities in standard processes that can be instantiated across multiple organizations, whereas in our approach, the top-down approach has been used for enhancing the SPrL being created in a target organization. Furthermore, the bottom-up approach has never been used for analyzing the existing processes of an organization.An organization interested in implementing an SPrL can create the metaprocess by identifying the variabilities in a process framework; the selection of the framework depends on the type of processes being used in the organization. As Scrum is the most widely used agile framework, we have defined the Scrum metaprocess [56] through the top-down approach as an example. An example of the variabilities identified in the Scrum metaprocess has been provided in Appendix D.

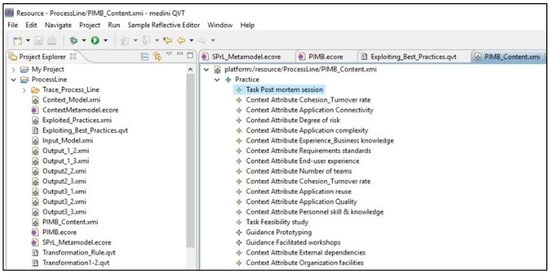

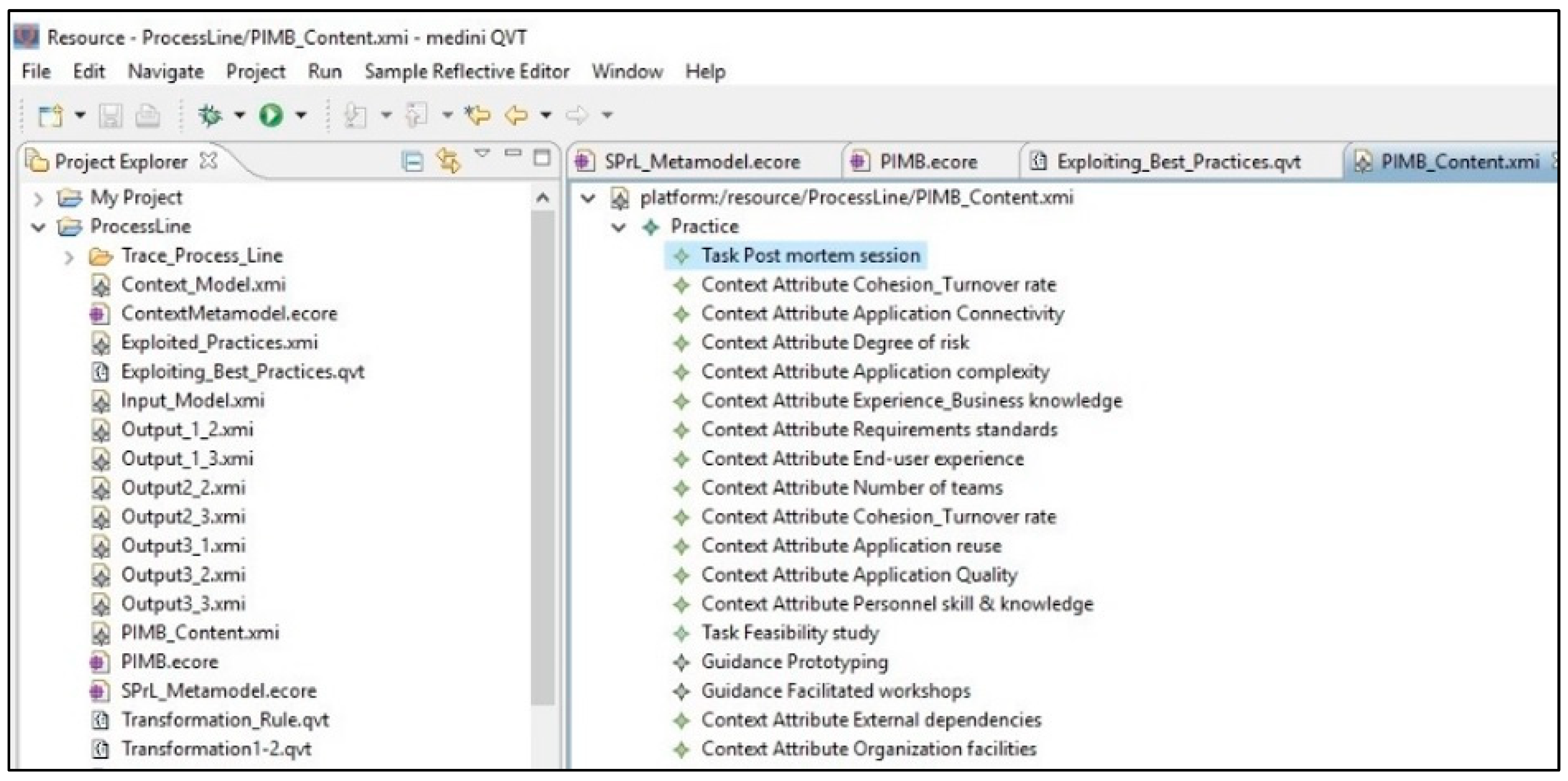

- Developing the Process Improvement Method Base (PIMB): The constructed process may not satisfy all organizational/project needs (remaining requirements/delta requirements). To address this problem, a method base (PIMB) is built for storing additional core assets. PIMB’s metamodel is shown in Figure 6. This metamodel is an extended version of the left-hand section of the variability metamodel shown in Figure 4; two relationships have been added: one between Model Element and Context Attribute, and the other between Guidance and Context Attribute. The relationships between context attributes and process elements are also stored in PIMB. If the instantiated process cannot satisfy all the needs, context attributes are given specific values and are then fed to the transformations; by executing the transformations, suitable process elements are automatically extracted from PIMB. The metamodel has been defined in Medini QVT; the method base has been built by instantiating the metamodel and enriching it with process elements from DSDM and XP. However, the method base can be complemented by other process elements as well. The process elements so far defined in the method base are presented in Appendix E, and an example of how PIMB can be used for satisfying the delta requirements is provided in [64].

Figure 6. Metamodel of PIMB.

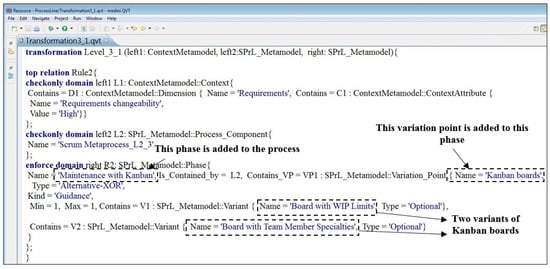

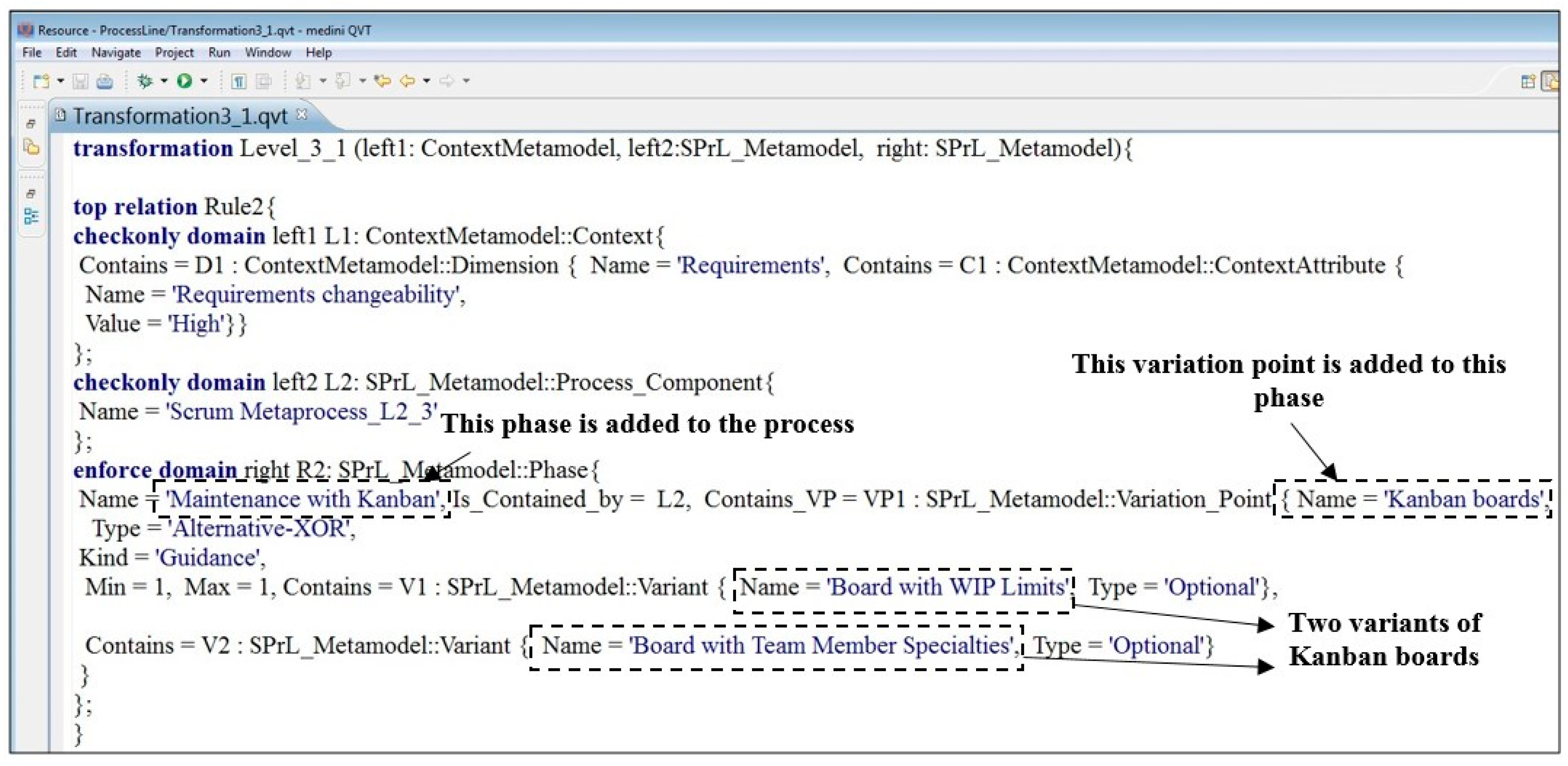

Figure 6. Metamodel of PIMB. - Implementing transformations: Transformations are used for automatic derivation of a process from the SPrL. Transformations are implemented in a tool using a model transformation language, such as QVT [55]. Medini QVT has been used for implementing transformations in our approach; however, organizations can use other tools or languages for this purpose. An example of the implemented transformations has been provided in Appendix F. Due to space limitations, we will not provide the detailed descriptions of other transformations implemented for resolving the variabilities of the Scrum metaprocess. The complete set of transformations is available on Mendeley Data [64].

- Creating the complete core process: The models created by the bottom-up and top-down approaches are merged to obtain the final core process. This stage is currently performed manually. If the required transformations are implemented, the two input models can be automatically merged to produce the completed core process.

To provide an overall overview, examples of the work products of DE subphases are provided in Appendix G.

3.2. Application Engineering

The SPrL is instantiated during AE’s three subphases to yield bespoke processes for specific project situations. The subphases are explained in the following subsections.

3.2.1. Analysis

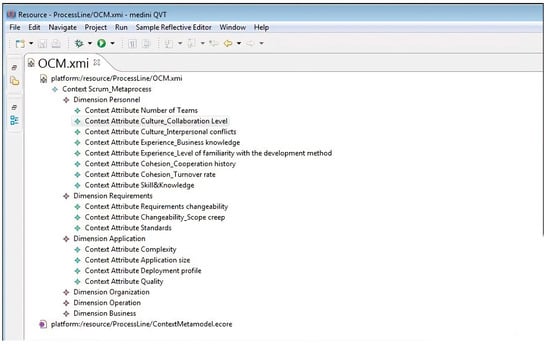

The context model created in DE is fed to this subphase as input, and the values of context attributes are set by the process engineer according to the project situation at hand. Consequently, an Organizational Context Model (OCM) is produced. An example of an OCM implemented in the tool has been presented in Appendix H.

3.2.2. Design

The organizational context model, core process model, and transformations are fed to this subphase, and a specific process is automatically created by applying the transformations. The target process is gradually created by using multilevel modeling, which is an essential MDD feature; the process model is gradually refined from a fully abstract specification to a fully concrete one. Although the target process is automatically created, the output of each level can be polished, as needed, at the process engineer’s discretion. As shown in Figure 4, specific constraints are defined in the core process, including Multiplicity, and Restriction Relationships. These constraints should be considered in variability resolution. As variability resolution is automatically performed in our approach, checking these constraints is performed through the following transformations:

- Inclusion: If a variant selected at a specific level has “inclusion” relationship with another variant, that variant is added to the process under construction.

- Exclusion: If a variant selected at a specific level has “exclusion” relationship with another variation-point/variant, that variation-point/variant is removed from the process under construction.

- Multiplicity: If there is a variation point with (min, max) = (1,1), selecting one of the variants of the variant group results in removing the variation point from the process under construction.

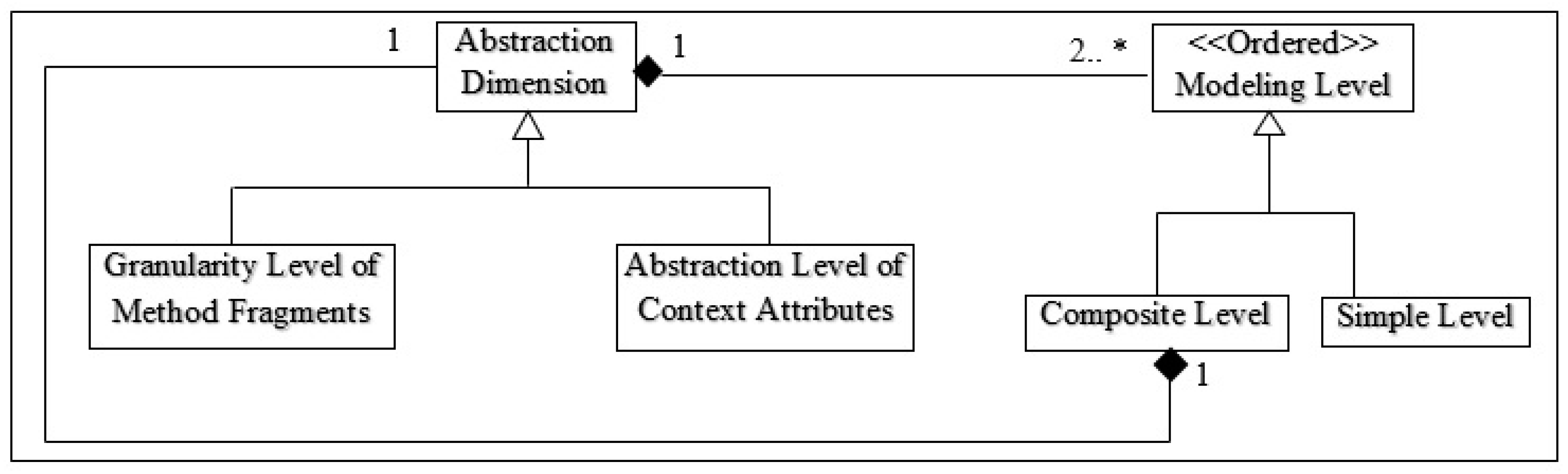

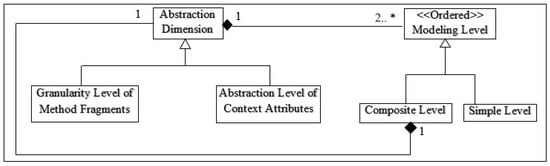

Modeling levels can be differentiated according to different concepts; we propose the framework shown in Figure 7 for defining the modeling levels used in the AE phase. In this framework, two dimensions are used for distinguishing the modeling levels: Granularity level of the method fragments, and Abstraction level of the context attributes; these dimensions can be extended as required. Each dimension specifies an ordered set of simple or composite modeling levels. A composite level includes an abstract dimension and therefore, an ordered set of nested levels.

Figure 7.

Proposed framework for defining the modeling levels.

In the “Granularity level of method fragments” dimension, a variation point is resolved based on the granularity level of its type. In other words, variation points with a higher granularity, such as the ones associated with phases or activities, are resolved first (at higher levels of modeling); whereas variation points with a lower granularity, such as those associated with tasks, roles, and work products, are resolved at lower levels. This solution can have several benefits; e.g., reusability is enhanced because higher-level models are independent from lower-level method fragments, and are hence more readily reusable due to their relative abstractness. We have defined three modeling levels using this dimension: Methodology-Fragments-Independent Level, Technique-Independent Level, and Technique-Specific Level. At the fragment-independent level, variation points at the granularity levels of Phase or Activity are resolved; at the technique-independent level, variation points at the granularity levels of Task, Work Product, and Role are resolved; at the technique-specific level, variation points at the granularity level of Guidance are resolved.

In the “Abstraction level of context attributes” dimension, situational factors are classified based on their abstraction levels; there are three types of situational factors: Organizational, Environmental, and Project; this classification has been adapted from [50,51,65,66]. There are three modeling levels based on this classification: Organizational Level, Environmental Level, and Project Level. At the organizational level, all the variation points dependent on the values of organizational factors are resolved; organizational factors are characteristics of the organization (or unit thereof) responsible for developing the system, such as “Personnel experience”. The output of this level is an SPrL specific to the organization (or unit). The produced SPrL can be used for different projects and customers. At the environmental level, all the variation points dependent on the values of environmental factors are resolved; environmental factors are characteristics of the environment in which the system will be operated, such as “End-user experience”. The output of this level is an SPrL specific to a customer of the organization; the produced SPrL can be used for different projects of the customer. At the project level, all the variation points dependent on the values of project factors are resolved; project factors are characteristics of the system under development, such as “Degree of risk”. The output of this level is a specific process, without any unresolved variation points, for the project.

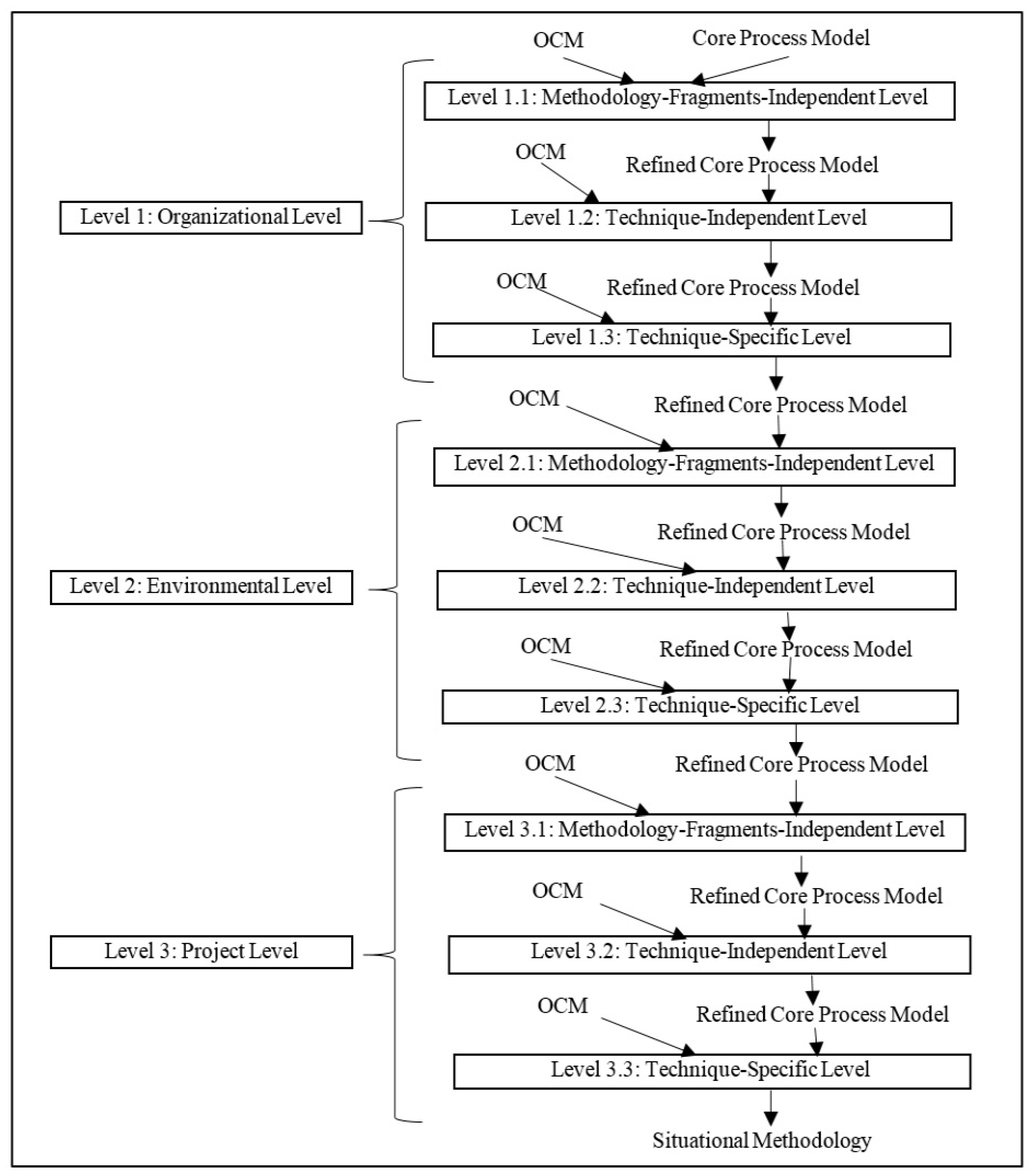

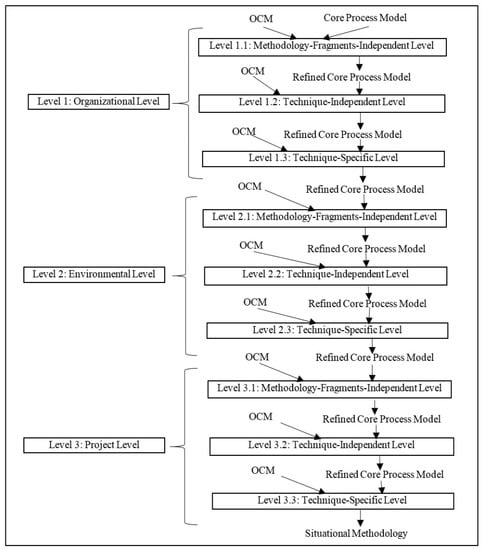

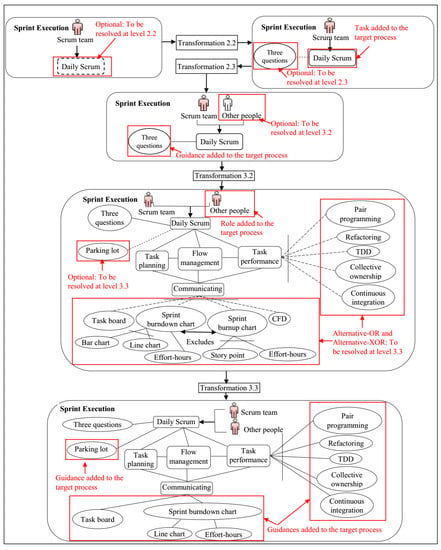

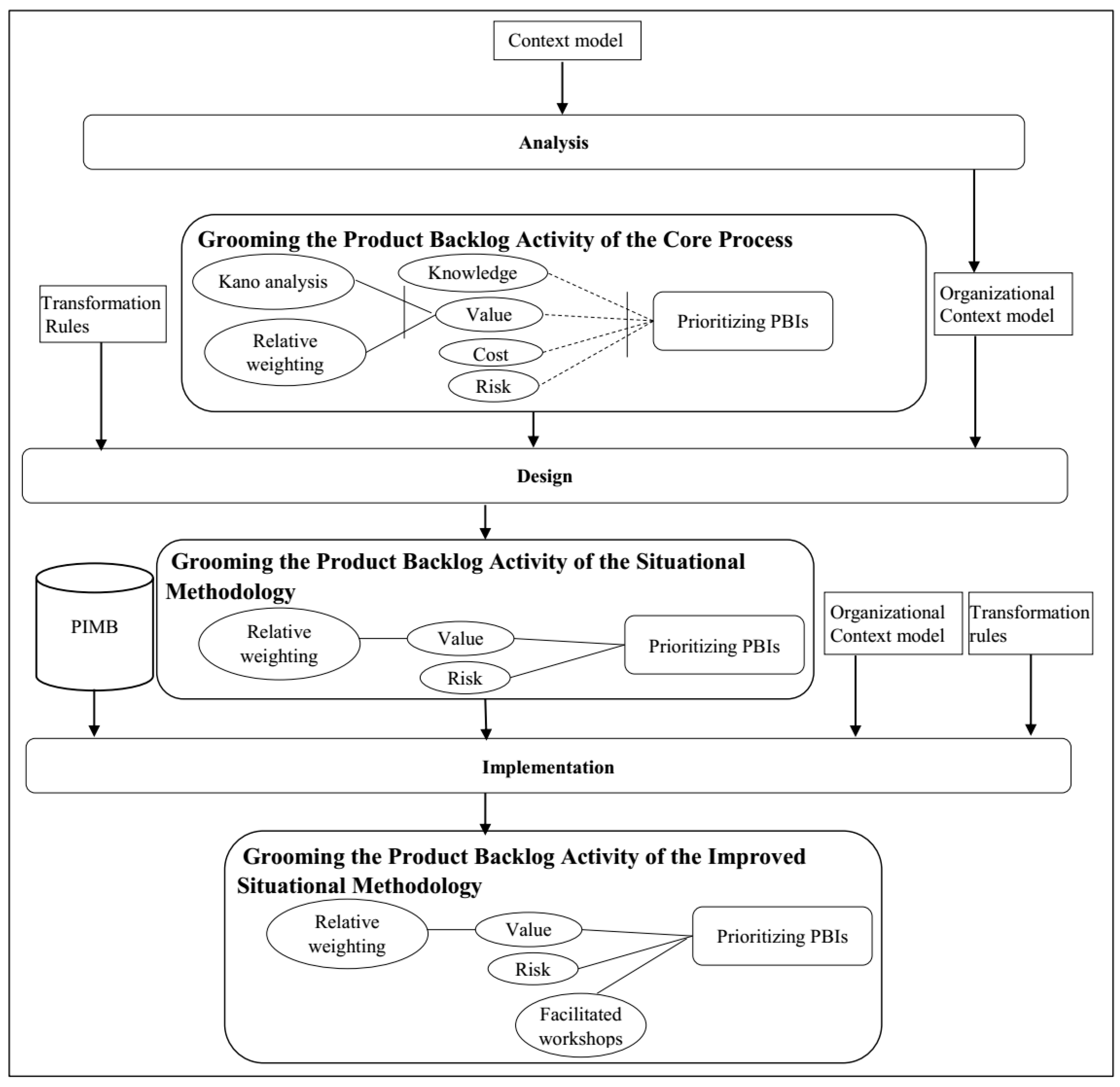

For gaining the full benefits of these two dimensions in our proposed approach, “Abstraction level of context attributes” is used for defining the outer modeling levels, and “Granularity level of method fragments” is used for defining the inner levels, as shown in Figure 8. Based on these modeling levels, variation points are resolved in the following order: at level 1.1, variation points that are dependent on organizational factors and have a granularity of Phase or Activity are resolved; at level 1.2, variation points that are dependent on organizational factors and have a granularity of Task, Role, or Work product are resolved; and at level 1.3, variation points that are dependent on organizational factors and have a granularity of Guidance are resolved. Resolution at levels two and three is performed in a similar fashion, but for variation points that are dependent on Environmental factors and Project factors, respectively. If a variation point is dependent on a combination of factors, the resolution is performed at the lowest abstraction level corresponding to the factors. For example, if a variation point is dependent on a combination of organizational and environmental factors, its resolution is performed at level two. An example of resolving the variabilities throughout the modeling levels has been provided in Appendix I.

Figure 8.

Modeling levels defined in the design subphase.

3.2.3. Implementation

The activities performed in this subphase are as follows:

- Analyzing the produced process in cooperation with members of the organization: The process produced in the previous phase is analyzed to identify the organizational/project needs that have not been addressed. If such needs exist, transformations are applied to PIMB to extract suitable process elements for addressing those needs.

- Applying the produced process to the target project: The produced process is enacted in the real world, and the results of its application may call for further iterations of DE and AE.

To provide an overall overview, examples of the work products of AE subphases are provided in Appendix J.

4. Evaluation

The proposed approach has been evaluated through a case study, an experiment, and a comparison performed between the proposed approach and other SPrLE approaches.

4.1. Case Study

In order to validate the proposed approach as to applicability and efficacy, an industrial case study was designed with the following research questions:

RQ1.

What are the challenges of using the proposed approach for developing a process line?

RQ2.

Can the specific processes produced from the SPrL improve the processes currently used in the organization?

The intention of the first RQ is to evaluate the applicability of the proposed approach in real situations; therefore, we have focused on identifying the challenges that an organization might face during the use of our approach. Thus, the activities defined in the proposed approach to address the shortcomings observed in previous approaches have been evaluated as to their applicability. The intention of the second RQ is to evaluate whether the top-down approach, as used in our proposed process, can indeed result in improvements to the processes currently used in the organization.

We sought a case that was suitable for creating SPrLs based on the set of requirements defined in [13], and the recommendations proposed in [67]. Based on [67] (p. 48), case study selection in software engineering research is usually performed based on the availability of the case. Furthermore, adding or removing cases due to practical constraints and objectives of the research may occur as the study progresses [67] (p. 64). We first selected two companies to conduct feasibility analysis based on the requirements presented in [13]. Then, we decided to add two more companies since one of the first two companies used only one version of Scrum. All of the four candidate companies were selected based on their existing relationships with the authors of this paper. We also sought cases where people with enough knowledge about the processes being used in the organization had enough time to collaborate with us during the execution of the case study. In [13], we have identified a comprehensive set of requirements for adopting the SPrL approach. These requirements have been presented in the form of a questionnaire, which has been designed for determining the suitability of candidate companies for creating SPrLs in the case study. The more a company satisfies the requirements, the more justified it is to adopt the SPrL approach. The questionnaire has been presented in Appendix K.

The study was conducted in a medium-sized, Iranian software development company, which we will call ‘A’ (the real name has been made anonymous). Company A, which has 120 employees, is made up of four units (A1, A2, A3, A4), and its organizational software process is based on Scrum. Various products are developed at this company, including systems software, web applications, databases, web designs, graphics designs, multimedia software, mobile applications, games, and desktop applications. The Scrum process implemented in each unit was not documented. In order to identify how Scrum was used in the units, one in-progress project was selected from each unit. Brief descriptions of these projects are presented in Table 1. The size of each development team was between 2 to 9 people.

Table 1.

Projects selected in A.

Since there was no documented information about the processes used in the projects, the subject sampling strategy was to interview a sample of the people involved in the projects who had enough information about the processes used. In total, four people were interviewed, who played “Project Manager” or “Product Owner” roles in the projects. Three semi-structured one-hour interview sessions were held with each subject. The interview instruments are provided in Appendix L. These instruments were intended to focus the interviewees’ attention on the areas of discussion. The instruments were adapted as the interviews progressed to gain further information about the process used in the organization and the problems occurring during its execution. In addition to the notes taken during the interviews, sound recordings were produced to provide transcripts for the analysis process. The answers given to the research questions are provided in the following subsections.

4.1.1. RQ1. What are the Challenges of Using the Proposed Approach for Developing a Process Line?

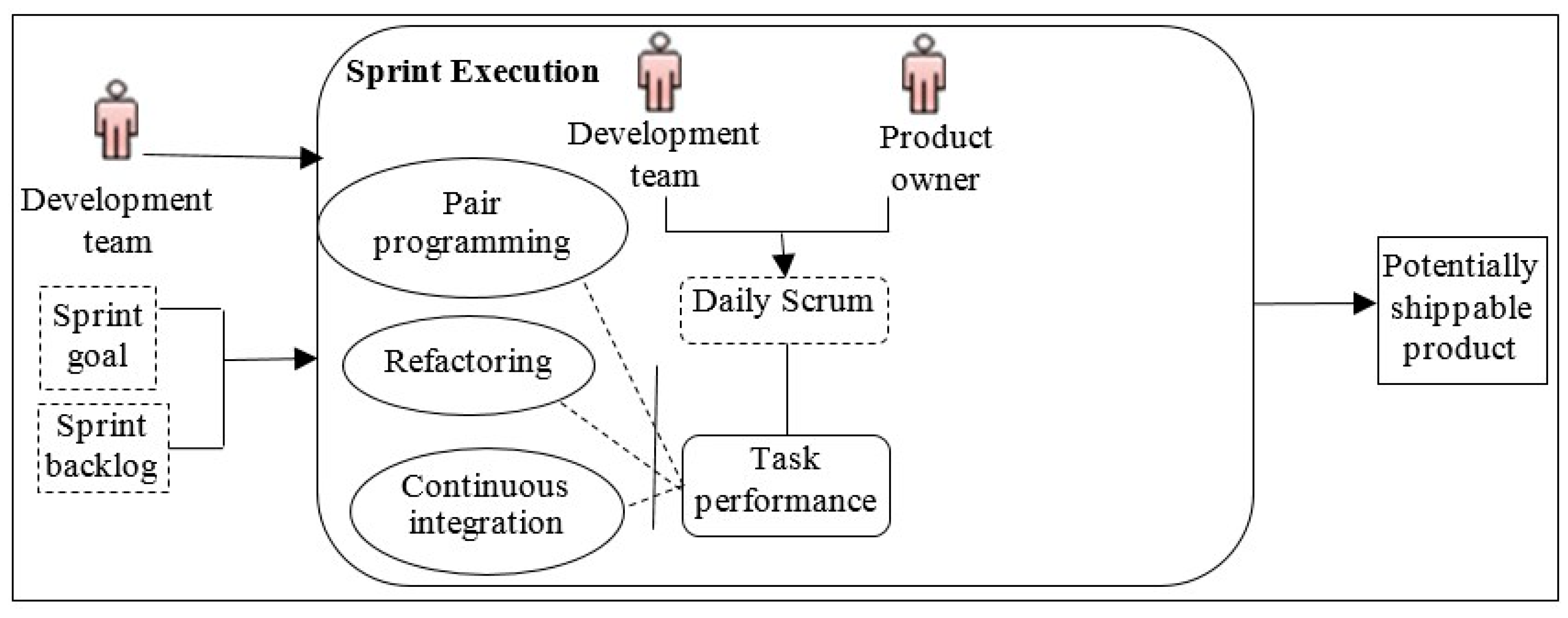

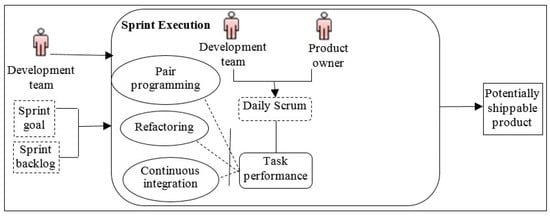

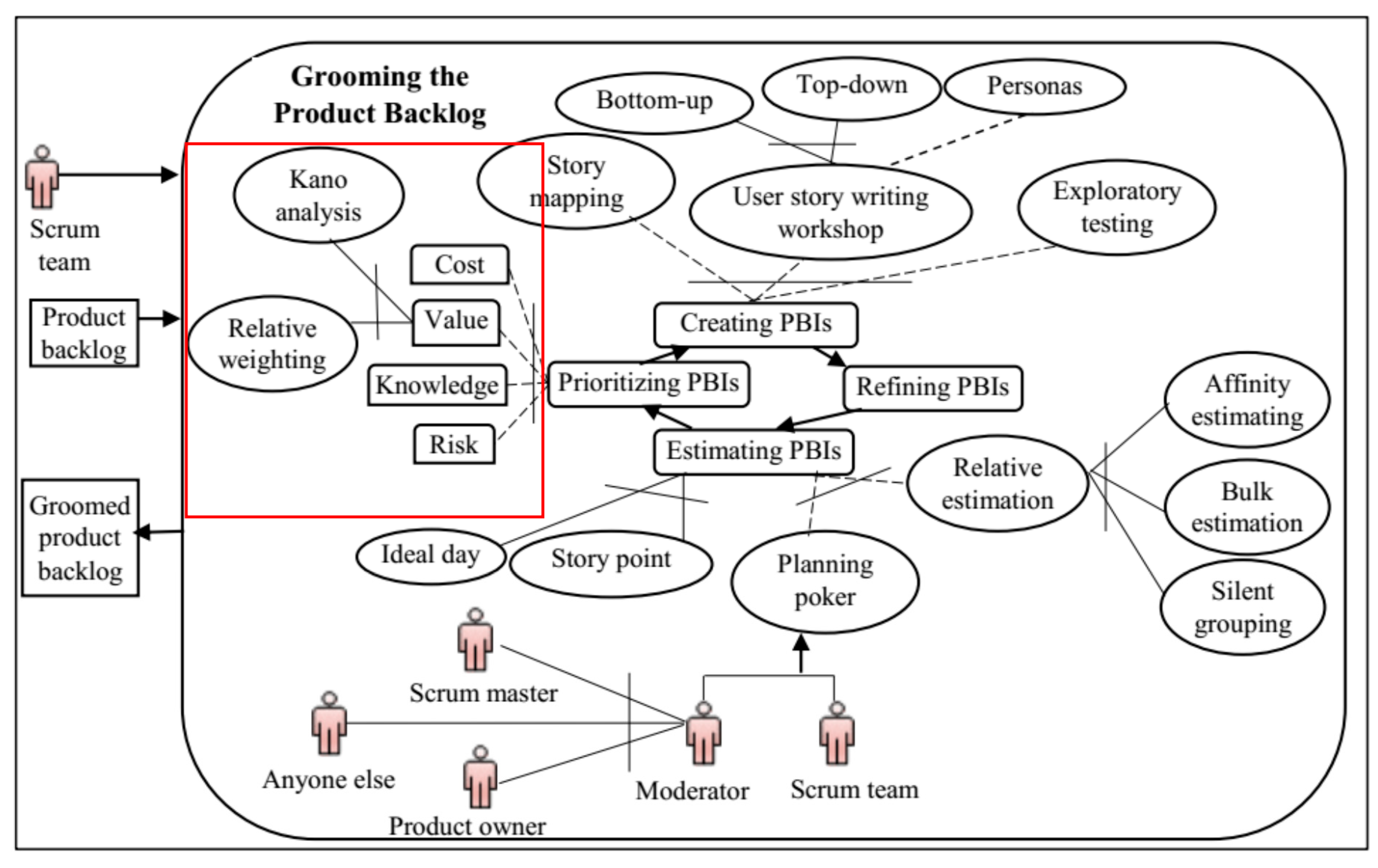

In this study, we investigated all the activities mentioned in Scrum, from planning to development. However, for sake of brevity, we will only focus on one activity herein, namely “Sprint Execution”. To answer the research questions (RQ1 and RQ2), we will first explain the activities performed for creating the SPrL in the following subsections.

Domain Engineering

In this section, the activities performed for creating an SPrL for A are explained (Sections A, B and C).

- A.

- Analysis

The following tasks were performed:

- Feasibility analysis: The suitability-filter questionnaire was filled out by four companies, of which A was then identified as a suitable venue for creating an SPrL. The questionnaire has been provided in Appendix K.

- Modeling similar processes in SPEM: Interview sessions were held to elicit the different versions of the Scrum process used in A. These processes were then modeled with the tool.

- Identification of context attributes: In [51], a reference framework has been proposed for situational factors that affect software processes; we used this framework to identify the situational factors relevant to agile methodologies. These factors were then refined and completed based on other resources. The finalized list of situational factors is shown in Table 2, along with the additional resources that were used for refining or extending them. The third column in Table 2 depicts the range of possible values for each factor. Attributes under Personnel and Organization are considered as organizational factors; the attribute under Operation is considered as an environmental factor; attributes under Requirements, Application, and Business (except for the Opportunities attribute) are classified as project factors; and the Opportunities attribute, by definition, is an organizational factor.

Table 2. Situational factors (context attributes) relevant to agile methodologies.

Table 2. Situational factors (context attributes) relevant to agile methodologies.

- B.

- Design

The following tasks were performed:

- Context modeling: The situational factors shown in Table 2 were modeled in the tool.

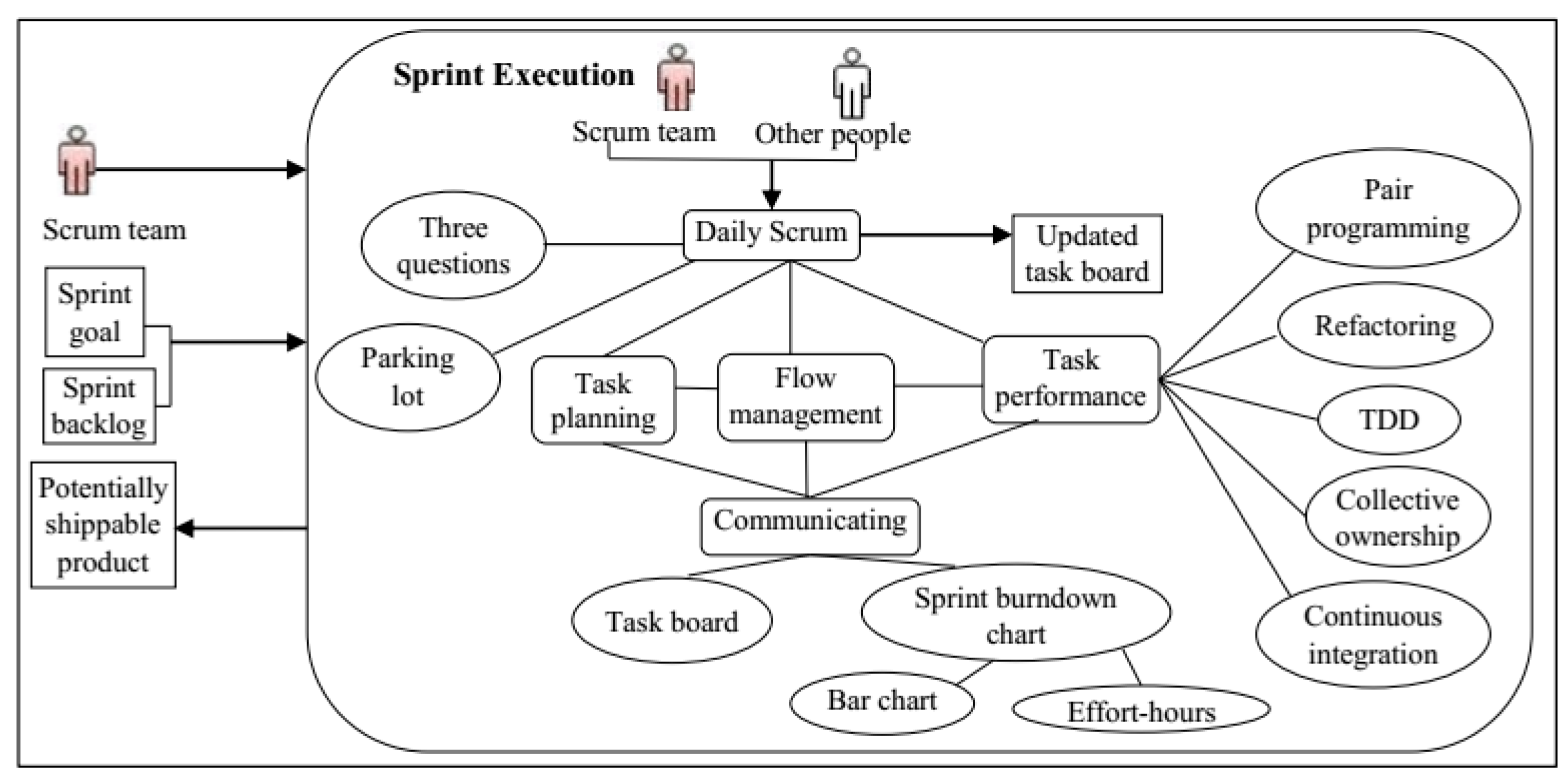

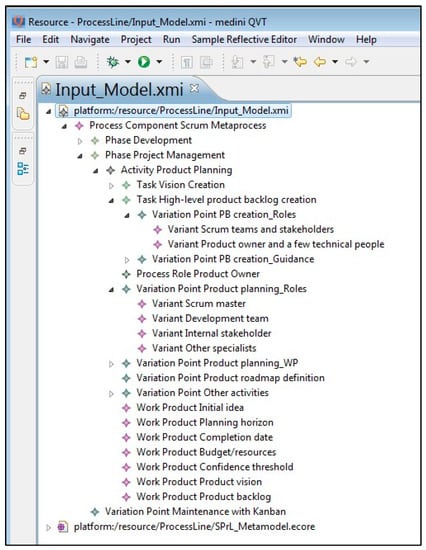

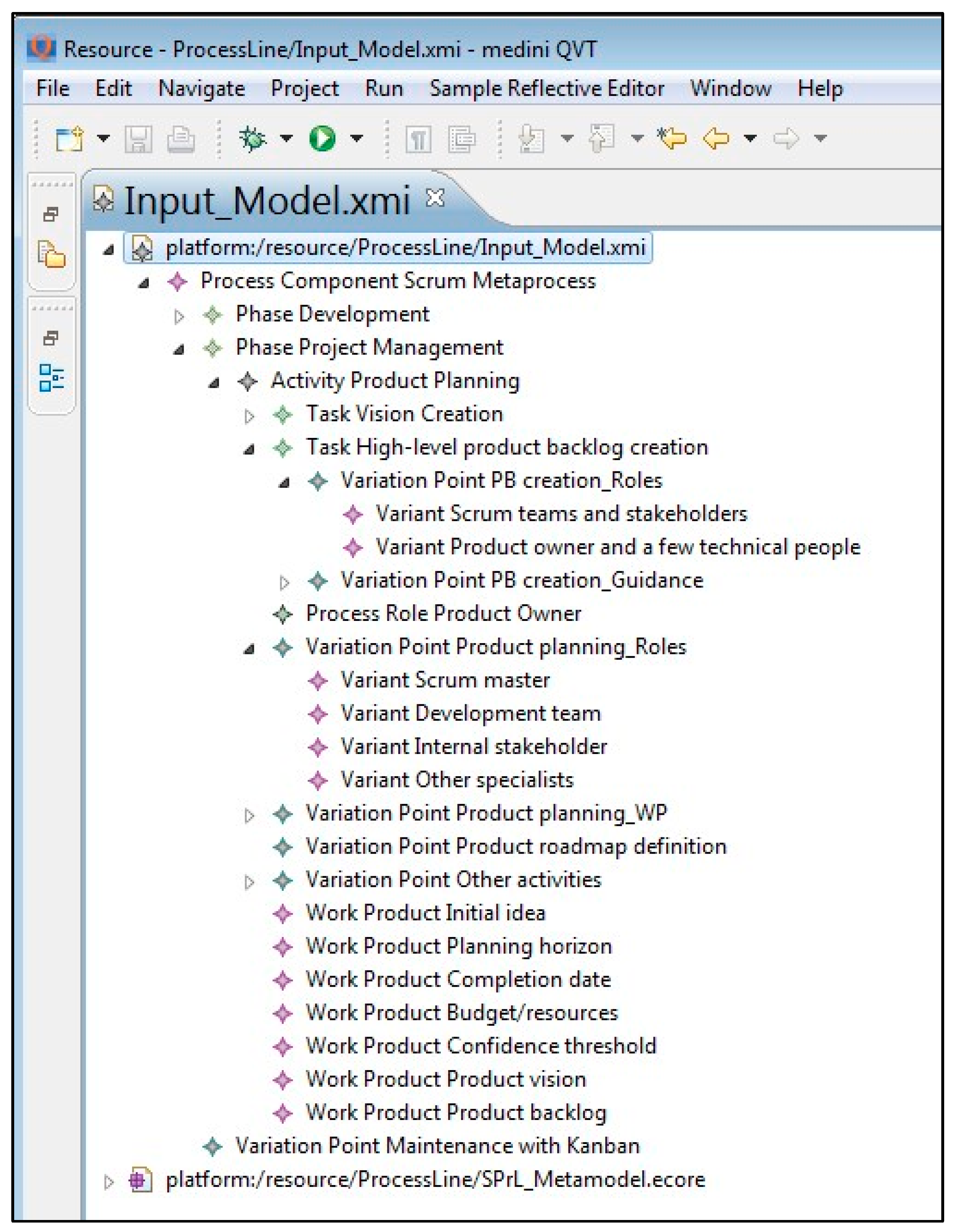

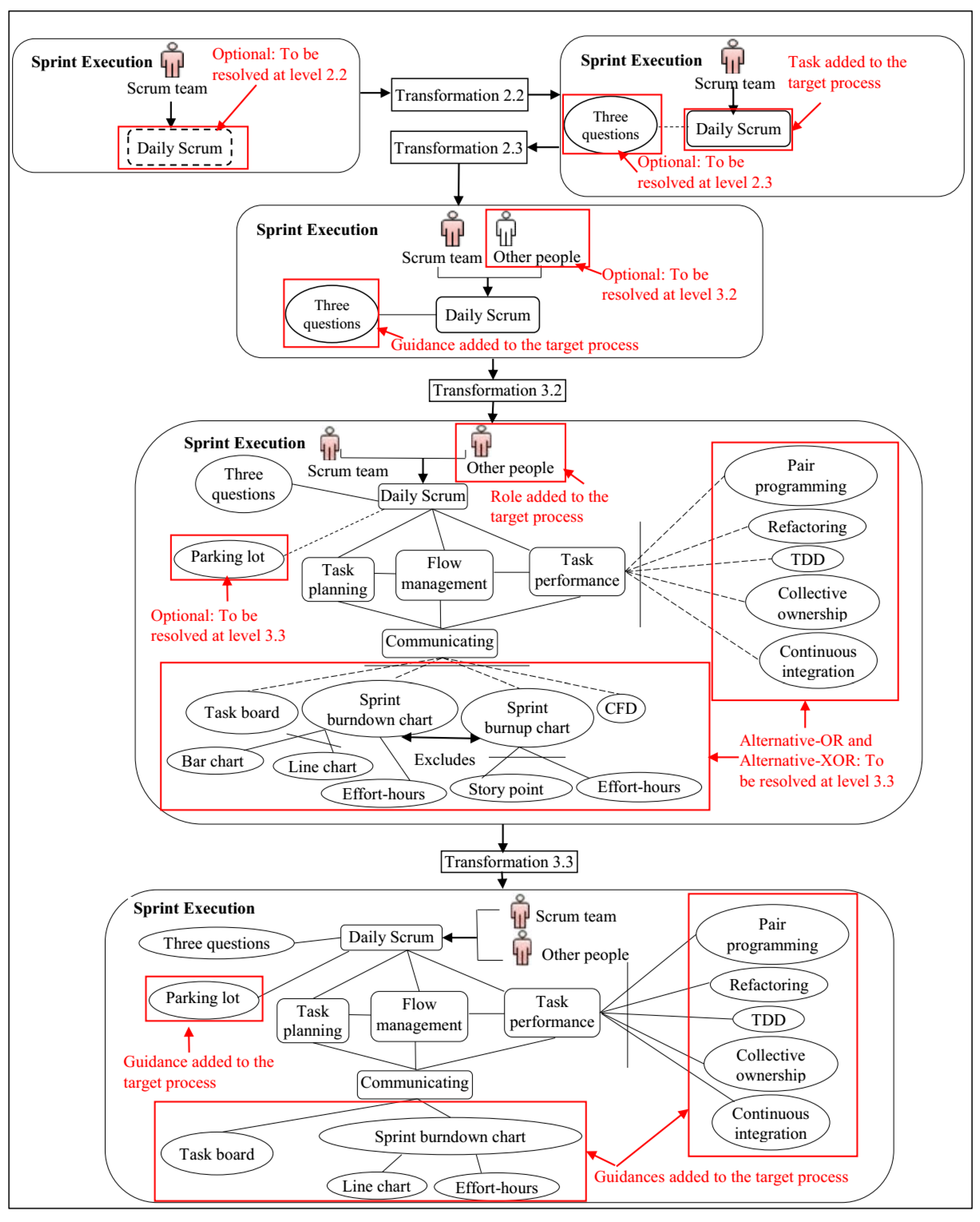

- Identification of commonalities and variabilities (Bottom-up approach): The commonalities and variabilities among the processes being executed in A were identified by applying the bottom-up approach; details have been provided in [64]. For sake of brevity, only “Sprint Execution” is presented herein (Figure 9).

Figure 9. “Sprint Execution” in A.

Figure 9. “Sprint Execution” in A.

- C.

- Implementation

The following tasks were performed:

- Constructing the extended part of the core process (Top-Down approach): The “Sprint Execution” variabilities that were defined in the Scrum metaprocess have already been shown in Figure A7 in Appendix I.

- Developing PIMB (method base): The PIMB, implemented in Medini-QVT, is provided in Appendix E.

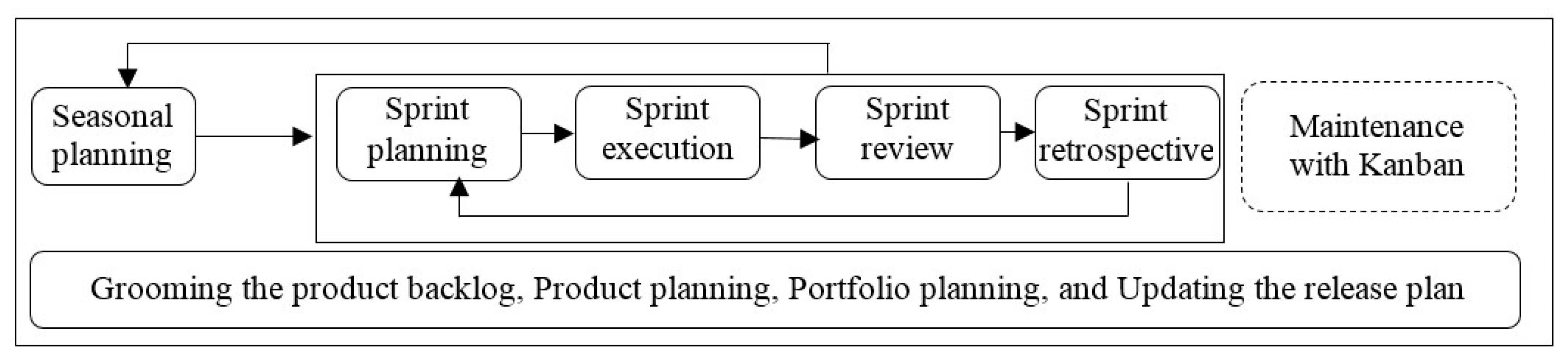

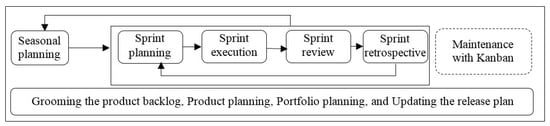

- Creating the complete core process: The final SPrL was created by combining the models created by the bottom-up and top-down approaches. An overview of this SPrL is shown in Figure 10. The details and variabilities of “Sprint Execution” in the Scrum metaprocess (Figure A7 in Appendix I) were more comprehensive than the processes being used in A (Figure 9). Therefore, the combined result was similar to Figure A7.

Figure 10. Overview of the proposed SPrL for A.

Figure 10. Overview of the proposed SPrL for A. - Implementing transformations: A set of transformations were implemented in the tool for resolving the variabilities identified in the core process. The transformations have been provided in [64].

Application Engineering

In this section, the activities performed for instantiating processes from the SPrL for projects in A are explained (Sections A, B, and C).

- A.

- Analysis

The values of context attributes in projects A.1 to A.4 were determined. For the sake of brevity, only the values of context attributes for project A.2 are presented in Appendix M. The values for other projects are provided in [64].

- B.

- Design

The variation points defined in the SPrL were gradually resolved by executing the transformations based on the values of context attributes. In the SPrL produced, there is just one phase variation point, namely “Maintenance with Kanban”. By resolving this variability, several process elements and their associated variabilities were added. The transformation implemented for this purpose is shown in Appendix N. Examples of multilevel resolution of variabilities implemented in the tool are provided in [64]. The processes currently used in A, processes produced by instantiating the SPrL, and transformations implemented in the tool have also been provided in [64].

- C.

- Implementation

The tasks were performed as follows:

- Analyzing the produced process in cooperation with members of the organization: The subjects of A confirmed that all organizational/project needs could potentially be satisfied by the processes instantiated from the SPrL, except for two problems related to the A.4 project. One problem was related to intra-team knowledge sharing, and the other was related to the capabilities of team members in writing high-quality code. Transformations were therefore applied to PIMB, and the following guidances were extracted: “Moving people around”, “Preparing documents of project status”, and “Training team members on code quality by a coach”.

- Applying the produced process to a real-world project: Parts of the process produced for project A.2 were applied in one sprint. The results are presented in Section 4.1.2.

Challenges Identified during the Application of the Proposed Approach for Creating the SPrL

The challenges that we faced when applying the proposed approach are as follows:

- Identifying commonalities and variabilities among existing processes in case A was time-consuming, mainly because their processes were not documented.

- Presenting the variabilities of the Scrum metaprocess implemented in the tool to subjects was difficult. To facilitate the adoption of SPrLs in organizations, a high level of user friendliness is needed in the user interface of the tool.

- Assigning values to situational factors was challenging since subjects had different opinions about them. To address this issue, we recommend that organizations provide concrete examples on how to assign a specific value to each situational factor based on organizational context and project characteristics.

- Using the tool for executing transformations was difficult for the subjects because of its low-level user interface. Implementing a graphical user interface would therefore improve the adoption of SPrLs in organizations.

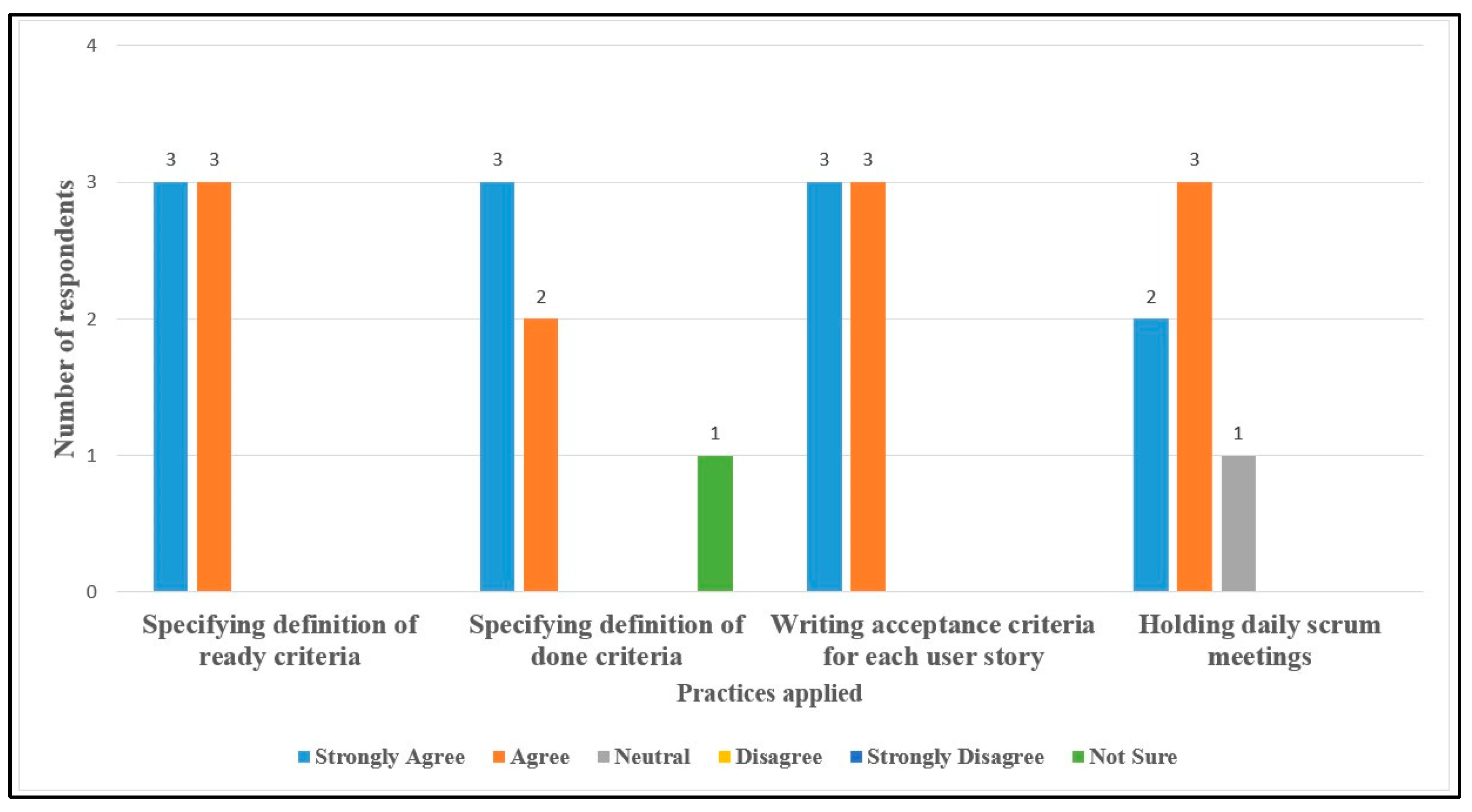

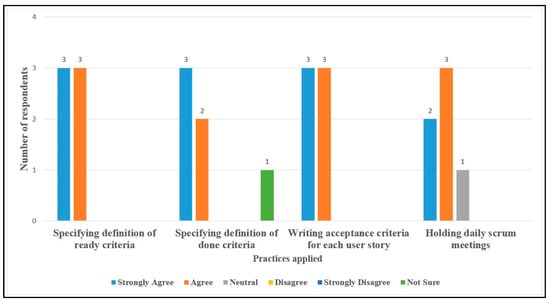

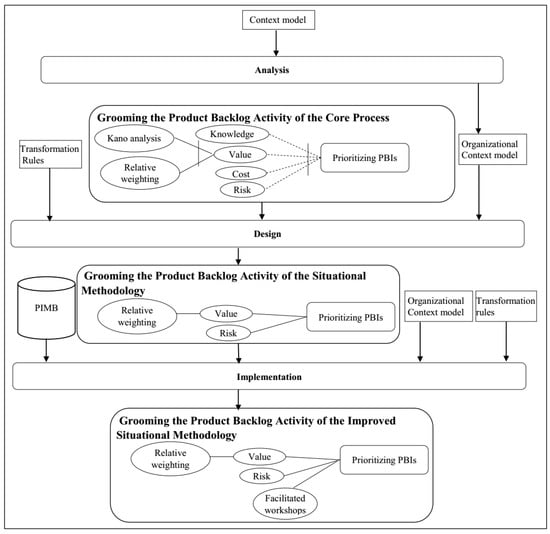

4.1.2. RQ2. Can the Specific Processes Produced from the SPrL Improve the Processes Currently Used in the Organization?

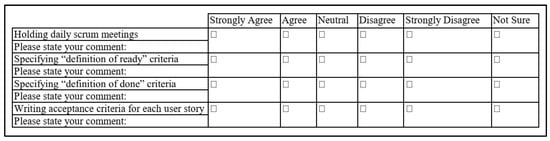

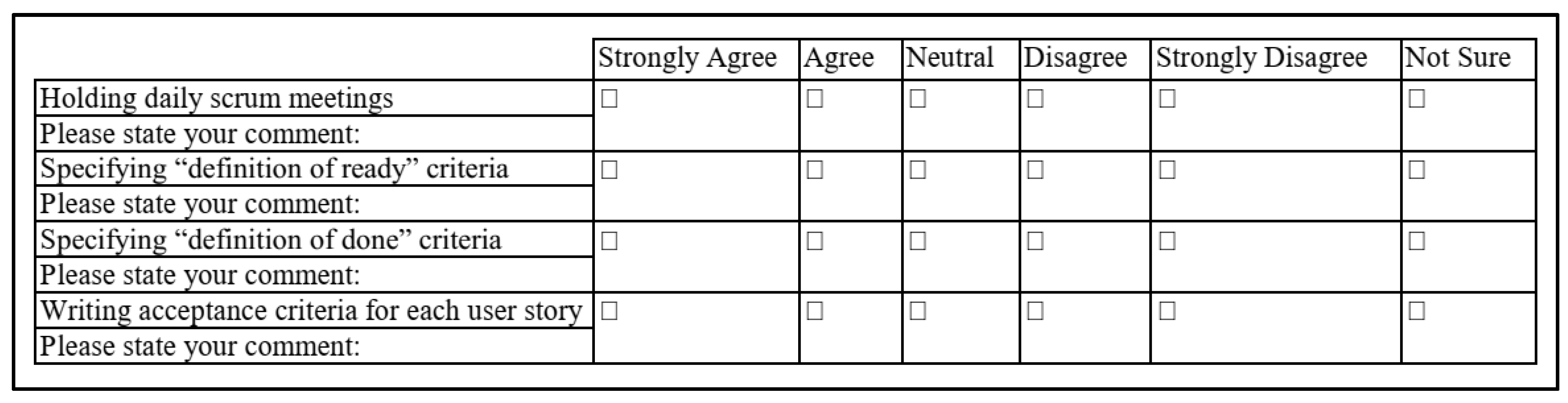

To answer this question, the subjects involved in project A.2 applied specific parts of the instantiated process in their upcoming sprint. A questionnaire was used to acquire feedback on the impact of applying the proposed process, which is provided in Appendix O. We asked the respondents to state their opinion on whether the process elements applied improved the process currently used; a list of the problem-solution pairs is provided in Appendix P. Figure 11 shows a summary of the responses given by six subjects to the questions. Almost all of the respondents agreed that the process elements applied had had a positive effect on improving the process currently used in project A.2.

Figure 11.

Summary of responses in project A.2.

In addition to the questions about the process elements applied, we also designed questionnaires for identifying the potential effects of some of the proposed process elements on improving the processes being used in projects A.1, A.3, and A.4. The questionnaires are provided in [64]. Analyzing the responses given by nine subjects to these questions indicated that the percentage of positive responses to the proposed practices was above 50%.

4.1.3. Discussion on Case Study Results

The main result of this study was creating an SPrL for company A that could be used for building specific processes. Although certain challenges were faced in applying the approach (RQ1), it had several benefits from the subjects’ point of view: documenting the processes being used in the company and identifying their deficiencies, gaining knowledge about the factors influencing the processes, and producing future processes in less time and with less effort. The results of the improvements showed that the proposed approach can indeed address the shortcomings of existing processes by infusing best practices through the top-down approach and also by using the PIMB method base (RQ2).

There are several threats to the validity of this case study, including the following:

- Internal validity: A potential threat to internal validity is subject fatigue; this was handled by planning interviews in multiple one-hour sessions. Another threat to internal validity is misinterpretations in the interviews; to mitigate this threat, in addition to the notes taken during interviews, sound recordings were produced to later be transcribed as part of analysis. The results of each interview session were also reported back to the subjects via email and face-to-face conversation (Member checking).

- Construct validity: A potential threat to construct validity is subject selection. Random subject selection was not possible, as we needed subjects with adequate knowledge about the processes used. However, viewpoints of the different roles involved were considered throughout the case study, as well as in the questionnaire designed for obtaining feedback about the proposed practices (Theory triangulation). Furthermore, a case study protocol was defined at the beginning of the study and was updated continuously afterwards (Audit trail).

- Conclusion validity: One potential threat to conclusion validity is the reliability of the study results. Reviewing the procedures selected for data collection and analysis by an expert (the second author), member checking, and theory triangulation helped mitigate this risk.

- External validity: An inherent problem of case studies is external validity. To mitigate this risk, we applied the approach on a real case. However, the study has been conducted in a company that based its software processes on Scrum; therefore, we cannot generalize the results to companies using other types of processes.

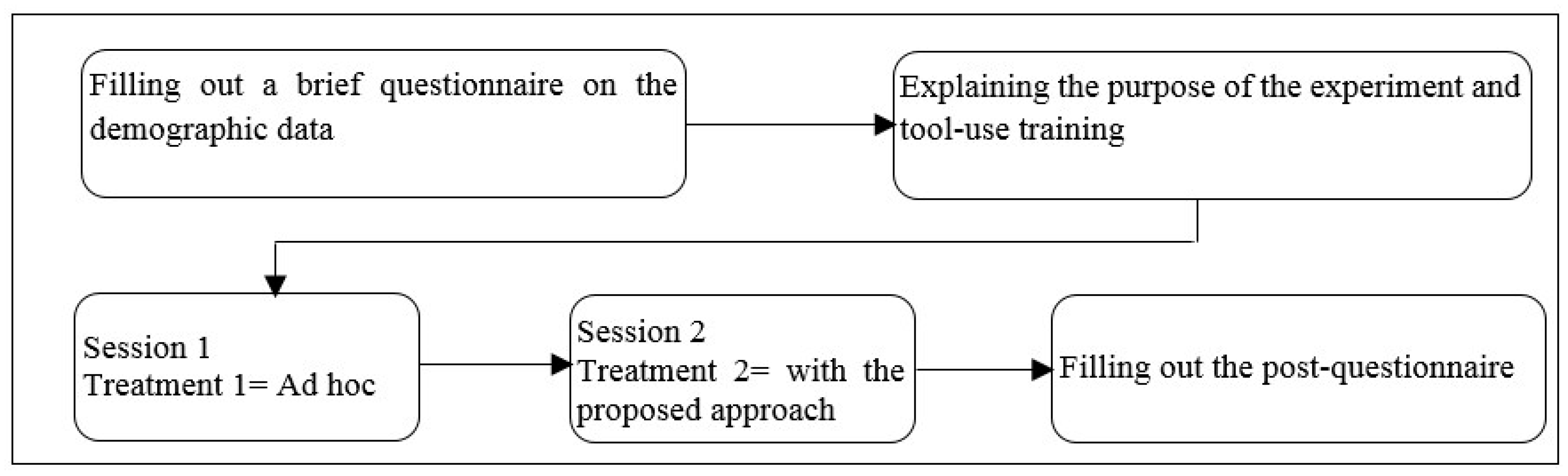

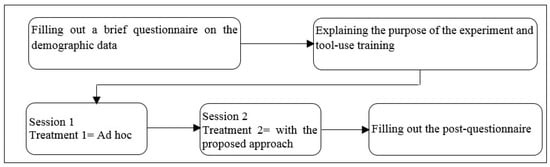

4.2. Experiment

The challenges of applying the proposed approach and its efficacy were scrutinized through the case study. However, the fundamental question still remained: how does process instantiation by the proposed approach (AE phase) compare to the ad hoc, manual approach that is commonly used to construct bespoke processes? An experiment was designed and executed to answer this question by assessing two quality attributes: usefulness and ease of use (Table 3). These attributes have previously been used for evaluating model-driven SME approaches [77]. The subjective and objective measures used in this experiment are shown in Table 3. As most usability studies use questionnaires to evaluate satisfaction [78], we designed a two-part questionnaire to gather the subjective data. The first part was designed to evaluate the users’ perceived usefulness and ease of use; this section was prepared based on the questionnaires defined by TAM [79], as it is the most widely used model for evaluating usefulness and ease of use based on subjective data [80]. The second part was designed to measure the completeness of the process produced (as a measure of its effectiveness). The objective measure was the time expended on defining the target process (completion time), which is considered as a measure of efficiency. The guidelines recommended by [81] were followed in designing the experiment. Before finalizing the design, a pilot run was performed with two subjects; these subjects were not involved in the main experiment. The details of the experiment and its results are explained in the following subsections.

Table 3.

Measures used in the experiment.

4.2.1. Definition, Planning, and Execution

The experiment’s goal was formulated as follows: Analyze the proposed approach as to usefulness and ease of use from the point of view of process engineers within the context of academia and industry. Usefulness is focused on evaluating whether the proposed approach helps build a specific process that satisfies all project/organization needs and adequately manages configuration complexity; therefore, through this question, we intend to verify that the solutions proposed by our approach do indeed address the shortcomings observed in previous approaches, including the following: Enhancing the core process, Managing configuration complexity, and Post-derivation enhancement. Ease of use is specifically focused on evaluating the ease of use of the proposed approach for constructing the SPrL and instantiating it to produce a specific process.

To achieve this goal, research questions were defined as follows:

RQ3.

What is the users’ perceived usefulness of the proposed approach?

RQ4.

What is the users’ perceived ease of use of the proposed approach?

RQ5.

To what extent does the proposed approach enhance efficiency?

RQ6.

To what extent does the proposed approach enhance effectiveness?

In order to compare the efficiency and ease of use of the proposed approach with the manual ad hoc alternative, we need subjects with knowledge on Scrum, model-driven engineering, and process engineering so that they can build an instance of Scrum by using their own tacit knowledge and then compare it with the results of using the proposed approach. Therefore, a total of 32 Computer Science graduates (MS or PhD) were invited to participate in the experiment, of which 14 subjects agreed to collaborate: eight of the subjects were professional developers, one subject was a post-doc student, and five were PhD students. These subjects were former members (12 subjects) or current members (2 subjects) of the Methodology Engineering (ME) Laboratory at the Department of Computer Engineering, Sharif University of Technology; we limited the subjects to ME-Lab members since it is the only laboratory in Iran that focuses on process engineering. Eleven subjects had already used Scrum in their past projects and three subjects had participated in process engineering courses covering Scrum. It should be mentioned that all of the subjects were either studying PhD abroad (12 subjects) or were involved in other industrial/research projects when this research was being conducted; therefore, none of them were familiar with the research or the tool. The “Sprint Execution” activity of Scrum and a hypothetical project situation were considered as experiment objects. The demographic data of the subjects and the description of the hypothetical situation are provided in Appendix Q and Appendix R, respectively.

One factor (SPrLE approach) with two treatments (Ad hoc and Proposed approach) was applied in the experiment. All the subjects applied both treatments, so this was a “Within-Subject” designed experiment. In the Ad hoc treatment (manual approach), subjects defined a Sprint Execution suitable for the hypothetical situation by using their own tacit knowledge. In the second treatment, subjects used the Medini QVT tool and the packages provided by the proposed approach (situational factors, transformations, and the Scrum metaprocess) for automatic resolution of the variabilities of the Scrum metaprocess to produce the target “Sprint Execution”; this was done by assigning values to the situational factors, and then executing the transformations. A training session was conducted for the subjects to ensure that they could work effectively with the tool. Since the subjects were geographically distributed, the experiment was executed online. The experiment’s design is illustrated in Figure 12. Independent and dependent variables of the experiment are presented in Table 4. Subjects used a timer to measure the time expended on creating the target process. At the end of each treatment, subjects submitted a snapshot of the resulting process along with the time it took to produce. After the execution of the experiment, subjects filled out a post-questionnaire, which is provided in Appendix S; the instruments used for executing the experiment are provided in Appendix T.

Figure 12.

Experiment setup.

Table 4.

Independent and dependent variables in the experiment.

To answer the research questions, subjective and objective data were analyzed as follows:

- Subjective data were of two types: quantitative data and qualitative data. To analyze the quantitative data, which were obtained from closed-ended questions, numerical values were assigned to the responses given to these questions (from 5 for “Strongly agree,” to 0 for “Not sure”). The minimum, maximum, and average values for each Likert item of the questionnaire were then calculated. Qualitative data were obtained from open-ended questions; responses to these questions were categorized in three groups: Problems and challenges, Benefits, and Suggestions for improving the approach.

- Objective data were obtained by measuring the time expended by the subjects in each treatment. The Wilcoxon signed-rank test was used to verify whether differences in time measurements were statistically significant.

4.2.2. Results

In this section, results of the experiment are explained through analyzing its four research questions.

RQ3. What Is the Users’ Perceived Usefulness of the Proposed Approach?

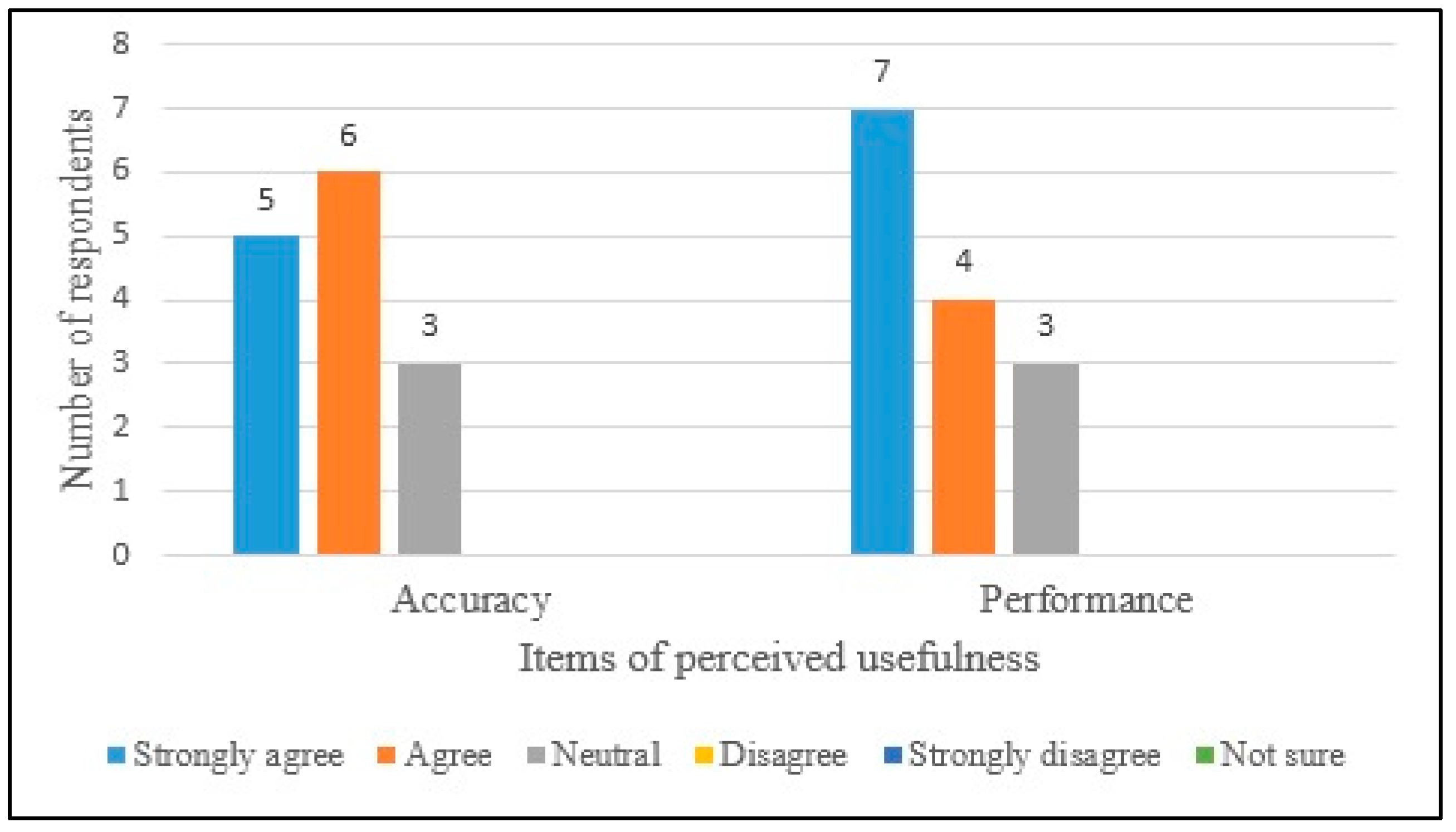

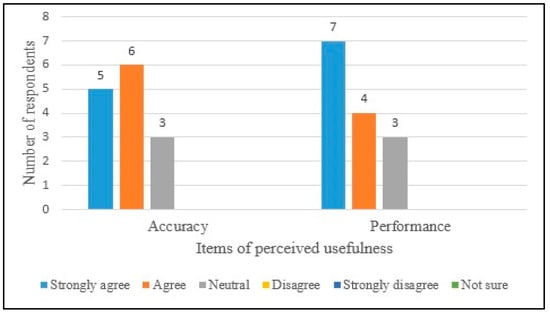

The distribution of the responses given to questions on perceived usefulness is shown in Figure 13. Analysis results are shown in Table 5. Most of the subjects answered “Strongly agree” or “Agree” to whether the proposed approach improved Accuracy and Performance (overall average: 4.21). This result was reinforced by the qualitative feedback; the answers given to the open-ended questions are provided in [64].

Figure 13.

Distribution of responses given to questions on perceived usefulness.

Table 5.

Analysis results on perceived usefulness.

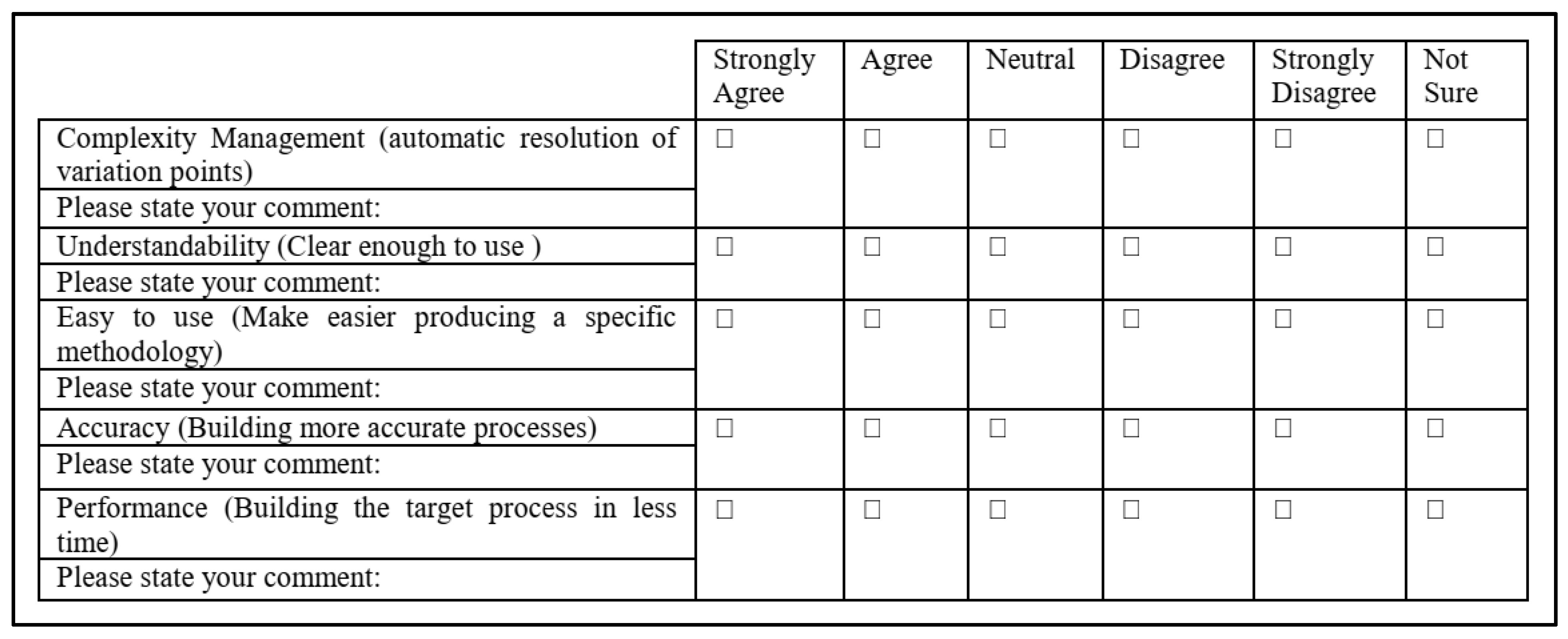

RQ4. What Is the Users’ Perceived Ease of Use of the Proposed Approach?

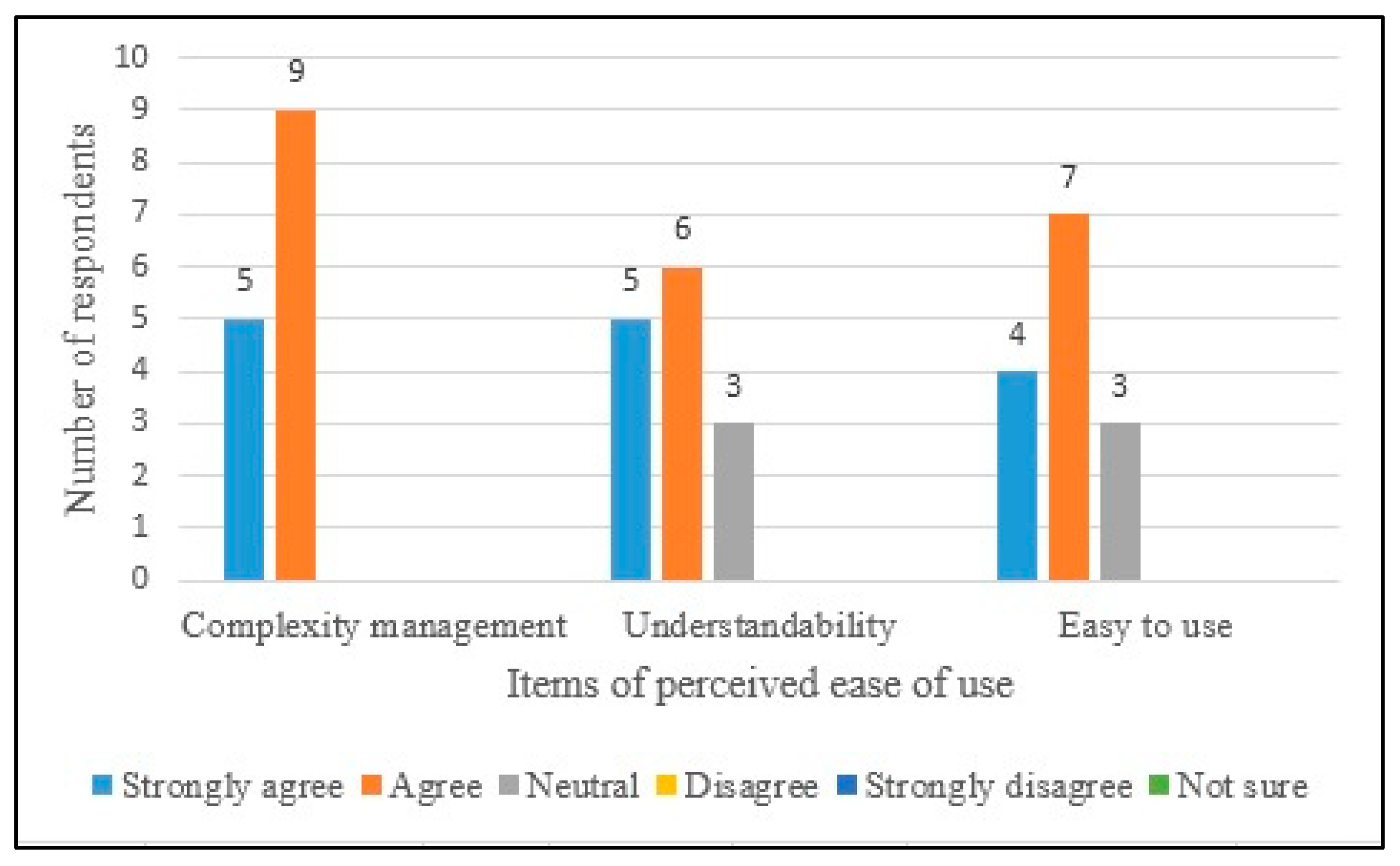

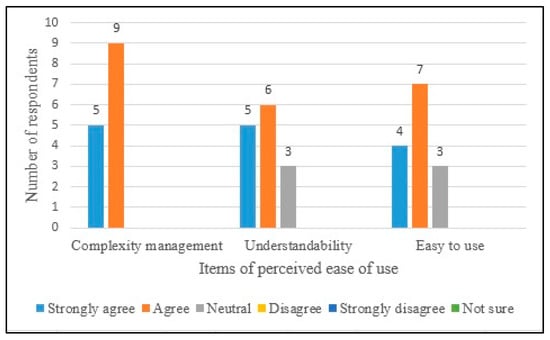

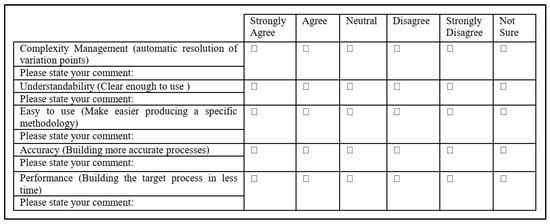

The distribution of the responses given to questions on perceived ease of use is shown in Figure 14. Analysis results are shown in Table 6. All of the subjects answered “Strongly agree” or “Agree” to whether the proposed approach supported Complexity Management, and most subjects answered “Strongly agree” or “Agree” to questions on the proposed approach’s Understandability and Ease of Use (overall average: 4.19). This result was reinforced by the qualitative feedback.

Figure 14.

Distribution of responses given to questions on perceived ease of use.

Table 6.

Analysis results on perceived ease of use.

RQ5. To What Extent Does the Proposed Approach Enhance Efficiency?

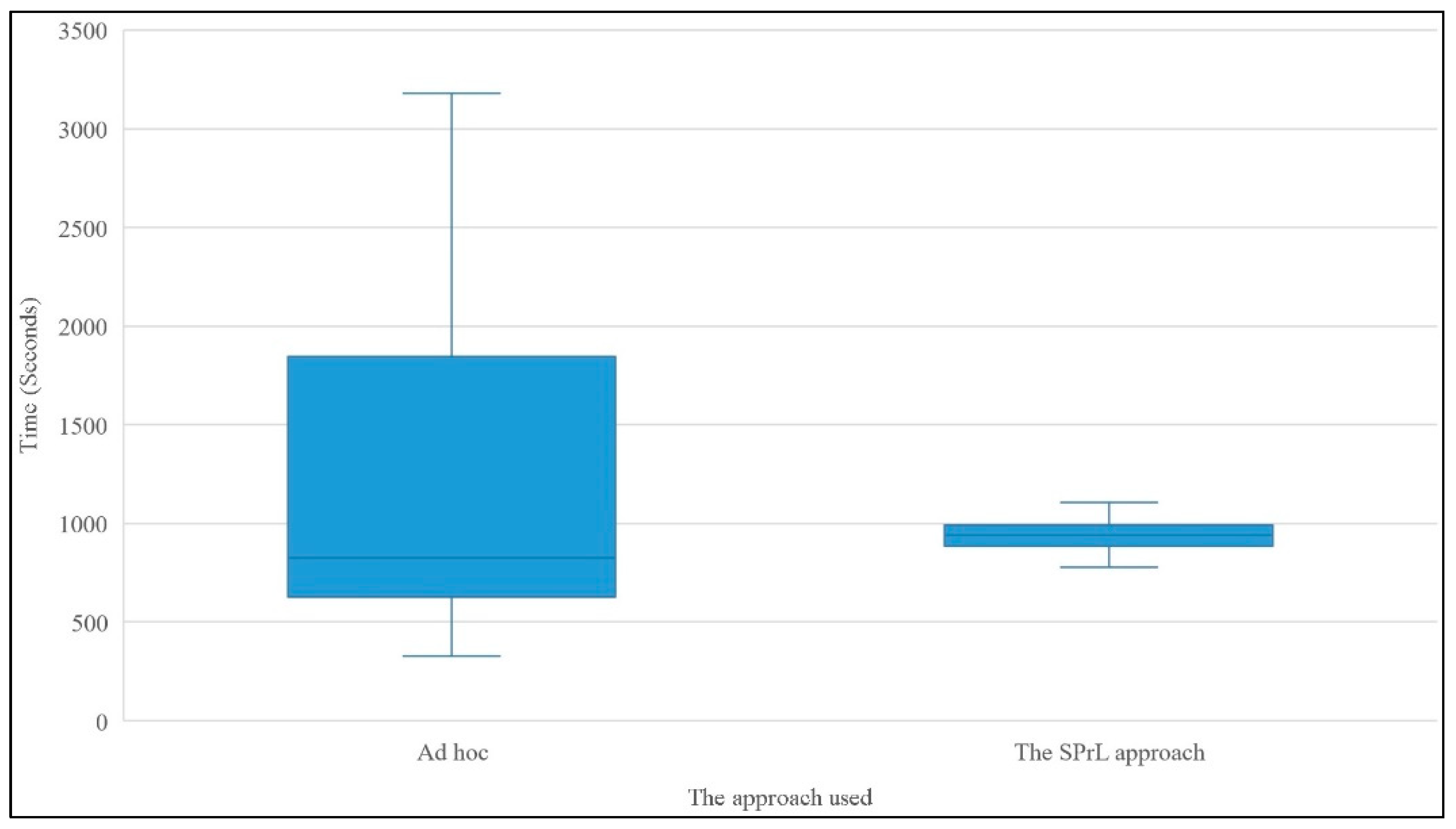

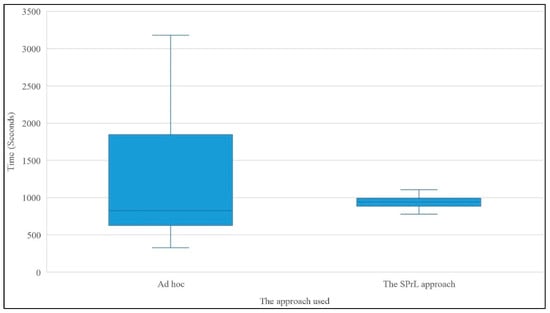

The subjects’ efficiencies are shown in Figure 15. The distribution of data in the box plots indicates that more than half of the subjects were more efficient in building an instance of Sprint Execution without using the SPrLE approach. The reason for this result was the high amount of time expended on assigning values to situational factors; the values assigned to situational factors for the hypothetical situation are provided in [64].

Figure 15.

Efficiency of subjects in the two treatments.

As shown in Figure 15, in the Ad hoc treatment, some of the subjects expended more time than others; however, in the second treatment, the times expended by different subjects were not significantly different. This indicates that the Ad hoc approach is relying, to a large extent, on expertise; whereas in the model-driven approach, the times expended are almost stable. Even though this stability is not always optimal, it is still interesting from a project management perspective.

The Wilcoxon signed-rank test was applied to verify whether differences in the times were statistically significant. This test was selected because the results of the Kolmogorov-Smirnov test (p = 0.06) indicated that a normal distribution could not be assumed. In the Wilcoxon test, p = 0.747 was obtained for an alpha level of 0.05. Since p > ∝, we could not reject H0. Thus, there was no significant difference in the times expended on the two treatments. However, the average time expended by the subjects in the second treatment (971.1 s) was lower than the average time expended in the first treatment (1229.7 s); this might show that using the proposed approach was generally more efficient than the alternative.

RQ6. To What Extent Does the Proposed Approach Enhance Effectiveness?

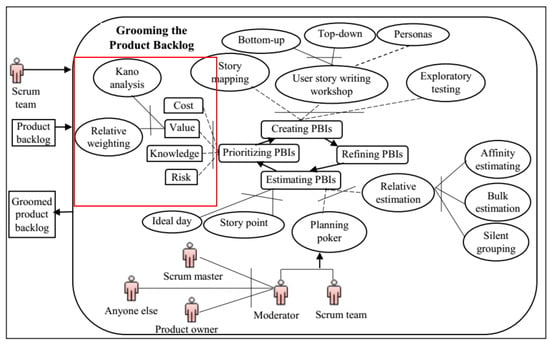

Figure 16 shows the result of executing the transformations in the tool (second treatment). To measure the effectiveness of the proposed approach, we compared the instances of “Sprint Execution” produced in the two treatments. Results showed that only 32.79% of the process elements shown in Figure 16 were identified by the subjects in the Ad hoc treatment. The details of this comparison are provided in Appendix U.

Figure 16.

Output of the tool.

In the post-questionnaire, we asked the subjects to state their opinions about the suitability of the process elements produced by the tool (Strongly agree = 5 to Not sure = 0); this section of the questionnaire was only filled out by subjects who had used Scrum in real projects (11 subjects). Details are provided in Appendix S. The average values for almost all of the process elements were greater than 4, indicating that subjects agreed with the suitability of almost all of the process elements.

4.2.3. Discussion on the Results of the Experiment