User Story Quality in Practice: A Case Study

Abstract

1. Introduction

2. Related Work

2.1. User Stories as Requirements

2.2. Requirements in Practice

3. The Study

3.1. The Hotline System

3.2. Analyst Recruitment and Profiles

4. Evaluation

- Completeness: Are all stakeholder issues (needs and wishes) specified in the requirements?

- Correctness: Do the requirements represent stakeholder issues? Some requirements may be incorrect because they restrict the solution space by enforcing an inconvenient solution. Requirements can also be incorrect because they specify something that the customer does not need.

- Verifiability: Is there a cost-effective method for checking that the requirements are met?

- Traceability: Can we trace business issues to requirements and test scripts to requirements?

Procedure

- We gave the analysts the four-page analysis report explained in Section 3.1. The analysts were invited to ask questions, but none of them did.

- We received 8 replies (replies A–H). After receiving the replies, we noticed that they covered the stakeholder issues poorly, so we asked the analysts whether they thought their replies covered all the issues mentioned in the analysis report. To our surprise, they all said “Yes.”

- The two authors assessed all replies independently, based on an agreed assessment protocol illustrated below. Each author spent two to three hours on each reply. For each reply, we made a tracking version of the analysis report, in which we indicated for each of the 30 issues where the reply dealt with it, if at all. We also made an annotated copy of the reply, in which we indicated, for each user story or requirement, which issue or issues it dealt with and whether it was a possible solution, a partial solution, a wrong solution, or did not cover the issue. The replies and our assessments are available in [31].

- We compared our independent assessments and settled all disagreements by scrutinizing the reply.

- We sent our joint evaluation to the analysts, asking them for comments. Some analysts pointed out a few mistakes in our evaluation, for instance, that we had missed requirements in their reply that dealt with some issue. We had follow-up discussions with them and easily agreed on these points.

5. Findings

- Reply A had 8 epics, called “backlog item#1#”, “backlog item #2#”, etc. Each of them had one user story (As a … I want …) with several acceptance criteria, each corresponding to a system functionality (roughly: the system shall …).

- Reply B had 12 high-level user stories (As a …), each comprising several sub-user stories (As a …).

- Reply C had around 28 user stories grouped into 3 user groups (IT user, supporter, and manager). The term “epic” was not mentioned.

- Reply D had 15 system-shall requirements, which we decided to treat as the “I-want-to” part of 15 user stories.

- Reply E had 77 user stories, organized in a group for each user type (IT-user, supporter, …).

- Reply F had 5 user stories, all starting with “As a supporter.” Further, there were two epics (New system and Statistical Analysis) with around 5 user stories.

- Reply G had 5 epics, e.g., Notifications and Features. Each epic had around 4 stories.

- Reply H had 2 epics (From the user’s and the supporter’s perspectives). Each had around 30 user stories organized in two levels.
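Despite their different groupings, most replies follow the same template: “As a (role), I want (goal), so that (benefit)”. As an illustration only, and not part of the study, the template can be sketched as a small data structure with a parser. The names `UserStory`, `STORY_PATTERN`, and `parse_user_story` are hypothetical, and the pattern recognizes only the “I want” phrasing, not variants such as “I should be able to” or “I wish to”.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserStory:
    role: str                # who wants it, e.g. "IT user"
    goal: str                # the "I want" part
    benefit: Optional[str]   # the "so that" part, if present


# Hypothetical pattern: "As a/an <role>, I want <goal>[, so [that] <benefit>]".
STORY_PATTERN = re.compile(
    r"^As an? (?P<role>.+?), I want (?P<goal>.+?)"
    r"(?:,? so(?: that)? (?P<benefit>.+?))?\.?$",
    re.IGNORECASE,
)


def parse_user_story(text: str) -> Optional[UserStory]:
    """Return the parts of a story, or None if the text does not fit the template."""
    match = STORY_PATTERN.match(text.strip())
    if match is None:
        return None
    return UserStory(match["role"], match["goal"], match["benefit"])


# Example taken from Reply B, User Story 1b.
story = parse_user_story(
    "As an IT user, I want to report printer problems so that I can print"
)
print(story)
```

A system-shall requirement, as in Reply D, does not fit this template, so the sketch returns `None` for it; the authors handled such requirements manually by treating them as the “I want” part of a story.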

5.1. Completeness

5.2. Correctness

- A4: User: When can I expect a reply? The issue has been solved, but the user does not know.

- Reply: As the requester, I should be able to see what the expected completion time of my request is, based on prior statistics of a similar problem (Reply F, Lines 56, 57)

- A24: Supporter: difficult to specify a cause initially. Cannot be changed later.

- Management: No list of potential causes, and hard to make one.

- Reply: As a line 1 supporter, I want to be able to request an additional cause to be added to the root causes list without delaying the ticket closure so that the list is updated properly (Reply E, Lines 75, 76).

- A4: User: When can I expect a reply? The issue has been solved, but the user does not know.

- Reply: As an IT user, I want to be able to look up the status of a request I have made, so I can track its progress in detail and plan my work (Reply B, 3c).

- A11: Supporter: nobody left on 1st line.

- Reply: As a supporter, I should be notified if my team members move from first line to second line or vice versa so that I know the current team lists. (Reply H, d-ix).

5.3. Verifiability

- A25: Supporter: Today it is hard to record additional comments.

- Reply: As a hotline supporter, I want to be able to add additional information to any request, so that I can help the request be resolved sooner. (Reply B, 9f).

- A14: Supporter: hard to spot important requests among other requests.

- Reply: As a line 1 supporter, I want to sort all open issues by different methods so that I can easily locate an issue I want to work on. (Reply E, Lines 84, 85).

5.4. Traceability

6. Discussion

6.1. How a Good Idea Is Corrupted

- The analyst invents a user. As an example, look at issue A11 (Table A1): “It happens that a supporter moves to second line without realizing that nobody is left on first-line”. Analyst B dealt with this by inventing a manager (Reply B, 12a): “As a manager, I want the support system to always have a person available for the users, so that the users are not angry”. Actually, this small hotline does not have a manager. The hotline is part of the IT department, which has many other duties. Supporters find out themselves who does what, so the requirement was wrong. The customer did not want it. As another example, issue A4 (Table A1) states: “If the user cannot have his problem solved right away, it is annoying not knowing when it will be solved”. Analyst F made the “system” a user (Reply F, Line 25): “As the new system, I should poll all devices.”

- The analyst invents a solution and claims that a user wants it. As an example, Reply C (Lines 29, 30) said: “As an IT User, I want a reference number for my request, so that if I need to call support again, they will be able to easily look up my request”. This user story was not related to a specific issue. Further, in our experience, IT users hate such numbers; they much prefer that hotline staff identify the request by the IT user’s name. So, the requirement was wrong: it was a bad solution. The reference number was convenient for other reasons, but not because the user wanted it.

- The analyst ignores the issue. This happened often, and as a result, we saw a low hit rate: On average, each reply covered only 33% of the issues.

- “As a user I wish to report an IT-request to service desk, in order to get it solved as soon as possible so I can continue my work” (Reply A, User Story 1, in relation to issue A3 in Table A1).

- “As an IT user, I want to report printer problems so that I can print” (Reply B, User Story 1b, in relation to issue A2 in Table A1).

- “As a supporter, I want a simple template to create requests, so it is fast and easy to create simple requests” (Reply C, Lines 36, 37, in relation to issue A7 in Table A1).

- “As a Supporter, I want to be able to view requests from a mobile device, so if I need information away from the office it is available” (Reply C, Lines 93, 94, in relation to issue A18 in Table A1).

- “As a Line supporter, I want to view all open issues so that I can action them” (Reply E, Line 20, in relation to issues A15 and A19 in Table A1).

6.2. Development Contexts

6.3. Implementing a User Story

6.4. Comparison with Problem-Oriented Requirements

7. Threats to Validity

- Participant Selection: Since it is hard to recruit qualified analysts who will commit several hours to such a study, the sample of analysts was small and may not represent the entire population of IT practitioners. To mitigate this threat, the two authors recruited the analysts from their networks independently, and we only recruited analysts who had reasonable professional or academic experience with user stories. As a result, there was some diversity in the sample in terms of the analysts’ education, location, and experience. It could be argued that some analysts did not have sufficient experience to participate in this study. According to Table 1, however, six analysts had used user stories professionally as part of their daily work, and only two (analysts B and H) had only theoretical knowledge of user stories. When we compared the quality of these two replies with that of the analysts who had professional experience with user stories, there was no significant difference. Nonetheless, with such a small sample, we do not claim that the results of this study are generalizable. The purpose of the study was not to obtain generalizable, statistically significant results, but to explore new territory: the quality of user stories as requirements in practice.

- Participant Incentive: Half of the analysts (A, B, D, F) were not financially compensated for their involvement in the case study. The participants simply volunteered to do it as a challenge. It could be argued that these analysts did not have the incentive to write quality user stories. Nevertheless, when we compared the quality of their replies with that of the analysts who were paid (C, E, G, H), there was no sign of the paid analysts performing better. In fact, analyst B scored the highest in terms of completeness (43%), followed by analyst D (37%). Both analysts belong to the group that was not financially compensated.

- Case Study Setup: It could be argued that user stories are a natural language tool used for brainstorming. As such, it is more natural to let analysts talk to the client and develop the user stories gradually as opposed to basing the user stories on a pre-made report. Such a setting would be more realistic but would be costly and impractical. Nevertheless, we recognize that this is a limitation of our study. Consequently, we mitigated this issue by inviting the analysts to ask the authors questions as they would ask a customer in a realistic setting, but nobody did. We also asked them whether they were sure they covered everything, and they all said yes. Further, the analysts received our evaluation for comments. There were few comments, and they were easily reconciled.

- Author Bias: One of the authors has used an alternative technique to write requirements, problem-oriented requirements [36]. This might cause him to be biased against user stories. We mitigated this threat by getting consensus on the evaluation from both authors and the analysts, as explained above.

- Construct Validity: The quality of user stories is not something that can be directly observed or measured. To avoid subjective measurement of effectiveness, we relied on the IEEE 830 requirements criteria to measure quality. Further, as discussed in Section 4, we implemented several checks and balances to maintain objectivity. First, the authors assessed the criteria independently, reconciled the differences, and arrived at a consensus through inter-coder agreement. Second, in assessing the criteria, we deliberately avoided harsh judgments. For instance, as explained in Section 4, we considered a case fully covered even if the reply imposed an inconvenient solution, and partly covered even if the reply just mentioned it. Third, the analysts were invited to challenge our assessment and measurement. We exchanged a few e-mails in cases where there were doubts about our assessment, and we easily agreed. Fourth, we invited experts and researchers to challenge our assessment by making it publicly available in [31].

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| ID | Stakeholder Issue (Need or Wish) | Reply A | Reply B | Reply C | Reply D | Reply E | Reply F | Reply G | Reply H | S | Coverage A–H |

|---|---|---|---|---|---|---|---|---|---|---|---|

| A1 | The Z-Department is a department of the Danish government. It has around 1000 IT users. It has its own hotline (help desk). They are unhappy with their present open-source system for hotline support and want to get a better one. They do not know whether to modify the system they have or buy a new one in a tender process. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0% |

| A2 | The users encounter problems of many kinds. For instance, they may have forgotten their password, so they cannot start their work; or the printer lacks toner; or they cannot remember how to make Word write in two columns. The problem may also be to repair something, for instance a printer, or to order a program the user needs. | 0 | ½ | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 19% |

| A3 | The easiest solution is to phone hotline or walk into their office. In many cases, this solves the problem right away. However, hotline prefers to receive the problem request by e-mail to hotline@… Sometimes this is impossible, for instance if the problem is that the user has forgotten his password. | ½ | 1 | 0 | ½ | 1 | 0 | 0 | 1 | 1 | 50% |

| A4 | If the user cannot have his problem solved right away, it is annoying not knowing when it will be solved. How often will he for instance have to go to the printer to check whether it has got toner now? In many cases the problem has been solved, but the user does not know. | 0 | 1 | 1 | ½ | ½ | 1 | ½ | ½ | 1 | 63% |

| A5 | The present support system allows the user to look up his problem request to see what has happened, but it is inconvenient and how often should he look? | 0 | 1 | 0 | 0 | ½ | 0 | 0 | ½ | 1 | 25% |

| A6 | Hotline is staffed by supporters. Some supporters are first line, others are second line. First-line supporters receive the requests by phone or e-mail, or when the user in person turns up at the hotline desk. | ½ | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 1 | 56% |

| A7 | In busy periods, a first-line supporter may receive around 50 requests a day. Around 80% of the requests can be dealt with right away, and for these problems it is particularly hard to ensure that supporters record them for statistical use. | 0 | ½ | ½ | 0 | 0 | 0 | ½ | 0 | 1 | 19% |

| A8 | The remaining 20% of the requests are passed on to second line. Based on the problem description and talks with the user, first line can often give the request a priority and maybe an estimated time for the solution. (Experience shows that users should not be allowed to define the priority themselves, because they tend to give everything a high priority.) | 0 | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 1 | 63% |

| A9 | Half of the second-line requests are in principle easy but cannot be dealt with immediately. The supporter may have to move out of the office, for instance to change toner in the printer or help the user at his own PC. Usually this ends the request, but it may also turn into a long request because a specialist or a spare part is needed. Often the supporter visits several locations when he moves out of the office. | 0 | 0 | ½ | 0 | 0 | 0 | ½ | 1 | 1 | 19% |

| A10 | Around 10% of all problems are long requests because the problem has to be transferred to a hotline person with special expertise, or because spare parts and expertise have to be ordered from external sources. Transferring the problem often fails. The supporter places a yellow sticker on the expert’s desk, but the stickers often disappear. Or the expert misunderstands the problem. For this reason, it is important that the expert in person or by phone can talk with the supporter who initially received the request, or with the user himself. | ½ | ½ | ½ | ½ | 0 | 0 | ½ | 0 | 1 | 31% |

| A11 | There are 10–15 employees who occasionally or full time serve as supporters. They know each other and know who is an expert in what. The supporters frequently change between first and second line, for instance to get variation. It happens, unfortunately, that a supporter moves to second line without realizing that nobody remains in first line. | 1 | 0 | 0 | 0 | 0 | 0 | ½ | ½ | 1 | 25% |

| A12 | The request is sometimes lost because a supporter has started working on it, but becomes ill or goes on vacation before it is finished. | 0 | ½ | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 19% |

| A13 | Managers ask for statistics of frequent and time-consuming requests in order to find ways to prevent the problems. However, with the present system it is cumbersome to record the data needed for statistics. Gathering this data would also make it possible to measure how long hotline takes to handle the requests. | ½ | ½ | 1 | 0 | 1 | 0 | 0 | 0 | 1 | 38% |

| A14 | In busy periods, around 100 requests may be open (unresolved). Then it is hard for the individual supporter to survey the problems he is working on and see which problems are most urgent. | ½ | ½ | 1 | 0 | ½ | ½ | ½ | 0 | 1 | 44% |

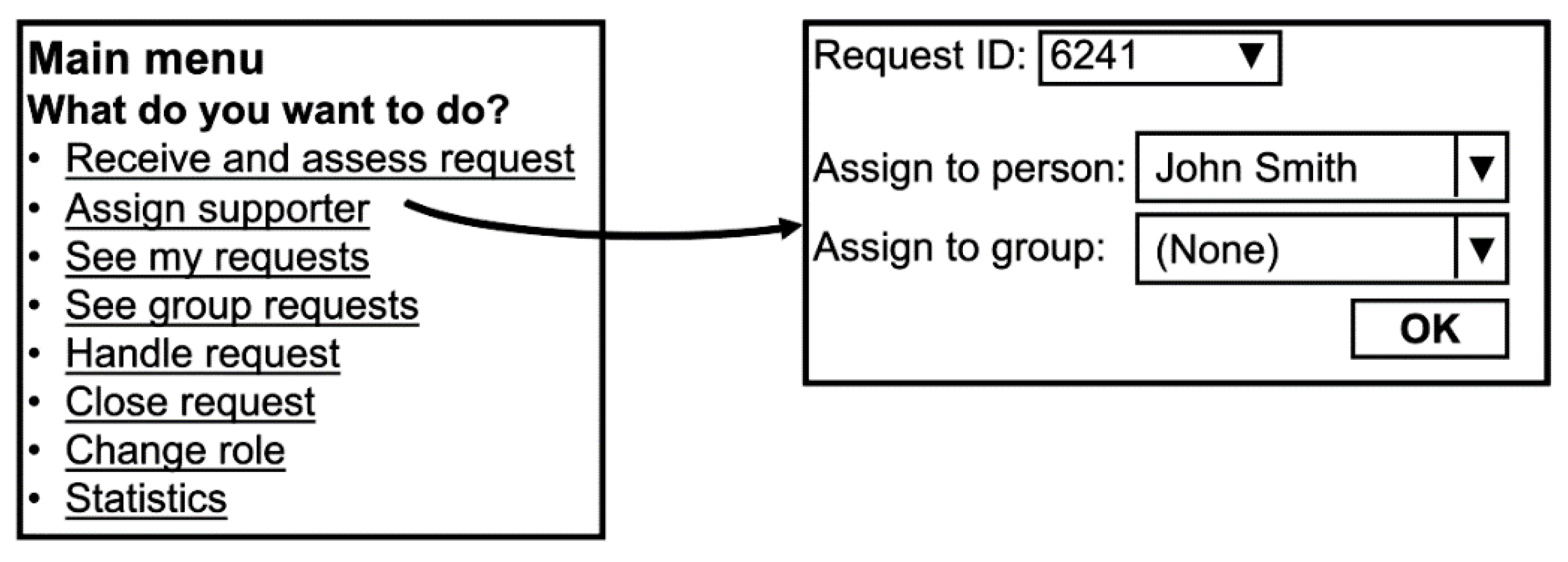

| A15 | The present system automatically collects all e-mails sent to hotline@… and put them in a list of open requests. Figure 3 [was enclosed] shows an example of such a list. You can see the request number (Req), the user (Sender, i.e., his e-mail address), the supporter working on it (Owner), and how long ago the request was received (Age). | ½ | 0 | 1 | 0 | ½ | 0 | 0 | ½ | 1 | 31% |

| A16 | You can also see when someone last looked at the request (ActOn). However, this is not really useful. It would be better to see when the request should be closed according to what the user expects. It would be nice if the system warned about requests that are not completed on time. | 1 | ½ | 0 | 1 | ½ | 0 | 0 | 0 | 1 | 38% |

| A17 | When a user calls by phone or in person, the supporter creates a new request. It will appear in the normal list of requests. However, when the supporter can resolve the request right away, he often does not record it because it is too cumbersome. This causes misleading statistics. | 0 | ½ | 1 | 0 | ½ | 0 | ½ | 0 | 1 | 31% |

| A18 | As you can tell from the figure, the system cannot handle the special Danish letters (æ, ø, å), and it is not intuitive what the various functions do. The user interface is in English, which is the policy in the IT-department. The system has a web-based user interface that can be used from Mac (several supporters use Mac) and mobile. However, it has very low usability and is rarely used. | ½ | 0 | 0 | 1 | 1 | 0 | ½ | 0 | 1 | 38% |

| A19 | Anyway, the basic principle is okay. A supporter keeps the list on the screen so he can follow what is going on. He can open an incoming request (much the same way as you open an e-mail), maybe take on the request (for instance by sending a reply mail), classify the case according to the cause of the problem (printer, login, etc.), give it a priority, transfer it to someone else, etc. When the request has been completed, the supporter closes it, and the request will no longer be on the usual list of open requests. | ½ | ½ | 0 | ½ | 1 | 0 | ½ | ½ | 1 | 44% |

| A20 | As you can see in Figure 3 [was enclosed], Status is not used at all. It is too cumbersome and the present state names are confusing. | 0 | 0 | 1 | ½ | ½ | ½ | ½ | ½ | 1 | 44% |

| A21 | Some of the supporters have proposed to distinguish between these request states: First-line (waiting for a first-line supporter), Second-line (waiting for a second-line supporter), Taken (the request is currently being handled by a supporter), Parked (the request awaits something such as ordered parts), Reminder (the request has not closed in due time), and Closed (handled, may be reopened). | ½ | ½ | ½ | 1 | ½ | 0 | 1 | ½ | 1 | 56% |

| A22 | Open requests are those that are neither parked, nor closed. | 0 | ½ | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 6% |

| A23 | For statistical purposes and to support the resolution of the request, it would be useful to keep track of when a request has changed state. | 0 | 0 | 0 | ½ | 0 | 0 | 1 | 0 | 1 | 19% |

| A24 | The present system can be configured to record a problem cause, but then the system insists that a cause be recorded initially, although the real cause may not be known until later. In addition, somebody must set up a list of possible causes, and this is a difficult task. As a result, causes are not recorded, and statistics are poor. | 0 | 0 | 0 | 1 | ½ | 0 | ½ | 0 | 1 | 25% |

| A25 | While a long request is handled, it may receive additional information from the original user as well as from supporters. In the present system it is cumbersome to record this, and as a result the information may not be available for the supporter who later works on the request. | ½ | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 81% |

| A26 | A supporter can set the system to send an ordinary e-mail to himself when he has to look at some request. This is particularly useful for second-line supporters who concentrate on other tasks until they are needed for support. | 0 | 1 | 0 | 0 | ½ | 0 | ½ | 0 | 1 | 25% |

| A27 | The Z-Department uses Microsoft Active Directory (AD) to keep track of employee data, e.g., the user’s full name, phone number, office number, e-mail address, username, password and the user’s access rights to various systems. The support system should retrieve data from AD and not maintain an employee file of its own. | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 38% |

| A28 | When getting a new system, they imagine running the new and old system at the same time until all the old requests have been resolved. However, if the old requests can be migrated to the new system at a reasonable price, it would be convenient. | 1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 25% |

| A29 | They expect to operate the system themselves and handle security as they do for many other systems. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0% |

| A30 | They imagine connecting the new hotline system to systems that can generate hotline requests when something needs attention. Examples: The system that monitors the servers could generate a request when a server is down. Systems that keep track of employee’s loan of various items, could generate a request when the item is not returned on time. | ½ | ½ | 0 | 0 | 0 | ½ | 0 | 0 | 1 | 19% |

| Total (rounded down) | | 9 | 13 | 11 | 11 | 11 | 5 | 10 | 8 | 29 | Average 33% |
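The percentages in the table can be reproduced with simple arithmetic. The sketch below assumes, based on the table values rather than on an explicit statement by the authors, that each reply scores 0, ½, or 1 per issue, that the coverage of an issue is the mean score over the eight replies A–H (column S is excluded), and that percentages are rounded half up. The function names and the selection of three sample rows are illustrative.

```python
from fractions import Fraction
from math import floor

HALF = Fraction(1, 2)


def percent(value: Fraction) -> int:
    """Convert a fraction to a whole percentage, rounding halves up."""
    return floor(value * 100 + HALF)


def issue_coverage(scores) -> int:
    """Mean score of one issue across the replies, as a whole percentage."""
    return percent(sum(scores, Fraction(0)) / len(scores))


# Scores for replies A-H, copied from three rows of the table.
ROWS = {
    "A3": [HALF, 1, 0, HALF, 1, 0, 0, 1],
    "A4": [0, 1, 1, HALF, HALF, 1, HALF, HALF],
    "A25": [HALF, 1, 1, 0, 1, 1, 1, 1],
}

# Per-reply totals (rounded down), copied from the last row of the table.
TOTALS = {"A": 9, "B": 13, "C": 11, "D": 11, "E": 11, "F": 5, "G": 10, "H": 8}
ISSUE_COUNT = 30

coverage = {issue: issue_coverage(scores) for issue, scores in ROWS.items()}
completeness = {r: percent(Fraction(t, ISSUE_COUNT)) for r, t in TOTALS.items()}
average = percent(Fraction(sum(TOTALS.values()), len(TOTALS) * ISSUE_COUNT))

print(coverage)      # per-issue coverage for the three sample rows
print(completeness)  # per-reply completeness out of 30 issues
print(average)       # average completeness across replies A-H
```

Under these assumptions, the sketch yields 43% for Reply B and 37% for Reply D, matching the completeness figures quoted in Section 7, and an average of 33%.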

References

1. Beck, K.; Andres, C. Extreme Programming Explained: Embrace Change; Addison-Wesley: Boston, MA, USA, 2004.

2. Cohn, M. User Stories Applied: For Agile Software Development; Addison-Wesley Professional: Boston, MA, USA, 2004.

3. Jeffries, R.; Hendrickson, M.; Anderson, A.; Hendrickson, C. Extreme Programming Installed; Addison-Wesley Professional: Boston, MA, USA, 2000.

4. Beck, K.; Fowler, M. Planning Extreme Programming; Addison-Wesley Professional: Boston, MA, USA, 2000.

5. Cohn, M. Agile Estimating and Planning; Pearson: Upper Saddle River, NJ, USA, 2005.

6. Wang, X.; Zhao, L.; Wang, Y.; Sun, J. The Role of Requirements Engineering Practices in Agile Development: An Empirical Study. In Requirements Engineering; Springer: Berlin/Heidelberg, Germany, 2014; pp. 195–209.

7. Dimitrijević, S.; Jovanović, J.; Devedžić, V. A comparative study of software tools for user story management. Inf. Softw. Technol. 2015, 57, 352–368.

8. Lucassen, G.; Dalpiaz, F.; Werf, J.M.; Brinkkemper, S. The Use and Effectiveness of User Stories in Practice. In Requirements Engineering: Foundation for Software Quality, Proceedings of the 22nd International Working Conference, REFSQ 2016, Gothenburg, Sweden, 14–17 March 2016; Springer: Cham, Switzerland, 2016; pp. 205–222.

9. Cao, L.; Ramesh, B. Agile Requirements Engineering Practices: An Empirical Study. IEEE Softw. 2008, 25, 60–67.

10. Ramesh, B.; Cao, L.; Baskerville, R. Agile requirements engineering practices and challenges: An empirical study. Inf. Syst. J. 2010, 20, 449–480.

11. IEEE Computer Society. 830-1998-IEEE Recommended Practice for Software Requirements Specifications; IEEE: New York, NY, USA, 2009.

12. Lucassen, G.; Dalpiaz, F.; Werf, J.M.; Brinkkemper, S. Forging High-Quality User Stories: Towards a Discipline for Agile Requirements. In Proceedings of the 23rd IEEE International Conference on Requirements Engineering, Ottawa, ON, Canada, 24–28 August 2015.

13. Savolainen, J.; Kuusela, J.; Vilavaara, A. Transition to Agile Development-Rediscovery of Important Requirements Engineering Practices. In Proceedings of the 18th IEEE International Requirements Engineering Conference, Sydney, NSW, Australia, 27 September–1 October 2010.

14. Daneva, M.; Veen, E.V.; Amrit, C.; Ghaisas, S.; Sikkel, K.; Kumar, R.; Wieringa, R. Agile requirements prioritization in large-scale outsourced system projects: An empirical study. J. Syst. Softw. 2013, 86, 1333–1353.

15. Ernst, N.A.; Murphy, G.C. Case studies in just-in-time requirements analysis. In Proceedings of the Second IEEE International Workshop on Empirical Requirements Engineering (EmpiRE), Chicago, IL, USA, 25 September 2012.

16. Paetsch, F.; Eberlein, A.; Maurer, F. Requirements engineering and agile software development. In Proceedings of the Twelfth IEEE International Workshops on Enabling Technologies: Infrastructure for Collaborative Enterprises, Linz, Austria, 11 June 2013; pp. 308–313.

17. Behutiye, W.; Karhapää, P.; Costal, D.; Oivo, M.; Franch, X. Non-functional Requirements Documentation in Agile Software Development: Challenges and Solution Proposal. In Product-Focused Software Process Improvement, Proceedings of the 18th International Conference, PROFES 2017, Innsbruck, Austria, 29 November–1 December 2017; Felderer, M., Méndez Fernández, D., Turhan, B., Kalinowski, M., Sarro, F., Winkler, D., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2017; Volume 10611.

18. Farid, W.M.; Mitropoulos, F.J. NORMATIC: A visual tool for modeling Non-Functional Requirements in agile processes. In Proceedings of the IEEE Southeastcon, Orlando, FL, USA, 15–18 March 2012; pp. 1–8.

19. Wake, B. INVEST in Good Stories, and SMART Tasks. 2003. Available online: http://xp123.com/articles/invest-in-good-stories-and-smart-tasks (accessed on 16 October 2020).

20. Heck, P.; Zaidman, A. A Quality Framework for Agile Requirements: A Practitioner’s Perspective. arXiv 2014.

21. Wautelet, Y.; Heng, S.; Kolp, M.; Mirbel, I. Unifying and Extending User Story Models. In Advanced Information Systems Engineering, Proceedings of the 26th International Conference, CAiSE 2014, Thessaloniki, Greece, 16–20 June 2014; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8484.

22. Wautelet, Y.; Heng, S.; Kolp, M.; Mirbel, I.; Poelmans, S. Building a rationale diagram for evaluating user story sets. In Proceedings of the 2016 IEEE Tenth International Conference on Research Challenges in Information Science (RCIS), Grenoble, France, 1–3 June 2016; pp. 1–12.

23. Wautelet, Y.; Velghe, M.; Heng, S.; Poelmans, S.; Kolp, M. On Modelers Ability to Build a Visual Diagram from a User Story Set: A Goal-Oriented Approach. In Requirements Engineering: Foundation for Software Quality, Proceedings of the 24th International Working Conference, REFSQ 2018, Utrecht, The Netherlands, 19–22 March 2018; Lecture Notes in Computer Science; Kamsties, E., Horkoff, J., Dalpiaz, F., Eds.; Springer: Cham, Switzerland, 2018; Volume 10753.

24. Tsilionis, K.; Maene, J.; Heng, S.; Wautelet, Y.; Poelmans, S. Conceptual Modeling Versus User Story Mapping: Which is the Best Approach to Agile Requirements Engineering? In Research Challenges in Information Science, Proceedings of the 15th International Conference, RCIS 2021, Limassol, Cyprus, 11–14 May 2021; Lecture Notes in Business Information Processing; Cherfi, S., Perini, A., Nurcan, S., Eds.; Springer: Cham, Switzerland, 2021; Volume 415.

25. Condori-Fernandez, N.; Daneva, M.; Sikkel, K.; Wieringa, R. A Systematic Mapping Study on Empirical Evaluation of Software. In Proceedings of the Third International Symposium on Empirical Software Engineering and Measurement, Lake Buena Vista, FL, USA, 15–16 October 2009; pp. 502–505.

26. Dalpiaz, F.; Sturm, A. Conceptualizing Requirements Using User Stories and Use Cases: A Controlled Experiment. REFSQ 2020: Requirements Engineering: Foundation for Software Quality; Springer: Cham, Switzerland, 2020.

27. Dalpiaz, F.; Gieske, P.; Sturm, A. On deriving conceptual models from user requirements: An empirical study. Inf. Softw. Technol. 2021, 131, 106484.

28. Inayat, I.; Salim, S.S.; Marczak, S.; Daneva, M.; Shamshirband, S. A systematic literature review on agile requirements engineering practices and challenges. Comput. Hum. Behav. 2015, 51, 915–929.

29. Lauesen, S.; Kuhail, M.A. Use Cases versus Task Descriptions. In Requirements Engineering: Foundation for Software Quality; Lecture Notes in Computer Science; Berry, D., Franch, X., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6606.

30. De Lucia, A.; Qusef, A. Requirements Engineering in Agile Software Development. J. Emerg. Technol. Web Intell. 2003, 2, 212–220.

31. User Story Experiment Assignment and Replies. Available online: http://www.itu.dk/~slauesen/UserStories/ (accessed on 25 March 2021).

32. Atlassian. Jira Software Tool. Available online: https://www.atlassian.com/software/jira (accessed on 19 March 2021).

33. Gotel, O.C.; Finkelstein, A.C. An Analysis of the Requirements Traceability Problem. In Proceedings of the IEEE International Conference on Requirements Engineering, Colorado Springs, CO, USA, 18–22 April 1994; pp. 94–101.

34. Consortium, A.B. The DSDM Agile Project Framework Handbook; Buckland Media Group: Dover, UK, 2014.

35. Kulak, D.; Guiney, E. Use Cases: Requirements in context. ACM SIGSOFT Softw. Eng. Notes 2001, 26, 101.

36. Lauesen, S. Problem-Oriented Requirements in Practice–A Case Study. In Requirements Engineering: Foundation for Software Quality; Springer: Cham, Switzerland, 2018.

| Analyst | Position/Country | Education | Experience |

|---|---|---|---|

| A | A team of two consultants (Denmark). | MS in computer science. | The team has extensive industry experience and has taught their own version of user stories for 7 years. |

| B | A researcher (Sweden). | Ph.D. in computer science. | Modest industry experience. |

| C | A software engineer (USA). | BS in computer science. | Industrial experience in a corporation for 4 years. She used user stories extensively in her work as part of specifying requirements. |

| D | A software engineering consultant (USA). | MS in computer science. | More than 4 years of professional experience in a corporation. He has written user stories using Jira Software as part of his work. |

| E | A business analyst (UAE). | BS in Management Information Systems (MIS). | She has experience in managing and analyzing projects for more than a year. She writes user story requirements as a daily part of her job. |

| F | A software engineer (USA). | BS in Computer Science. | He has industry experience at various corporations for more than 3 years. He has used user stories as part of his job. |

| G | A software engineer (USA). | BS in Computer Science. | He has industry experience at a corporation for more than 2 years. He has working experience with user stories as his employer uses them to define requirements. |

| H | A researcher (USA). | MS in Computer Science. | He has industry experience at various US-based companies working with data science, quality assurance, and software development. |

| S | Soren Lauesen | MS in math and physics. | This was not a reply, but the second author’s problem-oriented requirements for the hotline project. |

| C13. Change role | |

| Users: Any supporter. | |

| Start: A supporter has to change line, leave the hotline, etc. | |

| End: New role recorded. | |

| Frequency: Around 6 times a day for each supporter. | |

| All subtasks are optional and may be performed in any sequence | |

| Subtasks and variants: | Example solutions: |

| 1. Change own settings for line, absence, etc. (see data requirements in section D3). | |

| 2. Change other supporter’s settings, for instance if they are ill. | |

| 3. When leaving for a longer period, transfer any taken requests to 1st or 2nd line. | |

| 3p. Problem: The supporter forgets to transfer them. | The system warns and suggests changing status for all of them. |

| 4. When leaving 1st line, check that enough 1st line supporters are left. | |

| 4p. Often forgotten. | The system warns. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kuhail, M.A.; Lauesen, S. User Story Quality in Practice: A Case Study. Software 2022, 1, 223-243. https://doi.org/10.3390/software1030010
