Software Productivity in Practice: A Systematic Mapping Study

Abstract

1. Introduction

2. Systematic Mapping Methodology

2.1. Context Definitions

2.2. Systematic Mapping Definitions

2.3. Review Question Formulation

- RQ1: Which business sectors and knowledge areas are studied in connection with software productivity?

- RQ2: How is productivity data collected and analyzed, and based on which measures?

- RQ3: Which are the approaches to software productivity and what are their effects?

- RQ4: What kinds of empirical studies are developed regarding software productivity and what are their findings?

2.4. Bibliographic Reference Search Strategy

2.5. Reference Exclusion Criteria

- Correspond to complete articles written in English published in peer-reviewed journals and event proceedings: The retrieved references were ignored if they corresponded to books, theses, technical reports, editorials, abstracts and summaries, preventing the analysis of incomplete, partial or not completely validated research results. The few references corresponding to papers written in other languages were also ignored;

- Correspond to journal papers, book chapters and conference/workshop papers which were not later subsumed: Each retrieved reference was excluded if it was later subsumed by a subsequent publication. Subsumption was chosen as an exclusion criterion to avoid analyzing results that later appeared in modified form or with different contents in relation to previously published versions;

- Are strictly connected to software productivity: This criterion was posed to avoid analyzing studies related primarily to other subjects (such as SE education and training), or experience reports that study specific subjects (such as productivity software) or methods, techniques and tools addressing software productivity as a secondary subject (such as management techniques and software development environments that ensure higher productivity);

2.6. Paper Inclusion Criteria

- Reports on at least one empirical study;

- Has an industry practitioner author or analyzes software industry data (data from the software industry is admitted here in an ample sense, covering raw data and source code from private and public administration organizations, from open databases or closed development projects, so long as they are effectively used/adopted in industry);

- Describes the adopted methodology;

- Explains the studied variables and measures;

- Answers the research question(s) of the study(ies);

- Provides a statement of the main findings.

2.7. Secondary and Tertiary Study Treatment

2.8. Paper Processing and Treatment

- Bibliographic key;

- Year of publication;

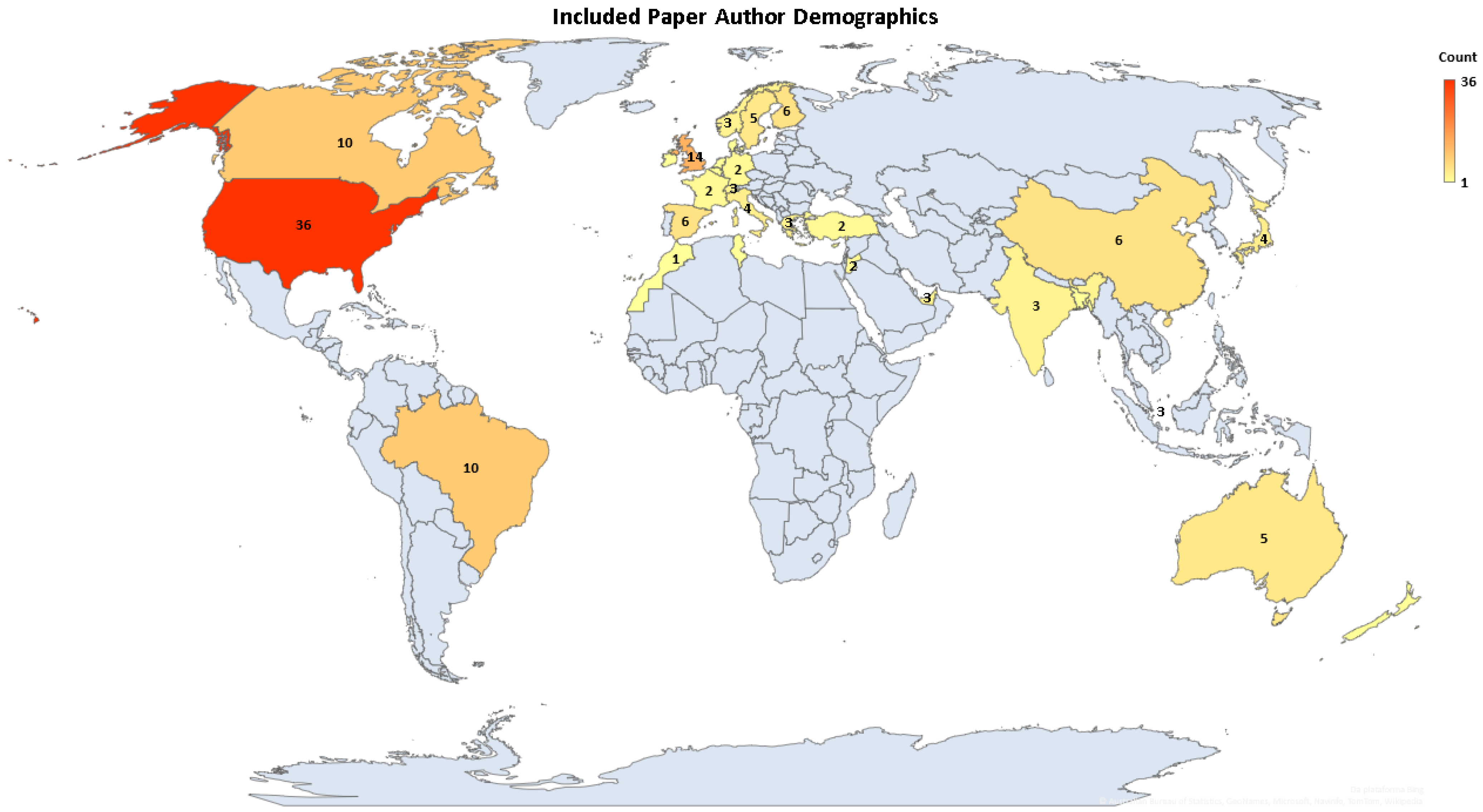

- Total number of authors and industry practitioner authors;

- Author(s) affiliation(s) information;

- Number of studies on software productivity;

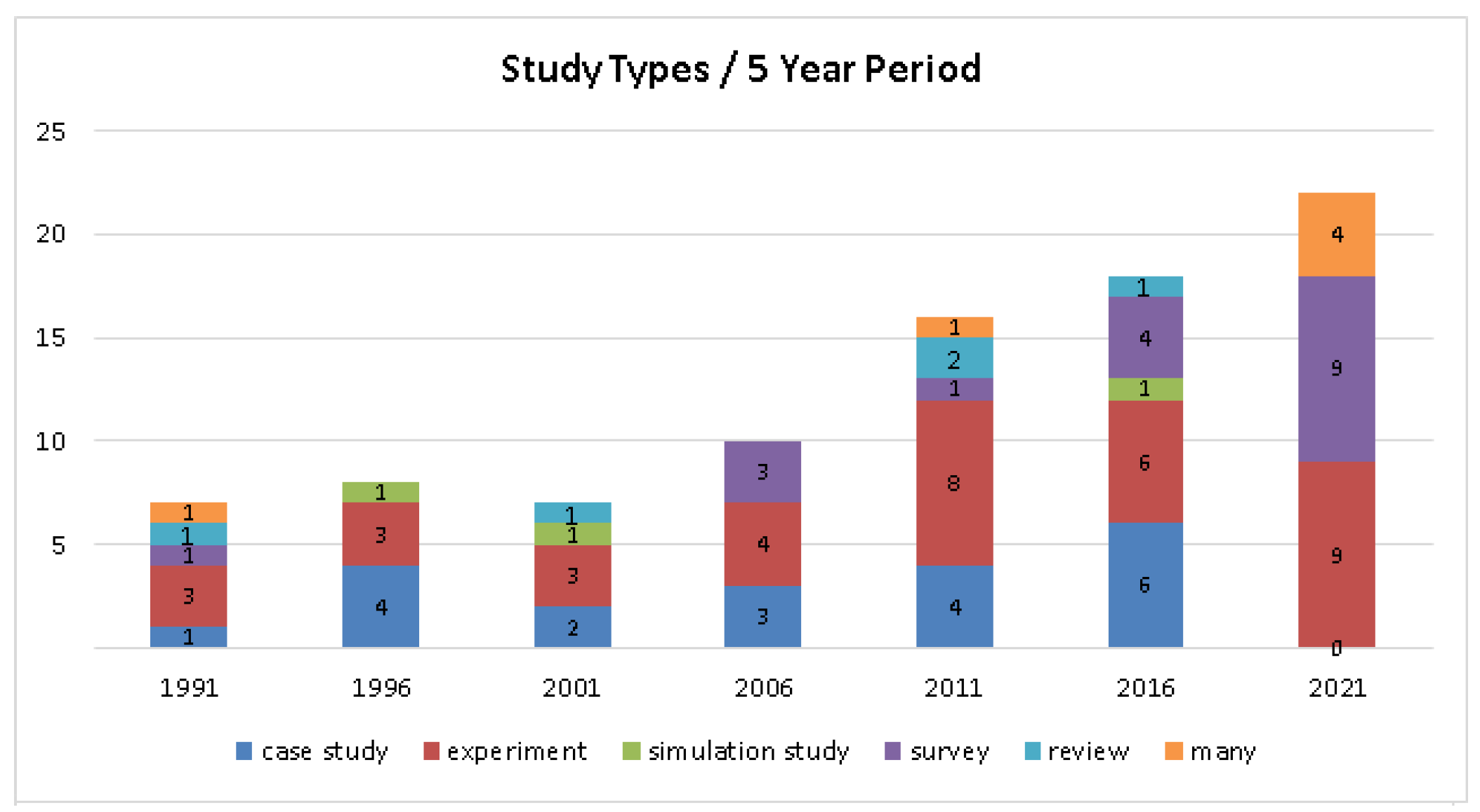

- (Qualified) empirical study type(s);

- Studied business sector(s);

- Main SE KA and KA topic(s);

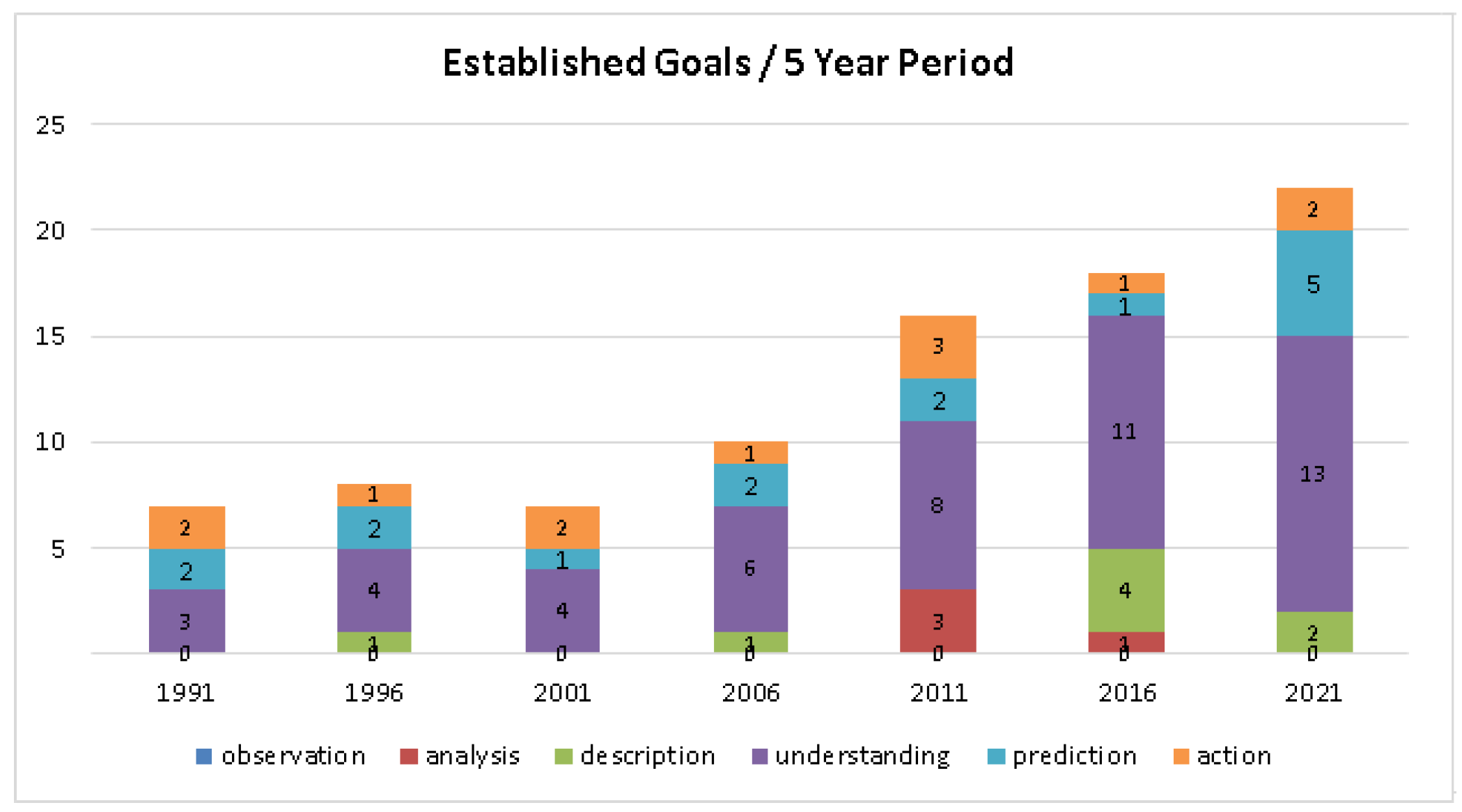

- Productivity approach ultimate goal;

- Data source(s) and their characterization(s);

- Interventions and outcomes, if applicable;

- Adopted productivity measure(s);

- Employed analysis method(s);

- Main finding(s);

- Conflict of interest and funding information.
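The extracted attributes listed above can be pictured as one record per paper. The following is a minimal sketch of such a record, not the form actually used in the study: the class name `ExtractionRecord`, all field names, and the consistency check are assumptions introduced only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """One paper in a hypothetical data extraction form (all names are assumptions)."""

    bibliographic_key: str
    year: int
    total_authors: int
    practitioner_authors: int
    affiliations: List[str] = field(default_factory=list)
    num_productivity_studies: int = 1
    study_types: List[str] = field(default_factory=list)
    business_sectors: List[str] = field(default_factory=list)
    main_ka: Optional[str] = None
    ka_topics: List[str] = field(default_factory=list)
    ultimate_goal: Optional[str] = None
    data_sources: List[str] = field(default_factory=list)
    interventions_and_outcomes: Optional[str] = None  # only if applicable
    productivity_measures: List[str] = field(default_factory=list)
    analysis_methods: List[str] = field(default_factory=list)
    main_findings: List[str] = field(default_factory=list)
    conflict_and_funding: Optional[str] = None

    def __post_init__(self) -> None:
        # Practitioner authors are a subset of all authors.
        if not 0 <= self.practitioner_authors <= self.total_authors:
            raise ValueError("practitioner_authors must be between 0 and total_authors")

    def has_practitioner_author(self) -> bool:
        """True when at least one author is an industry practitioner."""
        return self.practitioner_authors > 0


# Illustrative record with made-up values; it does not describe a real paper.
example = ExtractionRecord(
    bibliographic_key="Example21",
    year=2021,
    total_authors=3,
    practitioner_authors=1,
    productivity_measures=["SLOC/p-h"],
)
print(example.has_practitioner_author())
```

Keeping list-valued attributes (study types, sectors, measures) as lists reflects that a single paper may report several studies and measures.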

2.9. Analysis and Synthesis Methods

3. Data Analysis and Primary Study Finding Compilation

3.1. Business Sectors and KAs in Studies (RQ1)

3.2. Data Collection, Measurement and Analysis (RQ2)

| Name | Definition | Count | Primary Studies (with Occurrence Period) |

|---|---|---|---|

| many | many different measures were used | 14 | [3,7,10,11,24,32,44,46,51,83,91,103,104,105] * |

| TFP | total factor productivity | 2 | [106,107] (2012–2021) |

| EVA/y | economic value added per year | 2 | [90,99] (2009–2011) |

| labor productivity | annual net revenue/number of employees | 2 | [54,100] (2013–2017) |

| US$ Cost/LOC | American Dollar cost per line of code | 1 | [80] (1999–1999) |

| SDE | stochastic data envelopes: f(FP, SLOC)/person-hour | 4 | [62,63,70,96] (1991–2006) |

| CP/US$ cost | change points/cost in American dollars | 1 | [65] (1995–1995) |

| adjusted size/total effort | deliverables size-effort/total-effort month | 2 | [22,23] (2004–2017) |

| effort/task | source lines of task code/task person-hours | 1 | [75] (2021–2021) |

| FP/p-(m; d; h) | function points/person-(months; days; hours) | 8 | [42]; [35,37,57,77]; [49,50,53] ** |

| FP/y | function points per year | 1 | [78] (1993–1993) |

| UFP/(m; h) | unadjusted function point per (month; hour) | 5 | [55,69] (1999–2017); [56,93,97] (2004–2020) |

| UCP/p-h | use case points/person-hours | 2 | [85,86] (2017–2018) |

| LOP/p-w | lines of proof/person-weeks | 1 | [101] (2014–2014) |

| SLOC/p-(y; m; h) | source lines of code/person-(years; months; hours) | 11 | [48]; [41,61,68,108]; [21,38,52,71,72,84] *** |

| NCSLOC/p-(m; d) | non-comment source lines of code/person-(months; days) | 2 | [64] (1994–1994); [81] (2001–2001) |

| DSLOC/p-(m; h) | delivered lines of code/person-(months; hours) | 3 | [79,92] (1996–2005); [67] (1996–1996) |

| added SLOC/d | added source lines of code/days elapsed | 1 | [98] (2016–2016) |

| p-h/FP | person-hours per function point | 2 | [39,58] (2011–2012) |

| resolution time/task | resolution time per task | 1 | [40] (2013–2013) |

| features/w | features per week | 1 | [66] (1996–1996) |

| CLOC/m | committed lines of code per month | 1 | [94] (2010–2010) |

| time to first CCR | time to first contributor commit | 1 | [45] (2017–2017) |

| daily contribution | committed lines of code and files per day | 1 | [102] (2021–2021) |

| CCR/(m; w) | contributor commits per (month; week) | 2 | [59] (2009–2009); [36] (2009–2009) |

| PR/m | pull requests per month | 1 | [95] (2021–2021) |

| inter-CCR time | time between contributor commits | 1 | [43] (2016–2016) |

| SAP | self-assessed productivity | 9 | [4,5,47,60,74,76,87,88,89] (2015–2021) |

| qualitative | only qualitative measures were used | 6 | [6,9,12,73,82,109] (1991–2017) |

| TOTAL | | 89 | Periods: * (1991–2020). ** (2014–2014); (1991–2012); (2000–2009). *** (1999–1999); (1988–2014); (1987–2011). |
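Most measures in the table are ratios of an output size (FP, UFP, UCP, SLOC) to an effort or time input, while a few (such as p-h/FP) are the inverse ratio. A minimal sketch of how such ratios relate is given below. The function names are hypothetical, and the conversion factor of 160 hours per person-month is an assumption, since organizations define the person-month differently.

```python
def productivity(output_size: float, effort: float) -> float:
    """Output per unit of effort, e.g. FP/person-month or SLOC/person-hour."""
    if effort <= 0:
        raise ValueError("effort must be positive")
    return output_size / effort


def effort_per_unit(output_size: float, effort: float) -> float:
    """Inverse measure, e.g. person-hours per function point (p-h/FP)."""
    if output_size <= 0:
        raise ValueError("output_size must be positive")
    return effort / output_size


def person_months_to_hours(person_months: float, hours_per_month: float = 160.0) -> float:
    """Convert effort units; hours_per_month is an assumed, organization-specific factor."""
    return person_months * hours_per_month


# Illustrative numbers only: a 120 FP project delivered with 10 person-months.
size_fp = 120.0
effort_pm = 10.0
effort_ph = person_months_to_hours(effort_pm)

print(productivity(size_fp, effort_pm))     # FP per person-month
print(productivity(size_fp, effort_ph))     # FP per person-hour
print(effort_per_unit(size_fp, effort_ph))  # person-hours per FP
```

Because the unit conversion depends on an assumed factor, values reported in different effort units (months, days, hours) are only comparable once that factor is stated.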

3.3. Software Productivity Approaches (RQ3)

3.4. Study Types and Reported Findings (RQ4)

3.4.1. Studies Using SE Economics Databases

3.4.2. Other Studies Covering the SWEBOK

- Economies and diseconomies of scale in pure, mixed and non-SaaS firms ([99]);

- Positioning of software companies in supply chains as prime contractors, intermediate contractors, end-contractors, and independent enterprises ([104]);

- Regional differences in the level of development of software companies ([107]).

3.4.3. Requirements Engineering

3.4.4. Object-Oriented Development

3.4.5. Software Construction

3.4.6. Software Reuse

3.4.7. Open-Source Software

3.4.8. Software Testing

3.4.9. Software Maintenance

3.4.10. Software Engineering Management

3.4.11. Rapid Application Development

3.4.12. Software Engineering Professional Practice

3.4.13. Software Processes, Quality, Models and Methods

4. Related Work Discussion and Indirect Study Finding Compilation

5. Systematic Mapping Findings and Recommendations

5.1. Risk of Bias Assessment

5.2. Evaluation of Certainty in the Body of Evidence

5.3. Evidence Profile and Summary of Findings Table

- Removal of individual studies with low certainty: Only studies with moderate or high certainty are considered;

- Inclusion of findings that have been deemed collectively important: Only outcomes determined in at least three high- or moderate-certainty papers are considered;

- Formulation of each aggregated finding definition: Analysis of individual definitions and formulation of an aggregated relationship, involving the productivity factors mentioned in the original studies, any directionality of effects and significance of results, considering the lowest significance and more general scope conclusively reported;

- Computation of the numbers of papers and studies leading to the finding;

- Evaluation of the pooled risk of bias for the finding: Computed by weighing the individual paper risk of bias ratings according to the respective number of reported studies and their assessments of risk of bias, using the same criteria as in Section 5.1;

- Determination of inconsistency, indirectness, imprecision and review limitations related to the finding: Usage of the GRADE criteria for determining these aspects;

- Computation of the overall quality of the finding: Usage of the lowest quality of evidence level among the respective studies as the baseline quality of the outcome, possibly downgraded (according to what was determined in the previous two steps) or upgraded (depending on the findings reported in any systematic indirect study with the same coverage) by one or two certainty levels;

- Registration of any relevant comment.
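The aggregation steps above can be sketched in code. This is an illustrative sketch under stated assumptions, not the procedure as implemented in the study: the names `PaperEvidence`, `pooled_risk` and `summarize` are hypothetical, the pooled risk of bias is taken here as the rating with the largest study-weighted total (ties resolved toward the worse rating), and the GRADE-based downgrade or upgrade is passed in as a manually determined `adjustment` rather than computed.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

CERTAINTY = ["very low", "low", "moderate", "high"]  # GRADE levels, worst to best
RISK = ["low", "unclear", "high"]                    # risk of bias, best to worst


@dataclass(frozen=True)
class PaperEvidence:
    key: str            # bibliographic key of the paper
    finding: str        # label of the aggregated finding it supports
    n_studies: int      # number of studies reported in the paper
    certainty: str      # one of CERTAINTY
    risk_of_bias: str   # one of RISK


def pooled_risk(papers: List[PaperEvidence]) -> str:
    """Study-weighted vote over ratings (assumed scheme); ties go to the worse rating."""
    weights: Dict[str, int] = defaultdict(int)
    for p in papers:
        weights[p.risk_of_bias] += p.n_studies
    return max(weights, key=lambda r: (weights[r], RISK.index(r)))


def summarize(papers: List[PaperEvidence], min_papers: int = 3,
              adjustment: int = 0) -> Dict[str, dict]:
    """Build a summary-of-findings mapping from per-paper evidence."""
    if not -2 <= adjustment <= 2:
        raise ValueError("adjustment is limited to one or two levels up or down")
    # Step 1: drop low-certainty papers.
    eligible = [p for p in papers if p.certainty in ("moderate", "high")]
    by_finding: Dict[str, List[PaperEvidence]] = defaultdict(list)
    for p in eligible:
        by_finding[p.finding].append(p)
    summary: Dict[str, dict] = {}
    for finding, group in by_finding.items():
        n_papers = len({p.key for p in group})
        # Step 2: keep findings supported by at least min_papers papers.
        if n_papers < min_papers:
            continue
        # Baseline quality is the lowest certainty among the supporting papers.
        baseline = min(CERTAINTY.index(p.certainty) for p in group)
        level = max(0, min(len(CERTAINTY) - 1, baseline + adjustment))
        summary[finding] = {
            "papers": n_papers,
            "studies": sum(p.n_studies for p in group),
            "pooled_risk_of_bias": pooled_risk(group),
            "quality": CERTAINTY[level],
        }
    return summary
```

A call such as `summarize(papers, adjustment=-1)` would model a one-level downgrade decided in the inconsistency, indirectness and imprecision step; how that decision is reached is outside the scope of this sketch.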

5.4. Methodological Lessons Learned and Recommendations

5.4.1. Software Productivity Standards

Lesson 1. The software productivity community should seek to reduce the uncertainty concerning definitions related to software productivity by participating in standardization initiatives and standardization boards, apart from effectively adopting standards in research and practice.

5.4.2. Practitioner/Industry Involvement and Participation

Lesson 2. To motivate involvement, software productivity researchers should seek the participation of industry practitioners and researchers in studies by presenting to them the potential benefits together with the identified risk mitigators.

5.4.3. Software Productivity Data Collection

Lesson 3. Software productivity data analysts should be concerned with data collection processes and data quality. They should always characterize the context and population under study precisely; justify the proposed sample, experiment or case study size; and describe data sources, studied variables and data collection processes, with their time spans and collection instruments. Whenever possible, randomization should be adopted.

5.4.4. Usage of Productivity and Open-Source Code Databases

Lesson 4. Software productivity data scientists should seek to adopt and expand the practice of compiling productivity databases towards exploring new and innovative applications, taking into account the best practices and the associated challenges and opportunities.

5.4.5. Software Productivity Measurement and Analysis

Lesson 5. Software productivity data analysts should choose productivity measurement and analysis methods considering the problem at hand. They should take into account the measurement level and approach, the corporate goals and the best practices in terms of analysis methods.

5.4.6. Confounding Factors

Lesson 6. Authors of software productivity studies should clarify and analyze the software engineering dimensions that may be confounded with software productivity and the factors that may confound software productivity analysis.

5.4.7. Conducting Studies on Software Productivity

Lesson 7. Authors of software productivity studies should prefer GQM over PICO. The adoption of PICO should always be justified in terms of the study goals and characteristics.

Lesson 8. The IEEE SWEBOK should be updated to cover emergent software engineering subjects and should contain more practice-oriented guidance.

Lesson 9. Authors of systematic literature reviews and mappings on software productivity should formulate strategies of paper screening considering variation in the adopted search string and bibliographic reference databases, apart from using alternative methods of reference discovery.

Lesson 10. Authors of software productivity studies should ensure research quality and transparent reporting by including in their papers clear and explicit statements of author affiliations, sources of funding, technology and data, and conflicts of interest, apart from transparently reporting incentives for study participation and disclosure limitations on research data and findings.

6. Threats to Validity

6.1. Construct and Internal Validity

6.2. External Validity

7. Concluding Remarks

Supplementary Materials

Funding

Conflicts of Interest

Appendix A. Coding of Factors Affecting Software Productivity

Appendix A.1. Studies Using SE Economics Databases

- development platform development project productivity ([55]);

- business sector □ software project productivity

- team size development project productivity

- adopted programming language □ development project productivity

- project size development project productivity ([52]);

- level of outsourcing development project productivity ([53]);

- adopted programming language → maintenance project productivity ([56]);

- adoption of development tools development project productivity ([56]);

- development project productivity □ maintenance project productivity

- large team development productivity < small team development productivity ([39]).

Appendix A.2. Other Studies Covering the SWEBOK

- formal education labor productivity ([106]);

- organizational structure development project productivity ([108]);

- risk classification development project productivity ([57]);

- UCPs development project productivity ([86]);

- FPs development project productivity ([57]);

- LOCs development project productivity ([108]);

- development platform development project productivity ([57]);

- software complexity development project productivity ([86]);

- adopted programming language recency development project productivity ([109]);

- team experience development project productivity ([109]);

- experience with user community development project productivity ([109]);

- team size development project productivity ([108]);

- application type → development project productivity ([108]);

- software reuse development project productivity ([61]);

- technical debt development project productivity ([87]);

- software development approach adequacy scientific software productivity ([83]);

- RAD development project productivity ([21]);

- adopted programming language → development project productivity ([108]);

Appendix A.3. Requirements Engineering

Appendix A.4. Object-Oriented Development

- project size development project productivity ([48]);

- application domain → development project productivity ([72]);

- adoption of OOD → development project productivity ([69]);

- rigorous enforcement of project deadlines development project productivity ([68]);

- early intermediate task completion incentives development project productivity ([68]).

Appendix A.5. Software Construction

- software reuse development project productivity

- formal education development project productivity ([23]);

- architecture → development project productivity ([84]);

- requirements volatility development project productivity ([71]);

- knowledge of unit testing development project productivity ([23]);

- concurrent development pair productivity < simultaneous development pair productivity ([97]).

Appendix A.6. Software Reuse

- software reuse development project productivity

Appendix A.7. Open-Source Software

- adopted programming language fragmentation OSS project productivity ([94]);

- OSS adoption service corporate labor productivity ([100]);

- OSS age OSS project productivity ([95]);

- team size OSS project productivity ([43]);

- team experience OSS project productivity ([36]);

- LOC-based size increment OSS project productivity ([41]).

Appendix A.8. Software Testing

Appendix A.9. Software Maintenance

- domain knowledge maintenance project productivity ([96]);

- team capabilities maintenance project productivity ([96]);

- mentors succession and experience maintenance project productivity ([59]);

- mentors work load maintenance project productivity ([59]);

- level of offshoring succession maintenance project productivity ([59]);

- project size maintenance project productivity

- LOC-based size increment maintenance project productivity ([63]);

- maintenance granularity maintenance project productivity ([98]);

- software artifact coupling maintenance project productivity ([98]);

- project quality maintenance project productivity ([96]).

Appendix A.10. Software Engineering Management

- project size development project productivity ([35]);

- adoption of development tools development project productivity ([47]);

- adoption of process models development project productivity ([47]);

- team autonomy development project productivity ([47]);

- technology knowledge development project productivity ([37]);

- team experience heterogeneity development project productivity ([38]);

- adoption of testing tools development project productivity ([37]);

- task coordination development project productivity ([40]);

- software complexity development project productivity ([37]);

- task completion incentives development project productivity ([47]);

- possibility of mobility development project productivity ([47]);

- in-house development project productivity □ offshored development project productivity

Appendix A.11. Rapid Application Development

- team size → agile software development productivity ([12]);

- team diversity → agile software development productivity ([12]);

- team turnover → agile software development productivity ([12]);

- Scrum adoption → software development productivity ([73]);

- traditional project productivity < Scrum-RUP project productivity ([58]).

Appendix A.12. Software Processes, Quality, Models and Methods

- organizational structure → development project productivity ([78]);

- personal software process maturity levels → software developer productivity ([82]);

- proof size → formal verification productivity ([101]);

- appraised software process maturity levels □ corporate labor productivity

- adoption of development tools development project productivity ([77]);

- proof complexity formal verification productivity ([101]).

Appendix B. Risk of Bias Assessment Tables

Risk of Bias Domains (D1–D4)

| Key | D1 | D2 | D3 | D4 |

|---|---|---|---|---|

| (AbdelHamid96) [79] | Low | Low | Low | Low |

| (AdamsCB09) [36] | Unclear | Low | Low | Low |

| (AsmildPK06) [70] | Low | Low | Low | Low |

| (AzzehN17) [85] | Low | Low | Low | Low |

| (AzzehN18) [86] | Low | Low | Low | Low |

| (BankerDK91) [96] | Low | Low | Low | Low |

| (BankerK91) [62] | Unclear | Low | High | Unclear |

| (BankerS94) [63] | Low | Low | Low | Low |

| (BellerOBZ21) [74] | Low | Low | High | Unclear |

| (BeskerMB19) [87] | High | Low | Low | Unclear |

| (BezerraEA20) [88] | Unclear | Low | Low | Low |

| (BibiAS16) [98] | Low | Low | Low | Low |

| (BibiSA08) [91] | Unclear | Low | Low | Low |

| (Boehm87) [21] | Low | Low | Low | Low |

| (Boehm99a) [80] | Low | Low | Low | Low |

| (CarvalhoRSCB11) [58] | Low | Unclear | Low | Unclear |

| (CataldoH13) [40] | Low | Low | Unclear | Low |

| (ChapettaT20) [24] | Low | Low | Low | Low |

| (Chatman95) [65] | Unclear | Low | High | Unclear |

| (CheikhiARI12) [11] | Low | Low | Low | Low |

| (DamianC06) [9] | Low | Low | Low | Low |

| (DiesteEtAll17) [23] | High | Low | Low | Unclear |

| (Duarte17a) [54] | Unclear | Low | Low | Low |

| (Duncan88) [61] | Low | Low | High | Unclear |

| (FatemaS17) [6] | Low | Low | Low | Low |

| (FaulkLVSV09) [83] | Low | Unclear | High | Unclear |

| (FrakesS01) [81] | Low | Low | Low | Low |

| (GeH11) [90] | Low | Low | Low | Low |

| (GraziotinWA15) [4] | High | Low | Low | Unclear |

| (GreenHC05) [82] | Unclear | Low | Low | Low |

| (HenshawJMB96) [66] | Low | Low | Unclear | Low |

| (HernandezLopezCSC15) [7] | High | Low | Low | Unclear |

| (HernandezLopezPGC11) [32] | Low | Low | Low | Low |

| (HuangW09) [99] | Unclear | Low | Low | Low |

| (JaloteK21) [75] | Unclear | Low | Unclear | Unclear |

| (JohnsonZB21) [76] | Unclear | Unclear | High | Unclear |

| (KautzJU14) [73] | Low | Low | Low | Low |

| (KemayelMO91) [109] | Low | Low | Low | Low |

| (KitchenhamM04) [22] | Unclear | Low | Low | Low |

| (KreinMKDE10) [94] | Low | Low | Low | Low |

| (KuutilaMCEA21) [102] | Low | Low | Low | Low |

| (LagerstromWHL12) [57] | Low | Low | Low | Low |

| (LavazzaMT18) [56] | Unclear | Low | Low | Low |

| (LavazzaLM20) [93] | Unclear | Low | Low | Low |

| (LiaoEA21) [95] | Low | Low | Low | Low |

| (Lim94) [64] | Low | Low | High | Unclear |

| (LowJ91) [77] | Low | Low | Low | Low |

| (MacCormackKCC03) [35] | Unclear | Low | Unclear | Unclear |

| (MantylaADGO16) [60] | Low | Low | Low | Low |

| (Maxwe96) [108] | Low | Low | Unclear | Low |

| (MaxwellF00) [49] | Low | Low | Unclear | Low |

| (MeloCKC13) [12] | Low | Low | Low | Low |

| (MeyerBMZF17) [5] | Unclear | Low | Low | Low |

| (MeyerZF17) [10] | Unclear | Low | Unclear | Unclear |

| (MinetakiM09) [104] | Low | Low | Low | Low |

| (MoazeniLCB14) [41] | High | Low | Low | Unclear |

| (Mockus09) [59] | Low | Low | High | Unclear |

| (Mohapatra11) [37] | Low | Low | Low | Low |

| (MosesFPS06) [51] | Unclear | Low | Low | Low |

| (MurphyHillEA21) [89] | Low | Low | Low | Low |

| (OliveiraEA20) [46] | Unclear | Low | Low | Low |

| (PalaciosCSGT14) [42] | Unclear | Low | Low | Low |

| (ParrishSHH04) [97] | Low | Low | Low | Low |

| (PortM99) [69] | Low | Low | Unclear | Low |

| (PotokV97) [68] | Low | Low | High | Unclear |

| (PotokVR99) [48] | Low | Low | Unclear | Low |

| (PremrajSKF05) [50] | Low | Low | Unclear | Low |

| (RamasubbuCBH11) [38] | Low | Low | Unclear | Low |

| (RastogiT0NC17) [45] | Low | Low | Low | Low |

| (RodriguezSGH12) [39] | Low | Low | Low | Low |

| (Rubin93a) [78] | Low | Low | Low | Low |

| (Scacchi91) [103] | Low | Low | Low | Low |

| (ScholtesMS16) [43] | Low | Low | Low | Low |

| (SentasASB05) [92] | Low | Low | Low | Low |

| (SiokT07) [72] | Low | Low | Unclear | Low |

| (SovaS96) [67] | Low | Low | Low | Low |

| (StaplesEA14) [101] | Low | Low | Low | Low |

| (StoreyEA21) [47] | Unclear | Low | Low | Low |

| (StylianouA16) [44] | Low | Low | Low | Low |

| (Tan09) [84] | Low | Low | Low | Low |

| (TanihanaN13) [100] | Low | Low | Low | Low |

| (TomaszewskiL06) [71] | Low | Low | Low | Low |

| (TrendM09) [105] | Low | Low | Low | Low |

| (Tsuno09) [53] | Low | Low | Low | Low |

| (TsunodaA17) [55] | Low | Low | Low | Low |

| (Wang12) [106] | Low | Low | Low | Low |

| (WangWZ08) [52] | Low | Low | Low | Low |

| (YilmazOC16) [3] | Low | Low | Low | Low |

| (ZhaoWW21) [107] | Low | Low | Low | Low |

| (BissiNE16) [25] | Unclear | Low | Low | Low |

| (CardozoNBFS10) [26] | Unclear | Unclear | Low | Unclear |

| (HernandezLopezPG13) [8] | Unclear | Unclear | Low | Unclear |

| (MohagheghiC07) [18] | High | Low | Low | Unclear |

| (OliveiraVCC17) [27] | Unclear | Unclear | Low | Unclear |

| (OliveiraCCV18) [28] | Unclear | Unclear | Low | Unclear |

| (Peter11) [29] | Unclear | Low | Low | Low |

| (RafiqueM13) [17] | Low | Low | Low | Low |

| (ShahPN15) [30] | Unclear | Unclear | Low | Unclear |

| (WagnerR08) [2] | High | Low | Unclear | Unclear |

| Key | Explanation for Downgrading |

|---|---|

| (AdamsCB09) [36] | Bug-tracking data were disregarded and only actual commits studied (observation risk); |

| (BankerK91) [62] | “Our final sample of 20 projects excluded one project among the initial 21 that was believed to be an outlier” (exclusion risk); “Bedell’s alternative strategy to cope with this ’functionality risk’ was to build the ICASE tool in house. Although the investment posed a major risk to the firm, First Boston Bank subsequently committed $65 million”, “This article addresses three principal research questions: did reusability lead to any significant productivity gains during the first two years of the deployment of the ICASE tool” (conflicting interests risk, studied tool financially supported by the company that demanded the study); |

| (BellerOBZ21) [74] | “We start to bridge the gap between them with an empirical study of 81 software developers at Microsoft” (conflicting interests risk, due to the authors’ affiliation); |

| (BeskerMB19) [87] | “This study’s selection of participating companies was carried out with a representative convenience sample of software professionals from our industrial partners” (selection-availability risk). “On average, each respondent reported their data on 11 out of 14 occasions” (missing data or non-response risk); |

| (BezerraEA20) [88] | “The survey used two approaches: (i) we used self-recruitment, sharing posts to invite members of social networking groups related to IT professionals on Facebook, Instagram and mailing lists; and, (ii) we sent out direct invitations to people we knew” (selection-availability risk); |

| (BibiSA08) [91] | “Although there are many missing values in the above fields (over 72%) and the extracted rules have low values of confidence, the results are satisfactory” (missing data risk); |

| (CarvalhoRSCB11) [58] | 14 samples were analyzed, but data were collected regarding 16 projects (selective reporting risk); |

| (CataldoH13) [40] | “We collected data from a multinational development organization responsible for producing a complex embedded system for the automotive industry” (conflicting interests risk, due to the affiliation of an author); |

| (Chatman95) [65] | “Current data retention does not preserve all the data implied by the change-point approach, so the results shown in the figures are incomplete” (missing data risk); “The figures present data collected for three releases of a product developed at IBM’s Santa Teresa Laboratory” (conflicting interests risk, due to the affiliation of the author); |

| (DiesteEtAll17) [23] | “The experimental subjects were convenience sampled” (selection-availability risk); “Although we had 126 experimental subjects, 11 observations were lost during the analysis as two subjects failed to complete the experimental task, six failed to report their academic qualifications and four failed to report any experience” (missing data or non-response risk); “Each quasi-experiment was measured by a single measurer” (measurement risk); |

| (Duarte17a) [54] | “Since our economic data set is sparse, in the sense that there are some missing observations in the middle of some periods, we used interpolation” (missing data risk); |

| (Duncan88) [61] | “The paper describes the software development process used within one software engineering group at Digital Equipment Corporation”, “The questions that the Commercial Languages and Tools software product engineering group at DEC asked are: how are we doing compared to ourselves in previous years? Can we quantify the impact of using software development tools?” (conflicting interests risk, due to the affiliation of the author); |

| (FaulkLVSV09) [83] | “We ran a set of experiments”, but only reduction ratio was reported (selective reporting risk); “Sun Microsystems took a broad view of the productivity problem”, “We studied the missions, technologies and practices at government-funded institutions”, “DARPA programmatic goal was to address ‘real productivity’” (conflicting interests risk, due to the authors’ affiliations and the source of funding); |

| (GraziotinWA15) [4] | “The participants have been obtained using convenience sampling” (selection-availability risk); “When questioned about the difficulties and about what influenced their productivity, the participants found difficulties in answering” (measurement-recall risk); |

| (GreenHC05) [82] | “A few respondents noted that it was too early to assess productivity gains. Therefore, some respondents did not respond to productivity related items” (non-response risk); |

| (HenshawJMB96) [66] | “In the organization we studied, requirements planning had been done using the AIX file and operating system” (conflicting interests risk, studied technology supplied by the employer of an author); |

| (HernandezLopezCSC15) [7] | “One of the authors contacted via e-mail ex-alumni with experience of at least one year in any activities of SE. From these, 15 positive answers were obtained. Interviews were conducted between April and October 2011” (selection-availability risk); “The authors wrote some posts in LinkedIn groups related to SE. 31% of the respondents accessed the questionnaire from LinkedIn” (selection-inception risk); |

| (HuangW09) [99] | “Since we do not have access to the proportion of SaaS revenue in a software company, we need to subjectively decide whether its SaaS operations are significant enough so that the target firm is coded as a mixed-SaaS firm. The other source of data limitations is that some firms do not mention their SaaS business in the annual report, or use a different name for SaaS services that is not captured by our Java program” (observation risk); |

| (JaloteK21) [75] | “As the data were not normally distributed, the Kruskal–Wallis non-parametric test was conducted after removing the outlier” (exclusion risk); “We conducted this field study at Robert Bosch Engineering and Business Solutions Ltd (RBEI)” (conflicting interests risk, due to the affiliation of an author); |

| (JohnsonZB21) [76] | “We sent the survey to 1,252 individuals with an engineer or program management position at Microsoft in the Puget Sound area” (selection risk); “Design with a total of 1,159 participants” and “We sent the survey to 1,252 individuals” (selective reporting risk); “To address the lack of empirical data on work environments in software development, we carried out an empirical study of physical work environments at Microsoft” (conflicting interests risk, due to the authors’ affiliation); |

| (LavazzaMT18) [56] | “In the derivation of models, outliers, identified based on Cook’s distance, following a consolidated practice, were excluded” (exclusion risk); |

| (LavazzaLM20) [93] | “Data points with Cook’s distance greater than 4/n (n being the cardinality of the training set) were considered for removal” (exclusion risk); |

| (Lim94) [64] | “The reusable work products were written in Pascal and SPL, the Systems Programming Language for HP 300 computer system”, “The development operating system was HPUX” (conflicting interests risk, studied technology supplied by the employer of the author); |

| (MacCormackKCC03) [35] | “We removed from the analysis projects that were outliers on each performance dimension on a case-by-case analysis” (exclusion risk); “Our results are based on a sample of HP software development projects” (conflicting interests risk, studied technology supplied by the employer of an author); |

| (Maxwe96) [108] | “We present the results of our analysis of the European Space Agency software development database” (conflicting interests risk, studied projects funded by the research financial supporter); |

| (MaxwellF00) [49] | “The project grew and is now an STTF-managed commercial activity” (conflicting interests risk, studied database supplied by the employer of an author); |

| (MeyerBMZF17) [5] | “We used personal contacts, e-mails and sometimes a short presentation at the company to recruit participants” (selection-availability risk); |

| (MeyerZF17) [10] | “We advertised the survey by sending personalized invitation emails to 1600 professional software developers within Microsoft” (selection risk); “We analyze the variation in productivity perceptions based on an online survey with 413 professional software developers at Microsoft” (conflicting interests risk, due to the affiliation of an author); |

| (MoazeniLCB14) [41] | “The threat is mitigated for professional and student developers by the likelihood of distortions being common to all parts of the project” (measurement risk); “For a limited range of increments within a minor version of projects that have been going on for many years, the staff size and the applied effort of the staff members remained either constant or did not change significantly” (observation risk); |

| (Mockus09) [59] | “We investigate software development at Avaya with many past and present projects of various sizes and types involving more than 2000 developers” (conflicting interests risk, studied developers affiliated to the employer of the author); |

| (MosesFPS06) [51] | “It is necessary to assume that SLOC are counted in approximately the same way for the company” (measurement risk); |

| (OliveiraEA20) [46] | “We have contacted as many companies as possible to ask for authorization to analyze their projects” (inception risk); |

| (PalaciosCSGT14) [42] | “Participants were obtained from those who responded positively to a personal invitation sent by the authors to contacts working in Spanish and French IT companies” (selection-availability risk); |

| (PortM99) [69] | “The organization requesting the study hoped to compare the projects through the metric of productivity”, “The customer of this study was particularly interested in this aspect” (conflicting interests risk, studied projects supported by the employer of an author); |

| (PotokV97) [68] | “The empirical data was collected at the IBM Software Solutions Laboratory in Research Triangle Park, North Carolina” (conflicting interests risk, studied projects supported by the employer of the authors); |

| (PotokVR99) [48] | “The empirical data discussed in this paper was collected at IBM Software Solutions”, “The measurements collected are defined by a corporate metric council” (conflicting interests risk, studied projects supported by the employer of an author); |

| (PremrajSKF05) [50] | “The authors regret that presently the data set is not publicly available” (conflicting interests risk, studied database supported by the employer of an author); |

| (RamasubbuCBH11) [38] | “CodeMine provides a data collection framework for all major Microsoft development teams”, “We conducted quantitative analysis on the version control system data and employee information stores in CodeMine” (conflicting interests risk, due to the affiliation of most authors); |

| (SiokT07) [72] | “The goal of this study was to provide answers to several questions regarding software development productivity and product quality within the avionics software engineering organization” (conflicting interests risk, studied projects supported by the employer of an author); |

| (StoreyEA21) [47] | “Our case company, Microsoft, is a large software company with tens of thousands of developers distributed in offices around the world” (conflicting interests risk, due to the affiliation of most authors); |

| (BissiNE16) [25] | No risk of bias assessment (performance risk); |

| (CardozoNBFS10) [26] | No risk of bias assessment (performance risk); Synthesis methods were not sufficiently detailed (selective non-reporting risk); |

| (HernandezLopezPG13) [8] | No risk of bias assessment (performance risk); Synthesis methods were not sufficiently detailed (selective non-reporting risk); |

| (MohagheghiC07) [18] | Paper screening, inclusion and exclusion criteria not sufficiently detailed (selection risk); No risk of bias assessment (performance risk); |

| (OliveiraVCC17) [27] | No risk of bias assessment (performance risk); Synthesis methods were not sufficiently detailed (selective non-reporting risk); |

| (OliveiraCCV18) [28] | No risk of bias assessment (performance risk); Synthesis methods were not sufficiently detailed (selective non-reporting risk); |

| (Peter11) [29] | No risk of bias assessment (performance risk); |

| (ShahPN15) [30] | No risk of bias assessment (performance risk); Synthesis methods were not sufficiently detailed (selective non-reporting risk); |

| (WagnerR08) [2] | “We inspected the first 100 results of each portal. We also collected papers manually in a number of important journals” (selection risk); No risk of bias assessment (performance risk); “The ProdFLOW method uses interview techniques for determining the most influential factors in productivity for a specific organization. ProdFLOW is a registered trademark of the Siemens AG” (conflicting interests risk, due to the affiliation of an author). |
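
The exclusion rule quoted for (LavazzaLM20) [93], which flags data points whose Cook's distance exceeds 4/n, can be illustrated with a minimal sketch. It assumes a simple one-predictor linear regression; the function names and the `sizes`/`efforts` data are hypothetical and are not taken from the cited study, which may have used a different model.

```python
def cooks_distances(xs, ys):
    """Cook's distance of each point under a least-squares fit y = a + b*x."""
    n = len(xs)
    p = 2  # number of fitted parameters: intercept and slope
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sxx
    intercept = y_mean - slope * x_mean
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    # Mean squared error of the fit (assumes the fit is not perfect).
    mse = sum(e * e for e in residuals) / (n - p)
    distances = []
    for x, e in zip(xs, residuals):
        # Leverage of a point in simple linear regression.
        leverage = 1.0 / n + (x - x_mean) ** 2 / sxx
        distances.append((e * e) / (p * mse) * leverage / (1.0 - leverage) ** 2)
    return distances


def removal_candidates(xs, ys):
    """Indices of points whose Cook's distance exceeds 4/n."""
    threshold = 4.0 / len(xs)
    return [i for i, d in enumerate(cooks_distances(xs, ys)) if d > threshold]


# Hypothetical data: nine points on the line y = 2x + 1 and one deviating point.
sizes = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
efforts = [2 * x + 1 for x in sizes[:-1]] + [40]
print(removal_candidates(sizes, efforts))
```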

Appendix C. Evaluation of Certainty in the Body of Evidence

| Key | Certainty Criterion C1 | Certainty Criterion C2 | Certainty Criterion C3 |

|---|---|---|---|

| (AbdelHamid96) [79] | Low | Low | Low |

| (AdamsCB09) [36] | Low | Low | Low |

| (AsmildPK06) [70] | High | Low | High |

| (AzzehN17) [85] | High | Low | High |

| (AzzehN18) [86] | High | Low | High |

| (BankerDK91) [96] | High | Low | High |

| (BankerK91) [62] | Moderate | Unclear | Low |

| (BankerS94) [63] | Moderate | Low | Moderate |

| (BellerOBZ21) [74] | Moderate | Unclear | Low |

| (BeskerMB19) [87] | Moderate | Unclear | Low |

| (BezerraEA20) [88] | Moderate | Low | Moderate |

| (BibiAS16) [98] | Low | Low | Low |

| (BibiSA08) [91] | Moderate | Low | Moderate |

| (Boehm87) [21] | Moderate | Low | Moderate |

| (Boehm99a) [80] | Moderate | Low | Moderate |

| (CarvalhoRSCB11) [58] | Low | Unclear | Very low |

| (CataldoH13) [40] | Low | Low | Low |

| (ChapettaT20) [24] | Moderate | Low | Moderate |

| (Chatman95) [65] | Low | Unclear | Very low |

| (CheikhiARI12) [11] | Moderate | Low | Moderate |

| (DamianC06) [9] | Moderate | Low | Moderate |

| (DiesteEtAll17) [23] | High | Unclear | Moderate |

| (Duarte17a) [54] | Moderate | Low | Moderate |

| (Duncan88) [61] | Low | Unclear | Very low |

| (FatemaS17) [6] | Moderate | Low | Moderate |

| (FaulkLVSV09) [83] | Moderate | Unclear | Low |

| (FrakesS01) [81] | Moderate | Low | Moderate |

| (GeH11) [90] | Moderate | Low | Moderate |

| (GraziotinWA15) [4] | Moderate | Unclear | Low |

| (GreenHC05) [82] | Moderate | Low | Moderate |

| (HenshawJMB96) [66] | Low | Low | Low |

| (HernandezLopezCSC15) [7] | Moderate | Unclear | Low |

| (HernandezLopezPGC11) [32] | Moderate | Low | Moderate |

| (HuangW09) [99] | High | Low | High |

| (JaloteK21) [75] | Moderate | Unclear | Low |

| (JohnsonZB21) [76] | Moderate | Unclear | Low |

| (KautzJU14) [73] | Low | Low | Low |

| (KemayelMO91) [109] | Moderate | Low | Moderate |

| (KitchenhamM04) [22] | High | Low | High |

| (KreinMKDE10) [94] | High | Low | High |

| (KuutilaMCEA21) [102] | Moderate | Low | Moderate |

| (LagerstromWHL12) [57] | High | Low | High |

| (LavazzaMT18) [56] | Moderate | Low | Moderate |

| (LavazzaLM20) [93] | Moderate | Low | Moderate |

| (LiaoEA21) [95] | Moderate | Low | Moderate |

| (Lim94) [64] | Low | Unclear | Very low |

| (LowJ91) [77] | Moderate | Low | Moderate |

| (MacCormackKCC03) [35] | Moderate | Unclear | Low |

| (MantylaADGO16) [60] | Moderate | Low | Moderate |

| (Maxwe96) [108] | High | Low | High |

| (MaxwellF00) [49] | Low | Low | Low |

| (MeloCKC13) [12] | Low | Low | Low |

| (MeyerBMZF17) [5] | Moderate | Low | Moderate |

| (MeyerZF17) [10] | Moderate | Unclear | Low |

| (MinetakiM09) [104] | Moderate | Low | Moderate |

| (MoazeniLCB14) [41] | Low | Unclear | Very low |

| (Mockus09) [59] | High | Unclear | Moderate |

| (Mohapatra11) [37] | Moderate | Low | Moderate |

| (MosesFPS06) [51] | Low | Low | Low |

| (MurphyHillEA21) [89] | High | Low | High |

| (OliveiraEA20) [46] | Moderate | Low | Moderate |

| (PalaciosCSGT14) [42] | Moderate | Low | Moderate |

| (ParrishSHH04) [97] | Low | Low | Low |

| (PortM99) [69] | Low | Low | Low |

| (PotokV97) [68] | Low | Unclear | Very low |

| (PotokVR99) [48] | High | Low | High |

| (PremrajSKF05) [50] | High | Low | High |

| (RamasubbuCBH11) [38] | High | Low | High |

| (RastogiT0NC17) [45] | Moderate | Low | Moderate |

| (RodriguezSGH12) [39] | High | Low | High |

| (Rubin93a) [78] | Moderate | Low | Moderate |

| (Scacchi91) [103] | Moderate | Low | Moderate |

| (ScholtesMS16) [43] | High | Low | High |

| (SentasASB05) [92] | Moderate | Low | Moderate |

| (SiokT07) [72] | Moderate | Low | Moderate |

| (SovaS96) [67] | Low | Low | Low |

| (StaplesEA14) [101] | Moderate | Low | Moderate |

| (StoreyEA21) [47] | High | Low | High |

| (StylianouA16) [44] | Low | Low | Low |

| (Tan09) [84] | Low | Low | Low |

| (TanihanaN13) [100] | Low | Low | Low |

| (TomaszewskiL06) [71] | Low | Low | Low |

| (TrendM09) [105] | Moderate | Low | Moderate |

| (Tsuno09) [53] | Moderate | Low | Moderate |

| (TsunodaA17) [55] | Moderate | Low | Moderate |

| (Wang12) [106] | Moderate | Low | Moderate |

| (WangWZ08) [52] | Low | Low | Low |

| (YilmazOC16) [3] | Moderate | Low | Moderate |

| (ZhaoWW21) [107] | Moderate | Low | Moderate |

| (BissiNE16) [25] | High | Low | High |

| (CardozoNBFS10) [26] | High | Unclear | Moderate |

| (HernandezLopezPG13) [8] | High | Unclear | Moderate |

| (MohagheghiC07) [18] | High | Unclear | Moderate |

| (OliveiraVCC17) [27] | High | Unclear | Moderate |

| (OliveiraCCV18) [28] | High | Unclear | Moderate |

| (Peter11) [29] | High | Low | High |

| (RafiqueM13) [17] | High | Low | High |

| (ShahPN15) [30] | High | Unclear | Moderate |

| (WagnerR08) [2] | High | Unclear | Moderate |
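
In the rows listed above, C3 appears to equal C1 when C2 is Low and to sit one level below C1 when C2 is Unclear. The sketch below encodes that observed pattern only; it is an assumption read off the table, not a rule stated in this appendix, and the names `LEVELS` and `combined_certainty` are hypothetical.

```python
# Certainty levels ordered from weakest to strongest, as they occur in the table.
LEVELS = ["Very low", "Low", "Moderate", "High"]


def combined_certainty(c1, c2):
    """Return C1, lowered by one level when C2 is "Unclear" (observed pattern)."""
    index = LEVELS.index(c1)
    if c2 == "Unclear":
        index = max(index - 1, 0)  # never drop below "Very low"
    return LEVELS[index]


# Example row values taken from the table: (BankerK91) has C1 = Moderate, C2 = Unclear.
print(combined_certainty("Moderate", "Unclear"))
```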

References

- Boehm, B.W. Software Engineering Economics; Prentice-Hall: Hoboken, NJ, USA, 1981. [Google Scholar]

- Wagner, S.; Ruhe, M. A Systematic Review of Productivity Factors in Software Development. In Proceedings of the 2nd International Workshop on Software Productivity Analysis and Cost Estimation (SPACE 2008), Beijing, China, 2 December 2008. [Google Scholar]

- Yilmaz, M.; O’Connor, R.V.; Clarke, P. Effective Social Productivity Measurements during Software Development—An Empirical Study. J. Softw. Eng. Knowl. Eng. 2016, 26, 457–490. [Google Scholar] [CrossRef]

- Graziotin, D.; Wang, X.; Abrahamsson, P. Do feelings matter? On the correlation of affects and the self-assessed productivity in software engineering. J. Softw. Evol. Process 2015, 27, 467–487. [Google Scholar] [CrossRef]

- Meyer, A.; Barton, L.; Murphy, G.C.; Zimmermann, T.; Fritz, T. The Work-Life of Developers: Activities, Switches and Perceived Productivity. IEEE Trans. Softw. Eng. 2017, 43, 1178–1193. [Google Scholar] [CrossRef]

- Fatema, I.; Sakib, K. Factors Influencing Productivity of Agile Software Development Teamwork: A Qualitative System Dynamics Approach. In Proceedings of the 24th Asia-Pacific Software Engineering Conference (APSEC 2017), Nanjing, China, 4–8 December 2017; Lv, J., Zhang, H.J., Hinchey, M., Liu, X., Eds.; IEEE: Piscataway, NJ, USA, 2017; pp. 737–742. [Google Scholar]

- Hernández-López, A.; Palacios, R.C.; Soto-Acosta, P.; Casado-Lumbreras, C. Productivity Measurement in Software Engineering: A Study of the Inputs and the Outputs. Int. J. Inf. Technol. Syst. Appl. 2015, 8, 46–68. [Google Scholar] [CrossRef]

- Hernández-López, A.; Palacios, R.C.; García-Crespo, Á. Software Engineering Job Productivity—A Systematic Review. J. Softw. Eng. Knowl. Eng. 2013, 23, 387–406. [Google Scholar] [CrossRef]

- Damian, D.; Chisan, J. An Empirical Study of the Complex Relationships between Requirements Engineering Processes and Other Processes that lead to Payoffs in Productivity, Quality and Risk Management. IEEE Trans. Softw. Eng. 2006, 32, 433–453. [Google Scholar] [CrossRef]

- Meyer, A.; Zimmermann, T.; Fritz, T. Characterizing Software Developers by Perceptions of Productivity. In Proceedings of the International Symposium on Empirical Software Engineering and Measurement (ESEM 2017), Markham, ON, Canada, 9–10 November 2017; Bener, A., Turhan, B., Biffl, S., Eds.; IEEE: Piscataway, NJ, USA, 2017; pp. 105–110. [Google Scholar]

- Cheikhi, L.; Al-Qutaish, R.E.; Idri, A. Software Productivity: Harmonization in ISO/IEEE Software Engineering Standards. J. Softw. 2012, 7, 462–470. [Google Scholar] [CrossRef][Green Version]

- de O. Melo, C.; Cruzes, D.S.; Kon, F.; Conradi, R. Interpretative case studies on agile team productivity and management. Inf. Softw. Technol. 2013, 55, 412–427. [Google Scholar] [CrossRef]

- Duarte, C.H.C. The Quest for Productivity in Software Engineering: A Practitioners Systematic Literature Review. In Proceedings of the International Conference of Systems and Software Processes (ICSSP 2019), Montreal, QC, Canada, 25 May 2019; pp. 145–154. [Google Scholar]

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report EBSE 2007-001, Keele University and Durham University Joint Report. 2007. Available online: https://www.elsevier.com/__data/promis_misc/525444systematicreviewsguide.pdf (accessed on 31 January 2022).

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 Statement: An Updated Guideline for Reporting Systematic Reviews. PLoS Med. 2021, 18, e1003583. [Google Scholar] [CrossRef]

- Schünemann, H.; Brożek, J.; Guyatt, G.; Oxman, A. Handbook for Grading the Quality of Evidence and the Strength of Recommendations Using the GRADE Approach. 2013. Available online: https://gradepro.org/handbook (accessed on 31 January 2022).

- Rafique, Y.; Misic, V.B. The Effects of Test-Driven Development on External Quality and Productivity: A Meta-Analysis. IEEE Trans. Softw. Eng. 2013, 39, 835–856. [Google Scholar] [CrossRef]

- Mohagheghi, P.; Conradi, R. Quality, productivity and economic benefits of software reuse: A review of industrial studies. Empir. Softw. Eng. 2007, 12, 471–516. [Google Scholar] [CrossRef]

- Basili, V.; Caldiera, G.; Rombach, H.D. Goal Question Metric (GQM) Approach. In Encyclopedia of Software Engineering; Wiley: Hoboken, NJ, USA, 2002; pp. 528–532. [Google Scholar]

- Ley, M. DBLP: Some Lessons Learned. Proc. VLDB Endow. 2009, 2, 1493–1500. [Google Scholar] [CrossRef]

- Boehm, B.W. Improving Software Productivity. IEEE Comput. 1987, 20, 43–57. [Google Scholar] [CrossRef]

- Kitchenham, B.; Mendes, E. Software productivity measurement using multiple size measures. IEEE Trans. Softw. Eng. 2004, 30, 1023–1035. [Google Scholar] [CrossRef]

- Dieste, O.; Aranda, A.M.; Uyaguari, F.U.; Turhan, B.; Tosun, A.; Fucci, D.; Oivo, M.; Juristo, N. Empirical evaluation of the effects of experience on code quality and programmer productivity: An exploratory study. Empir. Softw. Eng. 2017, 22, 2457–2542. [Google Scholar] [CrossRef]

- Chapetta, W.A.; Travassos, G.H. Towards an evidence-based theoretical framework on factors influencing the software development productivity. Empir. Softw. Eng. 2020, 25, 3501–3543. [Google Scholar] [CrossRef]

- Bissi, W.; Neto, A.G.S.S.; Emer, M.C.F.P. The effects of test-driven development on internal quality, external quality and productivity: A systematic review. Inf. Softw. Technol. 2016, 74, 45–54. [Google Scholar] [CrossRef]

- Cardozo, E.S.F.; Neto, J.B.F.A.; Barza, A.; França, A.C.C.; da Silva, F.Q.B. Scrum and Productivity in Software Projects: A Systematic Literature Review. In Proceedings of the 14th International Conference on Evaluation and Assessment in Software Engineering (EASE 2010), Keele, UK, 12–13 April 2010. [Google Scholar]

- de Oliveira, E.C.C.; Viana, D.; Cristo, M.; Conte, T. How have Software Engineering Researchers been Measuring Software Productivity? A Systematic Mapping Study. In Proceedings of the 19th International Conference on Enterprise Information Systems (ICEIS 2017), Porto, Portugal, 26–29 April 2017; Hammoudi, S., Smialek, M., Camp, O., Filipe, J., Eds.; SciTePress: Setubal, Portugal, 2017; Volume 2, pp. 76–87. [Google Scholar]

- de Oliveira, E.C.C.; Conte, T.; Cristo, M.; Valentim, N.M.C. Influence Factors in Software Productivity: Tertiary Literature Review. J. Softw. Eng. Knowl. Eng. 2018, 28, 1795–1810. [Google Scholar] [CrossRef]

- Petersen, K. Measuring and Predicting Software Productivity. Inf. Softw. Technol. 2011, 53, 317–343. [Google Scholar] [CrossRef]

- Shah, S.M.A.; Papatheocharous, E.; Nyfjord, J. Measuring productivity in agile software development process: A scoping study. In Proceedings of the International Conference on Software and System Process (ICSSP 2015), Tallinn, Estonia, 24–26 August 2015; pp. 102–106. [Google Scholar]

- Jalali, S.; Wohlin, C. Systematic Literature Studies: Database Searches vs. Backward Snowballing. In Proceedings of the International Symposium on Empirical Software Engineering and Measurement (ESEM 2012), Lund, Sweden, 19–20 September 2012; pp. 29–38. [Google Scholar]

- Hernández-López, A.; Palacios, R.C.; García-Crespo, Á.; Cabezas-Isla, F. Software Engineering Productivity: Concepts, Issues and Challenges. Int. J. Inf. Technol. Syst. Approach 2011, 2, 37–47. [Google Scholar] [CrossRef][Green Version]

- Bourque, P.; Fairley, R.E. (Eds.) Guide to the Software Engineering Body of Knowledge (SWEBOK), 3rd ed.; IEEE: Piscataway, NJ, USA, 2014. [Google Scholar]

- Wohlin, C.; Runeson, P.; Høst, M.; Ohlsson, M.C.; Regnell, B.; Wesslén, A. Experimentation in Software Engineering; Springer: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- MacCormack, A.; Kemerer, C.F.; Cusumano, M.A.; Crandall, B. Trade-offs between Productivity and Quality in Selecting Software Development Practices. IEEE Softw. 2003, 20, 78–85. [Google Scholar] [CrossRef]

- Adams, P.J.; Capiluppi, A.; Boldyreff, C. Coordination and productivity issues in free software: The role of Brooks’ Law. In Proceedings of the 25th International Conference on Software Maintenance (ICSM 2009), Edmonton, AB, Canada, 20–26 September 2009; pp. 319–328. [Google Scholar]

- Mohapatra, S. Maximising productivity by controlling influencing factors in commercial software development. J. Inf. Commun. Technol. 2011, 3, 160–179. [Google Scholar] [CrossRef]

- Ramasubbu, N.; Cataldo, M.; Balan, R.K.; Herbsleb, J.D. Configuring global software teams: A multi-company analysis of project productivity, quality, and profits. In Proceedings of the 33rd International Conference on Software Engineering (ICSE 2011), Honolulu, HI, USA, 21–28 May 2011; Taylor, R.N., Gall, H.C., Medvidovic, N., Eds.; ACM: New York, NY, USA, 2011; pp. 261–270. [Google Scholar]

- Rodríguez-García, D.; Sicilia, M.; Barriocanal, E.G.; Harrison, R. Empirical findings on team size and productivity in software development. J. Syst. Softw. 2012, 85, 562–570. [Google Scholar] [CrossRef]

- Cataldo, M.; Herbsleb, J.D. Coordination Breakdowns and Their Impact on Development Productivity and Software Failures. IEEE Trans. Softw. Eng. 2013, 39, 343–360. [Google Scholar] [CrossRef]

- Moazeni, R.; Link, D.; Chen, C.; Boehm, B.W. Software domains in incremental development productivity decline. In Proceedings of the International Conference on Software and Systems Process (ICSSP 2014), Nanjing, China, 26–28 May 2014; Zhang, H., Huang, L., Richardson, I., Eds.; ACM: New York, NY, USA, 2014; pp. 75–83. [Google Scholar]

- Palacios, R.C.; Casado-Lumbreras, C.; Soto-Acosta, P.; García-Peñalvo, F.J.; Tovar, E. Project managers in global software development teams: A study of the effects on productivity and performance. Softw. Qual. J. 2014, 22, 3–19. [Google Scholar] [CrossRef]

- Scholtes, I.; Mavrodiev, P.; Schweitzer, F. From Aristotle to Ringelmann: A large-scale analysis of team productivity and coordination in Open-Source Software projects. Empir. Softw. Eng. 2016, 21, 642–683. [Google Scholar] [CrossRef]

- Stylianou, C.; Andreou, A.S. Investigating the impact of developer productivity, task interdependence type and communication overhead in a multi-objective optimization approach for software project planning. Adv. Eng. Softw. 2016, 98, 79–96. [Google Scholar] [CrossRef]

- Rastogi, A.; Thummalapenta, S.; Zimmermann, T.; Nagappan, N.; Czerwonka, J. Ramp-up Journey of New Hires: Do strategic practices of software companies influence productivity? In Proceedings of the 10th Innovations in Software Engineering Conference (ISEC 2017), Jaipur, India, 5–7 February 2017; pp. 107–111. [Google Scholar]

- Oliveira, E.; Fernandes, E.; Steinmacher, I.; Cristo, M.; Conte, T.; Garcia, A. Code and commit metrics of developer productivity: A study on team leaders perceptions. Empir. Softw. Eng. 2020, 25, 2519–2549. [Google Scholar] [CrossRef]

- Storey, M.A.D.; Zimmermann, T.; Bird, C.; Czerwonka, J.; Murphy, B.; Kalliamvakou, E. Towards a Theory of Software Developer Job Satisfaction and Perceived Productivity. IEEE Trans. Softw. Eng. 2021, 47, 2125–2142. [Google Scholar] [CrossRef]

- Potok, T.E.; Vouk, M.A.; Rindos, A. Productivity Analysis of Object-Oriented Software Development in a Commercial Environment. Softw. Pract. Exp. 1999, 29, 833–847. [Google Scholar] [CrossRef]

- Maxwell, K.D.; Forselius, P. Benchmarking Software-Development Productivity. IEEE Softw. 2000, 17, 80–88. [Google Scholar] [CrossRef]

- Premraj, R.; Shepperd, M.J.; Kitchenham, B.A.; Forselius, P. An Empirical Analysis of Software Productivity over Time. In Proceedings of the 11th International Symposium on Software Metrics (METRICS 2005), Como, Italy, 19–22 September 2005; pp. 37–46. [Google Scholar]

- Moses, J.; Farrow, M.; Parrington, N.; Smith, P. A productivity benchmarking case study using Bayesian credible intervals. Softw. Qual. J. 2006, 14, 37–52. [Google Scholar] [CrossRef]

- Wang, H.; Wang, H.; Zhang, H. Software Productivity Analysis with CSBSG Data Set. In Proceedings of the International Conference on Computer Science and Software Engineering (CSSE 2008), Wuhan, China, 12–14 December 2008; Volume 2, pp. 587–593. [Google Scholar]

- Tsunoda, M.; Monden, A.; Yadohisa, H.; Kikuchi, N.; Matsumoto, K. Software Development Productivity of Japanese Enterprise Applications. Inf. Technol. Manag. 2009, 10, 193–205. [Google Scholar] [CrossRef]

- Duarte, C.H.C. Productivity Paradoxes Revisited: Assessing the Relationship Between Quality Maturity Levels and Labor Productivity in Brazilian Software Companies. Empir. Softw. Eng. 2017, 22, 818–847. [Google Scholar] [CrossRef]

- Tsunoda, M.; Amasaki, S. On Software Productivity Analysis with Propensity Score Matching. In Proceedings of the International Symposium on Empirical Software Engineering and Measurement (ESEM 2017), Toronto, ON, Canada, 9–10 November 2017; Bener, A., Turhan, B., Biffl, S., Eds.; IEEE: Piscataway, NJ, USA, 2017; pp. 436–441.

- Lavazza, L.; Morasca, S.; Tosi, D. An Empirical Study on the Factors Affecting Software Development Productivity. e-Inform. Softw. Eng. J. 2018, 12, 27–49. [Google Scholar]

- Lagerström, R.; von Würtemberg, L.M.; Holm, H.; Luczak, O. Identifying factors affecting software development cost and productivity. Softw. Qual. J. 2012, 20, 395–417. [Google Scholar] [CrossRef]

- de Souza Carvalho, W.C.; Rosa, P.F.; dos Santos Soares, M.; da Cunha, M.A.T., Jr.; Buiatte, L.C. A Comparative Analysis of the Agile and Traditional Software Development Processes Productivity. In Proceedings of the 30th International Conference of the Chilean Computer Science Society (SCCC 2011), Curico, Chile, 9–11 November 2011; pp. 74–82. [Google Scholar]

- Mockus, A. Succession: Measuring transfer of code and developer productivity. In Proceedings of the 31st International Conference on Software Engineering (ICSE 2009), Vancouver, BC, Canada, 16–24 May 2009; pp. 67–77. [Google Scholar]

- Mantyla, M.; Adams, B.; Destefanis, G.; Graziotin, D.; Ortu, M. Mining Valence, arousal, and Dominance—Possibilities for detecting burnout and productivity? In Proceedings of the 13th Conference on Mining Software Repositories (MSR 2016), Austin, TX, USA, 14–22 May 2016; Kim, M., Robbes, R., Bird, C., Eds.; ACM: New York, NY, USA, 2016; pp. 247–258. [Google Scholar]

- Duncan, A.S. Software Development Productivity Tools and Metrics. In Proceedings of the 10th International Conference on Software Engineering (ICSE 1988), Singapore, 11–15 April 1988; Nam, T.C., Druffel, L.E., Meyer, B., Eds.; IEEE: Piscataway, NJ, USA, 1988; pp. 41–48. [Google Scholar]

- Banker, R.D.; Kauffman, R.J. Reuse and Productivity in Integrated Computer-Aided Software Engineering: An Empirical Study. MIS Q. 1991, 15, 375–401. [Google Scholar] [CrossRef]

- Banker, R.D.; Slaughter, S. Project Size and Software Maintenance Productivity: Empirical Evidence on Economies of Scale in Software Maintenance. In Proceedings of the 15th International Conference on Information Systems, Vancouver, BC, Canada, 14–17 December 1994; DeGross, J.I., Huff, S.L., Munro, M., Eds.; Association for Information Systems: Atlanta, GA, USA, 1994; pp. 279–289. [Google Scholar]

- Lim, W.C. Effects of Reuse on Quality, Productivity, and Economics. IEEE Softw. 1994, 11, 23–30. [Google Scholar] [CrossRef]

- Chatman, V.V., III. CHANGE-POINTs: A proposal for software productivity measurement. J. Syst. Softw. 1995, 31, 71–91. [Google Scholar] [CrossRef]

- Bruckhaus, T.; Madhavji, N.H.; Henshaw, J.; Janssen, I. The Impact of Tools on Software Productivity. IEEE Softw. 1996, 13, 29–38. [Google Scholar] [CrossRef]

- Sova, D.W.; Smidts, C.S. Increasing testing productivity and software quality: A comparison of software testing methodologies within NASA. Empir. Softw. Eng. 1996, 1, 165–188. [Google Scholar] [CrossRef]

- Potok, T.E.; Vouk, M.A. The Effects of the Business Model on Object-Oriented Software Development Productivity. IBM Syst. J. 1997, 36, 140–161. [Google Scholar] [CrossRef]

- Port, D.; McArthur, M. A Study of Productivity and Efficiency for Object-Oriented Methods and Languages. In Proceedings of the 6th Asia-Pacific Software Engineering Conference (APSEC 1999), Takamatsu, Japan, 7–10 December 1999; pp. 128–135. [Google Scholar]

- Asmild, M.; Paradi, J.C.; Kulkarni, A. Using Data Envelopment Analysis in software development productivity measurement. Softw. Process. Improv. Pract. 2006, 11, 561–572. [Google Scholar] [CrossRef]

- Tomaszewski, P.; Lundberg, L. The increase of productivity over time—An industrial case study. Inf. Softw. Technol. 2006, 48, 915–927. [Google Scholar] [CrossRef]

- Siok, M.F.; Tian, J. Empirical Study of Embedded Software Quality and Productivity. In Proceedings of the 10th International Symposium on High-Assurance Systems Engineering (HASE 2007), Dallas, TX, USA, 14–16 November 2007; pp. 313–320. [Google Scholar]

- Kautz, K.; Johansen, T.H.; Uldahl, A. The Perceived Impact of the Agile Development and Project Management Method Scrum on Information Systems and Software Development Productivity. Australas. J. Inf. Syst. 2014, 18, 303–315. [Google Scholar] [CrossRef]

- Beller, M.; Orgovan, V.R.; Buja, S.; Zimmermann, T. Mind the Gap: On the Relationship between Automatically Measured and Self-Reported Productivity. IEEE Softw. 2021, 38, 24–31. [Google Scholar] [CrossRef]

- Jalote, P.; Kamma, D. Studying Task Processes for Improving Programmer Productivity. IEEE Trans. Softw. Eng. 2021, 47, 801–817. [Google Scholar] [CrossRef]

- Johnson, B.; Zimmermann, T.; Bird, C. The Effect of Work Environments on Productivity and Satisfaction of Software Engineers. IEEE Trans. Softw. Eng. 2021, 47, 736–757. [Google Scholar] [CrossRef]

- Low, G.; Jeffery, D. Software development productivity and back-end CASE tools. Inf. Softw. Technol. 1991, 33, 616–621. [Google Scholar] [CrossRef]

- Rubin, H.A. Software process maturity: Measuring its impact on productivity and quality. In Proceedings of the 15th International Conference on Software Engineering (ICSE 1993), Baltimore, MD, USA, 17–21 May 1993; pp. 468–476. [Google Scholar]

- Abdel-Hamid, T.K. The Slippery Path to Productivity Improvement. IEEE Softw. 1996, 13, 43–52. [Google Scholar] [CrossRef]

- Boehm, B.W. Managing Software Productivity and Reuse. IEEE Comput. 1999, 32, 111–113. [Google Scholar] [CrossRef]

- Frakes, W.B.; Succi, G. An industrial study of reuse, quality, and productivity. J. Syst. Softw. 2001, 57, 99–106. [Google Scholar] [CrossRef]

- Green, G.C.; Hevner, A.R.; Collins, R.W. The impacts of quality and productivity perceptions on the use of software process improvement innovations. Inf. Softw. Technol. 2005, 47, 543–553. [Google Scholar] [CrossRef]

- Faulk, S.R.; Loh, E.; de Vanter, M.L.V.; Squires, S.; Votta, L.G. Scientific Computing’s Productivity Gridlock: How Software Engineering Can Help. Comput. Sci. Eng. 2009, 11, 30–39. [Google Scholar] [CrossRef]

- Tan, T.; Li, Q.; Boehm, B.; Yang, Y.; Hei, M.; Moazeni, R. Productivity Trends in Incremental and Iterative Software Development. In Proceedings of the 3rd International Symposium on Empirical Software Engineering and Measurement (ESEM 2009), Lake Buena Vista, FL, USA, 15–16 October 2009; pp. 1–10. [Google Scholar]

- Azzeh, M.; Nassif, A.B. Analyzing the relationship between project productivity and environment factors in the use case points method. J. Softw. Evol. Process 2017, 29, e1882. [Google Scholar] [CrossRef]

- Azzeh, M.; Nassif, A.B. Project productivity evaluation in early software effort estimation. J. Softw. Evol. Process 2018, 30, e2110. [Google Scholar] [CrossRef]

- Besker, T.; Martini, A.; Bosch, J. Software developer productivity loss due to technical debt—A replication and extension study examining developers’ development work. J. Syst. Softw. 2019, 156, 41–61. [Google Scholar] [CrossRef]

- Bezerra, C.I.M.; de Souza Filho, J.C.; Coutinho, E.F.; Gama, A.; Ferreira, A.L.; de Andrade, G.L.; Feitosa, C.E. How Human and Organizational Factors Influence Software Teams Productivity in COVID-19 Pandemic: A Brazilian Survey. In Proceedings of the 34th Brazilian Symposium on Software Engineering (SBES 2020), Natal, Brazil, 21–23 October 2020; pp. 606–615. [Google Scholar]

- Murphy-Hill, E.R.; Jaspan, C.; Sadowski, C.; Shepherd, D.C.; Phillips, M.; Winter, C.; Knight, A.; Smith, E.K.; Jorde, M. What Predicts Software Developers’ Productivity? IEEE Trans. Softw. Eng. 2021, 47, 582–594. [Google Scholar] [CrossRef]

- Ge, C.; Huang, K. Productivity Differences and Catch-Up Effects among Software as a Service Firms: A Stochastic Frontier Approach. In Proceedings of the International Conference on Information Systems (ICIS 2011), Shanghai, China, 4–7 December 2011; Galletta, D.F., Liang, T., Eds.; Association for Information Systems: Atlanta, GA, USA, 2011. [Google Scholar]

- Bibi, S.; Stamelos, I.; Ampatzoglou, A. Combining probabilistic models for explanatory productivity estimation. Inf. Softw. Technol. 2008, 50, 656–669. [Google Scholar] [CrossRef]

- Sentas, P.; Angelis, L.; Stamelos, I.; Bleris, G.L. Software productivity and effort prediction with ordinal regression. Inf. Softw. Technol. 2005, 47, 17–29. [Google Scholar] [CrossRef]

- Lavazza, L.; Liu, G.; Meli, R. Productivity of software enhancement projects: An empirical study. In Proceedings of the Joint 30th International Workshop on Software Measurement and the 15th International Conference on Software Process and Product Measurement (IWSM-Mensura 2020), Mexico City, Mexico, 29–30 October 2020. [Google Scholar]

- Krein, J.L.; MacLean, A.C.; Knutson, C.D.; Delorey, D.P.; Eggett, D. Impact of Programming Language Fragmentation on Developer Productivity: A Sourceforge Empirical Study. Int. J. Open-Source Softw. Process. 2010, 2, 41–61. [Google Scholar] [CrossRef]

- Liao, Z.; Zhao, Y.; Liu, S.; Zhang, Y.; Liu, L.; Long, J. The Measurement of the Software Ecosystem’s Productivity with GitHub. Comput. Syst. Sci. Eng. 2021, 36, 239–258. [Google Scholar] [CrossRef]

- Banker, R.D.; Datar, S.M.; Kemerer, C.F. A Model to Evaluate Variables Impacting the Productivity of Software Maintenance Projects. Manag. Sci. 1991, 37, 1–18. [Google Scholar] [CrossRef]

- Parrish, A.S.; Smith, R.K.; Hale, D.P.; Hale, J.E. A Field Study of Developer Pairs: Productivity Impacts and Implications. IEEE Softw. 2004, 21, 76–79. [Google Scholar] [CrossRef]

- Bibi, S.; Ampatzoglou, A.; Stamelos, I. A Bayesian Belief Network for Modeling Open-Source Software Maintenance Productivity. In Proceedings of the 12th IFIP WG 2.13 International Conference on Open-Source Systems: Integrating Communities (OSS 2016), Gothenburg, Sweden, 30 May–2 June 2016; IFIP Advances in Information and Communication Technology; Springer: Berlin/Heidelberg, Germany, 2016; Volume 472, pp. 32–44. [Google Scholar]

- Huang, K.; Wang, M. Firm-Level Productivity Analysis for Software as a Service Companies. In Proceedings of the International Conference on Information Systems (ICIS 2009), Phoenix, AZ, USA, 15–18 December 2009; pp. 1–17.

- Tanihana, K.; Noda, T. Empirical Study of the Relation between Open-Source Software Use and Productivity of Japan’s Information Service Industries. In Proceedings of the 9th IFIP WG 2.13 International Conference on Open-Source Software: Quality Verification (OSS 2013), Koper-Capodistria, Slovenia, 25–28 June 2013; Petrinja, E., Succi, G., Joni, N.E., Sillitti, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 18–29.

- Staples, M.; Jeffery, R.; Andronick, J.; Murray, T.; Klein, G.; Kolanski, R. Productivity for proof engineering. In Proceedings of the 8th International Symposium on Empirical Software Engineering and Measurement (ESEM 2014), Torino, Italy, 18–19 September 2014; Morisio, M., Dyba, T., Torchiano, M., Eds.; ACM: New York, NY, USA, 2014; pp. 1–4.

- Kuutila, M.; Mäntylä, M.; Claes, M.; Elovainio, M.; Adams, B. Individual differences limit predicting well-being and productivity using software repositories: A longitudinal industrial study. Empir. Softw. Eng. 2021, 26, 88.

- Scacchi, W. Understanding Software Productivity: Towards a Knowledge-Based Approach. J. Softw. Eng. Knowl. Eng. 1991, 1, 293–321.

- Minetaki, K.; Motohashi, K. Subcontracting Structure and Productivity in the Japanese Software Industry. Rev. Socionetw. Strateg. 2009, 3, 51–65.

- Trendowicz, A.; Münch, J. Factors Influencing Software Development Productivity: State-of-the-Art and Industrial Experiences. Adv. Comput. 2009, 77, 185–241.

- Wang, Y.; Zhang, C.; Chen, G.; Shi, Y. Empirical research on the total factor productivity of Chinese software companies. In Proceedings of the International Joint Conferences on Web Intelligence and Intelligent Agent Technology (WI-IAT 2012), Macau, China, 4–7 December 2012; Volume 3, pp. 25–29.

- Zhao, L.; Wang, X.; Wu, S. The Total Factor Productivity of China’s Software Industry and its Promotion Path. IEEE Access 2021, 9, 96039–96055.

- Maxwell, K.; Wassenhove, L.V.; Dutta, S. Software development productivity of European space, military and industrial applications. IEEE Trans. Softw. Eng. 1996, 22, 706–718.

- Kemayel, L.; Mili, A.; Ouederni, I. Controllable factors for programmer productivity: A statistical study. J. Syst. Softw. 1991, 16, 151–163.

- Budgen, D.; Brereton, P.; Drummond, S.; Williams, N. Reporting systematic reviews: Some lessons from a tertiary study. Inf. Softw. Technol. 2018, 95, 62–74.

- Higgins, J.P.T.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. (Eds.) Cochrane Handbook for Systematic Reviews of Interventions, version 6.2; Wiley-Blackwell: Hoboken, NJ, USA, 2021.

- McGuinness, L.A.; Higgins, J.P.T. Risk-of-bias Visualization (Robvis): An R package and Shiny web app for visualizing risk-of-bias assessments. Res. Synth. Methods 2021, 12, 55–61.

- Guyatt, G.; Oxman, A.D.; Akl, E.A.; Kunz, R.; Vist, G.; Brozek, J.; Norris, S.; Falck-Ytter, Y.; Glasziou, P.; DeBeer, H.; et al. GRADE Guidelines: 1. Introduction — GRADE Evidence Profiles and Summary of Findings Tables. J. Clin. Epidemiol. 2011, 64, 383–394.

- Brereton, P.; Kitchenham, B.A.; Budgen, D.; Turner, M.; Khalil, M. Lessons from Applying the Systematic Literature Review Process within the Software Engineering Domain. J. Syst. Softw. 2007, 80, 571–583.

- Whiting, P.; Savović, J.; Higgins, J.P.; Caldwell, D.M.; Reeves, B.C.; Shea, B.; Davies, P.; Kleijnen, J.; Churchill, R. ROBIS: A new tool to assess risk of bias in systematic reviews was developed. J. Clin. Epidemiol. 2016, 69, 225–234.

| Study Type | Description |
|---|---|
| Case Study | Adopts research questions, hypotheses, units of analysis, logic linking data to hypotheses and multiple criteria for interpreting the findings. If some of these requirements are not satisfied, it is considered an exploratory case study. It is called a case-control study if comparisons are drawn between a focus group and a control group that has not received any intervention. |
| Experiment | Adopts random assignment(s) of interventions to subjects, large sample sizes, well-formulated hypotheses and the selection of (an) independent variable(s), which is (are) (randomly) sampled. If all these requirements are satisfied, it is considered a controlled experiment; otherwise, it is a quasi-experiment. |
| Simulation | Adopts models to represent specific real situations/environments or data from real situations as a basis for setting key parameters in models. If the model is used to establish the goal(s) of (an) objective function(s), it is called an optimization model. |
| Survey | Proposes questions addressed to participants through questionnaires, (structured) interviews, online surveys, focus group meetings and others. Participants may also be approached in a census process or according to random sampling. |
| Review | Incorporates results from previous studies in the analysis. If the subjects are papers, it corresponds to a literature review. If a well-defined methodology is used to collect references, critically appraise results and synthesize their findings, it is called a systematic literature review. If the purpose is to provide a broad overview of a subject area, mapping the distribution of objects across a conceptual structure, it is called a systematic mapping. If statistical analysis methods are adopted, it is regarded as a meta-analysis. |
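The qualification rules in the table above for case studies and experiments can be read as simple decision rules. The following Python sketch is only an illustration of that reading: the function names and boolean parameters are hypothetical, and the precedence given to the case-control label over the exploratory label is an assumption, since the table does not state how the two interact.

```python
def qualify_experiment(random_assignment: bool,
                       large_sample: bool,
                       well_formulated_hypotheses: bool,
                       sampled_independent_variables: bool) -> str:
    """Controlled experiment only if every listed requirement holds."""
    requirements = (random_assignment, large_sample,
                    well_formulated_hypotheses, sampled_independent_variables)
    return "controlled experiment" if all(requirements) else "quasi-experiment"


def qualify_case_study(research_questions: bool,
                       hypotheses: bool,
                       units_of_analysis: bool,
                       linking_logic: bool,
                       interpretation_criteria: bool,
                       has_control_group: bool = False) -> str:
    """Qualify a case study following the table's description."""
    # Assumption: a comparison against a control group takes precedence.
    if has_control_group:
        return "case-control study"
    requirements = (research_questions, hypotheses, units_of_analysis,
                    linking_logic, interpretation_criteria)
    return "case study" if all(requirements) else "exploratory case study"


# A study with random assignment but a small sample.
print(qualify_experiment(True, False, True, True))
# A case study that states no hypotheses.
print(qualify_case_study(True, False, True, True, True))
```

Under these assumptions, the first call yields a quasi-experiment and the second an exploratory case study.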

| Study | Literature Review | Systematic Mapping |
|---|---|---|
| Period | 1987–2017 | 1987–2021 |
| Primary Paper Search | | |
| Recovered bibliographic references (a) | 338 | 495 |
| Excluded references after screening (b) | 170 | 242 |
| Papers that were not available (c) | 68 | 90 |
| Papers that did not meet inclusion criteria (d) | 31 | 66 |
| Number of included papers (e = a − b − c − d) | 69 | 97 |
| Backward Snowballing Search | | |
| Recovered bibliographic references (f) | 16 | 9 |
| Excluded references after screening (g) | 3 | 2 |
| Papers that were not available (h) | 8 | 5 |
| Papers that did not meet inclusion criteria (i) | 1 | 0 |
| Number of included papers (j = f − g − h − i) | 4 | 2 |
| Number of Analyzed Papers (k = e + j) | 73 | 99 |
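The arithmetic behind the paper counts in the table above (e = a − b − c − d, j = f − g − h − i, k = e + j) can be checked with a few lines of Python. This is a minimal sketch; the helper name `included` is hypothetical and the numbers are copied from the table.

```python
def included(recovered: int, excluded: int, unavailable: int, unmet: int) -> int:
    """Papers left after screening, availability and inclusion filtering."""
    return recovered - excluded - unavailable - unmet


# Columns of the table: (primary search a..d, snowballing search f..i).
studies = {
    "Literature Review": ((338, 170, 68, 31), (16, 3, 8, 1)),
    "Systematic Mapping": ((495, 242, 90, 66), (9, 2, 5, 0)),
}

totals = {}
for name, (primary, snowball) in studies.items():
    e = included(*primary)    # included papers from the primary search
    j = included(*snowball)   # included papers from backward snowballing
    totals[name] = (e, j, e + j)  # k = e + j
    print(name, totals[name])
```

Running the sketch reproduces the totals reported in the table: 69, 4 and 73 papers for the literature review, and 97, 2 and 99 papers for the systematic mapping.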

| Acronym | Chapter | Knowledge Area |
|---|---|---|
| SWEBOK | Many | Software Engineering Body of Knowledge |
| SR | 1 | Software Requirements |
| SD | 2 | Software Design |
| SC | 3 | Software Construction |
| ST | 4 | Software Testing |
| SM | 5 | Software Maintenance |
| SCM | 6 | Software Configuration Management |
| SEM | 7 | Software Engineering Management |
| SEP | 8 | Software Engineering Processes |
| SEMM | 9 | Software Engineering Models and Methods |
| SQ | 10 | Software Quality |
| SEPP | 11 | Software Engineering Professional Practice |

| Name/Occurrence | Definition (Based on [18]) | Count | Primary Studies (in Order of Publication) |
|---|---|---|---|
| observation (—) | Empirical observation of the objects and subjects of study (since little is known about them). | 0 | — |
| analysis (2009–2015) | Adoption of established procedures to investigate what the research objects and subjects are. | 4 | [7,32,90,99] |
| description (1996–2017) | Provision of logical descriptions and classifications of studied objects and subjects based on analyses. | 8 | [3,4,6,11,12,55,67,82] |
| understanding (1988–2021) | Explanation of why and how something happens in relation to research objects and subjects (including measurement). | 49 | [5,9,10,22,23,35,39,40,41,43,45,46,47,48,49,50,51,52,53,54,56,57,58,59,60,61,64,65,69,73,74,76,77,78,81,84,87,88,94,97,98,100,101,102,103,104,105,106,108] |
| prediction (1991–2021) | Description of what will happen regarding studied objects and subjects. | 14 | [42,62,63,68,70,79,85,86,89,91,92,93,95,96] |
| action (1987–2021) | Prescription or description of interactions with research objects and subjects so as to give rise to observable effects. | 14 | [21,24,36,37,38,44,66,71,72,75,80,83,107,109] |
| TOTAL | | 89 | |

| Key | Auth./Pract. | # Studies | Qualified Study Type | SE KA | Main Findings (Related to Productivity) |
|---|---|---|---|---|---|
| (MaxwellF00) [49] | 2/2 | 1 | case study | SWEBOK | The factors with the greatest impact on software productivity are company and business sector. Companies must statistically analyze available data to develop benchmarking equations based on key productivity factors. |
| (PremrajSKF05) [50] | 4/2 | 1 | controlled experiment | SWEBOK | There is evidence of improved productivity over time, with variations coming from company and business sector. Insurance and commerce are the least productive, while manufacturing is the most productive sector among the studied projects. There is no significant difference in productivity between new developments and maintenance projects. |
| (SentasASB05) [92] | 4/0 | 1 | quasi-experiment | SWEBOK | The ability of ordinal regression models to classify any future project in one of the predefined categories is high based on the studied databases. |
| (AsmildPK06) [70] | 3/0 | 1 | controlled experiment | SWEBOK | It is possible to develop proper exponential statistical models to predict productivity, but linear models are inappropriate. DEA can incorporate the time factor in analyses and can be used to determine the best performers for benchmarking purposes. |
| (MosesFPS06) [51] | 4/0 | 1 | case study | SC | The studied company outperforms those in the ISBSG database by approximately 2.2 times. Possible explanations are that projects are led by staff with knowledge of systems and business processes and that an optimized model-based development process is adopted. Bayesian credible intervals give a more informative form of productivity estimation than would be possible using the usual confidence interval alternative for the geometric mean of the ratio. |
| (WangWZ08) [52] | 3/2 | 1 | exploratory case study | SWEBOK | Project size, type and business sector are factors that influence software productivity with varying significance levels. There is no evidence that team size and adopted programming languages affect productivity. There is no significant difference in productivity between new developments and redevelopment projects. |
| (BibiSA08) [91] | 3/0 | 2 | quasi-experiment | SWEBOK | A combination of the methods of association rules and regression trees is prescribed for software productivity prediction using homogeneous datasets. Their estimates are in the form of rules that the final user can easily understand and modify. |
| (Tsuno09) [53] | 5/1 | 1 | quasi-experiment | SWEBOK | Architecture and team size have a strong correlation with productivity. Business sector, outsourcing and projects skewed towards the implementation ensure moderate productivity. |
| (GeH11) [90] | 2/0 | 1 | quasi-experiment | SWEBOK | The stochastic frontier approach takes both inefficiency and random noise into account and is a better approach for productivity analysis. It allows the understanding of SaaS company dynamics and catch-up effects by comparison to traditional companies. |
| (RodriguezSGH12) [39] | 4/0 | 1 | controlled experiment | SEM | Improvement projects have significantly better productivity than new development projects, and larger teams are less productive than smaller ones. |
| (TsunodaA17) [55] | 2/0 | 1 | quasi-experiment | SWEBOK | The propensity score analysis can determine undiscovered productivity factors. The company business sector and the development platform are significantly related to software productivity. |
| (LavazzaMT18) [56] | 3/0 | 1 | quasi-experiment | SC | The adopted primary programming language has a significant effect on the productivity of new development projects. The productivity of enhancement projects appears much less dependent on programming languages. The business area and architecture have a significant effect on productivity. No evidence of the impact of CASE tools usage on productivity was determined. The productivity of new development projects tends to be higher than that of enhancement projects. |