A Systematic Review of Wind Energy Forecasting Models Based on Deep Neural Networks

Abstract

1. Introduction

2. Systematic Literature Review Methodology

2.1. Planning Phase

2.1.1. Review Summary

2.1.2. Research Questions

- General research question:

- What is the current state of the art in wind power forecasting models based on deep neural networks?

- Specific research questions:

- What are the current architectures for wind power forecasting models that utilize deep neural networks, pre-processing and feature extraction techniques, and optimization algorithms?

- What are the current performance metrics for validating models?

- What is the typical forecasting time frame for short-term forecasting models?

- What are the currently accepted datasets for training wind power forecasting models using deep neural networks, and how are these datasets distributed for use?

- What are the typical processing times for current wind power forecasting models that utilize deep neural networks?

2.2. Conducting Phase

2.2.1. Strategy for Searching for Primary Studies

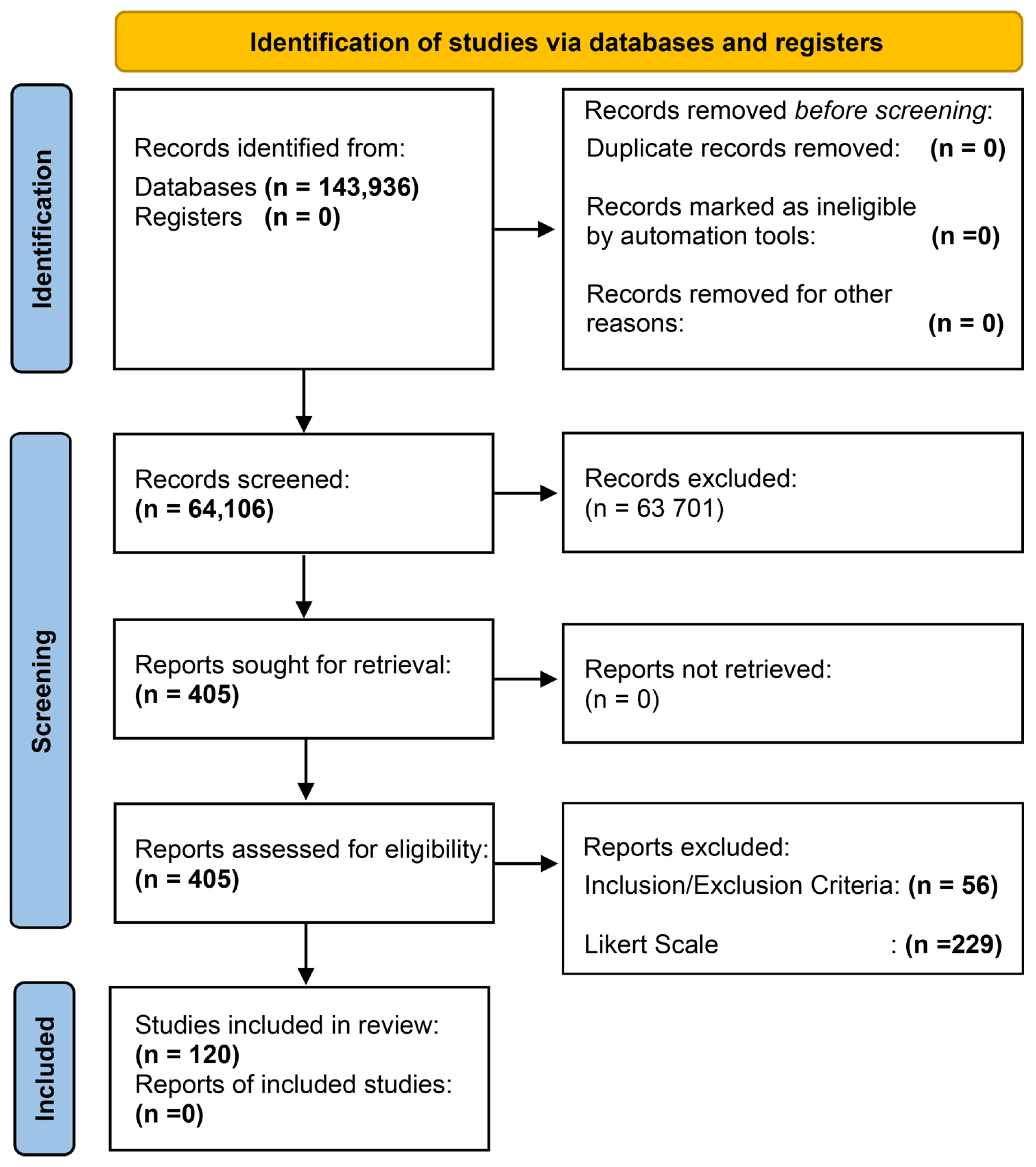

2.2.2. Procedure for Relevant Study Selection

- Step 1, Database Search: Execute each search string in the selected databases, as indicated in Table 1.

- Step 2, Filter by Date and Source Type: Limit the search results to publications from 2020 to 2024, including only journal articles and conference papers. The initial search results are summarized in Table 2.

- Step 3, Title-Based Selection: Select all studies whose titles satisfy the following keyword expression: (“Wind Speed” OR “Wind Power”) AND (“Forecasting” OR “Prediction”).

- Step 4, Abstract and Keyword Screening: If a title does not explicitly mention the terms from step 3, review the abstract, keywords, and conclusions for the presence of any of the following terms: deep learning, deep neural network, or DNN. Table 3 presents the specific database configurations applied during this first evaluation.

- Step 5, Second Evaluation (Inclusion/Exclusion Criteria): Apply the inclusion and exclusion criteria (detailed in Section 2.2.3) to filter the studies selected in the previous step.

- Step 6, Third Evaluation (Scientific Validity Assessment): Use a Likert scale to evaluate the scientific quality and validity of the remaining articles.

- Step 7, Data Extraction: Extract relevant information from the articles identified in step 6 to address the research questions of the study.
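The date, title, and abstract screening described above can be expressed as a small filter. The following Python sketch is illustrative only: the `Record` structure, the regular expressions, and the reading that a record is kept when either the title expression matches or the abstract mentions a deep learning term are assumptions made for this example, not the exact database configurations used in the review.

```python
import re
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical bibliographic record; field names are assumptions."""
    title: str
    abstract: str
    year: int
    source_type: str  # e.g. "journal" or "conference"


# Keyword groups from the title-based selection step (stem matching).
TOPIC = re.compile(r"wind\s+(speed|power)", re.IGNORECASE)
TASK = re.compile(r"forecast|predict", re.IGNORECASE)
# Terms checked in the abstract when the title does not match.
DEEP = re.compile(r"deep\s+learning|deep\s+neural\s+network|\bDNNs?\b",
                  re.IGNORECASE)


def passes_date_and_source(rec):
    # Publications from 2020 to 2024, journal articles and conference papers.
    return 2020 <= rec.year <= 2024 and rec.source_type in {"journal", "conference"}


def passes_title(rec):
    # (Wind Speed OR Wind Power) AND (Forecasting OR Prediction)
    return bool(TOPIC.search(rec.title)) and bool(TASK.search(rec.title))


def passes_abstract(rec):
    return bool(DEEP.search(rec.abstract))


def first_evaluation(records):
    """Keep records that pass the date/source filter and either screen."""
    return [
        rec for rec in records
        if passes_date_and_source(rec)
        and (passes_title(rec) or passes_abstract(rec))
    ]


if __name__ == "__main__":
    sample = [
        Record("Short-term wind power forecasting with LSTM", "", 2022, "journal"),
        Record("Solar irradiance estimation", "Classical regression.", 2021, "journal"),
    ]
    for rec in first_evaluation(sample):
        print(rec.title)
```

In practice, the later evaluations (inclusion and exclusion criteria, quality assessment) still require manual reading; a filter of this kind only narrows the candidate set.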

2.2.3. Inclusion and Exclusion Criteria

2.2.4. Quality Assessment

- The results are reliable.

- The results hold significant value.

- The study contributes new insights.

- The evaluation effectively addresses its original objectives and proposal.

- The theoretical contributions, perspectives, and values of the research are well-defined.

- The research explores a diverse range of perspectives and contexts.

- The research design is justifiable.

- The problem’s approach, formulation, and analysis are thoroughly executed.

- The design of the sample and selection of target classes are well-documented.

- The data collection process was conducted effectively.

- The criteria for evaluating the results are clearly established.

- The connections between data, interpretation, and conclusions are evident.

- The research scope allows for further investigation.

- The research process is well-documented.

- The reporting is clear and logically structured.
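To make the Likert-based assessment concrete, the sketch below aggregates ratings for the fifteen statements above into a normalized score per study. The 1 to 5 scale, the equal weighting, and the acceptance threshold of 0.6 are assumptions chosen for illustration; the review's actual scale and cut-off may differ.

```python
CRITERIA_COUNT = 15          # number of quality statements listed above
SCALE_MIN, SCALE_MAX = 1, 5  # assumed 5-point Likert scale


def quality_score(ratings):
    """Return a score in [0, 1] from one rating per quality statement."""
    if len(ratings) != CRITERIA_COUNT:
        raise ValueError(f"expected {CRITERIA_COUNT} ratings, got {len(ratings)}")
    if any(not (SCALE_MIN <= r <= SCALE_MAX) for r in ratings):
        raise ValueError("ratings must lie on the Likert scale")
    lowest = CRITERIA_COUNT * SCALE_MIN
    highest = CRITERIA_COUNT * SCALE_MAX
    return (sum(ratings) - lowest) / (highest - lowest)


def select_studies(rated, threshold=0.6):
    """Return the ids of studies whose score meets the (assumed) threshold."""
    return [study_id for study_id, ratings in rated.items()
            if quality_score(ratings) >= threshold]


if __name__ == "__main__":
    rated = {"study_A": [5] * 15, "study_B": [2] * 15}
    print(select_studies(rated))
```

Normalizing to the unit interval keeps the threshold independent of the number of statements, which helps if the checklist is later extended.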

2.3. Reporting Phase

- Preprocessing and Feature Extraction Techniques: Before applying forecasting models, it is crucial to preprocess the raw data and extract meaningful features. Signal decomposition methods are used in this phase to break down the complex time-series data into simpler components.

- DNN Models: These models learn patterns and relationships from historical data to predict future wind power generation, and they can be retrained as new data become available to improve forecasts over time.

- Optimization Algorithms: These methods tune the parameters and hyperparameters of the DNN model to improve its performance, speed up the training process, and achieve better convergence.
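The idea of breaking a series into simpler components can be illustrated with a deliberately simple stand-in. The sketch below splits a series into a smooth trend and a residual using a centered moving average; it is not VMD, EMD, or any of the decomposition methods covered by the review, and the window length and sample values are arbitrary assumptions.

```python
def moving_average(series, window):
    """Centered moving average; edges use only the samples available."""
    half = window // 2
    smoothed = []
    for i in range(len(series)):
        lo = max(0, i - half)
        hi = min(len(series), i + half + 1)
        smoothed.append(sum(series[lo:hi]) / (hi - lo))
    return smoothed


def decompose(series, window=5):
    """Split a series into a low-frequency trend and a residual component."""
    trend = moving_average(series, window)
    residual = [x - t for x, t in zip(series, trend)]
    return trend, residual


if __name__ == "__main__":
    # Hypothetical wind speed samples in m/s.
    wind = [5.1, 5.4, 6.0, 5.8, 6.3, 7.1, 6.9, 6.2, 5.9, 6.4]
    trend, residual = decompose(wind, window=3)
    # The components add back up to the original series.
    rebuilt = [t + r for t, r in zip(trend, residual)]
    print(all(abs(a - b) < 1e-9 for a, b in zip(wind, rebuilt)))
```

In a decomposition-based forecasting pipeline, each component would typically be forecast separately and the forecasts summed. Note that a centered window uses future samples, so in a forecasting setting the decomposition should be applied in a way that does not leak test-period information into training.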

2.3.1. Preprocessing and Feature Extraction Techniques

2.3.2. DNN-Based Models

2.3.3. Optimization Algorithms

2.3.4. Corpus vs. Narrative Scope

3. Discussion

3.1. RQ1: What Are the Current Architectures for Wind Power Forecasting Models That Utilize Deep Neural Networks, Feature Extraction Techniques, and Optimization Algorithms?

3.1.1. WPF Models Based on DNNs

3.1.2. Signal Decomposition Methods

3.1.3. Optimization Algorithms

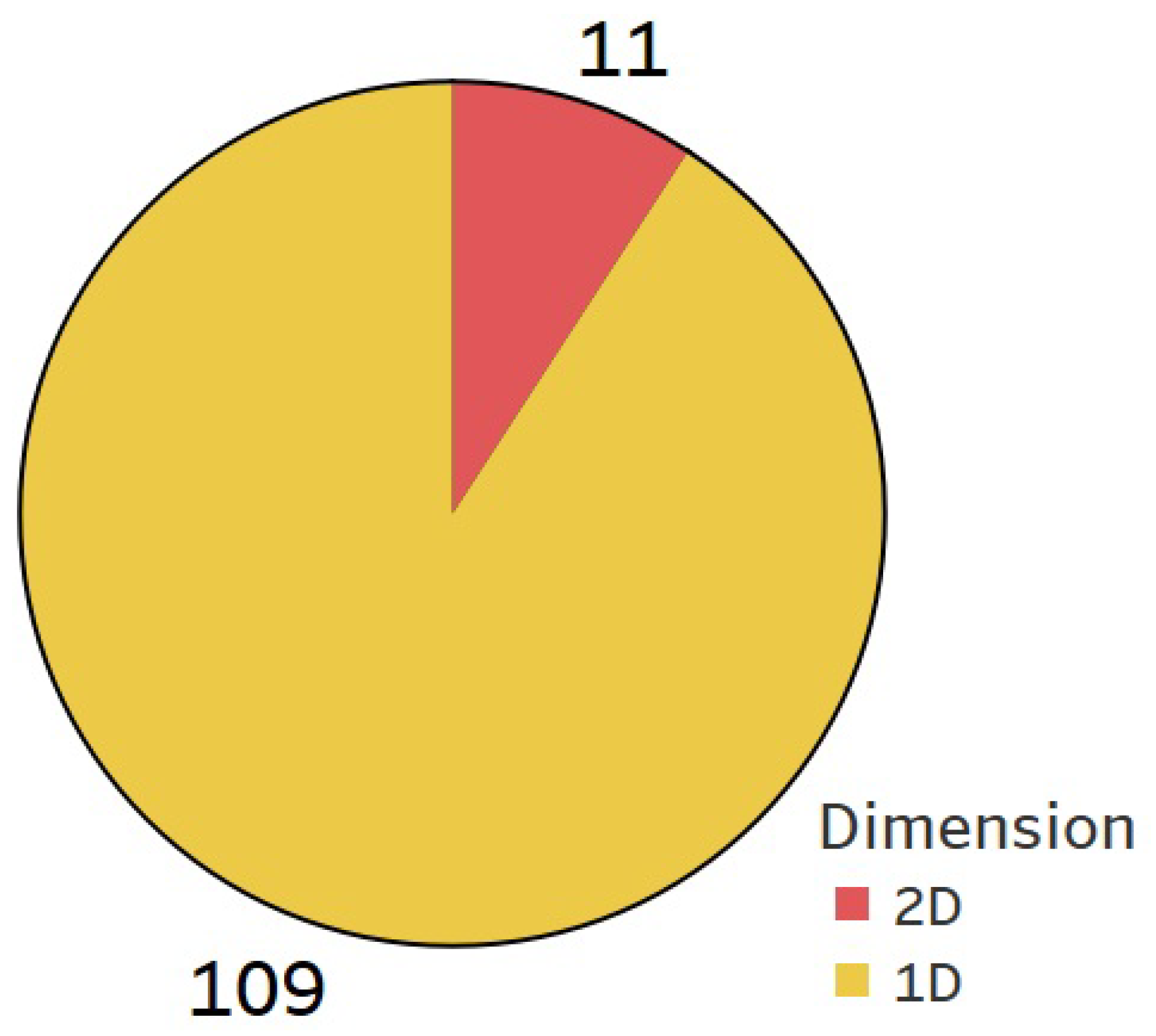

3.1.4. Dimensions

3.2. RQ2: What Are the Current Performance Metrics for Validating Models?

- Error Metrics (RMSE, MAE, MSE, MAPE, PINAW and others): These metrics assess the magnitude of prediction errors and help quantify how far the predicted values are from the actual values.

- Correlation-based Metrics (R², R and others): Unlike the previous group, these metrics focus on the relationship or correlation between actual and predicted values rather than on the error.

- Statistical Test and Model Comparison (DM, Theil’s U and others): These metrics and tests are used to compare different models and determine their relative performance.

- Coverage Metrics (PICP, CWC and others): These metrics assess how well the model’s prediction intervals capture the actual outcomes.

- Forecasting Accuracy Improvement Metrics (INAW, TIC and others): These metrics are used to assess improvements in forecasting accuracy and compare model performance.
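For reference, several of the metrics named above can be computed as in the following sketch, which uses their common textbook definitions. The function names and sample values are assumptions for illustration; individual studies may normalize MAPE or PINAW differently.

```python
import math


def mae(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mse(actual, predicted):
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    return math.sqrt(mse(actual, predicted))


def mape(actual, predicted):
    """Mean absolute percentage error in percent; assumes no zero actuals."""
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def picp(actual, lower, upper):
    """Fraction of actual values that fall inside the prediction interval."""
    hits = sum(1 for a, lo, hi in zip(actual, lower, upper) if lo <= a <= hi)
    return hits / len(actual)


def pinaw(actual, lower, upper):
    """Mean interval width normalized by the range of the actual values."""
    mean_width = sum(hi - lo for lo, hi in zip(lower, upper)) / len(actual)
    return mean_width / (max(actual) - min(actual))


if __name__ == "__main__":
    # Hypothetical wind speed values in m/s.
    y = [2.0, 4.0, 6.0, 8.0]
    y_hat = [2.5, 3.5, 6.5, 7.5]
    print(f"MAE={mae(y, y_hat):.3f} RMSE={rmse(y, y_hat):.3f} "
          f"MAPE={mape(y, y_hat):.2f}% R2={r_squared(y, y_hat):.3f}")
```

Coverage and width are usually reported together, since a very wide interval trivially achieves high PICP.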

3.3. RQ3: What Is the Typical Forecasting Time Frame for Short-Term Horizon Forecasting Models?

3.4. RQ4: What Are the Currently Accepted Datasets for Training Wind Power Forecasting Models Using Deep Neural Networks, and How Are These Datasets Distributed for Use?

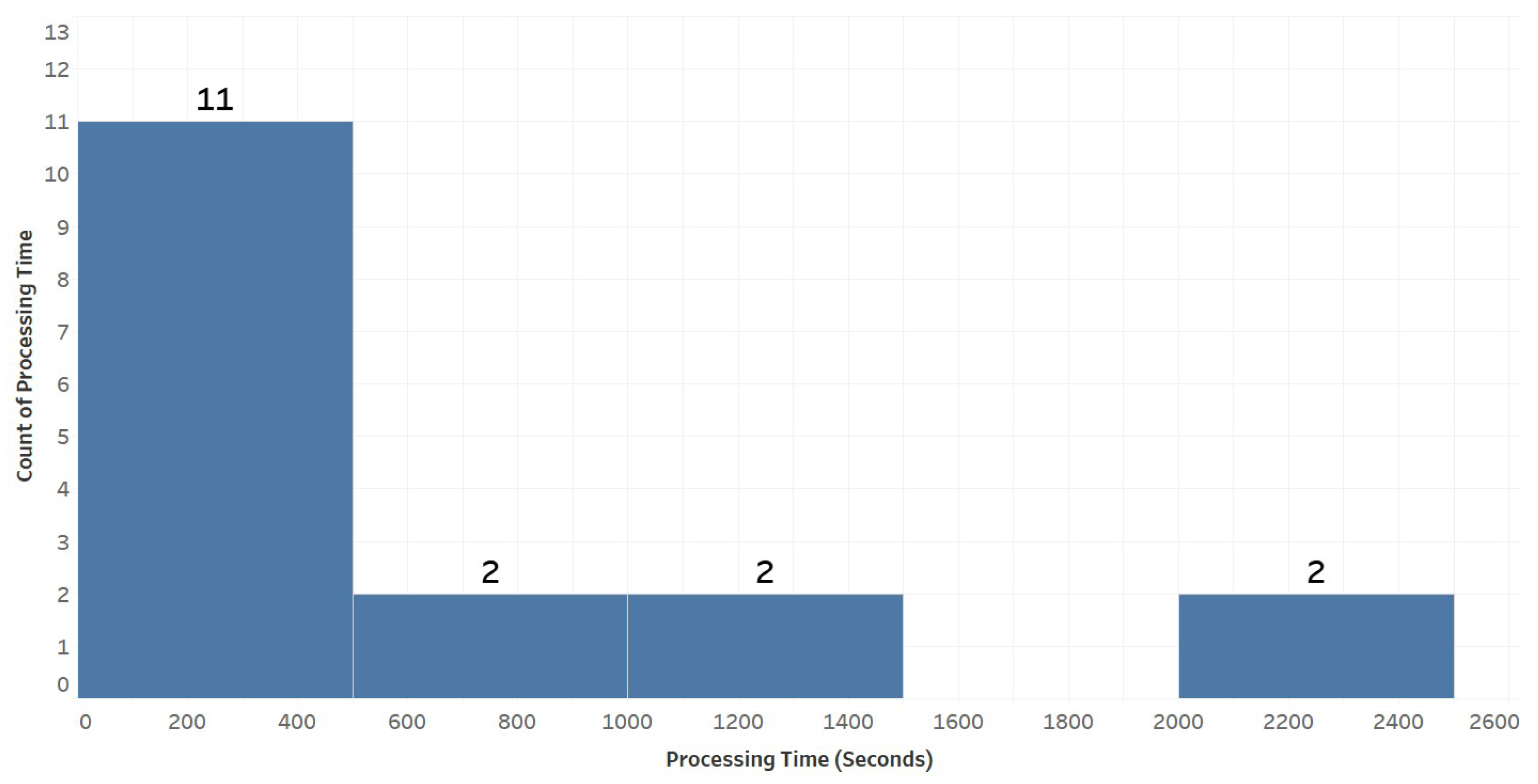

3.5. RQ5: What Are the Typical Processing Times for Current Wind Power Forecasting Models That Utilize Deep Neural Networks?

3.6. Additional Considerations

3.7. Shortages, Barriers, and Development Trends

3.7.1. Shortages

3.7.2. Barriers

3.7.3. Development Trends

4. Conclusions and Gaps

4.1. Conclusions

- To summarize the findings from RQ1: In WPF research, the predominant architectures combine DNNs, feature extraction methods, and optimization algorithms designed to improve predictive accuracy. Among these, LSTM networks are the most widely applied because of their ability to capture long-range temporal dependencies. Hybrid approaches that integrate LSTM with convolutional neural networks (CNNs) and other models are gaining momentum, as they leverage both temporal and spatial features. For feature extraction, VMD, particularly in hybrid settings, is often preferred due to its robustness in signal decomposition, while ICEEMDAN and SSA stand out for their effectiveness in noise reduction and feature extraction. Optimization algorithms, especially hybrid strategies built on GWO and PSO, reflect a clear trend toward combining multiple techniques to enhance model performance. One-dimensional time-series methods remain dominant because of their simplicity and effectiveness, although 2D models provide richer analysis in certain contexts. Taken together, these methodological advances highlight the complexity of wind power data and the ongoing need for refined approaches to improve forecasting precision.

- For RQ2: The validation of WPF models relies on a broad set of performance metrics, spanning error-based measures, statistical tests, coverage indicators, goodness-of-fit, and accuracy-improvement indices. RMSE and MAE are the most common, with MAPE and R² complementing relative error and overall fit; comparative tests such as the DM test and interval-coverage measures such as PICP and CWC are also used. Benchmark models typically include LSTM, GRU, and BiLSTM, with ARIMA as a classical reference, while feature-extraction and optimization methods (for example VMD–PSO) enhance accuracy. Yet, in the absence of standard benchmarking protocols and consistent validation metrics, cross-study comparability remains limited. To address this, we advocate for blocked temporal splits with explicit horizon definitions and a minimal, consistent metric set (at least MAE, RMSE, and MAPE), reported together with the test period and sample size to improve reproducibility and comparisons.

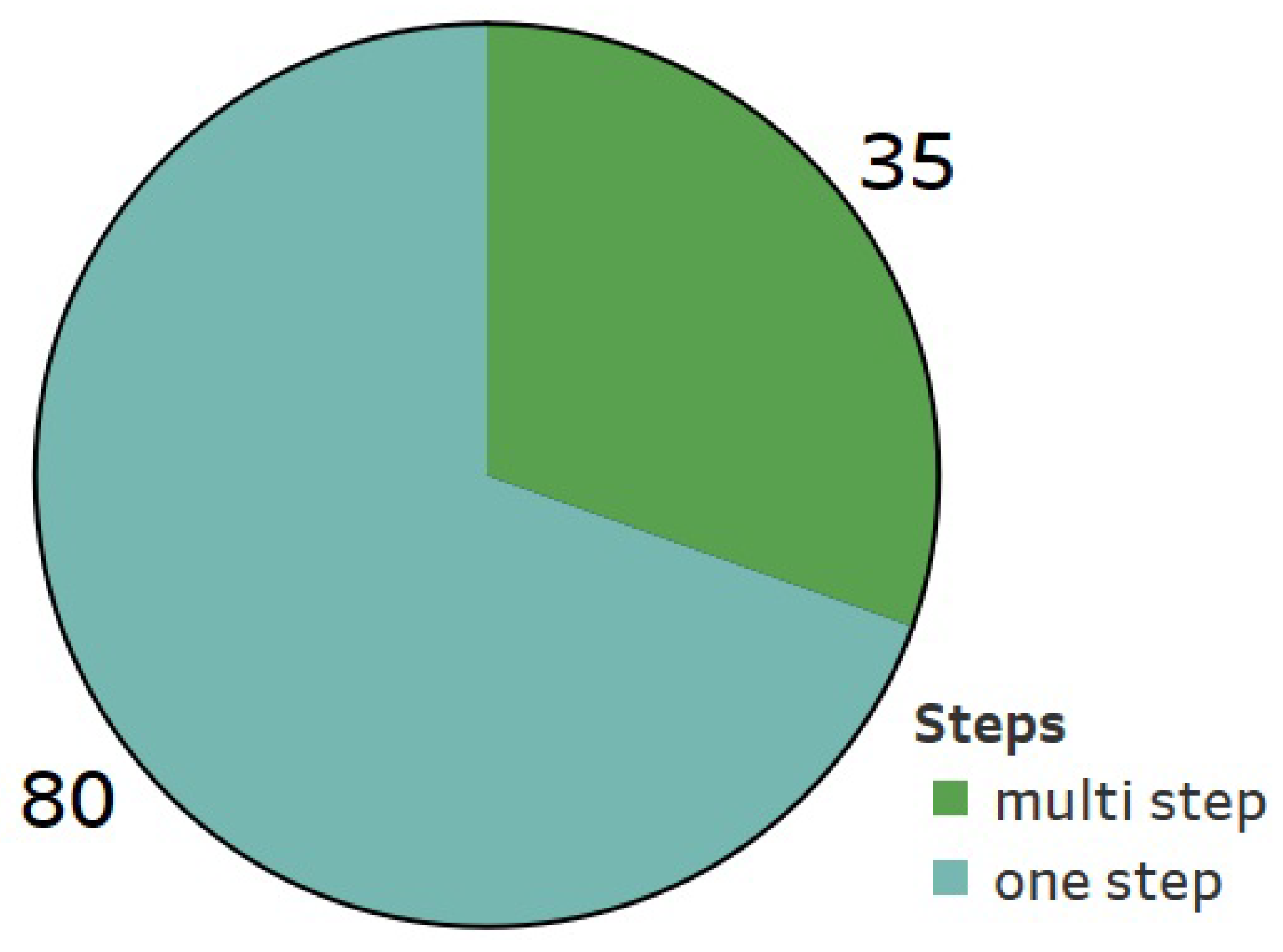

- Regarding RQ3: Forecasting horizons in WPF studies are predominantly short term, with the 10-min interval being the most frequently adopted due to its relevance for high-resolution, near-term prediction. Other common horizons include 15 min and 1 h. One-step-ahead forecasting dominates because of its simplicity and efficiency in generating immediate predictions, whereas multi-step models are used when capturing longer-term dynamics is required. This indicates a clear preference for short-term accuracy using one-step approaches, while still acknowledging the role of multi-step forecasting in more complex scenarios. The strong emphasis on short-term horizons reflects the operational need for timely, frequent, and precise forecasting in wind power management.

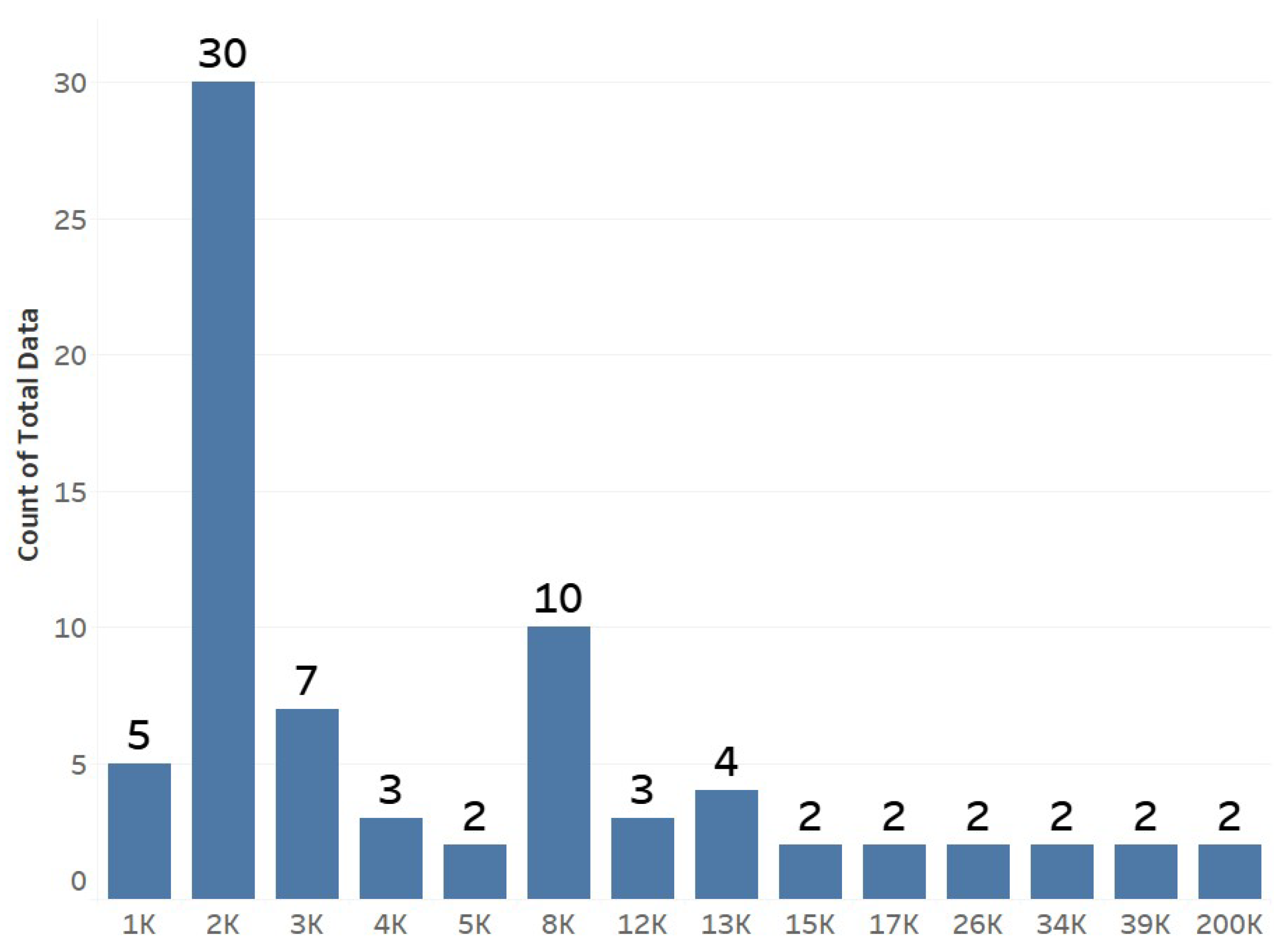

- For RQ4: Accepted datasets for training WPF models using DNNs are globally distributed, led by China and the USA, followed by India, Spain, and Brazil. Private, site-specific datasets still dominate, whereas public resources (e.g., Kaggle) remain limited and heterogeneous. Typical coverage is one month per season at a 10 min resolution, but dataset sizes vary widely (many studies use fewer than 15,000 samples), and common training–validation splits (80–20, 70–30, 90–10) are not standardized across works. This scarcity of standardized public datasets and the reliance on private data hinder reproducibility, independent verification, and fair cross-study comparison, underscoring the need for consistent protocols (fixed temporal splits, clearly defined horizons, and a minimal set of evaluation metrics) to enhance reliability and comparability.

- Finally, for RQ5: The processing times for current WPF models using DNNs vary significantly. The most commonly reported times range from 0 to 288 s, though this range may reflect approximations or limited timing data. Other notable processing durations include approximately 576 s and 2016 s, each reported in a few studies. This variation underscores the diverse computational requirements of different models and implementations. The limited reporting of processing times highlights a gap in the research, pointing to the need for more detailed data to better assess the computational efficiency of DNN-based WPF models.
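The blocked temporal split and the one-step versus multi-step horizons discussed in these conclusions can be sketched as follows. The function names, the 80–20 split, the look-back of six samples, and the synthetic 10 min series are assumptions for illustration, not a protocol prescribed by the reviewed studies.

```python
def blocked_split(series, train_fraction=0.8):
    """Chronological split: earliest samples for training, latest for testing."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


def make_windows(series, lookback, horizon):
    """Build (inputs, targets) pairs from one contiguous block.

    Each pair maps `lookback` past values to the next `horizon` values;
    horizon=1 is one-step-ahead, horizon>1 is multi-step forecasting.
    """
    samples = []
    for start in range(len(series) - lookback - horizon + 1):
        inputs = series[start:start + lookback]
        targets = series[start + lookback:start + lookback + horizon]
        samples.append((inputs, targets))
    return samples


if __name__ == "__main__":
    # One synthetic day at 10 min resolution (144 samples).
    series = [float(i) for i in range(144)]
    train, test = blocked_split(series, train_fraction=0.8)

    # Windows are built inside each block, so no test value reaches training.
    one_step = make_windows(train, lookback=6, horizon=1)    # 10 min ahead
    multi_step = make_windows(train, lookback=6, horizon=6)  # up to 1 h ahead
    print(len(train), len(test), len(one_step), len(multi_step))
```

Splitting the raw series before windowing, rather than shuffling windows, keeps the evaluation period strictly later than the training period, which is the property a blocked temporal split is meant to guarantee.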

4.2. Gaps

- Significant progress has been observed with the use of BiLSTM-based models, largely due to advancements in computer processing speeds. This has made BiLSTM an increasingly attractive option for WSP, potentially surpassing the previously popular LSTM and GRU models. It is important to note that these models do not operate in isolation; their performance must be evaluated in conjunction with signal decomposition techniques. Among these techniques, VMD has proven to be superior to all existing variations of ICEEMDAN, and the enhanced processing power of modern computers supports VMD’s integration (similar to BiLSTM). Nonetheless, the benefits of combining VMD with BiLSTM will only be realized if they are paired with optimization algorithms. The review highlights a range of optimization algorithms, including several new versions of GWO. Each of these should be individually assessed for compatibility with the desired model.

- Furthermore, the most commonly used models, feature extraction techniques, and optimization algorithms are typically hybrid in nature, involving a combination of methods and/or the integration of new approaches to enhance performance. Although these methods are regarded as the most promising, the optimal hybrid combinations remain uncertain, leaving room for the discovery of more effective configurations.

- Additionally, considerations regarding datasets are crucial. It is important to establish a data extraction protocol that determines sample sizes based on seasonal variations and wind speed variability to obtain reliable results. The dataset should be classified according to its size, resolution, and characteristics. This classification can help match the dataset with suitable models; in our case, we will focus on short-term horizon models due to their relevance in energy demand markets.

- Moreover, reference models can be established to compare performance metrics based on the results obtained. A more rigorous approach to quantitative results may be necessary for comparing the proposed models with the procedures outlined above. A thorough analysis of processing times is also required. Comparing clock cycles might be the most accurate method, but it is difficult in practice, and the continuous evolution of computer technology further complicates fair comparisons of processing times.

- There is a reporting gap regarding computational efficiency and model complexity. Most studies do not disclose hardware specifications, training/inference times, latency per forecast window, or model-size indicators (e.g., parameter count, memory footprint). This lack of standardized reporting limits reproducibility and practical assessment for real-time deployment. Future work should pair accuracy metrics with these efficiency descriptors to enable fair benchmarking.

- In the surveyed literature, the interpretability of deep models is rarely reported, which limits operator trust, auditing, and practical adoption. We suggest complementing accuracy with concise interpretability artefacts such as variable and time-window importance (via feature attribution or attention summaries) and at least one local explanation per forecast window, together with uncertainty or calibration estimates and transparent documentation of inputs and preprocessing. Minimal, consistent interpretability reporting would improve transparency, help detect spurious correlations, and better support deployment in real-time settings.

- Future directions include integrating physical knowledge with deep learning (e.g., coupling NWP or physics-based components with DNNs or adding physics-inspired constraints), exploring transfer learning and domain adaptation to efficiently adapt models to new sites with limited data, and improving uncertainty quantification by producing calibrated probabilistic forecasts and reporting calibration diagnostics alongside accuracy.

- This review does not include physics-only forecasting studies, as the scope focuses on DNN-based approaches. Nevertheless, integrating physical knowledge with deep learning (e.g., physics-informed losses or NWP–DNN hybrids) is a promising direction and is highlighted for future work.

- Although this review prioritizes short-term horizons, we highlight the need to evaluate long-term forecasting and to develop models that explicitly handle non-stationary, nonlinear wind patterns; both are flagged as directions for future work.
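As one possible way to report the efficiency descriptors called for above, the sketch below measures per-forecast latency and computes a parameter count for a single LSTM layer. The persistence forecaster is only a placeholder for a real model, the report fields are assumptions, and the parameter formula assumes one bias vector per gate (some frameworks use two, which adds a further 4 × hidden_size parameters).

```python
import platform
import statistics
import time


def lstm_parameter_count(input_size, hidden_size):
    """Trainable parameters of one LSTM layer with one bias vector per gate."""
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)


def measure_latency(predict, window, repeats=100):
    """Return (median, worst) wall-clock seconds per forecast call."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        predict(window)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings), max(timings)


def persistence_forecast(window):
    """Placeholder model: the next value equals the last observed value."""
    return window[-1]


if __name__ == "__main__":
    window = [5.1, 5.4, 5.0, 4.8, 5.3, 5.6]  # hypothetical input window
    median_s, worst_s = measure_latency(persistence_forecast, window)
    report = {
        "platform": platform.platform(),
        "median_latency_s": median_s,
        "worst_latency_s": worst_s,
        "lstm_params_input1_hidden64": lstm_parameter_count(1, 64),
    }
    print(report)
```

Reporting the median alongside the worst case, together with the hardware description, gives a more comparable picture than a single elapsed time.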

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACO | Ant Colony Optimization |
| AGO | Adaptive Greedy Optimization |
| ANFIS | Adaptive Neuro-Fuzzy Inference System |
| ANN | Artificial Neural Network |
| ARIMA | AutoRegressive Integrated Moving Average |
| ATT | Attention Mechanism |
| BO | Bayesian Optimization |
| BPNN | Backpropagation Neural Network |
| BiGRU | Bidirectional Gated Recurrent Unit |
| BiLSTM | Bidirectional Long Short-Term Memory |
| CEEMD | Complementary Ensemble Empirical Mode Decomposition |
| CEEMDAN | Complete Ensemble Empirical Mode Decomposition with Adaptive Noise |
| CNN | Convolutional Neural Network |
| COA | Coati Optimization Algorithm |
| CPSO | Chaotic Particle Swarm Optimization |
| CS | Cuckoo Search |
| CSO | Crisscross Optimization Algorithm |
| CWC | Coverage Width-based Criterion |
| ConvLSTM | Convolutional Long Short-Term Memory |
| DA | Dragonfly Algorithm |
| DBN | Deep Belief Network |
| DESSA | Differential Evolution Sparrow Search Algorithm |
| DL | Deep Learning |
| DM | Diebold–Mariano test statistic |
| DNN | Deep Neural Network |
| DNNs | Deep Neural Networks |
| DWT | Discrete Wavelet Transform |
| EEMD | Ensemble Empirical Mode Decomposition |
| ELM | Extreme Learning Machine |
| EMD | Empirical Mode Decomposition |
| EWT | Empirical Wavelet Transform |
| GA | Genetic Algorithm |
| GNN | Graph Neural Network |
| GRU | Gated Recurrent Unit |
| GWO | Grey Wolf Optimizer |
| HBO | Heap-Based Optimizer |
| HHO | Harris Hawks Optimization |
| HSV | Hue Saturation Value |
| ICEEMDAN | Improved Complete Ensemble Empirical Mode Decomposition with Adaptive Noise |
| ICHOA | Improved Chimp Optimization Algorithm |
| IGWO | Improved Grey Wolf Optimizer |
| INAW | Interval Normalized Average Width |
| IRSA | Improved Reptile Search Algorithm |
| ITSA | Improved Tunicate Swarm Algorithm |
| LSTM | Long Short-Term Memory |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| MHHOGWO | Mutation Harris Hawks Optimization and Grey Wolf Optimizer |
| MLP | Multilayer Perceptron |
| MMODA | Modified Multi-objective Dragonfly Algorithm |
| MMOTA | Modified Multi-objective Tunicate Swarm Algorithm |
| MOBBSA | Multi-objective Binary Backtracking Search Algorithm |
| MOCSA | Multi-objective Crisscross Optimization Algorithm |
| MOEGJO | Multi-objective Enhanced Golden Jackal Optimization |
| MOEMPA | Multi-objective Opposition Elite Marine Predator Optimization Algorithm |
| MOGWO | Multi-objective Grey Wolf Optimizer |
| MOMVO | Multi-objective Multi-Verse Optimizer |
| MOOFADA | Multi-objective Opposition-based Firefly Algorithm with Dragonfly Algorithm |
| MOSMA | Multi-objective Slime Mould Algorithm |
| MSE | Mean Squared Error |
| NTF | Non-stationary Transformer |
| PICP | Prediction Interval Coverage Probability |
| PINAW | Prediction Interval Normalized Average Width |
| PSO | Particle Swarm Optimization |
| R | Pearson’s Correlation Coefficient |
| RES | Renewable Energy Sources |
| RMSE | Root Mean Squared Error |
| RNN | Recurrent Neural Network |
| SARIMA | Seasonal AutoRegressive Integrated Moving Average |
| SGWO | Social Rank Updating Grey Wolf Optimizer |
| SSA | Singular Spectrum Analysis |
| STGN | Spatio-temporal Graph Networks |
| SWT | Stationary Wavelet Transform |
| TCN | Temporal Convolutional Network |
| TMGWO | Two-phase Mutation Grey Wolf Optimizer |
| TSA | Tunicate Swarm Algorithm |
| TVF-EMD | Time Variant Filter Empirical Mode Decomposition |
| VMD | Variational Mode Decomposition |
| WOA | Whale Optimization Algorithm |
| WPF | Wind Power Forecasting |
| WPP | Wind Power Prediction |
| WSF | Wind Speed Forecasting |
| WSP | Wind Speed Prediction |
| WT | Wavelet Transform |
| XAI | Explainable Artificial Intelligence |


- Nahid, F.A.; Ongsakul, W.; Manjiparambil, N.M. Short term multi-steps wind speed forecasting for carbon neutral microgrid by decomposition based hybrid model. Energy Sustain. Dev. 2023, 73, 87–100. [Google Scholar] [CrossRef]

- Wang, J.; Tang, X.; Jiang, W. A deterministic and probabilistic hybrid model for wind power forecasting based improved feature screening and optimal Gaussian mixed kernel function. Expert Syst. Appl. 2024, 251, 123965. [Google Scholar] [CrossRef]

- Li, Y.; Sun, K.; Yao, Q.; Wang, L. A dual-optimization wind speed forecasting model based on deep learning and improved dung beetle optimization algorithm. Energy 2023, 286, 129604. [Google Scholar] [CrossRef]

- Chen, C.; Li, S.; Wen, M.; Yu, Z. Ultra-short term wind power prediction based on quadratic variational mode decomposition and multi-model fusion of deep learning. Comput. Electr. Eng. 2024, 116, 109157. [Google Scholar] [CrossRef]

- Acikgoz, H.; Budak, U.; Korkmaz, D.; Yildiz, C. WSFNet: An efficient wind speed forecasting model using channel attention-based densely connected convolutional neural network. Energy 2021, 233, 121121. [Google Scholar] [CrossRef]

- Sareen, K.; Panigrahi, B.K.; Shikhola, T.; Chawla, A. A robust De-Noising Autoencoder imputation and VMD algorithm based deep learning technique for short-term wind speed prediction ensuring cyber resilience. Energy 2023, 283, 129080. [Google Scholar] [CrossRef]

- Jiang, W.; Liu, B.; Liang, Y.; Gao, H.; Lin, P.; Zhang, D.; Hu, G. Applicability analysis of transformer to wind speed forecasting by a novel deep learning framework with multiple atmospheric variables. Appl. Energy 2023, 353, 122155. [Google Scholar] [CrossRef]

- Xia, H.; Zheng, J.; Chen, Y.; Jia, H.; Gao, C. Short-term wind speed combined forecasting model based on multi-decomposition algorithms and frameworks. Electr. Power Syst. Res. 2023, 227, 109890. [Google Scholar] [CrossRef]

- de Mattos Neto, P.S.G.; de Oliveira, J.F.L.; Domingos, D.S.; Siqueira, H.V.; Marinho, M.H.N.; Madeiro, F. An adaptive hybrid system using deep learning for wind speed forecasting. Inf. Sci. 2021, 581, 495–514. [Google Scholar] [CrossRef]

- Ewees, A.A.; Al-qaness, M.A.A.; Abualigah, L.; Elaziz, M.A. HBO-LSTM: Optimized long short term memory with heap-based optimizer for wind power forecasting. Energy Convers. Manag. 2022, 268, 116022. [Google Scholar] [CrossRef]

- López, G.; Arboleya, P. Short-term wind speed forecasting over complex terrain using linear regression models and multivariable LSTM and NARX networks in the Andes Mountains, Ecuador. Renew. Energy 2021, 183, 351–368. [Google Scholar] [CrossRef]

- Chen, X.; Wang, Y.; Zhang, H.; Wang, J. A novel hybrid forecasting model with feature selection and deep learning for wind speed research. J. Forecast. 2024, 43, 1682–1705. [Google Scholar] [CrossRef]

- Peng, Z.; Peng, S.; Fu, L.; Lu, B.; Tang, J.; Wang, K.; Li, W. A novel deep learning ensemble model with data denoising for short-term wind speed forecasting. Energy Convers. Manag. 2020, 207, 112524. [Google Scholar] [CrossRef]

- Wu, J.; Li, N.; Zhao, Y.; Wang, J. Usage of correlation analysis and hypothesis test in optimizing the gated recurrent unit network for wind speed forecasting. Energy 2021, 242, 122960. [Google Scholar] [CrossRef]

- Neshat, M.; Nezhad, M.M.; Abbasnejad, E.; Mirjalili, S.; Tjernberg, L.B.; Garcia, D.A.; Alexander, B.; Wagner, M. A deep learning-based evolutionary model for short-term wind speed forecasting: A case study of the Lillgrund offshore wind farm. Energy Convers. Manag. 2021, 236, 114002. [Google Scholar] [CrossRef]

- Joseph, L.P.; Deo, R.C.; Prasad, R.; Salcedo-Sanz, S.; Raj, N.; Soar, J. Near real-time wind speed forecast model with bidirectional LSTM networks. Renew. Energy 2022, 204, 39–58. [Google Scholar] [CrossRef]

- Wang, J.; Qian, Y.; Zhang, L.; Wang, K.; Zhang, H. A novel wind power forecasting system integrating time series refining, nonlinear multi-objective optimized deep learning and linear error correction. Energy Convers. Manag. 2023, 299, 117818. [Google Scholar] [CrossRef]

- Zhang, Y.; Wang, H.; Wang, J.; Cheng, X.; Wang, T.; Zhao, Z. Ensemble optimization approach based on hybrid mode decomposition and intelligent technology for wind power prediction system. Energy 2024, 292, 130492. [Google Scholar] [CrossRef]

- Chen, H.; Birkelund, Y.; Zhang, Q. Data-augmented sequential deep learning for wind power forecasting. Energy Convers. Manag. 2021, 248, 114790. [Google Scholar] [CrossRef]

- Lv, S.X.; Wang, L. Deep learning combined wind speed forecasting with hybrid time series decomposition and multi-objective parameter optimization. Appl. Energy 2022, 311, 118674. [Google Scholar] [CrossRef]

- Ma, Z.; Mei, G. A hybrid attention-based deep learning approach for wind power prediction. Appl. Energy 1960, 323, 119608. [Google Scholar] [CrossRef]

- Joseph, L.P.; Deo, R.C.; Casillas-Pérez, D.; Prasad, R.; Raj, N.; Salcedo-Sanz, S. Short-term wind speed forecasting using an optimized three-phase convolutional neural network fused with bidirectional long short-term memory network model. Appl. Energy 2024, 359, 122624. [Google Scholar] [CrossRef]

- Liu, Z.F.; Liu, Y.Y.; Chen, X.R.; Zhang, S.R.; Luo, X.F.; Li, L.L.; Yang, Y.Z.; You, G.D. A novel deep learning-based evolutionary model with potential attention and memory decay-enhancement strategy for short-term wind power point-interval forecasting. Appl. Energy 2024, 360, 122785. [Google Scholar] [CrossRef]

- Chen, W.; Zhou, H.; Cheng, L.; Xia, M. Prediction of regional wind power generation using a multi-objective optimized deep learning model with temporal pattern attention. Energy 2023, 278, 127942. [Google Scholar] [CrossRef]

- Liu, Z.H.; Wang, C.T.; Wei, H.L.; Chen, L.; Li, X.H.; Lv, M.Y. An Adaptive Interval Construction Based GRU Model for Short-Term Wind Speed Interval Prediction Using Two Phase Search Strategy. IEEE Open J. Signal Process. 2023, 4, 375–389. [Google Scholar] [CrossRef]

- Zhang, C.; Qiao, X.; Zhang, Z.; Wang, Y.; Fu, Y.; Nazir, M.S.; Peng, T. Simultaneous forecasting of wind speed for multiple stations based on attribute-augmented spatiotemporal graph convolutional network and tree-structured parzen estimator. Energy 2024, 295, 131058. [Google Scholar] [CrossRef]

- Liu, L.; Liu, J.; Ye, Y.; Liu, H.; Chen, K.; Li, D.; Dong, X.; Sun, M. Ultra-short-term wind power forecasting based on deep Bayesian model with uncertainty. Renew. Energy 2023, 205, 598–607. [Google Scholar] [CrossRef]

- Zhong, L.; Wu, P.; Pei, M. Wind power generation prediction during the COVID-19 epidemic based on novel hybrid deep learning techniques. Renew. Energy 1986, 222, 119863. [Google Scholar] [CrossRef]

- Chen, X.; Yu, R.; Ullah, S.; Wu, D.; Li, Z.; Li, Q.; Qi, H.; Liu, J.; Liu, M.; Zhang, Y. A novel loss function of deep learning in wind speed forecasting. Energy 2021, 238, 121808. [Google Scholar] [CrossRef]

- Meka, R.; Alaeddini, A.; Bhaganagar, K. A robust deep learning framework for short-term wind power forecast of a full-scale wind farm using atmospheric variables. Energy 1975, 221, 119759. [Google Scholar] [CrossRef]

- Zhang, D.; Hu, G.; Song, J.; Gao, H.; Ren, H.; Chen, W. A novel spatio-temporal wind speed forecasting method based on the microscale meteorological model and a hybrid deep learning model. Energy 2023, 288, 129823. [Google Scholar] [CrossRef]

- Sun, S.; Liu, Y.; Li, Q.; Wang, T.; Chu, F. Short-term multi-step wind power forecasting based on spatio-temporal correlations and transformer neural networks. Energy Convers. Manag. 2023, 283, 116916. [Google Scholar] [CrossRef]

- Zheng, X.; Bai, F.; Zeng, Z.; Jin, T. A new methodology to improve wind power prediction accuracy considering power quality disturbance dimension reduction and elimination. Energy 2023, 287, 129638. [Google Scholar] [CrossRef]

- Bommidi, B.S.; Teeparthi, K.; Kosana, V. Hybrid wind speed forecasting using ICEEMDAN and transformer model with novel loss function. Energy 2022, 265, 126383. [Google Scholar] [CrossRef]

- Gao, Z.; Li, Z.; Xu, L.; Yu, J. Dynamic adaptive spatio-temporal graph neural network for multi-node offshore wind speed forecasting. Appl. Soft Comput. 2023, 141, 110294. [Google Scholar] [CrossRef]

- Yu, C.; Yan, G.; Yu, C.; Mi, X. Attention mechanism is useful in spatio-temporal wind speed prediction: Evidence from China. Appl. Soft Comput. 2023, 148, 110864. [Google Scholar] [CrossRef]

- Geng, X.; Xu, L.; He, X.; Yu, J. Graph optimization neural network with spatio-temporal correlation learning for multi-node offshore wind speed forecasting. Renew. Energy 2021, 180, 1014–1025. [Google Scholar] [CrossRef]

- Fu, W.; Wang, K.; Tan, J.; Zhang, K. A composite framework coupling multiple feature selection, compound prediction models and novel hybrid swarm optimizer-based synchronization optimization strategy for multi-step ahead short-term wind speed forecasting. Energy Convers. Manag. 2019, 205, 112461. [Google Scholar] [CrossRef]

- Yu, M.; Tao, B.; Li, X.; Liu, Z.; Xiong, W. Local and Long-range Convolutional LSTM Network: A novel multi-step wind speed prediction approach for modeling local and long-range spatial correlations based on ConvLSTM. Eng. Appl. Artif. Intell. 2023, 130, 107613. [Google Scholar] [CrossRef]

- Liu, G.; Wang, Y.; Qin, H.; Shen, K.; Liu, S.; Shen, Q.; Qu, Y.; Zhou, J. Probabilistic spatiotemporal forecasting of wind speed based on multi-network deep ensembles method. Renew. Energy 2023, 209, 231–247. [Google Scholar] [CrossRef]

- Chen, Y.; Wang, Y.; Dong, Z.; Su, J.; Han, Z.; Zhou, D.; Zhao, Y.; Bao, Y. 2-D regional short-term wind speed forecast based on CNN-LSTM deep learning model. Energy Convers. Manag. 2021, 244, 114451. [Google Scholar] [CrossRef]

- Nazemi, M.; Chowdhury, S.; Liang, X. A Novel Two-Dimensional Convolutional Neural Network-Based an Hour-Ahead Wind Speed Prediction Method. IEEE Access 2023, 11, 118878–118889. [Google Scholar] [CrossRef]

- Zhang, Z.; Yin, J. Spatial-temporal offshore wind speed characteristics prediction based on an improved purely 2D CNN approach in a large-scale perspective using reanalysis dataset. Energy Convers. Manag. 2024, 299, 117880. [Google Scholar] [CrossRef]

- Houran, M.A.; Bukhari, S.M.S.; Zafar, M.H.; Mansoor, M.; Chen, W. COA-CNN-LSTM: Coati optimization algorithm-based hybrid deep learning model for PV/wind power forecasting in smart grid applications. Appl. Energy 2023, 349, 121638. [Google Scholar] [CrossRef]

- Hanifi, S.; Cammarono, A.; Zare-Behtash, H. Advanced hyperparameter optimization of deep learning models for wind power prediction. Renew. Energy 1970, 221, 119700. [Google Scholar] [CrossRef]

- Liu, H.; Zhang, Z. A bilateral branch learning paradigm for short term wind power prediction with data of multiple sampling resolutions. J. Clean Prod. 2022, 380, 134977. [Google Scholar] [CrossRef]

- Yang, Y.; Lang, J.; Wu, J.; Zhang, Y.; Su, L.; Song, X. Wind speed forecasting with correlation network pruning and augmentation: A two-phase deep learning method. Renew. Energy 2022, 198, 267–282. [Google Scholar] [CrossRef]

- Yang, T.; Yang, Z.; Li, F.; Wang, H. A short-term wind power forecasting method based on multivariate signal decomposition and variable selection. Appl. Energy 2024, 360, 122759. [Google Scholar] [CrossRef]

- Wang, K.; Tang, X.Y.; Zhao, S. Robust multi-step wind speed forecasting based on a graph-based data reconstruction deep learning method. Expert Syst. Appl. 2023, 238, 121886. [Google Scholar] [CrossRef]

- Huo, J.; Xu, J.; Chang, C.; Li, C.; Qi, C.; Li, Y. Ultra-short-term wind power prediction model based on fixed scale dual mode decomposition and deep learning networks. Eng. Appl. Artif. Intell. 2024, 133, 108501. [Google Scholar] [CrossRef]

- Zou, R.; Song, M.; Wang, Y.; Wang, J.; Yang, K.; Affenzeller, M. Deep non-crossing probabilistic wind speed forecasting with multi-scale features. Energy Convers. Manag. 2022, 257, 115433. [Google Scholar] [CrossRef]

- Yang, Z.; Peng, X.; Song, J.; Duan, R.; Jiang, Y.; Liu, S. Short-Term Wind Power Prediction Based on Multi-Parameters Similarity Wind Process Matching and Weighed-Voting-Based Deep Learning Model Selection. IEEE Trans. Power Syst. 2023, 39, 2129–2142. [Google Scholar] [CrossRef]

- Zhu, J.; He, Y.; Yang, X.; Yang, S. Ultra-short-term wind power probabilistic forecasting based on an evolutionary non-crossing multi-output quantile regression deep neural network. Energy Convers. Manag. 2024, 301, 118062. [Google Scholar] [CrossRef]

- Guan, S.; Wang, Y.; Liu, L.; Gao, J.; Xu, Z.; Kan, S. Ultra-short-term wind power prediction method combining financial technology feature engineering and XGBoost algorithm. Heliyon 2023, 9, e16938. [Google Scholar] [CrossRef]

- Wang, J.; Guo, H.; Li, Z.; Song, A.; Niu, X. Quantile deep learning model and multi-objective opposition elite marine predator optimization algorithm for wind speed prediction. Appl. Math. Model. 2022, 115, 56–79. [Google Scholar] [CrossRef]

- Chen, F.; Yan, J.; Liu, Y.; Yan, Y.; Tjernberg, L.B. A novel meta-learning approach for few-shot short-term wind power forecasting. Appl. Energy 2024, 362, 122838. [Google Scholar] [CrossRef]

- Meng, A.; Zhang, H.; Yin, H.; Xian, Z.; Chen, S.; Zhu, Z.; Zhang, Z.; Rong, J.; Li, C.; Wang, C.; et al. A novel multi-gradient evolutionary deep learning approach for few-shot wind power prediction using time-series GAN. Energy 2023, 283, 129139. [Google Scholar] [CrossRef]

- Wei, H.; Chen, Y.; Yu, M.; Ban, G.; Xiong, Z.; Su, J.; Zhuo, Y.; Hu, J. Alleviating distribution shift and mining hidden temporal variations for ultra-short-term wind power forecasting. Energy 2024, 290, 130077. [Google Scholar] [CrossRef]

- Du, P.; Yang, D.; Li, Y.; Wang, J. An innovative interpretable combined learning model for wind speed forecasting. Appl. Energy 2024, 358, 122553. [Google Scholar] [CrossRef]

- Dai, X.; Liu, G.P.; Hu, W. An online-learning-enabled self-attention-based model for ultra-short-term wind power forecasting. Energy 2023, 272, 127173. [Google Scholar] [CrossRef]

- Fan, W.; Fu, Y.; Zheng, S.; Bian, J.; Zhou, Y.; Xiong, H. Dewp: Deep expansion learning for wind power forecasting. ACM Trans. Knowl. Discov. Data 2024, 18, 1–21. [Google Scholar] [CrossRef]

- Baggio, R.; Muzy, J.F. Improving probabilistic wind speed forecasting using M-Rice distribution and spatial data integration. Appl. Energy 2024, 360, 122840. [Google Scholar] [CrossRef]

- Huang, X.; Wang, C.; Zhang, S. Research and application of a Model selection forecasting system for wind speed and theoretical power generation in wind farms based on classification and wind conversion. Energy 2024, 293, 130606. [Google Scholar] [CrossRef]

- Santos, V.O.; Rocha, P.A.C.; Scott, J.; Thé, J.V.G.; Gharabaghi, B. Spatiotemporal analysis of bidimensional wind speed forecasting: Development and thorough assessment of LSTM and ensemble graph neural networks on the Dutch database. Energy 2023, 278, 127852. [Google Scholar] [CrossRef]

- Hu, Y.; Liu, H.; Wu, S.; Zhao, Y.; Wang, Z.; Liu, X. Temporal collaborative attention for wind power forecasting. Appl. Energy 2024, 357, 122502. [Google Scholar] [CrossRef]

- Qin, J.; Yang, J.; Chen, Y.; Ye, Q.; Li, H. Two-stage short-term wind power forecasting algorithm using different feature-learning models. Fundam. Res. 2021, 1, 472–481. [Google Scholar] [CrossRef]

- Tang, Y.; Yang, K.; Zhang, S.; Zhang, Z. Wind power forecasting: A hybrid forecasting model and multi-task learning-based framework. Energy 2023, 278, 127864. [Google Scholar] [CrossRef]

| Database | Search String |
|---|---|
| ACM Digital Library | wind AND (power OR speed) AND (forecasting OR prediction) AND (deep neural network OR DNN OR deep learning) |
| IEEE Xplore | ((wind AND (power OR speed) AND (forecasting OR prediction) AND (deep neural network OR DNN OR deep learning)) |
| ScienceDirect | wind AND (power OR speed) AND (forecasting OR prediction) AND (deep neural network OR DNN OR deep learning) |
| Springer Link | wind AND power AND speed AND forecasting AND prediction AND (deep OR neural OR network OR learning OR DNN) |
| Wiley Online Library | wind AND (power OR speed) AND (forecasting OR prediction) AND (deep neural network OR DNN OR deep learning) |

| Database | Initial Results | Constrained (2020–2024) |
|---|---|---|
| ACM Digital Library | 61,157 | 23,442 |
| IEEE Xplore | 1003 | 821 |
| ScienceDirect | 25,850 | 16,419 |
| Springer Link | 22,297 | 10,376 |
| Wiley Online Library | 33,629 | 13,048 |
| TOTAL | 143,936 | 64,106 |

| Database | Search String |
|---|---|
| ACM Digital Library | [All: wind] AND [[All: power] OR [All: speed]] AND [[All: forecasting] OR [All: prediction]] AND [[All: deep neural network] OR [All: dnn] OR [All: deep learning]] AND [[Title: “wind speed”] OR [Title: “wind power”]] AND [[Title: forecasting] OR [Title: forecast] OR [Title: prediction]] AND [E-Publication Date: (1 January 2020 TO 31 December 2024)] |
| IEEE Xplore | (((wind AND (power OR speed) AND (forecasting OR prediction) AND (deep neural network OR DNN OR deep learning)) AND ((“Document Title”:“Wind Speed” OR “Document Title”:“Wind Power”) AND (“Document Title”:“Forecasting” OR “Document Title”:“prediction”))) AND (“Abstract”:“deep learning” OR “Abstract”:“deep neural network” OR “Abstract”:“DNN”)) |
| ScienceDirect | wind AND (power OR speed) AND (forecasting OR prediction) AND (deep neural network OR DNN OR deep learning) AND [Title, abstract or keywords: (“deep learning” OR “deep neural network” OR DNN)] AND [Title: (“Wind Speed” OR “Wind Power”) AND (Forecasting OR forecast OR prediction)] |
| Springer Link | “wind AND power AND speed AND forecasting AND prediction AND (deep OR neural OR network OR learning OR DNN)” within 2020–2024 |
| Wiley Online Library | “wind AND (power OR speed) AND (forecasting OR prediction) AND (deep neural network OR DNN OR deep learning)” anywhere and “(“Wind Speed” OR “Wind Power”) AND (Forecasting OR forecast OR prediction)” in Title and “deep learning” OR “deep neural network” OR “DNN” in Keywords |

| Category | Description | Weight |
|---|---|---|
| a. Strongly Disagree | Clearly lacking | −1.00 |
| b. Disagree | Weakly addressed | −0.50 |
| c. Neither Agree nor Disagree | Moderately addressed or unclear | 0.25 |
| d. Agree | Adequately addressed | 0.50 |
| e. Strongly Agree | Thoroughly addressed | 1.00 |

| Database | First Phase | Second Phase | Third Phase |
|---|---|---|---|
| ACM | 9 | 5 | 1 |
| IEEE Xplore | 130 | 116 | 4 |
| ScienceDirect | 231 | 207 | 114 |
| Springer Link | 26 | 13 | 0 |
| Wiley | 9 | 8 | 1 |
| TOTAL | 405 | 349 | 120 |
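
The phase counts above imply a retention rate at each screening step. As a minimal sketch of that arithmetic (the dictionary layout and the `retention` helper are illustrative assumptions, not part of the review protocol):

```python
# Study counts per database after each selection phase, copied from the table above.
phase_counts = {
    "ACM": (9, 5, 1),
    "IEEE Xplore": (130, 116, 4),
    "ScienceDirect": (231, 207, 114),
    "Springer Link": (26, 13, 0),
    "Wiley": (9, 8, 1),
}

# Column totals: one total per phase (first, second, third).
totals = [sum(column) for column in zip(*phase_counts.values())]


def retention(before, after):
    """Percentage of studies kept when moving from one count to the next."""
    return 100.0 * after / before


second_vs_first = retention(totals[0], totals[1])
third_vs_second = retention(totals[1], totals[2])
overall = retention(totals[0], totals[2])
# Share of the final study set that comes from ScienceDirect.
sciencedirect_share = retention(totals[2], phase_counts["ScienceDirect"][2])

print(totals)
print(f"{second_vs_first:.1f}% {third_vs_second:.1f}% {overall:.1f}%")
print(f"ScienceDirect share of final set: {sciencedirect_share:.1f}%")
```

Recomputing the column totals this way also serves as a consistency check on the TOTAL row.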

| Model (Single Family) | Studies |
|---|---|
| LSTM | 10 |
| BiLSTM | 10 |
| Other Variants of LSTM | 2 |
| GRU | 5 |
| BiGRU | 2 |
| Other Variants of GRU | 7 |
| Transformer | 1 |
| GNN (2D) | 1 |
| CNN (2D) | 6 |

| Architecture (Two or More Families/Blocks) | Studies |
|---|---|
| BiLSTM-BiGRU | 1 |
| TCN-LSTM | 4 |
| ATT-GRU | 2 |
| ATT-BiGRU | 1 |
| ATT-LSTM | 1 |
| ATT-BiLSTM | 3 |
| GNN-LSTM | 1 |
| GNN-GRU | 1 |
| GNN-BiGRU | 1 |
| GNN-Transformer | 1 |
| CNN-GRU | 1 |
| CNN-LSTM | 3 |
| CNN-BiLSTM | 5 |
| CNN-BiLSTM-ATT | 2 |
| CNN-BiGRU-TCN | 2 |
| Hybrid LSTM/BiLSTM | 6 |
| Hybrid ConvLSTM | 4 |
| Other Hybrid LSTM | 6 |
| Other Hybrid GRU | 2 |
| Other Hybrid BiLSTM | 2 |
| Other Hybrid Transformer | 1 |
| Other Hybrid GNN (2D) | 3 |
| Other Hybrid CNN (2D) | 6 |

| Hybrid Family | Total Occurrences |
|---|---|
| Total Hybrid LSTM | 26 |
| Total Hybrid GRU | 6 |
| Total Hybrid BiLSTM | 19 |
| Total Hybrid BiGRU | 6 |
| Total Hybrid Transformer | 2 |
| Total Hybrid GNN | 5 |
| Total Hybrid CNN | 18 |

| Signal Decomposition Method | Studies |
|---|---|
| Hybrid VMD | 10 |
| VMD | 9 |
| CEEMDAN | 6 |
| ICEEMDAN | 5 |
| SSA | 5 |
| EEMD | 5 |
| EWT | 4 |
| WT | 3 |
| EMD | 3 |
| CEEMD | 3 |
| DWT | 2 |
| ED | 2 |
| SWT | 1 |

| Optimization Algorithm | Studies |
|---|---|
| GWO and enhancements | 8 |
| PSO and enhancements | 6 |
| GA variants | 4 |
| BO | 3 |
| Other types of terrestrial swarm algorithms | 3 |
| Hybrid and swarm algorithms | 3 |
| DA variants | 2 |
| Other carnivore-inspired swarm algorithms | 2 |
| ACO, CD, CSO, MOCSA, AGO, MOMVO, MOEMPA, WOA | 1 each |

| Abbrev. | Performance Criterion | Studies |
|---|---|---|
| RMSE | Root-Mean-Squared Error | 99 |
| MAE | Mean Absolute Error | 96 |
| MAPE | Mean Absolute Percentage Error | 66 |
| R² | Coefficient of Determination | 46 |
| MSE | Mean Squared Error | 29 |
| PICP | Prediction Interval Coverage Probability | 13 |
| PINAW | Prediction Interval Normalized Average Width | 11 |
| DM | Diebold–Mariano test statistic | 11 |
| R | Pearson’s Correlation Coefficient | 10 |
| CWC | Coverage Width-based Criterion | 10 |
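
To make the most frequently reported criteria concrete, the following is a minimal sketch of how the point-forecast metrics (MSE, RMSE, MAE, MAPE, R²) and the interval metrics (PICP, PINAW, CWC) are commonly computed. Several choices here are assumptions of this sketch rather than definitions taken from the reviewed studies: zero observations are skipped in MAPE, PINAW is normalized by the range of the observed series, and CWC uses one common exponential-penalty form with an assumed nominal coverage `nominal` and penalty strength `eta`. The function names and sample values are hypothetical.

```python
import math


def point_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE, MAPE (%) and R^2 for two equal-length lists."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined where the observation is zero (e.g. calm periods);
    # skipping those samples is an assumption of this sketch.
    pct = [abs(e / t) for e, t in zip(errors, y_true) if t != 0]
    mape = 100.0 * sum(pct) / len(pct) if pct else float("nan")
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - (mse * n) / ss_tot if ss_tot > 0 else float("nan")
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "MAPE": mape, "R2": r2}


def interval_metrics(y_true, lower, upper, nominal=0.90, eta=50.0):
    """Return PICP, PINAW and CWC for prediction intervals [lower, upper]."""
    n = len(y_true)
    covered = sum(1 for t, lo, up in zip(y_true, lower, upper) if lo <= t <= up)
    picp = covered / n
    value_range = max(y_true) - min(y_true)
    if value_range == 0:
        raise ValueError("PINAW needs a non-constant observed series")
    pinaw = sum(up - lo for lo, up in zip(lower, upper)) / (n * value_range)
    # Assumed CWC form: the width is penalized only when coverage is below nominal.
    penalty = math.exp(-eta * (picp - nominal)) if picp < nominal else 0.0
    return {"PICP": picp, "PINAW": pinaw, "CWC": pinaw * (1.0 + penalty)}


# Hypothetical wind speed values (m/s), for illustration only.
observed = [5.0, 6.0, 7.0, 8.0]
forecast = [5.5, 5.5, 7.5, 7.5]
scores = point_metrics(observed, forecast)
bands = interval_metrics(observed, [4.5, 5.0, 7.2, 7.0], [5.5, 6.5, 8.0, 8.5])
print(scores)
print(bands)
```

The Diebold–Mariano statistic and Pearson's R are left out of the sketch because they compare forecasts or series rather than score a single forecast against observations.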

| Country | Studies |
|---|---|
| China | 57 |
| USA | 21 |
| Spain | 8 |
| India | 7 |
| France, Brazil | 4 each |
| Canada | 3 |
| Greece, Scotland, Norway, Australia, Netherlands, Germany, Fiji, Sweden | 2 each |

| Dataset Division (%) | Studies |
|---|---|
| 80–20% | 23 |
| 70–30% | 11 |
| 90–10% | 10 |
| 80–10–10% | 6 |
| 70–15–15% | 5 |
| 67–33% | 5 |
| 60–20–20% | 4 |
| 75–25% | 4 |
| 70–20–10% | 2 |
| 92–8% | 2 |
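
The divisions above can be applied to a time series without shuffling, so that later samples never leak into earlier partitions. The sketch below assumes that two-part ratios mean training/test and three-part ratios mean training/validation/test, and that the split is chronological; the table itself does not state either point. The `chronological_split` helper and the placeholder series are hypothetical.

```python
def chronological_split(series, percentages):
    """Split a sequence into consecutive blocks sized by integer percentages."""
    if sum(percentages) != 100:
        raise ValueError("percentages must sum to 100")
    n = len(series)
    parts, start, cumulative = [], 0, 0
    for pct in percentages:
        cumulative += pct
        end = n * cumulative // 100  # integer arithmetic avoids float rounding
        parts.append(series[start:end])
        start = end
    return parts


# Placeholder values standing in for a measured wind speed series.
hourly_wind_speed = list(range(1000))

train, test = chronological_split(hourly_wind_speed, (80, 20))
train3, val3, test3 = chronological_split(hourly_wind_speed, (80, 10, 10))
print(len(train), len(test))
print(len(train3), len(val3), len(test3))
```

Because the blocks are consecutive, the last training sample always precedes the first validation or test sample.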

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Manzano, E.A.; Nogales, R.E.; Rios, A. A Systematic Review of Wind Energy Forecasting Models Based on Deep Neural Networks. Wind 2025, 5, 29. https://doi.org/10.3390/wind5040029
