Abstract

We derive a weakly informative prior for a set of ordered resonance frequencies from Jaynes’ principle of maximum entropy. The prior facilitates model selection in problems where both the number and the values of the resonance frequencies are unknown. It encodes a weak inductive bias, provides a reasonable density everywhere, is easily parametrizable, and is easy to sample from. We hope that this prior can enable the use of robust evidence-based methods for a new class of problems, even in the presence of multiplets of arbitrary order.

1. Introduction

An important problem in the natural sciences is the accurate measurement of resonance frequencies. The problem can be formalized by the following probabilistic model:

where D is the data, are the K resonance frequencies of interest, and I is the prior information about . As an example instance of (1), we refer to the vocal tract resonance (VTR) problem discussed in Section 5 for which D is audio recorded from the mouth of a speaker; are a set of K VTR frequencies, and the underlying model is a sinusoidal regression model. Any realistic problem will include additional model parameters , but these have been silently ignored by formally integrating them out of (1), i.e., .

In this paper, we assume that the likelihood is given, and our task is to choose an uninformative prior from limited prior information I. A conflict arises, however:

The goal of this paper is to describe this conflict (2) and to show how it can be resolved by adopting a specific choice for . This allows robust inference of the number of resonances K in the important case of such limited prior information I, which in turn enables accurate measurement of the resonance frequencies with standard methods such as nested sampling [1] or reversible jump MCMC [2].

2. Notation

The symbol is intended to convey a vague notion of a generally uninformative or weakly informative prior. Definite choices for are indicated with the subscript i:

where is a placeholder for the hyperparameter specific to . Note that in the plots below and for the experiments in Section 5, the values of the are always set according to Table 1.

Table 1.

The values of the hyperparameters used throughout the paper. All quantities are given in units of Hz.

Each uniquely determines a number of important high-level quantities since the likelihood and data D are assumed to be given. These quantities are the evidence for the model with K resonances:

the posterior:

and the information:

which measures the amount of information obtained by updating from prior to posterior , i.e., , where is the Kullback–Leibler divergence.
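To make these three quantities concrete, the following minimal sketch computes them on a one-dimensional grid. Everything in it is an illustrative assumption rather than part of the paper's method: the toy uniform prior, the Gaussian likelihood, and the function names `trapezoid` and `evidence_posterior_information` are hypothetical.

```python
import numpy as np

def trapezoid(y, x):
    """Trapezoidal rule on a (possibly non-uniform) grid."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

def evidence_posterior_information(x, prior, likelihood):
    """Return evidence Z, posterior density, and information (KL divergence, in nats)."""
    z = trapezoid(prior * likelihood, x)          # evidence: integral of likelihood times prior
    posterior = prior * likelihood / z            # Bayes' rule
    integrand = np.zeros_like(posterior)
    mask = posterior > 0                          # treat 0 * log(0) as 0
    integrand[mask] = posterior[mask] * np.log(posterior[mask] / prior[mask])
    return z, posterior, trapezoid(integrand, x)  # KL(posterior || prior)

# Toy problem (assumed): uniform prior on [0, 10], Gaussian likelihood around 4.
x = np.linspace(0.0, 10.0, 20001)
prior = np.full_like(x, 0.1)
likelihood = np.exp(-0.5 * ((x - 4.0) / 0.5) ** 2)
z, posterior, information = evidence_posterior_information(x, prior, likelihood)
```

In realistic problems these integrals are high-dimensional, which is why a dedicated method such as nested sampling is used instead of a grid.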

3. Conflict

First, the uninformative priors referenced in (2) are of the independent and identically distributed type:

where is any wide distribution with hyperparameters . A typical choice for g is the uniform distribution over the full frequency bandwidth; other examples include diffuse Gaussians or Jeffreys priors [3,4,5,6,7,8,9].

Second, the limited prior information I in (2) about K implies that the problem will involve model selection, since each value of K implicitly corresponds to a different model for the data. It is, thus, necessary to evaluate and compare evidence for each plausible K.

The conflict between these two elements is due to the label switching problem, which is a well-known issue in mixture modeling, e.g., [10]. The likelihood functions used in models parametrized by resonance frequencies are typically invariant to switching the label k; i.e., the index k of the frequency has no distinguishable meaning in the model underlying the data. The posterior will inherit this exchange symmetry if the prior is of type (7). Thus, if the model parameters are well determined by the data D, the posterior landscape will consist of one primary mode, which is defined as a mode living in the ordered region:

and induced modes, which are identical to the primary mode up to a permutation of the labels k and, thus, live outside of the region . The trouble is that correctly taking into account these induced modes during the evaluation of requires a surprising amount of extra work in addition to tuning the MCMC method of choice, and that is the label switching problem in our setting. In fact, there is currently no widely accepted solution for the label switching problem in the context of mixture models either [11,12]. This is, then, how in (2) uninformative priors are “precluded” by the limited information I: the latter implies model selection, which in turn implies evaluating , which is hampered by the label switching problem due to the exchange symmetry of the former. Therefore, it seems better to try to avoid it by encoding our preference for primary modes directly into the prior. This results in abandoning the uninformative prior in favor of the weakly informative prior , which is proposed in Section 4 as a solution to the conflict.
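The exchange symmetry behind the label switching problem can be checked numerically. The sketch below is a toy under stated assumptions: it uses unit-amplitude cosines and a Gaussian noise model (both hypothetical simplifications, since the paper marginalizes the amplitudes), and shows that every permutation of the frequency labels yields the same log-likelihood, so a well-determined posterior has K! equivalent modes.

```python
import itertools
import math
import numpy as np

def sum_of_sinusoids(t, freqs_hz):
    """Toy model function: one unit-amplitude cosine per frequency (assumed form)."""
    return sum(np.cos(2.0 * np.pi * f * t) for f in freqs_hz)

def log_likelihood(freqs_hz, t, data, sigma=0.1):
    """Gaussian log-likelihood up to an additive constant."""
    residual = data - sum_of_sinusoids(t, freqs_hz)
    return float(-0.5 * np.sum((residual / sigma) ** 2))

t = np.arange(200) / 8000.0                 # 200 samples at an assumed 8 kHz rate
true_freqs = (500.0, 1500.0, 2500.0)        # hypothetical resonance frequencies in Hz
data = sum_of_sinusoids(t, true_freqs)

# Evaluate the likelihood at every relabeling of the same three frequencies.
values = [log_likelihood(p, t, data) for p in itertools.permutations(true_freqs)]
n_modes = math.factorial(len(true_freqs))   # one primary mode plus K! - 1 induced modes
```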

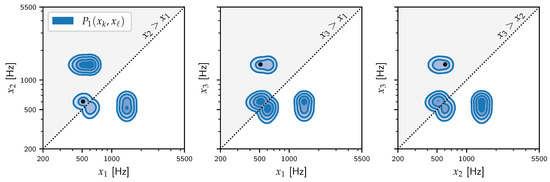

We use the VTR problem to briefly illustrate the label switching problem in Figure 1. The likelihood is described implicitly in Section 5 and is invariant to switching the labels k because the underlying model function (23) of the regression model is essentially a sum of sinusoids, one for each . As frequencies can be profitably thought of as scale variables ([13], Appendix A), the uninformative prior (7) is represented by

where are a common lower and upper bound, and

is the Jeffreys prior, the conventional uninformative prior for a scale variable (although any prior of the form (7) that is sufficiently uninformative would yield essentially the same results). We have visualized the posterior landscape in Figure 1 by using the pairwise marginal posteriors plotted in blue. Note the exchange symmetry of , which manifests as an (imperfect) reflection symmetry around the dotted diagonal bordering the ordered region . The primary mode can be identified by the black dot; all other modes are induced modes. Integrating all modes to obtain quickly becomes intractable for .
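As a rough illustration of why the ordered region is only a small part of the space explored under an i.i.d. prior, the sketch below draws from a Jeffreys (log-uniform) prior with common bounds and estimates the fraction of draws that happen to be ordered. The bounds of 200 Hz and 5500 Hz and the helper names are hypothetical choices made for this example and are not taken from Table 1.

```python
import math
import numpy as np

def sample_jeffreys(rng, low, high, size):
    """Draw from p(x) proportional to 1/x on [low, high], i.e., log-uniform."""
    return low * (high / low) ** rng.random(size)

def ordered_fraction(samples):
    """Fraction of rows satisfying x_1 <= x_2 <= ... <= x_K."""
    return float(np.mean(np.all(np.diff(samples, axis=1) >= 0, axis=1)))

rng = np.random.default_rng(0)
K = 3
samples = sample_jeffreys(rng, 200.0, 5500.0, size=(100_000, K))
fraction = ordered_fraction(samples)      # close to 1 / K! by exchange symmetry
expected = 1.0 / math.factorial(K)
```

Because the prior is exchangeable, each of the K! orderings receives the same prior mass, so the ordered region holds a share of 1/K! that shrinks factorially with K.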

Figure 1.

The exchange symmetry of the posterior for a well-determined instance of the VTR problem from Section 5 with . The pairwise marginal posteriors are shown using the isocontours of kernel density approximations calculated from posterior samples of . For each panel, the diagonal is plotted as a dotted line, and the ordered region is shaded in grey. The black dot marks the mean of the primary mode for this problem.

A Simple Way Out?

A simple way out of the conflict is to break the exchange symmetry by assuming specialized bounds for each :

where with and being hyperparameters specifying the individual bounds. However, in order to enable the model to detect doublets (a resolved pair of two close frequencies such as the primary mode in the leftmost panel in Figure 1), it is necessary to assign overlapping bounds in , presumably by using some heuristic. The necessary degree of overlap increases as the detection of higher order multiplets such as triplets (which can and do occur) is desired, but the more overlap in , the more the label switching problem returns. Despite this issue, there will be cases where we have sufficient prior information I to set the hyperparameters without too much trouble; the VTR problem is such a case for which the overlapping values of up to are given in Table 1.
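The trade-off between overlap and label switching can be made tangible with a small simulation. This is only a sketch: the bound values below are invented for illustration (they are not the Table 1 values), and `sample_bounded_jeffreys` and `unordered_fraction` are hypothetical helper names.

```python
import numpy as np

def sample_bounded_jeffreys(rng, lows, highs, n):
    """Independent log-uniform draws, one column per frequency with its own bounds."""
    lows = np.asarray(lows, dtype=float)
    highs = np.asarray(highs, dtype=float)
    u = rng.random((n, lows.size))
    return lows * (highs / lows) ** u

def unordered_fraction(samples):
    """Fraction of draws that fall outside the ordered region."""
    return float(np.mean(np.any(np.diff(samples, axis=1) < 0, axis=1)))

rng = np.random.default_rng(1)
# Disjoint bounds: ordering is guaranteed, but close doublets cannot be represented.
disjoint = sample_bounded_jeffreys(rng, [200, 1000, 2000], [1000, 2000, 3000], 50_000)
# Overlapping bounds: doublets are allowed, but some prior mass leaves the ordered region.
overlapping = sample_bounded_jeffreys(rng, [200, 600, 1400], [1100, 2500, 3500], 50_000)

frac_disjoint = unordered_fraction(disjoint)
frac_overlapping = unordered_fraction(overlapping)
```

The larger the unordered fraction, the more prior mass sits where induced modes can appear.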

4. Solution

Our solution to the conflict (2) is a chain of K coupled Pareto distributions:

where

and the hyperparameter is defined as

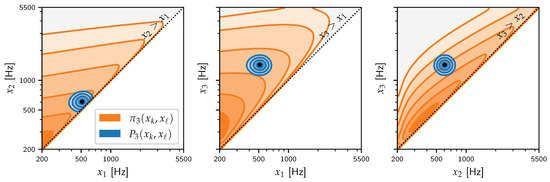

From Figure 2, it can be seen that encodes weakly informative knowledge about K ordered frequencies: (12) and (13) together imply that is defined only for , while nonzero only for . In other words, its support is precisely the ordered region , which solves the label switching problem underlying the conflict automatically, as the exchange symmetry of is broken. This is illustrated in Figure 2, where contracts to a single primary mode, which is just what we would like.
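The support property described above can be sketched as a log-density function. The code assumes the standard Pareto (type I) density with scale equal to the previous frequency and one shape parameter per link of the chain; the symbols `x0` and `lam` and the function name are our own labels and may differ from the notation of (12) to (14).

```python
import numpy as np

def pareto_chain_logpdf(x, x0, lam):
    """Log-density of a chain of Pareto distributions; -inf outside the ordered region.

    Assumed form: x_k ~ Pareto(scale = x_{k-1}, shape = lam_k), with x_0 the lower bound.
    """
    x = np.asarray(x, dtype=float)
    lam = np.asarray(lam, dtype=float)
    prev = np.concatenate(([x0], x[:-1]))     # scale of each link is the previous frequency
    if np.any(x < prev):                      # violates x0 <= x_1 <= ... <= x_K
        return -np.inf
    return float(np.sum(np.log(lam) + lam * np.log(prev) - (lam + 1.0) * np.log(x)))

lam = [1.5, 1.5, 2.5]                                              # hypothetical shapes
inside = pareto_chain_logpdf([500.0, 1500.0, 2500.0], 200.0, lam)  # ordered: finite
outside = pareto_chain_logpdf([1500.0, 500.0, 2500.0], 200.0, lam) # swapped labels: -inf
```

Any sampler that respects this density never visits a relabeled copy of the primary mode, which is how the exchange symmetry is broken.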

Figure 2.

Contraction of prior () to posterior () for the application of to the VTR problem used in Figure 1. The pairwise marginal prior is obtained by integrating out the third frequency; for example, . Unlike in Figure 1, exhibits only a single mode that coincides with the primary mode as marked by the black dot.

The hyperparameters in (14) are a common lower bound plus K expected values of the resonance frequencies . While the former is generally easily determined, the latter may seem difficult to set given the premise of this paper that only limited prior information I is at our disposal. Why do we claim that is only weakly informative if it is parametrized by the expected values of the very things it is supposed to be only weakly informative about? The answer is that for any reasonable amount of data, inference based on is completely insensitive to the exact values of . Therefore, any reasonable guess for will suffice in practice. For example, for the VTR problem, we simply applied a heuristic where we take (see Table 1). This insensitivity is due to the maximum entropy status of and indicates the weak inductive bias it entails. On a more prosaic level, the heavy tails of the Pareto distributions in (12) ensure that the prior will be eventually overwhelmed by the data no matter how a priori improbable the true value of is. More prosaic still, in Section 5.1 below we show quantitatively that for the VTR problem is about as (un)informative as .

4.1. Derivation of

Our ansatz consists of interpreting the as a set of K ordered scale variables that are bounded from below by . Starting from (9) and not bothering with the bounds , we obtain the improper pdf

We can simplify (15) using the one-to-one transformation defined as

which yields (with abuse of notation for brevity)

Since model selection requires proper priors, we need to normalize by adding extra information (i.e., constraints) to it; we propose to simply fix the K first moments . This will yield the Pareto chain prior directly, expressed in space rather than space. The expression for is found by minimizing the Kullback–Leibler divergence [14]

where are the supplied first moments. This variational problem is equivalent to finding by means of Jaynes’ principle of maximum entropy with serving as the invariant measure [15]. Since the exponential distribution is the maximum entropy distribution for a random variable with a fixed first moment , the solution to (18) is

where the rate hyperparameters and

Transforming (19) to space using (16) finally yields (12), but we still need to express in terms of —we might find it hard to pick reasonable values of from limited prior information I. For this, we will need the identity

Constraining and solving for , we obtain , in agreement with (14). Note that the existence of the first marginal moments requires that .
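The steps of this derivation can be sketched in code under two assumptions that we state explicitly: each link of the chain is a standard Pareto distribution whose mean is the scale times rate/(rate - 1), and the log-ratios of consecutive frequencies are exponentially distributed. Under these assumptions the rate for link k is the k-th expected value divided by its difference with the previous expected value (with the lower bound playing the role of the zeroth expected value). The names `rates_from_expected_values` and `pareto_chain_from_unit_cube`, and the numerical values, are hypothetical.

```python
import numpy as np

def rates_from_expected_values(x0, xbar):
    """Rates implied by fixing the first moments of a Pareto chain (assumed form)."""
    xbar = np.asarray(xbar, dtype=float)
    prev = np.concatenate(([x0], xbar[:-1]))
    if np.any(xbar <= prev):
        raise ValueError("expected values must increase strictly, starting above x0")
    return xbar / (xbar - prev)               # always greater than 1, so the means exist

def pareto_chain_from_unit_cube(unit, x0, lam):
    """Map uniform(0, 1) variates to ordered frequencies via exponential log-ratios."""
    log_ratios = -np.log1p(-np.asarray(unit, dtype=float)) / lam   # Exp(lam) draws
    return x0 * np.exp(np.cumsum(log_ratios, axis=-1))             # back to frequency space

rng = np.random.default_rng(0)
x0, xbar = 200.0, [500.0, 1500.0, 2500.0]     # illustrative values in Hz
lam = rates_from_expected_values(x0, xbar)
implied_means = x0 * np.cumprod(lam / (lam - 1.0))   # analytic check of the moments
samples = pareto_chain_from_unit_cube(rng.random((100_000, 3)), x0, lam)
```

A map from the unit cube of this kind is the form of prior that nested sampling programs typically expect, and every draw is ordered by construction.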

4.2. Sampling from

5. Application: The VTR Problem

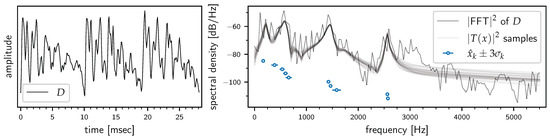

We now present a relatively simple but realistic instance of the problem of measuring resonance frequencies, which will allow us to illustrate the above ideas. The VTR problem consists of measuring human vocal tract resonance (VTR) frequencies for each of five representative vowel sounds taken from the CMU ARCTIC database [16]. The VTR frequencies describe the vocal tract transfer function and are fundamental quantities in acoustic phonetics [17]. The five vowel sounds are recorded utterances of the first vowel in the words . In order to achieve high-quality VTR frequency estimates , only the quasi-periodic steady-state part of the vowel sound is considered for the measurement. The data D thus consist of a string of highly correlated pitch periods. See Figure 3 for an illustration of these concepts.

Figure 3.

The VTR problem for the case . Left panel: The data D, i.e., the quasi-periodic steady-state part, consist of 3 highly correlated pitch periods. Right panel: Inferred VTR frequency estimates for at 3 sigma. They describe the power spectral density of the vocal tract transfer function , represented here by 25 posterior samples and compared to the Fast Fourier Transform (FFT) of D. All are well resolved, and most have error bars too small to be seen on this scale.

The measurement itself is formalized as inference using the probabilistic model (1). The model assumed to underlie the data is the sinusoidal regression model introduced in [18]; due to limited space, we only describe it implicitly. The sinusoidal regression model assumes that each pitch period can be modeled as

where is a time series consisting of T samples. The model function

consists of a sinusoidal part (first ∑) and a polynomial trend correction (second ∑). Note the additional model parameters . Formally, given the prior ([18], Section 2.2), the marginal likelihood is then obtained as , where the complete likelihood is implicitly given by (22) and (23). Practically, we just marginalize out from samples obtained from the complete problem .
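To illustrate the structure of such a model function, the sketch below builds a design matrix with a cosine and sine column per frequency plus polynomial trend columns, and solves for the linear parameters by least squares. This is a simplified stand-in under our own assumptions: the sinusoids are undamped, time is rescaled to [0, 1] for the polynomial, and least squares replaces the marginalization used in the paper. The actual model (23) from [18] may differ, and all names and values are hypothetical.

```python
import numpy as np

def design_matrix(t, freqs_hz, poly_order):
    """Columns: a cos/sin pair per frequency, then polynomial terms in rescaled time."""
    t = np.asarray(t, dtype=float)
    tau = (t - t[0]) / (t[-1] - t[0])         # rescale to [0, 1] for better conditioning
    cols = []
    for f in freqs_hz:
        cols.append(np.cos(2.0 * np.pi * f * t))
        cols.append(np.sin(2.0 * np.pi * f * t))
    cols.extend(tau ** p for p in range(poly_order + 1))
    return np.column_stack(cols)

def fit_linear_parameters(t, d, freqs_hz, poly_order):
    """Least-squares estimate of the amplitudes and trend coefficients."""
    g = design_matrix(t, freqs_hz, poly_order)
    coef, *_ = np.linalg.lstsq(g, d, rcond=None)
    return coef, g @ coef

fs = 16000.0                                   # assumed sampling rate in Hz
t = np.arange(160) / fs                        # one 10 ms pitch period
freqs = [500.0, 1500.0]                        # hypothetical resonance frequencies
true_coef = np.array([1.0, 0.5, -0.3, 0.2, 0.1, -0.4])
d = design_matrix(t, freqs, poly_order=1) @ true_coef
coef, fitted = fit_linear_parameters(t, d, freqs, poly_order=1)
```

Because the amplitudes and trend coefficients enter linearly, only the frequencies remain as nonlinear parameters, which is what makes integrating out the additional parameters practical.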

For inference, the computational method of choice is nested sampling [1] using the dynesty library [19,20,21,22,23], which scales roughly as [24]. Since the VTR problem is quite simple (), we only perform single nested sampling runs and take the obtained and as point estimates. Full details on the experiments and data are available at https://github.com/mvsoom/frequency-prior.

5.1. Experiment I: Comparing and

In Experiment I, we perform a high-level comparison between and in terms of evidence (4) and information (6). The values of the hyperparameters used in the experiment are listed in Table 1. We did not include in this comparison as the label switching problem prevented convergence of nested sampling runs for . The bounds for were based on loosely interpreting the VTRs as formants and consulting formant tables from standard works [25,26,27,28,29,30]. These allowed us to compile bounds up until the fifth formant such that . For , we simply applied a heuristic where we take . We selected empirically (although a theoretical approach is also possible [31]), and was set to the Nyquist frequency. The role of is to truncate in order to avoid aliasing effects, since the support of is unbounded from above. We implemented this by using the following likelihood function in the nested sampling program:

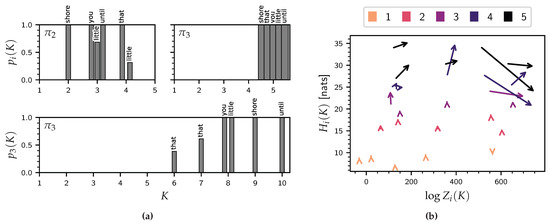

First, we compare the influence of and on model selection. Given , the posterior probability of the number of resonances K is given by the following.
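As a rough illustration of how evidence values turn into posterior probabilities for K, the sketch below normalizes a set of log-evidences in a numerically stable way. The uniform prior over K and the log-evidence values are assumptions made for this example only; they are not results from the experiments.

```python
import numpy as np

def posterior_over_k(log_evidences, log_prior=None):
    """Posterior probability of each K from log-evidences (uniform prior on K by default)."""
    log_z = np.asarray(log_evidences, dtype=float)
    if log_prior is None:
        log_prior = np.zeros_like(log_z)       # assumed: all K equally probable a priori
    log_post = log_z + log_prior
    log_post = log_post - log_post.max()       # subtract the maximum to avoid underflow
    weights = np.exp(log_post)
    return weights / weights.sum()

# Hypothetical log-evidences for K = 1, 2, 3, 4.
log_z = [-1050.0, -1012.0, -1010.0, -1011.0]
p_k = posterior_over_k(log_z)
most_probable_k = int(np.argmax(p_k)) + 1
```

Differences in log-evidence of only a few nats already concentrate most of the posterior mass on one or two values of K.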

The results in the top row of Figure 4a are striking: while shows individual preferences based on D, prefers unequivocally.

Figure 4.

(a) Model selection in Experiment I (top row) and Experiment II (bottom row). (b) In Experiment I, and are compared in terms of evidence [] and uninformativeness [] for each . The arrows point from to and are color-coded by the value of K. For small values of K, the arrow lengths are too small to be visible on this scale.

Second, in Figure 4b, we compare and directly in terms of differences in evidence [] and uninformativeness [] for each combination .

Arrows pointing eastward indicate . The prior dominates the prior in terms of evidence for almost all values of K, indicating that places its mass in regions of higher likelihood or, equivalently, that the data were much more probable under than . This implies that the hint of at more structure beyond should be taken seriously; we investigate this in Section 5.2.

Arrows pointing northward indicate , i.e., is less informative than , since more information is gained by updating from to than from to . It is observed that and are roughly comparable in terms of (un)informativeness.

5.2. Experiment II: ‘Free’ Analysis

We now freely look for more structure in the data by letting K vary up until . This goes beyond the capacities of (because of the label switching problem) and (because no data are available to set the bounds). Thus, the great advantage of is that we can use a simple heuristic to set and let the model perform the discovering without worrying about convergence issues or the obtained evidence values. The bottom row in Figure 4a shows that model selection for the VTR problem is well-defined, with the most probable values of , except for . That case is investigated in Figure 3, where the need for more VTRs (higher K) is apparent from the unmodeled broad peak centered at around 3000 Hz in the FFT power spectrum (right panel). Incidentally, this spectrum also shows that spectral peaks are often resolved into more than one VTR, which underlines the importance of using a prior that enables trouble-free handling of multiplets of arbitrary order. A final observation from the spectrum is the fact that the inferred differs substantially from the supplied values in (Table 1), which hints at the weak inductive bias underlying .

6. Discussion

It is only when the information in the prior is comparable to the information in the data that the prior probability can make any real difference in parameter estimation problems or in model selection problems.([32], p. 9)

Although the weakly informative prior for resonance frequencies is meant to be overwhelmed, its practical advantage (i.e., solving the label switching problem) will nonetheless persist, making a real difference in model selection problems even when “the information in the prior” is much smaller than “the information in the data”. In this sense, is quite unlike the prior referenced in the above quote. Since it will be overwhelmed, all it has to do is provide a reasonable density everywhere (which it does), be easily parametrizable (which it is), and be easy to sample from (which it is).

Thus, we hope that this prior can enable the use of robust evidence-based methods for a new class of problems, even in the presence of multiplets of arbitrary order. The prior is compatible with off-the-shelf exploration algorithms and solves the label switching problem without any special tuning or post processing. It would be interesting to compare it to other approaches, e.g., [33], especially in terms of exploration efficiency. It is valid for any collection of scale variables that is intrinsically ordered, of which frequencies and wavelengths seem to be the most natural examples. Some examples of recent work where the prior could be applied directly are:

- Nuclear magnetic resonance (NMR) spectroscopy [34];

- Resonant ultrasound spectroscopy (a standard method in material science) [35];

- Analysis of atomic spectra [36], such as X-ray diffraction [37];

- Accurate modeling of instrument noise (in this case LIGO/Virgo noise) [38];

- Model-based Bayesian analysis in acoustics [39].

Author Contributions

Conceptualization, writing, methodology, and analysis: M.V.S. Supervision: B.d.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Flemish AI plan and by the Research Foundation Flanders (FWO) under grant number G015617N.

Acknowledgments

We would like to thank Roxana Radulescu, Timo Verstraeten, and Yannick Jadoul for helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Skilling, J. Nested Sampling for General Bayesian Computation. Bayesian Anal. 2006, 1, 833–859.

2. Green, P.J. Reversible Jump Markov Chain Monte Carlo Computation and Bayesian Model Determination. Biometrika 1995, 82, 711–732.

3. Mark, Y.Z.; Hasegawa-Johnson, M. Particle Filtering Approach to Bayesian Formant Tracking. In Proceedings of the IEEE Workshop on Statistical Signal Processing, St. Louis, MO, USA, 28 September–1 October 2003.

4. Zheng, Y.; Hasegawa-Johnson, M. Formant Tracking by Mixture State Particle Filter. In Proceedings of the 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, Montreal, QC, Canada, 17–21 May 2004; Volume 1, pp. 1–565.

5. Yan, Q.; Vaseghi, S.; Zavarehei, E.; Milner, B.; Darch, J.; White, P.; Andrianakis, I. Formant Tracking Linear Prediction Model Using HMMs and Kalman Filters for Noisy Speech Processing. Comput. Speech Lang. 2007, 21, 543–561.

6. Mehta, D.D.; Rudoy, D.; Wolfe, P.J. Kalman-Based Autoregressive Moving Average Modeling and Inference for Formant and Antiformant Tracking. J. Acoust. Soc. Am. 2012, 132, 1732–1746.

7. Shi, Y.; Chang, E. Spectrogram-Based Formant Tracking via Particle Filters. In Proceedings of the (ICASSP ’03), 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, Hong Kong, China, 6–10 April 2003; Volume 1, p. 1.

8. Deng, L.; Lee, L.J.; Attias, H.; Acero, A. Adaptive Kalman Filtering and Smoothing for Tracking Vocal Tract Resonances Using a Continuous-Valued Hidden Dynamic Model. IEEE Trans. Audio Speech Lang. Process. 2007, 15, 13–23.

9. Luberadzka, J.; Kayser, H.; Hohmann, V. Glimpsed Periodicity Features and Recursive Bayesian Estimation for Modeling Attentive Voice Tracking. Int. Congr. Acoust. 2019, 9, 8.

10. Stephens, M. Dealing with Label Switching in Mixture Models. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2000, 62, 795–809.

11. Celeux, G.; Kamary, K.; Malsiner-Walli, G.; Marin, J.M.; Robert, C.P. Computational Solutions for Bayesian Inference in Mixture Models. arXiv 2018, arXiv:1812.07240.

12. Celeux, G.; Fruewirth-Schnatter, S.; Robert, C.P. Model Selection for Mixture Models - Perspectives and Strategies. arXiv 2018, arXiv:1812.09885.

13. Bretthorst, G.L. Bayesian Spectrum Analysis and Parameter Estimation; Springer: Berlin/Heidelberg, Germany, 1988.

14. Knuth, K.H.; Skilling, J. Foundations of Inference. Axioms 2012, 1, 38–73.

15. Jaynes, E.T. Prior Probabilities. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 227–241.

16. Kominek, J.; Black, A.W. The CMU Arctic Speech Databases. In Proceedings of the Fifth ISCA Workshop on Speech Synthesis, Pittsburgh, PA, USA, 14–16 June 2004.

17. Van Soom, M.; de Boer, B. A New Approach to the Formant Measuring Problem. Proceedings 2019, 33, 29.

18. Van Soom, M.; de Boer, B. Detrending the Waveforms of Steady-State Vowels. Entropy 2020, 22, 331.

19. Speagle, J.S. Dynesty: A Dynamic Nested Sampling Package for Estimating Bayesian Posteriors and Evidences. arXiv 2019, arXiv:1904.02180.

20. Feroz, F.; Hobson, M.P.; Bridges, M. MULTINEST: An Efficient and Robust Bayesian Inference Tool for Cosmology and Particle Physics. Mon. Not. R. Astron. Soc. 2009, 398, 1601–1614.

21. Neal, R.M. Slice Sampling. Ann. Stat. 2003, 31, 705–767.

22. Handley, W.J.; Hobson, M.P.; Lasenby, A.N. POLYCHORD: Nested Sampling for Cosmology. Mon. Not. R. Astron. Soc. 2015, 450, L61–L65.

23. Handley, W.J.; Hobson, M.P.; Lasenby, A.N. POLYCHORD: Next-Generation Nested Sampling. Mon. Not. R. Astron. Soc. 2015, 453, 4384–4398.

24. Buchner, J. Nested Sampling Methods. arXiv 2021, arXiv:2101.09675.

25. Peterson, G.E.; Barney, H.L. Control Methods Used in a Study of the Vowels. J. Acoust. Soc. Am. 1952, 24, 175–184.

26. Hillenbrand, J.; Getty, L.A.; Clark, M.J.; Wheeler, K. Acoustic Characteristics of American English Vowels. J. Acoust. Soc. Am. 1995, 97, 3099–3111.

27. Vallée, N. Systèmes Vocaliques: De La Typologie Aux Prédictions. Ph.D. Thesis, Université Stendhal, Grenoble, France, 1994.

28. Kent, R.D.; Vorperian, H.K. Static Measurements of Vowel Formant Frequencies and Bandwidths: A Review. J. Commun. Disord. 2018, 74, 74–97.

29. Vorperian, H.K.; Kent, R.D.; Lee, Y.; Bolt, D.M. Corner Vowels in Males and Females Ages 4 to 20 Years: Fundamental and F1–F4 Formant Frequencies. J. Acoust. Soc. Am. 2019, 146, 3255–3274.

30. Klatt, D.H. Software for a Cascade/Parallel Formant Synthesizer. J. Acoust. Soc. Am. 1980, 67, 971–995.

31. de Boer, B. Acoustic Tubes with Maximal and Minimal Resonance Frequencies. J. Acoust. Soc. Am. 2008, 123, 3732.

32. Bretthorst, G.L. Bayesian Analysis. II. Signal Detection and Model Selection. J. Magn. Reson. 1990, 88, 552–570.

33. Buscicchio, R.; Roebber, E.; Goldstein, J.M.; Moore, C.J. Label Switching Problem in Bayesian Analysis for Gravitational Wave Astronomy. Phys. Rev. D 2019, 100, 084041.

34. Wilson, A.G.; Wu, Y.; Holland, D.J.; Nowozin, S.; Mantle, M.D.; Gladden, L.F.; Blake, A. Bayesian Inference for NMR Spectroscopy with Applications to Chemical Quantification. arXiv 2014, arXiv:1402.3580.

35. Xu, K.; Marrelec, G.; Bernard, S.; Grimal, Q. Lorentzian-Model-Based Bayesian Analysis for Automated Estimation of Attenuated Resonance Spectrum. IEEE Trans. Signal Process. 2019, 67, 4–16.

36. Trassinelli, M. Bayesian Data Analysis Tools for Atomic Physics. Nucl. Instruments Methods Phys. Res. Sect. B Beam Interact. Mater. Atoms 2017, 408, 301–312.

37. Fancher, C.M.; Han, Z.; Levin, I.; Page, K.; Reich, B.J.; Smith, R.C.; Wilson, A.G.; Jones, J.L. Use of Bayesian Inference in Crystallographic Structure Refinement via Full Diffraction Profile Analysis. Sci. Rep. 2016, 6, 31625.

38. Littenberg, T.B.; Cornish, N.J. Bayesian Inference for Spectral Estimation of Gravitational Wave Detector Noise. Phys. Rev. D 2015, 91, 084034.

39. Xiang, N. Model-Based Bayesian Analysis in Acoustics - A Tutorial. J. Acoust. Soc. Am. 2020, 148, 1101–1120.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).