Information System for Detecting Strawberry Fruit Locations and Ripeness Conditions in a Farm †

Abstract

1. Introduction

2. Methods

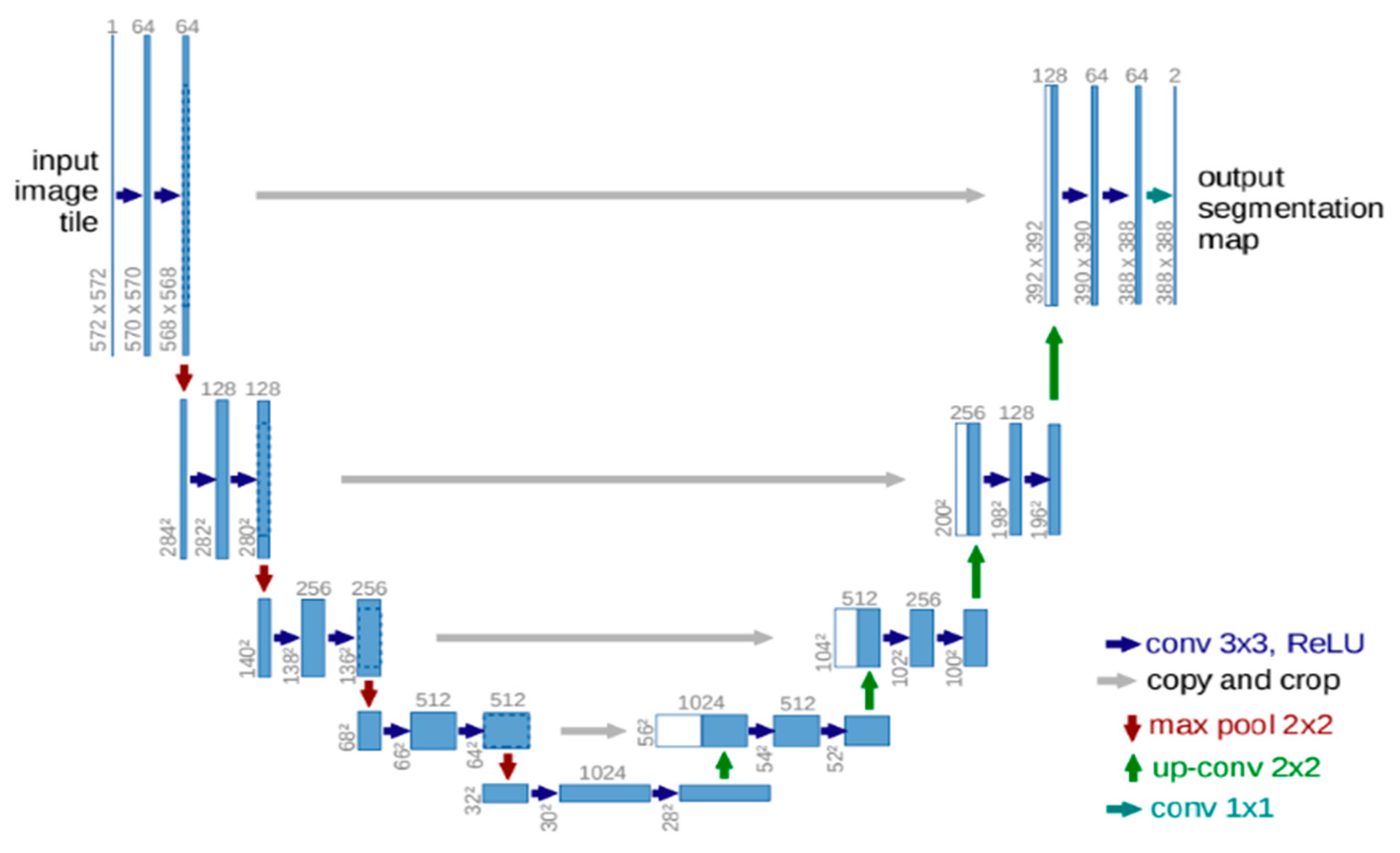

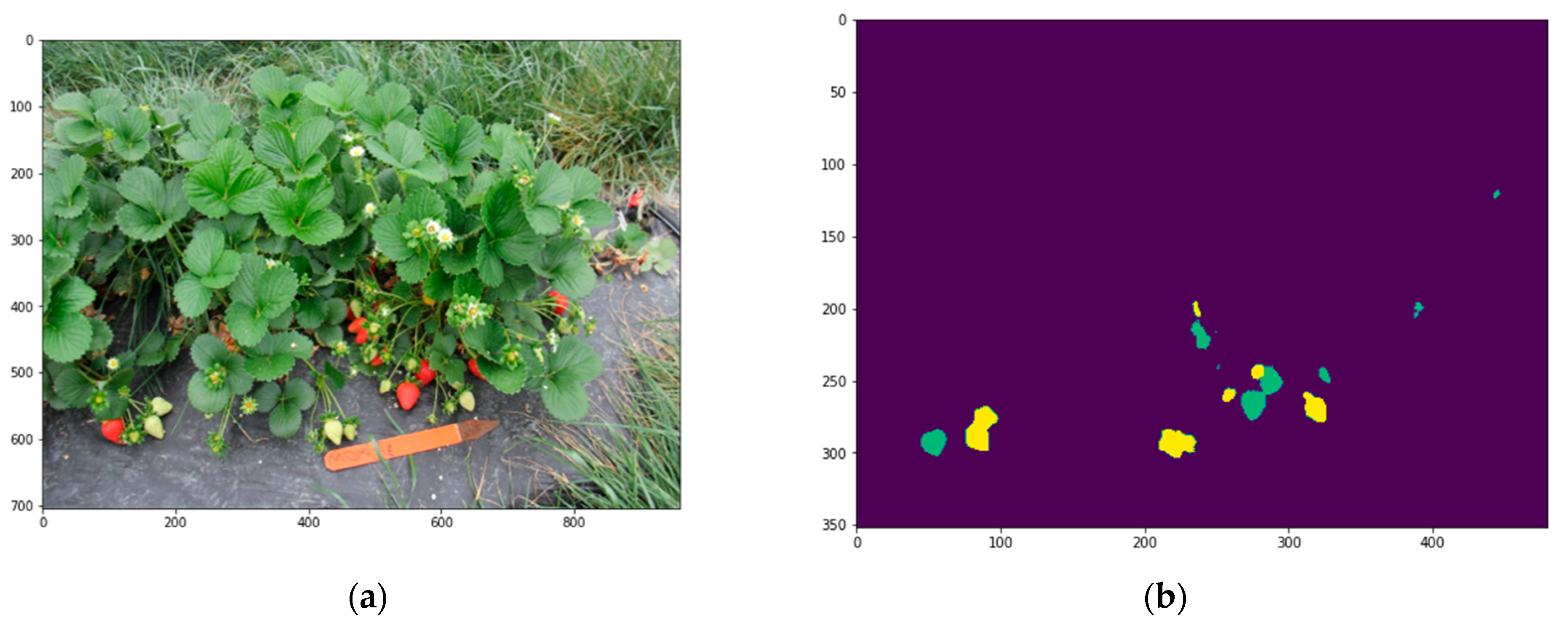

2.1. Fruit Image Semantic Segmentation

2.2. Farm Mapping by SLAM

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, T.; Chopra, N.; Samtani, J. Information System for Detecting Strawberry Fruit Locations and Ripeness Conditions in a Farm. Biol. Life Sci. Forum 2022, 16, 22. https://doi.org/10.3390/IECHo2022-12488
