1. Introduction

In the rapidly evolving field of natural language processing (NLP), lexical networks have emerged as pivotal tools for understanding and manipulating language [1,2,3]. These networks, which represent the semantic and syntactic relationships between words, are crucial for many NLP tasks, such as word sense disambiguation [4,5] and sense labelling [6], as well as for language education. Despite their importance, the traditional construction of lexical networks has been a labor-intensive process, heavily reliant on expert lexicographers and manual annotation.

Recent advancements in large language models (LLMs), such as GPT-4o, have opened new avenues for automating and scaling the creation of lexical resources. These models, trained on vast amounts of text data, have demonstrated remarkable abilities in generating text, translating languages, and understanding complex instructions [7]. However, their potential for constructing lexical networks remains largely unexplored. This research aims to contribute in that direction.

Beyond individual tasks, such networks are crucial for advancing NLP from simple keyword matching to a deeper level of semantic understanding. The importance of a multi-relational lexical network lies in its ability to model the world knowledge embedded in language. Each type of semantic relation serves a distinct purpose for text extraction and analytics applications. For instance, Hierarchical Relations (Hypernymy and Hyponymy), i.e., “is-a” relationships, are fundamental for semantic search, query understanding, and knowledge generalization. A search engine equipped with this knowledge can understand that a query for the Japanese term 乗り物 norimono ‘vehicle’ should also retrieve documents mentioning its hyponyms, such as 車 kuruma ‘car’, バス basu ‘bus’, and 自転車 jitensha ‘bicycle’. This allows for more intelligent information retrieval and robust text classification. On the other hand, Part–Whole Relations (Meronymy and Holonymy), i.e., “is-part-of” relationships, are essential for applications requiring compositional knowledge, such as question answering and event extraction. A system that knows that エンジン enjin ‘engine’ is a meronym of 車 kuruma ‘car’ can correctly infer that “the engine failed” is a statement about a problem with the car. This enables deeper reasoning and more accurate information extraction from text. Finally, Associative Relations (Synonymy and Co-hyponymy) are vital for query expansion and paraphrase recognition, ensuring that terms such as 物 mono ‘thing’ and 物品 buppin ‘goods’ are treated as semantically equivalent where appropriate. Co-hyponymy, which links terms sharing a common hypernym (e.g., “car” and “bus”), is critical for word sense disambiguation and for building recommendation systems, as the presence of one sibling term can provide strong contextual clues for another.
By constructing a comprehensive network that encodes these diverse relationships, we provide a foundational resource that can significantly enhance the performance and sophistication of a wide range of NLP applications.
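The query-expansion use of hyponymy described above can be illustrated with a few lines of Python. This is a minimal sketch, not part of the authors' pipeline: the hypernym-to-hyponym table is a hand-written toy built from the example terms (the タクシー takushī ‘taxi’ entry is added only to show a second level), and `expand_query` is a hypothetical helper.

```python
# Toy hyponym index (hypernym -> direct hyponyms) mirroring the example above.
HYPONYMS: dict[str, list[str]] = {}
for hyper, hypo in [("乗り物", "車"), ("乗り物", "バス"), ("乗り物", "自転車"),
                    ("車", "タクシー")]:          # タクシー is an added toy entry
    HYPONYMS.setdefault(hyper, []).append(hypo)


def expand_query(term: str, max_depth: int = 2) -> list[str]:
    """Return `term` followed by its hyponyms, breadth-first, up to `max_depth` levels."""
    expanded, frontier = [term], [term]
    for _ in range(max_depth):
        next_frontier = []
        for t in frontier:
            for h in HYPONYMS.get(t, []):
                if h not in expanded:         # guard against cycles and repeats
                    expanded.append(h)
                    next_frontier.append(h)
        frontier = next_frontier
    return expanded


print(expand_query("乗り物"))  # → ['乗り物', '車', 'バス', '自転車', 'タクシー']
```

Limiting the depth keeps the expansion from drifting too far from the user's original term; a real system would typically also weight the added terms.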

It is also important to contextualize the term ‘resource’ in the era of large language models. While traditional low-resource NLP has focused on minimizing the dependency on large, annotated corpora, the rise of LLMs introduces a paradigm shift. Our approach, while reliant on a significant computational resource (the GPT-4o model), is designed to be “low-resource” in terms of human and language-specific assets. The traditional construction of lexical networks like WordNet requires immense investment in expert lexicographers, manual annotation, and years of development. Our methodology automates this labor-intensive process, significantly reducing the need for specialized human expertise and for pre-existing, manually curated linguistic datasets. This work therefore demonstrates how leveraging a general, albeit large, computational model can drastically lower the barrier to creating rich, large-scale lexical resources, especially for languages where such resources are underdeveloped.

In our previous work, we introduced an innovative approach to developing Japanese lexical networks using LLMs, specifically GPT-4o. By leveraging the extensive synonym and antonym generation capabilities of this model, we established a structured and scalable network encompassing over 100,000 lexical nodes and over 190,000 edges (synonyms, antonyms, and their mutual senses and mutual domains) across the Japanese vocabulary. This foundational study emphasized the effectiveness of combining AI-generated data with graph-based techniques, demonstrating the potential of LLMs to complement traditional lexicographic methods [8]. To evaluate our approach, we referenced traditional Japanese lexical resources, such as the Word List by Semantic Principles (WLSP) [9], as well as lists of synonyms from web dictionaries such as Weblio [10] or Goo dictionary [11], which use resources from Japanese WordNet [12,13]. While the Japanese corpus constitutes a modest 0.11% of the total training dataset for GPT-3 [14], this still represents a significant volume of data that can be leveraged for constructing robust lexical networks. Although comparable figures for GPT-4 are not available, the ongoing development of Japanese LLMs [15,16] suggests a growing potential for these models to enhance lexical resources.

Building on our previous work, the present study extends the initial approach and methodology by incorporating additional semantic relations, such as hyponymy, hypernymy, meronymy, and holonymy, thereby enriching the lexical network with a broader range of relationship types. These relationships offer deeper insights into the structure of the Japanese lexicon, facilitating applications that benefit from a detailed understanding of word associations. Our methodology follows the same principles described in the previous research: it employs GPT-4o to iteratively generate and validate complex word relations and then integrates them into an enriched lexical graph that reflects the hierarchical and associative dimensions of the Japanese lexicon.

In this paper, we aim to enhance the methodology for constructing and enriching lexical networks with additional lexemes and multifaceted semantic relationships, and to present a viable quantitative comparison of the results with existing lexical resources, such as Japanese WordNet. Our previous study established the foundational methodology for using an LLM to build a single-relation (synonymy/antonymy) lexical graph for Japanese. The current research represents a significant expansion in both methodology and scope. Besides the generation of additional relations, the primary contribution is the development and validation of a multi-relational framework. This required substantial methodological advancements, including:

Designing and testing novel structured prompts for more complex hierarchical (hypernymy, hyponymy) and part–whole (meronymy, holonomy) relations, which are fundamentally different from associative relations, such as synonymy.

Enhancing the graph construction algorithm to handle a multi-layered, directed graph where nodes can have multiple, distinct relationship types simultaneously. This includes managing inverse relations (e.g., hyponym is the inverse of hypernym) automatically.

A new and more complex evaluation methodology, including the comparative analysis between different relation types, the soft-matching approach, and the confusion matrix analysis against WordNet, none of which were part of the previous work.

Much like a thesaurus, our resource can be used to explore synonyms and semantically related concepts. Although we did not use the ontological structures of resources such as WLSP, they may serve as important comparative frameworks for assessing the adaptability of our system. Our results illustrate the potential for this framework to extend across languages and domains in NLP and to support applications in language education through advanced semantic representations. This aligns with the findings of [17], which employed ChatGPT-4 to predict semantic categories of basic vocabulary nouns from the Japanese Learners’ Word List, available online: https://jhlee.sakura.ne.jp/JEV/ (accessed on 31 October 2024) [18].

Building upon our initial findings, which showed that nearly 20% of the extracted nouns are (near) synonyms while others exhibit various relation types such as hyponymy, hypernymy, and meronymy, we have developed an approach that leverages these insights. This methodology involves a systematic pipeline that begins with lexical input, passes it through the LLM, and dynamically stores the results within a graph structure for efficient extraction of lexical relations. A key advancement of our approach is the combination of OpenAI’s structured output capability, which ensures that the LLM outputs are fully structured and ready for integration into lexical networks, with graph-based processing of lexical attributes.

Our key contributions include:

Development of algorithms for efficient graph extraction and storage.

Creation of a comprehensive JSON dataset containing the extracted lexical edges, i.e., relations between two lexemes (nodes in the graph).

Comparison with the existing lexical relation resources, i.e., Japanese WordNet.

The updated lexical network, along with the scripts used for its construction, is available in our project repository [19] and as a web app at https://jln.syntagent.com/ (accessed on 15 October 2025). Researchers are encouraged to use and build upon this resource for further exploration in computational linguistics. This study underscores the synergy between AI-driven data generation and traditional lexicographic expertise, providing a scalable and adaptable framework for constructing comprehensive and nuanced lexical networks across diverse linguistic applications.

2. Methods

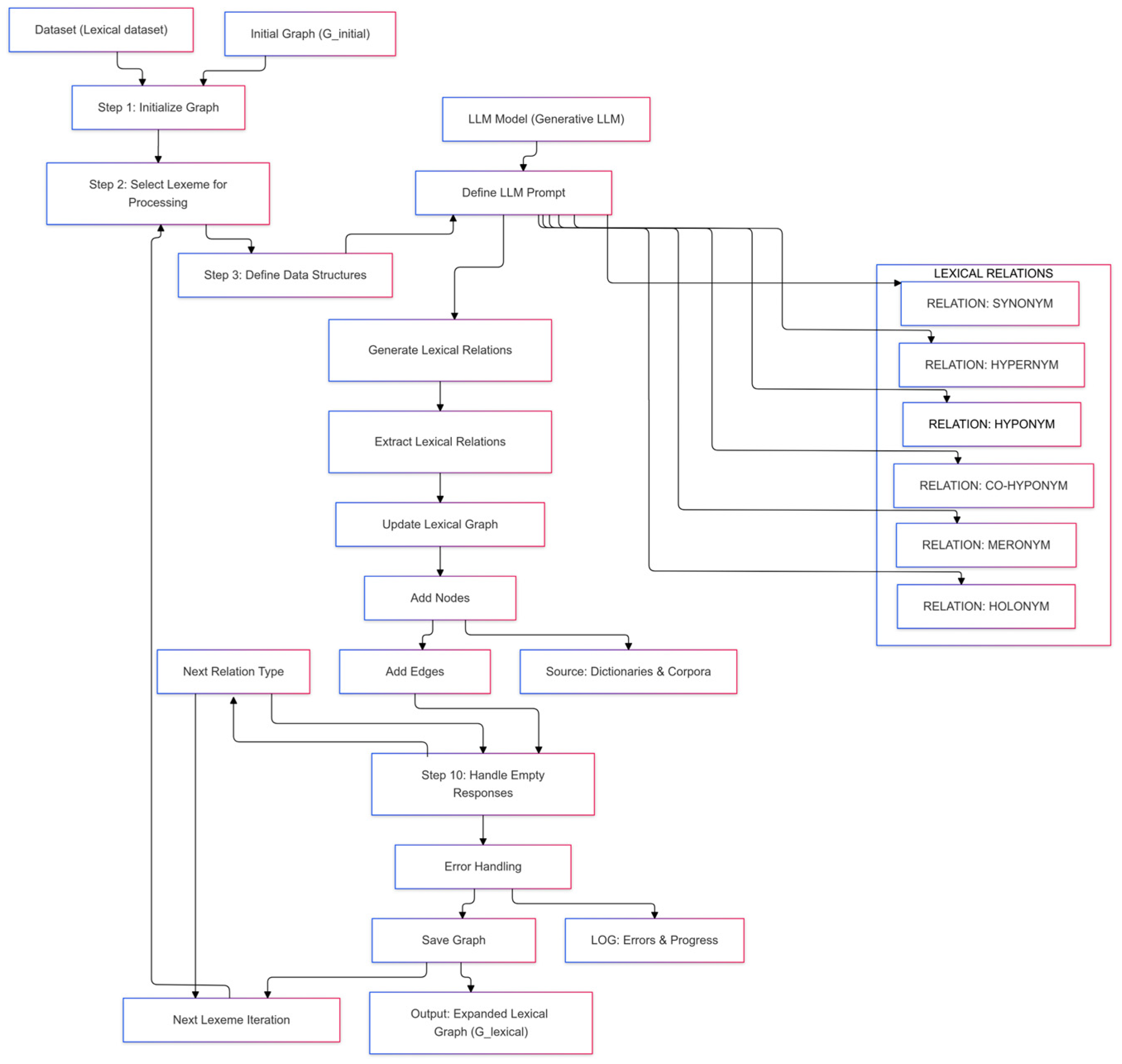

The methodology for constructing the Japanese lexical network is a systematic and automated pipeline designed to iteratively expand an initial lexical graph by leveraging the generative capabilities of a Large Language Model (LLM). This process, visualized in Figure 1, ensures a structured and scalable approach to data extraction and network enrichment. The workflow begins by loading an initial lexical dataset and, if available, a pre-existing graph file (Initial Graph, G_initial). The process then proceeds as follows:

Step 1: Initialize Graph: The system first initializes the graph structure, either by creating a new graph object or by loading a previously saved version. This allows the process to be paused and resumed, ensuring continuity and preserving previously extracted data.

Step 2: Select Lexeme for Processing: The core of the methodology is a nested iterative process. The outer loop begins by selecting a source lexeme from the initial dataset for processing.

Inner Loop for Relation Extraction: For each source lexeme, an inner loop cycles through a predefined set of six lexical relation types (SYNONYM, HYPERNYM, HYPONYM, CO-HYPONYM, MERONYM, and HOLONYM), as shown in the LEXICAL RELATIONS box.

First, the system defines the necessary data structures to handle the LLM’s output (Step 3: Define Data Structures).

A structured prompt is then dynamically generated (Define LLM Prompt) for the current lexeme and relation type.

This prompt is sent to the LLM, which processes the request to Generate Lexical Relations.

Graph Updating: The LLM’s response, which contains potential target lexemes and associated semantic metadata, is systematically parsed (Extract Lexical Relations). This new information is then used to Update Lexical Graph. This update involves two key actions:

Add Nodes: If a target lexeme from the LLM’s response does not already exist in the graph, a new node is created. To enrich this new node, the system can cross-reference external Sources: Dictionaries & Corpora.

Add Edges: A directed edge representing the specific lexical relationship is added between the source and target nodes.

Error Handling and Data Integrity: To ensure a robust process, the system includes modules for managing empty or invalid LLM responses (Step 10: Handle Empty Responses) and logs all errors and progress for monitoring (Error Handling and LOG: Errors & Progress).

Saving and Iteration: After the inner loop has completed for all relation types for a single source lexeme, the entire graph is saved to a file (Save Graph). This ensures data integrity and prevents data loss. The outer loop then proceeds to the Next Lexeme Iteration until all source lexemes in the initial dataset have been processed.

This modular and iterative design enables the continuous and scalable expansion of the final output: the Expanded Lexical Graph (G_lexical).

While these methods share characteristics with algorithmic workflows, they are better described as a modular system, combining data structures, machine learning models, and graph-based operations.

2.1. Data Sources and Preprocessing

This study uses a selection of nouns from the Vocabulary Database for Reading Japanese (VDRJ) for Japanese language learners [20] as the initial data source. This database includes over 60,000 Japanese words, each labelled with its level; for this research, we chose only nouns of pre-2010 Japanese Language Proficiency Test (JLPT) levels 1–4. We began by filtering the dataset to obtain specific categories of nouns: 3215 general common nouns, 1609 general common nouns that can function as verbal nouns, 18 general common nouns that may function as nominal adjectives (-na adjectives), and 13 general common nouns that may function as adverbs. In the initial construction of the synonym graph, we extracted 6200 nouns classified as N0. This process resulted in a network comprising 11,055 unique Japanese lexemes. From this set, we selected lexemes categorized at levels 1–4 of the JLPT to construct a new multi-relational lexical graph for the general Japanese language.
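The noun-selection step amounts to a filter on part of speech and level. In the sketch below, the column names (`lexeme`, `pos`, `jlpt_level`), the UniDic-style POS strings, and the sample rows are all assumptions for illustration; the actual VDRJ file layout may differ.

```python
import csv
import io

# Hypothetical excerpt: column names, POS labels, and levels are illustrative only.
SAMPLE = """lexeme,pos,jlpt_level
物,名詞-普通名詞-一般,4
勉強,名詞-普通名詞-サ変可能,4
綺麗,形状詞-一般,4
子供,名詞-普通名詞-一般,4
概念,名詞-普通名詞-一般,
食べる,動詞-一般,4
"""

TARGET_POS = {
    "名詞-普通名詞-一般",        # general common nouns
    "名詞-普通名詞-サ変可能",    # nouns usable as verbal nouns
    "名詞-普通名詞-形状詞可能",  # nouns usable as na-adjectives
    "名詞-普通名詞-副詞可能",    # nouns usable as adverbs
}


def select_seed_nouns(rows, levels=frozenset({"1", "2", "3", "4"})):
    """Keep lexemes whose POS is a target noun category and whose level is in `levels`."""
    return [r["lexeme"] for r in rows
            if r["pos"] in TARGET_POS and r["jlpt_level"] in levels]


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
print(select_seed_nouns(rows))  # → ['物', '勉強', '子供']
```

Rows without a level (概念 in the toy sample) and non-nouns are dropped, which matches the restriction to nouns of levels 1–4 described above.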

2.2. Algorithmic Extraction of Lexical Data Using a Large Language Model (LLM)

The system architecture is driven by a custom Python 3.12 script that interfaces directly with OpenAI’s GPT-4o model, a large language model (LLM) capable of generating extensive lexical relations [21]. The extraction pipeline operates as shown in Algorithm 1:

Algorithm 1: Lexical Network Expansion Using LLM

Input: Lexical dataset (lexeme, POS, proficiency, register, frequency, …); LLM (e.g., GPT-4o or other); initial graph G_initial.

Output: Expanded graph G_lexical with nodes = lexemes and edges = {synonym, hypernym, hyponym, co-hyponym, meronym, holonym, mutual domain, mutual sense}.

Steps:
1. Load G ← G_initial from storage.
2. Select the next lexeme x from the processing list.
3. Define a LexicalRelation Pydantic model and JSON encoder for relation records.
4. Draft prompts for x per relation type R ∈ {SYN, HYPER, HYPO, COHYPO, MERO, HOLO} with target counts and inference notes.
5. Include in each prompt the set of already-linked targets for (x, R) to avoid duplicates.
6. Query the LLM with ⟨x, R⟩ and receive candidate relations and attributes.
7. Parse the response into validated, JSON-serializable LexicalRelation objects.
8. For each candidate (x → y, R): skip if an identical edge already exists.
9. If y ∉ V(G), verify in external dictionary/corpus; add node y with (kanji, kana, gloss, corpus features).
10. Add edge (x, y, R, attributes).
11. If R is undirected (SYN, COHYPO), also add (y, x, R).
12. If R is hierarchical, add the inverse (HYPER ↔ HYPO) with proper attributes.
13. Enforce uniqueness constraints per (x, y, R) to maintain integrity.
14. Log errors/warnings; append progress to a checkpoint text file.
15. Persist G to disk after each lexeme (atomic save).
16. Repeat from Step 2 until the seed list is exhausted; return G_lexical.

2.3. Output Data Structures

Each filtered lexeme from the VDRJ is fed to the LLM (GPT-4o), which is systematically prompted to generate several types of lexical relations. Through structured prompts, the system elicits synonyms and additional hierarchical relationships, such as hypernyms and hyponyms, as well as part–whole relations like meronyms and holonyms (up to 10 entries per lexeme per relation). This range of relationships ensures a multifaceted representation of each lexeme within the network. To ensure consistency across the model’s outputs, each lexical relationship is parsed into a predefined format using Pydantic models [22].
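A relation-specific prompt that caps the number of requested items and lists already-linked targets (as in Algorithm 1) might be assembled as below. The wording and the `build_prompt` helper are hypothetical; the authors' actual prompts are not reproduced here.

```python
def build_prompt(lexeme: str, relation: str, existing: set[str], max_items: int = 10) -> str:
    """Assemble a relation-specific request (illustrative wording only)."""
    lines = [
        f"Source word (Japanese): {lexeme}",
        f"Task: list up to {max_items} Japanese words in the {relation} relation to the source word.",
        "For each item give: target, relation_type, synonymity_strength (0-1), "
        "relation_type_strength (0-1), relation_explanation (in English), "
        "mutual_sense, mutual_domain.",
    ]
    if existing:
        # Already-linked targets are listed so the model does not repeat them.
        lines.append("Do not repeat these known targets: " + "、".join(sorted(existing)))
    return "\n".join(lines)


print(build_prompt("車", "MERONYM", {"エンジン", "タイヤ"}))
```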

This structured output format, exported as JSON, includes fields such as:

synonymity_strength: A numerical value indicating the strength of the synonym relationship between the source word and the target word, on a scale from 0 to 1. A value closer to 1 means the words are very similar or nearly identical in meaning, while a value closer to 0 indicates weaker synonymy.

relation_type: Specifies the type of lexical relationship between the source and target word. Possible values include:

SYNONYM: Words that have the same or very similar meaning.

HYPERNYM: A broader term that encompasses the source term (e.g., “vehicle” is a hypernym of “car”).

HYPONYM: A narrower term denoting a subtype of the source term (e.g., “car” is a hyponym of “vehicle”).

CO-HYPONYM: Words that share the same hypernym (e.g., “car” and “truck” are co-hyponyms because they are both hyponyms of “vehicle”).

MERONYM: A term denoting a part of the whole named by the source term (e.g., “wheel” is a meronym of “car”).

HOLONYM: A term denoting a whole that contains the source term as a part (e.g., “car” is a holonym of “wheel”).

relation_explanation: A brief explanation in English that describes how the source and target word are related. It provides additional context and understanding of the nature of their relationship.

mutual_domain: The shared domain or category to which both words belong. This helps identify the context or area of knowledge where the words are related (e.g., “medicine,” “technology,” “nature”).

By formalizing the output with OpenAI’s structured output feature [23], the system creates a uniform dataset that can be integrated directly into the graph structure, with each relation pre-annotated with semantic metadata. This structuring is critical for ensuring that the model’s outputs are both coherent and readily applicable to graph-based data storage.

2.4. Graph Construction

The core of the lexical network system lies in the graph construction process. Using the NetworkX Python library (version 3.5) [24], each extracted lexical relation is modelled as a directed edge, labelled with its relation type, between the source and target nodes of a directed multigraph, creating a scalable network structure. The script first attempts to load an existing graph file, which serves as a cumulative repository of previously extracted lexical data. This incremental loading approach enables continuity, as new lexemes and relationships can be added iteratively without recreating the network from scratch.

Using a system of iterative prompts, the LLM is directed to generate specific types of lexical relationships per relation type (e.g., synonymy, hypernymy, co-hyponymy, meronymy, holonymy). Each relation type adds a unique layer of semantic information to the network, allowing for detailed semantic analysis. Each extracted relationship is represented as an edge in the graph, connecting a source node (lexeme) with a target node (related lexeme). Edges carry attributes specifying:

relation_type;

synonymity_strength;

relation_type_strength;

relation_explanation;

mutual_sense;

mutual_domain.

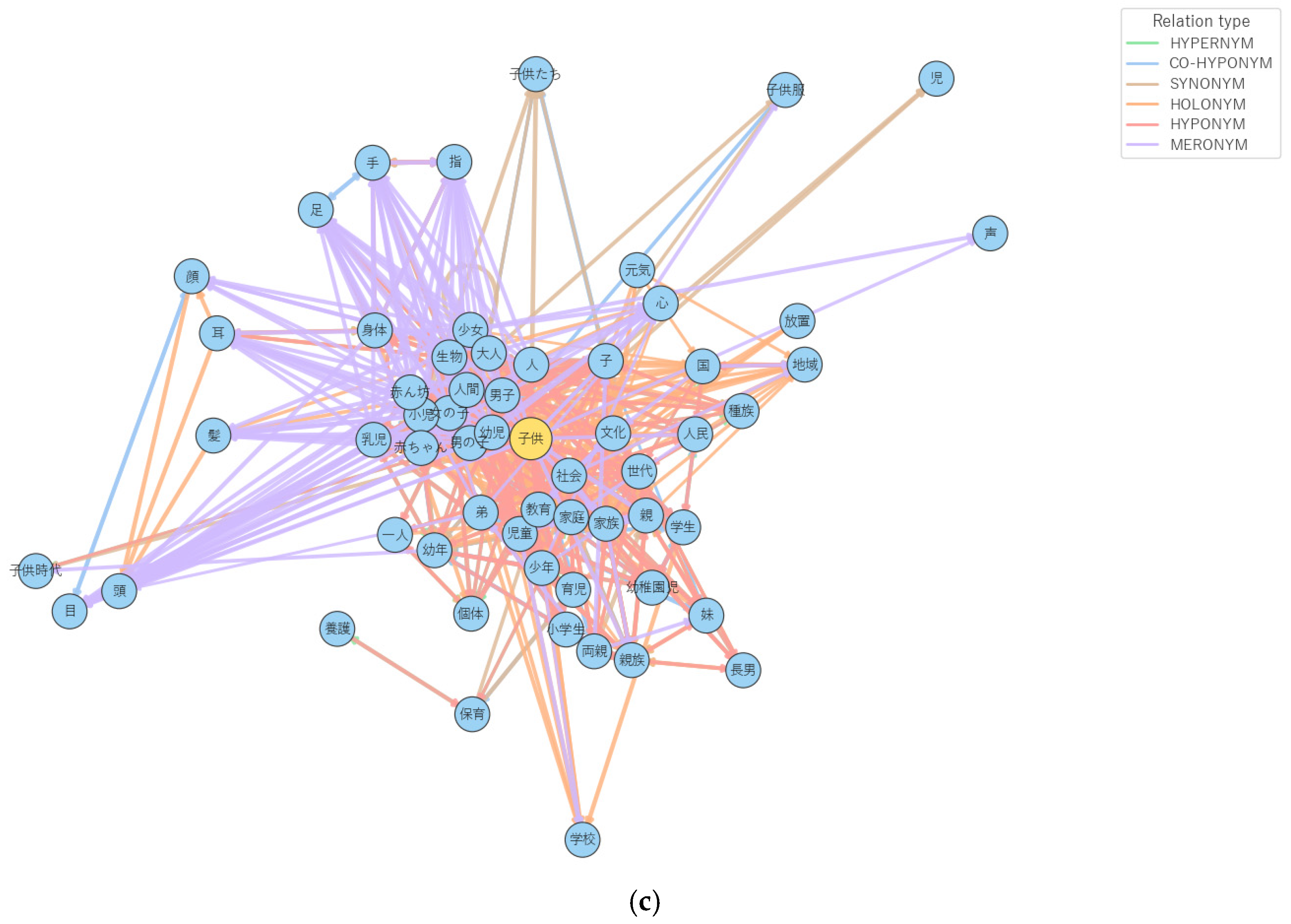

To provide a concrete example of the network’s multi-relational depth and complexity, Figure 2a–c visualizes the actual subgraph of 1-hop relations centered on the Japanese lexeme 子供 kodomo ‘child’. This data-driven example, showing the central node and its 45 direct neighbors, demonstrates how a single concept is embedded within a dense web of diverse semantic connections generated by our methodology.

An analysis of these layered visualizations reveals the network’s capacity to capture multifaceted world knowledge.

Figure 2a illustrates the structural backbone: the concept’s formal classification. We see clear HYPERNYM links to broader categories like 人間 ningen ‘human being’ and 人 hito ‘person’. Conversely, HYPONYM relations point to more specific terms like 男の子 otokonoko ‘boy’ and 乳児 nyūji ‘infant’. This hierarchical structure is complemented by compositional knowledge, with MERONYM relations connecting 子供 to its constituent parts, such as 手 te ‘hand’ and 足 ashi ‘foot’. This layer effectively forms the concept’s ontological skeleton.

The Associative Context is rendered in Figure 2b. This panel adds a layer of thematic richness. SYNONYM relations connect 子供 to semantically close terms, while the numerous CO-HYPONYM relations place it among its peers, i.e., other concepts that share a similar role or hypernym. These links to lexemes like 学生 gakusei ‘student’, 少年 shōnen ‘youth’, and 妹 imōto ‘younger sister’ enrich the central concept with social roles and relational context that go beyond strict “is-a” hierarchies.

The Holistic View, illustrated in Figure 2c, combines all layers, presenting a densely interconnected view. This integrated visualization is the most accurate representation of the output, showing how the different semantic facets are not separate but deeply intertwined. It is in this combined view that the most powerful insights emerge, as we see abstract and thematic connections to concepts like 社会 shakai ‘society’, 教育 kyōiku ‘education’, and 文化 bunka ‘culture’. This demonstrates that the LLM has generated not just a taxonomy but a rich knowledge graph that situates the concept within its broader functional and cultural context.

This layered example of the 子供 subgraph powerfully underscores our methodology’s ability to create a semantically rich, multi-faceted lexical resource where each node is a nexus of diverse and deeply integrated information.

To further illustrate the structure of the attributes of lexical relationships within the network, consider the following example of a synonym relationship between two Japanese lexemes, mono ‘thing’ and buppin ‘goods’, both of which refer to tangible items or products. Synonym relationships capture pairs of words with closely related meanings, often substitutable in specific contexts.

Example edge: mono ‘thing’—buppin ‘goods’

Source Lexeme: 物 mono ‘thing’

Target Lexeme: 物品 buppin ‘goods’

relation_type: “SYNONYM”

This attribute specifies the type of relationship between the two lexemes. “SYNONYM” indicates that mono ‘thing’ and buppin ‘goods’ share very similar meanings and can often be used interchangeably in contexts related to tangible items, goods, or products.

synonymity_strength: 0.9

The synonymity_strength attribute quantifies the degree of similarity between mono ‘thing’ and buppin ‘goods’ on a scale from 0 to 1. Here, a value of 0.9 suggests a very high level of synonymy, meaning these words are closely related in meaning and can substitute for each other in many contexts.

relation_type_strength: 0.9

The relation_type_strength attribute measures the confidence in the classification of this relationship as synonymity. A high score of 0.9 indicates strong confidence that this relationship is correctly classified as a synonym pair. This value suggests that the model has high certainty that the synonym classification is appropriate for these two lexemes.

relation_explanation: “Both words refer to tangible items or products, often used in commerce.”

The relation_explanation attribute provides a qualitative explanation of the relationship, giving context to the synonymity between mono ‘thing’ and buppin ‘goods’. In this example, it clarifies that both terms are commonly used to describe physical objects, particularly in contexts related to commerce. This explanation enhances the interpretability of the relationship and helps users understand why these two words are considered synonyms.

For symmetric relations (synonymy and co-hyponymy), the system automatically adds the reverse edge, and for hierarchical (hypernym ↔ hyponym) and part–whole (meronym ↔ holonym) relations, it adds the corresponding inverse relation. This reflects bidirectionality and maintains a comprehensive and navigable network structure.

Additionally, the script employs helper functions to check for pre-existing edges of the same type, ensuring that no duplicate relations are introduced. This edge-based representation, enriched with relationship metadata, enables highly structured querying and semantic exploration within the graph.
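The inverse-edge and duplicate checks can be sketched with a small directed multigraph whose parallel edges are keyed by relation type. The `LexicalMultiGraph` class is a hypothetical standard-library stand-in; with NetworkX the same pattern would use `MultiDiGraph.add_edge(u, v, key=relation, **attrs)` together with `has_edge(u, v, key=relation)`. Copying the attributes unchanged to the inverse edge is a simplification.

```python
from collections import defaultdict

# Inverse of each relation; SYNONYM and CO-HYPONYM are their own inverse (symmetric).
INVERSE = {
    "SYNONYM": "SYNONYM", "CO-HYPONYM": "CO-HYPONYM",
    "HYPERNYM": "HYPONYM", "HYPONYM": "HYPERNYM",
    "MERONYM": "HOLONYM", "HOLONYM": "MERONYM",
}


class LexicalMultiGraph:
    """Directed multigraph stored as adj[u][v][relation] -> attribute dict.

    Using the relation type as the edge key allows several relations between
    the same pair while keeping each (u, v, relation) unique.
    """

    def __init__(self):
        self.adj = defaultdict(lambda: defaultdict(dict))

    def has_edge(self, u, v, relation):
        # .get() avoids creating empty entries as a side effect of the lookup.
        return relation in self.adj.get(u, {}).get(v, {})

    def add_relation(self, u, v, relation, **attrs) -> bool:
        """Add u -relation-> v plus its inverse; return False if u -> v already existed."""
        if u == v or self.has_edge(u, v, relation):
            return False
        self.adj[u][v][relation] = attrs
        inverse = INVERSE[relation]
        if not self.has_edge(v, u, inverse):
            self.adj[v][u][inverse] = dict(attrs)
        return True

    def number_of_edges(self):
        return sum(len(rels) for nbrs in self.adj.values() for rels in nbrs.values())
```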

2.5. Mutual Domain and Sense Construction

The HAS_DOMAIN and MUTUAL_SENSE relations are derived from the mutual_domain and mutual_sense attributes of the synonym, hypernym, hyponym, co-hyponym, meronym, and holonym relations. They are designed to provide additional contextual layers within the lexical network, capturing shared meanings and categorical domains for each lexical relationship.

HAS_DOMAIN represents a categorical or thematic association that groups the source and target words into a shared semantic domain. This relation type is intended to capture broader, often abstract, connections that categorize words into general domains (such as nature, technology, or emotions), which can be useful for thematic or topical organization. The model is prompted to identify a broader category (domain) that both the source and target words would logically fall under.

The Mutual_Domain_explanation provides a brief rationale for why this particular domain is relevant, which aids in interpretability and could enhance downstream applications, such as semantic search or educational tools that rely on domain-level understanding.

MUTUAL_SENSE denotes the shared or overlapping sense between the source and target words, providing a finer level of meaning beyond the broader domain category. This is particularly useful for specifying how two lexemes relate semantically when they share attributes or fall within a similar conceptual space, but without being direct synonyms or hypernyms. The prompt requests the model to generate a shared meaning or concept that captures the common aspect between the source and target words. This mutual sense acts as a middle ground between purely lexical similarity (like synonyms) and broader categorical connections (like domain associations), clarifying the specific conceptual overlap.

Together, HAS_DOMAIN and MUTUAL_SENSE allow the network to represent layered associations. HAS_DOMAIN captures the broader, categorical grouping, while MUTUAL_SENSE refines this connection by highlighting more specific shared aspects of meaning. This layered approach adds interpretative depth, enhancing the network’s utility for nuanced language applications, including educational tools, semantic search, and translation aids.
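How HAS_DOMAIN edges are materialized from the `mutual_domain` attribute is not specified in detail above, so the sketch below shows one plausible reading, stated as an assumption: each normalized domain label becomes a node, both lexemes of a relation receive a HAS_DOMAIN edge to it, and the domain node receives the inverse DOMAIN_OF edge. MUTUAL_SENSE is left out for brevity, and 商品 shōhin ‘merchandise’ is a toy entry.

```python
def derive_domain_edges(relations):
    """Return a set of (node, node, label) edges built from `mutual_domain` values.

    Assumed scheme (illustrative): the normalized label becomes a node named
    "domain:<label>"; each lexeme of the relation gets HAS_DOMAIN -> domain and
    the domain node gets the inverse DOMAIN_OF -> lexeme. The set removes
    duplicates when several relations share a lexeme and a domain.
    """
    edges = set()
    for rel in relations:
        label = (rel.get("mutual_domain") or "").strip().lower()
        if not label:
            continue                      # no domain given: nothing to derive
        domain = f"domain:{label}"
        for lexeme in (rel["source"], rel["target"]):
            edges.add((lexeme, domain, "HAS_DOMAIN"))
            edges.add((domain, lexeme, "DOMAIN_OF"))
    return edges


toy = [{"source": "物", "target": "物品", "mutual_domain": "commerce"},
       {"source": "物品", "target": "商品", "mutual_domain": "Commerce "},
       {"source": "子供", "target": "人", "mutual_domain": ""}]
domain_edges = derive_domain_edges(toy)
```

Under this reading, HAS_DOMAIN and DOMAIN_OF always occur in equal numbers, since every edge of one type has a mirror of the other.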

2.6. Quality Control and Data Integrity

To maintain data quality, the system enforces several integrity checks, including the duplicate-edge checks and the per-(x, y, R) uniqueness constraints described above. In addition, after each lexeme is processed, the system saves the updated graph file as a backup, preserving incremental updates and ensuring the long-term integrity of the lexical network.
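Saving after every lexeme is safest when the write is atomic, so that an interruption cannot leave a corrupted checkpoint. A minimal sketch, assuming the graph is persisted with `pickle` (the file format used by the authors is not stated): the data are written to a temporary file in the target directory and then swapped in with `os.replace`. NetworkX 3.x removed `write_gpickle` in favour of using `pickle` directly, so the same helpers would apply to a NetworkX graph object.

```python
import os
import pickle
import tempfile


def save_graph_atomic(graph, path: str) -> None:
    """Write `graph` to `path` so that a crash never leaves a half-written file.

    The temporary file lives in the same directory, so os.replace is an atomic
    rename on the same filesystem.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as fh:
            pickle.dump(graph, fh, protocol=pickle.HIGHEST_PROTOCOL)
            fh.flush()
            os.fsync(fh.fileno())        # make sure the bytes reach the disk
        os.replace(tmp_path, path)
    except BaseException:
        os.unlink(tmp_path)              # discard the partial temporary file
        raise


def load_graph(path: str, default_factory=dict):
    """Resume from the last checkpoint, or start a new graph if none exists."""
    if os.path.exists(path):
        with open(path, "rb") as fh:
            return pickle.load(fh)
    return default_factory()
```

Only load pickle files you created yourself, since unpickling untrusted data can execute arbitrary code.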

2.7. Graph Enrichment and Lexical Attribute Integration

To enhance the graph’s linguistic richness, the system integrates supplemental attributes for each lexeme:

Frequency Data: Lexeme frequency from the VDRJ dataset is included to indicate common usage levels.

JLPT Proficiency Levels: Adding JLPT proficiency levels provides insights into the complexity and difficulty of each lexeme, which is particularly valuable for educational applications. The current version employs the pre-2010 JLPT scale (levels 4–1).

Romanized Transliteration: Through the PyKakasi library, romanized forms of each lexeme are added, improving accessibility and enabling use by a broader audience, including non-native speakers.

Centrality measures within the NetworkX framework, such as degree and weighted degree (using relation_type and synonymity_strength as weights), are calculated to identify nodes of high connectivity, which indicate lexemes with significant semantic overlap or influence across multiple relationships. By analyzing these central nodes, the system highlights critical lexical items that may serve as semantic anchors within the Japanese language network. An executable Jupyter notebook that reproduces the visualizations and queries is provided in the Supplementary Materials (File S1). The NetworkX graph object is provided in the Supplementary Materials (File S2).
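Degree and weighted degree over the multi-relational edges can be computed as follows. This sketch weights each edge by `synonymity_strength` only (an assumption; the text also mentions relation_type) and treats a missing strength as 0. In NetworkX, `G.degree(weight="synonymity_strength")` returns the weighted version directly, although it treats a missing attribute as 1.

```python
from collections import Counter


def degree_scores(edges, weight="synonymity_strength"):
    """Return (degree, weighted_degree) Counters over (source, target, attrs) edges.

    Both endpoints of every edge are credited, i.e. in-degree plus out-degree.
    The weighted score sums `weight` over incident edges; a missing value counts as 0.
    """
    degree, weighted = Counter(), Counter()
    for u, v, attrs in edges:
        w = float(attrs.get(weight, 0.0))
        for node in (u, v):
            degree[node] += 1
            weighted[node] += w
    return degree, weighted


toy = [("物", "物品", {"synonymity_strength": 0.9}),
       ("物品", "物", {"synonymity_strength": 0.9}),
       ("物", "商品", {"synonymity_strength": 0.6}),
       ("物", "概念", {})]
degree, weighted = degree_scores(toy)
print(degree.most_common(1))  # → [('物', 4)]
```

Plain degree rewards lexemes with many links of any kind, whereas the weighted score favours lexemes whose links are semantically close, so the two rankings can differ.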

3. Results

3.1. Initial Lexical Network Construction

Using the initial single-relation methodology, which focused predominantly on synonym (or antonym) relationships, the lexical network was constructed with a foundational set of 60,752 nodes and 127,960 edges. This initial network provided a robust starting point for capturing basic associative relationships among a large group of Japanese nouns. However, the focus on limited relation types restricted the network’s capacity to represent more nuanced semantic relationships, such as hierarchical and part–whole relations, which are essential for a comprehensive lexical understanding. Applying the new multi-relational methodology, which incorporates these additional relation types, led to a significant expansion of the lexical network. At the time of writing, the multi-relational network comprises 155,233 nodes and 708,782 edges derived from the same initial set of 11,055 lexemes, representing a 2.6-fold increase in nodes and a 4.9-fold increase in edges compared to the initial structure. This substantial growth underscores the system’s enhanced capability to capture complex semantic relationships, broadening the scope of lexical associations within the network.

3.2. Comparative Analysis of Network Structure

The expanded network increased not only in size but also in depth, as evidenced by the inclusion of multiple relationships that enhance lexical specificity, as represented in Table 1. For example, hypernyms and hyponyms provide hierarchical layers, allowing for a clearer representation of lexical generality and specificity, while meronyms and holonyms capture part-to-whole relationships. These additional layers support a more intricate mapping of Japanese lexemes, offering potential applications in NLP tasks that require fine-grained semantic differentiation.

Table 2 provides a detailed breakdown of the distribution of relation types within the lexical network, highlighting the prevalence and role of each relation in shaping the network’s semantic structure.

The distribution of relation types within the lexical network reveals insightful patterns about the semantic structure captured in the graph. The HYPONYM and HYPERNYM relation types, each at 17.41% (identical because every edge created for one of these inverse relations is mirrored by an edge of the other), represent the most frequent connections, suggesting a strong hierarchical organization in which specific terms are associated with broader categories or subcategories. This high frequency indicates the network’s robustness in capturing classification layers.

CO-HYPONYM relations, at 17.30%, closely follow, highlighting the prevalence of terms within shared categories that are neither direct superordinates nor subordinates of each other. This reflects the associative complexity of language, where items like 猫 neko ‘cat’ and 犬 inu ‘dog’ share a common category without one being a subtype of the other.

HAS_DOMAIN and DOMAIN_OF relations, both accounting for 11.18%, provide significant thematic or categorical connections between lexemes, linking words within shared conceptual domains, such as “medicine” and “hospital.” These relations are essential for organizing words by broader themes.

SYNONYM relations (9.30%) and HOLONYM (8.93%) contribute to the network’s depth, establishing connections based on similarity or part–whole relationships, respectively. The presence of MERONYM relations (7.28%) further enriches the structure by detailing part-to-whole associations.
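Percentages like those in Table 2 are a simple count over edge labels. The helper below is a hypothetical illustration; its toy labels show why mirrored inverse relations necessarily receive identical shares.

```python
from collections import Counter


def relation_distribution(relation_types):
    """Map each relation label to its share of all edges, in percent (2 decimals)."""
    counts = Counter(relation_types)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {rel: round(100 * n / total, 2) for rel, n in counts.most_common()}


# Toy labels: two HYPERNYM edges with their mirrored HYPONYM inverses, plus one SYNONYM edge.
labels = ["HYPERNYM", "HYPONYM", "HYPERNYM", "HYPONYM", "SYNONYM"]
print(relation_distribution(labels))  # → {'HYPERNYM': 40.0, 'HYPONYM': 40.0, 'SYNONYM': 20.0}
```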

The expanded lexical network integrates multiple types of semantic relationships, each enriched with quantitative attributes such as synonymity_strength and relation_type_strength. The synonymity strength measures the semantic similarity between source and target lexemes, while the relation type strength reflects the confidence in the classification of each relationship type.

Table 3 provides a statistical overview of the different relation types in the multi-relational lexical network, detailing the synonymity strength and the relation type strength for each relation. Synonymity strength is highest in SYNONYM relations (mean = 0.74), reflecting the close semantic similarity expected within synonymous pairs. In contrast, HYPERNYM relations exhibit the lowest mean synonymity strength (0.55), which aligns with their broader categorical nature compared to the specific semantic overlap in synonyms.

Relation type strength is relatively high across all relation types, with HAS_DOMAIN and DOMAIN_OF relations scoring highest (mean = 0.77). This suggests strong model confidence in these domain-based classifications, likely because thematic domains have comparatively clear semantic boundaries.

The standard deviation values for synonymity strength are generally higher in part–whole relations like HOLONYM and MERONYM, indicating more variability in semantic similarity across part-to-whole associations. This could be attributed to the inherent complexity and diversity within part–whole relationships, where the degree of connection can vary significantly depending on the context.
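Table 3 reports, for each relation type, the mean and standard deviation of the two edge attributes. Below is a minimal sketch of that aggregation. It assumes each edge is a record carrying the `synonymity_strength` and `relation_type_strength` attributes named above; the `RelationEdge` class itself is hypothetical. The text does not state whether Table 3 uses the sample or the population standard deviation, so the sketch uses the sample version (`statistics.stdev`).

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class RelationEdge:
    source: str
    target: str
    relation: str
    synonymity_strength: float     # assumed to lie in [0, 1]
    relation_type_strength: float  # assumed to lie in [0, 1]


def strength_summary(edges: List[RelationEdge]) -> Dict[str, Tuple[float, float, float, float]]:
    """Per relation type: (mean, SD) of synonymity_strength, then (mean, SD) of relation_type_strength."""
    grouped: Dict[str, List[RelationEdge]] = {}
    for edge in edges:
        grouped.setdefault(edge.relation, []).append(edge)

    summary = {}
    for relation, group in grouped.items():
        syn = [e.synonymity_strength for e in group]
        rel = [e.relation_type_strength for e in group]
        # statistics.stdev needs at least two values; report 0.0 for singleton groups.
        syn_sd = stdev(syn) if len(syn) > 1 else 0.0
        rel_sd = stdev(rel) if len(rel) > 1 else 0.0
        summary[relation] = (mean(syn), syn_sd, mean(rel), rel_sd)
    return summary
```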

3.3. Network Structure and Centrality Analysis

A structural analysis of the expanded network reveals key characteristics of its topology and of the organization of the Japanese lexicon it represents. The graph is very sparse, with a density of 0.000016, which is typical of large real-world networks.
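For a directed graph with n nodes and m edges, treated as simple (no self-loops, no parallel edges), density D and the average total degree ⟨k⟩ (in-degree plus out-degree) are related by the standard identity below. It makes explicit why a modest average degree spread over a very large vocabulary yields such a low density. Whether the reported density and the average degree given in Section 4.1.2 were computed under exactly these conventions is an assumption.

```latex
D = \frac{m}{n(n-1)}, \qquad
\langle k \rangle = \frac{2m}{n}
\quad\Longrightarrow\quad
D = \frac{\langle k \rangle}{2(n-1)}
```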

Connectivity is a key finding. Although the graph has 96,935 weakly connected components, the majority of the lexicon is unified within a single giant component. This largest component contains 167,372 nodes, representing 63% of all lexical items in the graph, which indicates a high degree of semantic interconnectedness across a substantial portion of the Japanese vocabulary.
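The 63% figure is the size of the largest weakly connected component divided by the total node count. The paper does not name the tooling used. As one standard option, the Python sketch below computes weakly connected component sizes with a union-find structure that ignores edge direction. The `weak_components` helper and the toy data are illustrative only.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def weak_components(nodes: Iterable[str], edges: Iterable[Tuple[str, str]]) -> List[int]:
    """Return weakly connected component sizes, largest first, using union-find."""
    parent: Dict[str, str] = {node: node for node in nodes}

    def find(x: str) -> str:
        # Path halving keeps the union-find trees shallow.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for source, target in edges:
        # Edge direction is ignored: both endpoints simply join one set.
        parent.setdefault(source, source)
        parent.setdefault(target, target)
        root_s, root_t = find(source), find(target)
        if root_s != root_t:
            parent[root_s] = root_t

    sizes = Counter(find(node) for node in parent)
    return sorted(sizes.values(), reverse=True)


sizes = weak_components(["社会", "法律", "家族", "猫", "犬", "孤立"],
                        [("法律", "社会"), ("家族", "社会"), ("猫", "犬")])
giant_share = sizes[0] / sum(sizes)  # analogue of the 63% figure
```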

To identify the most influential concepts in the network, a degree centrality analysis was performed. The nodes with the highest number of connections are overwhelmingly abstract concepts that organize large areas of knowledge. The top 5 most connected nodes are:

社会 shakai ‘society’: 4874 connections;

文化 bunka ‘culture’: 4864 connections;

生物 seibutsu ‘living things/biology’: 3307 connections;

教育 kyōiku ‘education’: 3179 connections;

経済 keizai ‘economy’: 2742 connections.
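The counts above are raw degrees. Below is a minimal ranking sketch. It assumes that each directed edge contributes one connection to both of its endpoints (in-degree plus out-degree) and that parallel edges between the same pair are counted separately; the paper does not state either convention. Normalized degree centrality (degree divided by n − 1) would produce the same ranking.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def top_degree(edges: Iterable[Tuple[str, str]], k: int = 5) -> List[Tuple[str, int]]:
    """Rank nodes by total degree; each directed edge adds 1 to both endpoints."""
    degree: Counter = Counter()
    for source, target in edges:
        degree[source] += 1
        degree[target] += 1
    return degree.most_common(k)
```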

The prominence of these abstract concepts provides a unique insight into the thematic core of the generated lexicon, a point we will explore further in the Discussion.

3.4. Comparison of Lexical Relations in Graph and WordNet

The analysis compared lexical relations derived from the constructed graph against relations identified in WordNet for Japanese lexemes, accessed through the NLTK library and the Open Multilingual WordNet [25]. Each source–target lexeme pair was evaluated to identify relations such as HYPERNYM, HYPONYM, HOLONYM, MERONYM, SYNONYM, and CO-HYPONYM, and the graph's relation type was then checked against the relation derived from WordNet.
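The paper does not spell out how a WordNet relation label was derived for each pair. The sketch below shows one plausible rule set. In NLTK, the synsets of a Japanese lemma can be obtained with `wn.synsets(lemma, lang="jpn")` (this requires the Open Multilingual WordNet data), and their links with `Synset.hypernyms()`, `Synset.part_meronyms()`, or `Synset.part_holonyms()`. To run without the corpus, the sketch replaces these calls with toy dictionaries whose synset IDs and links are illustrative, not actual WordNet structure. The labelling rules, the restriction to direct hypernyms, and the direction convention (HYPERNYM meaning the target is a hypernym of the source, as in Section 4.3) are all assumptions. Part–whole relations are omitted for brevity.

```python
from typing import Dict, Optional, Set

# Toy stand-in for WordNet; synset IDs and links are illustrative only.
LEMMA_SYNSETS: Dict[str, Set[str]] = {
    "乗り物": {"vehicle.n.01"},
    "車": {"car.n.01"},
    "自動車": {"car.n.01"},
    "バス": {"bus.n.01"},
}
HYPERNYMS: Dict[str, Set[str]] = {
    "car.n.01": {"vehicle.n.01"},
    "bus.n.01": {"vehicle.n.01"},
}


def wordnet_relation(source: str, target: str,
                     lemma_synsets: Dict[str, Set[str]] = LEMMA_SYNSETS,
                     hypernyms: Dict[str, Set[str]] = HYPERNYMS) -> Optional[str]:
    """Label the pair from the source's point of view; None if uncovered or unrelated."""
    src = lemma_synsets.get(source, set())
    tgt = lemma_synsets.get(target, set())
    if not src or not tgt:
        return None  # at least one lemma is missing from the resource
    if src & tgt:
        return "SYNONYM"  # the lemmas share a synset
    src_parents = set().union(*(hypernyms.get(s, set()) for s in src))
    tgt_parents = set().union(*(hypernyms.get(t, set()) for t in tgt))
    if tgt & src_parents:
        return "HYPERNYM"  # target is a direct hypernym of source
    if src & tgt_parents:
        return "HYPONYM"  # target is a direct hyponym of source
    if src_parents & tgt_parents:
        return "CO-HYPONYM"  # both share a direct hypernym
    return None
```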

Table 4 and Table 5 present a comparison between graph-derived relations and WordNet relations for selected source–target pairs, including cases where a pair has multiple relation types in the graph, along with an indication of whether the two resources match. The comparison reveals several key insights into the strengths and limitations of the lexical graph and WordNet as resources for semantic relationships.

3.4.1. Matching Accuracy and Coverage

The exact match rate of 37.96% indicates that most graph-derived relations (about 62%) do not align directly with WordNet relations. With soft matching, however, the alignment improves to 58.70%, suggesting that many source–target pairs share multiple semantic dimensions.

Soft matching allows for better alignment by accounting for lexeme pairs with overlapping or multi-faceted relationships (e.g., HYPONYM and CO-HYPONYM), which are common in nuanced or domain-specific lexicons.
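The text does not give a formal definition of soft matching. One plausible reading is used in the hypothetical sketch below, which evaluates each source–target pair covered by both resources. An exact match requires the graph's relation set for the pair to consist of exactly the WordNet relation. A soft match only requires the WordNet relation to appear among the graph's relation types. The pair identifiers and labels are toy data.

```python
from typing import Dict, FrozenSet, Tuple

Pair = Tuple[str, str]


def match_rates(graph_rels: Dict[Pair, FrozenSet[str]],
                wordnet_rel: Dict[Pair, str]) -> Tuple[float, float]:
    """Return (exact, soft) match rates over pairs present in both resources."""
    pairs = [pair for pair in graph_rels if pair in wordnet_rel]
    if not pairs:
        return 0.0, 0.0
    # Exact: the graph assigns exactly one relation and it equals WordNet's.
    exact = sum(graph_rels[p] == frozenset({wordnet_rel[p]}) for p in pairs)
    # Soft: WordNet's relation is among the graph's relation types for the pair.
    soft = sum(wordnet_rel[p] in graph_rels[p] for p in pairs)
    return exact / len(pairs), soft / len(pairs)


graph = {
    ("w1", "w2"): frozenset({"HYPERNYM", "CO-HYPONYM"}),
    ("w3", "w4"): frozenset({"HYPERNYM"}),
    ("w5", "w6"): frozenset({"CO-HYPONYM"}),
    ("w7", "w8"): frozenset({"MERONYM"}),
    ("w9", "w10"): frozenset({"SYNONYM"}),  # absent from WordNet, so excluded
}
wordnet = {("w1", "w2"): "CO-HYPONYM", ("w3", "w4"): "HYPERNYM",
           ("w5", "w6"): "CO-HYPONYM", ("w7", "w8"): "HOLONYM"}
print(match_rates(graph, wordnet))  # (0.5, 0.75)
```

Under this reading, the multi-relation pair ("w1", "w2") is what lifts the soft rate above the exact rate, mirroring the gap between 37.96% and 58.70%.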

The presence of multiple relation types for the same lexeme pairs, such as アパート apaato ‘apartment’ with コンドミニアム kondominiamu ‘condominium’ or タウンハウス taunhausu ‘townhouse’, illustrates the complexity of lexical semantics. These cases often show both hierarchical (e.g., HYPERNYM, HYPONYM) and associative (CO-HYPONYM) connections, reflecting real-world linguistic nuances where words may belong to overlapping categories. Such multi-relation pairs underscore the importance of context in semantic analysis, as lexemes often have context-dependent relations that cannot be fully captured by a single relation type.

3.4.2. Discrepancies Between Graph and WordNet Relations

For pairs where the Graph and WordNet relations did not align, some discrepancies can be attributed to the inherent differences in data sources. WordNet may lack certain domain-specific terms or interpret hierarchical relationships differently, particularly for lexicons that include modern or specialized terms (e.g., IT terms, media). Additionally, Graph-derived relations rely on automated extraction techniques that may capture broader or alternative associations not encoded in WordNet.

3.4.3. Confusion Matrix Analysis and Relation-Type Statistics

The confusion matrix compares graph-derived relations with WordNet relations. Each cell counts the lexeme pairs that have a given combination of graph relation type and WordNet relation type. Diagonal cells therefore reflect exact matches, while off-diagonal cells indicate mismatches.

The confusion matrix heatmap (Figure 3) depicts the frequency distribution of relation types between the graph and WordNet, with deeper colors indicating higher counts. For each unique relation type, precision and recall were calculated to assess the accuracy of the graph-derived relations relative to WordNet. Precision is the proportion of true positives (relations correctly matched between the graph and WordNet) among all relations the graph assigns to a given type, while recall is the proportion of true positives among all instances of that relation type in WordNet.
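Precision and recall as defined above can be read directly off the confusion matrix. Precision divides the diagonal cell by the total for that graph relation type, and recall divides it by the total for that WordNet relation type. The sketch below assumes the input is a list of (graph relation, WordNet relation) pairs for lexeme pairs covered by both resources, so recall is computed over those compared pairs only; this scoping is an assumption.

```python
from collections import Counter
from typing import Dict, List, Tuple


def confusion_and_scores(pairs: List[Tuple[str, str]]) -> Tuple[Counter, Dict[str, Tuple[float, float]]]:
    """pairs holds (graph_relation, wordnet_relation); returns cell counts and per-type (precision, recall)."""
    confusion = Counter(pairs)                # (graph_type, wordnet_type) -> count
    predicted = Counter(g for g, _ in pairs)  # totals per graph relation type
    actual = Counter(w for _, w in pairs)     # totals per WordNet relation type
    scores = {}
    for rel in set(predicted) | set(actual):
        tp = confusion[(rel, rel)]  # diagonal cell
        precision = tp / predicted[rel] if predicted[rel] else 0.0
        recall = tp / actual[rel] if actual[rel] else 0.0
        scores[rel] = (precision, recall)
    return confusion, scores
```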

3.4.4. Distribution of WordNet Relations for Each Graph Relation Type

A complementary distribution analysis examined how each graph-derived relation type is distributed across WordNet relations. Its aim was to identify patterns in which a specific graph relation partially aligns with multiple WordNet relation types, indicating the nuanced overlap between hierarchical, associative, and part–whole relations in natural language.

Table 6 provides a comparative view of precision and recall for each relation type. Additionally, the WordNet Distribution column shows the breakdown of WordNet relation types that align with each graph relation, illustrating how often each WordNet relation type matches the graph’s predictions.
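The WordNet Distribution column can be read as the confusion matrix normalized per graph relation type. The minimal sketch below assumes the same list of (graph relation, WordNet relation) pairs and reports percentages; whether Table 6 uses percentages or counts is an assumption.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def wordnet_distribution(pairs: List[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """For each graph relation type, the percentage breakdown of the WordNet relations it meets."""
    rows: Dict[str, Counter] = defaultdict(Counter)
    for graph_rel, wordnet_rel in pairs:
        rows[graph_rel][wordnet_rel] += 1
    return {
        graph_rel: {wn_rel: 100 * n / sum(row.values()) for wn_rel, n in row.items()}
        for graph_rel, row in rows.items()
    }
```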

SYNONYM and HYPONYM relations have relatively high precision and recall, indicating strong alignment between the Graph and WordNet.

HOLONYM relations show low precision (7.92%) but moderate recall (32%). This suggests that the graph recovers about a third of WordNet's HOLONYM pairs, while many pairs it labels HOLONYM correspond to other relation types, such as HYPERNYM and CO-HYPONYM.

The WordNet Distribution reveals instances where relation types blend, as seen with CO-HYPONYM and HYPERNYM, which frequently align with multiple relation types in WordNet, reflecting nuanced overlaps in semantic roles.

This combined analysis helps to clarify which relations align most consistently and where further refinement may be needed for enhanced semantic accuracy in the graph.

High precision and recall values for certain relation types suggest that the graph accurately captures these relations compared to WordNet. For example, SYNONYM relations often show high precision, indicating that the graph effectively distinguishes synonymy with minimal false positives.

Lower precision or recall for some relation types, such as MERONYM and HOLONYM, reflects the complexity of part–whole relationships, which may require more nuanced criteria for accurate classification.

3.4.5. Implications for NLP Applications

The mixed alignment between graph and WordNet relations suggests that integrating multiple resources can enrich lexical networks and improve their applicability for NLP tasks. Systems that can incorporate both hierarchical (WordNet) and associative or domain-specific relations (graph) are likely to achieve greater accuracy in semantic interpretation.

The findings support the use of a hybrid approach for lexical networks, combining manually curated resources like WordNet with dynamically generated relations to capture a wider range of semantics in language.

The high incidence of multiple relations highlights the need for improved relation disambiguation methods in computational lexicography. Further research could focus on defining hierarchical rules to prioritize or layer relations based on context, which may help refine the accuracy of lexical relations in language models.

Future work might explore machine learning approaches to classify and weight relations, leveraging metrics such as relation strength and synonymity to create more sophisticated and context-aware lexical networks.

4. Discussion

Our findings contribute to the ongoing conversation about resource creation in computational linguistics. The methodology presented here exemplifies a trade-off: it substitutes the intensive labor of human experts and manual data curation with computational resources in the form of LLM API calls. This study demonstrates that this trade-off is highly effective for achieving scalability and speed. While not “resource-free,” this approach democratizes the creation of foundational linguistic tools. It makes the development of a comprehensive lexical network—a task that once took a decade—feasible within a much shorter timeframe and adaptable to other languages without requiring a new team of linguistic experts for each one. This re-frames the challenge of resource development from one of finding and funding human expertise to one of effectively prompting and managing computational models.

4.1. Structural Insights and Lexical Organization

The structural properties of the network shed light on the organizational principles of the Japanese lexicon as captured by a large language model. The analysis reveals an emergent ontology that mirrors the complex, hierarchical, and associative nature of human language, providing insights that go well beyond simple node and edge counts.

4.1.1. The Giant Component as a Model of Lexical Cohesion

A primary finding is the consolidation of 63% of the vocabulary into a single, massive, weakly connected component. The existence of this “giant component” is highly significant: it shows that most of the Japanese lexical items in our graph are reachable from one another when edge direction is ignored. This cohesive structure suggests that the network is not a mere collection of disconnected word lists but a holistic and integrated model of the lexicon. It implies a high degree of lexical cohesion, where concepts from disparate domains, from everyday objects to scientific terminology, are linked through chains of semantic relationships. This property is crucial for applications that rely on measuring semantic distance or traversing conceptual pathways, because paths can be found between a vast and diverse range of lexemes.
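Measuring semantic distance in the giant component can be as simple as a breadth-first search over the undirected view of the graph, with distance defined as the number of relation hops. The sketch below is a hypothetical illustration, not part of the authors' toolkit. Weighting hops by relation type or strength would be a natural refinement.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Set, Tuple


def semantic_path(edges: Iterable[Tuple[str, str]], start: str, goal: str) -> Optional[List[str]]:
    """Fewest-hop path between two lexemes, ignoring edge direction; None if unreachable."""
    neighbours: Dict[str, Set[str]] = {}
    for source, target in edges:
        neighbours.setdefault(source, set()).add(target)
        neighbours.setdefault(target, set()).add(source)

    previous: Dict[str, Optional[str]] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through the predecessors to rebuild the path.
            path: List[str] = []
            current: Optional[str] = node
            while current is not None:
                path.append(current)
                current = previous[current]
            return path[::-1]
        for nxt in neighbours.get(node, ()):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None
```

The hop count is `len(path) - 1`; inside the giant component, the search is guaranteed to return a path.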

4.1.2. Network Topology: A Core–Periphery Lexical Structure

The network’s topology, characterized by extreme sparsity (density ≈ 0.000016) and a large gap between the average degree (8.57) and the median degree (1.0), is consistent with a core–periphery structure with a heavy-tailed, possibly scale-free, degree distribution. In a random graph with the same average degree, the median degree would lie close to the mean; the observed gap instead indicates a highly organized network. This structure suggests that the lexicon operates on a principle of efficiency and cognitive economy:

The Periphery: The vast majority of nodes (the periphery) have very few connections. These represent specialized, domain-specific, or less frequent words that have a narrow semantic scope.

The Core: A small but critical number of nodes (the core) are exceptionally well-connected, acting as hubs. These are not random words but, as our centrality analysis shows, are foundational concepts.
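The mean-versus-median gap can be checked directly from a degree sequence. In an Erdős–Rényi random graph, degrees are approximately Poisson distributed, so the median lies close to the mean. A mean of 8.57 against a median of 1 instead points to a few hubs holding a large share of the connections. The hypothetical `degree_profile` helper below reports both statistics, plus the share of edge endpoints held by the top nodes, on a toy degree sequence.

```python
from statistics import mean, median
from typing import Dict, List


def degree_profile(degrees: List[int], top_fraction: float = 0.01) -> Dict[str, float]:
    """Mean, median, and the share of all edge endpoints held by the top `top_fraction` of nodes."""
    ranked = sorted(degrees, reverse=True)
    top_n = max(1, int(len(ranked) * top_fraction))
    total = sum(ranked)
    return {
        "mean": mean(ranked),
        "median": median(ranked),
        "top_share": sum(ranked[:top_n]) / total if total else 0.0,
    }


# Toy degree sequence: one hub and many single-link nodes.
print(degree_profile([50, 1, 1, 1, 1, 1, 1, 1, 1, 2], top_fraction=0.1))
```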

This core–periphery model aligns with theories of the mental lexicon, where a stable core of general, high-frequency vocabulary provides the structural scaffolding for a much larger, dynamic periphery of specialized terms. Our work provides large-scale empirical evidence consistent with this structure, generated automatically from language data.

4.1.3. Centrality Analysis: Uncovering the Conceptual Backbone

Perhaps the most compelling insight comes from the degree centrality analysis. The emergence of abstract, high-level concepts such as 社会 shakai ‘society’, 文化 bunka ‘culture’, 生物 seibutsu ‘living things’, and 教育 kyōiku ‘education’ as the most central nodes is a notable result. It suggests that the LLM, in processing its training data, has learned to model the conceptual superstructure of human knowledge.

These central nodes function as semantic hubs or conceptual anchors. Their centrality appears to stem less from sheer frequency than from their thematic role in organizing vast downstream domains of related vocabulary. For example, “society” serves as an anchor connecting thousands of nodes related to law, politics, family, communication, and social structures. The identification of this conceptual backbone is a key contribution, as it provides an empirical map of the thematic centers of gravity within the Japanese language. This emergent, data-driven ontology is more dynamic and potentially more representative of modern language use than a manually curated taxonomy, making it a valuable resource for tasks like topic modeling, document summarization, and knowledge-graph-based reasoning. The rich, annotated data, including relation_explanation attributes, further supports this, offering explainable pathways through this complex conceptual space.

4.2. Multiple Relationship Types for the Same Source–Target Pair

Although the model was prompted to provide a single, mutually exclusive relation for each lexeme pair, it frequently deviated from this constraint. Analysis of the 708,782 extracted relations reveals that 155,233 unique source–target pairs are characterized by multiple relation types. This phenomenon is not interpreted as a model failure but rather as a reflection of the intrinsic nature of lexical semantics. In natural language, the boundaries between relation types are often fluid and context-dependent, rendering any single, discrete categorization artificially limiting. The LLM’s behavior is a direct consequence of its training on vast, unstructured text corpora where such semantic overlaps are ubiquitous. The model’s generative objective, which prioritizes informational completeness, likely compels it to report all valid relationships it identifies, even when instructed to select only one. Thus, the presence of multiple relations underscores the model’s capacity to capture the nuanced, multifaceted nature of word associations as they appear in real-world language use.
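Counting pairs with multiple relation types amounts to grouping relation triples by source–target pair. The minimal sketch below assumes that pairs are treated as ordered; the text does not say whether (a, b) and (b, a) are merged. The helpers and toy triples are hypothetical.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, Iterable, Set, Tuple

Pair = Tuple[str, str]


def relations_per_pair(edges: Iterable[Tuple[str, str, str]]) -> Dict[Pair, FrozenSet[str]]:
    """Group (source, target, relation) triples by ordered source-target pair."""
    grouped: Dict[Pair, Set[str]] = defaultdict(set)
    for source, target, relation in edges:
        grouped[(source, target)].add(relation)
    return {pair: frozenset(relations) for pair, relations in grouped.items()}


def count_multi_relation_pairs(edges: Iterable[Tuple[str, str, str]]) -> int:
    """Number of distinct pairs that carry more than one relation type."""
    return sum(len(relations) > 1 for relations in relations_per_pair(edges).values())
```

Duplicate triples collapse into a single relation type, so only genuinely distinct labels make a pair multi-relational.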

4.3. Examples of Pairs with Multiple Relations

The following examples illustrate source–target pairs in the network that have more than one semantic relationship:

Number of Relations: 2

Relation Types: HYPERNYM, CO-HYPONYM

This pair highlights how “360-degree camera” is considered a specific type of “camera” (HYPERNYM), while also sharing categorical similarities with other types of cameras (CO-HYPONYM).

Number of Relations: 2

Relation Types: HYPERNYM, CO-HYPONYM

Similarly, “360-degree camera” is viewed as a specific form of “digital camera,” establishing both hierarchical and categorical relationships.

Number of Relations: 2

Relation Types: HYPERNYM, CO-HYPONYM

This example highlights “commercial” as a specific type of “video” (HYPERNYM) and as sharing the broader category of visual media with it (CO-HYPONYM).

Number of Relations: 2

Relation Types: HYPERNYM, CO-HYPONYM

“DNS server” and “host” share a hierarchical relationship where “host” is a broader category. They also belong to the same domain of network infrastructure, forming a CO-HYPONYM relation.

4.4. Discussion of Multiple Relations

A significant finding of this study is the high prevalence of source–target pairs linked by multiple, distinct semantic relations. The occurrence of these multi-relational links, found across 155,233 unique pairs, is not an anomaly but a core feature that enriches the network’s semantic depth and offers valuable insights into the complexity of language. For instance, a lexeme pair can exhibit both hierarchical (HYPERNYM) and associative (CO-HYPONYM) connections, reflecting how language organizes concepts into both formal taxonomies and fluid, associative clusters. From an NLP perspective, this layered semantic information is highly valuable; understanding that “DNS server” and “host” share both hierarchical and categorical ties can improve the accuracy of classification and disambiguation tasks. However, this semantic richness also introduces challenges: multiple parallel edges between the same lexemes increase the complexity of network traversal and can reduce computational efficiency in real-time applications. This behavior stems not from a failure of the model, but from its success in mirroring the natural complexity of language, where strict relational exclusivity is often an artificial constraint. While the LLM was instructed to provide a single relation type, its tendency to return multiple valid connections reflects the intertwined and multifaceted nature of lexical semantics. Consequently, applications requiring a single canonical relation may necessitate additional post-processing or context-aware disambiguation steps. This observation opens promising avenues for future research into semantic role labeling, automated relation disambiguation, and the construction of more nuanced, domain-specific language models.
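One simple form of post-processing is to keep, for each pair, the relation with the highest `relation_type_strength`. The sketch below assumes that this attribute is stored on every edge. The helper, the tie-breaking rule (the first relation seen wins), and the strength values are hypothetical. A context-aware disambiguator would be a more refined alternative.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str, str, float]  # (source, target, relation, relation_type_strength)


def canonical_relations(edges: List[Edge]) -> Dict[Tuple[str, str], str]:
    """Keep, for each ordered pair, the relation with the highest relation_type_strength."""
    best: Dict[Tuple[str, str], Tuple[float, str]] = {}
    for source, target, relation, strength in edges:
        key = (source, target)
        # Strictly greater: on ties, the first relation encountered is kept.
        if key not in best or strength > best[key][0]:
            best[key] = (strength, relation)
    return {key: relation for key, (_, relation) in best.items()}
```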

4.5. Interpreting the Alignment with WordNet: A Complementary Resource

The comparative analysis with WordNet yielded an exact match rate of 37.96% and a soft match rate of 58.70%. While these figures may seem moderate, they do not indicate a failure of our methodology. Instead, we argue that this level of alignment is a significant finding in itself, revealing the fundamental differences between a formally curated ontology and a dynamic, data-driven lexical network. The discrepancy is not a sign of weakness, but a reflection of the complementary strengths of our LLM-generated graph.

Several key factors explain this moderate alignment and highlight our network’s unique value. Firstly, these systems have different design philosophies: WordNet is an expert-curated, top-down resource. It is designed to be a clean, formal ontology with strict, mutually exclusive relationships based on lexicographical principles. In contrast, our network is a bottom-up, emergent structure derived from the statistical patterns in the vast text data on which the LLM was trained. It captures how words are actually used in context, including their associative, thematic, and sometimes ambiguous connections, which may not fit neatly into WordNet’s rigid structure.

The significant increase from an exact match (37.96%) to a soft match (58.70%) is arguably the most important result of the comparison. This jump of 20.74 percentage points shows that in a large number of cases where the relation type did not match exactly, our graph still identified a valid semantic relationship between the lexemes. For example, a pair identified as CO-HYPONYM in our graph might be a HYPONYM in WordNet. This reflects the inherent complexity and context-dependency of language, where a single word pair can plausibly have multiple relationship types (as discussed in Section 4.2). Our network captures this semantic multiplicity, whereas WordNet often encodes a single, canonical relationship.

Large language models are trained on large volumes of contemporary text, including web content. Consequently, our network is likely to include modern, domain-specific, or colloquial terms that are absent from the version of Japanese WordNet used for our comparison. Discrepancies naturally arise when WordNet lacks an entry for a term or models its relationships differently due to its older or more formal scope.

Our goal was not to perfectly replicate WordNet, but to develop a scalable method for creating a new type of lexical resource. The results show that this goal was achieved. The generated graph should be viewed as a resource that augments, rather than replaces, traditional ontologies. WordNet provides a stable, highly structured hierarchical backbone, while our network offers a dynamic, densely connected, and context-sensitive web of associations. For NLP applications, the combination of both resources would likely yield performance superior to either one alone, with our graph providing the nuanced, real-world context that formal ontologies can sometimes miss.

Therefore, the “moderate” alignment is a positive indicator that our approach successfully captures a rich and different facet of lexical semantics—one that is more fluid, associative, and reflective of modern language use.

4.6. Comparison with Other Lexicon Building Approaches

In this section, we outline the differences between our methodology and two other mature approaches to lexicon building, LexicoMatic and LexAN, highlighting the distinctive features of our system.

LexicoMatic is a powerful framework designed to create multilingual lexical–semantic resources. Its core methodology is often seed-based; for instance, it may take a word and its definition from a well-resourced language (like English), use machine translation to port this information to a target language, and then identify related terms in that language. This approach is highly effective for its primary goal of multilingual resource creation. Our work differs in both its objective and technical approach. We focus on creating a deep, monolingual network for Japanese, and our methodology does not rely on translation or definition parsing. Instead, we directly query the emergent, latent knowledge of a generative LLM to extract a broad set of six predefined semantic relations. While LexicoMatic excels at multilingual bootstrapping, our method is designed for deep, multi-relational extraction within a single language at a massive scale.

On the other hand, LexAN (Lexical Association Networks) builds its networks primarily from a corpus-based, statistical perspective. It identifies “lexical functions” by analyzing statistically significant co-occurrences and other associations between words in large text corpora. This represents a classic and powerful methodology for uncovering data-driven relationships. Our approach is fundamentally different, as it is generative rather than statistical. We do not rely on co-occurrence patterns within a specific corpus. This allows us to extract hierarchical (hypernymy) and compositional (meronymy) relationships that are often part of latent world knowledge and may not be explicitly signaled by co-occurrence in text. Our network thus captures a different type of knowledge—the abstract, relational understanding learned by the LLM during its pre-training on a vast dataset.

4.7. Comparison with Recent Literature and State-of-the-Art

Our work enters a vibrant and rapidly evolving field of research focused on leveraging LLMs for automated knowledge base and lexical network construction. Recent surveys confirm that the dominant paradigm for knowledge extraction has shifted towards using pre-trained LLMs, largely replacing complex, multi-stage NLP pipelines [26,27,28]. The core challenges now revolve around effective prompt engineering, enforcing structured outputs (e.g., via JSON schema), and mitigating hallucinations, all of which our methodology explicitly addresses. Our evaluation approach, which benchmarks the generated network against a gold-standard resource such as WordNet, is also a standard and widely accepted practice for assessing the quality of automatically extracted relations.

While our work aligns with these general trends, it is differentiated by several key factors. A significant portion of recent research targets the extraction of knowledge graphs for narrow, specialized domains, such as the biomedical field, where LLMs are used to analyze complex sources like electronic medical records [29]. These efforts are critical for their respective applications, but our study addresses the distinct and arguably more complex challenge of building a large-scale, general-purpose lexical network for an entire language. Furthermore, our framework is explicitly multi-relational, designed to capture the nuanced and varied ways lexemes relate, from hierarchical to compositional and associative links.

Our pipeline was designed from the ground up for massive scalability. By implementing an iterative and resumable workflow, we have constructed a network of more than 700,000 edges whose largest weakly connected component alone contains 167,372 nodes. The sheer scale of this general-language resource is a primary contribution, demonstrating a practical and efficient pathway for developing foundational lexical tools for any language with a sufficiently powerful LLM. A key contribution that sets our work apart is the deep analysis of the network’s emergent topological properties. While many studies focus primarily on the precision and recall of the extraction process itself, we investigate the resulting structure. Our analysis of the “giant component,” the core–periphery topology, and the identification of abstract concepts as semantic hubs provides large-scale empirical evidence consistent with theories of the mental lexicon. Finally, the literature increasingly points toward the necessity of a human-in-the-loop (HiL) for final verification and quality assurance, noting that a hybrid approach often yields the best balance of precision and recall [30]. While our framework is fully automated to maximize scalability, we acknowledge that incorporating a HiL validation module is a promising direction for future work to further refine the network’s accuracy. Our current approach represents a significant advance in the rapid and scalable generation of a comprehensive first draft of a language’s lexical network.

4.8. Graph Visualization and Exploration

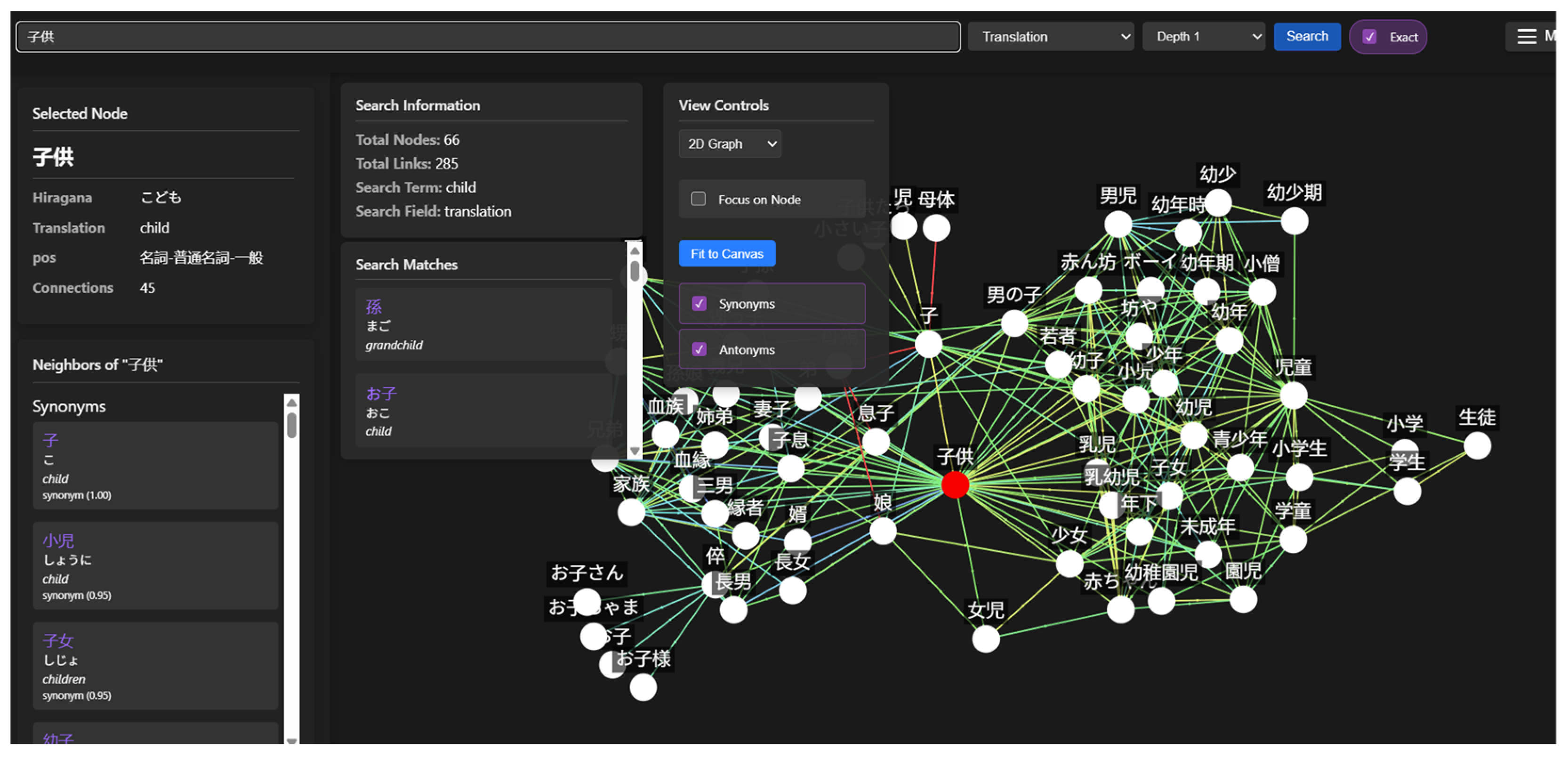

For this project, we developed an interactive 2D visualization tool, represented in Figure 4, to explore and analyze the Japanese lexical network, with a particular focus on synonym relationships. We created an interface that allows users to interact with the synonym network through several key features, including search functionality, JLPT level filtering, relationship type selection, and graph depth control. These tools enable users to filter and navigate the network based on their specific requirements, providing an adaptable view of the network’s structure.

In addition to the 2D visualization, a 3D representation was implemented using Vasco Asturiano’s 3D graph visualization library [31] (see Figure 5). This enhanced spatial representation offers an immersive way to explore synonym connections within the network. The 3D layout makes the network’s structure easier to understand by placing lexemes in three-dimensional space.

While these tools have laid the groundwork for visualizing synonym networks effectively, we are still refining the representation to best accommodate the multi-layered nature of lexical relationships. The graph representation is available as part of the web app at https://jln.syntagent.com/ (accessed on 15 October 2025). Future efforts will focus on optimizing the display of various relation types, ensuring that hierarchical and associative connections are accurately and intuitively represented within the same network. This ongoing work aims to provide a comprehensive, user-friendly interface that supports deeper insights into multi-dimensional lexical data.

4.9. A Case Study: Enhancing Japanese Language Education

A tangible application of this multi-relational network lies in the domain of computer-assisted language learning (CALL) for Japanese. Traditional vocabulary acquisition often relies on rote memorization from flat lists, which fails to build the deep, interconnected understanding characteristic of native-like fluency. Our network provides the semantic scaffolding necessary to create a more sophisticated pedagogical tool that moves beyond simple definitions to foster a conceptual understanding of the lexicon.

Such a system could dynamically generate learning modules based on the graph’s structure. For instance, upon learning the word 乗り物 norimono ‘vehicle’, a learner would not only see its definition but also be presented with an interactive tree of its most common hyponyms, such as 車 kuruma ‘car’ and 自転車 jitensha ‘bicycle’. This hierarchical exploration helps learners organize vocabulary into meaningful categories. Furthermore, the system could leverage meronymic relations to create contextually rich exercises, prompting a student to identify that an エンジン enjin ‘engine’ is a part of a car. For advanced learners, the network’s quantitative synonymity_strength attribute could be used to explain the nuanced differences between close synonyms, clarifying which terms are near-perfect substitutes versus those appropriate only in specific contexts. By grounding vocabulary acquisition in a rich web of semantic relationships, this approach would facilitate a more robust and intuitive learning process, mirroring how conceptual knowledge is naturally organized.
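Below is a sketch of how such a module might query the network. It assumes that edges are (source, target, relation) triples in which the relation names the target's role relative to the source, so `("車", "エンジン", "MERONYM")` reads "エンジン is a meronym of 車". The `learning_module` helper and the card layout are hypothetical.

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str, str]  # (source, target, relation); relation names the target's role

# Hypothetical mapping from relation labels to sections of a vocabulary card.
CARD_SECTIONS: Dict[str, str] = {"HYPONYM": "hyponyms", "MERONYM": "parts"}


def learning_module(word: str, edges: List[Edge]) -> Dict[str, List[str]]:
    """Collect a word's narrower terms and parts for a (hypothetical) CALL vocabulary card."""
    card: Dict[str, List[str]] = {section: [] for section in CARD_SECTIONS.values()}
    for source, target, relation in edges:
        if source == word and relation in CARD_SECTIONS:
            card[CARD_SECTIONS[relation]].append(target)
    return card
```

A quiz generator could then turn each entry under "parts" into a prompt such as "Which of these is part of a 車?".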