Abstract

Generative artificial intelligence (GenAI) is reshaping science, technology, engineering, and mathematics (STEM) education by offering new strategies to address persistent challenges in equity, access, and instructional capacity, particularly within Hispanic-Serving Institutions (HSIs). This review documents a faculty-led, interdisciplinary initiative at the University of La Verne (ULV), an HSI in Southern California, to explore GenAI’s integration across biology, chemistry, mathematics, and physics. Adopting an exploratory qualitative design, it synthesizes faculty-authored vignettes with peer-reviewed literature to examine how GenAI is being piloted as a scaffold for inclusive pedagogy. Across disciplines, faculty reported benefits such as simplifying complex content, enhancing multilingual comprehension, and expanding access to early-stage research and technical writing. At the same time, limitations (including factual inaccuracies, algorithmic bias, and student over-reliance) underscore the importance of embedding critical AI literacy and ethical reflection into instruction. The findings highlight equity-driven strategies that position GenAI as a complement to, not a substitute for, disciplinary expertise and culturally responsive pedagogy. By documenting diverse, practice-based applications, this review provides a flexible framework for integrating GenAI ethically and inclusively into undergraduate STEM instruction. The insights extend beyond HSIs, offering actionable pathways for other minority-serving and resource-constrained institutions.

1. Introduction

1.1. Sociotechnical Context of GenAI in STEM Education

Generative artificial intelligence (GenAI) aligns with the transformative goals of Education 4.0, which advocates for creativity, problem-solving, adaptability, and lifelong learning as foundational skills for navigating the Fourth Industrial Revolution [1,2,3]. These technologies—ranging from large language models such as ChatGPT (OpenAI GPT-4o) to multimodal tools such as DALL·E—hold the potential to reshape how knowledge is accessed, processed, and applied across disciplines [4]. However, realizing this potential depends on an institution’s capacity to integrate GenAI in ways that are inclusive, pedagogically sound, and contextually relevant [2]. At institutions such as the University of La Verne (ULV)—a federally designated Hispanic-Serving Institution (HSI) enrolling approximately 7000 students, the majority of whom are first-generation, multilingual, or from historically underrepresented backgrounds—GenAI adoption is shaped by persistent structural inequities [5]. These include limited campus-wide digital infrastructure, underfunded academic and technology support services, and unequal access to reliable internet and personal computing devices among low-income and commuter student populations [6,7,8,9]. These challenges are not unique to the ULV but are emblematic of the broader resource disparities that affect Minority-Serving Institutions (MSIs) across the United States—especially as they attempt to scale technological innovation without the fiscal flexibility of better-resourced institutions [6,10,11].

As such, the integration of GenAI must not be viewed as a neutral enhancement to teaching and learning, but rather as a sociotechnical intervention that both reflects and reshapes existing institutional conditions [12]. Its adoption at HSIs demands intentional alignment with justice-centered goals, including equity in digital access, transparency in use, and student empowerment in design and application [13]. This study presents a faculty-led, interdisciplinary exploration of early GenAI implementation across STEM disciplines. While ChatGPT features prominently in our pilot efforts, the objective is to examine how GenAI technologies can be adapted to promote equity, enhance student learning, and build discipline-specific competencies in biology, chemistry, mathematics, and physics. We document a series of reflective, practice-informed interventions that surface both the pedagogical opportunities and institutional constraints associated with GenAI integration. These cases highlight iterative experimentation, cross-disciplinary collaboration, and critical engagement—providing practical insights for institutions navigating the ethical, structural, and instructional dimensions of AI adoption.

The demographic landscape of U.S. higher education continues to shift, with Hispanic students comprising one of the fastest-growing student populations [14]. HSIs now enroll nearly 20% of all U.S. college students and two-thirds of all Hispanic undergraduates [15,16], positioning them as critical spaces for shaping the ethical and equitable implementation of emerging technologies, including GenAI. As key access points for historically excluded communities, HSIs bear a unique responsibility—and opportunity—to lead in developing inclusive and context-aware models of GenAI use. However, these institutions continue to face systemic barriers to digital transformation, including limited availability of Spanish-language AI tools, heightened privacy risks for undocumented students, and underresourced technology infrastructure [2,17,18,19]. These challenges underscore the need for institution-specific approaches to GenAI adoption—ones that account for both demographic strengths and material constraints [19].

Although personalized GenAI tools have shown promise in supporting multilingual comprehension, automating formative feedback, and fostering technical writing and data analysis skills [20,21], their educational value must be assessed critically and contextually through an equity lens. Without intentional attention to cultural relevance, linguistic diversity, and disparities in digital access, such tools risk replicating the very structural exclusions they are often presumed to alleviate [22]. Emerging research has highlighted how algorithmic bias, English-language dominance, and opaque model design can marginalize underrepresented students, particularly in STEM settings [23,24]. Our project adopts a strengths-based approach—one that centers the lived experiences, multilingual resources, and adaptive capacities of students at HSIs—to explore how GenAI can be adapted, not merely adopted, to foster inclusive excellence and educational innovation. This framing deliberately resists deficit-based narratives that characterize students as underprepared and instead highlights student agency, faculty experimentation, and institution-specific strategies responsive to both structural constraints and local opportunities [25,26]. GenAI becomes not just a technological solution, but a site for pedagogical co-creation, where learners and educators engage in iterative dialogue with emerging tools.

This manuscript documents the efforts of faculty across four STEM departments—biology, chemistry, mathematics, and physics—each of whom engaged GenAI to address a specific pedagogical challenge. Their work reveals how generative AI tools can be mobilized to support critical learning outcomes, while also raising ethical, disciplinary, and access-related considerations that must inform implementation. Our work is organized around five interrelated thematic areas:

- Equity and access—How can GenAI help level the playing field for multilingual, first-generation, and low-income learners?

- Culturally relevant pedagogy—In what ways can AI applications reflect students’ lived experiences and local realities?

- Interdisciplinary and community connections—How can GenAI facilitate research that is grounded in local communities and cross-disciplinary collaboration?

- Societal and ethical considerations—What ethical frameworks are needed to guide responsible GenAI use in higher education?

- AI literacy for underrepresented communities—How can students be equipped with the digital competencies necessary to thrive in AI-integrated STEM fields?

Rather than offering a singular or prescriptive model, this manuscript provides a multi-voiced roadmap for navigating GenAI integration in diverse instructional contexts. Through reflective practice, interdisciplinary collaboration, and community-informed pedagogy, we aim to contribute to the development of more equitable, adaptable, and ethically grounded higher education ecosystems—particularly within institutions serving students historically excluded from both STEM fields and technological innovation.

This review contributes significantly to scholarship at the intersection of generative AI and equitable pedagogy within Hispanic-Serving Institutions. Theoretically, it advances understanding of how GenAI functions as a knowledge management technology—a dynamic tool for capturing, codifying, and sharing educational expertise within institutions [27,28]. Societally, it illuminates ways HSIs can center equity by deploying GenAI to support historically underserved students, rendering justice, inclusion, and culturally responsive teaching core to AI deployment [29]. Practically, this work assembles actionable institutional strategies grounded in faculty practice and peer-reviewed literature, including innovations in prompt literacy, inclusive content creation, and scaffolded autonomy—strategies with clear applicability for institutions pursuing inclusive, scalable AI integration [30].

Building on these thematic areas, the manuscript is organized into a series of discipline-specific cases (Sections 2 through 9). Each case highlights a sociotechnical challenge in teaching and learning and explores how faculty piloted GenAI to address it. Section 2 examines field biology as a living laboratory that democratizes scientific communication; Section 3 considers GenAI as a scaffold for inclusive learning in organic chemistry; Section 4 explores its role as a catalyst for ethical and inclusive engagement in cell biology and immunology; Section 5 analyzes how GenAI can broaden access to instrumental chemistry; Section 6 highlights AI-enhanced strategies in general biology; Section 7 situates GenAI in mathematics education as a vehicle for equity and innovation; Section 8 addresses how GenAI supports bridging scientific literacy gaps in biology education; and Section 9 presents its use as a coding mentor in physics. Together, these cases demonstrate how GenAI can be mobilized across disciplines to address equity, access, and literacy, while raising critical questions about ethics, authorship, and institutional strategy.

1.2. State of the Field: Inclusive AI Pedagogies

The rapid emergence of GenAI has generated an expanding body of scholarship across education, knowledge management, and organizational learning. Early studies emphasize GenAI’s capacity to support learning through scaffolding, personalized feedback, and efficiency gains in academic tasks [30,31]. Within the field of knowledge management, GenAI has been framed as a tool for capturing and codifying institutional expertise, enabling organizations to transform individual practice into collective, shareable assets [32,33,34]. At the same time, concerns about algorithmic bias, English-language dominance, and unequal digital access highlight the sociotechnical nature of AI adoption—not merely as a tool, but as an intervention shaped through inequitable institutional contexts [12,35], particularly in marginalized and multilingual learning environments where structural inequities are reinforced through algorithmic design [36]. This duality—potential for democratization alongside risks of exclusion—has become a central tension in current scholarship [37].

For HSIs and other MSIs, this tension is heightened by structural disparities in infrastructure, funding, and student resources [38,39], as well as limited institutional “servingness” that affects support structures and educational sustainability [40]. Recent scholarship highlights that although HSIs have substantial potential to lead equity-focused innovations, these efforts are frequently constrained by gaps in digital readiness, infrastructure, and policy support, most visibly inadequate access to devices and unreliable internet [41]. Yet, the literature also points to the critical role of faculty-driven experimentation and interdisciplinary collaboration in advancing culturally responsive and inclusive approaches to technology integration [42]. Taken together, these studies reveal both opportunities and limitations in deploying GenAI to advance equitable outcomes, underscoring the need for research that is practice-informed and contextually grounded [43].

1.3. Approach and Methodological Framing

This manuscript adopts an exploratory qualitative review design. Rather than presenting new empirical data, it synthesizes peer-reviewed literature and practice-based faculty narratives from four STEM disciplines—biology, chemistry, mathematics, and physics—within a Hispanic-Serving Institution. Faculty-authored vignettes provide reflective accounts of pedagogical strategies, challenges, and institutional contexts. These are analyzed alongside existing scholarship to surface common themes and actionable insights. In doing so, this review positions faculty practice as both a site of inquiry and a form of organizational knowledge production. Ethical guidelines were followed throughout, with no human subject research conducted; all insights are drawn from faculty experiences and published literature. This approach aligns with prior knowledge management scholarship that emphasizes iterative exploration and institutional learning as precursors to systematic organizational change [44,45].

2. Field Biology as a Living Laboratory: Democratizing Scientific Communication with GenAI

2.1. Context and Course Structure: Field Biology at an HSI

At the ULV, field biology courses are designed as Course-Based Undergraduate Research Experiences (CUREs) that blend traditional laboratory methods, outdoor fieldwork, and introductory computational analysis. These courses introduce students to the full arc of scientific investigation—hypothesis development, data collection, analysis, and communication—within the constraints of a single term [46,47]. Many students enrolled in these CUREs are English learners and first-generation college students, who often encounter challenges when engaging with scientific writing, research protocols, and peer-reviewed literature. To address these barriers, GenAI tools such as ChatGPT (OpenAI GPT-4o) have been introduced as cognitive scaffolds that support students in navigating scientific terminology, revising technical writing, and interpreting research methods. Rather than diminishing academic rigor, these tools have helped students organize their thoughts more clearly, refine scientific arguments, and engage more confidently in both oral and written components of the course. Faculty use of GenAI also extends to assignment design and formative feedback, allowing for more iterative, inclusive modes of student engagement in research practice.

2.2. Literacy Scaffolds: Enhancing Comprehension and Writing Skills

A central goal of the ULV’s field biology courses is to develop students’ fluency with primary scientific literature, an essential yet often intimidating genre for early-stage undergraduates, particularly multilingual learners. One of the first major assignments guides students through the structural elements of peer-reviewed articles, including how to identify research questions, methodologies, and conclusions. This approach emphasizes not just content comprehension but literacy as a scientific practice, helping students move from passive reading to analytical engagement with disciplinary discourse. In one example, a student analyzing a study on kangaroo rat adaptations to arid climates initially submitted a summary that lacked precision and failed to clearly identify the study’s conclusions. After using ChatGPT as a peer-editing tool, the student was able to revise the paragraph with improved clarity, scientific tone, and coherence. The AI-generated feedback prompted the student to refine their structure, use more discipline-appropriate vocabulary, and connect conclusions to supporting evidence. This revision process exemplifies how GenAI can serve as a real-time literacy scaffold, offering personalized guidance that helps students move beyond surface-level reading to deeper analytical reasoning.

Scientific writing is foundational to the ULV’s CUREs, where students must articulate methods, results, and interpretations from original investigations. These field-based projects often involve local environmental challenges and collaborations with community partners, making scientific communication not only a classroom requirement but also a civic act. The “living laboratory” framework that structures these courses connects theory with real-world application, culminating in student-led presentations to both scientific and public audiences. To support this writing process, assignments are modeled on journal standards such as the Instructions for Authors for BIOS—the quarterly journal of the Biology Honor Society. Students draft each manuscript section using detailed rubrics, often returning to ChatGPT for iterative revision. In one instance, a student investigating flavonoid variation in Camellia rosea petals used AI-generated suggestions to clarify structure, improve lexical precision, and streamline transitions between sections. Importantly, the student maintained full ownership of the scientific interpretation while using GenAI to refine the form and tone of their writing. These iterative cycles mirror a writing-to-learn approach, where revision is not merely correction but a key step in disciplinary identity formation and academic confidence-building.

2.3. Scientific Reasoning and Experimental Design: GenAI as a Foil

In field biology courses, where experimental design is both a conceptual and logistical challenge, GenAI tools are increasingly used not to provide answers but to provoke critique. One early-semester assignment asks students to prompt ChatGPT to generate an experimental procedure based on their own hypothesis and then evaluate the feasibility, coherence, and scientific validity of the AI’s response. This exercise frames GenAI as a foil—a contrasting voice against which students must articulate their reasoning and defend their methodological choices. For instance, one student group investigating the relationship between leaf litter density, canopy openness, and grass density noticed that ChatGPT produced a procedural suggestion and conclusion that contradicted their initial expectations. Instead of dismissing the output, the students analyzed it in light of their field observations and ecological context. As one student observed, “ChatGPT can’t ‘see’ what’s going on at the study site like we can.” This insight opened a broader class discussion about the limits of decontextualized data generation, the role of situated knowledge in ecology, and the importance of aligning methods with environmental variability.

These assignments prompt students to engage in scientific metacognition: articulating the why behind each design decision and examining the logical structure of their research plans. By interrogating GenAI’s blind spots—its inability to account for site-specific conditions, sampling constraints, or ecosystem dynamics—students strengthen their experimental literacy and deepen their understanding of the scientific process. Rather than treating GenAI as a shortcut, students learn to use it as a prompt for argumentation, critical reflection, and methodological refinement—core competencies in both fieldwork and research more broadly.

2.4. Broader Impacts: Equity, Publishing, and Participation in the Scientific Community

Integrating GenAI tools into field biology courses addresses persistent barriers in STEM education—particularly those related to language access, scientific writing, and experimental design. Early implementation suggests that GenAI can serve as an adaptive scaffold, improving students’ command of scientific terminology and supporting the development of academic writing skills, especially for second-language learners [48]. These tools also support diverse cognitive and linguistic needs, increasing participation and persistence among students historically underrepresented in science [49,50]. When embedded into culturally relevant, hands-on research assignments, GenAI tools contribute to deeper student engagement and reinforce STEM identity formation. As Barajas-Salazar et al. [51] note, experiential learning environments—particularly those tied to local environmental and community issues—enhance student self-efficacy and sense of belonging in scientific fields. These outcomes are especially critical in contexts where students are preparing to tackle complex, real-world problems such as environmental sustainability [52].

In practice, AI-assisted writing tools function as responsive feedback systems. Students use them to refine technical vocabulary, clarify ambiguous phrasing, and strengthen the logical flow of their arguments. In this way, GenAI mirrors the role of a mentor or tutor: guiding revision while preserving authorship and intellectual control [53,54]. Rather than outsourcing the writing process, students learn to treat GenAI as a cognitive partner—helpful in translation, organization, and clarity, but dependent on the student’s critical input. Importantly, these supports have already contributed to student-led research projects that culminated in peer-reviewed publications [55,56,57]. Students involved in these projects used GenAI tools not only to revise their writing, but also to decode journal formatting guidelines, clarify expectations, and improve abstract structure. These experiences demonstrate that GenAI can help demystify scientific publishing for emerging scholars, expanding access to authentic research participation and preparing students to contribute meaningfully to professional scientific discourse [58].

2.5. Future Applications: Scaling Equity Through AI and Authentic Research

As GenAI tools become more widely accessible, future integration in field biology should prioritize collaborative, multilingual science communication and authentic research preparation that empowers diverse learners. At HSIs such as the ULV, such integration holds particular promise for expanding access to high-impact practices traditionally limited by time, resource, and mentoring constraints. Faculty are exploring how GenAI can assist students in translating raw field observations into formalized scientific writing—particularly for learners unfamiliar with the conventions of peer-reviewed publication. Early experimentation has shown that GenAI can help students navigate complex publishing tasks, such as structuring abstracts, decoding journal submission guidelines, and refining figures and captions with greater clarity. These supports are especially relevant for students who may be engaging in manuscript preparation or conference poster design for the first time.

Emerging efforts also examine how GenAI might be embedded into mobile field data collection apps or cloud-based platforms used for ecological monitoring. In these applications, GenAI could provide just-in-time feedback on data descriptions, procedural language, and early-stage interpretation, offering immediate scaffolding to students in the field. Such tools may help reduce cognitive load during fast-paced data collection while reinforcing precision and reflection in field journaling and metadata documentation. In parallel, faculty are investigating how students interpret the role of GenAI in authorship and attribution. Some students describe AI as a helpful language editor, while some faculty and staff raise concerns about over-reliance or a perceived loss of voice in students’ scientific writing [54,58,59]. These divergent perspectives underscore the importance of developing best practices that promote transparency, equitable authorship, and scientific integrity in undergraduate research [60].

GenAI presents an opportunity to democratize access to authentic undergraduate research by scaffolding critical components of the research process—particularly for those who have been historically excluded from scientific publication or laboratory-intensive training. Realizing this potential, however, will require thoughtful design, institutional support, and clear guidelines that uphold ethical and disciplinary norms while promoting inclusion and innovation in field-based science education.

3. Generative AI as a Scaffold for Inclusive Learning in Organic Chemistry

Organic chemistry is frequently described as a “gatekeeper” course due to its conceptual density, abstract reasoning, and specialized terminology—all of which contribute to historically high attrition rates in STEM degree pathways [61,62]. These challenges are particularly acute at institutions such as the ULV, where a majority of students are first-generation college-goers, multilingual learners, or historically underrepresented in science. In this context, the organic chemistry laboratory becomes not only a pedagogical hurdle but also a site of opportunity to reimagine inclusion and retention through instructional innovation [61]. The faculty at the ULV have begun piloting GenAI tools—particularly large language models such as ChatGPT—as instructional scaffolds to reduce cognitive load, enhance comprehension of procedural language, and support student success in laboratory-based learning. These interventions aim to address multiple access points: clarifying dense instructional protocols, demystifying mechanistic reasoning, and offering personalized feedback on writing and data analysis. When used critically and transparently, GenAI can supplement faculty guidance while empowering students to develop the language, logic, and literacy of organic chemistry. As such, the integration of GenAI in this context is framed not merely as a convenience or technological enhancement, but as a means of pedagogical equity—intended to mitigate structural and linguistic barriers that disproportionately impact students from underserved backgrounds [21,63]. Ongoing faculty experimentation is guided by the principle that inclusive teaching requires adapting tools to student needs—not the other way around.

3.1. Demystifying Laboratory Language and Procedures

Many core organic chemistry labs—such as caffeine extraction from black tea—involve complex multi-step protocols that assume fluency in technical terminology. This presents a linguistic barrier for students encountering scientific English for the first time. In one example, students were asked to follow this step:

- “Once at room temperature, transfer the aqueous extract solution into your separatory funnel. Make sure the stopcock is in the closed position. Wash the solution with 10 mL of methylene chloride three times…”

Students found this language daunting. When the instructions were rephrased using ChatGPT, they became more accessible:

- “Transfer the cooled aqueous extract into a separatory funnel. Ensure the stopcock is closed. Add 10 mL of methylene chloride, shake, vent, and drain the bottom layer into a flask. Repeat this process three times.”

Simplifying the syntax improved comprehension and execution, particularly for multilingual students. This use of GenAI as a real-time translator of scientific language reflects its potential to act as a scaffold—not a crutch—for procedural understanding [48,64].
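One rough way to check whether a rephrased protocol is syntactically simpler is to compare sentence lengths before and after. The Python sketch below is an illustration only: words per sentence is a crude proxy for reading difficulty, the `sentence_stats` helper is hypothetical, and nothing here reflects a measurement the faculty performed. The two passages are the ones quoted above, with the original left truncated as it appears in the source.

```python
import re


def sentence_stats(text):
    """Return sentence count plus mean and max words per sentence.

    Sentences are split on whitespace that follows '.', '!', '?', or an
    ellipsis character. This is a rough heuristic, not a linguistic parser.
    """
    parts = re.split(r"(?<=[.!?…])\s+", text.strip())
    sentences = [p for p in parts if p]
    word_counts = [len(s.split()) for s in sentences]
    if not word_counts:
        return {"sentences": 0, "mean_words": 0.0, "max_words": 0}
    return {
        "sentences": len(sentences),
        "mean_words": sum(word_counts) / len(word_counts),
        "max_words": max(word_counts),
    }


# The two versions quoted in the text; the first is truncated in the source.
original = (
    "Once at room temperature, transfer the aqueous extract solution into "
    "your separatory funnel. Make sure the stopcock is in the closed "
    "position. Wash the solution with 10 mL of methylene chloride three times…"
)
rephrased = (
    "Transfer the cooled aqueous extract into a separatory funnel. Ensure "
    "the stopcock is closed. Add 10 mL of methylene chloride, shake, vent, "
    "and drain the bottom layer into a flask. Repeat this process three times."
)

if __name__ == "__main__":
    for label, text in (("original", original), ("rephrased", rephrased)):
        print(label, sentence_stats(text))
```

A metric like this can flag protocols worth rewriting, but it says nothing about vocabulary difficulty or chemical accuracy, so any rephrased procedure would still need instructor review.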

3.2. Cultivating Critical Visual Literacy with AI

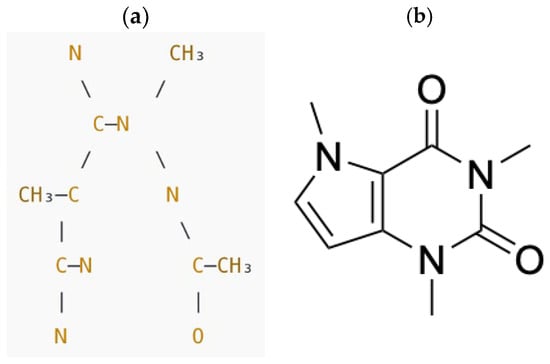

While GenAI supports linguistic comprehension, its visual output remains problematic. When asked to generate a molecular structure of caffeine, ChatGPT produced an inaccurate skeletal diagram (Figure 1a), omitting essential bond angles and atomic connectivity. In contrast, the correct version (Figure 1b) illustrates precise molecular geometry.

Figure 1. (a) Incorrect chemical structure of caffeine generated by ChatGPT, displaying inaccuracies in bond placement and atomic connectivity [OPENAI/ChatGPT]. (b) Correct skeletal structure of caffeine, illustrating proper bond alignment and molecular geometry.

These limitations underscore the need for human oversight in AI-assisted instruction. Faculty now integrate critical evaluation tasks into assignments, asking students to verify AI outputs against peer-reviewed models. This helps build visual literacy and skepticism—essential skills in organic chemistry and broader scientific reasoning.

3.3. Toward a Culturally Responsive and Skeptical Pedagogy

At minority-serving institutions, where structural inequities intersect with pedagogical challenges, AI must be leveraged intentionally. Rewriting dense procedures or helping students navigate unfamiliar concepts must be accompanied by practices that encourage evaluation, revision, and scientific inquiry. The ULV’s chemistry faculty emphasize that GenAI is most effective when used to cultivate—not shortcut—students’ engagement with complexity.

To this end, faculty model verification habits, frame AI as a collaborator rather than an authority, and use AI-generated feedback as a springboard for metacognition. Students are encouraged to refine AI outputs, reflect on errors, and justify revisions—skills that extend beyond the chemistry lab.

3.4. Next Steps: AI-Enhanced Chemistry Education for Equity

Building on the challenges and opportunities described above, the ULV faculty are exploring next-generation applications of GenAI that could further strengthen equity in chemistry education. One promising direction is the development of accurate visualization tools, as future AI models may support molecular rendering and enable dynamic interaction with structures and reaction mechanisms. Such tools could be particularly valuable for multilingual or returning STEM students who benefit from visual scaffolds.

Another pathway involves layered, adaptive explanations, where AI could provide tiered accounts of complex phenomena—such as reaction kinetics or resonance—allowing students to build knowledge progressively at their own pace. Similarly, simulation-based practice using virtual instruments and AI-assisted troubleshooting could reinforce skill-building for students preparing for lab practicals, especially those with limited access due to commuting or caregiving responsibilities.

Finally, metacognitive AI assignments that ask students to critique AI-generated code, quizzes, or analysis may serve as platforms for building both scientific and digital literacy. Future work will examine how AI feedback influences student reasoning in data interpretation and procedural writing, particularly in complex assignments such as spectroscopy or multi-step synthesis. Together, these innovations underscore a larger goal: making chemistry education more inclusive, responsive, and equitable for the next generation of scientists [65].

4. GenAI as a Catalyst for Inclusive, Ethical Learning in Cell Biology and Immunology

In cell biology and immunology at the ULV, GenAI is being explored not simply as a content tool, but as a lever for equity, student agency, and ethical scientific engagement. These courses serve a diverse student population, many of whom face structural barriers to success in STEM. GenAI was piloted to foster inclusive pedagogical practices, amplify underrepresented voices, and cultivate responsible digital literacy.

4.1. Centering Student Voice Through AI-Supported Feedback Loops

Traditional course evaluations often arrive too late to meaningfully impact students’ learning experiences. To address this, instructors implemented mid-semester anonymous feedback via Google Forms and used ChatGPT to synthesize open-ended responses. This process revealed key patterns:

- Start: practice worksheets, guided simulations, study guides;

- Stop: fast lecture pacing, overuse of group work;

- Continue: visual aids, hands-on labs, supportive classroom climate.

Summarizing responses into visualizations and sharing them in class created transparency and invited dialogue. This intervention empowered students—especially those hesitant to speak out—to influence course design in real time. By integrating AI into the feedback process, faculty scaled reflective pedagogy in an equity-minded way.
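As a minimal sketch of the summarizing step, the snippet below tallies how often each theme appears within the Start, Stop, and Continue categories so that the counts can be charted and shared in class. It assumes that each response has already been assigned a category and a theme (for example, by ChatGPT with instructor review); the function name `tally_themes` and the sample data are hypothetical and are not taken from the ULV pilot.

```python
from collections import Counter, defaultdict


def tally_themes(coded_responses):
    """Count themes within each feedback category.

    coded_responses: iterable of (category, theme) pairs, e.g.
    ("Start", "practice worksheets"). Labels are normalized to
    lowercase so that minor capitalization differences do not
    split a theme into separate counts.
    """
    counts = defaultdict(Counter)
    for category, theme in coded_responses:
        counts[category.strip().lower()][theme.strip().lower()] += 1
    # Return themes sorted from most to least frequent per category.
    return {cat: ctr.most_common() for cat, ctr in counts.items()}


if __name__ == "__main__":
    # Hypothetical coded responses, for illustration only.
    sample = [
        ("Start", "practice worksheets"),
        ("Start", "Practice worksheets"),
        ("Start", "study guides"),
        ("Stop", "fast lecture pacing"),
        ("Continue", "visual aids"),
    ]
    for category, themes in tally_themes(sample).items():
        print(category, themes)
```

Keeping the counting step separate from the AI-assisted coding step lets instructors verify the theme labels before any summary reaches students.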

4.2. Fostering Peer Wisdom and Intergenerational Guidance

To cultivate a community of practice and strengthen culturally responsive learning, students were asked to write letters of advice to future cohorts. ChatGPT helped identify recurring themes, which were then shared with the class. Students emphasized the following:

- Time management and consistency;

- Active engagement with labs and lectures;

- Use of visual aids and peer discussion;

- Office hours and help-seeking;

- Maintaining a growth mindset.

This activity validated diverse ways of learning and created a legacy of peer guidance. It also reframed academic success as a communal, not individual, pursuit—particularly important in serving first-generation and underrepresented learners.

4.3. Cultivating AI Literacy and Ethical Judgment

Recognizing AI’s growing role in science and education, students were assigned a structured “AI Error Correction” task. They received a ChatGPT-generated biology explanation embedded with subtle errors and were asked to identify and revise inaccuracies using peer-reviewed sources.

- Example: students analyzed an AI-generated summary of T-cell activation and flagged incorrect signaling pathways or misattributed molecular roles.

This assignment positioned students as evaluators rather than consumers of AI. It developed digital discernment, reinforced scientific rigor, and modeled transparency about tool use. Students learned to balance the utility of GenAI with critical scrutiny—skills essential for ethical research and data-informed decision-making.

4.4. From Classroom to Institutional Impact: Building Inclusive AI Practices

The multi-pronged integration of GenAI in Cell Biology and Immunology responded to broader institutional challenges around equity, student engagement, and responsible technology use.

Key outcomes include the following:

- Equity and voice: mid-semester feedback elevated marginalized perspectives;

- Culturally responsive pedagogy: peer reflections fostered shared learning values;

- Ethical use of AI: students gained tools for transparent and critical AI engagement;

- AI literacy: structured, critique-driven tasks built digital fluency and scientific judgment.

Together, these efforts positioned GenAI as an ally in inclusive STEM instruction—one that supports both identity development and disciplinary expertise.

4.5. Future Pathways: Co-Creating Ethical AI Use in STEM

Building on these practices, faculty are exploring how students can co-author ethical frameworks for AI use in STEM. Rather than relying on static policies, this participatory model would allow students to shape norms for citation, authorship, and transparency.

Emerging directions include the following:

- AI in experimental design: students using GenAI to ideate hypotheses and vet feasibility against published literature;

- Distinguishing hallucinated vs. evidence-based outputs: promoting epistemic awareness and research ethics;

- AI across departments: collaboratively developing AI literacy modules on tool evaluation, data verification, and responsible communication.

In parallel, faculty are exploring student integration into AI-supported research, including co-authoring, data annotation, and science communication roles. These pathways would expand access to undergraduate research while preparing students for ethical leadership in a rapidly evolving digital landscape [66].

5. Broadening Access to Instrumental Chemistry Through Generative AI

Laboratory instrumentation plays a central role in undergraduate chemistry education, enabling students to apply theoretical concepts to real-world analytical problems [67]. Across general, organic, analytical, instrumental, and physical chemistry courses at the ULV, students engage with equipment such as spectrophotometers, chromatographs, and titration systems—often for the first time. These hands-on experiences are foundational to STEM skill development and are frequently cited as critical for retention and professional identity formation in the chemical sciences [68]. However, access to instrumentation remains uneven. First-generation and commuter students, in particular, often face barriers that limit their ability to fully benefit from lab-intensive learning environments—ranging from transportation and scheduling conflicts to caregiving and work obligations [69,70]. While all students pay the same tuition, not all have equal opportunities to participate in extended lab sessions or seek informal mentorship during off-hours. These disparities highlight a structural access gap common at commuter-serving institutions such as the ULV.

GenAI offers a potential bridge. By providing on-demand support outside of formal lab hours, GenAI tools can help students interpret technical manuals, review procedural steps, troubleshoot errors, and build fluency with scientific instrumentation. In this sense, GenAI acts as a scalable supplement to hands-on instruction—reinforcing core concepts and offering just-in-time clarification when students are studying independently or working asynchronously. Early explorations suggest that such tools can help demystify the hidden curriculum of instrumentation, particularly for students without prior exposure to lab-intensive courses [71,72]. As faculty at the ULV begin integrating GenAI into instrumental chemistry instruction, the aim is not to replace experiential learning, but to scaffold it—extending the reach of mentorship and enhancing equity in access to critical STEM competencies. This section examines how AI is being used to supplement instruction in instrumentation-heavy courses and explores its role in reducing systemic barriers to participation and skill acquisition.

5.1. GenAI as a Scalable Instructional Supplement

Virtual laboratories have long supported student engagement and conceptual understanding in STEM fields [73]. Building on these foundations, generative AI tools now offer promising new pathways to expand access to instrumentation literacy.

At the ULV, chemistry faculty are piloting tools such as ChatGPT to help students:

- Interpret technical manuals that are outdated, missing, or overly complex;

- Reinforce core principles across instruments that vary by manufacturer;

- Troubleshoot common errors using general operational logic;

- Clarify terminology and schematics through conversational learning.

These applications are particularly useful when students encounter instrument documentation that assumes prior fluency in procedural language or equipment-specific nuances. In such cases, AI can break down dense content into simpler walkthroughs or generate scaffolding materials—provided students are also taught to assess these outputs for accuracy.

For example, when a student used ChatGPT (free version, December 2024) to generate a labeled diagram of a high-performance liquid chromatography (HPLC) system, the result looked polished but was structurally incorrect (Figure 2). The AI misrepresented key components, underscoring the need for faculty moderation and student skepticism when using GenAI in instrument-heavy disciplines.

Figure 2. ChatGPT’s inaccurate portrayal of a high-performance liquid chromatography (HPLC) instrument. The diagram fails to correctly label and represent essential components, underscoring current limitations in AI-generated educational content.

These limitations do not negate the tool’s value; rather, they emphasize that GenAI should serve as a supplement, not a substitute, for instructor expertise and hands-on training. When embedded within reflective and evidence-based pedagogy, AI tools can offer just-in-time support, simulate troubleshooting pathways, and strengthen students’ conceptual understanding of complex instrumentation workflows [48,71].

5.2. Future Opportunities in Instrumentation Education

Looking ahead, chemistry faculty at the ULV are piloting a range of innovations to deepen GenAI’s role in supporting inclusive, skill-based instrumentation training—particularly for students who face structural barriers to extended lab access or prior exposure to research environments.

- AI-supported digital twins: Virtual replicas of laboratory instruments, known as digital twins, can provide students with interactive simulations of common techniques before engaging with real hardware. By combining these simulations with AI-generated walkthroughs or augmented reality (AR) overlays, students could asynchronously explore instrument components, troubleshoot virtual malfunctions, and complete pre-lab modules that enhance readiness for in-person assessments. This approach builds familiarity while reducing anxiety around expensive or delicate instrumentation [73].

- Troubleshooting as inquiry: Rather than treating technical malfunctions as interruptions, students could use GenAI to propose general troubleshooting steps, test these solutions during lab, and reflect on observed discrepancies. This approach frames troubleshooting as a mode of inquiry, helping students develop diagnostic reasoning, scientific skepticism, and iterative thinking—key competencies for careers in analytical chemistry, environmental monitoring, and biomedical research [74].

- Adaptive practice via AI quiz banks: AI-generated, scenario-based quiz banks can provide customized reinforcement of procedural knowledge and lab safety protocols. These tools allow students to identify and address knowledge gaps outside of formal lab hours, enabling more efficient use of time during hands-on sessions. When used equitably, adaptive assessments can reduce cognitive overload for first-generation and commuter students, creating a more level learning environment [75].

- Research on AI impact: Empirical studies are needed to evaluate how AI-integrated lab training impacts students’ confidence, technical fluency, and persistence in STEM. Metrics such as skill transfer, reduced error rates, and increased comfort with instrumentation could help validate these interventions. Importantly, research should disaggregate outcomes by demographic groups to ensure that GenAI advances equity rather than reinforcing disparities [76].

By continuing to explore how GenAI can help close access gaps in chemistry education, the ULV faculty are working toward scalable, student-centered strategies that equip diverse learners to thrive in instrument-intensive fields. These efforts align with broader goals for inclusive STEM education and offer actionable pathways for improving workforce readiness across research, industry, and healthcare—particularly as higher education confronts rising expectations to adopt AI in ways that enhance student success and institutional equity [77].

6. AI-Enhanced Teaching in General Biology

General biology serves as a foundational gateway into STEM for students at HSIs, such as the ULV. This course spans diverse and conceptually demanding content areas—including molecular biology, ecology, and evolution—that often appear fragmented or abstract to first-year students, especially those without prior exposure to college-level science. For multilingual learners, first-generation college students, and others navigating unequal access to textbooks, tutoring, or study groups, this course can present significant barriers to persistence in STEM fields [76,78]. In response, the ULV faculty are piloting GenAI tools to personalize instruction, scaffold complex ideas, and expand equitable access to formative feedback. These interventions aim to promote self-directed learning, reduce reliance on expensive external platforms, and create alternate pathways for conceptual engagement. ChatGPT and similar tools have been used to generate tailored review materials, simulate concept quizzes, and offer multilingual explanations—particularly beneficial for students developing academic English fluency [20,21].

At the same time, the instructional team remains attentive to the ethical, cognitive, and pedagogical implications of GenAI integration. Students are introduced to critical AI literacy skills early in the course, including how to evaluate generated content, identify factual inaccuracies, and reflect on their own use practices. This dual emphasis—on access and critical engagement—positions GenAI not as a substitute for faculty interaction, but as a scalable support structure that complements culturally responsive instruction and evidence-based learning design [54,71].

6.1. Scaffolding Learning with Generative AI

To enhance accessibility, the ULV faculty piloted the use of ChatGPT to generate instructional materials aligned with textbook chapters, including the following:

- Practice quizzes;

- Guided activities;

- Conceptual review questions.

These AI-generated resources reduced faculty preparation time while offering students varied ways to engage with content. Many students began using ChatGPT independently to:

- Create study guides;

- Generate self-assessment questions;

- Replace expensive flashcard apps or textbook companion tools.

These strategies allowed students to pursue alternative, cost-free learning pathways, fostering greater autonomy and mitigating financial barriers—key priorities at HSIs. However, faculty also noted that AI-supported learning required deliberate oversight. While ChatGPT accelerated content creation and offered accessible language scaffolds, it struggled in areas such as scientific image generation and rubric-based grading—reminding instructors of the need to use GenAI tools judiciously and supplement them with traditional mentorship.

6.2. Improving Metacognition Through AI-Supported Feedback

To address gaps in student self-awareness around learning progress, faculty introduced ungraded “blitz quizzes” at the start of each lecture. These five-minute assessments helped students identify conceptual weaknesses and provided real-time feedback without the pressure of grades.

- ChatGPT was used to generate up to 60 customized quiz questions per week.

- Prompts were refined by instructors to ensure clarity and alignment with textbook language.

- Faculty also ran draft assignments through ChatGPT iteratively, which simulated common student misunderstandings and allowed them to preempt confusion.

This integration enhanced student engagement and helped instructors tailor lessons based on performance trends. Yet, alongside these benefits, faculty encountered new ethical challenges: several students began submitting AI-generated work without disclosure, raising concerns about academic integrity and underscoring the need for formal AI literacy instruction.

6.3. Recognizing the Boundaries of GenAI in Biology Education

Despite its promise, ChatGPT (GPT-4o and o1) revealed several critical limitations when applied to introductory biology:

- Inaccurate visuals: Repeated attempts to generate scientifically accurate diagrams—e.g., insect morphology or microbial diversity graphs—yielded oversimplified or misleading images. This remains a barrier in visually intensive disciplines such as biology.

- Grading inconsistency: When evaluated as a grading assistant for student reflections, ChatGPT matched instructor assessments only about 30% of the time. It frequently overvalued weaker responses and penalized stronger ones, reinforcing the importance of human evaluation for conceptual depth.

- Confident misinformation: One of the more concerning patterns was the AI’s tendency to present incorrect information with unwarranted confidence. Students unfamiliar with the material often lacked the background to question these inaccuracies. Though performance improved with highly specific prompts, novice users may not know how to craft such queries—making AI literacy a central concern.

Taken together, these limitations suggest that while GenAI can support learning, it cannot replace expert instruction, scientific reasoning, or the developmental benefits of hands-on and inquiry-based education.
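The grading comparison above can be made concrete with a small calculation. The source does not state how a "match" between ChatGPT and the instructor was defined, so the sketch below assumes exact agreement on a categorical rubric score. It also reports Cohen's kappa, a standard chance-corrected agreement statistic that is added here for illustration and is not described in the original pilot. The scores are hypothetical.

```python
from collections import Counter


def percent_agreement(instructor, ai):
    """Fraction of items on which both raters gave the same score."""
    if len(instructor) != len(ai) or not instructor:
        raise ValueError("score lists must be non-empty and equal in length")
    matches = sum(a == b for a, b in zip(instructor, ai))
    return matches / len(instructor)


def cohen_kappa(instructor, ai):
    """Cohen's kappa: agreement corrected for chance agreement."""
    n = len(instructor)
    p_observed = percent_agreement(instructor, ai)
    counts_instr = Counter(instructor)
    counts_ai = Counter(ai)
    # Expected agreement if both raters scored independently
    # with their own observed score frequencies.
    labels = set(counts_instr) | set(counts_ai)
    p_expected = sum(counts_instr[k] * counts_ai[k] for k in labels) / n**2
    if p_expected == 1:
        return 1.0  # both raters used a single identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    # Hypothetical rubric scores (1 to 4) for ten student reflections.
    instructor_scores = [3, 2, 4, 1, 3, 2, 4, 3, 1, 2]
    ai_scores = [3, 3, 4, 2, 4, 2, 3, 4, 2, 3]
    print(f"Agreement: {percent_agreement(instructor_scores, ai_scores):.0%}")
    print(f"Cohen's kappa: {cohen_kappa(instructor_scores, ai_scores):.2f}")
```

Reporting a chance-corrected statistic alongside raw agreement helps distinguish a grader that is systematically lenient or harsh from one that is simply inconsistent.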

6.4. Future Directions: Equity-Driven AI Integration in STEM

Looking ahead, the ULV faculty are exploring new avenues for integrating GenAI into general biology while safeguarding core values of equity, rigor, and student agency. These next steps are guided by ongoing reflection on both the affordances and risks of AI-enhanced pedagogy, particularly within HSI contexts. Emerging priorities include the following:

- Enhanced visual accuracy: Faculty are collaborating with instructional designers and AI developers to improve the scientific validity of AI-generated visuals—especially in content areas such as microbiology, physiology, and systems ecology. Inaccurate visuals can mislead novice learners and contribute to conceptual misunderstandings, making this a critical area for development [79].

- Adaptive feedback systems: GenAI is being piloted to develop formative assessments that adjust in difficulty based on student performance. These AI-powered quizzes aim to personalize feedback and support differentiated instruction—a pedagogical strategy shown to reduce achievement gaps in large-enrollment STEM courses [76,78].

- Student-led AI literacy modules: The ULV is experimenting with a peer-led approach to digital ethics. Student ambassadors are being trained to co-facilitate workshops on ethical AI use, citation practices, bias detection, and academic integrity. This participatory model empowers students as co-creators of the learning culture while reinforcing community norms around transparency and responsible technology use [54].

- Open-access review materials: Faculty are leveraging GenAI to co-create Creative Commons–licensed biology study guides, glossaries, and flashcard decks. These resources are intended to support students who lack access to commercial platforms or tutoring services, while also enabling knowledge-sharing across institutional boundaries—consistent with open education principles [80].

To assess the effectiveness and ethical dimensions of these practices, ongoing research is needed. Future inquiry should explore how GenAI integration affects student outcomes, such as concept mastery, persistence in STEM, and the development of metacognitive skills. Special attention must be given to disaggregated impacts across demographic groups, including first-generation college students, multilingual learners, and students with limited digital access [81].
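One simple way to picture the adaptive feedback idea listed above is a staircase rule: difficulty rises after two consecutive correct answers and falls after any incorrect answer. The sketch below is an illustrative assumption rather than the system being piloted at the ULV; the class name `StaircaseQuiz`, the five difficulty levels, and the two-correct threshold are all hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class StaircaseQuiz:
    """Tracks a difficulty level that adapts to student answers."""
    level: int = 2        # current difficulty (hypothetical 1-5 scale)
    min_level: int = 1
    max_level: int = 5
    streak: int = 0       # consecutive correct answers at this level

    def record(self, correct: bool) -> int:
        """Update the level after one answer and return the new level."""
        if correct:
            self.streak += 1
            if self.streak == 2:
                # Two correct in a row: step up, capped at max_level.
                self.level = min(self.level + 1, self.max_level)
                self.streak = 0
        else:
            # Any miss: reset the streak and step down, floored at min_level.
            self.streak = 0
            self.level = max(self.level - 1, self.min_level)
        return self.level


if __name__ == "__main__":
    quiz = StaircaseQuiz()
    answers = [True, True, False, False, False, True]
    print([quiz.record(a) for a in answers])
```

A rule this simple is easy for students and instructors to inspect, which matters when the goal is transparent, formative feedback rather than high-stakes placement.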

7. Advancing Equity and Innovation in Mathematics Education with Generative AI

At the ULV, mathematics instruction serves as a critical foundation for developing quantitative reasoning, data fluency, and analytical thinking across STEM and non-STEM disciplines. For many students—particularly first-generation, multilingual, and underrepresented learners—gateway math courses can serve as both an academic hurdle and an opportunity for growth. Faculty teaching courses such as The Art of Guestimation have begun leveraging GenAI to foster engagement through estimation problems and context-rich scenarios that emphasize creativity, civic relevance, and interdisciplinary thinking. Rather than treating GenAI as a computational shortcut, instructors use it to support exploratory thinking, provide multilingual scaffolds, and generate culturally responsive prompts that invite students to connect mathematical reasoning to their lived environments. This aligns with a broader equity-centered approach to STEM instruction, in which student identity, background knowledge, and local context inform curricular design [82,83].

By incorporating GenAI tools into problem design, adaptive support, and collaborative critique, the ULV faculty are exploring how mathematics education can be both rigorous and inclusive. In particular, the ability to rapidly generate estimative scenarios—from calculating neighborhood water usage to analyzing local energy trends—has helped make mathematical abstraction more concrete, actionable, and personally relevant. At the same time, the integration of GenAI has raised questions about accuracy, overreliance, and ethical use—requiring that students and faculty alike approach these tools with curiosity, caution, and a critical eye. The following subsections examine how GenAI is being piloted in mathematics instruction at the ULV, highlighting both the opportunities and tensions that arise in efforts to scale culturally responsive, innovation-driven teaching.

7.1. Expanding Conceptual Relevance Through Contextualization

For many students—particularly multilingual, first-generation, and underrepresented learners—mathematics can appear abstract or disconnected from lived experience. GenAI offers a means to bridge this gap by generating locally grounded, socially relevant prompts. For example, AI tools were used to create estimation problems rooted in familiar settings:

- Calculating water savings from drought-tolerant landscaping;

- Modeling household energy use in Southern California;

- Analyzing grocery budgets or solar panel adoption at the neighborhood scale.

These personalized prompts enabled students to approach mathematics not simply as a discipline, but as a tool for understanding and improving their communities. Yet, the instructional benefits of GenAI are accompanied by pedagogical risks. Oversimplification of logic, shallow explanations, or culturally narrow scenarios can reinforce surface learning or exclusion. Faculty at the ULV emphasized the importance of pairing AI use with critical AI literacy, encouraging students to interrogate how problems are framed and whose realities they reflect.

7.2. Practical Integration: Supporting Instruction and Multilingual Access

In The Art of Guestimation, GenAI has been used to:

- Scaffold complex estimation problems;

- Translate instructions or terminology for English learners;

- Rapidly generate instructional materials tailored to local contexts.

Students reported greater confidence when engaging with problems that reflected familiar decisions—such as planning grocery trips, comparing energy sources, or estimating traffic-related delays. GenAI’s multilingual capabilities also supported greater access to instruction, reducing linguistic barriers for non-native English speakers. However, effective implementation required guardrails. Faculty observed that some students leaned on GenAI to produce full answers without engaging in reasoning, risking passive learning. This led to the development of assignments that explicitly require students to critique, annotate, or revise AI outputs, reinforcing active learning and metacognition.

7.3. The Art of Guestimation as a Model for Inclusive AI Use

The course The Art of Guestimation exemplifies how GenAI can be thoughtfully embedded within inclusive, interdisciplinary pedagogy. Rather than simply solving problems, students are asked to perform the following tasks:

- Articulate assumptions;

- Justify units and scaling factors;

- Assess the credibility and logic of AI-generated estimates.

For instance, when estimating population growth or modeling electric vehicle energy consumption, students evaluate the realism of AI suggestions and offer improvements. These exercises emphasize data literacy, civic engagement, and scientific reasoning—skills increasingly essential in an AI-mediated world.

The course also introduces students to core AI ethics topics, including:

- Algorithmic bias;

- Data transparency;

- The social implications of automation.

In doing so, it not only cultivates quantitative fluency, but also supports students as critical, responsible users of emerging technologies.

7.4. Addressing Equity and Infrastructure Challenges

To ensure AI innovations benefit all students, the ULV mathematics faculty have prioritized institutional strategies that address systemic barriers:

- Training and support: workshops for students and faculty on responsible GenAI use help build confidence and fluency.

- AI-critical assignments: tasks that ask students to critique, revise, or improve AI-generated problems foster engagement and discourage overreliance.

- Inclusive content development: ongoing collaboration with developers ensures AI-generated content reflects diverse cultural contexts and lived experiences.

- Digital equity initiatives: Access to devices and reliable internet is essential for inclusive participation. Expanding infrastructure through lending programs and campus Wi-Fi initiatives supports equity in GenAI-enhanced learning.

These strategies align with the ULV’s mission to reduce opportunity gaps and cultivate inclusive academic excellence.

7.5. Illustrative Problems: Real-World Estimation Tasks

The following GenAI-supported examples demonstrate how context-rich prompts can foster deeper engagement:

- Water conservation: Estimate annual water savings from replacing lawns with drought-resistant landscaping. Scale to 1000 households.

- Urban transit: compare energy use between electric and gasoline vehicles during peak traffic across Los Angeles.

- Grocery budgeting: estimate food costs for a family of four and model savings via menu changes or store substitutions.

- Solar energy: calculate energy output and cost savings for a neighborhood installing rooftop solar panels.

- Population growth: model population growth in La Verne over 10 years using different projected growth rates.

Framing estimation in local, real-life terms promotes curiosity and reinforces the utility of mathematics in students’ personal and civic lives.
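To illustrate the kind of reasoning these tasks invite, the sketch below works through two of the prompts above: population growth under several growth rates and water savings scaled to 1000 households. Every numeric input is a hypothetical placeholder chosen only to show the arithmetic, not measured data for La Verne or results from the course; in class, students would be expected to state and defend their own assumptions.

```python
def project_population(initial: float, annual_rate: float, years: int) -> float:
    """Compound growth: P(t) = P0 * (1 + r) ** t."""
    return initial * (1 + annual_rate) ** years


def annual_water_savings(lawn_area_m2: float,
                         lawn_use_l_per_m2: float,
                         reduction_fraction: float,
                         households: int = 1) -> float:
    """Liters saved per year after replacing lawn with drought-tolerant plants.

    lawn_use_l_per_m2 is the assumed yearly irrigation per square meter of
    lawn; reduction_fraction is the assumed share of that water saved.
    """
    per_household = lawn_area_m2 * lawn_use_l_per_m2 * reduction_fraction
    return per_household * households


if __name__ == "__main__":
    start_population = 30_000  # placeholder, not a census figure
    for rate in (0.005, 0.01, 0.02):
        projected = project_population(start_population, rate, 10)
        print(f"Growth rate {rate:.1%}: about {projected:,.0f} people after 10 years")

    # Placeholder inputs: 50 m^2 of lawn, 1000 L per m^2 per year, 50% reduction.
    savings = annual_water_savings(50, 1_000, 0.5, households=1_000)
    print(f"Estimated savings: {savings:,.0f} L per year across 1000 households")
```

Making each assumption an explicit, named input mirrors the course's emphasis on articulating assumptions and justifying units, and it lets students see how sensitive the final estimate is to each one.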

7.6. Future Directions for AI in Math Education

Looking forward, the ULV mathematics faculty are developing new pathways for integrating GenAI tools in ways that deepen student learning, support inclusive pedagogy, and build critical digital fluency. These initiatives aim not only to extend the utility of GenAI across instructional design, but also to investigate its cognitive, cultural, and ethical implications.

- Empirical studies on engagement and retention: Faculty-led research will examine how GenAI-generated estimation problems compare to traditional assignments in promoting conceptual understanding, retention, and motivation—particularly among multilingual and first-generation students. Metrics will include persistence rates, confidence in mathematical reasoning, and attitudes toward problem-solving [84].

- Student–AI co-creation models: Ongoing pilots are exploring how students can engage in co-creating, critiquing, and refining AI-generated prompts. This process invites students to become co-designers of their learning environment, fostering both metacognitive awareness and AI literacy. By reflecting on AI-generated logic and identifying inaccuracies or bias, students sharpen both quantitative and ethical reasoning [85].

- Multimodal AI tools for modeling: Faculty are testing AI platforms that support multimodal inputs—such as text, numerical data, and graphical visualization—to scaffold interdisciplinary mathematical modeling. These tools hold particular promise for students who excel in spatial or verbal reasoning but may struggle with abstract numeracy alone [86].

- Embedded cross-disciplinary modules: To ensure ethical grounding, the ULV mathematics faculty are collaborating with colleagues in philosophy, education, and computer science to co-develop short AI literacy modules. These will address topics such as algorithmic bias, transparency in tool use, and responsible data modeling—equipping students with transferable skills across STEM and non-STEM fields.

Together, these initiatives reflect the ULV’s broader commitment to designing GenAI-enhanced instruction that centers student experience, cultural relevance, and equity. Rather than positioning GenAI as a replacement for faculty-led instruction, our approach treats it as a pedagogical partner that supports critical thinking, deepens engagement, and expands access to high-impact learning opportunities. This direction is consistent with recent findings that highlight how higher education institutions are increasingly exploring collaborative, instructional uses of GenAI to improve student learning outcomes [87].

8. Bridging Scientific Literacy Gaps Through Generative AI in Biology Education

The COVID-19 pandemic exposed longstanding vulnerabilities in public scientific literacy, especially regarding the ability to differentiate between peer-reviewed evidence and anecdotal claims. While regulatory guidance from the FDA and CDC was grounded in decades of accumulated empirical data, public discourse was often dominated by misinformation, social media speculation, and a limited understanding of the scientific process [88,89,90]. This disconnect not only fueled vaccine hesitancy and health misinformation but also reflected a deeper systemic issue: widespread underdevelopment of scientific reasoning skills among both STEM and non-STEM learners [91]. This crisis has renewed calls for institutions of higher education to play a more active role in cultivating critical science literacy, defined not simply as knowledge of facts but as the capacity to evaluate evidence, interpret data, and understand the social and ethical dimensions of scientific inquiry [92,93]. Within this context, GenAI tools, when integrated with intentional scaffolding, provide promising avenues for supporting this goal. These tools can help students critically engage with scientific texts, formulate and communicate evidence-based arguments, and interrogate the credibility of information sources.

At the ULV, biology faculty have begun incorporating GenAI tools across courses for both majors and non-majors to address these literacy gaps. These implementations aim to achieve the following:

- Support students in summarizing and interpreting complex peer-reviewed research;

- Provide real-time feedback on scientific writing and data interpretation;

- Encourage critical engagement with AI-generated explanations, particularly in assignments that require verification and revision;

- Foster transparency and ethical reasoning in the use of AI in academic work.

This approach aligns with the ULV’s broader commitment to inclusive and justice-oriented STEM education, especially for students from historically marginalized backgrounds. In classrooms where students bring diverse linguistic resources and varying levels of familiarity with scientific discourse, GenAI can function as a mediator of, rather than a substitute for, authentic scientific engagement. By enabling access to high-quality explanations, assisting with interpretation of complex texts, and modeling transparent revision practices, GenAI has the potential to democratize participation in scientific inquiry. However, faculty at the ULV are also careful to position GenAI as a tool that must be interrogated rather than blindly trusted. Assignments that focus on evaluating AI-generated summaries, for instance, help students recognize both the affordances and limitations of automated systems in scientific communication. In this way, GenAI serves not just as a learning aid, but as a catalyst for deeper reflection on how knowledge is created, validated, and shared, all of which are core components of scientific literacy in the 21st century.

8.1. Supporting Non-Majors in Navigating Scientific Information

For non-STEM majors, introductory biology courses often present unfamiliar challenges: interpreting scientific literature, evaluating data, and understanding experimental design. To address these barriers, the ULV faculty introduced GenAI as a tool to scaffold engagement with primary literature. Students used ChatGPT to perform the following tasks:

- Summarize peer-reviewed articles;

- Generate annotated bibliographies;

- Evaluate the credibility of sources.

Rather than simply translating complex material into simpler terms, these activities focused on helping students unpack the structure, logic, and implications of scientific claims. This process enabled them to critically differentiate between anecdotal information and empirical evidence—skills that are essential in resisting misinformation and building informed civic perspectives [21,94].

8.2. Enhancing Science Communication Among Biology Majors

Within courses for biology and microbiology majors, GenAI was integrated into communication-intensive assignments such as poster presentations and manuscript writing. Early writing assignments were completed independently to reinforce core competencies. GenAI was then introduced as a revision tool, helping students

- Clarify their key findings;

- Distill takeaways into accessible language;

- Organize poster elements or manuscript sections more effectively.

Crucially, students maintained full authorship of their work, using AI as an editorial assistant rather than a ghostwriter. This supported the development of a scientific voice while offering a platform for ethical discussions on transparency, attribution, and intellectual ownership [71]. These practices also raised important research questions for future exploration: How does AI use shape students’ confidence and ability to communicate science? What supports are needed to ensure equity in these emerging modalities?

8.3. Teaching AI Literacy Through Critical Evaluation

Rather than avoiding the known limitations of GenAI, the ULV faculty incorporated them directly into instruction. Students were tasked with evaluating AI-generated summaries of primary literature, identifying

- Misinterpretations of experimental design;

- Logical inconsistencies;

- Unsupported claims.

These assignments helped students develop critical AI literacy, positioning GenAI not as an authoritative voice but as a collaborator requiring verification. Through this lens, AI errors became case studies in scientific reasoning, reinforcing core concepts in hypothesis testing, source verification, and scientific trust-building. This reflective practice—treating AI as both a tool and an object of critique—also promoted metacognitive awareness of how knowledge is constructed and communicated.

8.4. Broadening Access to Research Communication

By embedding GenAI into structured, low-stakes assignments, biology instructors created inclusive opportunities for students—particularly those from underrepresented backgrounds—to gain confidence in scientific communication. In courses where students revised experimental designs, created visual abstracts, or generated manuscript drafts with AI support, faculty noted marked improvements in clarity, coherence, and ownership of content.

For students unfamiliar with scholarly publishing, GenAI provided a bridge to understanding formatting expectations, peer-review norms, and disciplinary discourse. These interventions align with the HSI goals of expanding access to high-impact educational practices, including undergraduate research, public presentation, and authorship [20]. The result was a more democratized entry into the scientific community—an essential goal for equity-driven biology education.

8.5. Future Directions: Institutionalizing Scientific Literacy in the AI Era

The experiences at the ULV point to a broader imperative: to embed GenAI into STEM curricula in ways that not only enhance learning outcomes but also cultivate scientific literacy, civic reasoning, and ethical AI engagement. Moving forward, efforts must shift from ad hoc integration toward institutionally scaffolded strategies that prepare students to navigate and shape a scientific landscape increasingly mediated by intelligent systems. Four directions follow from the ULV experience:

- Cross-disciplinary AI literacy modules: Faculty across disciplines are exploring shared curricula that introduce students to core concepts in AI use—such as source evaluation, algorithmic bias, transparency, and attribution ethics. These modules can be integrated into first-year seminars, science writing courses, or lab-based learning environments and aligned with information literacy frameworks commonly developed in partnership with library science [95,96].

- Empirical studies on science communication outcomes: The ULV aims to support faculty-led research that evaluates how GenAI affects students’ ability to articulate scientific claims, construct arguments, and adapt content for public or interdisciplinary audiences. These studies should also disaggregate by demographic indicators to track whether AI-supported instruction helps close—or unintentionally widens—existing participation gaps [35].

- Partnerships with library and information science: To strengthen students’ ability to distinguish credible information from misinformation, collaborations with librarians can help scaffold AI-supported inquiry skills, particularly in navigating scientific databases and interpreting source authority. These collaborations are especially relevant given the surge in AI-generated citations, abstracts, and preprints with variable credibility [97,98].

- Digital equity initiatives: Finally, the success of any institutional GenAI strategy depends on equitable access. Students from low-income, undocumented, or commuter backgrounds often face disproportionate barriers to AI tools and training. Investments in device lending programs, campus-wide AI literacy workshops, and inclusive technology policies are essential to ensure full participation in GenAI-enhanced research and learning [81,99].

Ultimately, GenAI is not a substitute for rigorous science education, nor is it a fix-all for systemic inequities. However, when approached as a sociotechnical scaffold—one that supports student agency, democratizes access to disciplinary discourse, and fosters reflective practice—it becomes a powerful tool for inclusive scientific engagement [100]. In bridging the gap between research and public understanding, GenAI offers not just a teaching innovation, but a cultural shift in how we prepare students to understand, critique, and contribute to scientific knowledge.

9. Generative AI as a Coding Mentor: Expanding Access to Computational Research in Physics

GenAI is increasingly transforming undergraduate STEM education by expanding access to research experiences that have traditionally been limited to upper-division students with advanced coursework. At the ULV, physics faculty are piloting GenAI tools as real-time coding mentors within computational research settings. This approach is particularly focused on supporting early-stage students—including first- and second-year learners—who are often excluded from research due to their limited programming experience or lack of exposure to faculty-led projects. This work directly addresses a persistent equity gap in physics education. Nationally, over 70% of students who leave the physics major do so within their first two years, frequently citing a lack of early research opportunities, difficulty accessing mentorship, and limited confidence in computational skills [101,102]. These barriers disproportionately affect first-generation students, women, and students from underrepresented racial and ethnic groups, who may be less likely to enter physics with prior programming experience or advanced mathematical preparation [103].

To disrupt these exclusionary patterns, the ULV faculty integrated GenAI—specifically ChatGPT—into a National Science Foundation (NSF)-funded planetary science project that engages students in analyzing four decades of NASA solar wind data. Students developed deep learning models to forecast geomagnetic storms—complex natural events that affect the ionosphere, satellite communications, and power grids. Through this project, students gained hands-on exposure to data science, machine learning, and scientific computing, all while receiving just-in-time, AI-enabled support. Importantly, the initiative welcomed students from diverse academic pathways—not only physics majors but also those in chemistry, mathematics, and computer science. This interdisciplinary model reflects the increasing role of data-intensive inquiry across STEM fields and aligns with national calls to diversify participation in research through scalable, inclusive mentorship models [104]. GenAI served as an on-demand assistant, helping students interpret errors, troubleshoot code, and learn key programming constructs without the intimidation often associated with self-teaching.

This pilot not only democratized access to high-impact learning experiences but also offered new insights into the evolving role of GenAI in undergraduate research mentorship—particularly in disciplines where faculty capacity and student preparation remain uneven.

9.1. Scaffolding Early Research in Space Weather Forecasting

The core research project focused on developing machine learning models to forecast geomagnetic storms from more than four decades of NASA solar wind data. These storms, caused by solar activity, can disrupt communications, satellites, and power systems. To contribute meaningfully, students needed to rapidly acquire skills in Python programming (Python 3.13.5, Anaconda distribution), data wrangling, and deep learning.

The ULV’s instructional design offered three scaffolding supports:

- Faculty mentorship: students attended 15 h per week of small-group sessions that provided guided instruction and real-time troubleshooting.

- Independent learning resources: Python tutorials and data science documentation supported self-paced learning.

- AI-driven support: ChatGPT acted as an always-available tutor—interpreting error messages, generating code snippets, and offering conceptual explanations.

Together, these supports allowed early-career students to engage with advanced research while developing foundational computational fluency.
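As a rough illustration of the data wrangling such a forecasting project involves, the sketch below turns a time series into fixed-length input windows, each paired with the later value a model would be asked to predict. The function name `make_windows`, the window length, the forecast horizon, and the synthetic values are all assumptions made for illustration; the students' actual preprocessing is not described here.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[float], window: int, horizon: int = 1
) -> Tuple[List[List[float]], List[float]]:
    """Split a time series into (input window, future target) pairs.

    Each input holds `window` consecutive values; its target is the value
    `horizon` steps after the last value in that window.
    """
    if window < 1 or horizon < 1:
        raise ValueError("window and horizon must be positive integers")
    inputs: List[List[float]] = []
    targets: List[float] = []
    # Last start index that still leaves room for the window and its target.
    last_start = len(series) - window - horizon
    for start in range(last_start + 1):
        end = start + window
        inputs.append(list(series[start:end]))
        targets.append(series[end + horizon - 1])
    return inputs, targets


# Synthetic stand-in for an hourly measurement (hypothetical values,
# not real solar wind data).
hourly_values = [400.0, 410.0, 430.0, 470.0, 520.0, 500.0, 480.0]
X, y = make_windows(hourly_values, window=3, horizon=2)
```

Framing the problem this way lets the same window and target pairs feed a simple baseline or a recurrent model, which can help beginners compare approaches on identical inputs.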

9.2. Real-World Use Cases: GenAI in Student Research Workflows

Students integrated ChatGPT into various aspects of their computational workflow:

- Code generation: when students encountered unfamiliar syntax or libraries (e.g., pandas, NumPy, TensorFlow), they used AI to generate and explain code snippets tailored to their project needs.

- Algorithm design: ChatGPT provided step-by-step explanations of machine learning architectures such as Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) models, breaking down logic and implementation.

- Code translation: students with backgrounds in MATLAB (version R2023a), C++, or Java used GenAI to translate familiar logic into Python, accelerating language acquisition across platforms.

- Optimization and debugging: ChatGPT was used to troubleshoot bugs, improve runtime, and diagnose logic errors, turning technical roadblocks into learning opportunities.
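To make the algorithm design use case concrete, the following is a minimal sketch of one forward step of a standard LSTM cell, written in plain NumPy rather than TensorFlow so that each gate is visible. It is not the students' model: the function names, the weight layout (gates stacked in the order input, forget, output, candidate), the layer sizes, and the random inputs are all illustrative assumptions.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    """Logistic function, squashing values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One forward step of a standard LSTM cell.

    Shapes: W is (4*hidden, n_inputs), U is (4*hidden, hidden),
    b is (4*hidden,). Gate rows are stacked in the order
    input, forget, output, candidate (an assumed layout).
    """
    hidden = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:hidden])                   # input gate: how much new information to admit
    f = sigmoid(z[hidden:2 * hidden])          # forget gate: how much old memory to keep
    o = sigmoid(z[2 * hidden:3 * hidden])      # output gate: how much memory to expose
    g = np.tanh(z[3 * hidden:4 * hidden])      # candidate values for the cell state
    c_t = f * c_prev + i * g                   # updated cell state (long-term memory)
    h_t = o * np.tanh(c_t)                     # updated hidden state (step output)
    return h_t, c_t


# Run a short random sequence through the cell (hypothetical sizes and data).
rng = np.random.default_rng(0)
n_inputs, hidden = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden, n_inputs))
U = rng.normal(scale=0.1, size=(4 * hidden, hidden))
b = np.zeros(4 * hidden)

h = np.zeros(hidden)
c = np.zeros(hidden)
sequence = rng.normal(size=(5, n_inputs))
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, W, U, b)
```

Writing the step out by hand is mainly a learning device; in practice a library layer would replace it, but the gate-by-gate form is what a step-by-step explanation typically walks through.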

These applications demonstrate how AI can support agency and problem-solving, especially for students navigating complex coding challenges for the first time.
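The code translation use case can be illustrated with a small, hypothetical example: a MATLAB loop (shown in comments) rewritten in Python, first line by line and then idiomatically. The function names and the root-mean-square task are assumptions chosen for illustration, not code from the project.

```python
import math
from typing import Sequence

# Hypothetical MATLAB original:
#   total = 0;
#   for k = 1:numel(v)
#       total = total + v(k)^2;
#   end
#   r = sqrt(total / numel(v));


def root_mean_square(values: Sequence[float]) -> float:
    """Line-by-line translation of the MATLAB loop above."""
    if len(values) == 0:
        raise ValueError("values must not be empty")
    total = 0.0
    for k in range(len(values)):  # Python indices run 0..n-1, not 1..n
        total += values[k] ** 2   # ** replaces MATLAB's ^ operator
    return math.sqrt(total / len(values))


def root_mean_square_idiomatic(values: Sequence[float]) -> float:
    """Same computation written the way Python code is usually structured."""
    if len(values) == 0:
        raise ValueError("values must not be empty")
    return math.sqrt(sum(v * v for v in values) / len(values))
```

The two details most likely to trip up a student coming from MATLAB appear in the comments: zero-based indexing and the `**` power operator. Comparing the literal and idiomatic versions on the same input is a quick way to confirm that a translation preserved the original logic.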

9.3. Building AI Literacy Through Verification and Ethical Use

To ensure responsible use, faculty at the ULV emphasized the critical evaluation of AI outputs. Rather than positioning GenAI as infallible, instructors encouraged students to treat it as a learning partner—one that occasionally makes mistakes.

- Verification: students were trained to cross-reference AI-generated code with trusted documentation and test functionality across multiple datasets.

- Transparency: code generated with AI required student annotation and conceptual justification to ensure full intellectual ownership.

- Ethical engagement: classroom discussions focused on AI authorship, attribution, and responsible integration into collaborative work.

These practices reinforced the principle that GenAI should scaffold—not substitute—scientific reasoning. This aligns with the broader ethical framework presented throughout the manuscript, emphasizing transparency, academic integrity, and metacognition.
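The verification practice described above can be sketched as follows, assuming a hypothetical AI-suggested helper (`zscore_candidate`) that standardizes a list of values. The candidate is checked against Python's documented `statistics` module on several small datasets, and then on an edge case that the ordinary datasets miss. All names and data here are illustrative.

```python
import math
import statistics
from typing import List, Sequence


def zscore_candidate(values: Sequence[float]) -> List[float]:
    """Stand-in for an AI-suggested helper: rescale to mean 0, std 1."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n  # population variance
    std = math.sqrt(variance)
    return [(v - mean) / std for v in values]


def verify_against_reference(values: Sequence[float]) -> bool:
    """Compare the candidate with results built from the statistics module."""
    ref_mean = statistics.fmean(values)
    ref_std = statistics.pstdev(values)
    expected = [(v - ref_mean) / ref_std for v in values]
    actual = zscore_candidate(values)
    return all(
        math.isclose(a, e, rel_tol=1e-9, abs_tol=1e-12)
        for a, e in zip(actual, expected)
    )


# Several datasets with different scales and signs (hypothetical values).
test_datasets = {
    "small integers": [1.0, 2.0, 3.0, 4.0],
    "negative and positive": [-5.0, 0.0, 5.0, 10.0],
    "large offset": [1000.0, 1001.0, 1003.0, 1006.0],
}
results = {name: verify_against_reference(data) for name, data in test_datasets.items()}

# Edge case: constant input has zero spread, so the candidate divides by zero.
try:
    zscore_candidate([2.0, 2.0, 2.0])
    constant_input_ok = True
except ZeroDivisionError:
    constant_input_ok = False
```

In this sketch the candidate agrees with the reference on all three ordinary datasets but fails on constant input, the kind of gap that annotation and conceptual justification are meant to surface before generated code enters a research workflow.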

9.4. Expanding Equity Through Early Access to Research

The initiative also addressed structural barriers to research participation. By embedding AI in NSF-funded summer internships, the ULV faculty achieved the following:

- Expanded participation: students from historically excluded backgrounds—including those without prior programming experience—were able to contribute meaningfully to a cutting-edge research project.

- Supported financial equity: paid research opportunities helped reduce financial barriers, particularly for commuter and working students.

- Built research identity: students prepared conference presentations and co-authored publications, accelerating their integration into the scientific community.

This model demonstrates how GenAI, when combined with mentorship and funding, can advance inclusive excellence in undergraduate STEM education.

9.5. Future Directions: Designing Scalable AI-Supported Research Training

Building on this success, the ULV’s physics faculty are exploring scalable innovations to expand AI-supported research training and make early computational experience more equitable, particularly for students from historically excluded groups in STEM. These future directions aim to institutionalize access, deepen learning, and bridge the gap between AI literacy and authentic research participation:

- Open-access pre-research modules: Faculty are co-developing modular, GenAI-augmented tutorials that introduce foundational coding skills (e.g., Python, pandas, matplotlib) and core machine learning concepts (e.g., classification, regression) to lower-division students. These resources serve as an on-ramp for students without prior programming exposure, addressing early attrition risks often linked to technical intimidation [105].

- Peer-led AI coaching models: Advanced students are being trained to support their peers not only in debugging code and interpreting model outputs, but also in critically assessing AI-generated content. This mentorship model cultivates a collaborative learning culture while distributing the cognitive load of research onboarding [106].