Three-Step Derivative-Free Method of Order Six

Abstract

1. Introduction

- (a) The existence of high-order derivatives that are not present in the method is required.

- (b)

- (c) An a priori error analysis is not provided.

- (d) Results on the isolation of the solution are not provided either.

- (e) The more challenging and important semi-local analysis (SLA) is not given.

- (a)′

- (b)′ The convergence analysis is carried out in the more general setting of Banach space valued operators, not only in a finite-dimensional setting.

- (c)′ An a priori error analysis is provided to determine upper bounds on the error. This allows the number of iterations required to achieve a predetermined error tolerance to be determined in advance.

- (d)′ Computational results on the isolation of solutions are developed based on generalized continuity conditions [3,4,5,6,7,8] on the divided differences (see the conditions in Section 2). It is also worth noting that the usual conditions in the convergence analysis of this and the other methods in the aforementioned references require the derivative of F at the solution to be invertible. That is, the solution must be a simple solution of the equation, although the derivative does not appear in Method (3). Thus, the earlier results in [13] cannot assure the convergence of Method (3) when the operator F is nondifferentiable, although the method may converge. The conditions of our approach, however, do not require the derivative to exist or be invertible. Thus, our approach can be used to solve equations like (1) when the operator is nondifferentiable.

- (e)′

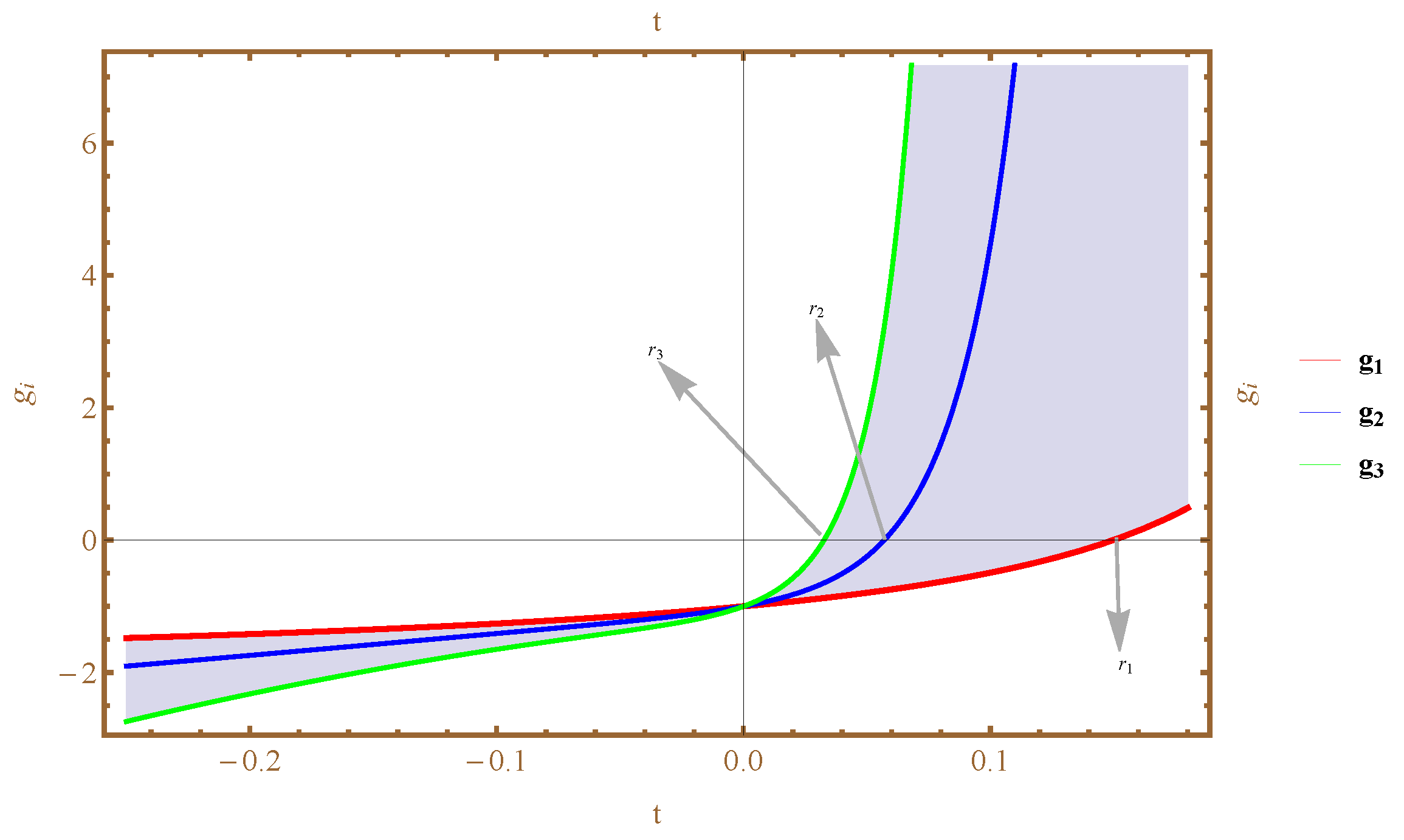
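The role of an a priori bound in item (c)′ can be illustrated with a minimal sketch. It assumes, purely for illustration, an error recursion of the form `e_{n+1} <= C * e_n**6`, matching the order six in the title. The constant `C`, the initial error `e0`, and the function name `iterations_needed` are hypothetical and are not the bounds derived in this paper.

```python
def iterations_needed(e0, C, tol, order=6, max_iter=100):
    """Count iterations until the assumed bound e_{n+1} <= C * e_n**order drops to tol."""
    if e0 <= 0 or C <= 0 or tol <= 0:
        raise ValueError("e0, C and tol must be positive")
    # The assumed bound only contracts when C * e0**(order - 1) < 1.
    if C * e0 ** (order - 1) >= 1:
        raise ValueError("assumed bound does not contract for this e0 and C")
    n, bound = 0, e0
    while bound > tol:
        bound = C * bound ** order  # apply the assumed recursion once
        n += 1
        if n > max_iter:
            raise RuntimeError("tolerance not reached within max_iter")
    return n


# Hypothetical data: initial error 0.5, constant 1, target tolerance 1e-10.
print(iterations_needed(e0=0.5, C=1.0, tol=1e-10))
```

Because the count depends only on `e0`, `C`, and the tolerance, it can be computed before any iterate is evaluated, which is the practical benefit of an a priori bound.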

2. Local Analysis

- There exist continuous and nondecreasing functions and such that equation has a positive solution, and the smallest positive solution (PSS) is denoted by Let .

- There exists an invertible linear operator L and with so that for , where . We let

- There exist continuous and nondecreasing functions and for each ,

- Equation has a PSS denoted by where We define function by

- Equations , have PSS denoted by respectively, where are and

- with , It is implied, if , that and

- (i) The real functions and are left uncluttered in Theorem 1, but some choices are motivated by calculations. Thus, we can choose and similarly

- (ii) Conditions can be expressed without and like, for example, for all where is as . But then, we must require r in to be Notice, however, that condition is stronger than and function is less tight than

- (iii) The linear operator L is chosen so that the functions are as tight as possible. Some popular choices are: (the differentiable case) or , (the non-differentiable case). It is worth noting that the invertibility of is not assumed or implied.
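To make the divided-difference idea concrete, the following sketch implements the scalar first-order divided difference and the classical Steffensen iteration [1]. It is not Method (3) of this paper. The test function `F`, the starting point, and the tolerances are assumptions chosen for illustration; `F` is nondifferentiable at 0, and the iteration never evaluates a derivative.

```python
def divided_difference(F, x, y):
    """First-order divided difference [x, y; F] = (F(x) - F(y)) / (x - y) for scalars."""
    if x == y:
        raise ValueError("divided difference needs distinct points")
    return (F(x) - F(y)) / (x - y)


def steffensen(F, x0, tol=1e-12, max_iter=50):
    """Classical Steffensen iteration: x_{n+1} = x_n - F(x_n) / [x_n + F(x_n), x_n; F]."""
    x = x0
    for _ in range(max_iter):
        fx = F(x)
        if abs(fx) < tol:  # residual small enough: accept x
            return x
        dd = divided_difference(F, x + fx, x)
        if dd == 0:
            raise ZeroDivisionError("divided difference vanished")
        x = x - fx / dd
    raise RuntimeError("no convergence within max_iter")


def F(x):
    # Hypothetical test function with a kink at 0; F(1) = 0.
    return x ** 3 + abs(x) - 2.0


root = steffensen(F, x0=1.5)
print(root, F(root))
```

In a Banach space setting the quotient is replaced by a linear operator satisfying the divided-difference identity, and the division becomes the solution of a linear equation; the scalar case is used here only to keep the sketch short.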

3. Semi-Local Analysis

- There exist continuous and nondecreasing functions and such that equation has a PSS denoted by We let and

- There exist an initial point and an invertible linear operator L such that and for each Notice that conditions and offer, for , Thus, is invertible and we can set for some

- There exists a continuous and nondecreasing function so that for ; We define the scalar sequence , and for and each by and

- There exists , so Moreover, so
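Several conditions in Sections 2 and 3 ask for the smallest positive solution (PSS) of a scalar equation. When no closed form is available, such a value can be approximated numerically. The sketch below scans a grid for the first sign change and then bisects. The function `phi0`, the search interval, and the name `smallest_positive_solution` are hypothetical and do not come from the paper.

```python
def smallest_positive_solution(g, upper, steps=10_000, tol=1e-12):
    """Approximate the smallest root of g in (0, upper] by a grid scan plus bisection.

    Roots without a sign change, or closer together than upper / steps, can be missed.
    """
    h = upper / steps
    lo, g_lo = 0.0, g(0.0)
    for k in range(1, steps + 1):
        hi = k * h
        g_hi = g(hi)
        if g_hi == 0.0:
            return hi
        if g_lo * g_hi < 0.0:
            # Bisection on the first bracketing interval [lo, hi].
            while hi - lo > tol:
                mid = 0.5 * (lo + hi)
                g_mid = g(mid)
                if g_mid == 0.0:
                    return mid
                if g_lo * g_mid < 0.0:
                    hi = mid
                else:
                    lo, g_lo = mid, g_mid
            return 0.5 * (lo + hi)
        lo, g_lo = hi, g_hi
    raise ValueError("no sign change found in (0, upper]")


def phi0(t):
    # Hypothetical continuous, nondecreasing function on [0, inf).
    return t + t * t


# PSS of phi0(t) - 1 = 0, searched on (0, 2].
rho = smallest_positive_solution(lambda t: phi0(t) - 1.0, upper=2.0)
print(rho)
```

For this choice of `phi0` the equation is `t**2 + t - 1 = 0`, so the result can be checked against the closed form `(5 ** 0.5 - 1) / 2`.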

4. Numerical Tests

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement

References

- Steffensen, I.F. Remarks on iteration. Scand. Actuar. J. 1933, 16, 64–72.

- Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

- Argyros, I.K. Computational Theory of Iterative Methods; Series: Studies in Computational Mathematics; Chui, C.K., Wuytack, L., Eds.; Elsevier Publ. Co.: New York, NY, USA, 2007.

- Liu, Z.; Zheng, Q.; Zhao, P. A variant of Steffensen’s method of fourth-order convergence and its applications. Appl. Math. Comput. 2010, 216, 1978–1983.

- Grau-Sánchez, M.; Grau, Á.; Noguera, M. Frozen divided difference scheme for solving systems of nonlinear equations. J. Comput. Appl. Math. 2011, 253, 1739–1743.

- Ezquerro, J.A.; Hernández, M.A.; Romero, N. Solving nonlinear integral equations of Fredholm type with high order iterative methods. J. Comput. Appl. Math. 2011, 36, 1449–1463.

- Ezquerro, J.A.; Grau, Á.; Grau-Sánchez, M.; Hernández, M.A.; Noguera, M. Analysing the efficiency of some modifications of the secant method. Comput. Math. Appl. 2012, 64, 2066–2073.

- Grau-Sánchez, M.; Noguera, M. A technique to choose the most efficient method between secant method and some variants. Appl. Math. Comput. 2012, 218, 6415–6426.

- Awawdeh, F. On new iterative method for solving systems of nonlinear equations. Numer. Algorithms 2010, 54, 395–409.

- Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. A modified Newton-Jarratt’s composition. Numer. Algorithms 2010, 55, 87–99.

- Grau-Sánchez, M.; Noguera, M.; Amat, S. On the approximation of derivatives using divided difference operators preserving the local convergence order of iterative methods. J. Comput. Appl. Math. 2013, 237, 363–372.

- Wang, X.; Zhang, T.; Qian, W.; Teng, M. Seventh-order derivative-free iterative method for solving nonlinear systems. Numer. Algorithms 2015, 70, 545–558.

- Kansal, M.; Cordero, A.; Bhalla, S.; Torregrosa, J.R. Memory in a new variant of King’s family for solving nonlinear systems. Mathematics 2020, 8, 1251.

- Sharma, J.R.; Kumar, S.; Argyros, I.K. Generalized Kung-Traub method and its multi-step iteration in Banach spaces. J. Complex. 2019, 54, 101400.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kumar, S.; Sharma, J.R.; Argyros, I.K.; Regmi, S. Three-Step Derivative-Free Method of Order Six. Foundations 2023, 3, 573-588. https://doi.org/10.3390/foundations3030034