Advancing Intelligent Logistics: YOLO-Based Object Detection with Modified Loss Functions for X-Ray Cargo Screening

Abstract

1. Introduction

- YOLO-based object-detection models trained with advanced IoU-based loss functions (GIoU, DIoU, CIoU, WIoU) achieve a statistically significant improvement in accuracy compared to models using the standard IoU loss function on cargo X-ray datasets.

- The combination of advanced IoU-based loss functions and Soft-NMS significantly reduces the rates of false positives and false negatives, particularly for occluded or overlapping prohibited items in X-ray images of cargo.

- The integration of advanced IoU-based loss functions into the latest YOLO architecture offers a better trade-off between detection accuracy and computational efficiency for real-time X-ray cargo inspection than other popular deep learning object-detection architectures such as DETR (DEtection TRansformer) and Faster R-CNN (Region-based Convolutional Neural Network).

- Benchmarks the standard IoU loss against advanced variants (GIoU, DIoU, CIoU, and WIoU) to determine their effectiveness in addressing domain-specific challenges, such as occlusion and overlapping objects, on a specialized cargo X-ray dataset.

- Demonstrates the effectiveness of modified IoU variants in optimizing object detection in X-ray cargo inspection, addressing a significant gap in the existing research.

- Highlights the scarcity of annotated cargo X-ray datasets, encouraging further research, dataset development, and collaboration in this important area of security inspection.

- Incorporates a Soft-NMS mechanism into the latest YOLO version, YOLOv11, enhancing detection accuracy, especially for challenging categories of contraband items.

2. Background and Related Works

2.1. Modern YOLO Architecture

- Backbone: The backbone extracts features from the input image and is designed for efficiency. It often employs architectures such as EfficientNet [22] or CSPNet [23], which capture essential features from the input image while minimizing the number of parameters and operations. Beyond efficient architectures, the backbone may incorporate attention mechanisms to further enhance feature representation. These mechanisms help the network focus on the most relevant parts of the image, improving the discriminative power of the learned features.

- Neck: The neck is a crucial component that connects the backbone to the head. Its primary role is to combine features from different levels of the backbone, creating a feature pyramid that captures information at multiple scales. Feature Pyramid Networks (FPNs) [24] are commonly used in the neck, and variants like PAN (Path Aggregation Network) [25] further improve feature flow and information exchange between different levels of the pyramid. The neck’s focus is on efficient feature fusion, ensuring minimal information loss while combining features from different scales.

- Head: The head makes the final predictions, including object location, class, and confidence score. It typically consists of several components: objectness prediction, classification, and regression. Objectness prediction estimates the probability that an object is present in a specific region. Classification assigns a class label to the detected object (e.g., person or car). Regression refines the bounding-box coordinates to localize the object accurately within the image. The head is trained with loss functions that balance classification and localization accuracy.

2.2. Performance Metrics in Object Detection

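- Model size (Parameter Count/PC) [10]: The total number of learnable parameters (weights and biases), or the corresponding storage size of the model, which can be reduced through compression techniques such as pruning and quantization. For a convolutional layer, the parameter count is
  $$PC = K^{2} \cdot C_{in} \cdot C_{out} + \mathbb{1}_{bias} \cdot M \tag{1}$$
  Here, $C_{in}$ represents the number of input channels, $C_{out}$ is the number of output channels, $K$ denotes the size of the convolutional kernel, $M$ is the number of convolutional kernels (each contributing one bias term; for a standard convolution, $M = C_{out}$), and $\mathbb{1}_{bias}$ is an indicator function that equals 1 if the bias term is included and 0 otherwise.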

- FLOPs (Floating-Point Operations) [37]: The number of floating-point operations that a model requires during inference. This is distinct from FLOPS (floating-point operations per second), which measures hardware throughput. FLOPs is a key metric for evaluating the efficiency of an object-detection model because it quantifies computational complexity. That complexity strongly influences inference speed, although it does not fully determine it. Reducing FLOPs often reduces power consumption, which is critical for mobile and embedded AI. FLOPs are calculated differently for convolutional and fully connected layers, using Equations (2) and (3), respectively [38]:
  $$\mathrm{FLOPs}_{conv} = 2HW\left(C_{in}K^{2} + 1\right)C_{out} \tag{2}$$
  $$\mathrm{FLOPs}_{fc} = (2I - 1)\,O \tag{3}$$
  In these equations:
  - $K$ denotes the kernel size.
  - $H$ and $W$ represent the spatial dimensions (height and width) of the output feature map.
  - $C_{in}$ and $C_{out}$ indicate the numbers of channels in the input and output layers, respectively.
  - $I$ and $O$ denote the numbers of neurons in the input and output layers.

- Model inference (latency): Latency refers to the time it takes for the model to process a single input (image or frame) and produce an output (detection or prediction). It is often measured in milliseconds (ms). Lower latency means the system can process inputs faster, which is critical for real-time applications.

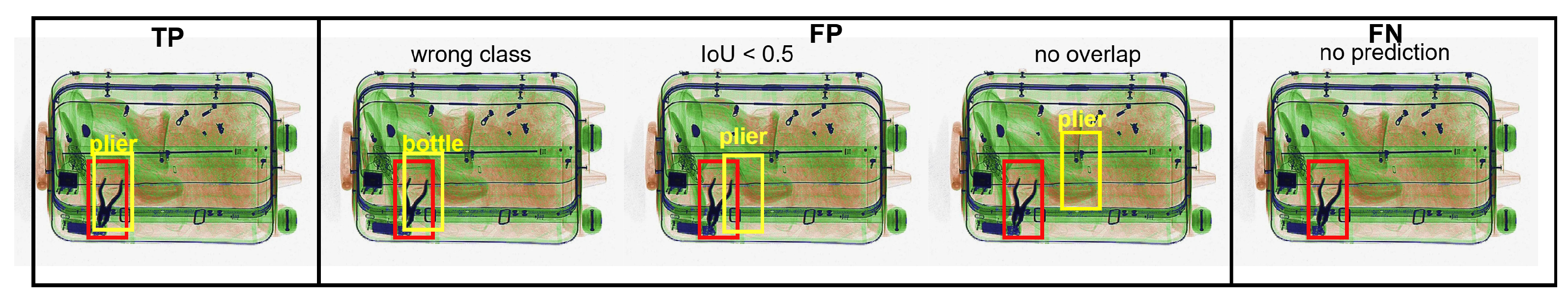

- Model accuracy: Detection accuracy is evaluated using Precision and Recall, expressed in Equations (4) and (5), respectively:
  $$Precision = \frac{TP}{TP + FP} \tag{4}$$
  $$Recall = \frac{TP}{TP + FN} \tag{5}$$
  The counts in these equations are defined as follows:
  - $TP$ (True Positives) counts instances correctly detected as positive.
  - $FP$ (False Positives) counts instances incorrectly detected as positive when they are actually negative.
  - $FN$ (False Negatives) counts instances incorrectly detected as negative when they are actually positive.

  Because object detection involves both classification and localization, a detection is counted as positive only if it satisfies both criteria. Otherwise, it is not counted as a correct detection. Classification positivity is straightforward: it depends on whether the predicted class matches the ground truth. Localization positivity, in contrast, is determined primarily by the Intersection over Union (IoU), defined in Equation (6). A detection is considered correctly localized if its IoU with the ground-truth box exceeds a predefined threshold (e.g., 0.5). An illustration of detection accuracy in object detection is presented in Figure 3.

  The F1-score is the harmonic mean of Precision and Recall, so both metrics are weighted equally:
  $$F1 = \frac{2 \times Precision \times Recall}{Precision + Recall}$$
  Denoting the predicted bounding box as $B_{p}$ and the ground-truth box as $B_{gt}$, the Intersection over Union (IoU) is
  $$IoU = \frac{\left| B_{p} \cap B_{gt} \right|}{\left| B_{p} \cup B_{gt} \right|} \tag{6}$$
  When $B_{p}$ and $B_{gt}$ do not intersect, IoU is 0; when they overlap perfectly, IoU is 1. IoU therefore ranges from 0 to 1, with higher values indicating closer agreement between the predicted and ground-truth boxes. Like other detectors, YOLO models commonly use IoU-based bounding-box regression losses. The simplest of these is $\mathcal{L}_{IoU} = 1 - IoU$.

  Ideally, both Precision and Recall would be high. In practice, however, the two metrics frequently trade off against each other, which makes it difficult to compare detection accuracy intuitively. Object detection therefore uses a more comprehensive metric, the mean average precision (mAP), shown in Equation (8):
  $$mAP = \frac{1}{m} \sum_{i=1}^{m} AP_{i} \tag{8}$$
  Here, $m$ represents the number of object categories to be detected, and $AP_{i}$ denotes the average precision for category $i$, i.e., the area under its Precision–Recall curve.
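
Equations (1) to (3) translate directly into a few lines of code. The plain-Python sketch below is illustrative only, and the function names are hypothetical. It makes the following assumptions:

- `conv_params` assumes a standard (non-grouped) convolution, so that $M = C_{out}$.
- `conv_flops` follows the convention of Equation (2), which counts multiplications and additions separately and includes the bias. Profiling tools that report multiply-accumulate operations (MACs) instead can give figures differing by about a factor of two.

A median-of-runs latency timer with warm-up is also included as one common way to measure per-image latency. It is not the benchmarking protocol used in this study.

```python
import statistics
import time


def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Equation (1): K*K*C_in weights per kernel, plus one bias per kernel."""
    m = c_out  # number of kernels in a standard (non-grouped) convolution
    return k * k * c_in * c_out + (1 if bias else 0) * m


def conv_flops(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """Equation (2): FLOPs = 2 * H * W * (C_in * K^2 + 1) * C_out."""
    return 2 * h_out * w_out * (c_in * k * k + 1) * c_out


def fc_flops(n_in: int, n_out: int) -> int:
    """Equation (3): FLOPs = (2I - 1) * O for a fully connected layer."""
    return (2 * n_in - 1) * n_out


def median_latency_ms(fn, x, warmup: int = 5, runs: int = 50) -> float:
    """Median wall-clock time of fn(x) in milliseconds, after warm-up calls."""
    for _ in range(warmup):
        fn(x)  # discard the first calls (caching, lazy initialization)
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(x)
        times.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(times)


if __name__ == "__main__":
    # Hypothetical 3x3 convolution: 64 -> 128 channels, 80x80 output map.
    print(conv_params(64, 128, 3))            # 73856
    print(conv_flops(64, 128, 3, 80, 80))     # 945356800
    print(fc_flops(1024, 10))                 # 20470
```

For a convolution with bias, Equation (2) equals $2HW \times PC$. Per-layer FLOPs therefore scale with both the parameter count and the output resolution, which is why the same backbone costs more at larger input sizes.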
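
The accuracy criteria described above can be sketched in plain Python. A detection must match the ground-truth class, and its IoU (Equation (6)) must exceed the threshold. The matcher below is a simplified greedy version written for illustration, with these assumptions:

- Boxes are `(x1, y1, x2, y2)` tuples.
- Predictions are processed in descending confidence.
- Each ground-truth box can be matched at most once.
- All function names are hypothetical.

Benchmark evaluators such as the PASCAL VOC [43] and COCO toolkits differ in details (for example, whether the threshold comparison is strict), so this is not a drop-in replacement for them.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes, Equation (6)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_tp_fp_fn(preds: List[Tuple[Box, int, float]],
                   gts: List[Tuple[Box, int]],
                   iou_thr: float = 0.5) -> Tuple[int, int, int]:
    """A prediction is a TP if its class matches an unmatched ground truth and
    their IoU exceeds iou_thr; otherwise it is an FP. Unmatched ground truths
    are FNs. preds are (box, class_id, score); gts are (box, class_id)."""
    matched = [False] * len(gts)
    tp = fp = 0
    for box, cls, _score in sorted(preds, key=lambda p: p[2], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, (gt_box, gt_cls) in enumerate(gts):
            if matched[j] or gt_cls != cls:
                continue  # classification must be correct as well
            overlap = iou(box, gt_box)
            if overlap > best_iou:  # localization must exceed the threshold
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
    return tp, fp, len(gts) - tp


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Equations (4) and (5), plus the F1-score."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r, f1_score(p, r)


if __name__ == "__main__":
    gts = [((0, 0, 10, 10), 0), ((20, 20, 30, 30), 1)]
    preds = [((1, 1, 10, 10), 0, 0.9),     # IoU 0.81 with the class-0 box: TP
             ((20, 20, 30, 30), 0, 0.8),   # right place, wrong class: FP
             ((50, 50, 60, 60), 1, 0.7)]   # no overlap: FP
    tp, fp, fn = count_tp_fp_fn(preds, gts)
    print(tp, fp, fn)                      # 1 2 1
    print(precision_recall_f1(tp, fp, fn))
```

The same `f1_score` helper reproduces the F1 column of the result tables from their Precision and Recall columns. For example, Precision = 95.9 and Recall = 96.0 give F1 ≈ 95.95.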
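
Equation (8) needs a per-class AP. The sketch below computes AP as the area under the Precision–Recall curve using the all-point interpolation of the PASCAL VOC protocol [43]: precision is first made monotonically non-increasing in recall, then integrated.

The inputs are one confidence score and one TP/FP flag per detection of a class, for instance from a matching step like the one described above, together with the number of ground-truth objects. The function names are hypothetical. Other toolkits, such as COCO's 101-point scheme, can give slightly different values.

The mAP@50:95 columns in the result tables average mAP over IoU thresholds from 0.50 to 0.95 in steps of 0.05. Under that metric, the TP/FP flags would be recomputed at each threshold.

```python
from typing import Dict, Sequence


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], n_gt: int) -> float:
    """Area under the Precision-Recall curve for one class (all-point interpolation)."""
    if n_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    recalls, precisions = [], []
    tp = fp = 0
    for i in order:  # lower the confidence threshold one detection at a time
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Pad the curve, then make precision non-increasing as recall grows.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas; segments where recall does not change add zero.
    return sum((mrec[k] - mrec[k - 1]) * mpre[k] for k in range(1, len(mrec)))


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """Equation (8): mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


if __name__ == "__main__":
    ap = average_precision([0.9, 0.8, 0.7], [True, False, True], n_gt=2)
    print(round(ap, 4))  # 0.8333
    # Hypothetical AP values for two of the paper's categories.
    print(mean_average_precision({"Drums": ap, "Refrigerator": 1.0}))
```

In the example, the first detection gives recall 0.5 at precision 1.0. The third detection lifts recall to 1.0 at precision 2/3, so AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833.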

2.3. IoU Loss Functions and Their Modification

2.4. X-Ray-Based Baggage- and Cargo-Inspection Datasets

2.5. X-Ray Baggage and Cargo Inspection Using the YOLO Algorithm

| Dataset | Ref. | Year | YOLO Version | Modified IoU Loss | Metrics |
|---|---|---|---|---|---|
| CLCXray | [69] | 2024 | YOLOv4, YOLOv5s, YOLOv7, YOLOv8n | none | mAP50 = 0.731, 0.755, 0.766, 0.772 |
| SIXray | [69] | 2024 | YOLOv4, YOLOv5s, YOLOv7, YOLOv8n | none | mAP50 = 0.849, 0.896, 0.916, 0.920 |
| PIDray | [69] | 2024 | YOLOv4, YOLOv5s, YOLOv7, YOLOv8n | none | mAP50 = 0.789, 0.803, 0.816, 0.825 |
| SIXray | [68] | 2024 | YOLOv4, YOLOv5s, YOLOv7, YOLOv8n, YOLOv8s, YOLOv9, YOLOv10n | Inner-IoU loss | mAP50 = 0.853, 0.931, 0.911, 0.908, 0.940, 0.914, 0.916 |
| HiXray | [68] | 2024 | YOLOv4, YOLOv5s, YOLOv7, YOLOv8n, YOLOv8s, YOLOv9, YOLOv10n | Inner-IoU loss | mAP50 = 0.756, 0.810, 0.805, 0.804, 0.823, 0.809, 0.811 |
| CLCXray | [68] | 2024 | YOLOv4, YOLOv5s, YOLOv7, YOLOv8n, YOLOv8s, YOLOv9, YOLOv10n | Inner-IoU loss | mAP50 = 0.726, 0.883, 0.865, 0.880, 0.886, 0.890, 0.880 |
| PIDray | [68] | 2024 | YOLOv4, YOLOv5s, YOLOv7, YOLOv8n, YOLOv8s, YOLOv9, YOLOv10n | Inner-IoU loss | mAP50 = 0.764, 0.836, 0.832, 0.825, 0.861, 0.835, 0.825 |
| SIXray | [6] | 2023 | YOLOv3, YOLOv5, YOLOv7 | Soft-WIoU-NMS | mAP50 = 0.876, 0.910, 0.951 |
| Baggage | [49] | 2023 | YOLOv3, YOLOv5, YOLOv8 | none | mAP50 = 0.893, 0.875, 0.933 |
| OPIXray | [49] | 2023 | YOLOv8 | none | mAP = 0.880 |
| SIXray | [63] | 2022 | YOLOv3 | none | mAP50 = 0.738 |
| CargoX | [63] | 2022 | YOLOv3 | none | mAP50 = 0.931 |

3. Methodology

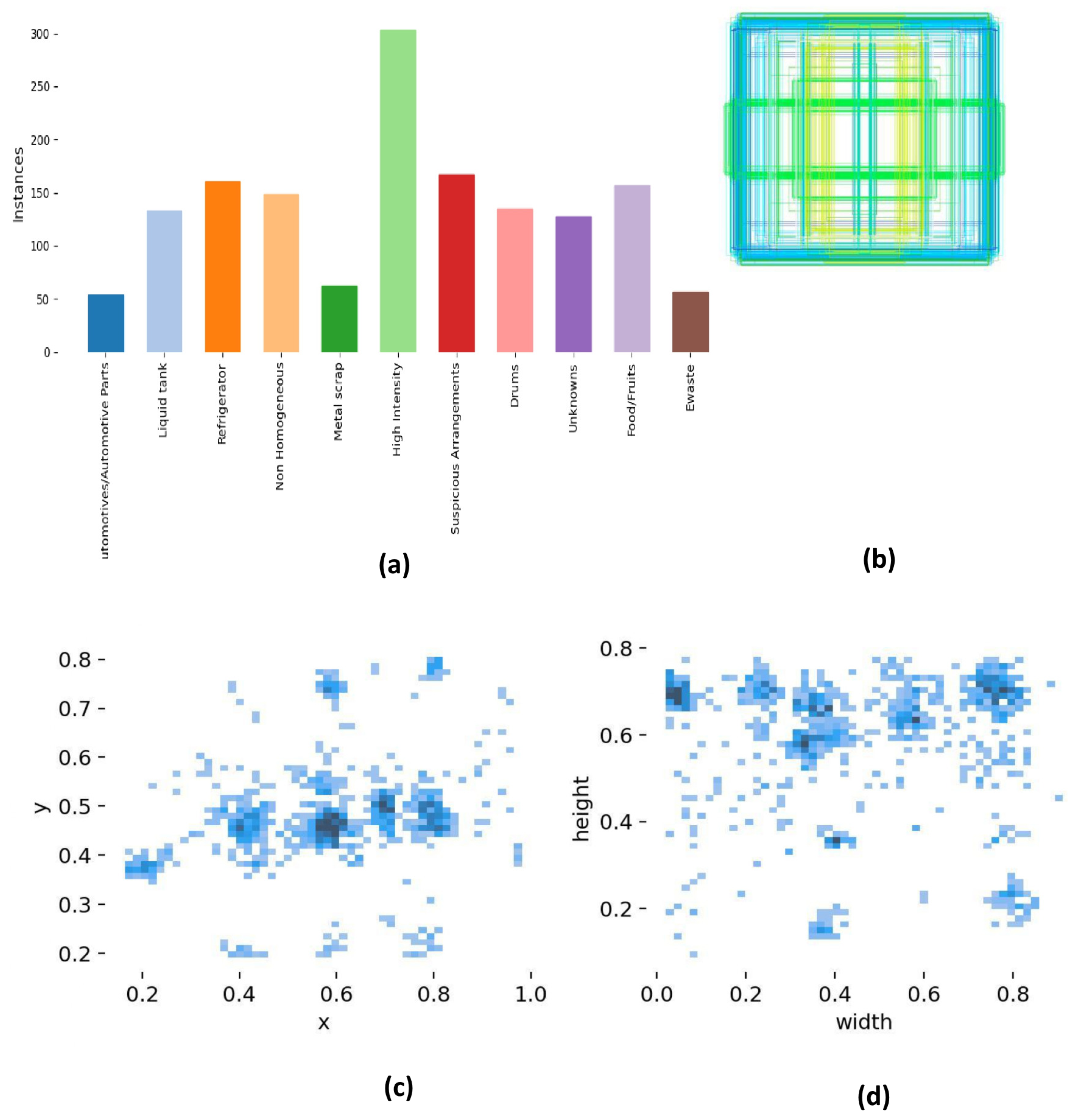

3.1. X-Ray Cargo-Image Datasets and Preprocessing

3.2. Image Annotation

3.3. Setup for Model Training

3.4. Evaluation of Model Performance

3.5. Incorporation of Soft-NMS and Modified IoU Loss Functions
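
Soft-NMS (Bodla et al. [70]) replaces hard suppression with score decay. Hard NMS discards every box whose IoU with the current top-scoring box exceeds a threshold. Soft-NMS instead lowers that box's score, so a heavily overlapped but genuine object, common with densely packed cargo, can still survive.

The plain-Python sketch below illustrates the Gaussian and linear decay rules described in that paper, with these caveats:

- σ = 0.5, N_t = 0.3, and the final score threshold of 0.001 are illustrative defaults, not values reported in this study.
- The function names are hypothetical.
- How Soft-NMS was wired into YOLOv11 here is not shown.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes: Sequence[Box], scores: Sequence[float], method: str = "gaussian",
             sigma: float = 0.5, nt: float = 0.3,
             score_thr: float = 0.001) -> List[Tuple[int, float]]:
    """Return (box index, rescored confidence) pairs in selection order."""
    if method not in ("gaussian", "linear"):
        raise ValueError("method must be 'gaussian' or 'linear'")
    s = list(scores)
    remaining = list(range(len(boxes)))
    kept = []
    while remaining:
        m = max(remaining, key=lambda i: s[i])  # current highest-scoring box
        remaining.remove(m)
        if s[m] < score_thr:
            break  # all other scores are lower and can only decay further
        kept.append((m, s[m]))
        for i in remaining:
            overlap = iou(boxes[m], boxes[i])
            if method == "gaussian":
                s[i] *= math.exp(-(overlap ** 2) / sigma)  # smooth decay
            elif overlap >= nt:
                s[i] *= 1.0 - overlap  # linear decay above the threshold
    return kept


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
    for idx, score in soft_nms(boxes, [0.9, 0.8, 0.7]):
        print(idx, round(score, 3))
```

In the usage example, box 1 overlaps box 0 with IoU ≈ 0.82. Hard NMS at a 0.5 threshold would delete it. Gaussian Soft-NMS keeps it with its score decayed to about 0.21, and the later confidence threshold decides whether it is reported.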

4. Experimental Results

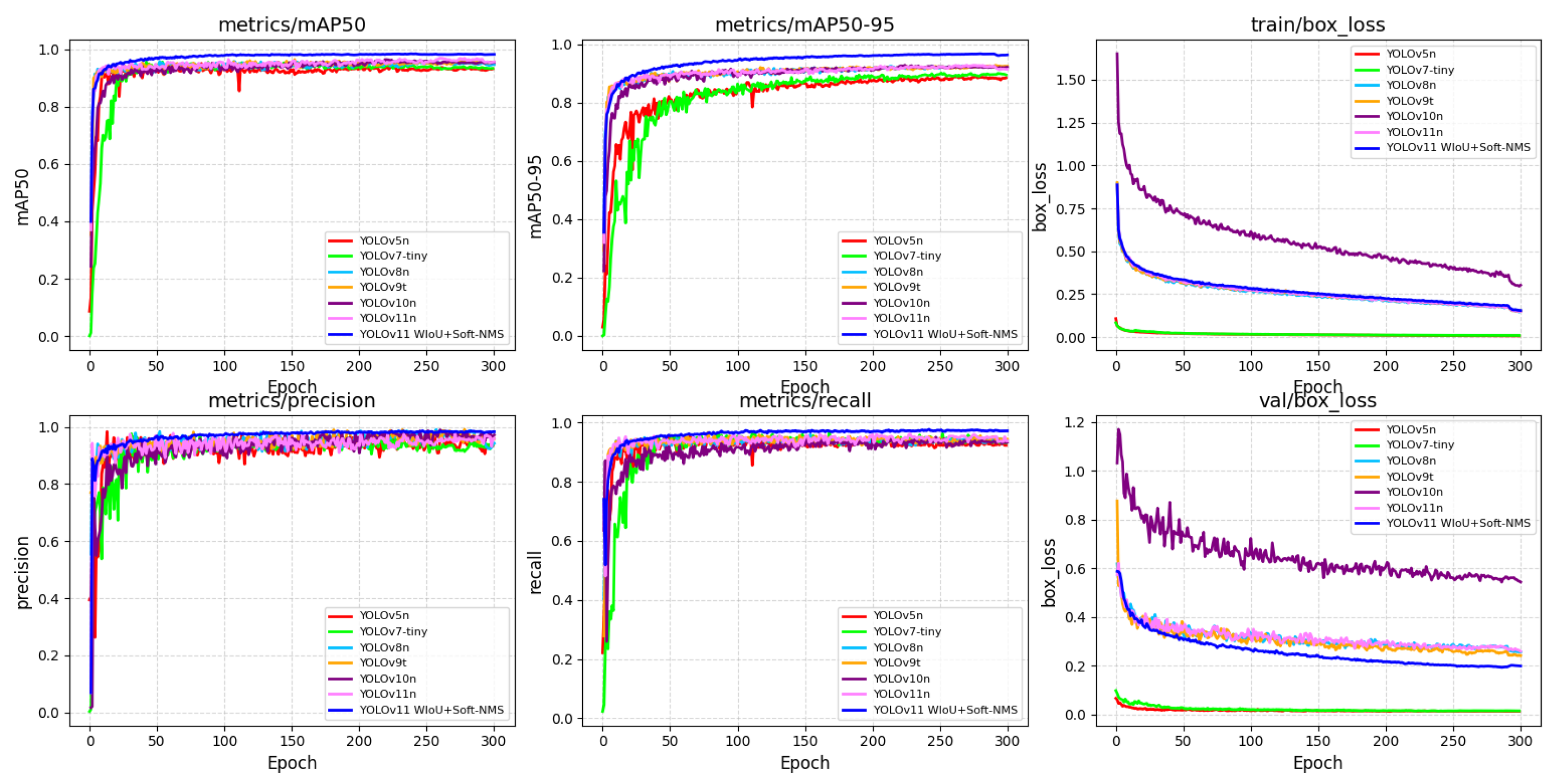

4.1. Comparing Different Object-Detection Models on the Cargo and Baggage Datasets

4.2. Experiments with Different IoU Loss Functions with Incorporation of the Soft-NMS Mechanism

5. Discussion

6. Conclusions and Recommendations

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Michel, S.; Mendes, M.; de Ruiter, J.C.; Koomen, G.C.M.; Schwaninger, A. Increasing X-ray image interpretation competency of cargo security screeners. Int. J. Ind. Ergon. 2014, 44, 551–560.

2. Wu, J.; Xu, X.; Yang, J. Object Detection and X-Ray Security Imaging: A Survey. IEEE Access 2023, 11, 45416–45441.

3. Manakitsa, N.; Maraslidis, G.S.; Moysis, L.; Fragulis, G.F. A Review of Machine Learning and Deep Learning for Object Detection, Semantic Segmentation, and Human Action Recognition in Machine and Robotic Vision. Technologies 2024, 12, 15.

4. Li, W.; Solihin, M.I.; Nugroho, H.A. RCA: YOLOv8-Based Surface Defects Detection on the Inner Wall of Cylindrical High-Precision Parts. Arab. J. Sci. Eng. 2024, 49, 12771–12789.

5. Ragab, M.G.; Abdulkadir, S.J.; Muneer, A.; Alqushaibi, A.; Sumiea, E.H.; Qureshi, R.; Al-Selwi, S.M.; Alhussian, H. A Comprehensive Systematic Review of YOLO for Medical Object Detection (2018 to 2023). IEEE Access 2024, 12, 57815–57836.

6. Jing, B.; Duan, P.; Chen, L.; Du, Y. EM-YOLO: An X-ray Prohibited-Item-Detection Method Based on Edge and Material Information Fusion. Sensors 2023, 23, 16–19.

7. Dalal, S.; Lilhore, U.K.; Sharma, N.; Arora, S.; Simaiya, S.; Ayadi, M.; Almujally, N.A.; Ksibi, A. Improving smart home surveillance through YOLO model with transfer learning and quantization for enhanced accuracy and efficiency. PeerJ Comput. Sci. 2024, 10, e1939.

8. Wang, G.; Ding, H.; Duan, M.; Pu, Y.; Yang, Z.; Li, H. Fighting against terrorism: A real-time CCTV autonomous weapons detection based on improved YOLO v4. Digit. Signal Process. 2023, 132, 103790.

9. Zhao, Y.; Yang, D.; Cao, S.; Cai, B.; Maryamah, M.; Solihin, M.I. Object detection in smart indoor shopping using an enhanced YOLOv8n algorithm. IET Image Process. 2024, 18, 4745–4759.

10. Yang, D.; Solihin, M.I.; Zhao, Y.; Cai, B.; Chen, C.; Riyadi, S. A YOLO Benchmarking Experiment for Maritime Object Detection in Foggy Environments. In Proceedings of the 2024 IEEE 14th Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, Malaysia, 24–25 May 2024; pp. 354–359.

11. Vijayakumar, A.; Vairavasundaram, S. YOLO-based Object Detection Models: A Review and its Applications. Multimed. Tools Appl. 2024, 83, 83535–83574.

12. Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716.

13. Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090.

14. Hussain, M. YOLOv1 to v8: Unveiling Each Variant—A Comprehensive Review of YOLO. IEEE Access 2024, 12, 42816–42833.

15. Flores-Calero, M.; Astudillo, C.A.; Guevara, D.; Maza, J.; Lita, B.S.; Defaz, B.; Ante, J.S.; Zabala-Blanco, D.; Moreno, J.M.A. Traffic Sign Detection and Recognition Using YOLO Object Detection Algorithm: A Systematic Review. Math 2024, 12, 297.

16. Vinh, T.Q.; Anh, N.T.N. Real-Time Face Mask Detector Using YOLOv3 Algorithm and Haar Cascade Classifier. In Proceedings of the 2020 International Conference on Advanced Computing and Applications (ACOMP), Quy Nhon, Vietnam, 25–27 November 2020; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2020; pp. 146–149.

17. Markappa, P.S.S.; O’Leary, C.; Lynch, C. A Review of YOLO Models for Soccer-Based Object Detection. In Proceedings of the 2024 Sixth International Conference on Intelligent Computing in Data Sciences (ICDS), Marrakech, Morocco, 23–24 October 2024; pp. 1–7.

18. Sohan, M.; Ram, T.S.; Reddy, C.V.R. A Review on YOLOv8 and Its Advancements. In Proceedings of the International Conference on Data Intelligence and Cognitive Informatics, Tirunelveli, India, 27–28 June 2023; Springer: Singapore, 2024; pp. 529–545.

19. Sun, Y.; Wang, J.; Wang, H.; Zhang, S.; You, Y.; Yu, Z.; Peng, Y. Fused-IoU Loss: Efficient Learning for Accurate Bounding Box Regression. IEEE Access 2024, 12, 37363–37377.

20. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

21. Ultralytics. Ultralytics YOLO11 Has Arrived! Redefine What’s Possible in AI! Available online: https://www.ultralytics.com/blog/ultralytics-yolo11-has-arrived-redefine-whats-possible-in-ai?utm_source=chatgpt.com (accessed on 18 December 2024).

22. Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 10691–10700. Available online: https://arxiv.org/abs/1905.11946v5 (accessed on 26 August 2025).

23. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 1571–1580.

24. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

25. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

26. Alif, M.A.R.; Hussain, M. YOLOv1 to YOLOv10: A Comprehensive Review of YOLO Variants and Their Application in the Agricultural Domain. 2024. Available online: http://arxiv.org/abs/2406.10139 (accessed on 26 August 2025).

27. Hussain, M.; Khanam, R. In-Depth Review of YOLOv1 to YOLOv10 Variants for Enhanced Photovoltaic Defect Detection. Solar 2024, 4, 351–386.

28. Jegham, N.; Koh, C.Y.; Abdelatti, M.; Hendawi, A. Evaluating the Evolution of YOLO (You Only Look Once) Models: A Comprehensive Benchmark Study of YOLO11 and Its Predecessors. 2024. Available online: http://arxiv.org/abs/2411.00201 (accessed on 26 August 2025).

29. Ultralytics. YOLOv5–Ultralytics YOLO Docs. Available online: https://docs.ultralytics.com/models/yolov5/ (accessed on 1 January 2025).

30. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. September 2022. Available online: https://arxiv.org/abs/2209.02976v1 (accessed on 26 August 2025).

31. Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

32. Ultralytics. YOLOv8–Ultralytics YOLO Docs. Available online: https://docs.ultralytics.com/models/yolov8/ (accessed on 1 January 2025).

33. Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. February 2024. Available online: https://arxiv.org/abs/2402.13616v2 (accessed on 1 January 2025).

34. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. May 2024. Available online: https://arxiv.org/abs/2405.14458v2 (accessed on 1 January 2025).

35. Ultralytics. YOLO11–NEW–Ultralytics YOLO Docs. Available online: https://docs.ultralytics.com/models/yolo11/ (accessed on 1 January 2025).

36. Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024. Available online: http://arxiv.org/abs/2410.17725 (accessed on 26 August 2025).

37. Yang, D.; Solihin, M.I.; Zhao, Y.; Yao, B.; Chen, C.; Cai, B.; Machmudah, A. A review of intelligent ship marine object detection based on RGB camera. IET Image Process. 2023, 18, 281–297.

38. Molchanov, P.; Tyree, S.; Karras, T.; Aila, T.; Kautz, J. Pruning Convolutional Neural Networks for Resource Efficient Inference. In Proceedings of the 5th International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017; Available online: https://arxiv.org/abs/1611.06440v2 (accessed on 26 August 2025).

39. Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.

40. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12993–13000.

41. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

42. Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. January 2023. Available online: https://arxiv.org/abs/2301.10051v3 (accessed on 19 December 2024).

43. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

44. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

45. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. Lect. Notes Comput. Sci. 2014, 8693, 740–755.

46. Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The Open Images Dataset V4: Unified Image Classification, Object Detection, and Visual Relationship Detection at Scale. Int. J. Comput. Vis. 2020, 128, 1956–1981.

47. Mbwslib. GitHub–DvXray: The First Large-Scale Dual-view X-Ray Baggage Dataset. Available online: https://github.com/Mbwslib/DvXray (accessed on 14 December 2024).

48. Ma, B.; Jia, T.; Li, M.; Wu, S.; Wang, H.; Chen, D. Toward Dual-View X-Ray Baggage Inspection: A Large-Scale Benchmark and Adaptive Hierarchical Cross Refinement for Prohibited Item Discovery. IEEE Trans. Inf. Forensics Secur. 2024, 19, 3866–3878.

49. Han, L.; Ma, C.; Liu, Y.; Jia, J.; Sun, J. SC-YOLOv8: A Security Check Model for the Inspection of Prohibited Items in X-ray Images. Electronics 2023, 12, 4208.

50. GitHub–MACHUNHAI/LSIray. Available online: https://github.com/MACHUNHAI/LSIray (accessed on 23 December 2024).

51. GitHub–GreysonPhoenix/CLCXray: Detecting Overlapping Objects in X-Ray Security Imagery by a Label-aware Mechanism. Available online: https://github.com/GreysonPhoenix/CLCXray (accessed on 14 December 2024).

52. GitHub–HiXray-author/HiXray. Available online: https://github.com/HiXray-author/HiXray (accessed on 14 December 2024).

53. GitHub–lutao2021/PIDray: PIDray: A Large-Scale X-Ray Benchmark for Real-World Prohibited Item Detection. Available online: https://github.com/lutao2021/PIDray (accessed on 14 December 2024).

54. GitHub–LPAIS/Xray-PI: An X-Ray Image Dataset for Prohibited Item Segmentation. Available online: https://github.com/LPAIS/Xray-PI (accessed on 14 December 2024).

55. GitHub–OPIXray-author/OPIXray. Available online: https://github.com/OPIXray-author/OPIXray (accessed on 14 December 2024).

56. GitHub–MeioJane/SIXray: The SIXray Dataset. Available online: https://github.com/MeioJane/SIXray (accessed on 14 December 2024).

57. Miao, C.; Xie, L.; Wan, F.; Su, C.; Liu, H.; Jiao, J.; Ye, Q. Sixray: A large-scale security inspection x-ray benchmark for prohibited item discovery in overlapping images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2114–2123.

58. COMPASS-XP. Available online: https://zenodo.org/records/2654887 (accessed on 14 December 2024).

59. Kolte, S.; Bhowmik, N.; Dhiraj. Threat Object-based anomaly detection in X-ray images using GAN-based ensembles. Neural Comput. Appl. 2023, 35, 23025–23040.

60. GitHub–Computervision-Xray-Testing/GDXray: Dataset GDXray Used Along the Book Computer Vision for X-Ray Testing. Available online: https://github.com/computervision-xray-testing/GDXray (accessed on 14 December 2024).

61. Mery, D.; Riffo, V.; Zscherpel, U.; Mondragón, G.; Lillo, I.; Zuccar, I.; Lobel, H.; Carrasco, M. GDXray: The Database of X-ray Images for Nondestructive Testing. J. Nondestruct. Eval. 2015, 34, 1–12.

62. OSF|MFA-Net: Object Detection for Complex X-Ray Cargo and Baggage Security Imagery. Available online: https://osf.io/7hd3v/ (accessed on 16 December 2024).

63. Viriyasaranon, T.; Chae, S.H.; Choi, J.H. MFA-net: Object Detection for Complex X-Ray Cargo and Baggage Security Imagery. PLoS ONE 2022, 17, e0272961.

64. GitHub–IS2AI/cargoxray: It Is a Dataset of X-Ray Images of Cargo Transport. The Dataset Includes Images of Railcars and Trucks with Trailers. Available online: https://github.com/IS2AI/cargoxray (accessed on 15 December 2024).

65. Cho, H.; Park, H.; Kim, I.J.; Cho, J. Data Augmentation of backscatter x-ray images for deep learning-based automatic cargo inspection. Sensors 2021, 21, 7294.

66. Jaccard, N.; Rogers, T.W.; Morton, E.J.; Griffin, L.D. Detection of concealed cars in complex cargo X-ray imagery using Deep Learning. J. Xray. Sci. Technol. 2017, 25, 323–339.

67. Kolokytha, S.; Flisch, A.; Lüthi, T.; Plamondon, M.; Visser, W.; Schwaninger, A.; Hardmeier, D.; Costin, M.; Vienne, C.; Sukowski, F.; et al. Creating a reference database of cargo inspection X-ray images using high energy radiographs of cargo mock-ups. Multimed. Tools Appl. 2018, 77, 9379–9391.

68. Wang, A.; Yuan, P.; Wu, H.; Iwahori, Y.; Liu, Y. Improved YOLOv8 for Dangerous Goods Detection in X-ray Security Images. Electronics 2024, 13, 3238.

69. Liu, Y.; Zhang, E.; Yu, X.; Wang, A. Efficient X-ray Security Images for Dangerous Goods Detection Based on Improved YOLOv7. Electronics 2024, 13, 1530.

70. Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS–Improving Object Detection with One Line of Code. Proc. IEEE Int. Conf. Comput. Vis. 2017, 2017, 5562–5570.

71. Yang, D.; Solihin, M.I.; Zhao, Y.; Cai, B.; Chen, C.; Wijaya, A.A.; Ang, C.K.; Lim, W.H. Model compression for real-time object detection using rigorous gradation pruning. iScience 2025, 28, 111618.

| Version | Released | Architectural Highlights |
|---|---|---|
| YOLOv5 [29] | June 2020 | |
| YOLOv6 [30] | June 2022 | |
| YOLOv7 [31] | July 2022 | |
| YOLOv8 [32] | January 2023 | |
| YOLOv9 [33] | February 2024 | |
| YOLOv10 [34] | May 2024 | |
| YOLOv11 [35,36] | September 2024 | |

| Dataset | Subset | #Cat. | #Images | #Boxes | Boxes/Image |
|---|---|---|---|---|---|
| Pascal VOC | VOC07 | 20 | 5011 | 12,608 | 2.5 |
| | VOC08 | 20 | 4332 | 10,364 | 2.4 |
| | VOC09 | 20 | 7054 | 17,218 | 2.3 |
| | VOC10 | 20 | 10,103 | 23,374 | 2.4 |
| | VOC11 | 20 | 11,540 | 27,450 | 2.4 |
| | VOC12 | 20 | 11,540 | 27,450 | 2.4 |
| ILSVRC | ILSVRC13 | 200 | 416,030 | 401,356 | 1.0 |
| | ILSVRC14 | 200 | 476,668 | 534,309 | 1.1 |
| | ILSVRC15 | 200 | 476,668 | 534,309 | 1.1 |
| | ILSVRC16 | 200 | 476,668 | 534,309 | 1.1 |
| | ILSVRC17 | 200 | 476,668 | 534,309 | 1.1 |
| MS-COCO | MS-COCO15 | 80 | 123,287 | 896,782 | 7.3 |
| | MS-COCO16 | 80 | 123,287 | 896,782 | 7.3 |
| | MS-COCO17 | 80 | 123,287 | 896,782 | 7.3 |
| | MS-COCO18 | 80 | 123,287 | 896,782 | 7.3 |
| Open Images | OICOD18 | 500 | 1,743,042 | 12,195,144 | 7.0 |

| Dataset | Year | #Cat. | #Positive | #Negative | Annotation | #Images |
|---|---|---|---|---|---|---|
| DvXray [47,48] | 2024 | 15 | 5496 | - | Bbox, mask | 32,000 |
| LSIray [49,50] | 2023 | 21 | - | - | Bbox | 37,106 |
| CLCXray [51] | 2022 | 12 | 9565 | 0 | Bbox | 9565 |
| HiXray [52] | 2021 | 8 | 883 | 102,045 | Bbox | 45,364 |
| PIDray [53] | 2021 | 12 | 124,486 | 0 | Bbox, mask | 124,486 |
| Xray-PI [54] | 2020 | 12 | >2409 | 10,000 | Bbox, mask | 12,409 |
| OPIXray [55] | 2020 | 5 | 8885 | 0 | Bbox | 8885 |
| SIXray [56,57] | 2019 | 6 | 8929 | 1,050,302 | Bbox | 1,059,231 |
| Compass-XP [58,59] | 2019 | 366 | 1928 | 0 | Bbox | 1928 |
| GDXray [60,61] | 2015 | 5 | 19,407 | 0 | Bbox | 19,407 |

| Dataset | Year | #Cat. | Public? | Synthetic? | Annotation | #Images |
|---|---|---|---|---|---|---|
| CargoX [62,63] | 2022 | 4 knife types | Y | Y | Bbox, mask | 64,000 |
| IS2AI [64] | 2022 | 7 types of goods | Y | N | Bbox | - |
| BSX-car [65] | 2021 | car types | N | N | Bbox, mask | 1776 |
| SoC cargo [66] | 2017 | 5 car categories | N | N | Bbox | 79 |
| ACXIS [67] | 2016 | 1 (cigarettes) | N | N | None | 38,331 |
| This paper | 2024 | 11 item categories | N | Y | Bbox | 2598 |

| Classes | Total Images |
|---|---|
| Automotives/Automotive Parts | 74 |
| Drums | 454 |
| E-waste | 72 |
| Food/Fruits | 337 |
| High Intensity | 426 |
| Liquid Tank | 166 |
| Metal Scrap | 70 |
| Nonhomogeneous | 210 |
| Refrigerator | 337 |
| Suspicious Arrangement | 238 |
| Unknowns | 214 |
| Total | 2598 |

| Loss Function | Key Feature |
|---|---|
| IoU | Basic overlap metric |
| GIoU | Penalizes nonoverlapping boxes |
| DIoU | Adds center-distance penalty |
| CIoU | Adds aspect-ratio similarity |
| WIoU | Distance-aware attention term |
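
The loss variants in the table differ mainly in the penalty they attach to $1 - IoU$. The plain-Python sketch below evaluates each loss for a single pair of boxes so the penalties can be compared numerically. It follows the published definitions of GIoU [41], DIoU and CIoU [39,40], and WIoU v1 [42].

It is forward-only, with no automatic differentiation. The WIoU normalizer $W_g^2 + H_g^2$, which is detached from the gradient during training, is therefore just a number here. The function names are hypothetical, and this is not the training code used in this study.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def _geometry(p: Box, g: Box) -> Tuple[float, float, float, float, float]:
    """IoU, union area, enclosing-box width/height, squared centre distance."""
    iw = max(0.0, min(p[2], g[2]) - max(p[0], g[0]))
    ih = max(0.0, min(p[3], g[3]) - max(p[1], g[1]))
    inter = iw * ih
    union = (p[2] - p[0]) * (p[3] - p[1]) + (g[2] - g[0]) * (g[3] - g[1]) - inter
    cw = max(p[2], g[2]) - min(p[0], g[0])  # smallest enclosing box width
    ch = max(p[3], g[3]) - min(p[1], g[1])  # smallest enclosing box height
    rho2 = ((p[0] + p[2] - g[0] - g[2]) ** 2 + (p[1] + p[3] - g[1] - g[3]) ** 2) / 4.0
    return inter / union, union, cw, ch, rho2


def iou_loss(p: Box, g: Box) -> float:
    return 1.0 - _geometry(p, g)[0]


def giou_loss(p: Box, g: Box) -> float:
    iou, union, cw, ch, _ = _geometry(p, g)
    c_area = cw * ch
    return 1.0 - iou + (c_area - union) / c_area  # empty space in the enclosing box


def diou_loss(p: Box, g: Box) -> float:
    iou, _, cw, ch, rho2 = _geometry(p, g)
    return 1.0 - iou + rho2 / (cw ** 2 + ch ** 2)  # normalized centre distance


def ciou_loss(p: Box, g: Box) -> float:
    iou, _, cw, ch, rho2 = _geometry(p, g)
    w, h = p[2] - p[0], p[3] - p[1]
    wg, hg = g[2] - g[0], g[3] - g[1]
    v = (4.0 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2  # aspect ratio
    alpha = v / ((1.0 - iou) + v) if v > 0 else 0.0  # trade-off weight
    return 1.0 - iou + rho2 / (cw ** 2 + ch ** 2) + alpha * v


def wiou_v1_loss(p: Box, g: Box) -> float:
    iou, _, cw, ch, rho2 = _geometry(p, g)
    r_wiou = math.exp(rho2 / (cw ** 2 + ch ** 2))  # distance-based attention, >= 1
    return r_wiou * (1.0 - iou)


if __name__ == "__main__":
    p, g = (0.0, 0.0, 1.0, 1.0), (2.0, 0.0, 3.0, 1.0)  # unit squares, no overlap
    for fn in (iou_loss, giou_loss, diou_loss, ciou_loss, wiou_v1_loss):
        print(fn.__name__, round(fn(p, g), 4))
```

Consider two unit squares whose centers are 2 apart, so they do not overlap:

- The plain IoU loss is 1.
- GIoU gives 4/3.
- DIoU and CIoU give 1.4; the CIoU aspect-ratio term vanishes for equal shapes.
- WIoU v1 gives $e^{0.4} \approx 1.49$.

Moving the second square further away raises every penalized variant while the plain IoU loss stays at 1. This illustrates why the variants still provide a training signal for nonoverlapping predictions.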

| Model | Precision (%) | Recall (%) | F1-Score (%) | mAP@50 (%) | mAP@50:95 (%) | FLOPs (G) | Inference (ms) |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 71.5 | 81.2 | 76.0 | 71.5 | 68.4 | 36 | 72 |
| DETR | 96.4 | 92.0 | 94.2 | 96.4 | 92.6 | 28 | 320 |
| YOLOv5n | 93.9 | 93.2 | 93.5 | 93.3 | 89.0 | 4.2 | 6.5 |
| YOLOv7t | 93.7 | 93.2 | 93.4 | 93.3 | 89.5 | 7.5 | 84 |
| YOLOv8n | 97.0 | 94.2 | 95.6 | 95.8 | 92.7 | 8.1 | 0.8 |
| YOLOv9t | 97.9 | 94.2 | 96.0 | 96.0 | 92.7 | 7.6 | 2.0 |
| YOLOv10n | 98.6 | 93.2 | 95.8 | 96.2 | 92.8 | 8.3 | 1.0 |
| YOLOv11n | 95.9 | 96.0 | 95.9 | 96.7 | 92.8 | 6.3 | 0.8 |

| Model | Precision (%) | Recall (%) | F1-Score (%) | mAP@50 (%) | mAP@50:95 (%) | FLOPs (G) | Inference (ms) |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 58.4 | 65.9 | 61.9 | 58.8 | 58.3 | 36 | 71 |
| DETR | 96.8 | 85.0 | 90.5 | 95.7 | 82.2 | 28 | 500 |
| YOLOv5n | 95.2 | 89.5 | 92.3 | 94.6 | 81.2 | 4.2 | 0.7 |
| YOLOv7t | 94.9 | 86.0 | 90.2 | 93.1 | 73.2 | 7.5 | 55 |
| YOLOv8n | 95.8 | 88.1 | 91.8 | 94.1 | 81.4 | 8.1 | 0.9 |
| YOLOv9t | 95.8 | 90.7 | 93.2 | 95.6 | 83.3 | 7.6 | 0.7 |
| YOLOv10n | 94.8 | 88.9 | 91.8 | 94.4 | 81.7 | 8.3 | 0.9 |
| YOLOv11n | 96.5 | 88.4 | 92.3 | 95.0 | 82.0 | 6.3 | 0.9 |

| IoU Loss Function/Category | Automotives | Liquid Tank | Refrigerator | Nonhomogeneous | Metal Scrap | High Intensity | Suspicious Arr. | Drums | Unknowns | Food & Fruits | E-Waste | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| IoU | 99.5 | 99.5 | 99.5 | 69.3 | 99.5 | 99.5 | 99.5 | 99.5 | 99.2 | 99.5 | 99.5 | 96.7 |
| GIoU | 99.5 | 99.5 | 99.5 | 69.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.2 | 99.5 | 99.5 | 96.8 |
| DIoU | 99.5 | 99.5 | 99.5 | 61.7 | 99.5 | 99.5 | 99.5 | 99.5 | 99.4 | 99.5 | 99.5 | 96.1 |
| CIoU | 99.5 | 99.5 | 99.5 | 69.8 | 99.5 | 99.5 | 99.4 | 99.5 | 99.2 | 99.5 | 99.5 | 96.8 |
| WIoUv1 | 97.9 | 99.5 | 99.0 | 90.4 | 99.5 | 99.5 | 99.5 | 99.5 | 99.5 | 99.1 | 99.5 | 98.4 |

| IoU Loss Function/Category | Automotives | Liquid Tank | Refrigerator | Nonhomogeneous | Metal Scrap | High Intensity | Suspicious Arr. | Drums | Unknowns | Food & Fruits | E-Waste | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| IoU | 99.5 | 99.3 | 91.7 | 38.5 | 99.5 | 99.5 | 98.4 | 99.5 | 96.9 | 99.2 | 98.8 | 92.8 |
| GIoU | 98.5 | 99.3 | 92.0 | 39.2 | 99.5 | 99.5 | 99.3 | 99.5 | 98.3 | 98.9 | 97.0 | 92.8 |
| DIoU | 98.6 | 99.5 | 90.1 | 30.6 | 99.5 | 99.5 | 98.1 | 99.5 | 99.2 | 99.2 | 99.5 | 92.1 |
| CIoU | 98.5 | 99.3 | 92.0 | 39.2 | 99.5 | 99.5 | 99.3 | 99.5 | 98.3 | 98.9 | 96.9 | 92.8 |
| WIoUv1 | 97.6 | 99.5 | 93.8 | 81.2 | 99.5 | 99.5 | 98.4 | 99.5 | 99.4 | 98.9 | 99.4 | 97.0 |

| Rotation | Precision (%) | Recall (%) | F1-Score (%) | mAP@50 (%) | mAP@50:95 (%) |
|---|---|---|---|---|---|
| 0° | 95.9 | 96.0 | 95.9 | 96.7 | 92.8 |
| +5° | 95.4 | 95.6 | 95.5 | 96.3 | 92.3 |
| −5° | 95.2 | 95.5 | 95.3 | 96.1 | 92.1 |
| +10° | 94.6 | 95.0 | 94.8 | 95.6 | 91.5 |
| −10° | 94.4 | 94.8 | 94.6 | 95.4 | 91.3 |

| Model | Precision (%) | Recall (%) | F1-Score (%) | mAP@50 | mAP@50:95 |
|---|---|---|---|---|---|
| YOLOv11 | 95.90 ± 0.013 | 96.00 ± 0.010 | 95.95 ± 0.008 | 96.70 ± 0.004 | 92.80 ± 0.005 |
| Enhanced YOLOv11 (with WIoU + Soft-NMS) | 98.24 ± 0.014 | 97.65 ± 0.008 | 97.94 ± 0.009 | 98.44 ± 0.004 | 96.96 ± 0.006 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tee, J.H.; Solihin, M.I.; Chong, K.S.; Tiang, S.S.; Tham, W.Y.; Ang, C.K.; Lee, Y.J.; Goh, C.L.; Lim, W.H. Advancing Intelligent Logistics: YOLO-Based Object Detection with Modified Loss Functions for X-Ray Cargo Screening. Future Transp. 2025, 5, 120. https://doi.org/10.3390/futuretransp5030120
