Abstract

As the realization of fully autonomous driving becomes increasingly plausible, its rapid development raises serious privacy concerns. At present, while personal information of passengers and pedestrians is routinely collected, its purpose and usage history are rarely disclosed, and pedestrians in particular are effectively deprived of any meaningful control over their privacy. Furthermore, no institutional framework exists to prevent the misuse or abuse of such data by authorized insiders. This study proposes the application of a novel privacy protection framework—Verifiable Record of AI Output (VRAIO)—to autonomous driving systems. VRAIO encloses the entire AI system behind an output firewall, and an independent entity, referred to as the Recorder, conducts purpose-compliance screening for all outputs. The reasoning behind each decision is recorded in an immutable and publicly auditable format. In addition, institutional deterrence is enhanced through penalties for violations and reward systems for whistleblowers. Focusing exclusively on outputs rather than input anonymization or interpretability of internal AI processes, VRAIO aims to reconcile privacy protection with technical efficiency. This study further introduces two complementary mechanisms to meet the real-time operational demands of autonomous driving: (1) pre-approval for designated outputs and (2) unrestricted approval of internal system communication. This framework presents a new institutional model that may serve as a foundation for ensuring democratic acceptance of fully autonomous driving systems.

1. Introduction

In recent years, autonomous driving technology has rapidly advanced, and the realization of a fully autonomous driving society is becoming increasingly plausible [1]. This technology is expected to bring various societal benefits, including improved mobility convenience, enhanced transportation efficiency, a reduction in traffic accidents, and mitigation of traffic congestion. Furthermore, by providing a new means of transportation to diverse groups such as the elderly and people with physical constraints, it also promotes social inclusion [2], generating a paradigm shift in the transportation sector and forming part of the infrastructure for a sustainable future society.

However, the realization of autonomous driving systems requires the collection and analysis of vast amounts of data concerning passengers and pedestrians [3], and the issue of privacy protection in this process [4,5] has become a serious challenge that cannot be overlooked in democratic societies [6]. In particular, much of this data includes personally identifiable information (PII), which raises risks of unauthorized access and improper use [7,8]. Therefore, in addition to strengthening security systems, it is essential to implement strict control and monitoring over the handling of PII.

At present, passengers are not sufficiently informed of the purposes or records of data usage and are provided with few, if any, meaningful options. Moreover, pedestrians recorded by in-vehicle cameras are effectively not granted any control over their privacy.

In democratic societies, privacy protection is an essential prerequisite for securing the social acceptance of autonomous driving technologies. Here, social acceptance refers to a state in which citizens have trust and consent toward the introduction of a given technology, and in domains where privacy concerns are strong, this can be a decisive factor for determining acceptance [9,10,11]. Accordingly, it is not sufficient to merely prevent unauthorized access; it is critically important to establish institutional frameworks that can prevent misuse or arbitrary use of data by authorized internal stakeholders.

To address such challenges, a technical framework called “Privacy as a Service” has been proposed, which aims to reconcile privacy protection with information flow [12]. This framework is envisioned for future societies in which real-time communication between autonomous vehicles and infrastructure is a prerequisite and allows for dynamic acquisition and updating of user consent based on changing contexts and stakeholders. However, while such a framework emphasizes explicit user control, it lacks institutional mechanisms for preventing arbitrary use by authorized parties.

In general information systems, insider threats, such as data misuse or arbitrary operation by technically authorized administrators, are still recognized as serious problems [13]. In response to such “abuse by authorized parties,” there is growing demand for a comprehensive system that integrates social institutions with technical foundations, rather than relying on individual technical countermeasures alone.

This study proposes the Verifiable Record of AI Output (VRAIO) [14], a framework designed to fill this institutional gap, and applies it to autonomous driving systems. Under VRAIO, the AI system is enclosed from the outside by an output firewall, and a third-party organization called the Recorder conducts formal purpose-compliance screening on all outputs. The screening process is recorded in a tamper-proof and verifiable format, allowing citizens and audit institutions to conduct external oversight. Institutional deterrence is further enhanced through random audits of outputs, reward systems for whistleblowers, and the imposition of heavy fines for violations.

This approach, unlike input anonymization [15,16] or internal AI interpretability [17,18], focuses exclusively on output and enables a unique form of institutional and technical control, offering a realistic pathway for social implementation.

In particular, this study reconfigures VRAIO to address the real-time nature specific to autonomous driving by introducing two complementary sub-concepts: (1) pre-approval for designated outputs and (2) unrestricted approval of internal system communication. These enhancements allow the system to maintain internal real-time decision-making capabilities while applying strict governance to external outputs only.

This paper presents a novel institutional and technical governance model centered on AI output to address privacy challenges in fully autonomous AI systems, especially the risk of misuse or abuse by authorized insiders.

2. Identifying the Challenges of Privacy Protection and Introducing the Verifiable Record of AI Output

This chapter details the background leading to the institutional design of privacy protection in fully autonomous driving systems, focusing first on the actual conditions of data usage and the limitations of current privacy protection technologies. In particular, this study focuses on the issue of “misuse by authorized insiders,” referring to the arbitrary use of data by system operators themselves—an issue that constitutes a structural and institutional risk not adequately addressed by existing access control or encryption technologies.

To address such problems, both technical measures and institutional and societal frameworks are required for oversight and governance. That is, it is essential to establish a mechanism that ensures comprehensive management and social transparency at the national level regarding AI systems. However, to date, no such large-scale, institutionally organized privacy protection model exists, either domestically or internationally.

This study proposes the “Verifiable Record of AI Output (VRAIO)” as a framework to fill this institutional gap. VRAIO is a novel approach that does not interfere with AI inputs or internal processing but instead focuses solely on outputs as the target of regulation, thereby enabling institutional guarantees of privacy without compromising the operational efficiency of AI.

In the following sections, Section 2.1 clarifies the current state and challenges of data operations and privacy protection in autonomous driving systems, while Section 2.2 elaborates on the specific structure of VRAIO and the philosophy behind its institutional design.

2.1. Data Operation and Challenges of Privacy Protection Technologies in Autonomous Driving Systems

Autonomous driving systems are composed of a centralized control AI system and on-board AI systems [19,20]. The former aggregates and analyzes wide-area road information and provides the latter with recommended routes and environmental data. The latter uses various sensors and cameras to understand the surrounding environment and perform driving operations in real time [21,22]. Bidirectional, multi-layered information exchange occurs between these systems [23,24]. In addition, Vehicle-to-Everything (V2X) communication allows the vehicle to share information with other vehicles, infrastructure, and pedestrians [25,26].

Such system operations require the collection and processing of vast amounts of data, including the personal information of passengers and pedestrians, environmental data, and vehicle operation data [27,28]. However, this entails a number of risks, such as data breaches through cyberattacks, concerns about a surveillance society, and a lack of transparency in data usage. In democratic societies in particular, these concerns may undermine public trust and become obstacles to societal acceptance.

Improper use of data involves two types of threats: (1) unauthorized access from external actors such as hackers and (2) intentional or arbitrary misuse by authorized insiders such as administrators, operators, or owners. For the former, access control, authentication technologies, encryption, and intrusion detection systems are considered relatively effective security measures. In addition, an approach known as Privacy-Preserving Data Mining (PPDM) has been proposed, which removes or transforms personally identifiable information directly from the data [29]. PPDM techniques include perturbation, anonymization, encryption, secure multi-party computation, and stream data processing, but these methods also involve trade-offs between privacy protection and data accuracy, along with practical constraints such as high computational overhead.

The latter threat—misuse by authorized personnel—is a deeper and more structural issue. It is difficult to eliminate the possibility that internal stakeholders, such as system administrators or operators, may arbitrarily manipulate or exploit data using their legitimate access privileges. Access control and log monitoring alone prove insufficient. In fact, problems such as log tampering, ambiguity in operational guidelines, and the absence of audit mechanisms have been pointed out as institutional vulnerabilities. In light of these circumstances, there has been a growing recognition of the need for frameworks that enable the external verification of data use processes in a transparent and accountable manner [30], along with social and institutional oversight [31,32].

This study proposes the introduction of the Verifiable Record of AI Output (VRAIO) [14] as a countermeasure against such insider threats. This approach regulates the output only, without interfering with the AI system’s inputs or internal processing. In doing so, it seeks to maintain the system’s operational freedom and efficiency while ensuring institutional safeguards for privacy protection.

The AI system is enclosed from the outside by an outbound firewall, and all output is subject to formal review by an independent third-party organization called the Recorder, which evaluates the “purpose and summary” of the output. This review is conducted in accordance with rules established by a Government Regulatory Agency for AI, based on public deliberation. The review process is recorded in a tamper-proof format using blockchain or similar technologies. The recorded data is auditable by citizens and auditing institutions, thereby ensuring institutional transparency and accountability. This framework constitutes an unprecedentedly large-scale socio-technical governance system for privacy protection, which institutionally regulates AI outputs while preserving operational efficiency.

2.2. Proposed Approach: Verifiable Record of AI Output (VRAIO)

This study proposes the introduction of a new framework called the “Verifiable Record of AI Output” (hereinafter, VRAIO) as a means of achieving both privacy protection and safety in autonomous driving systems (see Figure 1) [14]. VRAIO was originally conceived as a privacy-preserving mechanism for AI monitoring in public spaces, where cameras connected to AI systems conduct surveillance, with the aim of institutionally overcoming the risk of a surveillance society in exchange for enhanced safety.

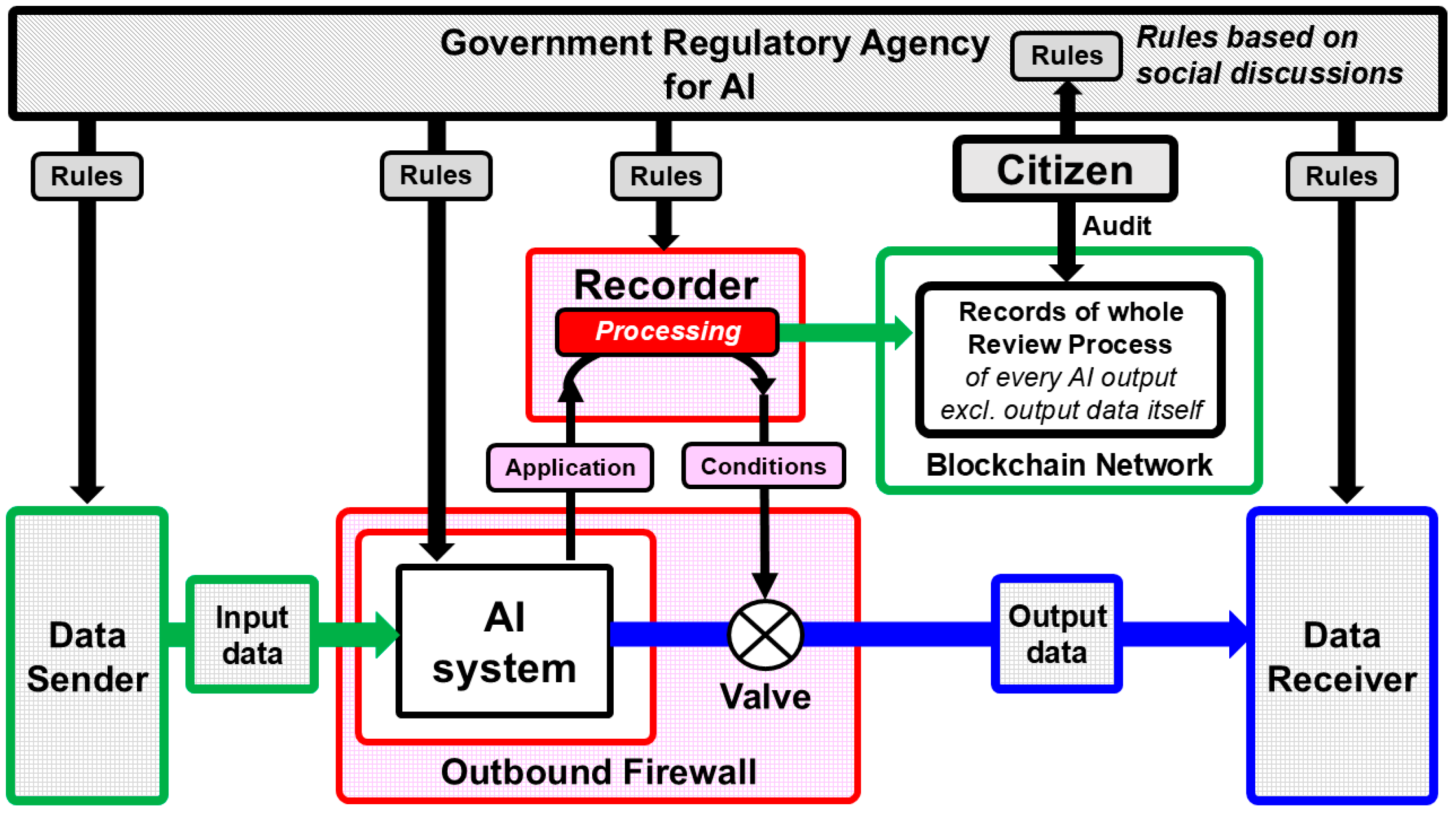

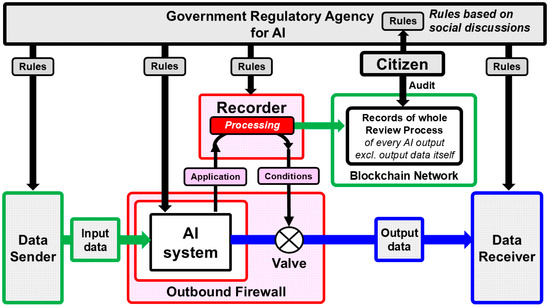

Figure 1. Conceptual diagram of the Verifiable Record of AI Output (VRAIO).

The core principle of this framework is to impose no restrictions on the input or internal processing of AI systems, and to regulate only the output through institutional and technical mechanisms. This approach allows for the preservation of processing freedom and operational efficiency in AI systems, while institutionally guaranteeing the public legitimacy of their outputs.

The structure of VRAIO consists of the following seven elements:

- (1) Rulemaking based on democratic consensus. The “Rules” that define the permissible scope of AI system outputs (such as the purpose, content category, degree of inclusion or removal of personal information, volume, frequency, and recipients) are formulated through legislative and administrative procedures and public discussions based on social consensus grounded in democratic deliberation. These rules provide the normative legitimacy and societal acceptability necessary for regulatory governance.

- (2) Institutional oversight by a governmental agency. A Government Regulatory Agency for AI is responsible for disseminating and supervising the established rules and mandating their implementation across all relevant actors. This agency also evaluates the public interest and privacy-protection efficacy of the defined output boundaries (purpose, content category, degree of anonymization, volume, frequency, recipient, etc.) and provides feedback for democratic rule revisions through periodic public reporting.

- (3) External output screening via output firewall and Recorder. The AI system is enclosed within an “Outbound Firewall” maintained by an independent third-party institution called the Recorder. For each external output, the system must submit a request including metadata such as purpose, content category, degree of anonymization, volume, frequency, and recipient. The Recorder then performs a formal conformity check against the predefined rules. If the output falls within the permitted range, the Recorder authorizes the release by unlocking the firewall. Notably, the Recorder is technically restricted from accessing the content of the output itself; it only interacts with the metadata.

- (4) Tamper-resistant logging and disclosure. The output’s purpose, summary, and approval rationale are recorded using tamper-resistant technologies such as blockchain and are made available for public auditing to ensure transparency.

- (5) Third-party auditing by citizens and external institutions. Records maintained by the Recorder, after undergoing anonymization and other protective processing, are open to audits by citizens, NGOs, and external oversight bodies. This mechanism supports the transparency and accountability of the overall system.

- (6) Institutional deterrence mechanisms. The VRAIO framework presumes that AI systems report their output history truthfully. To enforce this assumption institutionally, penalties are imposed for false reporting, while high-value rewards are provided to whistleblowers or bounty hunters who expose violations. This structure eliminates incentives for deception and enhances systemic reliability.

- (7) Randomized spot checks to prevent false reporting. As an additional safeguard, randomized inspections of output data are introduced. The Recorder randomly selects certain outputs and requires AI system operators to disclose decryption methods for direct inspection by oversight bodies. Since this process constitutes an exception to the Recorder’s non-access policy and may involve privacy-sensitive data, careful institutional design is required.
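The random selection in element (7) can be illustrated with a minimal sketch. It assumes a hypothetical policy parameter `sampling_rate` (the fraction of outputs to inspect) and hypothetical record identifiers; neither is specified in the framework itself. The function name `select_for_spot_check` is likewise illustrative.

```python
import random
from typing import Optional, Sequence


def select_for_spot_check(
    record_ids: Sequence[str],
    sampling_rate: float,
    rng: Optional[random.Random] = None,
) -> list[str]:
    """Pick a random subset of approved outputs for direct inspection.

    sampling_rate is a hypothetical policy parameter; the framework
    does not prescribe a value.
    """
    if not 0.0 <= sampling_rate <= 1.0:
        raise ValueError("sampling_rate must be between 0 and 1")
    # By default, draw from the operating system's entropy source so that
    # an operator cannot predict which outputs will be inspected.
    rng = rng or random.SystemRandom()
    return [rid for rid in record_ids if rng.random() < sampling_rate]
```

Passing a seeded `random.Random` instance is intended only for reproducible testing; an actual deployment would presumably rely on an unpredictable source, as in the default.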

Contemporary AI models, particularly those based on deep learning, often exhibit a black-box nature, limiting the transparency and interpretability of their decision-making processes. In response, the VRAIO framework adopts a design philosophy that does not attempt to trace the internal reasoning of AI systems but instead focuses on institutionally regulating the declared purpose and content of outputs.

Specifically, when an AI system intends to issue an output, a “permission request” is automatically generated and sent to the Recorder. This request includes the declared “purpose” and “summary” of the output but does not contain the output data itself. Importantly, such output requests are restricted to a predefined set of purposes, which must be configured in advance by the AI system administrator (e.g., the operator of the autonomous driving system). These predefined purposes must, in turn, be aligned with those that have been publicly accepted through prior societal deliberation and institutional approval processes.

The Recorder formally evaluates whether the submitted purpose and summary are consistent with the pre-approved set of purposes and records the evaluation results in a tamper-proof and verifiable format. Even if the output is approved through this formal review, the actual output may still be subject to retrospective auditing to verify its consistency with the declared information. If any significant discrepancy is found, the case is treated as a false declaration and subject to severe penalties, thereby establishing a strong institutional deterrent against misuse.
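The formal review described above can be sketched as a metadata-only check. The following is an illustration under stated assumptions, not the framework's specified implementation: the field names, the `Rule` encoding, and the example rule (flood information sent to a disaster response center) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PermissionRequest:
    """Metadata declared by the AI system; the output data itself is never included."""
    purpose: str
    content_category: str
    anonymized: bool
    recipient: str
    summary: str


@dataclass(frozen=True)
class Rule:
    """One entry of the democratically established Rules (hypothetical encoding)."""
    purpose: str
    content_category: str
    requires_anonymization: bool
    allowed_recipients: frozenset[str]


def screen(request: PermissionRequest, rules: list[Rule]) -> tuple[bool, str]:
    """Formal conformity check: return (approved, rationale) for the record."""
    for rule in rules:
        if (rule.purpose, rule.content_category) != (request.purpose, request.content_category):
            continue
        if request.recipient not in rule.allowed_recipients:
            return False, f"recipient '{request.recipient}' not permitted for this purpose"
        if rule.requires_anonymization and not request.anonymized:
            return False, "anonymization required but not declared"
        return True, f"matches rule for purpose '{rule.purpose}'"
    return False, "no rule covers the declared purpose and content category"


# Hypothetical rule: anonymized flood information may go to disaster response centers.
RULES = [
    Rule("disaster_response", "road_flooding", True, frozenset({"disaster_response_center"})),
]
```

Because `screen` sees only the declared metadata, it cannot detect a false declaration by itself; that gap is what the retrospective audits and penalties are meant to cover.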

The permissible scope of the output (purpose, content category, degree of anonymization, volume, frequency, recipient, etc.) is defined as a set of Rules based on democratic consensus that are evaluated against the following criteria:

- [1] Operational and developmental efficiency for AI system operators or owners;

- [2] Public safety (e.g., crime prevention, victim rescue, suspect tracking) and social efficiency (e.g., traffic signal control);

- [3] Privacy protection and data minimization.

Examples of outputs that may be approved for transmission include the following:

- [a] Information about the vehicle during autonomous operation (e.g., video, sensor data) may be sent to the autonomous driving system after anonymization.

- [b] Information about pedestrians walking on roadways (e.g., images and metadata) may be sent to law enforcement (e.g., traffic control centers) after anonymization.

- [c] Information about road flooding (e.g., images and metadata) may be sent to disaster response centers after anonymization.

Examples of outputs that may not be approved are the cases [a], [b], or [c] above, where sufficient anonymization has not been applied. Sufficient anonymization refers to processing that renders individual identification impossible, for example, by masking faces in images, obfuscating voices in audio, or generalizing attribute data.

Additionally, any AI system that receives output data originating from within the VRAIO framework must also be subject to the VRAIO framework. This ensures that data entering the VRAIO ecosystem remains under regulated and protected conditions throughout its lifecycle.

The rules (i.e., the permissible scope of output) are established through democratic deliberation and social consensus. To ensure their legitimacy, these rules must be intuitively understood by the public and sufficiently general and versatile so that revisions once every few years will not cause practical disruptions. For new functions that companies wish to introduce, it is not appropriate to demand immediate incorporation; instead, such proposals should be submitted in advance as requests for consideration in the next scheduled rule revision.

In actual system operation, there may be cases where the rules are not comprehensive, or where internal inconsistencies lead to ambiguities in their application order or judgment criteria. As a result, so-called borderline or exceptional output patterns may arise. For instance, when a pedestrian is captured by a high-resolution camera, a certain level of image clarity may be necessary for accident prevention; yet, if the person’s face is clearly visible, it could constitute a privacy violation. In such cases, the required level of anonymization may vary depending on the situational context, for example, whether the output is treated as emergency-related or as part of routine traffic monitoring. Similarly, if designated recipient systems are unavailable during a disaster, the decision to transmit flood information to unofficial groups such as local volunteer fire brigades may raise questions regarding the legitimacy of the output destination. Moreover, outputs such as video recordings triggered by emergency braking or conversations between passengers often involve uncertain thresholds for assessing urgency or required anonymization, making them subject to operator discretion.

To address such highly uncertain output patterns, it is important for the Government Regulatory Agency to collect and analyze real-world operational cases and identify statistical trends. This enables flexible refinement of the judgment criteria and supplementary logic for output approval. In particular, for functions not yet covered by existing rules—such as outputs from newly installed emotion recognition sensors that detect passenger stress—methods like Federated Learning (FL) can be used to detect usage patterns and provide input for future rule revisions.

However, such technical optimizations must remain strictly within the scope of interpreting and applying rules that have been established through democratic procedures. They must not possess the authority to unilaterally alter the rules themselves. In this proposal, operational trends observed at the local level (e.g., individual AI systems) are aggregated centrally via Federated Learning and used to continuously improve automatic judgment algorithms and the operational guidance for rules, based on output metadata. For such mechanisms to be institutionally permissible, the rule documents—established through democratic procedures—must explicitly authorize the Government Regulatory Agency to collect and analyze anonymized data within a defined scope for such purposes.

Blockchain technology is one of the most promising options for ensuring tamper-proof logging of output audit records, and lightweight, high-speed implementations of blockchain are gradually reaching practical viability [33,34,35,36,37]. In addition, architectures that combine federated learning with blockchain have gained attention as privacy-preserving distributed learning infrastructure for the Internet of Vehicles (IoV) domain [38].

That said, the present framework does not mandate any specific logging method. Instead, the recording approach should be selected flexibly based on cost-effectiveness and operational context. The Recorder does not store the output data itself, but instead logs metadata related to the output audit process—such as the purpose and summary of the output, the result of the audit, the timestamp, and information about the sender and recipient. As a result, the size of each individual record is relatively small.
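Since the framework leaves the logging method open, one lightweight option is a hash chain over the metadata records, in which each record commits to its predecessor. The sketch below is an assumption-laden illustration rather than the proposed design: the `AuditLog` class, the record layout, and the choice of SHA-256 are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder predecessor for the first record


def _digest(prev_hash: str, entry: dict) -> str:
    # Canonical JSON so that the same entry always hashes to the same value.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log of audit metadata (never the output data itself)."""
    records: list = field(default_factory=list)

    def append(self, entry: dict) -> str:
        prev_hash = self.records[-1]["hash"] if self.records else GENESIS_HASH
        record = {"entry": entry, "prev_hash": prev_hash, "hash": _digest(prev_hash, entry)}
        self.records.append(record)
        return record["hash"]

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered record breaks the chain."""
        prev_hash = GENESIS_HASH
        for record in self.records:
            if record["prev_hash"] != prev_hash:
                return False
            if record["hash"] != _digest(prev_hash, record["entry"]):
                return False
            prev_hash = record["hash"]
        return True
```

A chain like this only makes tampering detectable to someone who holds a trusted copy of the latest hash; publishing that hash, or anchoring it in a blockchain, would be one way to provide such a copy.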

To improve logging efficiency, methods such as batch recording—aggregating entries by vehicle or by day—and limited retention periods (e.g., three months or one year) may be employed. Additionally, a portion of the records may be randomly selected for long-term storage.
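As a rough illustration of batch recording and limited retention, the sketch below groups metadata entries by vehicle and day and reduces each batch to a single digest. The field names `vehicle_id` and `day`, the use of SHA-256, and the 90-day default (approximating the three-month example above) are assumptions made for this example.

```python
import hashlib
import json
from collections import defaultdict
from datetime import date, timedelta


def batch_digests(entries: list[dict]) -> dict[tuple[str, str], str]:
    """Group entries by (vehicle_id, day) and return one SHA-256 digest per batch.

    The digest depends on the order of entries within a batch, so entries
    are assumed to arrive in a stable (e.g., chronological) order.
    """
    batches = defaultdict(list)
    for entry in entries:
        batches[(entry["vehicle_id"], entry["day"])].append(entry)
    digests = {}
    for key, batch in batches.items():
        payload = json.dumps(batch, sort_keys=True, separators=(",", ":"))
        digests[key] = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    return digests


def is_expired(day: str, today: date, retention_days: int = 90) -> bool:
    """True once a batch dated `day` (ISO format) is older than the retention period."""
    return today - date.fromisoformat(day) > timedelta(days=retention_days)
```

Only the per-batch digest would need to be stored in the tamper-resistant layer, which keeps the number of committed records proportional to vehicles and days rather than to individual outputs.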

Moreover, a hybrid approach may be adopted in which routine records are stored using lightweight cryptographic techniques, such as digital signatures, while only a randomly selected subset is committed to the blockchain. This design is expected to offer a practical balance between scalability and tamper-resistance.

As described above, VRAIO is an unprecedented multi-layered governance system composed of technical control, institutional oversight, and citizen monitoring. It institutionally deters arbitrary output operations by AI system operators. At present, there is no precedent, either domestically or internationally, for a privacy protection system of this scale and strictness. However, considering the enormous societal impact and ethical responsibility that fully autonomous driving entails, a corresponding institutional response would be indispensable in democratic societies. Ensuring privacy while enabling autonomous driving systems is a precondition for earning public trust and true societal acceptance, which makes a comprehensive and robust framework like VRAIO essential.

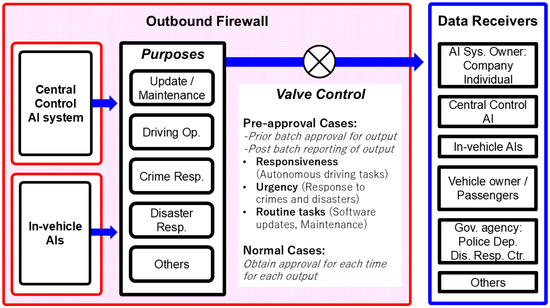

In autonomous driving systems, the output from central control AI and in-vehicle AI is regulated by an outbound firewall. Within this, outputs requiring immediacy and operational necessity—such as those related to driving responsiveness, crime response, and disaster response—can be pre-approved in batches for the sake of efficiency. These outputs are categorized by purpose, and their approval conditions are designed based on institutionally established rules. Outputs that have received pre-approval are required to undergo subsequent batch review by the Recorder, during which their purposes, summaries, and execution records are stored in a verifiable format. Deterrence against false declarations and misuse is ensured, as with normal outputs, with institutional mechanisms such as heavy penalties upon detection of violations and reward systems for whistleblowers in place. The output data are transmitted to a variety of authorized recipients, including AI system owners, the central control system, in-vehicle AI, vehicle owners and passengers, and government agencies such as police departments and disaster response centers.

3. Application to Autonomous Driving Systems and Original Proposals

In the previous chapter, we proposed the Verifiable Record of AI Output (VRAIO) as a new framework for privacy protection in autonomous driving systems and explained its core concept and components. This chapter examines how VRAIO can be adapted to the specific operational requirements of actual autonomous driving systems.

In particular, autonomous driving inevitably involves the following:

- (1) Real-time operation requiring immediate and high-frequency decision-making [39,40];

- (2) Structural complexity arising from coordination between central control AI and multiple types of in-vehicle AI [41].

In light of these characteristics, this study proposes two complementary sub-concepts in addition to the basic institutional framework of VRAIO:

- (1)

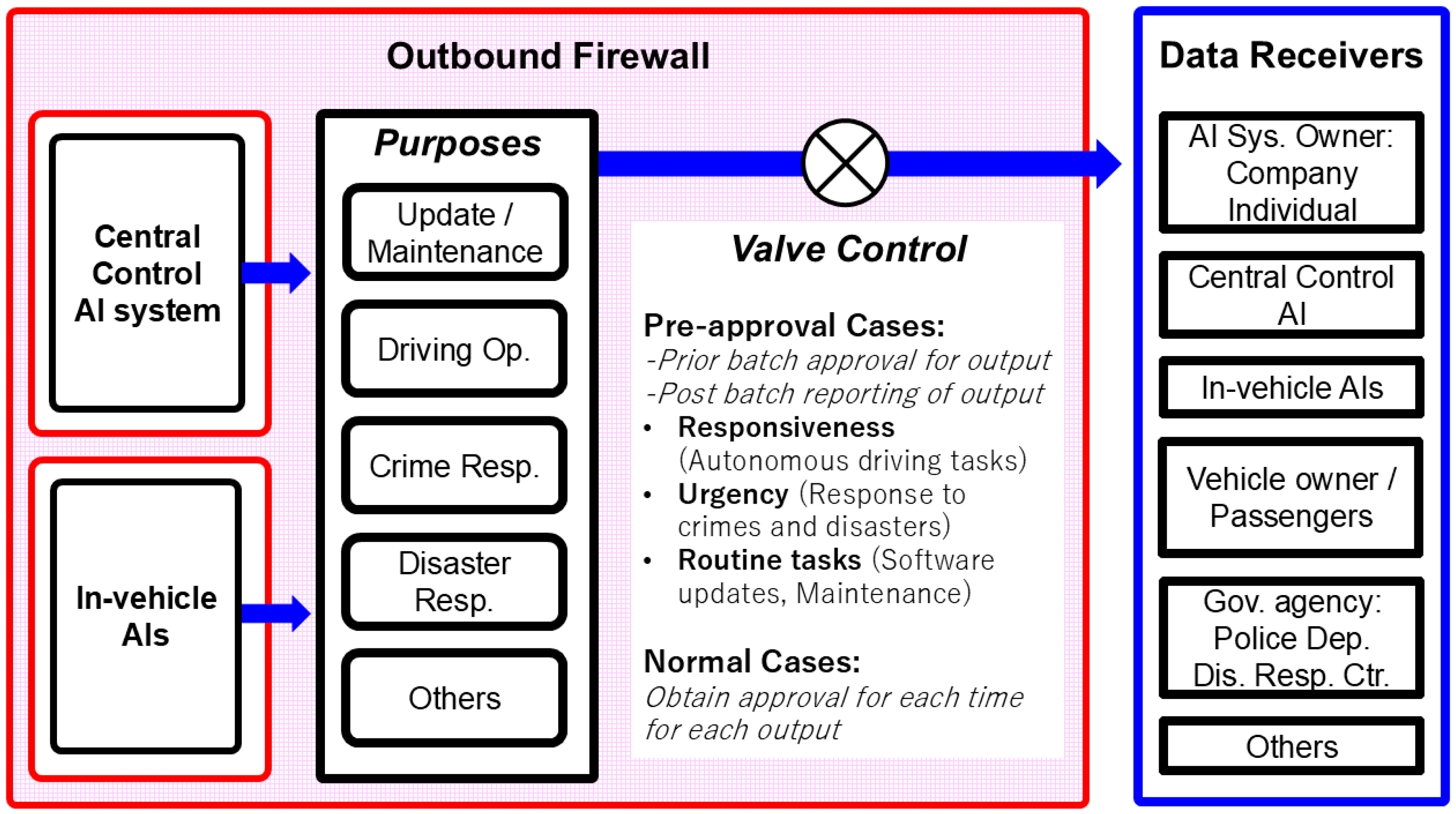

- Pre-approval for specific AI outputs in cases of emergencies or operational necessity (see Figure 2);

Figure 2. Pre-approval of specific types of AI output.

Figure 2. Pre-approval of specific types of AI output. - (2)

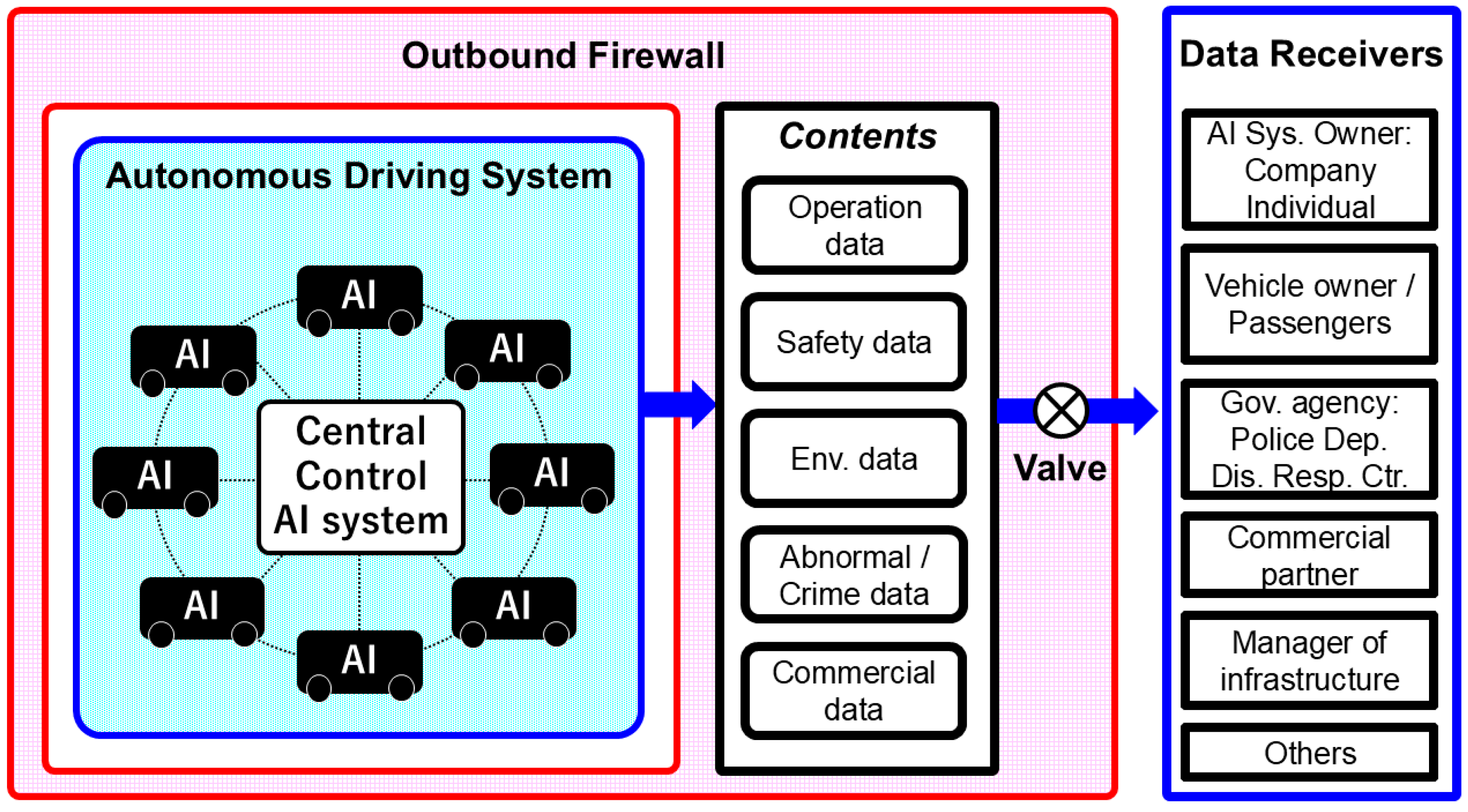

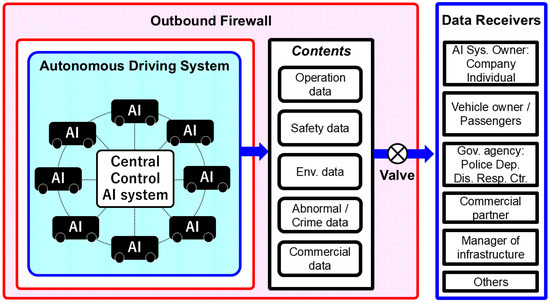

- Unrestricted internal communication within the system, exempt from regulation (see Figure 3).

Figure 3. Unrestricted internal communication within autonomous driving systems (unconditional approval).

Figure 3. Unrestricted internal communication within autonomous driving systems (unconditional approval).

3.1. Original Proposal of This Study: Pre-Approval of Specific Types of AI Output

In autonomous driving systems, many situations require real-time decision-making and response, making it impractical to obtain individual approval for each AI output. Therefore, this study proposes a method whereby, at the time of vehicle startup or software updates, batch pre-approval is granted for specific communication purposes and contents. Afterwards, the outputs are collectively reported to the Recorder for audit. For example, in the case of an emergency braking event triggered by sudden pedestrian intrusion, the pre-authorized output allows the vehicle to alert nearby infrastructure and emergency services instantly, while the summary of the event is later submitted to the Recorder for audit. This enables immediate decisions—such as emergency communications or collision avoidance—while also ensuring AI output conformity to rules and thereby securing privacy protection.

As shown in Figure 2, this method introduces two types of output regulation based on the “Purposes” and “Data Receivers” of the AI output.

- (1) Pre-approval Cases: These are cases where output is approved in advance, with a batch report submitted to the Recorder after execution. This applies to the following cases:
  - Responsiveness: Basic tasks related to autonomous driving operations;
  - Urgency: Emergency responses to crimes, accidents, and disasters;
  - Routine tasks: Software updates and maintenance-related tasks.

- (2) Normal Cases: Cases requiring individual approval each time for each output.

For outputs that have been pre-approved, the output history is reported to the Recorder after execution, assuming a delay ranging from several minutes to several days. The Recorder conducts a formal approval assessment, following the same procedures as in real-time screening. If no issues are found, the entire decision-making process is stored and disclosed in a tamper-proof, verifiable manner. In the event that a problem is detected, it is immediately reported to the Government Regulatory Agency, and an investigation into the cause of the error is initiated.

It is also anticipated that, during system operation, outputs may be generated that were not previously designated as subject to pre-approval. In such unexpected system states, institutionally defined exception-handling procedures are required. These may include temporarily withholding the output, shifting the system to a fail-safe mode, or automatically reporting the output to the Recorder for ad hoc review. By establishing such protocols, the system can retain the benefit of rapid output authorization through pre-approval, while also ensuring comprehensive governance that encompasses responses to unforeseen outputs outside the certified scope.
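The interplay of pre-approval, normal review, and exception handling can be sketched as a small gate on the vehicle side. This is a minimal illustration under stated assumptions: the `OutputGate` class, the `Decision` values, and the purpose labels are hypothetical, and treating unforeseen outputs as withheld pending ad hoc review is only one of the exception-handling options mentioned above.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    SEND_NOW = "send_now"              # pre-approved: emit immediately, report later
    AWAIT_RECORDER = "await_recorder"  # normal case: individual approval required
    WITHHOLD = "withhold"              # unforeseen output: hold and request ad hoc review


@dataclass
class OutputGate:
    pre_approved: set[str]  # purposes granted at vehicle startup or software update
    normal: set[str]        # purposes that need approval for each output
    pending_report: list = field(default_factory=list)
    ad_hoc_queue: list = field(default_factory=list)

    def decide(self, purpose: str, summary: str) -> Decision:
        if purpose in self.pre_approved:
            # Keep the metadata so it can be reported to the Recorder in a later batch.
            self.pending_report.append({"purpose": purpose, "summary": summary})
            return Decision.SEND_NOW
        if purpose in self.normal:
            return Decision.AWAIT_RECORDER
        # Outside the certified scope: withhold and queue for ad hoc review.
        self.ad_hoc_queue.append({"purpose": purpose, "summary": summary})
        return Decision.WITHHOLD

    def flush_batch_report(self) -> list:
        """Return the accumulated pre-approved output history and clear it."""
        report, self.pending_report = self.pending_report, []
        return report
```

For example, a gate configured with `pre_approved={"driving_operation", "disaster_response"}` would let an emergency braking alert through at once, while a purpose it has never seen would be withheld and queued.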

The intended purposes of output from autonomous driving systems (central control AI and in-vehicle AI) are as follows:

- (1) Update/Maintenance:
  - Output related to software updates and bug fixes;
  - Real-time monitoring data of hardware status or anomalies.

- (2) Driving Operation:
  - Real-time data necessary for vehicle operation, such as location, speed, and direction;
  - Environmental perception data (e.g., obstacles, pedestrians, and traffic lights) [42];
  - Information sharing with other vehicles and infrastructure, including V2X communication [43];
  - Navigation information and routing instructions.

- (3) Crime Response:
  - Real-time alerts for abnormal behavior or crimes [44,45];
  - Audio/visual data needed for suspect tracking;
  - Reports to relevant authorities on criminal situations;
  - Crime pattern analysis results by AI.

- (4) Disaster Response:
  - Data on fires, floods, earthquakes, and other disaster scenes [46,47];
  - Audio/visual alerts for evacuation guidance;
  - Sensor data such as temperature, pressure, and smoke concentration;
  - Information for yielding routes to emergency vehicles.

- (5) Others:
  - Operational data and usage history for business decision-making;
  - Behavior analysis data to improve passenger satisfaction;
  - Data for smart city integration and road infrastructure diagnostics;
  - Records for insurance and legal responses;
  - Data for commercial use, such as advertisement targeting.

The potential recipients of data output include a wide range of entities. First, AI system owners (companies or individuals) use the data to monitor system operation, plan maintenance, or perform business analytics. The central control AI system needs information for unified traffic management and response to incidents or congestion. In-vehicle AI systems exchange data for both individual vehicle operation and cooperation with other vehicles or infrastructure.

Additionally, owners and passengers of autonomous vehicles may reference data to confirm safety, respond to emergency situations like sudden braking or collisions, or ensure comfortable service. Government agencies (such as police or disaster response centers) may utilize the data for public safety and emergency response [48]. Other potential recipients include universities and research institutions [49] for academic use, insurance companies for accident response, infrastructure managers for road maintenance, advertising agencies for commercial use, and smart city entities [50] for urban function optimization.

Figure 3 illustrates a structure in which communication between the central control AI and the numerous in-vehicle AIs within a single autonomous driving system is unrestricted. Each company’s autonomous driving system encompasses a vast number of vehicles, forming an extremely complex system in which high-frequency and multi-directional data exchange constantly occurs. Imposing a VRAIO-based output review on all such internal communications would be impractical both institutionally and technically, especially from the standpoint of operational efficiency. Therefore, this proposal limits the application of the outbound firewall to only the external outputs of the entire system and allows real-time and continuous internal communication to proceed without restrictions. This enables the institutional assurance of privacy protection while maintaining system responsiveness and operational efficiency.

3.2. Original Proposal of This Study: Unrestricted Internal Communication Within Autonomous Driving Systems (Unconditional Approval) (Figure 3)

Figure 3 illustrates a system architecture in which communication between the central control AI and a large number of onboard AI units within an autonomous driving system is made unrestricted. In this proposal, the scope of control by the outbound firewall is limited to the external outputs of the entire autonomous driving system. All real-time and high-frequency internal communications occurring within that system are fully exempt from institutional regulation. It should be emphasized that the term “unrestricted internal communication (unconditional approval)” refers only to the exemption from institutional output auditing by the Recorder under the VRAIO framework and does not imply the absence of technical security controls.

An autonomous driving system, even when managed by a single company, constitutes an extremely large-scale and complex information processing network that includes hundreds to tens of thousands of vehicles. As a structural feature, continuous and multi-directional information exchange—including communication between the central control AI and in-vehicle AIs, and vehicle-to-vehicle (V2V) communication—is indispensable. For example, the following types of communication are routinely performed:

- Information from the central control AI to in-vehicle AI: recommended routes, traffic congestion updates, and weather conditions.

- Information from in-vehicle AI to the central control AI: vehicle position, speed, obstacle data, etc.

- Vehicle-to-vehicle communication (V2V): information sharing for collision avoidance and route coordination.

It would be institutionally and technically infeasible to subject all such complex and high-frequency communications to output screening under VRAIO. Such an approach would severely impair both the responsiveness and operational efficiency of the system. Therefore, this study institutionalizes unrestricted internal communication as a deliberate design choice that accommodates the real-time operational requirements unique to autonomous driving. In doing so, the proposal maintains strict regulatory oversight for external outputs only, while improving system-wide efficiency.
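The distinction between internal communication and external output can be expressed as a boundary check. The following is a minimal sketch, assuming each node carries an identifier of the autonomous driving system it belongs to; the `Endpoint` type, the `system_id` field, and the example node names are hypothetical and do not appear in the proposal itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    name: str
    system_id: Optional[str]  # None marks a recipient outside any autonomous driving system


def requires_recorder_review(sender: Endpoint, receiver: Endpoint) -> bool:
    """True only when a message crosses the boundary of the sender's system."""
    return receiver.system_id != sender.system_id


# Hypothetical nodes: two vehicles and a central AI in one system, plus an outside recipient.
central = Endpoint("central_control_ai", "ads_a")
vehicle_1 = Endpoint("in_vehicle_ai_1", "ads_a")
vehicle_2 = Endpoint("in_vehicle_ai_2", "ads_a")
police = Endpoint("police", None)
```

Under this sketch, central-to-vehicle and vehicle-to-vehicle messages inside the same system skip Recorder review, while any message to an outside recipient is subject to it.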

It should be emphasized that “unrestricted internal communication (unconditional approval)” applies only to the institutional oversight layer governed by the Recorder. It does not imply any waiver of technical security measures. On the contrary, standard cybersecurity protection—such as encryption, authentication, access control, and anomaly detection—remains essential and non-negotiable for ensuring the confidentiality and integrity of internal data flows. This functional separation between institutional auditing and communication-layer security is central to the rationale for internal communication liberalization under the VRAIO framework.

For internal communications that may contain personally identifiable information—such as data from onboard sensors or video streams—the selective adoption of Privacy-Preserving Data Mining (PPDM) techniques is recommended. PPDM encompasses a family of technologies designed to reduce identifiability in data use contexts, including anonymization, masking, and noise injection. Practical examples include real-time video masking, voice obfuscation, and generalization of demographic attributes. However, as these techniques may incur computational overhead and latency, their application should be context-sensitive and based on system real-time constraints and the assessed privacy risk of each communication channel.
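Three of the PPDM operations named above (generalization, noise injection, and masking) can be illustrated with a short sketch. The function names, the ten-year age band, the noise scale, and the license-plate example are assumptions chosen for illustration; the sketch makes no claim about formal privacy guarantees.

```python
import random


def generalize_age(age, width=10):
    """Generalization: replace an exact age with a coarse band, e.g. 37 becomes '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def add_laplace_noise(value, scale, rng):
    """Noise injection: add zero-mean Laplace noise with the given scale.

    The difference of two independent exponential samples with rate 1/scale
    follows a Laplace distribution with that scale.
    """
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return value + noise


def mask_identifier(text, keep=2):
    """Masking: keep the first `keep` characters and hide the rest."""
    return text[:keep] + "*" * (len(text) - keep)
```

For example, `generalize_age(37)` yields `"30-39"` and `mask_identifier("AB1234")` yields `"AB****"`. Larger bands and noise scales reduce identifiability further at the cost of data utility, which is the trade-off the context-sensitive application described above would need to weigh.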

To enforce external output control at the system level, a two-layered outbound firewall architecture is proposed. The first layer consists of hardware-based VRAIO-compliant outbound firewalls embedded in individual vehicles, which are responsible for suppressing and inspecting outgoing data at the vehicle edge. The second layer consists of a software-defined (SDN-based) outbound firewall deployed at the cloud or centralized control level, which enforces VRAIO rules across the entire autonomous driving AI system. This multi-tiered architecture, combining distributed filtering and centralized enforcement, aligns with security paradigms already adopted in the IoT and connected vehicle domains and is considered highly implementable.
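The two-layered arrangement can be sketched as two independent checks that an output must both pass before leaving the system. How the responsibilities are actually divided between the layers is not specified in this proposal; the split used below (purpose check at the vehicle edge, Recorder decision check at the central layer) and all field names are assumptions made for illustration.

```python
def vehicle_edge_filter(output, approved_purposes):
    """Layer 1 (vehicle edge): suppress outputs lacking a declared, approved purpose."""
    return output.get("purpose") in approved_purposes


def central_filter(output, recorder_decisions):
    """Layer 2 (central): release only outputs the Recorder has approved by id."""
    return recorder_decisions.get(output.get("id")) == "approved"


def may_leave_system(output, approved_purposes, recorder_decisions):
    """An output is released externally only if both layers allow it."""
    return (vehicle_edge_filter(output, approved_purposes)
            and central_filter(output, recorder_decisions))
```

Because the layers are evaluated independently, a compromised or misconfigured vehicle-edge filter does not by itself release an output that the central layer would block.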

However, the computational overhead introduced by such a dual-layer architecture requires quantitative analysis in order to assess its scalability for deployment in mass-produced vehicles. In particular, as the number of outputs increases and communication frequency intensifies, it is necessary to clarify performance limitations in terms of processing capacity and latency—both at the vehicle hardware level and for centralized server infrastructure. Although this study does not include such an evaluation, it recognizes this issue as a critical subject for future validation during the implementation phase.

The degree to which the exemption of internal communications from VRAIO auditing increases the system’s attack surface, or to what extent such risks can be mitigated by existing security technologies, remains an important topic for future research. The current framework assumes that even if internal data are maliciously exfiltrated, they must pass through the Recorder for external transmission, where institutional auditing acts as a final barrier. This design is intended to provide a strong deterrence against intentional privacy violations. However, to validate this assumption, adversarial simulations based on compromised subsystem scenarios will be necessary, and this is a subject for future investigation.

As shown in Figure 3, the data output from autonomous driving systems covers a wide variety of content, including the following:

- Operational data (position, speed, route, congestion/accident information);

- Safety data (accident videos, sensor data, and anomaly detection logs);

- Environmental data (road damage, signal malfunctions, and weather information);

- Crime and abnormality detection data (including videos and audio);

- Commercial analytics data (behavioral trends, usage history, etc.).

Expected recipients of such data include AI system owners and administrators (companies or individuals), vehicle owners and passengers, government agencies such as the police and disaster response centers, commercial partners such as advertising agencies and logistics companies, infrastructure management entities, insurance companies, and research institutions.

In this proposal, privacy protection is institutionally ensured by conducting a strict review of external outputs based on the combination of data content and recipients. Meanwhile, unrestricted internal communication yields the following benefits:

- In-vehicle AI can grasp the situation in real time, enabling swift driving decisions.

- Faster data transmission improves overall system operational efficiency.

- By limiting output control to external outputs, a high-level balance between privacy protection and technical efficiency can be achieved.

Based on the above, this communication design is a novel institutional approach for AI systems operating as public infrastructure, and it can be implemented while meeting three requirements at once: responsiveness, efficiency, and privacy protection.

4. Discussion

4.1. The Importance of Privacy Protection in Autonomous Driving AI Systems

In democratic societies, the protection of privacy is a fundamental institutional value that underpins individual freedom and civil rights. The introduction of fully autonomous driving systems (ADSs) brings numerous social benefits, such as improved convenience and safety. However, it inherently entails the collection and analysis of vast amounts of personal data. Therefore, even more than traditional information systems, a robust and institutionally supported framework for privacy protection is required.

If such protection is inadequate, public anxiety about surveillance and concerns over social control by AI will increase, thus significantly undermining social trust in and acceptance of ADSs. On the other hand, excessive regulation may hinder technological innovation and the realization of public benefits. Thus, an appropriate balance between privacy protection and technical efficiency is necessary.

Some segments of society hold the view that individuals should not expect privacy in public spaces. However, this notion is rooted in a historical context where surveillance technologies were limited in scope and capability. In recent years, advances in AI and sensing technologies have enabled the reconstruction of individual behavior patterns even in open, outdoor environments. Under such conditions, even in public spaces, the institutional regulation of data outputs that could identify individuals should be considered essential.

The VRAIO framework proposed in this study is based on the idea that, even if data collection itself is legally permitted, the institutional regulation of how such data is output and disclosed is critical. Through this approach, VRAIO enables a normative position in which the collection of information in public spaces may be legally tolerated, but the act of disclosure must be ethically constrained. In this configuration, even if capturing, recording, or analysis of pedestrians without their consent is legally permissible, the outputs are still subject to social and institutional review, and their disclosure is allowed only if found to be consistent with declared, legitimate purposes.

The VRAIO (Verifiable Record of AI Output) proposed in this study is positioned as a framework that achieves this balance at a high level. In VRAIO, all outputs generated by AI systems are enclosed from the outside by an output firewall and managed by an independent third-party entity called the Recorder. The purpose and summary of each output are recorded in a tamper-proof and verifiable format. The Recorder conducts a formal review of the output’s permissibility but does not gain access to the content of the output itself.
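One common way to make such a record verifiable is a hash chain, in which each entry commits to the one before it. The sketch below is an illustrative stand-in for the recording mechanism, not the author's implementation: the field names and the `GENESIS` constant are hypothetical, and only the declared purpose, summary, and decision are stored, consistent with the Recorder not accessing the output content.

```python
import hashlib
import json

GENESIS = "0" * 64  # hypothetical placeholder hash preceding the first entry


def entry_hash(prev_hash, record):
    """Hash of one record, bound to the hash of the previous entry."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_record(log, purpose, summary, decision):
    """Append a purpose/summary/decision record; the output content itself is never stored."""
    prev = log[-1]["hash"] if log else GENESIS
    record = {"purpose": purpose, "summary": summary, "decision": decision}
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(log):
    """Recompute the chain; any edited, removed, or reordered entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

A chain of this kind makes tampering detectable by anyone who holds a copy of the log; preventing the log holder from rewriting the whole chain requires additional measures, such as publishing the latest hash or replicating the log.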

Furthermore, to prevent false reporting, institutional deterrent mechanisms such as large rewards for whistleblowers and severe penalties for intentional violators are built into the system. Through this design, VRAIO functions as a large-scale governance infrastructure that enables societal monitoring and control of all AI system outputs.

This is not merely a privacy protection technology but rather an unprecedented socio-technical system that institutionally encloses all AI systems through their outputs. For fully autonomous driving to become a public infrastructure accepted in democratic societies, this level of institutional governance is essential. Without a framework like VRAIO, true societal acceptance would be difficult to achieve.

That said, fully autonomous driving AI systems constantly require immediate responsiveness and entail structurally complex communication among vehicles, infrastructure, and central systems. Applying VRAIO to such systems without modification may introduce new challenges, such as processing delays and increased operational burdens.

In the following section, we discuss two complementary mechanisms proposed in this study—“pre-approval” and “unrestricted internal communication”—as means of addressing these challenges.

4.2. Balancing Privacy and Technical Efficiency in Autonomous Driving AI Systems

In this study, VRAIO was adapted and extended for autonomous driving AI systems through the introduction of two key mechanisms: (1) pre-approval for external output communication (Figure 2), and (2) unconditional approval of internal communication within the system (Figure 3).

In the case of pre-approval for external output communication (Figure 2), outputs required for tasks that demand an immediate response can be pre-approved based on their intended purpose. This allows the AI system to issue necessary outputs without having to apply for approval each time. However, even in such cases, it is mandatory to report the purpose and outline of the executed outputs to the Recorder afterwards. As in standard VRAIO, deterrence against violations relies on the construction of a societal infrastructure that includes institutional mechanisms such as high-value rewards for identifying violations and severe penalties for intentional misconduct.

In the case of unconditional approval of internal communication (Figure 3), the obligation to install an outbound firewall is waived for internal AI systems operated and managed within each autonomous driving AI company or entity. This realizes the liberalization of communication (outputs) within the system. However, the entire AI system remains enclosed within a firewall managed by the Recorder, and VRAIO is applied overall. In this way, a balance is achieved between privacy protection and operational efficiency.

This framework envisions that audits will be conducted not only by the general public but also by registered professional auditing institutions. To prevent regulatory capture, an institutional mechanism is proposed that includes a reward system for whistleblowers who identify misconduct. Furthermore, a pool of certified auditing bodies is pre-registered, and each output record is assigned to an auditor through a rotation system. The assignment results and audit execution records are stored on a blockchain, enhancing both transparency and trust.

The rotation-based assignment can be implemented through smart contracts, enabling automated allocation. Candidates can be selected randomly based on factors such as the timestamp of the output request and its declared purpose, while additional logic can account for past assignment history and auditor trust scores to prevent concentration or biased selection. All assignment results and histories are recorded on the blockchain, making them tamper-resistant and externally verifiable by both citizens and regulatory authorities. Through this structure, institutional accountability is reinforced across the entire audit process.
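The assignment logic described above can be sketched off-chain as follows. This is a minimal illustration, assuming a seed derived from the request timestamp and declared purpose, a cooldown that excludes recently assigned auditors, and strictly positive trust scores used as selection weights; the function name, parameters, and auditor labels are hypothetical, and an actual smart contract would differ in language and detail.

```python
import hashlib
import random


def assign_auditor(timestamp, purpose, trust_scores, recent, cooldown=2):
    """Pick one auditor deterministically from the request's timestamp and purpose.

    trust_scores: dict mapping auditor id to a positive weight.
    recent: list of previously assigned auditor ids, oldest first.
    """
    # Exclude the most recently assigned auditors to prevent concentration.
    excluded = set(recent[-cooldown:]) if cooldown > 0 else set()
    candidates = sorted(a for a in trust_scores if a not in excluded)
    if not candidates:
        # Fall back to the full pool if the cooldown would exclude everyone.
        candidates = sorted(trust_scores)
    # Anyone can recompute the seed from public values and check the result.
    digest = hashlib.sha256(f"{timestamp}|{purpose}".encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    weights = [trust_scores[a] for a in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]
```

Because the seed is computed from public values, the selection is reproducible by external verifiers, but it is also predictable in advance; a deployed design would need to decide whether that predictability is acceptable or whether an additional unpredictable input is required.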

This study aims to prevent the unauthorized output of data containing personal information from autonomous driving AI systems. Once data includes personal information, VRAIO must be applied not only to the immediate output but to all subsequent processing and to the AI systems handling that data. If the amount of data without personal information is large and its processing can be handled independently, then the deployment of a dedicated AI system for such data may contribute to improved operational efficiency. However, this study considers the separation of “data with privacy” and “data without privacy” to be an unrealistic approach and therefore does not pursue it further.

4.3. Implementation Challenges and Future Outlook

This paper has focused primarily on the institutional structure and output governance model of VRAIO; however, several theoretical and formal challenges remain to be addressed. These include (1) the justification of blocking outputs triggered by adversarial input commands, (2) deterrence mechanisms against false declarations, and (3) the detectability of output tampering. Future work should incorporate formal modeling and verification techniques to assess the security of the system under such conditions.

Furthermore, the cryptographic infrastructure of VRAIO will need to be redesigned in the future to account for threats posed by quantum computing. Although this study assumes the use of standard cryptographic techniques for secure communication and data integrity, the potential incorporation of post-quantum cryptography (PQC) [51] must also be explored. In particular, ensuring tamper resistance and confidentiality of output logs will require the integration of PQC-based cryptographic schemes as a critical next step.

As discussed in Section 2.2, in real-world operations, it is inevitable that a certain number of outputs will fall into gray areas due to the inherent limitations in the completeness and consistency of institutional rules. To address such borderline or exceptional cases, it is necessary to predefine policy responses on the institutional side and to flexibly adjust rule application weights and priorities based on actual operational conditions. One possible approach is the use of heuristic or metaheuristic optimization techniques. For example, in the context of short-term traffic forecasting, the GSA-KAN model [52] demonstrates how adaptive decision logic can be optimized using multivariate data, which is an approach that could also be applied to rule-based institutional frameworks.

Although such optimization methods have not been implemented in this study, they represent a promising technical option for enabling more flexible institutional responses to gray-area outputs. From the perspective of harmonizing institutional structure with technical optimization, the application of such methods may constitute a significant area of future research.

Building on these theoretical and institutional issues, the remainder of this section addresses practical implementation challenges and outlines future directions for societal integration.

To empirically validate the effectiveness of the proposed VRAIO framework, actual system implementation and performance evaluation are essential. However, due to current constraints in budget and institutional resources, implementation and validation remain significant challenges. Therefore, this study is positioned as a foundational investigation aimed at clarifying the conceptual structure and social significance of VRAIO in the context of autonomous driving systems.

Importantly, although the concept of VRAIO itself was previously proposed in another domain [14], this study expands and reconstructs it in a form tailored to the operational requirements of autonomous driving AI systems. In particular, this study introduces two original sub-concepts to meet the specific demands of real-time processing and high-frequency communication inherent to autonomous driving: (1) pre-approval for specific outputs (Figure 2), and (2) unconditional approval of internal system communication (Figure 3). These elements represent a structural redesign aligned with the operational constraints and governance needs of autonomous driving, rather than a simple adaptation of an existing model.

In future research, the plan is to secure dedicated funding and organize an interdisciplinary research team to advance the validation phase. This will involve not only performance verification in simulation environments, but also implementation testing using a functioning prototype system. The evaluation will encompass technical aspects such as system load and usability, as well as legal and societal studies related to public acceptance and institutional integration.

Although the prototype-based implementation has not yet been initiated, confirming the practical feasibility of this framework through implementation is considered essential to validate its effectiveness. Therefore, this represents a high-priority subject for upcoming research efforts.

The publication of this framework is intended to provide a foundation for academic dialog to enable such multifaceted validation and serve as a catalyst for future collaborative research aimed at practical implementation and institutionalization.

How VRAIO’s blockchain-based output recording scheme can align and interoperate with emerging global AI regulatory frameworks—such as the EU Artificial Intelligence Act [53] and the U.S. AI Risk Management Framework [54]—remains an important subject for future consideration. However, the institutional design of VRAIO, which encloses the entire AI system within an output firewall and regulates all outputs through both institutional and technical mechanisms, constitutes a conceptual framework that may surpass these existing regulations in both scope and depth. Its core elements—including purpose-compliance screening, transparency, and immutable, auditable recordkeeping—not only encompass many of the principles found in international AI governance but also offer a unified mechanism for their practical implementation.

In an era where AI technologies transcend national borders, institutional governance frameworks are likewise expected to exhibit a certain level of international alignment. VRAIO is structured to meet this challenge by incorporating a two-layered design: globally shared normative principles at the foundational level, and the flexibility for local adaptation in accordance with national laws, cultural contexts, and civic values. Moving forward, it will be important to continue engaging with international standardization bodies and policy dialog platforms to promote the institutional value and technical design of VRAIO, with the goal of maturing it into a globally shared model for AI governance.

5. Conclusions

This study proposes the introduction of a new framework, the Verifiable Record of AI Output (VRAIO), to address the growing need for privacy protection in autonomous driving AI systems. In particular, it is a model designed to institutionally and structurally address internal risks—such as the intentional or arbitrary misuse of data by operators—that conventional technical countermeasures have been insufficient to mitigate.

VRAIO centers on the control of external outputs through an output firewall and the auditing and recording of all outputs by a third-party organization known as the Recorder. It formally evaluates the purpose and compatibility of each output, ensures tamper-proof recordkeeping, and institutionalizes external auditing with citizen participation. Furthermore, in order to adapt to the real-time and complex environment of autonomous driving, this study introduces two complementary sub-concepts: (1) pre-approval for specific outputs (Figure 2), and (2) unconditional approval of internal communications (Figure 3), thereby achieving a balance between technical efficiency and institutional governance.

In addition, by incorporating social deterrent mechanisms—such as heavy penalties for violations, whistleblower reward systems, and random output inspections—VRAIO operates as a multi-layered technical and institutional privacy protection infrastructure of unprecedented scale.

The significance of this proposal lies not only in its technical design for privacy protection but also in its ability to robustly institutionalize such protection, thereby generating the following virtuous cycle in society:

- (1) Privacy is institutionally guaranteed, earning public trust;

- (2) Based on this trust, the true social acceptance of autonomous driving is realized;

- (3) As a result, social benefits such as safety, efficiency, and sustainability are maximized.

The realization of this vision requires technical and institutional initiatives on a scale never seen before. For democratic societies to truly embrace autonomous driving as a powerful and widespread AI technology, a comprehensive and governance-oriented privacy protection model like VRAIO must be positioned as a new form of public infrastructure.

This vision extends beyond the specific domain of autonomous driving and connects to broader societal challenges, such as the reconfiguration of democratic values in the age of AI. A regulatory framework like VRAIO, along with its social implementation, can serve not only as an effective governance tool in individual domains, but also as an institutional mechanism that seeks to safeguard democratic values—such as privacy, public accountability, transparency, and explainability—while minimizing the trade-offs with AI efficiency. In this sense, VRAIO represents one potential litmus test for how democratic societies might defend their core values in the coming AI era [55].

That said, while VRAIO offers a forward-looking institutional framework, it is not without limitations. The legitimacy of its output approval process fundamentally depends on the “honest declarations” made by the AI system or its operators. To ensure institutional reliability, extensive social incentive structures are essential, such as strict penalties for false declarations, reward systems for whistleblowers, and compensation schemes. Moreover, VRAIO alone cannot defend against cyberattacks such as hacking or data tampering; it must operate in conjunction with robust technical security mechanisms. In this regard, although VRAIO is the first proposal to enclose AI outputs within a formal governance structure, its trustworthiness can only be realized through multi-layered institutional and technical foundations.

While future challenges remain—such as refining institutional design, managing operational costs, and addressing the feasibility of technological implementation—this study provides a conceptual starting point and aims to serve as a foundation for interdisciplinary and public dialog on real-world deployment.

Funding

This research is funded by the Japan Society for the Promotion of Science, Grants-in-Aid for Scientific Research (KAKENHI), Grant No. 25K00735.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used and/or analyzed during the current study are available from the author on reasonable request.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| VRAIO | Verifiable Record of AI Output |

References

- Li, Y.; Shi, H. (Eds.) Advanced Driver Assistance Systems and Autonomous Vehicles: From Fundamentals to Applications; Springer: Singapore, 2022. [Google Scholar] [CrossRef]

- Abdel-Aty, M.A.; Lee, S.E. Autonomous vehicles: Challenges, opportunities, and future implications for transportation policies. J. Mod. Transp. 2016, 24, 284–303. [Google Scholar] [CrossRef]

- Krajewski, R.; Bock, J.; Kloeker, L.; Eckstein, L. The highD Dataset: A Drone Dataset of Naturalistic Vehicle Trajectories on German Highways for Validation of Highly Automated Driving Systems. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2118–2125. Available online: https://ieeexplore.ieee.org/document/8569552 (accessed on 17 August 2025).

- Warren, S.D.; Brandeis, L.D. The Right to Privacy. Harv. Law Rev. 1890, 4, 193–220. Available online: https://docenti.unimc.it/benedetta.barbisan/teaching/2017/17581/files/the-right-to-privacy-warren-brandeis (accessed on 17 August 2025). [CrossRef]

- Solove, D.J. A Taxonomy of Privacy. Univ. Pa. Law Rev. 2006, 154, 477–560. Available online: https://scholarship.law.upenn.edu/penn_law_review/vol154/iss3/1/ (accessed on 17 August 2025). [CrossRef]

- Collingwood, L. Privacy Implications and Liability Issues of Autonomous Vehicles. Inf. Commun. Technol. Law 2017, 26, 32–45. [Google Scholar] [CrossRef]

- Shladover, S.E. Connected and Automated Vehicle Systems: Introduction and Overview. J. Intell. Transp. Syst. 2018, 22, 190–200. [Google Scholar] [CrossRef]

- Hataba, M.; Sherif, A.; Mahmoud, M.; Abdallah, M.; Alasmary, W. Security and Privacy Issues in Autonomous Vehicles: A Layer-Based Survey. IEEE Open J. Commun. Soc. 2022, 3, 811–829. [Google Scholar] [CrossRef]

- Nissenbaum, H. Privacy as Contextual Integrity. Wash. Law Rev. 2004, 79, 119–158. [Google Scholar]

- Maurer, M.; Gerdes, J.C.; Lenz, B.; Winner, H. (Eds.) Autonomous Driving: Technical, Legal and Social Aspects; Springer International Publishing: Cham, Switzerland, 2016. [Google Scholar] [CrossRef]

- Dimitrakopoulos, G.; Tsakanikas, A.; Panagiotopoulos, E. Autonomous Vehicles: Technologies, Regulations, and Societal Impacts; Elsevier: Amsterdam, The Netherlands, 2021; p. 202. Available online: https://books.google.com/books/about/Autonomous_Vehicles.html?id=BggWEAAAQBAJ (accessed on 17 August 2025)ISBN 9780323901383.

- Sucharski, I.L.; Fabinger, P. Privacy in the Age of Autonomous Vehicles. Wash. Lee Law Rev. Online 2017, 73, 760–772. [Google Scholar]

- Cole, E.; Ring, S. Insider Threat: Protecting the Enterprise from Sabotage, Spying, and Theft; Syngress: Rockland, MA, USA, 2005; Available online: https://www.oreilly.com/library/view/insider-threat-protecting/9781597490481/ (accessed on 17 August 2025).

- Fujii, Y. Verifiable record of AI output for privacy protection: Public space watched by AI-connected cameras as a target example. AI Soc. 2024, 40, 3697–3706.

- Dwork, C.; Roth, A. The Algorithmic Foundations of Differential Privacy. Found. Trends Theor. Comput. Sci. 2014, 9, 211–407.

- Sweeney, L. k-Anonymity: A Model for Protecting Privacy. Int. J. Uncertain. Fuzziness Knowl. Based Syst. 2002, 10, 557–570.

- Gunning, D.; Aha, D. DARPA’s Explainable Artificial Intelligence (XAI) Program. AI Mag. 2019, 40, 44–58.

- Lipton, Z.C. The Mythos of Model Interpretability. Commun. ACM 2018, 61, 36–43.

- IEEE. White Paper–AUTONOMOUS DRIVING ARCHITECTURE (ADA): Enabling Intelligent, Automated, and Connected Vehicles and Transportation; IEEE Standards Association: Piscataway, NJ, USA, 2025.

- Gerla, M.; Lee, E.K.; Pau, G.; Lee, U. Internet of Vehicles: From Intelligent Grid to Autonomous Cars and Vehicular Clouds. In Proceedings of the 2014 IEEE World Forum on Internet of Things (WF-IoT), Seoul, Republic of Korea, 6–8 March 2014; pp. 241–246.

- Yeong, D.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140.

- Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors 2020, 20, 4220.

- Thrun, S.; Montemerlo, M.; Dahlkamp, H.; Stavens, D.; Aron, A.; Diebel, J.; Fong, P.; Gale, J.; Halpenny, M.; Hoffmann, G.; et al. Stanley: The Robot that Won the DARPA Grand Challenge. J. Field Robot. 2006, 23, 661–692.

- Xu, H.; Chen, J.; Meng, S.; Wang, Y.; Chau, L.-P. A survey on occupancy perception for autonomous driving: The information fusion perspective. Inf. Fusion 2025, 114, 102671.

- Alalewi, A.; Dayoub, I.; Cherkaoui, S. On 5G-V2X Use Cases and Enabling Technologies: A Comprehensive Survey. IEEE Access 2021, 9, 107710–107737.

- Hartenstein, H.; Laberteaux, K.P. A Tutorial Survey on Vehicular Ad Hoc Networks. IEEE Commun. Mag. 2008, 46, 164–171.

- Bahram, M.; Lawitzky, A.; Aeberhard, M.; Wollherr, D. A Game-Theoretic Approach to Replanning-Aware Interactive Scene Prediction and Planning. IEEE Trans. Veh. Technol. 2016, 65, 3981–3992.

- Ziegler, J.; Bender, P.; Schreiber, M.; Lategahn, H.; Strauss, T.; Stiller, C.; Dang, T.; Franke, U.; Appenrodt, N.; Keller, C.G.; et al. Making Bertha Drive—An Autonomous Journey on a Historic Route. IEEE Intell. Transp. Syst. Mag. 2014, 6, 8–20.

- Hewage, U.H.W.A.; Sinha, R.; Naeem, M.A. Privacy-preserving data (stream) mining techniques and their impact on data mining accuracy: A systematic literature review. Artif. Intell. Rev. 2023, 56, 10427–10464.

- Hood, C.; Heald, D. (Eds.) Transparency: The Key to Better Governance? Online edn, British Academy Scholarship Online, 31 January 2012; Oxford University Press for The British Academy: Oxford, UK, 2006.

- Baimyrzaeva, M.; Kose, H.O. The Role of Supreme Audit Institutions in Improving Citizen Participation in Governance. Int. Public Manag. Rev. 2014, 15, 77–90.

- Boyte, H.C. Everyday Politics: Reconnecting Citizens and Public Life; University of Pennsylvania Press: Philadelphia, PA, USA, 2004.

- Rao, I.S.; Mat Kiah, M.L.; Hameed, M.M.; Memon, Z.A. Scalability of blockchain: A comprehensive review and future research direction. Clust. Comput. 2024, 27, 5547–5570.

- Javaid, M.; Haleem, A.; Singh, R.P.; Suman, R.; Khan, S. A review of Blockchain Technology applications for financial services. BenchCouncil Trans. Benchmarks Stand. Eval. 2022, 2, 100073.

- Alzoubi, Y.I.; Mishra, A. Green blockchain—A move towards sustainability. J. Clean. Prod. 2023, 430, 139541.

- Haque, E.U.; Shah, A.; Iqbal, J.; Ullah, S.S.; Alroobaea, R.; Hussain, S. A scalable blockchain based framework for efficient IoT data management using lightweight consensus. Sci. Rep. 2024, 14, 7841.

- Sabry, N.; Shabana, B.; Handosa, M.; Rashad, M.Z. Adapting blockchain’s proof-of-work mechanism for multiple traveling salesmen problem optimization. Sci. Rep. 2023, 13, 14676.

- Hossain, M.S.; Muhammad, G.; Chilamkurti, N.K. Blockchain Empowered Asynchronous Federated Learning for Secure Data Sharing in Internet of Vehicles. IEEE Internet Things J. 2020, 7, 2345–2355.

- Li, Y.; Yang, S.; Zhang, Y. A Systematic Survey of Control Techniques and Applications in Connected and Automated Vehicles. IEEE Trans. Veh. Technol. 2022, 71, 9405–9424.

- Gonzalez, D.; Perez, J.; Milanés, V.; Nashashibi, F. A Survey of Motion Planning and Control Techniques for Self-Driving Urban Vehicles. IEEE Trans. Intell. Transp. Syst. 2016, 17, 1135–1145.

- Zhao, J.; Liang, B.; Chen, Q. The key technology toward the self-driving car. Int. J. Intell. Unmanned Syst. 2018, 6, 2–20.

- Weinland, D.; Ronfard, R.; Boyer, E. A Survey of Vision-Based Methods for Action Representation, Segmentation, and Recognition. Comput. Vis. Image Underst. 2011, 115, 224–241.

- Shin, C.; Farag, E.; Ryu, H.; Zhou, M.; Kim, Y. Vehicle-to-Everything (V2X) Evolution From 4G to 5G in 3GPP: Focusing on Resource Allocation Aspects. IEEE Access 2023, 11, 18689–18703.

- Huszár, V.D.; Adhikarla, V.K.; Négyesi, I.; Krasznay, C. Toward Fast and Accurate Violence Detection for Automated Video Surveillance Applications. IEEE Access 2023, 11, 18772–18793.

- Sodemann, A.; Ross, M.P.; Borghetti, B.J. A Review of Anomaly Detection in Automated Surveillance. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 2012, 42, 1257–1272.

- Weber, E.; Marzo, N.; Papadopoulos, D.P.; Biswas, A.; Lapedriza, A.; Ofli, F.; Imran, M.; Torralba, A. Detecting Natural Disasters, Damage, and Incidents in the Wild. In Computer Vision–ECCV 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 331–350.

- Alam, F.; Alam, T.; Hasan, M.A.; Hasnat, A.; Imran, M.; Ofli, F. MEDIC: A multi-task learning dataset for disaster image classification. Neural Comput. Appl. 2023, 35, 2609–2632.

- Cao, L. AI and data science for smart emergency, crisis and disaster resilience. Int. J. Data Sci. Anal. 2023, 15, 231–246.

- Jung, M.; Dorner, M.; Weinhardt, M. The impact of artificial intelligence along the insurance value chain and on the insurability of risks. Geneva Pap. Risk Insur. Issues Pract. 2020, 45, 474–504.

- Bibri, S.E. Data-Driven Smart Sustainable Cities of the Future: Urban Computing and Intelligence for Strategic, Short-Term, and Joined-Up Planning. Comput. Urban Sci. 2021, 1, 8.

- Rawal, B.S.; Curry, P.J. Challenges and opportunities on the horizon of post-quantum cryptography. APL Quantum 2024, 1, 026110.

- Lin, Z.; Wang, D.; Cao, C.; Xie, H.; Zhou, T.; Cao, C. GSA-KAN: A hybrid model for short-term traffic forecasting. Mathematics 2025, 13, 1158.

- The EU Artificial Intelligence Act. Up-to-Date Developments and Analyses of the EU AI Act. Available online: https://artificialintelligenceact.eu/ (accessed on 7 August 2025).

- NIST. AI Risk Management Framework. National Institute of Standards and Technology (NIST). Available online: https://www.nist.gov/itl/ai-risk-management-framework (accessed on 7 August 2025).

- Fujii, Y. Lessons from the Roman Empire: ‘Bread and Circuses’ as a Model for Democracy in the AGI Age. AI Soc. 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).