1. Introduction

Cancer is one of the leading causes of death in the world [1]. Every year, tens of millions of people are diagnosed with cancer and more than half of these patients die. In most cases, cancers are detected at an advanced stage, making treatment more difficult and very costly. Decades of research have focused on obtaining an accurate early diagnosis of cancer so that doctors can find the best treatment at the right time. When cancer care is delayed or inaccessible, the chances of survival are lower, the problems associated with treatment are greater, and the cost of care becomes higher.

Early diagnosis is the key to effective treatment and can improve cancer outcomes by providing care at the earliest possible stage, which makes it an important public health strategy [2]. However, the cancer diagnosis process is complicated and requires a lot of time and human effort. Therefore, it is very important to provide efficient analysis tools that can extract relevant information from medical images to speed up diagnosis and make it safer and more reliable.

In this context, artificial intelligence (AI) is essential for optimizing the diagnosis process [3,4,5], especially given recent advances in deep learning. AI is now widely applied to all aspects of medical image analysis [6,7,8], including oncology [9,10,11,12], making high-precision diagnosis possible. It has proven to be one of the best ways to analyze and make predictions based on large imaging data sets. The analysis of medical images is a critical task that must address several challenges. First, in the medical field in general and in oncology in particular, the highest possible accuracy is required, since human lives are involved [13]. Second, the images come from different modalities (MRI, X-ray, etc.) and can contain several classes depending on the nature of the pathologies treated. Finally, the data sets are relatively small and insufficient to efficiently train resource-intensive deep learning models [14]; this is usually addressed by generating synthetic images [15], which is not optimal in an inter-modality context.

In medical image analysis, classification and segmentation tasks are extremely important: they provide a better understanding of cancer and help define the most effective treatment [16]. Both techniques are useful for extracting features from, analyzing, and interpreting medical images. They are used to find organs, tumors, and other anatomical structures, with the aim of acquiring quantitative information useful for decision making in diagnosis, surgery, or clinical studies. Many of the indicators used to assess cancer risk and severity are derived from tumor segmentation and grading.

Deep learning techniques, and specifically convolutional neural network (CNN) models, are extremely promising in such cases. These models have achieved great success in many computer vision applications such as medical image classification [17] and segmentation [18]. Unfortunately, most of the proposed deep learning approaches handle these two tasks independently despite their interconnectedness, which requires more effort, computational resources, and execution time.

Multi-task learning (MTL) [19] is a better-performing solution to the resource problems associated with deep learning models. It consists of introducing a related auxiliary task into the network so that both tasks are learned simultaneously. This auxiliary task not only brings additional data but also helps the network generalize better and learn a more powerful representation of the data. The idea of multi-task learning is inspired by the way humans use knowledge gained from related tasks when learning a target task in order to improve their performance on it.

Multi-task learning enables simultaneous training of classification and segmentation tasks within a single model by sharing internal feature representations across network layers. This approach enhances computational efficiency, reduces resource consumption, and improves predictive accuracy. It has demonstrated strong performance across various medical domains, including neurodegenerative disease analysis [20,21], oncology [22,23,24], and COVID-19 diagnostics [25,26,27]. It is also increasingly used for unstructured medical texts [28,29,30], enabling models to simultaneously extract clinical entities, classify outcomes, and detect relationships within reports or notes.

However, the proposed solutions are mainly single-task models, and they suffer from data sparsity, which can negatively impact performance. Moreover, although the proposed multi-task models benefit from the advantages of MTL, they have mostly been tested and validated on a single dataset, which is not sufficient to establish that the approach generalizes to the different medical images encountered in oncology. In this context, the objective of this paper is to implement a deep learning-based solution for the classification and segmentation of different types of cancer from medical images of several modalities.

To meet this objective, we built two multi-task CNN models that perform the classification and segmentation tasks. Both models are based on the UNet architecture [31], with its encoder replaced by pretrained models (VGG16, MobileNetV2). The multi-task models were trained and evaluated on four different datasets, and their performance was compared to that of single-task models, namely Attention-based UNet and Mask-RCNN for segmentation, and VGG16 and MobileNetV2 for classification. The results show very encouraging performance of the multi-task solution compared to the single-task models.

The remainder of this paper is organized as follows: in Section 2, we present the technical background; in Section 3, we summarize the related works. Section 4 presents our proposed approach and Section 5 the experimental setup. Finally, the last section discusses the results.

2. Background

2.1. Transfer Learning with Convolutional Neural Networks

Convolutional neural networks (CNNs) are increasingly applied to image classification and segmentation as deep learning gains traction, greatly boosting the accuracy of both tasks. A convolutional neural network is a type of acyclic (feed-forward) artificial neural network in which the connection pattern between neurons is inspired by the visual cortex of animals. The neurons in this brain region are arranged so that they respond to overlapping regions that tile the visual field. Similarly, the first layers of a CNN learn filters that detect basic features such as edges and corners. These first-layer features are not specific to a particular dataset or task; they are general across many datasets and tasks.

Transfer learning involves training a network on a base dataset and task, and then transferring the learned features to a target network that will be trained on a target dataset and task. Because the extracted features are general and independent of the base and target tasks, transfer learning allows us to learn robust features, reduce the number of trainable parameters, help the model converge much faster, and achieve higher performance than a model trained from scratch.

2.1.1. VGG16

The pretrained VGG16 convolutional neural network model [32] achieved top results in the ILSVRC competition on the ImageNet dataset, which contains over a million images; the model can classify images into 1000 object categories [33]. Due to its simplicity and high performance, VGG16 is considered one of the excellent vision model architectures.

VGG16 is based on sixteen weight layers. By default, it takes 224 × 224 pixel images with 3 RGB channels as input. Its architecture consists of a series of convolution layers with 3 × 3 filters and stride 1, and max-pooling layers with 2 × 2 filters and stride 2.
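To make this layer arithmetic concrete, the following minimal sketch traces the spatial size of a 224 × 224 input through the convolution and pooling stages. It assumes zero padding of 1 pixel for the 3 × 3 convolutions and the standard VGG16 layout of five blocks with 2, 2, 3, 3, and 3 convolutions; the function names are illustrative only.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Spatial size after a convolution (assumed padding of 1 pixel)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial size after a max-pooling layer without padding."""
    return (size - kernel) // stride + 1


def vgg16_feature_sizes(input_size=224, convs_per_block=(2, 2, 3, 3, 3)):
    """Return the spatial size after each of the five convolutional blocks."""
    sizes = []
    size = input_size
    for n_convs in convs_per_block:
        for _ in range(n_convs):
            size = conv_out(size)  # 3x3, stride 1, padding 1 keeps the size
        size = pool_out(size)      # 2x2, stride 2 halves the size
        sizes.append(size)
    return sizes


print(vgg16_feature_sizes())  # [112, 56, 28, 14, 7]
```

Under these assumptions, each block halves the resolution, so the encoder ends with a 7 × 7 feature map for the default input size.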

2.1.2. MobileNetV2

MobileNet is a lightweight deep neural network proposed by Google, based on the decomposition of the convolution kernel. It effectively reduces the number of network parameters and takes latency into account, while retaining excellent robustness.

The second version of MobileNet (MobileNetV2) [34] is a simplified architecture that uses depthwise separable convolutions to build deep yet lightweight convolutional neural networks, providing an efficient model for classification applications. It uses lightweight depthwise convolutions to filter features in the intermediate expansion layer.

It has also improved the state-of-the-art performance of mobile models on multiple tasks and benchmarks, such as classification and object detection, as well as across a spectrum of different model sizes.
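The parameter saving that comes from decomposing the convolution kernel can be illustrated with a short calculation. The sketch below compares the weight count of a standard convolution with that of a depthwise convolution followed by a 1 × 1 pointwise convolution, ignoring biases; the channel counts (32 in, 64 out) are arbitrary illustrative values, not taken from MobileNetV2.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights of a standard convolution: one k x k x c_in filter per output channel."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights of a depthwise (k x k per input channel) plus pointwise (1 x 1) convolution."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


standard = standard_conv_params(3, 32, 64)
separable = depthwise_separable_params(3, 32, 64)
print(standard, separable)  # 18432 2336
```

For this illustrative layer, the separable form needs roughly one eighth of the weights of the standard convolution.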

2.2. Multi Task Learning

Multi-task learning (MTL) [19,35] is a machine learning paradigm whose goal is to take advantage of the useful information contained in several related tasks in order to improve the generalization performance of all the tasks. MTL can learn robust representations shared between related tasks. These shared representations increase data efficiency, which leads to better performance and mitigates the risk of overfitting.

When data are scarce, the number of labeled examples in each task is insufficient to train an accurate model. MTL uses the data from the different tasks to obtain a more accurate result for each task. In addition, having a single shared model instead of independent models per task reduces storage space and alleviates two well-known weaknesses of deep learning: data requirements and compute demand.

Multi-task learning is commonly accomplished in deep learning using either hard or soft parameter sharing of hidden layers. Hard parameter sharing is the most commonly used approach for MTL with neural networks. It is usually applied by sharing the hidden layers among all tasks while keeping several task-specific output layers, which greatly reduces the risk of overfitting. In soft parameter sharing, each task has its own model with its own parameters; the distance between the model parameters is then regularized to encourage the parameters to be similar.
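As a hedged illustration of soft parameter sharing, the sketch below computes a penalty that grows with the distance between the parameters of two task-specific models. The squared L2 distance and the `strength` coefficient are assumptions chosen for simplicity; other distance measures are possible, and the function name is hypothetical.

```python
def soft_sharing_penalty(params_a, params_b, strength=0.01):
    """Scaled squared L2 distance between two tasks' parameter vectors."""
    if len(params_a) != len(params_b):
        raise ValueError("both tasks must expose the same number of parameters")
    return strength * sum((a - b) ** 2 for a, b in zip(params_a, params_b))


# Hypothetical parameter vectors for two task-specific models.
task_a = [1.0, 2.0, -0.5]
task_b = [1.0, 4.0, -0.5]
print(soft_sharing_penalty(task_a, task_b, strength=0.5))  # 2.0
```

Such a penalty would be added to the sum of the task losses during training, so that the two models are pulled toward similar parameters without being forced to share them.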

3. Related Works

Several architectures based on deep learning have been proposed for the classification and segmentation of medical images.

In [25], Amyar et al. presented a multi-task deep learning model to jointly identify whether the patient has COVID-19 and segment the COVID-19 lesion from CT images. The architecture is composed of an encoder and two decoders for reconstruction and segmentation, and a multilayer perceptron for classification. Also, in [36], Aram et al. presented a model that combines instance segmentation, a long short-term memory (LSTM) network, and an attention mechanism to predict COVID-19 and segment CT images. The model extracts a sequence of regions of interest that contain relevant class information (COVID-19, Common Pneumonia, Control) and applies two LSTMs with an attention mechanism to this sequence to extract the features relevant for determining the class.

Thi et al. [37] proposed a multi-task learning scheme that combines segmentation and classification for cancer diagnosis in mammography. The proposed architecture is based on a fully convolutional network (FCN) that allows efficient sharing of features. They concluded that joint training allows for better cooperation between tasks.

For skin lesion analysis, Yang et al. [38] proposed a multi-task deep neural network that solves the tasks of lesion segmentation and lesion classification.

Gu et al. [39] proposed an attention-based CNN (CA-Net). The attention mechanism is useful for improving the segmentation performance of CNNs because it focuses on the most relevant information in the feature maps while removing irrelevant parts. Compared to UNet, CA-Net significantly improved the mean Dice segmentation score from 87.77% to 92.08% for the skin lesion, from 84.79% to 87.08% for the placenta, and from 93.20% to 95.88% for the fetal brain.

Jain et al. [40] developed an efficient multi-task model for simultaneous segmentation and classification of dilated cardiomyopathy, using an encoder/decoder architecture with an attention mechanism. The model consists of three branches: the extraction path (encoder); the attention path, which highlights features that are advantageous for the classification task; and the recovery path, which allows the model to learn higher-level intermediate representations. The experimental results show that the multi-task network achieved an accuracy of 97.63% and an AUC of 98.32%. By comparing their model with other classification and segmentation techniques, they showed that multi-task segmentation and classification performs better than single-task segmentation or classification.

Foo et al. [41] proposed a multi-task deep learning system called MTUnet for lesion segmentation and classification of diabetic retinopathy (DR). The image of the diabetic retina can be classified into different severity levels: 0 (no apparent DR), 1 (mild DR), 2 (moderate DR), 3 (severe DR), and 4 (proliferative DR). This system is an encoder-decoder network based on the UNet architecture, in which the encoder is replaced with a classical VGG16 network to produce the DR classification and the decoder performs lesion segmentation.

Zhou et al. [24] aimed to overcome the shortcomings of the popular cascading model strategy, which can lead to undesirable system complexity due to its multiple separate models. As a solution, they proposed to adopt multi-task learning to integrate multiple tasks into a single model (OM-Net). They decomposed the multi-class segmentation of brain tumors into three distinct but interconnected tasks: (1) segmentation to detect the complete tumor, (2) refined segmentation of the complete tumor and its intra-tumor classes, and (3) precise segmentation. Each task has its specific parameters, and the shared backbone model aims to learn the correlation between tasks. A cross-task guided attention (CGA) module is added to share prediction results between OM-Net tasks; it uses the prediction results generated by the previous task to produce more refined category-specific statistics.

To preserve contextual information, Gu et al. [42] suggested using a dilated convolution block at the network’s bottleneck. In this work, the authors present a context encoder network (called CE-Net) to capture more high-level information and preserve image integrity. CE-Net is designed for medical image segmentation and compared with UNet. It adopts pretrained ResNet blocks in the encoder part, and a new context extractor is added to extract context-related semantic information. The authors applied CE-Net to different 2D medical image segmentation tasks. The results show that the proposed method exceeds the performance of the original UNet and other methods for optic disc segmentation, lung segmentation, and cell contour segmentation.

Lv et al. [43] introduced BrainTumNet, a transformer-enhanced multi-task deep learning model using an advanced encoder–decoder architecture with adaptive masked Transformers and multi-scale feature fusion. This network achieved strong performance, with an IoU of 0.921 and a DSC of 0.91 for tumor segmentation, alongside a classification accuracy of 93.4%, evaluated across both internal and external datasets.

4. Multi Task Unet Model Description

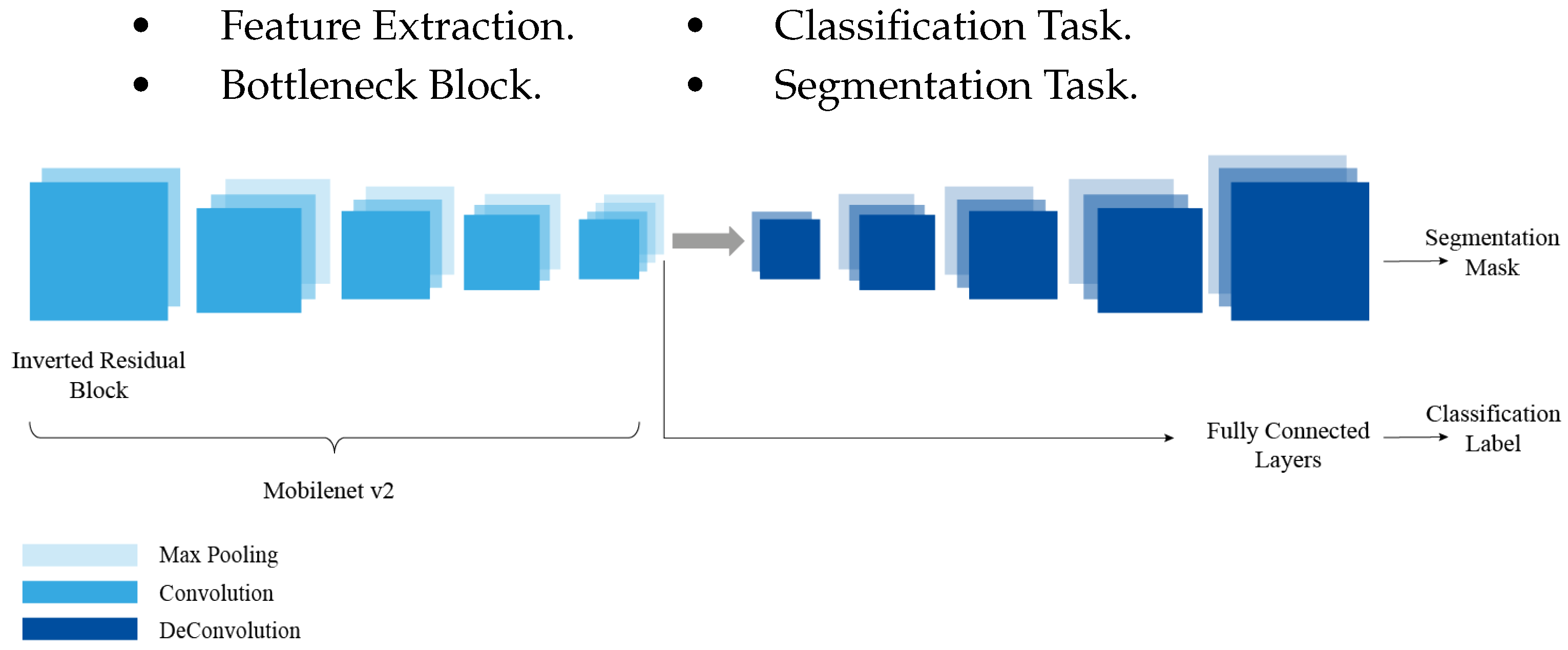

4.1. Multi-Task Model Architecture

We use a multi-task architecture that combines transfer learning and multi-task learning [41], in which the encoder block is replaced by the convolutional layers of the VGG16 (Figure 1) and MobileNetV2 (Figure 2) networks, pretrained on the ImageNet dataset. The use of transfer learning allows robust features to be learned and reduces the number of trainable parameters. It also helps the model converge much faster and achieve higher performance than a model trained from scratch.

The multi-task network model allows the different components to share the features detected in the first layers. The network is composed of four blocks, trained using an annotated dataset, for the analysis of data sets on cancer pathologies; these blocks are described below.

Figure 2.

Multi-task UNet Architecture with MobileNetV2 Encoder.


The features learned by the multi-task model can be used for the prediction of the two tasks, i.e., the classification and segmentation of the cancer cells in the dataset. The multi-task approach is an encoder-decoder network architecture and is depicted schematically in Figure 1.

The encoder network is used to capture the context of the image. It is a traditional stack of convolution and max-pooling layers. During these convolution and subsampling operations, spatial information is lost: the network learns “what” is in the image but loses the “where” information. The encoder is thus similar to a traditional classification network; it follows the typical architecture of a CNN with the repeated application of convolutional and subsampling layers. With this multi-task architecture, we take advantage of this path to perform the classification task instead of training an independent CNN model: we use the encoder as a base and add fully connected layers that produce a label for the input image.

The bottleneck is the layer containing a compressed representation of the input data. At this level, two tasks are performed: classification and segmentation. The first task produces the classification label using the encoder path, while the second produces the segmentation mask following the decoder path. After going through the encoder path, we get an encoded version of the input image.

In semantic segmentation, information about the spatial dimensions of the image must be preserved, so the decoder builds the output from the compressed representation. The decoder is a stack of upsampling and convolution blocks that helps recover the lost spatial information. To do this, it uses “skip connections” between the encoder and the decoder: at each stage of the decoder, the output of the upsampling layer is concatenated with the corresponding feature maps of the encoder at the same level. These connections are useful for transferring information from the low-level layers of the encoder path to the high-level layers of the decoder path, as this information is needed to generate reconstructions with precise details.
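The effect of a skip connection on tensor shapes in one decoder stage can be traced with a small sketch. The shapes are written as (height, width, channels); the concrete sizes, the upsampling factor of 2, and the size-preserving convolution are illustrative assumptions rather than values reported for the models in this paper.

```python
def upsample(shape, factor=2):
    """Upsampling multiplies the spatial size and keeps the channel count."""
    height, width, channels = shape
    return (height * factor, width * factor, channels)


def concat_channels(decoder_shape, encoder_shape):
    """A skip connection concatenates feature maps along the channel axis."""
    if decoder_shape[:2] != encoder_shape[:2]:
        raise ValueError("spatial sizes must match for a skip connection")
    return (decoder_shape[0], decoder_shape[1], decoder_shape[2] + encoder_shape[2])


def decoder_stage(decoder_in, encoder_skip, out_channels):
    """Upsample, concatenate the encoder features, then convolve to out_channels."""
    merged = concat_channels(upsample(decoder_in), encoder_skip)
    # The convolution is assumed to preserve the spatial size.
    return (merged[0], merged[1], out_channels)


bottleneck = (14, 14, 512)     # hypothetical bottleneck output
encoder_skip = (28, 28, 512)   # hypothetical encoder feature map at the same level
print(decoder_stage(bottleneck, encoder_skip, out_channels=256))  # (28, 28, 256)
```

The concatenation step is what gives the decoder access to the higher-resolution encoder features at each level.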

4.2. Optimization and Evaluation Metrics

Multi-task learning is a multi-objective optimization problem. In this paper, the multi-task network uses two loss functions, one for the segmentation task and one for the classification task. More specifically, the segmentation task’s loss function affects the entire network (encoder and decoder), whereas the classification task uses a weighted categorical cross-entropy loss that only affects the encoder section. The classification loss incorporates sklearn’s balanced class weighting strategy, where weights are calculated from the inverse class frequency with the ’balanced’ method for each of the diagnostic categories, helping to overcome the challenge of class imbalance in the datasets used. The overall network loss function is a linear combination of the losses of the individual tasks.
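The balanced weighting heuristic can be sketched in a few lines. The function below reimplements, in plain Python, the formula that scikit-learn documents for its 'balanced' mode, namely n_samples / (n_classes × count of the class); the label list is a hypothetical toy example, not data from this study.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical imbalanced label list: 6 benign cases and 2 malignant cases.
labels = ["benign"] * 6 + ["malignant"] * 2
weights = balanced_class_weights(labels)
print(weights["malignant"])  # 2.0
```

The rarer class receives the larger weight, so its errors contribute more to the weighted cross-entropy.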

The first task is a multi-class classification problem: the prediction outputs a probability vector $P$ consisting of the predicted probabilities of all classes. Then, $P$ is compared to the grading ground truth $G$. The categorical cross-entropy loss is used, as shown in the following equation:

$$\mathcal{L}_{cls} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} G_{i,c}\,\log\left(P_{i,c}\right)$$

where $N$ is the number of examples in the training set and $C$ is the number of categories. The output $P_{i,c}$ may be regarded as the predicted probability that the $i$th observation belongs to class $c$, and the target $G_{i,c}$ can be interpreted as the true distribution.
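For illustration, the unweighted categorical cross-entropy averaged over examples can be computed as in the sketch below. One-hot targets, the clipping constant `eps`, and the example values are assumptions made for the example; class weights are omitted for brevity.

```python
import math


def categorical_cross_entropy(targets, probs, eps=1e-12):
    """Average over examples of -sum_c target[c] * log(prob[c])."""
    total = 0.0
    for target_row, prob_row in zip(targets, probs):
        for target, prob in zip(target_row, prob_row):
            # Clip the probability to avoid log(0).
            total -= target * math.log(max(prob, eps))
    return total / len(targets)


# Hypothetical one-hot targets and predicted probabilities for two examples.
targets = [[1, 0, 0], [0, 1, 0]]
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
loss = categorical_cross_entropy(targets, probs)
print(round(loss, 4))
```

Only the probability assigned to the true class of each example contributes to the sum when the targets are one-hot.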

The segmentation task outputs a prediction map in which each pixel contains a class label. Since this is a binary classification of whether the pixel is cancer or background, the loss function used is the binary cross-entropy, computed as the following average:

$$\mathcal{L}_{seg} = -\frac{1}{n}\sum_{i=1}^{n}\left[y_i \log\left(\hat{y}_i\right) + \left(1 - y_i\right)\log\left(1 - \hat{y}_i\right)\right]$$

The total loss is the average loss across all the examples, where $n$ is the number of examples, $y$ represents the true label, $y_i$ denotes a single element of that label, $\hat{y}$ represents the value predicted by the model, and $\hat{y}_i$ refers to a single element of that prediction.
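A minimal sketch of the binary cross-entropy between a ground-truth mask and a predicted probability map follows. Masks are represented as nested lists, the average is taken over all elements, and the clipping constant `eps` and the toy values are assumptions for the example.

```python
import math


def binary_cross_entropy(true_mask, pred_mask, eps=1e-7):
    """Average binary cross-entropy over all elements of two equally sized masks."""
    y_true = [px for row in true_mask for px in row]
    y_pred = [px for row in pred_mask for px in row]
    total = 0.0
    for target, prob in zip(y_true, y_pred):
        prob = min(max(prob, eps), 1.0 - eps)  # keep the logarithms finite
        total -= target * math.log(prob) + (1 - target) * math.log(1.0 - prob)
    return total / len(y_true)


# Hypothetical 1 x 2 mask: one lesion pixel and one background pixel.
bce = binary_cross_entropy([[1, 0]], [[0.9, 0.2]])
print(round(bce, 4))
```

Confident predictions on the wrong side of 0.5 are penalized most heavily, which is why clipping is needed when a probability reaches exactly 0 or 1.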

To enable joint optimization of both classification and segmentation tasks, we define the total loss $\mathcal{L}_{total}$ as a weighted sum of the individual task-specific losses:

$$\mathcal{L}_{total} = \lambda_{seg}\,\mathcal{L}_{seg} + \lambda_{cls}\,\mathcal{L}_{cls}$$

where $\mathcal{L}_{seg}$ denotes the segmentation loss, and $\mathcal{L}_{cls}$ represents the classification loss. The hyperparameters $\lambda_{seg}$ and $\lambda_{cls}$ control the relative contribution of each task to the total loss.

In our experiments, we empirically selected a segmentation-to-classification weighting of 5:1 ($\lambda_{seg} : \lambda_{cls} = 5 : 1$), which provided the best trade-off between segmentation precision and classification performance (see Section 5.3). This reflects the increased complexity and spatial nature of the segmentation task, which benefits from a stronger optimization signal.
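The weighted combination of the two task losses can be sketched as follows. The default weights encode the 5:1 segmentation-to-classification ratio; treating the weights as the absolute values 5 and 1 is an assumption, and the loss values passed in are hypothetical placeholders.

```python
def total_loss(seg_loss, cls_loss, seg_weight=5.0, cls_weight=1.0):
    """Linear combination of the segmentation and classification losses."""
    return seg_weight * seg_loss + cls_weight * cls_loss


# Hypothetical per-batch losses, combined under several weight ratios.
seg_loss, cls_loss = 0.2, 0.5
for seg_weight, cls_weight in [(1, 1), (5, 1), (1, 5)]:
    combined = total_loss(seg_loss, cls_loss, seg_weight, cls_weight)
    print(f"[{seg_weight}:{cls_weight}] total loss = {combined:.2f}")
```

Because the gradient of the total loss is the same weighted sum of the task gradients, a larger segmentation weight gives that branch a stronger optimization signal.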

To evaluate and compare the performance of the classification and segmentation tasks of the UNet multi-task model, we use two metrics:

4.2.1. Accuracy

Accuracy measures the ratio between the number of correct predictions of the classification model and the total number of predictions.

4.2.2. Dice Similarity Coefficient

The Dice similarity coefficient (DSC) is the most widely used metric for evaluating segmentation performance; it characterizes the agreement between the segmented pixels and the ground truth. The coefficient ranges from 0 (no overlap) to 1 (complete overlap) and is formulated as follows:

$$DSC(G, S) = \frac{2\,|G \cap S|}{|G| + |S|}$$

where $G$ is the ground truth, $S$ is the set of segmented pixels, $G \cap S$ represents the common elements between the sets $G$ and $S$, and $|\cdot|$ denotes the set cardinality.
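A small sketch of the Dice computation on binary masks is given below. Masks are nested lists of 0 and 1; returning 1.0 when both masks are empty is a convention assumed here, and the example masks are hypothetical.

```python
def dice_coefficient(ground_truth, segmentation):
    """Dice similarity between two binary masks of the same shape."""
    g = [px for row in ground_truth for px in row]
    s = [px for row in segmentation for px in row]
    intersection = sum(1 for a, b in zip(g, s) if a == 1 and b == 1)
    denominator = sum(g) + sum(s)
    if denominator == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / denominator


# Hypothetical 2 x 3 masks sharing two foreground pixels out of three each.
ground_truth = [[0, 1, 1], [0, 1, 0]]
segmentation = [[0, 1, 0], [0, 1, 1]]
print(round(dice_coefficient(ground_truth, segmentation), 4))  # 0.6667
```

In this example the intersection has 2 pixels and each mask has 3, giving 2 × 2 / (3 + 3).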

5. Experiments

We conduct a comparative study to assess the performance of the multi-task UNet models on both tasks, using various datasets that cover several medical imaging modalities, including MRI, X-ray, dermoscopy, and digital histopathology. The multi-task UNet model is used with different pretrained deep learning models (VGG16 and MobileNetV2).

5.1. Data Description

We propose a multi-task learning approach for the joint classification and segmentation of cancer-related medical images using a unified model. This framework requires datasets annotated for both tasks, necessitating additional preprocessing efforts to generate consistent labels and segmentation masks.

To evaluate the generalizability of the model, we curated and processed heterogeneous datasets varying in format, size, and origin, each corresponding to a distinct cancer type. Experiments were conducted on datasets covering skin lesions, brain tumors, and prostate cancer. Additionally, a dataset on pneumothorax was included to assess the applicability of the multi-task approach to non-cancerous pathologies, thereby highlighting the model’s versatility.

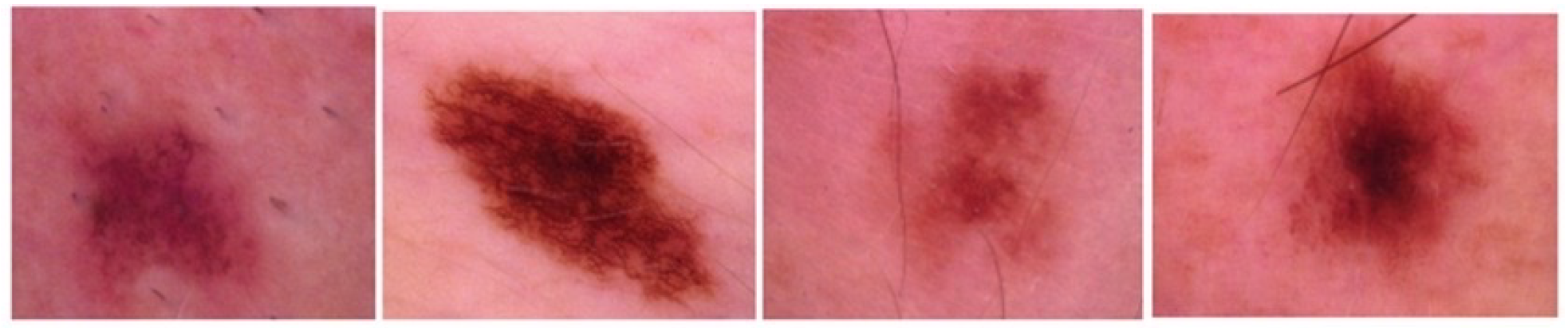

5.1.1. Skin Lesion: ISIC 2018 Dataset

The ISIC 2018 dataset [44] was released by the International Skin Imaging Collaboration (ISIC) as a large-scale dataset of dermoscopic images. Skin lesions are characterized by distinct spots, abnormal bumps, scars, blisters, or different discoloration of the skin on normal body areas. This dataset contains 2594 RGB images of skin lesions, each with a resolution of 2166 × 3188 pixels.

Figure 3 shows sample skin lesions from the ISIC 2018 dataset.

The lesions are classified into the following labels: actinic keratoses, basal cell carcinoma, benign keratosis, dermatofibroma, melanocytic nevi, vascular skin lesions, and melanoma.

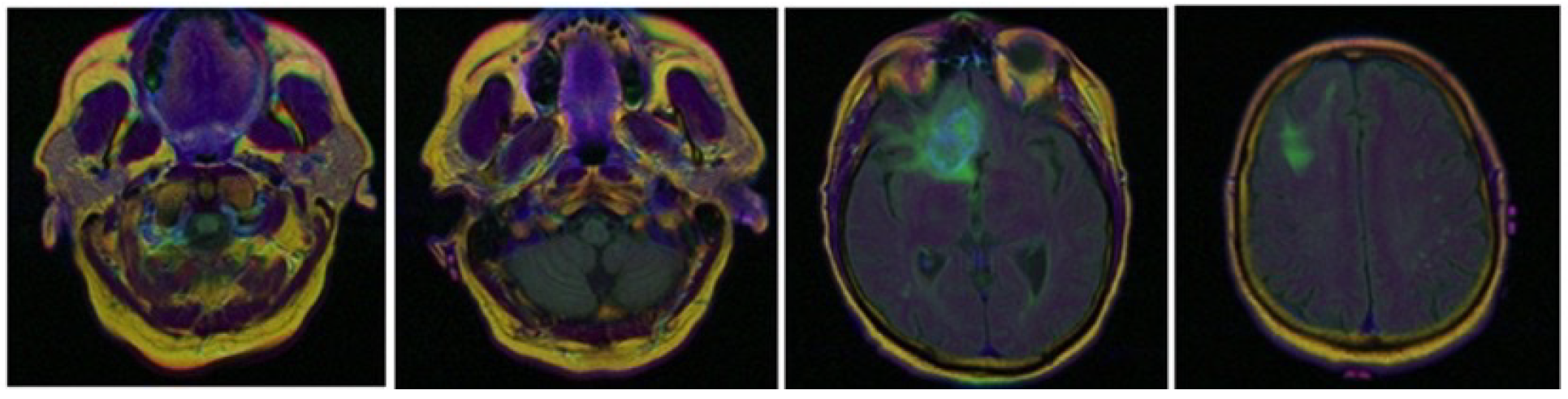

5.1.2. Brain Tumor: TCGA-LGG Dataset

Of all brain tumors, glioma is the most serious. According to World Health Organization (WHO) criteria, gliomas are classified into four grades, from grade I to grade IV, based on the malignancy of the tumor. Normally, grade III and IV gliomas are called high-grade gliomas (HGG) and grade I and II gliomas are called low-grade gliomas (LGG). LGGs can be classified into astrocytomas, oligodendrogliomas, and oligoastrocytomas according to the pathological type. In our work, we use LGG-type gliomas.

We use the Cancer Genome Atlas Low Grade Glioma (TCGA-LGG) dataset, which is part of a larger effort to create a research community focused on linking cancer phenotypes to genotypes by providing clinical images matched to Cancer Genome Atlas (TCGA) subjects (Figure 4).

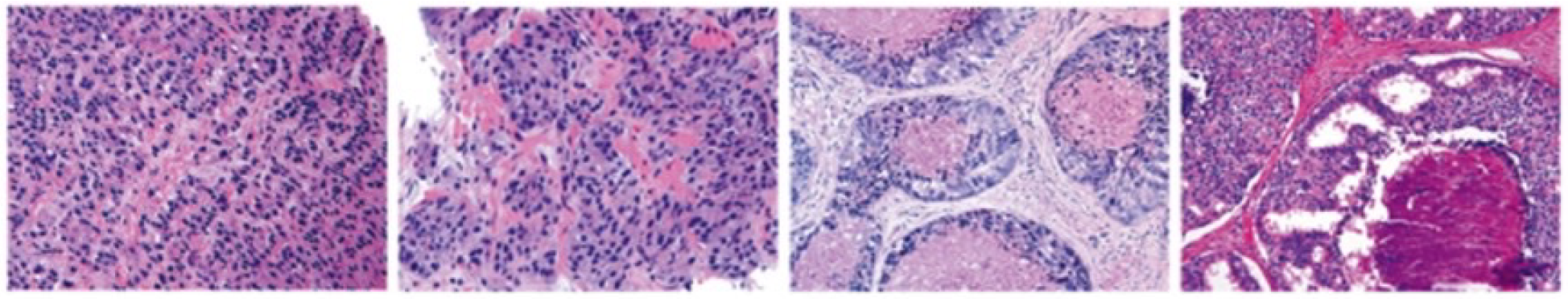

5.1.3. Prostate Cancer: PANDA Prostate Cancer Grade Assessment Dataset

The data used in this study is part of a Kaggle competition to detect prostate cancer [45]. The diagnosis is based on the grading of prostate tissue biopsies. These tissue samples are examined by a pathologist and scored according to the Gleason grading system. In this dataset, the training set consists of about 11,000 prostate biopsies, with slide-level labels and label masks. The samples were gathered and labeled by the Karolinska Institute and Radboud University Medical Center.

The grading process consists of finding and classifying cancer tissue into Gleason patterns based on the tumor’s architectural growth patterns. Once a biopsy has been assigned a Gleason score, the score is converted into an ISUP grade on a 1–5 scale.
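The conversion from a Gleason score to an ISUP grade can be expressed as a simple lookup. The table below follows the standard ISUP grade-group convention, which is assumed here because the mapping itself is not spelled out in this section; the function name is illustrative.

```python
# (primary pattern, secondary pattern) -> ISUP grade, standard convention (assumed).
GLEASON_TO_ISUP = {
    (3, 3): 1,
    (3, 4): 2,
    (4, 3): 3,
    (4, 4): 4, (3, 5): 4, (5, 3): 4,
    (4, 5): 5, (5, 4): 5, (5, 5): 5,
}


def isup_grade(primary, secondary):
    """Return the ISUP grade (1-5) for a Gleason score given as two patterns."""
    try:
        return GLEASON_TO_ISUP[(primary, secondary)]
    except KeyError:
        raise ValueError(f"unsupported Gleason score {primary}+{secondary}") from None


print(isup_grade(3, 4), isup_grade(4, 3))  # 2 3
```

Note that 3+4 and 4+3 have the same sum but map to different grades, because the primary pattern carries more weight.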

Figure 5 shows sample prostate cancer images from the PANDA dataset.

5.1.4. SIIM-ACR Pneumothorax Segmentation Dataset

Pneumothorax is usually diagnosed by a radiologist on a chest X-ray and can sometimes be very difficult to confirm. An accurate AI algorithm to detect and segment pneumothorax would be useful in many clinical scenarios.

The Society for Imaging Informatics in Medicine (SIIM) has organized a competition to assist in the detection of pneumothorax from radiological images. The Society is a leading healthcare organization for those interested in the current and future use of informatics in medical imaging. Its mission is to advance medical imaging informatics across the enterprise through education, research and innovation in a multidisciplinary community.

Figure 6 shows sample images from the SIIM-ACR Pneumothorax Segmentation dataset.

A general overview of the datasets is given in Table 1, and the distribution of the classes in the datasets is shown in Table 2.

5.2. Preprocessing and Environment

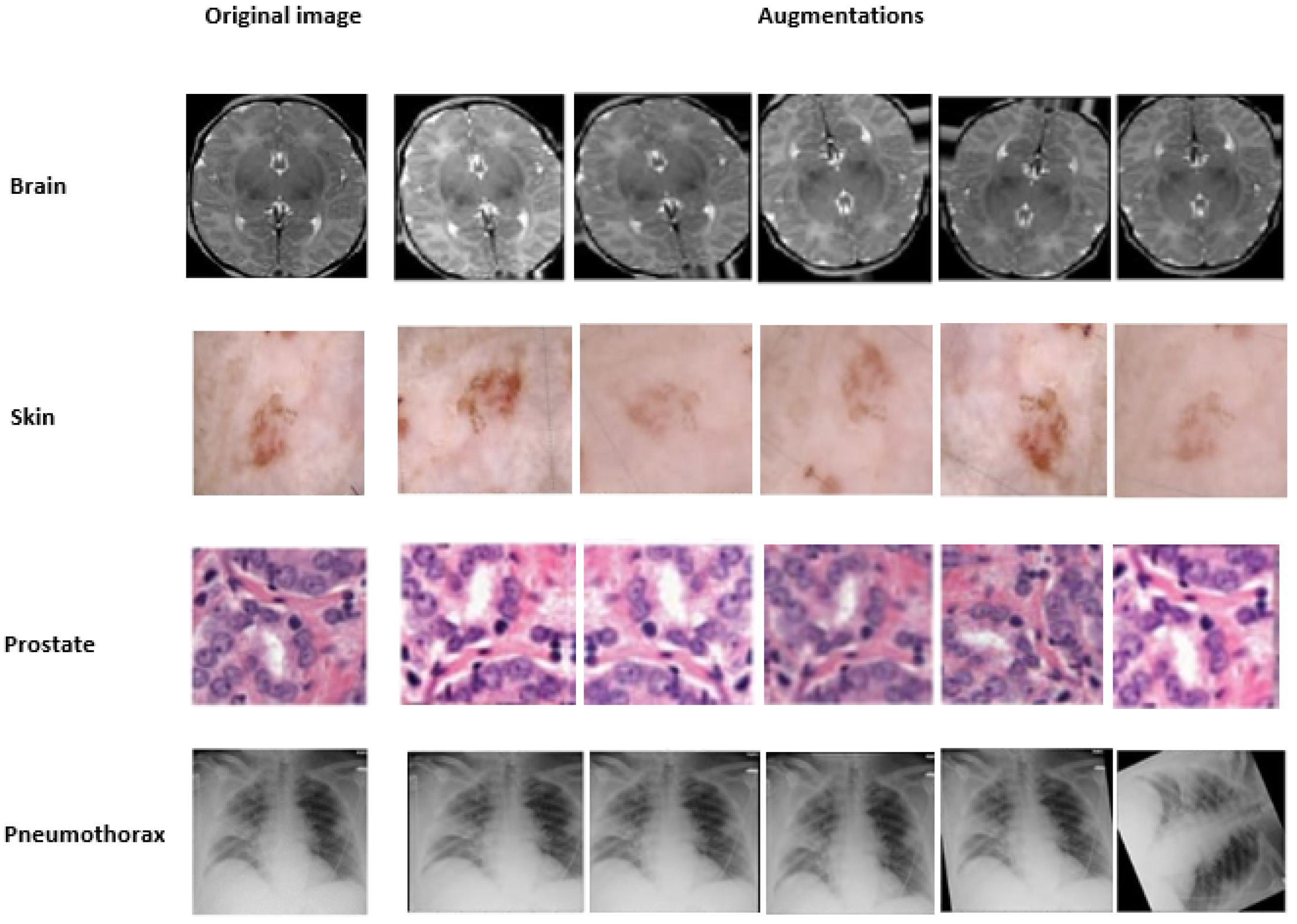

For efficient learning, deep artificial neural networks need a large corpus of training data, and collecting such data is often expensive and time-consuming. Data augmentation (DA) addresses this problem by artificially extending the training set with several transformations. It allows practitioners to significantly increase the diversity of the data available for model training without collecting new data. Therefore, in this work, rotation, flipping, and shearing were used to augment the samples during training, as shown in Figure 7.
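For a joint classification and segmentation setup, each geometric transformation has to be applied identically to the image and to its mask. The sketch below illustrates this with flips and a 90-degree rotation on nested lists; shearing and arbitrary rotation angles are omitted for brevity, and the helper names are hypothetical.

```python
def hflip(grid):
    """Mirror each row (horizontal flip)."""
    return [row[::-1] for row in grid]


def vflip(grid):
    """Reverse the row order (vertical flip)."""
    return [row[:] for row in grid[::-1]]


def rot90(grid):
    """Rotate the grid by 90 degrees clockwise."""
    return [list(column) for column in zip(*grid[::-1])]


def augment_pair(image, mask):
    """Apply each transform to the image and its mask so they stay aligned."""
    transforms = [hflip, vflip, rot90]
    return [(transform(image), transform(mask)) for transform in transforms]


# Hypothetical 2 x 2 image and its binary mask.
image = [[1, 2], [3, 4]]
mask = [[0, 1], [0, 0]]
for aug_image, aug_mask in augment_pair(image, mask):
    print(aug_image, aug_mask)
```

Keeping the image and mask in one call makes it harder to transform one without the other, which would silently corrupt the segmentation labels.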

Regarding the environment and the implementation of the approach, we used T4 GPUs from Google Colab’s cloud service to train our multi-task UNet architecture for classification and segmentation. The model was trained for 50 epochs with a batch size of 32, with early stopping enabled. We employed the Adam optimizer with its default learning rate of 0.001.
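The early stopping logic can be sketched independently of any framework. In the example below, the monitored quantity (validation loss), the patience value, and the loss sequence are assumptions made for illustration, since only the use of early stopping and the 50-epoch limit are stated above.

```python
class EarlyStopping:
    """Stop when the monitored loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.wait = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # improvement: remember it and reset the counter
            self.wait = 0
            return False
        self.wait += 1
        return self.wait >= self.patience


def epochs_run(val_losses, max_epochs=50, patience=5):
    """Return the number of epochs executed before stopping."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if stopper.should_stop(loss):
            return epoch
    return min(len(val_losses), max_epochs)


# Hypothetical validation losses: no improvement after the third epoch.
print(epochs_run([1.0, 0.8, 0.7, 0.75, 0.72, 0.71], patience=2))  # 5
```

With a patience of 2, training stops at the fifth epoch because epochs four and five both fail to improve on the best loss of 0.7.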

In the next section, we present the performance results for the classification and segmentation tasks, as well as the comparison with single-task approaches.

5.3. Ablation Study

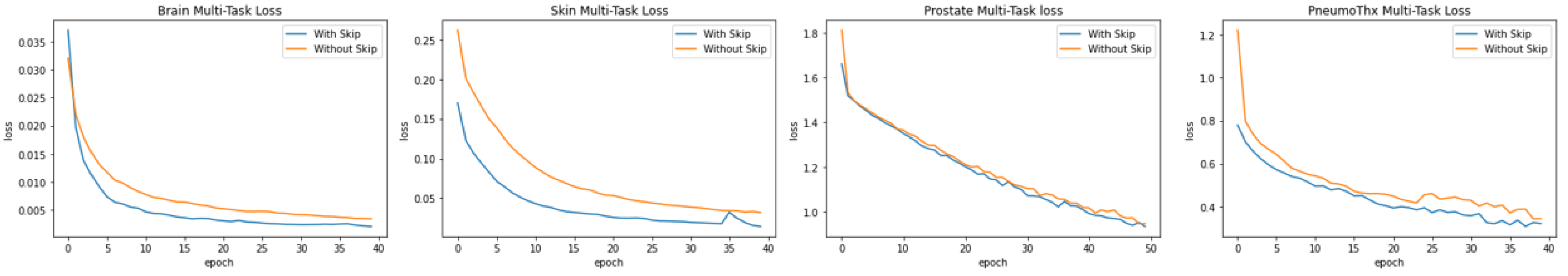

5.3.1. Skip Connections

As described in the multi-task model architecture section, our model benefits from the skip connections between the encoder and decoder layers (see Figure 8). They ensure a constant transfer of information, helping the model capture finer details used for the class prediction, which improves the average model loss in all four cases.

To validate our hypothesis, we performed an ablation study comparing model architectures with and without skip connections. The experimental results demonstrate that skip connections consistently boost performance in the four distinct disease cases. As evidenced by the performance metrics, this architectural enhancement not only improves model accuracy but also mitigates information loss, a key limitation observed in models lacking skip connections.

5.3.2. Task Weight Allocation

To investigate the impact of task prioritization in our multi-task setup, we ran a small experiment over 50 epochs with a reduced set of data, varying the segmentation-to-classification loss weights as follows: [1:1], [2:1], [5:1], [10:1], [1:2], [1:5], and [1:10], while keeping all other settings constant.

Notably, the best segmentation accuracy (0.9366) was obtained with a [5:1] weighting (Segmentation:Classification), which is the configuration used in our final proposed model. This setup allowed the segmentation branch to receive a stronger optimization signal while maintaining a reasonable classification accuracy (0.8021).

Increasing the segmentation weight beyond [5:1] (e.g., [10:1]) led to negligible segmentation improvement but worsened classification performance and increased the total loss. Similarly, increasing the classification weight had a destabilizing effect on segmentation. This experiment highlights the importance of carefully tuning task weight allocation and validates our design choice of giving a higher weight to segmentation, which is the more complex, pixel-level task in our problem formulation.

6. Results and Discussion

The specificity of medical data analysis implies the consideration of additional factors and must meet critical challenges to effectively achieve its main purpose. Despite having relatively small data sets, we were able to achieve good classification and segmentation results by exploiting the useful information contained in these two tasks for greater generalization with an effective image representation. This representation is the driving force behind the success of the multi-task model in outperforming single-task models.

Table 3 summarizes the main results of the different experiments. Both multi-task models outperformed the single-task Attention-UNet and Mask-RCNN models for segmentation and the single-task VGG16 and MobileNetV2 models for classification. For the classification task, the MobileNetV2-based multi-task model outperforms the VGG16-based one, with accuracies of 86%, 89%, and 88% on skin, brain, and prostate, respectively. Compared to the equivalent single-task models, the multi-task approach significantly improves accuracy, from 67% up to 90% for brain tumor classification, from 74% to 86% for skin lesion classification, and from 78% to 88% for prostate tumor classification.

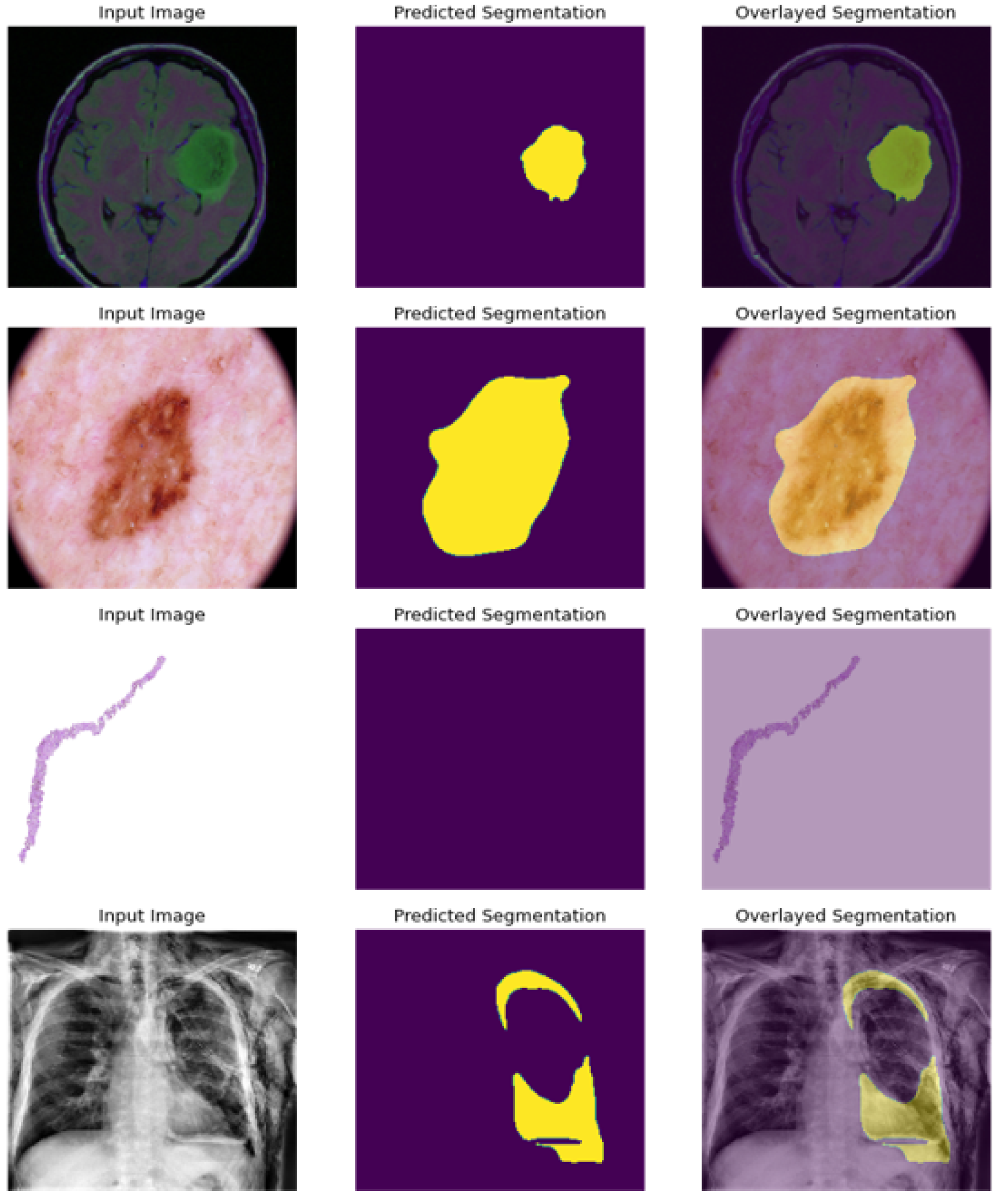

Regarding the classification task, we used the Gradient-weighted Class Activation Mapping (Grad-CAM) [46] method as a visual tool that exposes the model’s reasoning during classification by highlighting the regions that contribute to the probability that the image belongs to a predefined class. As the images in Figure 9 clearly illustrate, these attention maps show which parts of the brain, skin, prostate, or lung receive most of the model’s attention during classification.

For the segmentation task, the multi-task model produced the best image segmentation results on the three cancer datasets, with Dice coefficients of 95%, 98%, and 99%, respectively, against 80%, 96%, and 85% for the single-task Attention-UNet model. It also outperformed the Mask-RCNN model, which reached 94%, 95%, and 97% for skin, brain, and prostate cancer segmentation, respectively. The best Dice coefficients for the three datasets were obtained with the MobileNetV2-based multi-task model.

As shown above, the multi-task model improves on the performance of the single-task models for cancer tumor image classification and segmentation. To further validate our model and its generalization ability, we applied it to a different objective, the prediction of pneumothorax. Here too, the model improved both tasks: classification performance rose from 73% and 77% with the single-task classification models to 87%, and segmentation performance rose from 83% and 95% with the single-task segmentation models to 99% with the proposed solution. This confirms the good generalization performance of the multi-task model.

As presented in Figure 10, the model is able to precisely segment the diseased region in the scan image and produces an empty segmentation mask when the image is classified as non-cancerous, as in the case of the prostate image.

Unlike previous studies, where the proposed multi-task models are usually validated on a single cancer type, our solution proves effective in both tasks across different cancer types and different data set characteristics, such as the number of classes, image shapes and the sizes of the cancer pathologies.

In addition, the statistical validation of our proposed method through 5-fold cross-validation demonstrates robust and significant improvements in segmentation performance. The paired t-test analysis revealed a highly significant enhancement in Dice scores (0.995 ± 0.001 vs. 0.829 ± 0.034, p < 0.001), representing a substantial 16.6 percentage point improvement over the baseline approach. This large effect size, combined with the consistent performance across all folds (Dice scores ranging from 0.994 to 0.996), indicates that our method not only achieves superior segmentation quality but does so reliably across different data partitions. While accuracy metrics remained comparable between methods (99.4%), the dramatic improvement in Dice scores suggests our approach excels particularly in precise boundary delineation and overlap accuracy, which are critical for clinical applications requiring high segmentation fidelity.
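The paired t-test compares the two methods fold by fold: it takes the per-fold difference in Dice score and tests whether the mean difference is distinguishable from zero. The sketch below computes the test statistic with the standard library only. The per-fold values are made-up placeholders for illustration, not the scores obtained in this study, and the function name is hypothetical.

```python
import math
import statistics


def paired_t_statistic(scores_a, scores_b):
    """Return (t, degrees of freedom) for paired samples, e.g. per-fold scores."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two paired samples with at least two folds")

    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")

    # t = mean difference divided by its standard error.
    t_value = mean_diff / (sd_diff / math.sqrt(n))
    return t_value, n - 1


# Placeholder per-fold Dice scores (NOT the values reported in this paper).
proposed_folds = [0.90, 0.92, 0.91, 0.93, 0.89]
baseline_folds = [0.80, 0.85, 0.82, 0.84, 0.83]
t_value, dof = paired_t_statistic(proposed_folds, baseline_folds)
```

The p-value is then read from the t distribution with n - 1 degrees of freedom (4 for 5 folds); a statistics package such as SciPy provides this directly through `scipy.stats.ttest_rel`.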

7. Conclusions

In this study, we proposed an innovative multi-task deep learning framework for the simultaneous classification and segmentation of diverse cancer types from medical imaging data. The developed approach leverages the strength of multi-task learning, effectively enhancing model performance in scenarios characterized by limited labeled datasets and multiple imaging modalities, including MRI, X-ray, dermoscopic, and digital histopathology images.

Our results demonstrate that the multi-task architecture consistently outperforms conventional single-task methods across various cancer datasets, achieving classification accuracies ranging from 86% to 90% and segmentation Dice coefficients between 95% and 99%. Notably, employing pre-trained models such as VGG16 and MobileNetV2 significantly improved the model’s feature extraction capabilities and reduced computational demands, thereby facilitating efficient and robust predictions.

Moreover, the conducted ablation studies confirmed the effectiveness of critical design choices, such as the incorporation of skip connections and optimized task-specific loss weighting, which substantially contributed to performance improvements.

This research underscores the potential of multi-task learning paradigms in overcoming data scarcity issues and enhancing diagnostic precision. By integrating classification and segmentation tasks into a single unified model, our solution offers a scalable and generalizable approach, applicable across diverse clinical scenarios and imaging modalities.

Future work will explore further architectural enhancements, including attention mechanisms [47] and dilated convolutions [48], to refine spatial context modeling. Additionally, incorporating multimodal strategies [49] and multi-label classification capabilities [50] promises to capture more nuanced pathological information, ultimately supporting more comprehensive clinical decision-making.