Fully Digital Audio Haptic Maps for Individuals with Blindness

Abstract

1. Introduction

2. Materials and Methods

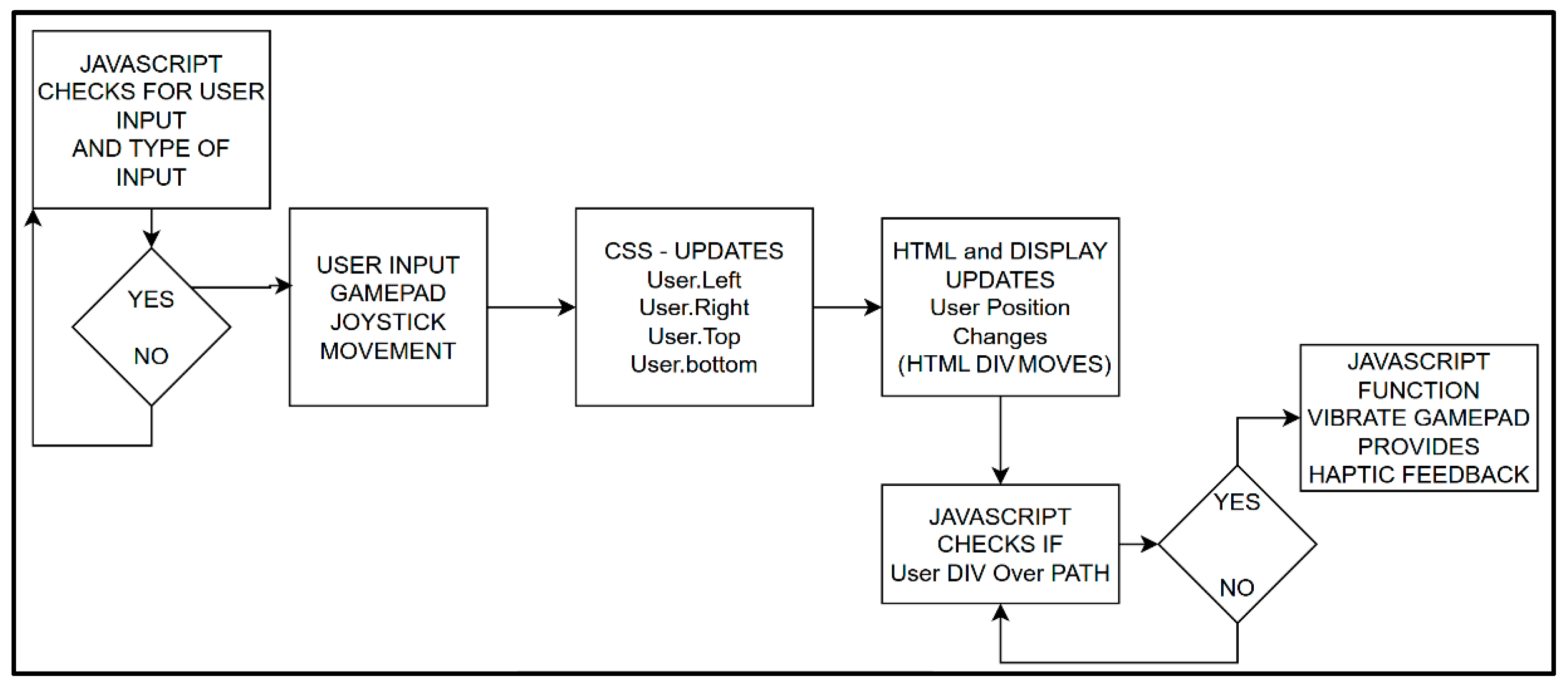

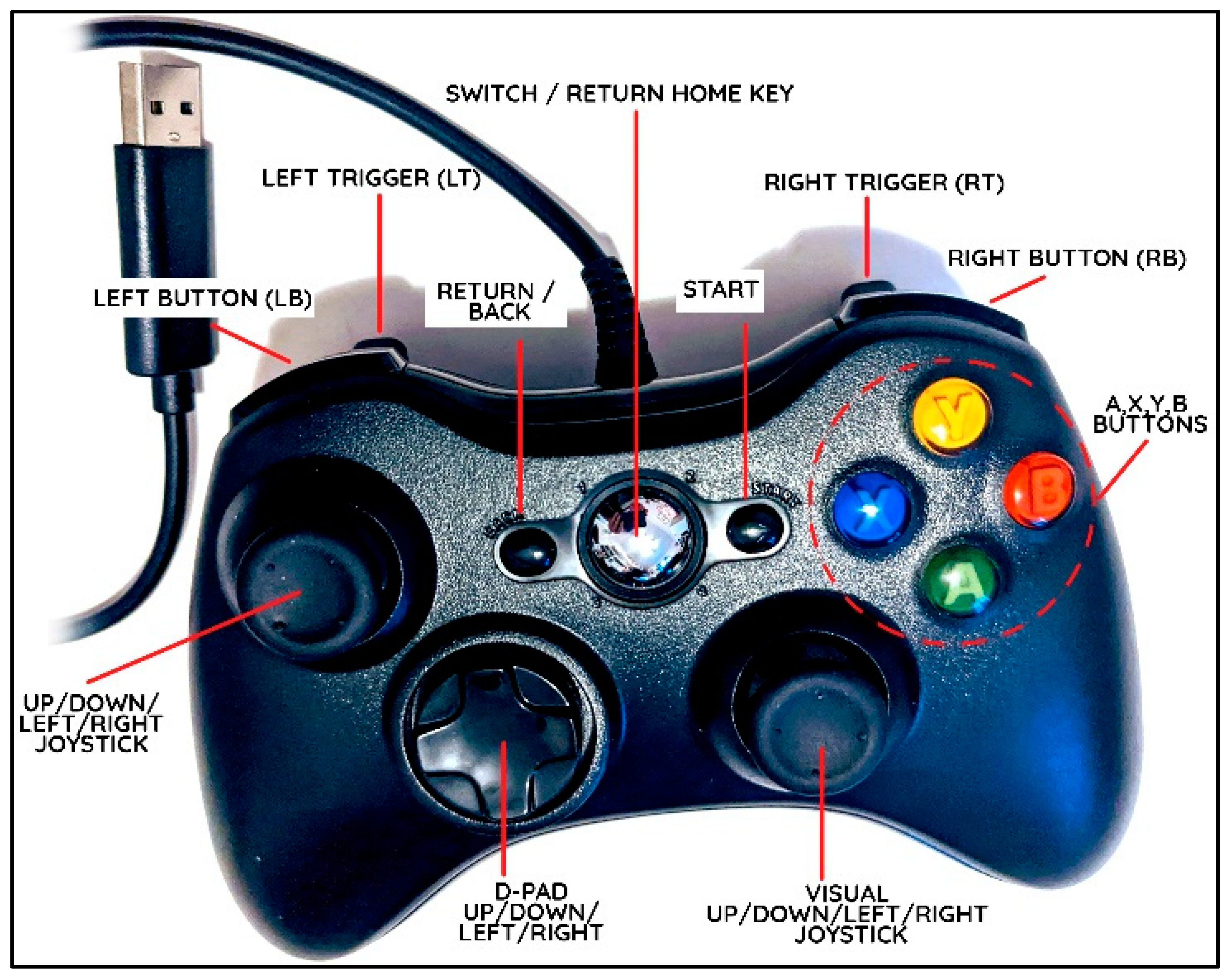

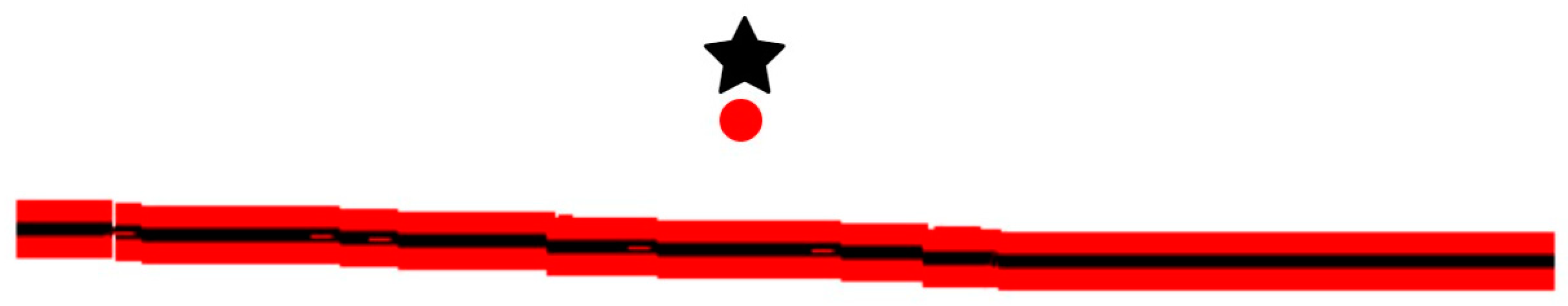

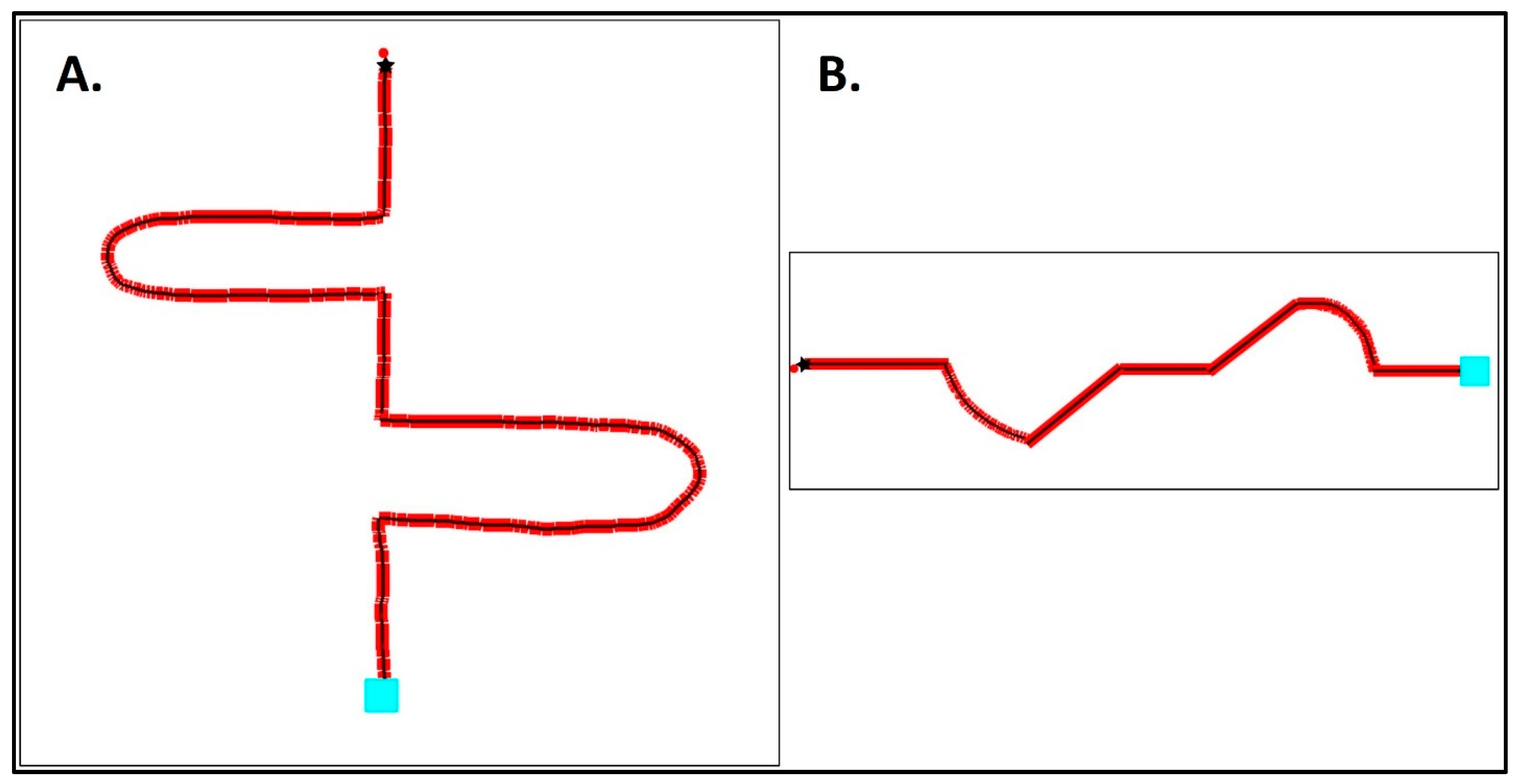

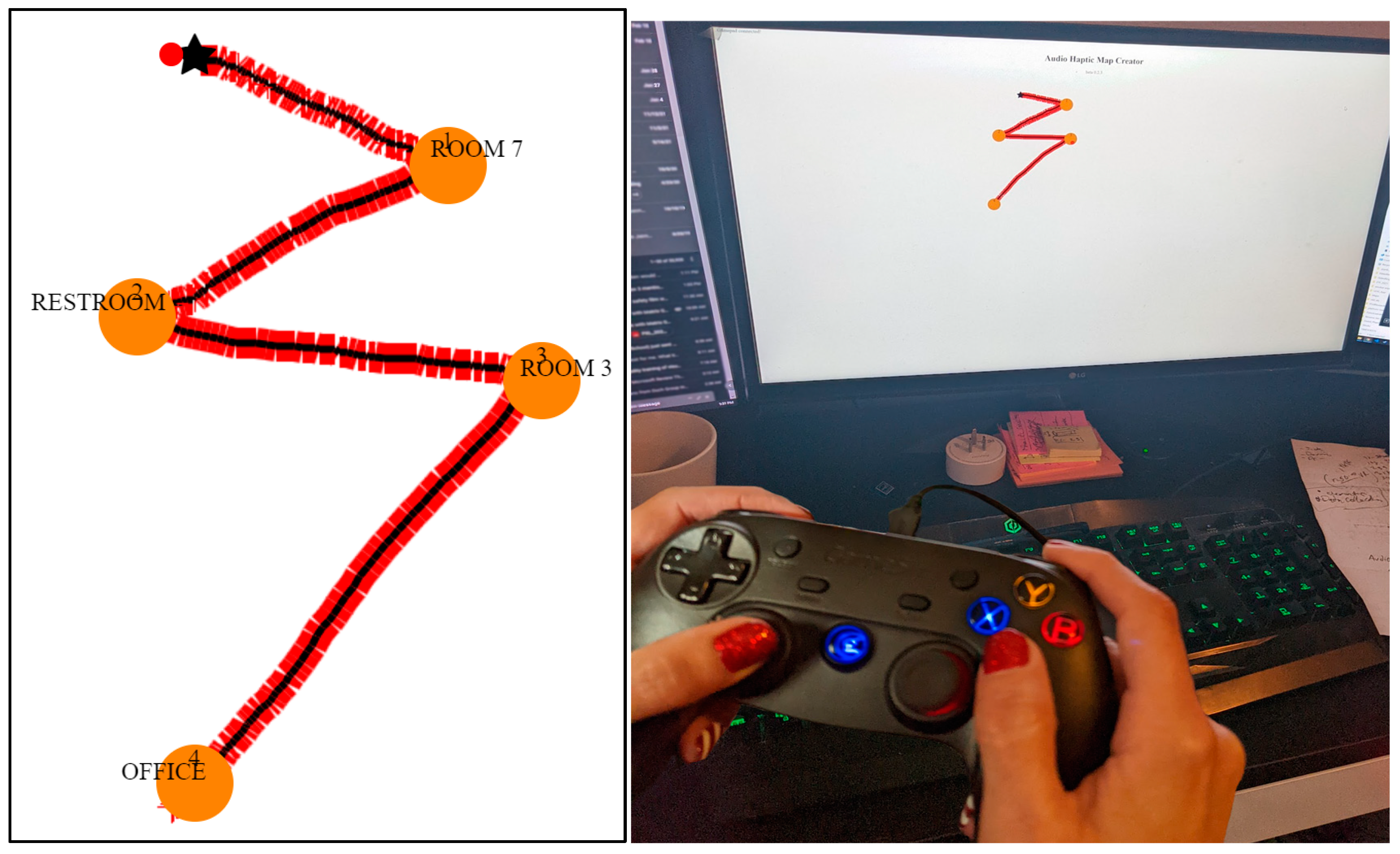

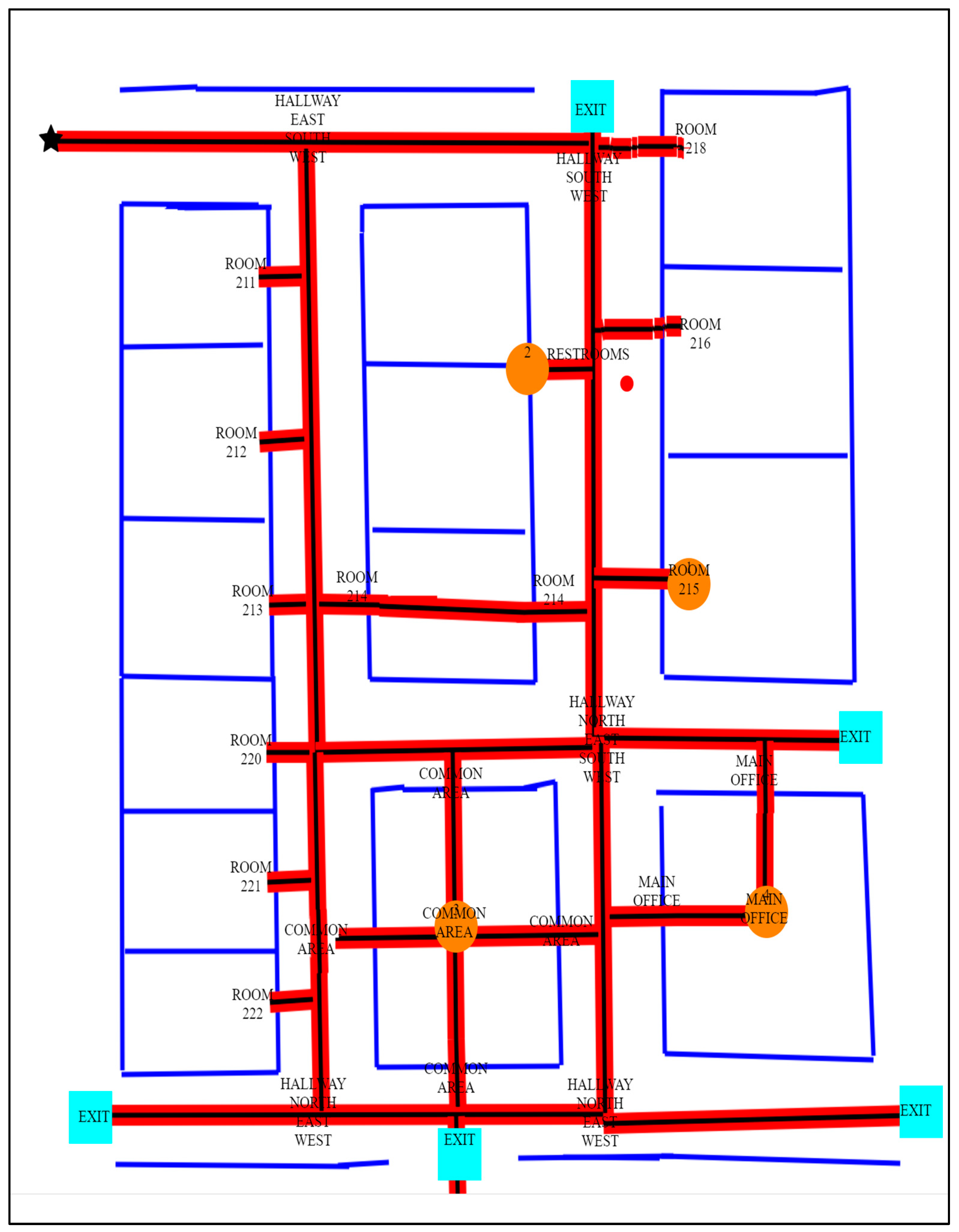

2.1. Audio-Haptic Map Creator Application Development

2.2. Participants and Recruitment

2.3. Usability Methodology

3. Experimental Results

3.1. Study: Part 1: Baseline, Calibration, and Training

3.2. Study: Part 2: Map Reading

3.3. Study: Part 3: Usability Study

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Participant ID | Age at Onset of Blindness | Age | Gender | Mobility Device | Experience with Haptic Device | Experience with Gamepad |
|---|---|---|---|---|---|---|
| P1 | Congenital | 37 | F | Cane | Some | None |
| P2 | Congenital | 72 | M | Cane | Some | None |
| P3 | Congenital | 66 | M | Cane | Some | None |
| P4 | Congenital | 28 | F | Cane | None | None |
| P5 | Congenital | 20 | F | Guide Dog | Some | None |
| P6 | Congenital | 22 | F | Cane | Some | None |
| P7 | Congenital | 24 | M | Cane | Some | Some |
| P8 | Congenital | 19 | F | Guide Dog | Some | None |
| P9 | Congenital | 26 | F | Cane | Some | Some |
| P10 | Congenital | 59 | M | Cane | Some | None |
| P11 | Congenital | 47 | F | Cane | Some | None |
| P12 | 8 | 44 | M | Cane | None | None |
| P13 | 16 | 32 | F | Cane | None | None |

Part 1: Baseline, Calibration, and Training, Task 1 Speed Accuracy Preference Test

| ID | Travel Path (A or B) | Accuracy Speed | Number of Adjustments | Preferred Speed |
|---|---|---|---|---|
| P1 | B | 2 | 5 | 3 |
| P2 | B | 1 | 10 | 2 |
| P3 | B | 2 | 7 | 2 |
| P4 | B | 1 | 5 | 2 |
| P5 | B | 2 | 6 | 3 |
| P6 | B | 2 | 8 | 3 |
| P7 | B | 2 | 7 | 2 |
| P8 | A | 2 | 8 | 2 |
| P9 | A | 2 | 10 | 3 |
| P10 | A | 1 | 8 | 2 |
| P11 | A | 2 | 5 | 2 |
| P12 | A | 1 | 9 | 3 |
| P13 | A | 2 | 7 | 2 |

Part 1: Baseline, Calibration, and Training, Task 2 Landmark Shape Test

| ID | How Many Landmarks Are There? | Name the Landmarks | Describe the Shape of the Path |
|---|---|---|---|
| P1 | 4 | YES | pointed with her fingers, across and down |
| P2 | 4 | YES | zigzag |
| P3 | 4 | YES | like a “Z” |
| P4 | 4 | YES | zigzag |
| P5 | 4 | YES | like arrows pointing to the right |
| P6 | 4 | YES | lightning |
| P7 | 4 | YES | next to each other across, like a zipper |
| P8 | 4 | YES | like a lightning bolt |
| P9 | 4 | YES | like a “Z” |
| P10 | 4 | YES | back and forth, crossing |
| P11 | 4 | YES | like a “Z” |
| P12 | 4 | YES | described the directions: “It goes right then, left and down, and across to the right and then down” |
| P13 | 4 | YES | diagonal from one another |

Part 2: Map Reading, Task 1, Start/Star to Room 215

| Completion | Number of Adjustments to Speed | Most Accurate Speed | Number of Attempts | Route Taken | Duration of the Entire Task (h:min:s) |
|---|---|---|---|---|---|
| YES | 3 (started at 1, went to 2, then 3, back to 2) | 2 | 3 | right path/hallway 2 | 0:04:12 |
| NO (needed help) | 5 (1 to 2 to 3 to 1 to 2 to 1) | 1 | 8 | | 0:12:23 |
| YES | 4 (1 to 2, then 3, then 4, back to 2) | 1 | 4 | right path/hallway 2 | 0:04:46 |
| YES | 2 (1 to 2) | 2 | 2 | right path/hallway 2 | 0:04:31 |
| YES | 3 (1 to 2 to 3 to 2) | 2 | 4 | right path/hallway 2 | 0:05:16 |
| YES | 4 (1 to 2 to 3 to 2 to 1) | 1 | 5 | right path/hallway 2 | 0:06:09 |
| NO | 2 (2 to 3 to 2) | 2 | 4 | | 0:06:24 |
| YES | 1 (2 to 1) | 1 | 2 | right path/hallway 2 | 0:04:11 |
| YES | 2 (2 to 1 to 2) | 2 | 2 | | 0:04:23 |
| YES | 2 (1 to 2 to 1) | 1 | 3 | right path/hallway 2 | 0:06:04 |
| YES | 3 (1 to 2 to 3 to 2) | 2 | 4 | right path/hallway 2 | 0:06:23 |
| YES | 3 (1 to 2 to 3 to 2) | 2 | 4 | left path/hallway 1 to common area and then up | 0:05:05 |
| YES | 1 (2 to 1) | 1 | 2 | right path/hallway 2 | 0:04:41 |
| Average | | 1.54 | | | 0:05:44 |
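
The two averages reported for this task can be checked against the thirteen rows of the table. The sketch below is only an illustration, not part of the study's analysis: it assumes that the 1.54 figure is the mean of the Most Accurate Speed column and that 0:05:44 is the mean task duration rounded to the nearest second. The variable and function names are hypothetical.

```python
# Values transcribed from the Part 2, Task 1 table, in row order (13 participants).
most_accurate_speed = [2, 1, 1, 2, 2, 1, 2, 1, 2, 1, 2, 2, 1]
durations = [
    "0:04:12", "0:12:23", "0:04:46", "0:04:31", "0:05:16", "0:06:09", "0:06:24",
    "0:04:11", "0:04:23", "0:06:04", "0:06:23", "0:05:05", "0:04:41",
]


def to_seconds(hms: str) -> int:
    """Convert an 'h:mm:ss' string to a number of seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def to_hms(total_seconds: float) -> str:
    """Format a duration in seconds as 'h:mm:ss', rounded to the nearest second."""
    whole = round(total_seconds)
    hours, remainder = divmod(whole, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


# Assumption: the reported average refers to the Most Accurate Speed column.
mean_speed = sum(most_accurate_speed) / len(most_accurate_speed)
mean_seconds = sum(to_seconds(d) for d in durations) / len(durations)

print(f"{mean_speed:.2f}")   # 1.54
print(to_hms(mean_seconds))  # 0:05:44
```

Under these assumptions both computed values agree with the reported averages. The mean duration includes the two participants who did not complete the task, one of whom took 0:12:23.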

| Strongly Disagree | Disagree | Somewhat Disagree | Neutral | Somewhat Agree | Agree | Strongly Agree | |
|---|---|---|---|---|---|---|---|
| Q1 | 1 | 2 | 6 | 4 | |||
| Q2 | 3 | 5 | 5 | ||||
| Q3 | 11 | 2 | |||||
| Q4 | 3 | 8 | 1 | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kaplan, H.; Pyayt, A. Fully Digital Audio Haptic Maps for Individuals with Blindness. Disabilities 2024, 4, 64-78. https://doi.org/10.3390/disabilities4010005
