Abstract

Emotions influence processes of learning and thinking in all people. However, emotion research rarely includes people with intellectual disabilities (ID), and few studies address the diversity within this group. The present study investigates the emotional competence of people with ID (N = 32). The first aim was to assess the participants’ emotional development using the Scale of Emotional Development (SEED). Building on these insights, the second aim was to replicate existing findings on the self-reported emotional reactions of people with ID to pictures of the International Affective Picture System (IAPS). In an additional pilot-like analysis, the third aim was to investigate whether these self-reported emotional reactions match the emotions expressed in the participants’ faces, as detected by the automated, video-based facial expression analysis software ‘Affectiva (Affdex SDK)’. The self-reported emotional reactions of the participants with ID were in line with previous research. In addition, the study shows the general potential of applying commercially available automated emotion recognition software in the field of special needs and social science.

1. Introduction

Emotions are omnipresent in our lives [1] and influence how we learn, think, and perceive. Therefore, they have gradually become established as a research topic in scientific studies, including studies involving people with intellectual disabilities (ID) [2,3].

Different perspectives of emotion research are combined in the concept of emotional competence. Emotional competence usually develops during childhood and adolescence and can be divided into three parts: first, regulating emotions; second, understanding emotions; and third, expressing emotions [1].

First, emotion regulation means adjusting the intensity, duration, expression, and quality of a currently experienced or imminent emotion through actions or (self-)instructions [4]. People with ID usually have a limited set of regulation strategies for dealing with emotional arousal [5]. This can lead to difficulties in emotion regulation, which in turn can impair, for example, concentration and attention, or may even lead to mental health disorders [6]. However, the full spectrum of emotion regulation strategies used by people with ID has not yet been evaluated [7]. Girgis et al. [6] provide a good overview of studies published through July 2019 and conclude that a suitable tool for assessing emotion regulation in people with ID is still missing.

Second, the ability to understand emotions can be divided into an interpersonal decoding process (i.e., understanding the emotions of others) and an intrapersonal decoding process (i.e., understanding one’s own emotions); it also includes an understanding of situations, causes, and consequences. According to previous findings, people with ID can reliably evaluate their own emotions [5]. Bermejo et al. [3] as well as Martínez-González and Veas [8] investigated how participants with ID rate their own emotions in response to a standardized set of pictures from the International Affective Picture System (IAPS, [9]). The participants with ID reported values of valence and arousal that were very similar to, but mostly higher than, those of the control groups (i.e., one group of the same chronological age without ID and one group of the same developmental level without ID). Research on the ability of people with ID to recognize the emotions of others has generally shown poorer performance in people with ID than in control groups without ID [10,11]. Moore [12] related this lower performance to the participants’ cognitive abilities, that is, to deficits in memory, attention, or information processing. In contrast, Scotland et al. [11] concluded from their review that deficits in emotion recognition in people with ID cannot be attributed entirely to cognitive limitations. Instead, they argued that too few studies with different methodological approaches exist to allow a general statement.

Third, emotional expressiveness (mostly related to facial expressions) can be defined either as expressing specific basic emotions (i.e., anger, disgust, fear, sadness, and happiness) based on a discrete emotion theory (e.g., [13,14]) or, following the dimensional approach, by locating emotions at the intersection of multiple dimensions, such as arousal (low–high) and valence (positive–negative; e.g., [15]). Expressing emotions always has a social function, as people try to read and interpret their counterpart’s emotions, which in turn influences their social relationship [16]. For example, people usually smile less when they are not directly interacting with others, even during an ongoing positive experience [17]. Identifying the emotional expressions of people with ID is important, inter alia, to promote communicative and emotional development and to support direct interactions and decision-making [18]. However, this identification is more challenging for people with ID than for people without ID due to several accompanying factors, such as more frequent motor impairments (e.g., spasticity), differences in physical appearance (especially in genetic syndromes), cognitive deficits (e.g., in perception or appraisal processes), stereotypical behaviors, or earlier aging processes [2,3]. It has been shown that people with ID express joy and surprise most effectively and anxiety least accurately [19].

The recognition of emotions is not limited to social interactions. Automatic emotion recognition has also found its way into areas such as marketing, healthcare, education, security, and robotics [20]. Previous work on automated emotion recognition provides important conceptual, empirical, and methodological evidence on current possibilities and challenges [21,22,23,24]. The current paper builds on this body of work but, at the same time, takes a rather pragmatic approach to assessment.

Although the literature suggests that a multimodal and holistic approach to emotion recognition could outperform purely face-based recognition [21,22], various software solutions use only facial expressions to automatically analyze and predict, inter alia, a limited number of basic emotions and emotional valence [25,26]. According to Ekman’s theory, these basic emotions can be distinguished by distinctive and universal behavioral signals [13,14]. To recognize these signals, most recognition technologies use the Facial Action Coding System (FACS) by Ekman et al. [13].

Facial emotion recognition is still a very challenging task, especially in real-world scenarios [27]. One key reason is that most datasets for the automatic visual recognition of emotions contain acted expressions instead of genuine emotions [28]. A review by Canal et al. [27] provides a good overview of current trends and commonly used strategies. In addition to commercial software solutions, for example, Affectiva (Affdex SDK) [29], FaceReader by Noldus [30], or Face++ by Megvii [31], open-source tools such as OpenFace [32] or Automated Facial Affect Recognition (AFAR) [33] can be used for emotion recognition. The validity of commercial software solutions has been evaluated in different studies, e.g., [26,34,35]. It has been found that the most advanced algorithms used in research, such as OpenFace [32] or AFAR [33], can outperform commercial software solutions, e.g., [31]. Still, even the most efficient existing software packages are not yet on par with human classification, e.g., [27,34]. Moreover, due to the novelty of this approach, the validity of automated emotion recognition software for people with ID has not yet been investigated [3,8].

Such validation studies and performance comparisons among emotion recognition software solutions are mostly located in the field of computer science. Studies in less technical research areas, such as the social sciences, psychology, music, or medicine, also use automatic emotion analysis software such as Affectiva as an analysis tool [36,37,38,39,40,41]. For these kinds of studies, commercial software solutions seem more appropriate for two reasons. First, off-the-shelf solutions such as Affectiva (Affdex SDK) [29] have the great advantage of working immediately and without much additional effort, especially when expertise in computer science and coding is limited. In most cases, neither additional data collection nor training of the algorithms is needed, since a suitable database already exists [42,43]. However, although Affectiva (Affdex SDK) claims to have the world’s largest dataset for facial emotion analysis [20,43], with a database of over five million face videos from over 75 countries in 2017, no information is available on whether people with ID are represented in these data. Second, among the available commercial software solutions, Affectiva (Affdex SDK) stands out as one of the most established applications [44,45], with acknowledged and acceptable performance in emotion recognition, as several studies have shown, e.g., [26,34,35,42,46,47]. Thus, Affectiva (Affdex SDK) is well suited as an analysis tool for a pilot-like study such as the one reported in this paper.

The present study focuses on two of the three parts of emotional competence described above: understanding emotions and expressing emotions. The first aim was to assess the emotional development of the participants with ID, that is, to investigate how effectively they can evaluate the emotional expressions of other persons or emotional situations, how well they can express their own emotions, and how accurately they can assess their own emotions in self-reports. Building on these insights, the second aim was to replicate the findings of Bermejo et al. [3] and Martínez-González and Veas [8] by validating the self-reported emotional reactions of people with ID to selected IAPS pictures related to basic emotions (i.e., fear, happiness, sadness, anger, and disgust) and neutral content. In an additional pilot-like analysis, the third aim was to investigate whether these self-reported emotional reactions match the emotions expressed in the participants’ faces, as analyzed by the automated facial expression analysis of Affectiva (Affdex SDK). The approach was to use Affectiva (Affdex SDK) with its existing database to analyze the facial expressions of the participants with ID and potentially gain initial insights into their emotional expression. With regard to the first aim, the participants’ emotional competencies were assumed to be sufficient for the present study, as had been the case for similar samples in previous studies [3,8]. Regarding the second aim, it was expected that the findings of Bermejo et al. [3] and Martínez-González and Veas [8] could be replicated. Concerning the third aim, the hypothesis was that the facial expressions of emotions analyzed with Affectiva (Affdex SDK) would be in line with the self-reports. However, the relation between facial expressions and self-reports was expected to be fairly low because of the social function of emotions: emotion-triggering pictures were assumed to elicit fewer facial expressions of emotions than, for example, social interactions with humans [16,17].

2. Materials and Methods

2.1. Study Design and Procedure

The present study was approved by the local university’s ethics committee (EV2020/04-02).

The aim was to investigate the emotional competence of people with ID. To this end, emotional reactions were elicited by showing IAPS pictures related to basic emotions (i.e., fear, happiness, sadness, anger, and disgust) and neutral content. Data were collected in two sessions per participant: a preliminary meeting to get to know each other and a test session. Beforehand, the emotional development of the participants was assessed using the Scale of Emotional Development (SEED, [48], Section 2.3.3). Each session took place in a separate room in an environment familiar to the participants, for example, a room in their residential or working institution, and was videotaped. During these sessions, the participants sat in front of a computer screen with a camera attached on top. The researcher either sat across from them or communicated with them via video calling software. The researcher started a presentation, and the screen showed the first picture of the chosen set of IAPS pictures (Section 2.3.1), which was intended to evoke a specific emotion (e.g., anger). After 6 s, the picture was automatically minimized, and the scale for rating the emotional experience (Section 2.3.2) appeared on the screen. Each session lasted 15–25 min, depending on the duration of the participants’ self-reports.

The participants were then asked how the picture made them feel. To answer, they pointed to the corresponding picture on the scale shown on the screen (Section 2.3.2), first regarding their emotional valence and second regarding their emotional arousal. The procedure then continued with the next IAPS picture (controlled by the researcher) and ended after 24 pictures. To familiarize the participants with the assessment situation, the procedure was practiced together with other pictures at the preliminary meeting. In a second step, all parts of the video recordings in which an IAPS picture was shown (6 s each) were processed in a pilot-like analysis using Affectiva (Affdex SDK) in the iMotions software v9.0 [29] (Section 2.3.4).
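Cutting the recordings down to the 6-s presentation windows can be sketched as follows. This is a minimal illustration, not the iMotions workflow: it assumes a hypothetical frame-level export that stores a timestamp in seconds for every frame, together with known picture onset times; the `Frame` type and its field names are illustrative only.

```python
from dataclasses import dataclass

# Each IAPS picture was shown for 6 s (see the procedure above).
STIMULUS_DURATION_S = 6.0


@dataclass
class Frame:
    """One video frame from a hypothetical frame-level export."""
    timestamp_s: float     # seconds since the recording started
    positive_score: float  # per-frame positive-valence score
    negative_score: float  # per-frame negative-valence score


def stimulus_window(frames: list[Frame], onset_s: float,
                    duration_s: float = STIMULUS_DURATION_S) -> list[Frame]:
    """Return only the frames recorded while a picture was on screen.

    The window is half-open, [onset, onset + duration), so the frame at
    onset + duration (when the rating scale appears) is excluded.
    """
    end_s = onset_s + duration_s
    return [f for f in frames if onset_s <= f.timestamp_s < end_s]


# Synthetic recording: one frame every 0.5 s for 10 s.
frames = [Frame(i * 0.5, 0.0, 0.0) for i in range(21)]
print(len(stimulus_window(frames, onset_s=2.0)))  # 12 (frames at 2.0 ... 7.5 s)
```

Real exports may use a different frame rate or time base; only the windowing logic is meant to carry over.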

2.2. Sample

The sample consisted of N = 32 adults (46.9% female, n = 15) with mild to moderate ID. The participants, from southern Germany, were 23 to 77 years old, with a mean age of 43.25 years (SD = 16.4). They were recruited via residential and working institutions for people with ID, where the study was also conducted. Missing values were omitted from the respective analyses. Informed consent was always obtained from the participants, using information in easy language, and additionally from their legal representatives if required. Consent to participate in the emotional development survey (SEED) was withdrawn for three participants for personal reasons. The participants were free to leave the study at any time.

2.3. Measures

2.3.1. IAPS and SAM Scale

Lang et al. [9] published the IAPS as a standardized set of 1194 photographs intended to evoke specific levels of the emotional dimensions of valence and arousal. These dimensions are usually rated with the Self-Assessment Manikin (SAM), a 9-point affective rating scale that uses nine figures to represent the affective level of each dimension. The IAPS Technical Report [9] includes norm values of SAM ratings by people without ID for the valence and arousal of each photograph.

Following the approach of Martínez-González and Veas [8], 24 pictures were chosen for the present study: four pictures for each of the five basic emotions and four with neutral content (Table 1). For ethical reasons, pictures showing inappropriate content, for example, mutilated body parts or sexualized scenes, were discarded and replaced by other pictures for the respective emotion.

Table 1.

Selection of IAPS pictures included in the study sorted by emotion.

2.3.2. SAMY Scale

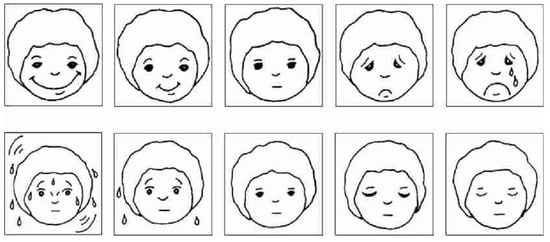

The SAMY scale by Bermejo et al. [3] is a simplified adaptation of the original SAM scale developed by Lang et al. [9]. For easier understanding, the SAMY scale shows only an illustration of a face (instead of a body with a face), and the original 9-point scale was shortened to two 5-point scales, with each face corresponding to a state of either arousal (i.e., very nervous, nervous, normal, relaxed, or very relaxed) or valence (i.e., very sad, sad, normal, happy, or very happy; Figure 1). In the present study, the valence levels were labeled very negative, negative, neutral, positive, and very positive. To compare the results with the norm values of the original SAM scale, the results of the 5-point SAMY scale were transformed to the 9-point scale.

Figure 1.

The SAMY scale for rating valence (top row) and arousal (bottom row). Reprinted with permission from Ref. [3]. Copyright 2014. Bermejo, B.G.; Mateos, P.M.; Sánchez-Mateos, J.D.
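The text above does not state which 5-to-9-point transformation was used. One simple possibility, assumed here purely for illustration, is a linear mapping of the five SAMY levels onto the odd points of the 9-point SAM range. Because the SAMY arousal labels run from very nervous to very relaxed, whereas high SAM arousal values denote high arousal, the sketch adds a hypothetical `reverse` flag for codings whose pole order differs from SAM.

```python
def samy_to_sam(rating: int, reverse: bool = False) -> int:
    """Map a 5-point SAMY rating (1..5) onto the 9-point SAM range (1..9).

    Hypothetical linear mapping: 1->1, 2->3, 3->5, 4->7, 5->9.
    Set reverse=True if the SAMY coding runs opposite to the SAM pole order
    (e.g. if 1 = 'very nervous' while SAM 9 = highest arousal).
    """
    if rating not in range(1, 6):
        raise ValueError(f"SAMY rating must be between 1 and 5, got {rating}")
    if reverse:
        rating = 6 - rating  # flip the pole order: 1<->5, 2<->4
    return 2 * rating - 1


print([samy_to_sam(r) for r in range(1, 6)])  # [1, 3, 5, 7, 9]
print(samy_to_sam(1, reverse=True))           # 9
```

Under this mapping, the SAMY midpoint (‘neutral’ or ‘normal’, coded 3) lands on the SAM midpoint 5, so the scale center is preserved when comparing with the norm values.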

2.3.3. SEED Scale

In the present study, the Scale of Emotional Development (SEED, [48]) was used in semi-structured interviews with professional direct support persons (DSPs) in care-taking facilities to assess the emotional development of the participants. The SEED has 200 items with binary response options (i.e., yes and no) and measures the emotional development in eight domains:

- Dealing with our own body;

- Interaction with caregivers;

- Dealing with environmental changes;

- Differentiation of emotions;

- Interaction with peers;

- Dealing with material activities;

- Communication;

- Emotion regulation.

Example items for the domain ‘Differentiation of emotions’ are:

- Emotions can be directed through the attention of caregivers (Phase 2).

- The person is able to identify his or her own basic emotions (e.g., anger, sadness, happiness, fear) (Phase 3).

For each of the aforementioned domains, five items are rated for each of five developmental phases, which are defined by the emotional reference age of typically developing children:

- Phase 1 (reference age 0–6 months; first adaptation);

- Phase 2 (reference age 7–18 months; first socialization);

- Phase 3 (reference age 19–36 months; first individualization);

- Phase 4 (reference age 37–84 months; first identification);

- Phase 5 (reference age 85–156 months; incipient awareness of reality).

The phase with the most affirmed items marks the level of emotional development in the respective domain. After the eight domain levels are ranked, the fourth lowest level determines the level of general emotional development [48].
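The two scoring rules above can be expressed in a few lines. The sketch assumes that, for each domain, the number of affirmed (‘yes’) items has already been counted per phase. How ties between phases are resolved is not described here, so preferring the lower phase is an assumption of this sketch, not part of the SEED manual as cited.

```python
def domain_level(yes_counts: dict[int, int]) -> int:
    """Level of one SEED domain: the phase (1-5) with the most affirmed items.

    yes_counts maps phase -> number of 'yes' answers (0-5 items per phase).
    Ties are broken in favour of the lower phase (assumption).
    """
    if not yes_counts:
        raise ValueError("no item counts given")
    # max() returns the first maximum it meets, so iterating phases in
    # ascending order makes the lower phase win a tie.
    return max(sorted(yes_counts), key=lambda phase: yes_counts[phase])


def general_level(domain_levels: list[int]) -> int:
    """General emotional development: fourth lowest of the eight domain levels."""
    if len(domain_levels) != 8:
        raise ValueError("the SEED has eight domains")
    return sorted(domain_levels)[3]  # index 3 = fourth lowest


print(domain_level({1: 1, 2: 3, 3: 5, 4: 2, 5: 0}))  # 3
print(general_level([2, 3, 3, 4, 4, 4, 5, 5]))       # 4
```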

2.3.4. Automated Facial Expression Analysis

The video recordings were analyzed using Affectiva (Affdex SDK) in the iMotions software platform [29]. Affectiva (Affdex SDK) [29] is emotion recognition software that classifies recorded facial expressions in a frame-by-frame analysis. Based on the renowned Facial Action Coding System (FACS) by Ekman et al. [13], six basic emotions (i.e., joy, sadness, fear, anger, disgust, and surprise) as well as emotional valence (positive and negative) are derived from the recognized action units. Based on the contraction or relaxation of the respective facial muscles, the software provides evidence scores from 0 to 100 for each frame of the video. These scores represent the probability with which the corresponding emotional expression would be identified as such by a human coder. In line with recommendations in the field of psychology, a threshold of 0.6 was used for the evidence scores, meaning a statistical power of 80% for all frames [49]. The exported values represent the percentage of time (relative to the 6 s during which an IAPS picture was presented) in which Affectiva (Affdex SDK) detected an emotion.
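The percentage-of-time measure can be illustrated as follows. This is a sketch, not the iMotions export logic: because the scores are described on a 0 to 100 scale while the threshold is given as 0.6, the sketch assumes the scores are rescaled to 0 to 1 before thresholding. It further assumes a constant frame rate, so that the share of frames equals the share of time, and treats the threshold as exclusive; both are assumptions.

```python
def percent_time_detected(scores: list[float], threshold: float = 0.6,
                          scale: float = 100.0) -> float:
    """Percentage of frames in which an emotion counts as detected.

    scores: per-frame scores for one emotion during one 6-s picture
            presentation, on a 0-100 scale.
    Assumptions: scores are divided by `scale` before comparison, a frame
    counts only if it lies strictly above the threshold, and frames are
    equally spaced in time (so % of frames = % of time).
    """
    if not scores:
        raise ValueError("no frames in this presentation window")
    detected = sum(1 for s in scores if s / scale > threshold)
    return 100.0 * detected / len(scores)


print(percent_time_detected([10, 70, 80, 50]))  # 50.0
```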

Affectiva, the company behind the Affdex SDK [29], was founded in 2009 as a spin-off of the Massachusetts Institute of Technology (MIT) Media Lab. Its software is used in different domains, including education, media and advertising, retail, healthcare, the automotive industry, and gaming. With a database of over five million face videos from over 75 countries in 2017, Affectiva claims to have the world’s largest dataset for facial emotion analysis. However, no information is available on whether people with ID are represented in these data [20,43]. The validity of Affectiva (Affdex SDK) has been verified in different validation studies, e.g., [26,34,35,42,46,47]. However, its accuracy is not yet as good as that of human classification [34].

2.4. Statistical Analysis and Data Processing

For the second objective, a two-sided paired sample t-test with an alpha level of 0.05 was calculated for each IAPS picture to investigate whether the SAMY ratings of the participants with ID differ from the SAM norm values in the IAPS Technical Report [9] for the dimensions of valence and arousal.
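Assuming each participant’s rating of a picture was paired with that picture’s norm mean from the IAPS Technical Report, the paired differences have the same standard deviation as the ratings themselves, so the test reduces to a one-sample t-test against the norm mean. A standard-library sketch of the test statistic (the function name is hypothetical):

```python
import math
import statistics


def t_against_norm(ratings: list[float], norm_mean: float) -> tuple[float, int]:
    """t statistic and degrees of freedom for ratings vs. a fixed norm mean.

    Pairs every rating with the same norm value and tests the mean of the
    differences against zero (equivalent to a one-sample t-test).
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two ratings")
    diffs = [r - norm_mean for r in ratings]
    sd = statistics.stdev(diffs)  # sample SD with n - 1 in the denominator
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


t, df = t_against_norm([5, 7, 9, 7], norm_mean=5.0)
print(round(t, 4), df)  # 2.4495 3
```

The two-sided p-value then follows from the t distribution with n - 1 degrees of freedom; for example, `scipy.stats.ttest_1samp(ratings, popmean=norm_mean)` computes the same statistic together with its p-value.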

For the third objective, the facial expression analysis was inspected for all happiness pictures (i.e., the pictures related to positive valence; Table 1), restricted to the participants who rated the respective picture as very positive on the SAMY scale. Likewise, negative pictures were inspected for the participants who rated them as very negative on the SAMY scale (Table A1). Since 16 pictures were used for negative emotions (i.e., fear, sadness, anger, and disgust) but only 4 pictures for a positive emotion (i.e., happiness), 4 of the 16 negative pictures were selected for analysis, namely those with the highest mean negative valence detected by Affectiva (Affdex SDK) (i.e., 1300 Pit Bull, 2100 Angry Face, 9301 Toilet, 9830 Cigarettes). All data transformations and analyses were carried out in R v4.1.1 (R Core Team, 2021) and SPSS v27.
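The selection steps for the third objective can be sketched as below. The data layout (dictionaries keyed by participant and picture ID), the coding of ‘very negative’ as 1 and ‘very positive’ as 5 on the SAMY valence scale, and the function names are assumptions for illustration; the example data and picture IDs are made up.

```python
from statistics import mean

VERY_NEGATIVE, VERY_POSITIVE = 1, 5  # assumed SAMY valence codes


def raters_of(samy_valence: dict[str, dict[str, int]], picture: str,
              target: int) -> list[str]:
    """IDs of participants who gave `target` to `picture` on the SAMY valence scale."""
    return [pid for pid, ratings in samy_valence.items()
            if ratings.get(picture) == target]


def top_negative_pictures(neg_valence: dict[str, list[float]], k: int = 4) -> list[str]:
    """The k pictures with the highest mean detected negative valence.

    neg_valence maps picture ID -> per-participant % of time with negative
    valence detected; pictures without any data are skipped.
    """
    means = {pic: mean(vals) for pic, vals in neg_valence.items() if vals}
    return sorted(means, key=means.get, reverse=True)[:k]


ratings = {"p01": {"1300": 1, "2000": 5}, "p02": {"1300": 3, "2000": 5}}
print(raters_of(ratings, "1300", VERY_NEGATIVE))  # ['p01']
print(top_negative_pictures({"A": [50, 60], "B": [40], "C": [70],
                             "D": [45], "E": [10]}))  # ['C', 'A', 'D', 'B']
```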

3. Results

3.1. SEED Scale

The participants ranged from phase 2 to phase 5 on the SEED scale (M = 3.82, SD = 0.86), with most reaching phase 4 (Table 2). The higher the value on the SEED scale, the more phases of emotional development a person has already passed through [48].

Table 2.

Results of the SEED scale divided into domains, listing the respective number of participants.

Most participants (n = 16) reached phase 4 as their dominant phase of general emotional development. This phase is characterized by egocentrism, an emerging ability for abstract thinking and perspective taking, the development of social skills, and the possibility of learning through experience. Emotion regulation can be described as one of the weakest domains, as only half of the participants reached phase 4 or 5.

3.2. SAMY Scale and SAM Scale

On the one hand, the participants with ID rated their emotional valence and arousal during the presentation of the 24 IAPS pictures on the SAMY scale. On the other hand, norm values of the SAM ratings of valence and arousal for the same 24 IAPS pictures were obtained from the IAPS Technical Report [9]. Table 3 compares the results of the 5-point SAMY scale (transformed to a 9-point scale) with the 9-point SAM scale results of the IAPS Technical Report [9]. Table 3 also includes a paired sample t-test for each IAPS picture to assess whether the SAMY scale results (Section 2.3.2) differ from the SAM scale results (Section 2.3.1). A significant result indicates that the two ratings differ for the respective IAPS picture.

Table 3.

Mean scores (M) and standard deviations (SD) for the valence and arousal dimensions for the 24 IAPS pictures based on the 5-point SAMY scale results (transformed to a 9-point scale) and on the 9-point SAM scale results of the IAPS Technical Report [9], as well as significant differences between SAMY scale results and SAM scale results using t-tests.

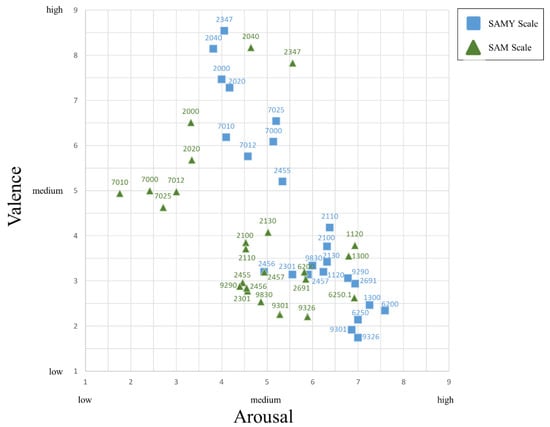

Additionally, Figure 2 visualizes the results, comparing the responses of the participants with ID with the SAM scale results from the IAPS Technical Report [9] along the valence and arousal dimensions for each IAPS picture.

Figure 2.

Dispersion for the valence and arousal dimensions for the 24 IAPS pictures based on the 5-point SAMY scale results (transformed to a 9-point scale) and on the 9-point SAM scale results of the IAPS Technical Report [9].

3.3. Automated Facial Expression Analysis

During the test session, the selected IAPS pictures were presented to all participants for 6 s each, and the participants showed facial emotional reactions during this presentation. Using the video recordings of these reactions, the participants’ emotional expressions were investigated in a pilot-like analysis with Affectiva (Affdex SDK), which was selected as the analysis software from the broad field of automatic emotion recognition.

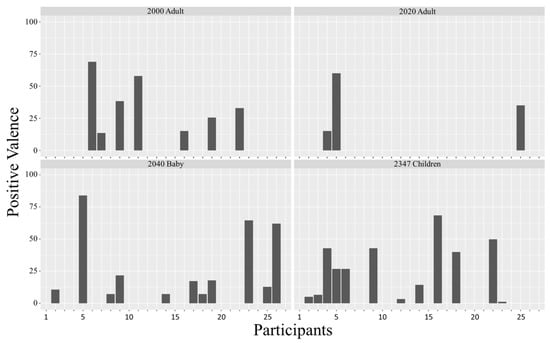

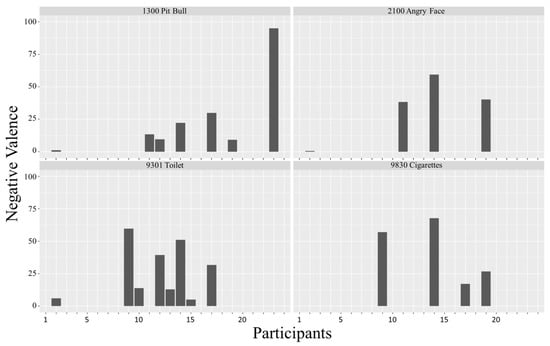

Figure 3 and Figure 4 show the results of the automated facial expression analysis. Some of the participants’ reactions to the IAPS pictures, as measured by Affectiva (Affdex SDK), are in line with their self-reports. For positive valence, this applied to about eight participants on average; for negative valence, the average was approximately six participants. For picture 2000 Adult, for example, seven of the participants who rated the picture as very positive (n = 27) showed positive valence, as detected by Affectiva (Affdex SDK), for around 10% to almost 75% of the time the picture was shown (Figure 3). Similarly, for picture 1300 Pit Bull, seven of the participants who rated the picture as very negative (n = 24) showed negative valence, as detected by Affectiva (Affdex SDK), for around 1% to almost 100% of the time the picture was shown (Figure 4).

Figure 3.

Percentage of time in which Affectiva (Affdex SDK) detected positive valence, for all participants who rated the respective picture as very positive.

Figure 4.

Percentage of time in which Affectiva (Affdex SDK) detected negative valence, for all participants who rated the respective picture as very negative.
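The averages reported above (about eight participants for positive and about six for negative valence) can be read as the mean, across the inspected pictures, of the number of participants whose extreme self-report was accompanied by detected valence. The sketch below assumes that ‘accompanied’ means valence was detected for more than 0% of the presentation time; the actual criterion is not stated, and the data and function names are made up for illustration.

```python
from statistics import mean


def matched_count(percent_time: list[float]) -> int:
    """Number of participants with detected valence for more than 0% of the time."""
    return sum(1 for p in percent_time if p > 0)


def mean_matched(per_picture: dict[str, list[float]]) -> float:
    """Average matched count across pictures.

    per_picture maps picture ID -> % of time valence was detected, one value
    per participant who gave the corresponding extreme SAMY rating.
    """
    return mean(matched_count(v) for v in per_picture.values())


demo = {"A": [0, 12.5, 40.0], "B": [0, 0, 75.0], "C": [5.0, 9.0, 1.0]}
print(mean_matched(demo))  # 2
```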

4. Discussion

Few studies in emotion research include people with ID and address the diversity within this group [2,3,7]. Thus, the present study had three objectives focusing on the emotional competence of people with ID:

- First, to assess the emotional development of the participants with ID in order to investigate how effectively they can evaluate the emotional expressions of other persons or emotional situations, how well they can express their own emotions, and how accurately they can assess their own emotions in self-reports.

- Second, to replicate the findings of Bermejo et al. [3] and Martínez-González and Veas [8], validating the self-reported emotional reaction of people with ID to selected pictures of the IAPS.

- Third, to investigate in a pilot-like analysis whether these self-reported emotional reactions match the emotions expressed in the participants’ faces, as analyzed by the automated facial expression analysis of Affectiva (Affdex SDK).

Concerning the first objective, the assessment of the emotional development of the participants with ID, the SEED results showed that the participants in the study were generally able to rate emotional expressions shown on the IAPS pictures, express their own emotions in facial expressions, and also rate them via self-reports using the SAMY scale.

The second objective addresses the replication of the findings of Bermejo et al. [3] and Martínez-González and Veas [8]. As Figure 2 shows, the SAMY scale results varied more and were almost consistently higher than the SAM norm values for both valence and, especially, arousal. Nevertheless, the ratings of some pictures were close to each other (e.g., pictures 1120, 2000, 2457, 2691, and 6250.1). Regarding the individual emotions, the SAMY scale results always showed lower valence and mostly higher arousal for the pictures intended to elicit fear. For the happiness pictures, participants with ID rated valence in particular higher on the SAMY scale, while their ratings were otherwise similar to the norm values. For sadness, the valence results of the SAMY scale and the SAM scale were close to each other, except for picture 2455 Sad Girls. Participants with ID also rated the anger pictures as more arousing on the SAMY scale, whereas the valence levels on both scales were similar. For the disgust pictures, the SAMY scale results showed mostly similar valence but higher arousal than the SAM scale results. Again, the SAMY scale ratings of the neutral pictures were more positive and more arousing.

Overall, the results regarding the second objective are consistent with previous studies insofar as the participants with ID rated valence and especially arousal similarly to, but mostly higher and with greater variability than, people without ID (i.e., the SAM scale results of the IAPS Technical Report [9]). This tendency might be attributed to potential deficits in emotional competence associated with ID [8], which in the present study are particularly evident in the domain of emotion regulation. Bermejo et al. [3] traced the differences from people without ID either to stimulus processing (e.g., 7025 Stool, intended to elicit a neutral response, was rated more positively by people with ID, probably because they associated it with positive experiences) or to response selection (i.e., a preference for more clearly defined categories such as positive or negative).

The purpose of the third objective was to investigate whether the facial expressions of emotions analyzed by Affectiva (Affdex SDK) were in line with self-reports. It was found that 70% of the participants indeed seemed to express positive valence for up to almost 75% of the time during which a picture they had rated as very positive was shown. Similarly, 41% of the participants seemed to express negative valence for up to almost 100% of the time during which a picture they had rated as very negative was shown. On the one hand, these results show that there is a connection between self-reports and automatically recognized emotional expressions. On the other hand, they also indicate that the IAPS pictures were evaluated very individually, and so were the facially expressed emotional reactions to them. In this respect, technologies for the automatic detection and analysis of emotions can play an important role, since communication difficulties in interactions with people with ID often complicate the prevention and detection of mental and physical health problems. Such innovative technological approaches could provide complementary indications of current emotional experience [50]. Nevertheless, the performance of Affectiva (Affdex SDK) needs to be critically evaluated, since its recognition accuracy is not yet as precise as human classification [34], especially for people with ID [2,8].

5. Limitations

There are also limitations that should be considered when interpreting the findings and that point to implications for future research. Compared to, for example, Bermejo et al. [3], the present study has a smaller sample size and no control group (e.g., people without ID). Instead, the results of the IAPS Technical Report [9] were used to compare the results of this study with those of people without ID. Nevertheless, future studies should include more participants with ID to improve the informative value of the results and their generalizability.

An additional aspect to be considered critically is that the SAMY scale used in this study has a different number of response levels and different graphics than the original SAM scale, which complicates direct comparison and could have influenced response behavior. Nevertheless, this adaptation was necessary, since nine response options could have been too complex for participants with ID and would thus have biased the self-reports even more. Future research should continue to adapt research methods for people with ID.

Moreover, the participants of this study were not engaged in a social interaction but only looked at pictures shown on a screen. This setting was intended to reduce confounding influences so that the participants could focus on the IAPS pictures. However, considering the social function of emotions [16,17], this procedure could have contributed to low rates of facial expressions. Future studies should take the social function of emotions into account by including direct human interactions in the research design.

The software Affectiva (Affdex SDK) was chosen as the analysis tool in this pilot-like study because of its accessibility and usability, especially when access to computer science expertise and coding skills is limited. However, other software solutions, especially outside the commercial market, could perform clearly better in emotion recognition tasks [44]. Future research should therefore either include further validity tests of Affectiva (Affdex SDK) in the respective research setting or choose another emotion recognition tool that provides better results with usability similar to that of Affectiva (Affdex SDK).

Despite the large emotion database of Affectiva (Affdex SDK) [43], the results might be biased, because there is no information about the extent to which people with disabilities, or people with ID specifically, are represented in the database. Future research should evaluate the use of Affectiva (Affdex SDK) in a comparative study including people with and without ID. Another approach for future studies could be to build a dedicated database generated with people with ID and to use it to train emotion recognition algorithms. This approach would require a significantly larger sample size and researchers with expertise in computer science. Furthermore, no human coding with FACS was performed; instead, the results from Affectiva (Affdex SDK) were compared with the participants' self-reports. Future research should consider including human coding and reporting inter-rater reliability to improve the validity of the results.
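
Inter-rater reliability for categorical emotion codes is often quantified with Cohen's kappa, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance from each rater's label frequencies. A minimal Python sketch follows; the labels are invented, and pairing two human FACS coders (or a coder with the software's dominant emotion per picture) is an illustrative assumption.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one categorical label per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items with identical labels.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from the product of each rater's label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    labels = set(freq_a) | set(freq_b)
    expected = sum(freq_a[k] * freq_b[k] for k in labels) / (n * n)
    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is undefined,
        # and returning 1.0 here is a convention.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical emotion labels for six pictures from two coders.
coder_1 = ["joy", "joy", "fear", "neutral", "fear", "joy"]
coder_2 = ["joy", "fear", "fear", "neutral", "fear", "joy"]
kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 3))  # 0.739
```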

6. Conclusions

Overall, the results of this study are in line with previous research [3,8]. Compared with people without ID, participants with ID rated their valence, and especially their arousal, in response to emotion-eliciting pictures as similar but mostly higher and with greater variability. Despite the limitations mentioned, this study shows the potential of the innovative approach of using Affectiva (Affdex SDK) as one software solution for automated emotion recognition in the field of special needs and social science. The use of facial emotion recognition software with people with ID shows that valuable insights into emotional experience are possible, even though the software's database was not customized for this target group.

Future research should follow this promising new path. Studies with larger samples of people with ID should generate further insights, which might facilitate the generalization of the results. This would also open up opportunities for interdisciplinary research combining expert knowledge from the fields of special needs, social sciences, and computer science. Additionally, a database generated with people with ID could enable more accurate emotion recognition, and its value would increase further if it were used with state-of-the-art algorithms beyond commercial software solutions such as Affectiva (Affdex SDK).

Author Contributions

Conceptualization, T.H., M.M.S., P.Z., A.S. and C.E.; methodology, T.H., M.M.S. and P.Z.; validation, T.H., M.M.S., P.Z., A.S., H.-W.W. and C.E.; formal analysis, M.M.S., T.H., A.S. and C.R.; investigation, T.H. and M.M.S.; resources, P.Z., C.R., H.-W.W. and A.S.; data curation, M.M.S. and T.H.; writing—original draft preparation, T.H. and M.M.S.; writing—review and editing, T.H., M.M.S., A.S., P.Z., C.E., H.-W.W. and C.R.; visualization, T.H. and M.M.S.; supervision, P.Z., C.R., H.-W.W. and A.S.; project administration, P.Z., A.S., C.R., H.-W.W., M.M.S. and T.H.; funding acquisition, A.S., C.R., H.-W.W. and P.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This publication was part of the AI-Aging project (AI-based voice assistance for older adults with and without intellectual disabilities), which was funded by the Baden-Württemberg Stiftung within the funding line Responsible Artificial Intelligence.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of Heidelberg University of Education (protocol code EV2020/04-02, 21 May 2021).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Mean Scores and Standard Deviations of Negative Valence as Detected by Affectiva (Affdex SDK) for Negatively Rated Pictures.

| IAPS No. | Description | Valence M | Valence SD | Emotion |
|---|---|---|---|---|
| 1120 | Snake | 9.98 | 21.42 | Fear |
| **1300** ¹ | **Pit Bull** | **10.01** | **22.96** | **Fear** |
| **2100** ¹ | **Angry Face** | **13.85** | **22.85** | **Anger** |
| 2110 | Angry Face | 4.44 | 8.56 | Anger |
| 2130 | Woman | 6.48 | 13.19 | Anger |
| 2301 | Kid Cry | 0.23 | 0.71 | Sadness |
| 2455 | Sad Girls | 6.48 | 14.49 | Sadness |
| 2456 | Crying Family | 2.93 | 4.67 | Sadness |
| 2457 | Crying Boy | 6.90 | 14.00 | Sadness |
| 2691 | Riot | 5.00 | 11.35 | Anger |
| 6200 | Aimed Gun | 0.38 | 0.88 | Fear |
| 6250.1 | Aimed Gun | 6.27 | 17.21 | Fear |
| 9290 | Garbage | 7.33 | 12.71 | Disgust |
| **9301** ¹ | **Toilet** | **12.21** | **19.51** | **Disgust** |
| 9362 | Vomit | 8.32 | 19.28 | Disgust |
| **9830** ¹ | **Cigarettes** | **12.98** | **23.57** | **Disgust** |

¹ The four selected pictures are printed in boldface.

References

1. Denham, S.A.; Bassett, H.H.; Wyatt, T. The Socialization of Emotional Competence. In Handbook of Socialization: Theory and Research; Grusec, J.E., Hastings, P.D., Eds.; Guilford Press: New York, NY, USA, 2015; pp. 590–613.

2. Adams, D.; Oliver, C. The expression and assessment of emotions and internal states in individuals with severe or profound intellectual disabilities. Clin. Psychol. Rev. 2011, 31, 293–306.

3. Bermejo, B.G.; Mateos, P.M.; Sánchez-Mateos, J.D. The emotional experience of people with intellectual disability: An analysis using the international affective pictures system. Am. J. Intellect. Dev. Disabil. 2014, 119, 371–384.

4. McRae, K.; Gross, J.J. Emotion regulation. Emotion 2020, 20, 1–9.

5. McClure, K.S.; Halpern, J.; Wolper, P.A.; Donahue, J.J. Emotion Regulation and Intellectual Disability. J. Dev. Disabil. 2009, 15, 38–44.

6. Girgis, M.; Paparo, J.; Kneebone, I. A systematic review of emotion regulation measurement in children and adolescents diagnosed with intellectual disabilities. J. Intellect. Dev. Disabil. 2021, 46, 90–99.

7. Littlewood, M.; Dagnan, D.; Rodgers, J. Exploring the emotion regulation strategies used by adults with intellectual disabilities. Int. J. Dev. Disabil. 2018, 64, 204–211.

8. Martínez-González, A.E.; Veas, A. Identification of emotions and physiological response in individuals with moderate intellectual disability. Int. J. Dev. Disabil. 2019, 67, 406–411.

9. Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual; Technical Report A-8; University of Florida: Gainesville, FL, USA, 2008.

10. Murray, G.; McKenzie, K.; Murray, A.; Whelan, K.; Cossar, J.; Murray, K.; Scotland, J. The impact of contextual information on the emotion recognition of children with an intellectual disability. J. Appl. Res. Intellect. Disabil. 2019, 32, 152–158.

11. Scotland, J.L.; Cossar, J.; McKenzie, K. The ability of adults with an intellectual disability to recognise facial expressions of emotion in comparison with typically developing individuals: A systematic review. Res. Dev. Disabil. 2015, 41–42, 22–39.

12. Moore, D.G. Reassessing Emotion Recognition Performance in People with Mental Retardation: A Review. Am. J. Ment. Retard. 2001, 106, 481–502.

13. Ekman, P.; Friesen, W.V.; Hager, J.C. The Facial Action Coding System; Research Nexus eBook: Salt Lake City, UT, USA, 2002.

14. Ekman, P.; Cordaro, D. What is Meant by Calling Emotions Basic. Emot. Rev. 2011, 3, 364–370.

15. Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

16. Fischer, A.H.; Manstead, A.S.R. Social Functions of Emotion and Emotion Regulation. In Handbook of Emotions, 4th ed.; Barrett, L.F., Lewis, M., Haviland-Jones, J.M., Eds.; The Guilford Press: New York, NY, USA, 2016; pp. 424–439.

17. Kraut, R.E.; Johnston, R.E. Social and emotional messages of smiling: An ethological approach. J. Personal. Soc. Psychol. 1979, 37, 1539–1553.

18. Krämer, T.; Zentel, P. Expression of Emotions of People with Profound Intellectual and Multiple Disabilities. A Single-Case Design Including Physiological Data. Psychoeduc. Assess. Interv. Rehabil. 2020, 2, 15–29.

19. Stewart, C.A.; Singh, N.N. Enhancing the Recognition and Production of Facial Expressions of Emotion by Children with Mental Retardation. Res. Dev. Disabil. 1995, 16, 365–382.

20. Bielozorov, A.; Bezbradica, M.; Helfert, M. The Role of User Emotions for Content Personalization in e-Commerce: Literature Review. In HCI in Business, Government and Organizations. eCommerce and Consumer Behavior, 1st ed.; Nah, F.F.-H., Siau, K., Eds.; Springer International Publishing: Basel, Switzerland, 2019; pp. 177–193.

21. Picard, R.W. Affective computing: Challenges. Int. J. Hum.-Comput. Stud. 2003, 59, 55–64.

22. Zhang, Y.; Weninger, F.; Schuller, B.; Picard, R.W. Holistic Affect Recognition using PaNDA: Paralinguistic Non-metric Dimensional Analysis. IEEE Trans. Affect. Comput. 2019, 13, 769–780.

23. Vallverdú, J. Para-functional engineering: Cognitive challenges. Int. J. Parallel Emergent Distrib. Syst. 2022, 37, 292–302.

24. Franzoni, V.; Milani, A.; Nardi, D.; Vallverdú, J. Emotional machines: The next revolution. Web Intell. 2019, 17, 1–7.

25. Dupré, D.; Andelic, N.; Morrison, G.; McKeown, G. Accuracy of three commercial automatic emotion recognition systems across different individuals and their facial expressions. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Athens, Greece, 7 October 2018; pp. 627–632.

26. Garcia-Garcia, J.M.; Penichet, V.M.R.; Lozano, M.D. Emotion detection: Technology review. In Proceedings of the XVIII International Conference on Human Computer Interaction—Interacción’17, Cancun, Mexico, 25–27 September 2017; González-Calleros, J.M., Ed.; ACM Press: New York, NY, USA, 2017; pp. 1–8.

27. Canal, F.Z.; Müller, T.R.; Matias, J.C.; Scotton, G.G.; Sa Junior, A.R.; de Pozzebon, E.; Sobieranski, A.C. A survey on facial emotion recognition techniques: A state-of-the-art literature review. Inf. Sci. 2022, 582, 593–617.

28. Avola, D.; Cinque, L.; Fagioli, A.; Foresti, G.L.; Massaroni, C. Deep Temporal Analysis for Non-Acted Body Affect Recognition. IEEE Trans. Affect. Comput. 2022, 13, 1366–1377.

29. iMotions. Affectiva—Emotion AI. Available online: https://imotions.com/affectiva/ (accessed on 30 June 2022).

30. Noldus. FaceReader: Emotion Analysis. Available online: https://www.noldus.com/facereader (accessed on 18 August 2022).

31. Megvii. Face++. Available online: https://www.faceplusplus.com/emotion-recognition/ (accessed on 18 August 2022).

32. Baltrusaitis, T.; Zadeh, A.; Lim, Y.C.; Morency, L. OpenFace 2.0: Facial Behavior Analysis Toolkit. In Proceedings of the 13th IEEE International Conference on Automatic Face & Gesture Recognition, Xi’an, China, 15–19 May 2018.

33. Ertugrul, I.O.; Jeni, L.A.; Ding, W.; Cohn, J.F. AFAR: A Deep Learning Based Tool for Automated Facial Affect Recognition. In Proceedings of the 14th IEEE International Conference on Automatic Face & Gesture Recognition, Lille, France, 14–18 May 2019.

34. Dupré, D.; Krumhuber, E.G.; Küster, D.; McKeown, G.J. A performance comparison of eight commercially available automatic classifiers for facial affect recognition. PLoS ONE 2020, 15, e0231968.

35. Stöckli, S.; Schulte-Mecklenbeck, M.; Borer, S.; Samson, A.C. Facial expression analysis with AFFDEX and FACET: A validation study. Behav. Res. Methods 2018, 50, 1446–1460.

36. Dubovi, I. Cognitive and emotional engagement while learning with VR: The perspective of multimodal methodology. Comput. Educ. 2022, 183, 104495.

37. Kjærstad, H.L.; Jørgensen, C.K.; Broch-Due, I.; Kessing, L.V.; Miskowiak, K. Eye gaze and facial displays of emotion during emotional film clips in remitted patients with bipolar disorder. Eur. Psychiatry 2020, 63, E29.

38. Kovalchuk, Y.; Budini, E.; Cook, R.M.; Walsh, A. Investigating the Relationship between Facial Mimicry and Empathy. Behav. Sci. 2022, 12, 250.

39. Mehta, A.; Sharma, C.; Kanala, M.; Thakur, M.; Harrison, R.; Torrico, D.D. Self-Reported Emotions and Facial Expressions on Consumer Acceptability: A Study Using Energy Drinks. Foods 2021, 10, 330.

40. Millet, B.; Chattah, J.; Ahn, S. Soundtrack design: The impact of music on visual attention and affective responses. Appl. Ergon. 2021, 93, 103301.

41. Timme, S.; Brand, R. Affect and exertion during incremental physical exercise: Examining changes using automated facial action analysis and experiential self-report. PLoS ONE 2020, 15, e0228739.

42. Magdin, M.; Prikler, F. Real Time Facial Expression Recognition Using Webcam and SDK Affectiva. Int. J. Interact. Multimed. Artif. Intell. 2018, 5, 7–15.

43. Zijderveld, G.; Affectiva. The World’s Largest Emotion Database: 5.3 Million Faces and Counting. Available online: https://blog.affectiva.com/the-worlds-largest-emotion-database-5.3-million-faces-and-counting (accessed on 18 August 2022).

44. Namba, S.; Sato, W.; Osumi, M.; Shimokawa, K. Assessing Automated Facial Action Unit Detection Systems for Analyzing Cross-Domain Facial Expression Databases. Sensors 2021, 21, 4222.

45. Hewitt, C.; Gunes, H. CNN-based Facial Affect Analysis on Mobile Devices. arXiv 2018, arXiv:1807.08775.

46. Kartali, A.; Roglić, M.; Barjaktarović, M.; Đurić-Jovičić, M.; Janković, M.M. Real-time Algorithms for Facial Emotion Recognition: A Comparison of Different Approaches. In Proceedings of the 14th Symposium on Neural Networks and Applications, Belgrade, Serbia, 20–21 November 2018.

47. Taggart, R.W.; Dressler, M.; Kumar, P.; Khan, S.; Coppola, J.F. Determining Emotions via Facial Expression Analysis Software. In Proceedings of the Student-Faculty Research Day, CSIS, Pace University, New York, NY, USA, 6 May 2016.

48. Sappok, T.; Zepperitz, S.; Barrett, B.F.; Došen, A. SEED: Skala der emotionalen Entwicklung–Diagnostik: Ein Instrument zur Feststellung des Emotionalen Entwicklungsstands bei Personen mit Intellektueller Entwicklungsstörung: Manual; Hogrefe: Göttingen, Germany, 2018.

49. Marx, A.K.G.; Frenzel, A.C.; Pekrun, R.; Schwartze, M.M.; Reck, C. Automated Facial Expression Analysis in Authentic Face-To-Face Classroom Settings. A Proof of Concept Study. 2022; submitted.

50. Zentel, P.; Sansour, T.; Engelhardt, M.; Krämer, T.; Marzini, M. Mensch und/oder Maschine? Der Einsatz von Künstlicher Intelligenz in der Arbeit mit Menschen mit schwerer und mehrfacher Behinderung. Schweiz. Z. Heilpädagogik 2019, 25, 35–42.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).