1. Introduction

Weeds directly reduce the productivity of agricultural crops by competing for natural resources such as water, light, and nutrients, which makes them one of the most prominent challenges facing agriculture worldwide [1]. Detecting weeds among crops is difficult because weeds and crops often share similar color, texture, and shape. Identifying the weed species is also important for choosing control methods such as herbicides [2]. In the past, farmers relied on manual methods and chemical pesticides to control weeds, but these methods are expensive, unsustainable, and often harmful to the environment and to the people exposed to them. For example, chemical pesticides can contaminate water and soil and, over time, contribute to the development of pesticide-resistant weeds [3,4]. For all these reasons, there is an urgent need for smart and effective techniques to identify weeds and manage them sustainably.

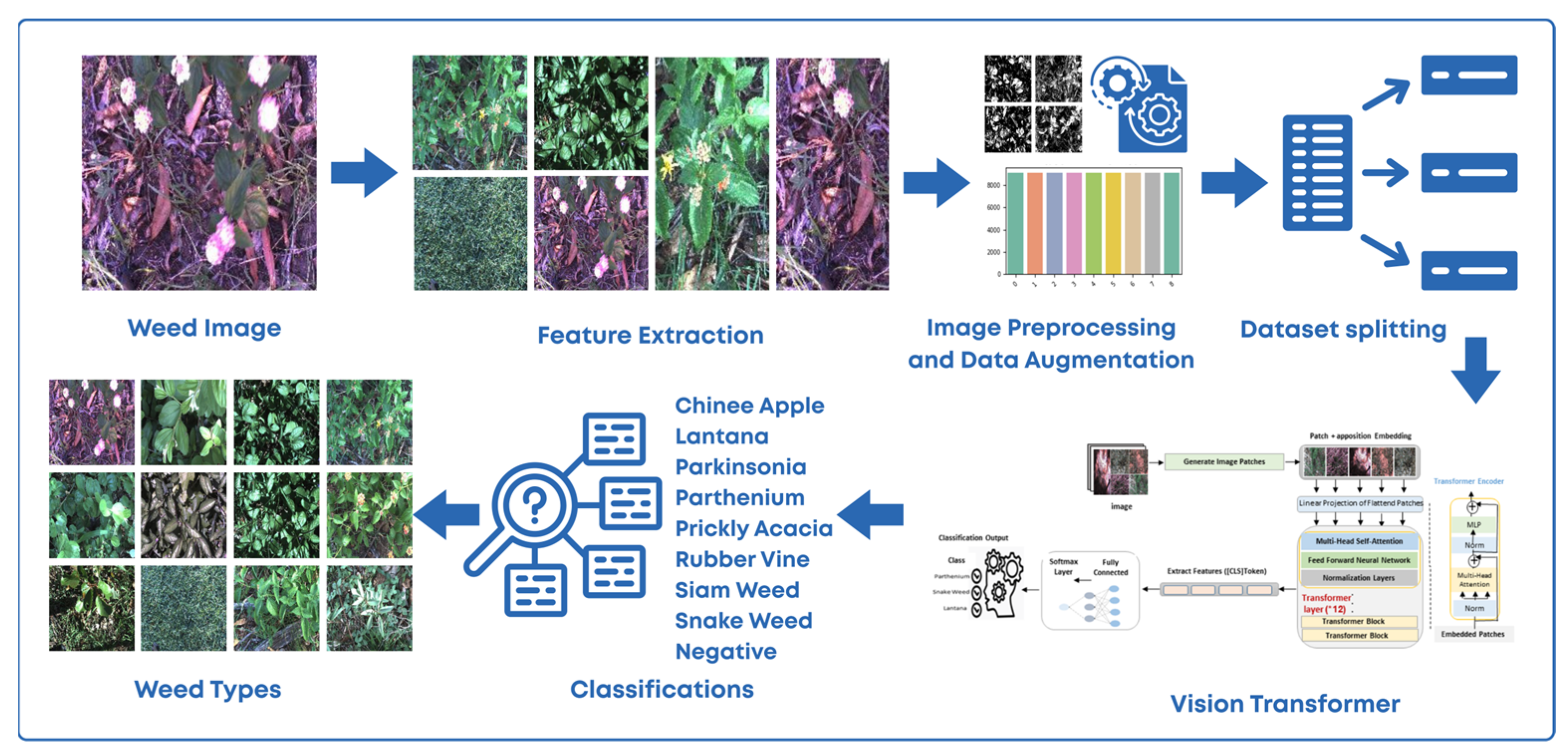

With the emergence of deep learning technologies, digital image processing, and vision transformers, recent years have witnessed remarkable progress in the application of artificial intelligence in smart agriculture [

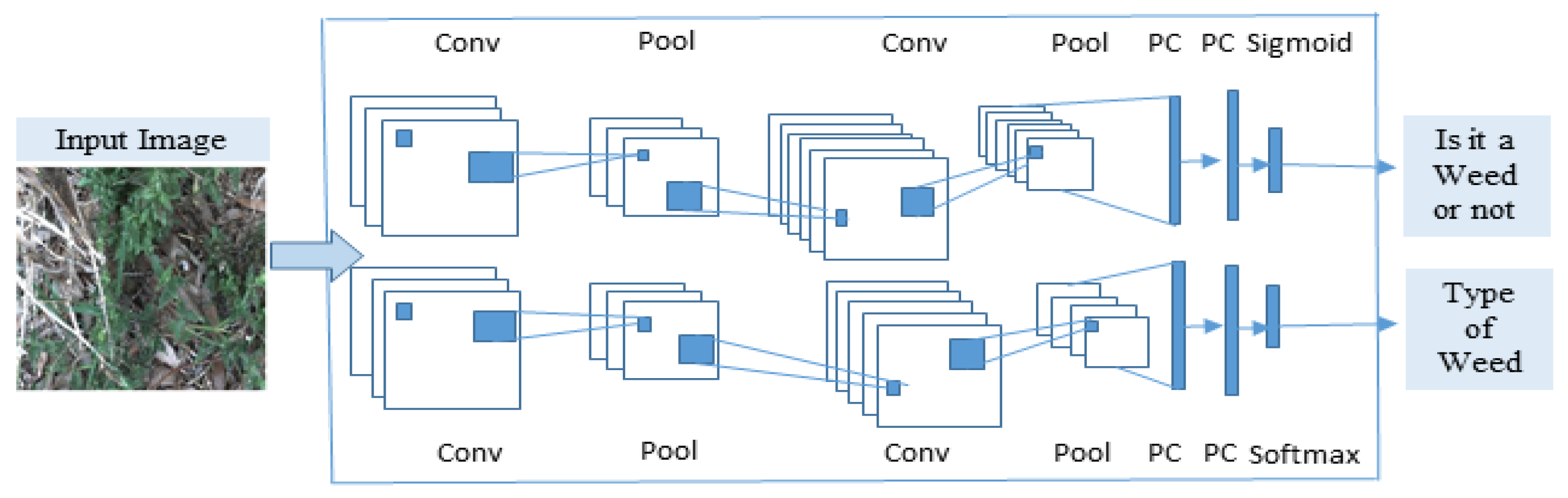

5]. Among these techniques, convolutional neural networks (CNNs) have been widely used for weed detection and classification with high accuracy [

6]. However, high-performance neural networks often depend on the design of specialized structures and may have difficulty handling data with complex and variable patterns such as those generated by weeds under diverse environmental conditions and also in the diversity of backgrounds in weed images [

7]. Hence, the need for transformer technology as a powerful and effective tool for processing complex data has become important.

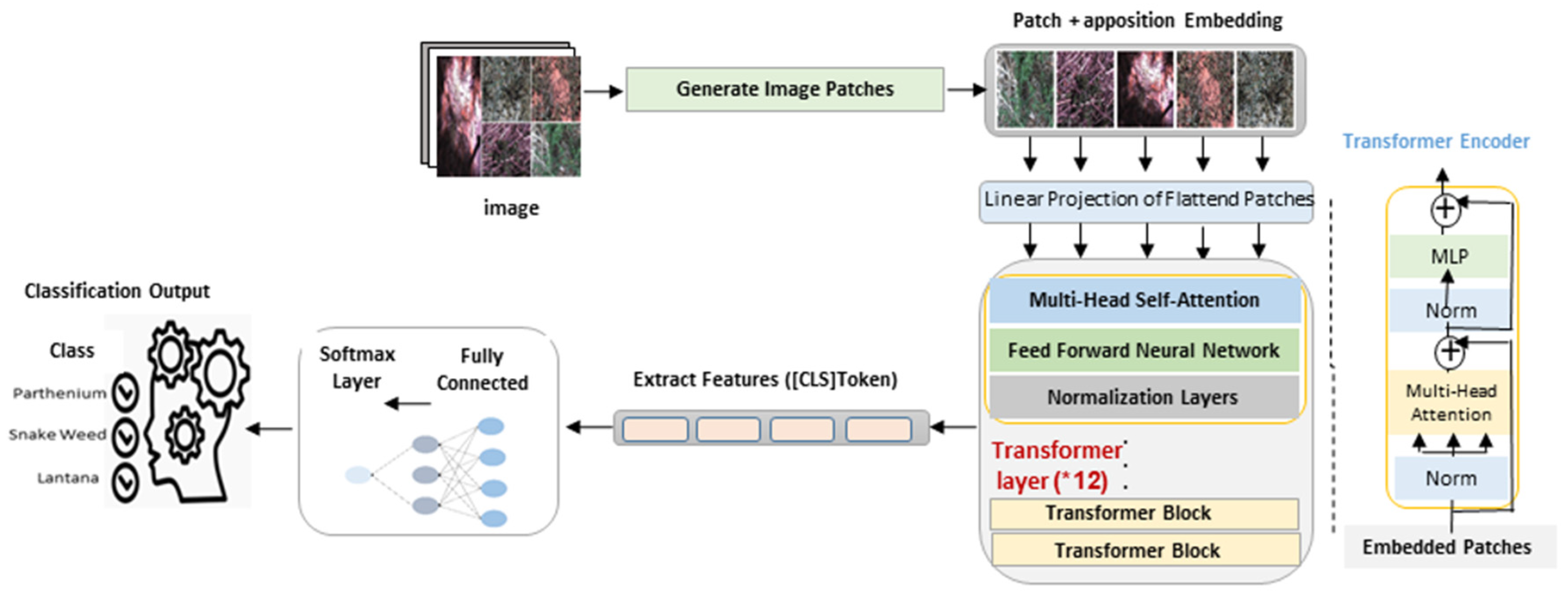

Transformers were originally designed to process textual data, but their use in computer vision, through models such as the Vision Transformer (ViT), has proven effective on high-resolution images [8]. This approach can capture both spatial features and global relationships within an image, making it suitable for weed classification in agricultural settings [9]. In addition, images captured by ground cameras or drones are an important source of data in smart agriculture: they allow high-quality data to be collected at large scale, which helps improve the accuracy of AI-based models [10]. Combining transformer-based models with large-scale agricultural imagery can therefore significantly improve automated weed management.

Despite this progress, challenges remain. Weed and crop vision models still require interpretability mechanisms to explain their decisions in field contexts, and the role of Vision Transformers in weed detection and classification remains underexplored relative to CNNs (see, e.g., [1,2,11,12]). Accordingly, the aims of this work were as follows: (i) to evaluate a ViT model against strong CNN baselines on DeepWeeds; (ii) to quantify the effect of data-imbalance strategies (class weighting vs. oversampling) on accuracy; (iii) to provide initial interpretability via attention-based saliency inspection; and (iv) to assess consistency under location shift and capture perturbations using the multi-site, variable-condition imagery in DeepWeeds.
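To make the two imbalance strategies named in aim (ii) concrete, the following is a minimal sketch rather than the configuration used in this study. It assumes the common "balanced" inverse-frequency heuristic, w_c = N / (K * n_c), for class weighting and plain random resampling with replacement for oversampling. The function names and the toy label counts are hypothetical and do not reflect the actual DeepWeeds class distribution.

```python
import random
from collections import Counter


def balanced_class_weights(labels):
    """Inverse-frequency weights: w_c = N / (K * n_c).

    N is the number of samples, K the number of classes, and n_c the
    number of samples in class c. Rare classes get larger weights.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {c: n_samples / (n_classes * n) for c, n in counts.items()}


def random_oversample(labels, seed=0):
    """Return sample indices in which every class is topped up, by sampling
    with replacement, to the size of the largest class."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    target = max(len(idxs) for idxs in by_class.values())
    resampled = []
    for idxs in by_class.values():
        resampled.extend(idxs)  # keep every original sample once
        resampled.extend(rng.choices(idxs, k=target - len(idxs)))  # duplicates
    return resampled


# Hypothetical toy label distribution (NOT the DeepWeeds counts).
toy_labels = ["negative"] * 8 + ["lantana"] * 3 + ["snake_weed"] * 1

weights = balanced_class_weights(toy_labels)
oversampled = random_oversample(toy_labels)

print(weights)
print(Counter(toy_labels[i] for i in oversampled))
```

Class weighting leaves the dataset unchanged and rescales each sample's contribution to the loss, whereas oversampling repeats minority-class images, so the same few images are seen many times per epoch.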

2. Literature Review

Weed classification using artificial intelligence has attracted considerable research attention, with much of the work focused on improving model accuracy and performance through different techniques. In this section, we discuss the most prominent research in this field, highlighting its results, the challenges it faced, and the positive outcomes achieved. As reported in [13], machine-learning methods such as Random Forest (RF), Support Vector Machine (SVM), and CNN achieved ~85–99% accuracy in plant disease classification applications, and UAV imagery was also used to classify crops and weeds. A wide range of studies have focused on CNN-based weed classification. For example, accuracy above 85% was reported in [14], and a CNN model for real-time semantic segmentation improved performance by up to ~13% when leveraging background knowledge [6]. The authors of [15] introduced the “DeepWeeds” dataset, which contains 17,509 images from Australia labeled into nine classes (eight weed species and a negative class). They trained two deep learning models: Inception-v3, which achieved a classification accuracy of 95.1%, and ResNet-50, which achieved 95.7%. Other studies [15,16] reviewed recent research on drone-based weed detection using CNNs and computer vision techniques.

In 2024, researchers developed artificial intelligence techniques that distinguish weeds more accurately from imaging data; studies showed that advanced image analysis algorithms classified weeds more accurately and at lower cost than traditional methods. The researchers in [17] used a ViT model to classify plant diseases. They showed that combining ViT with a CNN architecture maintained inference speed without sacrificing accuracy, which ranged between 96% and 98%. The researchers in [18] applied ViT to Unmanned Aerial Vehicle (UAV) images to classify crops such as beetroot, parsley, and spinach; the model outperformed state-of-the-art CNN models while using a relatively small dataset. Studies [11,12] applied vision transformers to weed and crop classification. They exploited the self-attention mechanism of transformers to process complex spatial and spectral patterns in agricultural fields. Their results showed a clear improvement in accuracy over traditional CNNs, with some models achieving 94% accuracy, but they faced challenges related to the need for labeled training data.

The studies in [16,19] comprehensively reviewed the integration of image processing techniques with intelligent systems, focusing on the stages of image processing and enhancement, feature extraction, and classification for pest detection, and on increasing the accuracy of image processing techniques used in agricultural applications. The challenges they identified included variable lighting, complex backgrounds, overlap between weeds and target plants, and the impact of environmental changes on model accuracy. The study in [17] also surveyed deep learning across agricultural applications and found that plant stress monitoring and classification using CNNs, ViT, transfer learning, and few-shot learning (FSL) techniques achieved significant improvements in accuracy. The work in [8] presented a ViT model that splits each image into patches and applies a self-attention mechanism instead of convolutional layers. This model outperformed traditional networks, reaching an accuracy of 88.55% using ViT-B/16.
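The idea of processing an image as a sequence of patches with self-attention can be sketched in a few lines. The following is a simplified, pure-Python illustration and not the implementation from [8]: it assumes a 224 × 224 RGB input (a common choice, not stated above), uses the 16 × 16 patch size implied by the "/16" in ViT-B/16, and omits the learned projections, position embeddings, and multiple heads of a real ViT. The function names are hypothetical.

```python
import math


def split_into_patches(image, patch_size):
    """Split an H x W x C nested-list image into flattened,
    non-overlapping patch_size x patch_size patches (row-major order)."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = []
            for row in image[top:top + patch_size]:
                for pixel in row[left:left + patch_size]:
                    patch.extend(pixel)  # append the channel values
            patches.append(patch)
    return patches


def self_attention(tokens):
    """Single-head scaled dot-product self-attention with Q = K = V = tokens.

    Simplification: a real ViT first applies learned linear projections.
    Every output token is a softmax-weighted mix of all input tokens,
    which is how global relationships across the image are captured.
    """
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        peak = max(scores)  # subtract the maximum for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        attn = [e / total for e in exps]
        outputs.append([sum(a * value[d] for a, value in zip(attn, tokens))
                        for d in range(dim)])
    return outputs


# Assumed input size: 224 x 224 RGB, filled with zeros for illustration.
image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
patches = split_into_patches(image, patch_size=16)
print(len(patches), len(patches[0]))  # 196 patches, 768 values each

# Attention on two tiny toy tokens to keep the example fast.
attended = self_attention([[1.0, 0.0], [0.0, 1.0]])
print(attended)
```

With these assumptions the image becomes (224 / 16)² = 196 tokens of 16 × 16 × 3 = 768 values, and each attention output mixes information from every patch rather than from a local neighborhood as in a convolution.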

Researchers have also integrated image pre-processing techniques with deep learning to improve performance. For example, [20] used segmentation techniques to improve crop and weed separation, which helped reduce classification errors by up to 15%. Several challenges are common to these studies: environmental changes, as most studies have confirmed that lighting, shade, and soil type greatly affect image quality and classification accuracy; a lack of diverse data, since the need for training data covering different environmental conditions and patterns is a recurring problem that increases the risk of model bias; and implementation costs, as advanced devices such as drones or multispectral cameras are expensive, which limits the application of these technologies in some regions [21]. The researchers in [22] reviewed the advanced capabilities of agricultural robots and reported that integrating vision and sensing systems with artificial intelligence improved classification accuracy to between 85% and 95%. The author of [23] discussed remote sensing techniques, satellite data, and drones, and confirmed that vegetation indices such as the Normalized Difference Vegetation Index (NDVI) and the Enhanced Vegetation Index (EVI) are widely used in productivity estimation and early disease detection, with prediction accuracy exceeding 90% in some studies. The authors in [18] combined explainable AI techniques with deep learning to improve visual detection of wheat diseases. Two other studies [17,24] applied Vision Transformer models to audio signals converted into spectrograms, exploiting the flexibility of these models in handling different data types and the possibility of pairing them with interpretation techniques to understand the model’s decisions. Transformer models are therefore effective not only in traditional computer vision but also in other classification tasks.
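For readers unfamiliar with the two vegetation indices mentioned above, the standard definitions can be written as a short sketch. NDVI is (NIR − Red) / (NIR + Red), and EVI is G · (NIR − Red) / (NIR + C1 · Red − C2 · Blue + L). The coefficient values G = 2.5, C1 = 6, C2 = 7.5, and L = 1 are the commonly used MODIS defaults and are an assumption here, since [23] may use different settings. The reflectance values in the example are hypothetical.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance."""
    denom = nir + red
    if denom == 0:
        return 0.0  # convention chosen for this sketch to avoid division by zero
    return (nir - red) / denom


def evi(nir, red, blue, gain=2.5, c1=6.0, c2=7.5, canopy=1.0):
    """Enhanced Vegetation Index with the commonly used MODIS coefficients.

    gain is G, c1 and c2 weight the aerosol correction terms, and canopy
    is the background adjustment L.
    """
    return gain * (nir - red) / (nir + c1 * red - c2 * blue + canopy)


# Hypothetical surface reflectances for a vegetated pixel.
nir, red, blue = 0.5, 0.1, 0.05
print(round(ndvi(nir, red), 4), round(evi(nir, red, blue), 4))
```

Both indices rise with the contrast between strong near-infrared reflectance and strong red absorption in healthy vegetation, which is why they are used for productivity estimation and early stress detection.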

The studies in [22,23,24] discussed hybrid models that combine CNNs and transformers to improve performance while maintaining efficiency. They highlighted that combining the feature extraction capabilities of convolutional networks with the attention mechanisms of transformers improved weed detection accuracy by 7% to 10% compared with using CNNs alone. The results were positive, but these models suffered from additional structural complexity and increased training time. The study in [25] developed a hybrid multi-class leaf disease identification model combining a Variational Autoencoder (VAE) and ViT and achieved an accuracy of 93.2%.

Overall, previous studies have made significant contributions to improving weed classification accuracy but have demonstrated the need for innovative solutions to overcome these challenges.

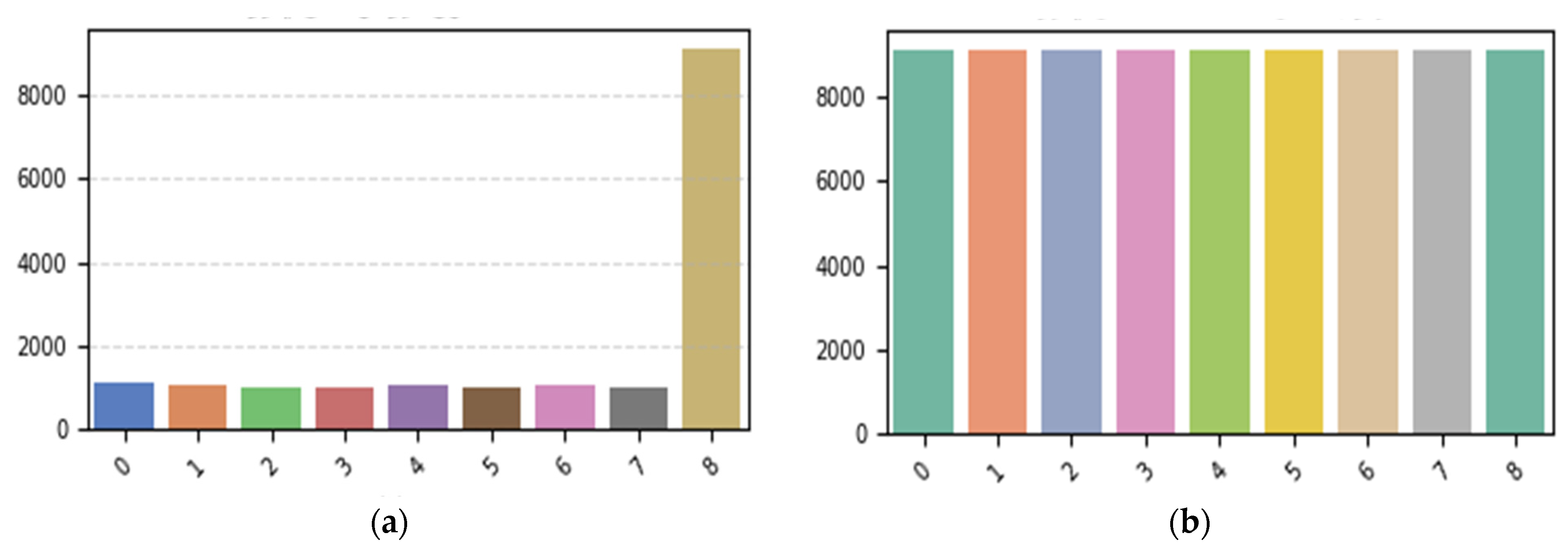

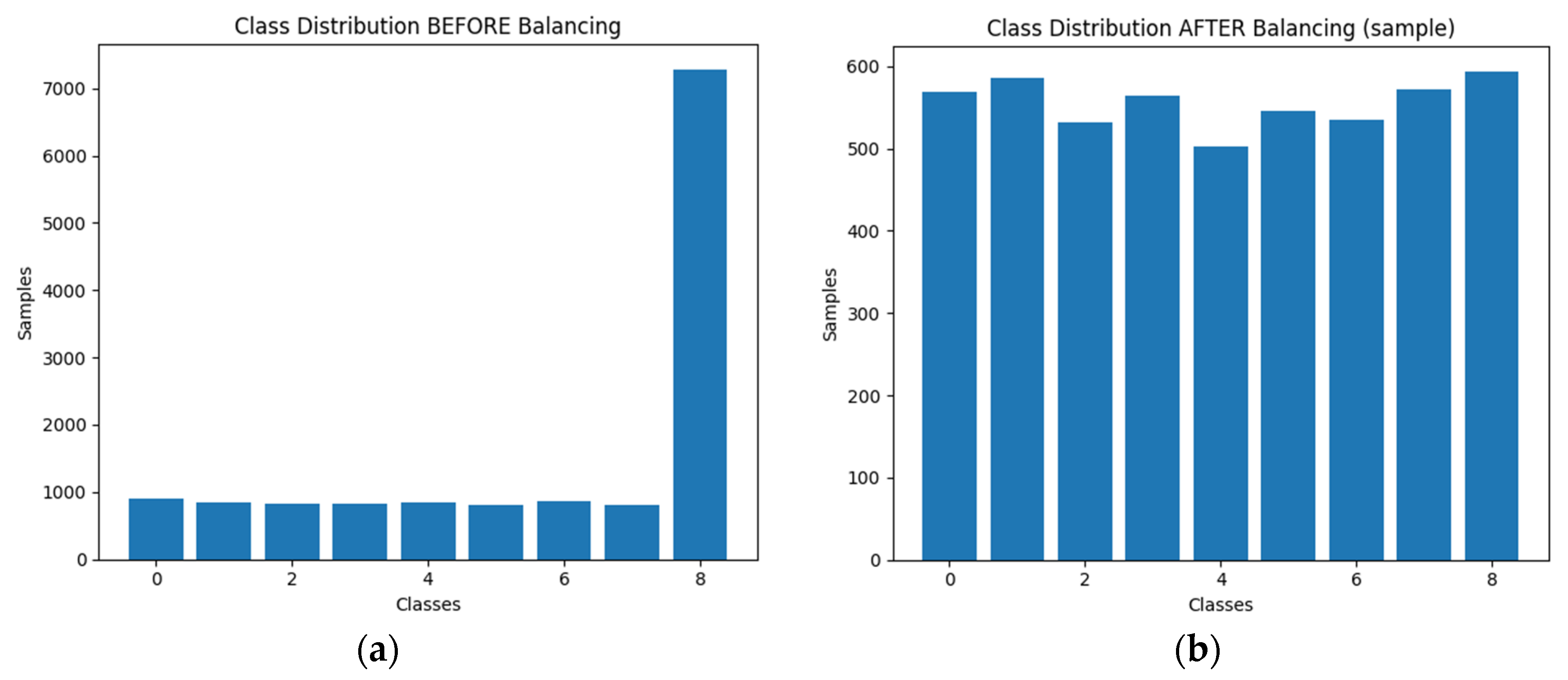

Although previous studies have made significant progress in applying deep learning to weed classification, several challenges and gaps remain. First, the environmental diversity of the data is limited: most studies have relied on environmentally limited datasets, which reduces the ability of models to generalize under variable conditions (e.g., light, shade, and different soil types). Second, training relies heavily on large datasets: the models require large amounts of high-quality data, which poses a challenge when data is limited. Third, computational costs are high: modern models such as transformers provide outstanding performance but depend on substantial computational resources, which reduces their usefulness in field applications. Finally, real-world validation is lacking, as much research has focused on laboratory or simulated environments and has not been adequately tested under real agricultural field conditions.

This study aims to address some of these gaps by developing an advanced transformer-based classification model, with targeted improvements for imbalanced classes obtained by comparing data augmentation techniques with class weighting. It introduces a framework that addresses data imbalance and relies on fewer computing resources without sacrificing accuracy. The models are also tested on imagery from real-world environments with varying environmental conditions, which allows their generalization to be assessed and provides a practical, realistic evaluation of their efficiency.

Thus, the study seeks to present a new model that balances high performance with practical applicability, which contributes to supporting smart agriculture and reducing reliance on traditional methods of weed control. The remainder of this paper is organized as follows: Section 3 examines the proposed methodology in detail; Section 4 presents the experiments, results, and discussion of the findings; and Section 5 concludes the paper by reviewing the main conclusions and providing recommendations for possible future work.

5. Conclusions and Future Works

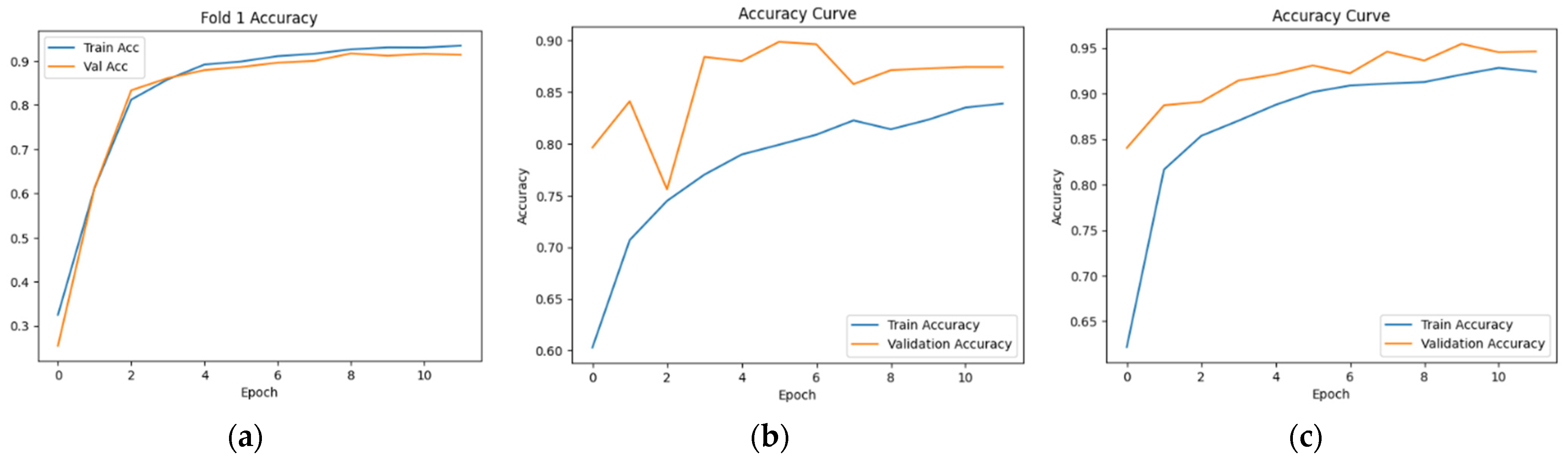

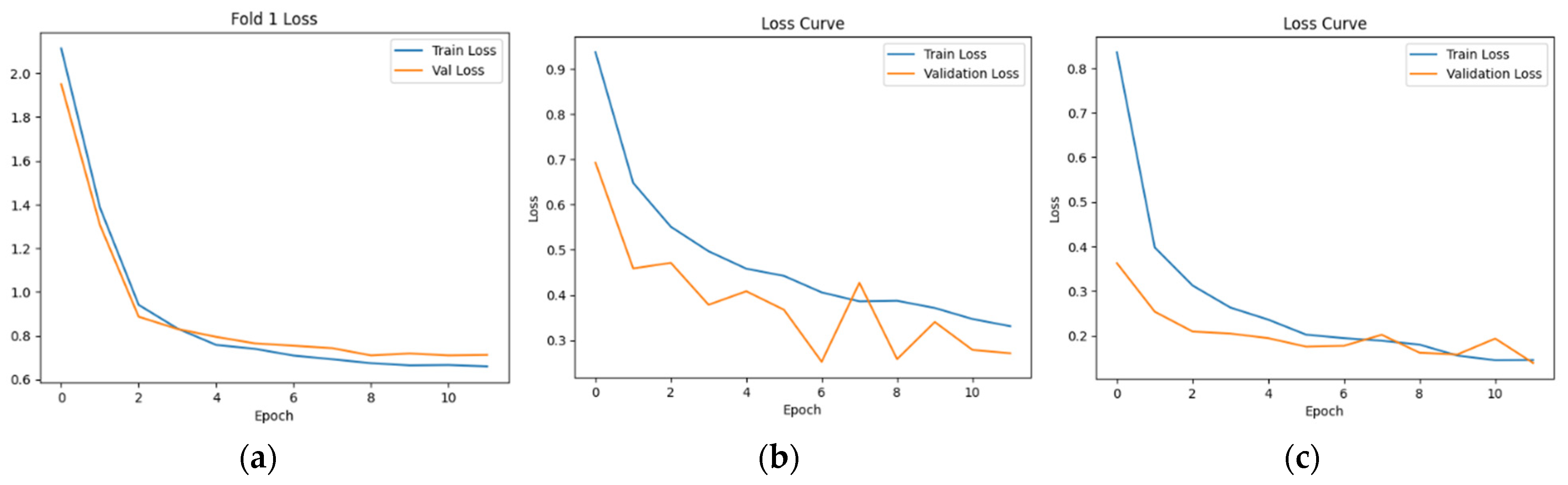

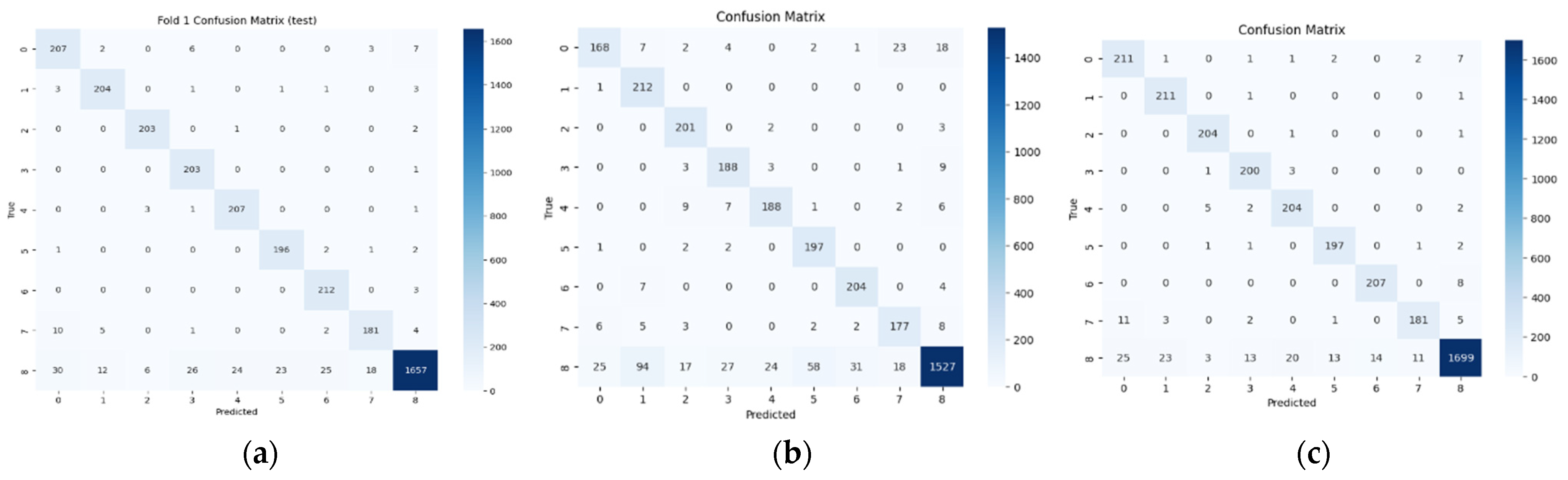

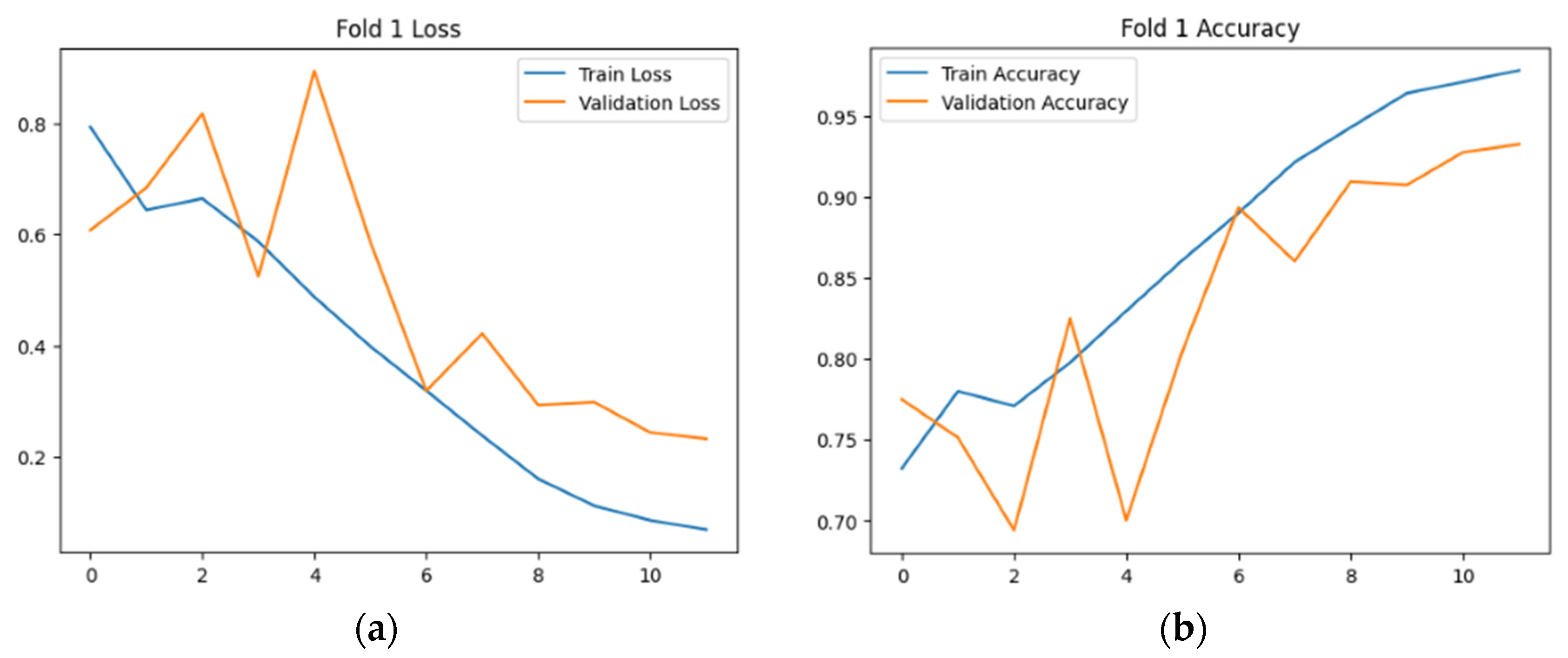

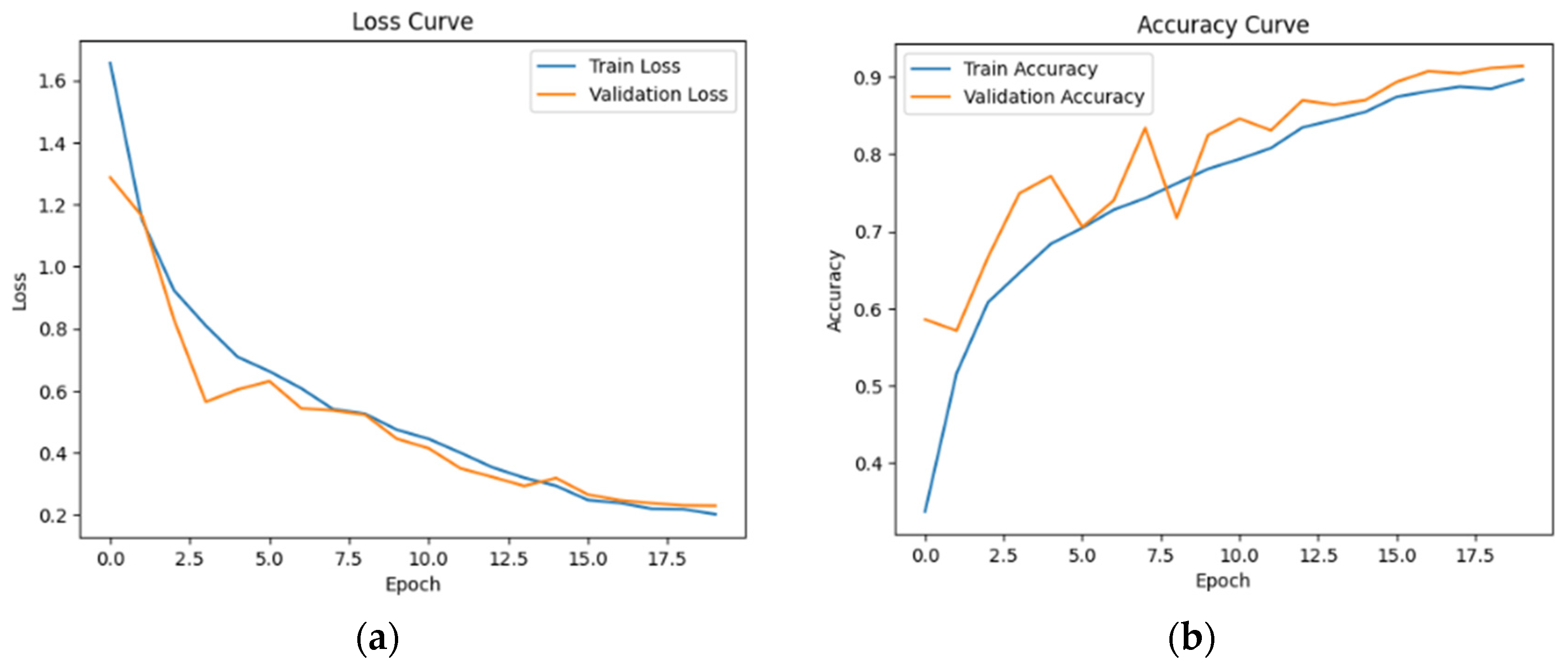

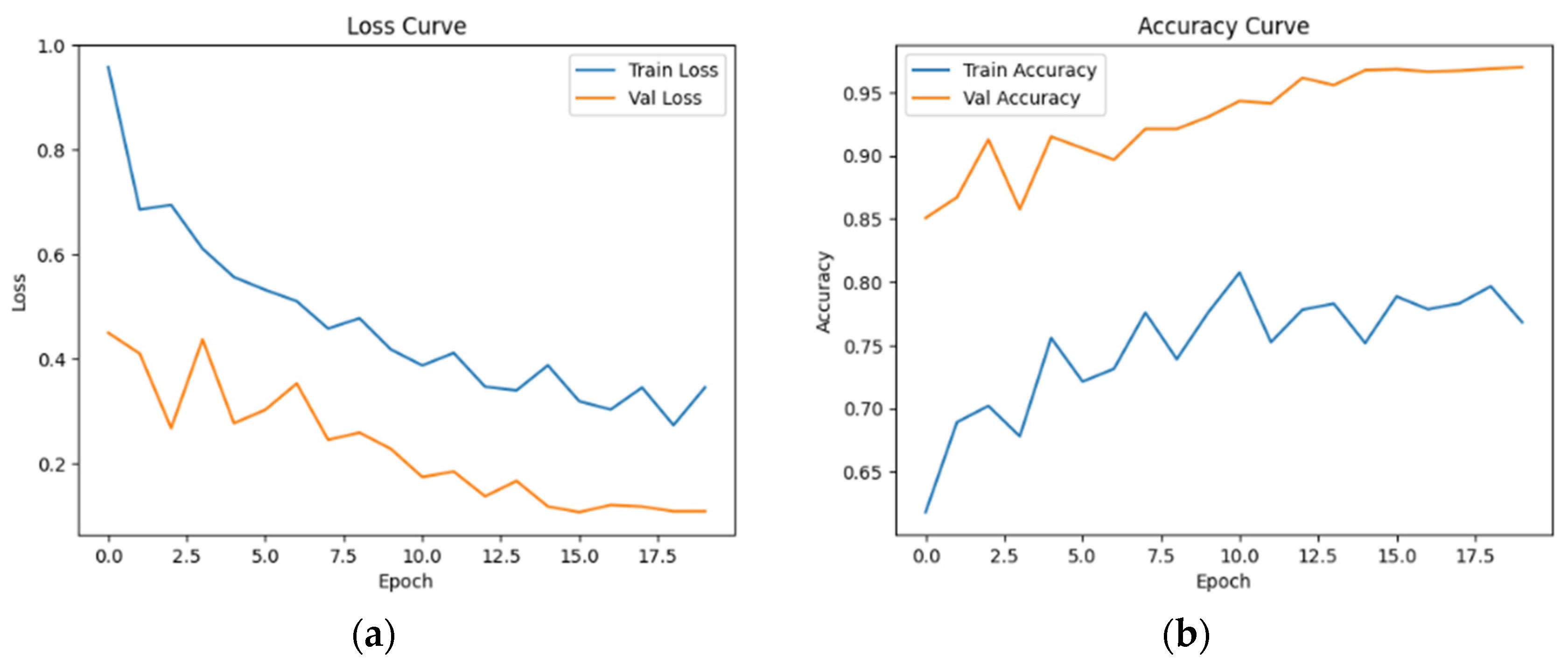

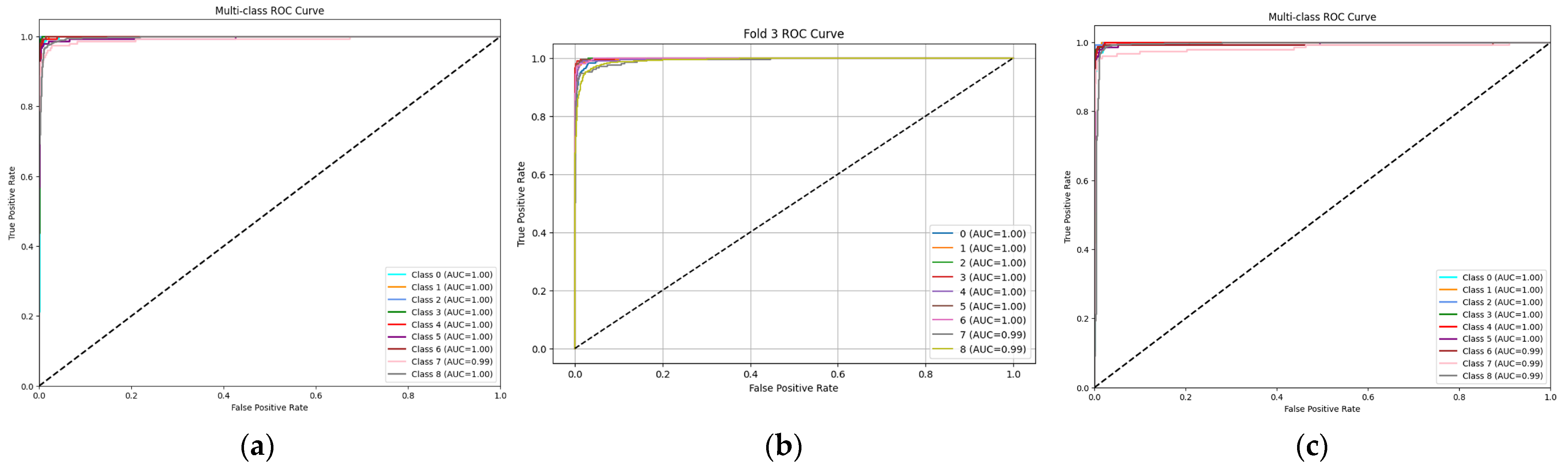

From an implementation perspective, inference speed, VRAM footprint, and model size should guide selection for edge platforms (e.g., UAVs/robots), where lighter CNNs or distilled/quantized ViTs can provide favorable trade-offs. This study addressed four aims: comparing ViT with competitive CNNs on DeepWeeds, analyzing data-imbalance strategies, providing initial interpretability via attention-based saliency inspection, and assessing consistency under location shift and capture perturbations using the dataset’s multi-site imagery. A framework was presented to address the data imbalance problem, within which the ViT model showed outstanding performance in classifying weeds on the DeepWeeds dataset. Using class weighting and strong augmentation, it achieved 96.9% accuracy and superior generalization ability, outperforming the other models. These results can be used in smart farming applications to reduce weed damage and improve land management efficiency. The study also demonstrated the impact of class imbalance on both CNN and ViT models and the sensitivity of traditional oversampling to overfitting, showing that class weighting with strong augmentation is an effective alternative that achieves high accuracy.

In the future, the proposed model could be applied in precision agriculture to identify weeds in agricultural land effectively. It could also be integrated with AI-enabled platforms such as agricultural robots or unmanned aerial vehicles (UAVs) to increase the efficiency of weed control operations, and it could be enhanced with techniques that expand the data or improve its distribution. Furthermore, techniques such as more advanced neural networks, transfer learning, or hybrid models could be used to further improve results. Complementary techniques, such as the 8-bit integer quantization used in the QWID model [45], can also be explored to reduce model size and inference time, which could reduce resource usage by up to 50% compared to traditional models. Such compression may slightly reduce classification accuracy, and this effect must be carefully evaluated to ensure that performance is not significantly affected. Future work could also explore smaller models or modified deep learning techniques that run faster and more efficiently, as well as Reinforcement Learning techniques to improve the model’s performance in unstable environments. This study opens up important prospects for the development of hybrid models that combine the efficiency of convolutional models with the self-attention of transformers, and for exploring self-supervised learning techniques to reduce the need for labeled data.
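The trade-off between model size and accuracy under 8-bit integer representation can be illustrated with a generic sketch. The code below is not the QWID method from [45]; it assumes simple symmetric per-tensor quantization, in which each weight is mapped to an integer in [−127, 127] through a single scale factor. The function names and the example weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127].

    Returns the integer codes and the scale needed to map them back.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


# Hypothetical weights from a single layer.
layer_weights = [0.42, -1.27, 0.0, 0.64]

codes, scale = quantize_int8(layer_weights)
restored = dequantize(codes, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(layer_weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error of at most half the scale per weight. This rounding error is the source of the slight accuracy loss that must be evaluated.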