Abstract

Continuous observation and management of agriculture are essential to estimate crop yield and crop failure. Remote sensing is a cost-effective and efficient solution for monitoring agriculture on a large scale. With high-resolution satellite datasets, the monitoring and mapping of agricultural land are easier and more effective. The applicability of deep learning is increasing in numerous scientific domains due to the availability of high-end computing facilities. In this study, deep learning (U-Net) has been implemented in the mapping of different agricultural land use types over a part of Punjab, India, using Sentinel-2 data. For comparative analysis, a well-known machine learning classifier, random forest (RF), has been tested. To assess the agricultural land, the major winter season crop types, i.e., wheat, berseem, mustard, and other vegetation, have been considered. In the experimental outcomes, the U-Net deep learning and RF classifiers achieved overall accuracies of 97.8% (kappa value: 0.9691) and 96.2% (kappa value: 0.9469), respectively. Since little information exists on the vegetation cultivated by smallholders in the region, this study is particularly helpful in the assessment of the mustard (Brassica nigra) and berseem (Trifolium alexandrinum) acreage in the region. Deep learning on remote sensing data allows the object-level detection of features in imagery of the earth’s surface.

1. Introduction

On agricultural land, several factors affect the mapping of smallholder farm plots, such as the variety of land management techniques, the scale of plots, vegetation variation, and the lack of accurate field boundaries in most land management systems [1,2]. Thus, mapping smallholder farms, their operation, and the spatial distribution of vegetation areas remains a key component of monitoring [3,4,5]. In India, small farmers account for approximately 86% of operational landholdings [6,7]; they contribute significantly to the gross domestic product (GDP), as well as to achieving the sustainable development goals (SDG) [8,9,10]. Therefore, it is necessary to monitor agricultural land on a regular basis, as crop insurance and the estimation of crop yields, acreages, and crop types at the block/tehsil level are integral components of agriculture planning [11,12]. However, there are many challenges involved in conducting field surveys, such as cost constraints, weather conditions, and topography of the land [13,14].

Currently, remote sensing via space-borne satellite sensors plays an active role in decision-making support by delivering accurate data at suitable temporal and spatial resolutions [15,16]. In earlier studies, it was difficult to distinguish smaller plots in low- or medium-resolution images [16,17,18,19]. However, with the integration of deep learning and moderate-resolution datasets, information can be extracted in a more accurate and detailed way. To classify or categorize the vegetation types, numerous remote sensing datasets, e.g., Sentinel-1 (microwave-based), Sentinel-2 (optical-based) [20], Scatterometer [21,22,23], Landsat-8 [24], and many more, have been utilized to provide cloud-free information [25,26]. Among the various satellite datasets, Sentinel-2 attracts the interest of many researchers due to its finer spatial resolution, free availability of datasets, and applicability in vegetation type/area classification and yield forecasting [27,28,29].

The processing of satellite datasets with advanced models, i.e., object-based classification or deep learning, allows the estimation of yield data for highly commercial vegetation types, such as wheat, berseem, and mustard [30]. Previous literature has shown the effectiveness of different machine learning or deep learning algorithms, such as autoencoder (AE), stacked AE (SAE), convolutional neural network (CNN), recurrent CNN (R-CNN), fully convolutional network (FCN), recurrent NN (RNN), graph NN (GNN), and restricted Boltzmann machine (RBM) [30,31,32,33]. Table 1 summarizes the applicability of different satellite datasets to classification algorithms [33]. In addition, various authors have investigated the effectiveness of using deep learning with different satellite datasets, such as hyperspectral [34], Synthetic Aperture Radar (SAR) [35], Polarimetric SAR (PolSAR) data [36,37,38,39], and many more [33].

Table 1.

A brief summary of different classification algorithms.

Deep learning relies on a trained model, which reduces user intervention and makes the process semiautonomous or autonomous [51,52]. On the other hand, deep learning models require more computational power than conventional machine learning algorithms [21,53,54]. Furthermore, the extraction of different land-use types from a medium-resolution satellite dataset is a challenging task in deep learning. This may be due to atmospheric/radiometric influences on the medium-resolution satellite dataset. Thus, analysis of the impact of deep learning in the identification of land-use types from medium-resolution satellite datasets is essential.

In this study, deep learning (U-Net) has been implemented to map the different agricultural land use types using Sentinel-2. The objectives of the study were: (a) implementation of U-Net-based deep learning over a region of Punjab, India; (b) detection of major agricultural land use types, i.e., wheat, berseem, mustard, other vegetation, water, and buildup; (c) validation and comparative analysis with an RF classifier. This area was selected because it is an agriculture-intensive zone, where vegetation lands have been divided into small holdings [55]. In addition, it contains a healthy mix of land use types, with significant areas under both edible vegetation, most of which is wheat, and cash crops, which are mostly mustard. The area also supports several large species of fish, as former fields have been converted into aquaculture ponds.

2. Study Area and Dataset

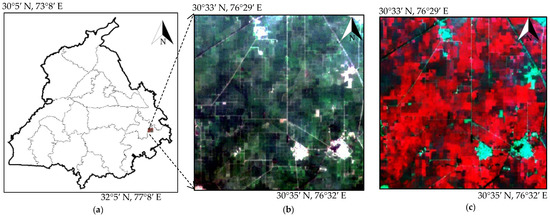

The region-of-interest (ROI) lies in Fatehgarh Sahib, Punjab, India, at geographic coordinates of 30°33′ N to 30°35′ N and 76°29′ E to 76°32′ E, with a coverage of 443 hectares, as shown in Figure 1. The study area includes various class categories, such as wheat, berseem, mustard, other vegetation (crops that cannot be separated in medium-resolution imagery because of very small land holdings), water, and buildup. Punjab is an important food grain state of India that contributes the greatest portion of the wheat and rice stock. The Sentinel-2 image of the ROI was acquired during the winter season on a cloud-free day, i.e., 19 February 2018 (https://earthexplorer.usgs.gov/, accessed date: 15 September 2022). During this period, the different crops, i.e., wheat, berseem, and mustard, are in the budding/flowering stage and the atmosphere is nearly clear, which minimizes the impact of clouds on the acquired imagery. The spatial resolution of the Sentinel-2 data varies from 10 m to 60 m across thirteen spectral bands (0.443–2.190 µm). Moreover, a high-resolution dataset from the Pléiades constellation has been utilized for accuracy assessment and training purposes.

Figure 1.

Location of the study: (a) Punjab state map (India), (b) Sentinel-2 satellite data from 19 February 2018 in Red-Green-Blue 4-3-2 color bands (for the natural color), (c) Color infrared imagery from 19 February 2018 in Red-Green-Blue 8-4-3 color bands (color infrared highlighting the unhealthy and healthy vegetation).
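The band combinations named in Figure 1 and the 10 m to 60 m resolution range can be made concrete with a small lookup table. The sketch below is illustrative only: the central wavelengths and resolutions are the commonly quoted nominal Sentinel-2 values (rounded), and the dictionary and function names are hypothetical rather than part of any processing software used in the study.

```python
# Nominal Sentinel-2 MSI bands: (central wavelength in micrometres, resolution in metres).
# Rounded, commonly quoted values; listed here as an assumption for illustration.
SENTINEL2_BANDS = {
    "B1": (0.443, 60), "B2": (0.490, 10), "B3": (0.560, 10), "B4": (0.665, 10),
    "B5": (0.705, 20), "B6": (0.740, 20), "B7": (0.783, 20), "B8": (0.842, 10),
    "B8A": (0.865, 20), "B9": (0.945, 60), "B10": (1.375, 60),
    "B11": (1.610, 20), "B12": (2.190, 20),
}

# Composites from Figure 1, in red-green-blue display order.
COMPOSITES = {
    "natural_color": ("B4", "B3", "B2"),
    "color_infrared": ("B8", "B4", "B3"),
}


def composite_resolution(name):
    """Return the coarsest resolution (m) among the bands of a composite."""
    return max(SENTINEL2_BANDS[band][1] for band in COMPOSITES[name])


def bands_at(resolution_m):
    """List the band names delivered at a given resolution."""
    return sorted(b for b, (_, res) in SENTINEL2_BANDS.items() if res == resolution_m)


if __name__ == "__main__":
    for name in COMPOSITES:
        print(name, COMPOSITES[name], composite_resolution(name), "m")
    print("10 m bands:", bands_at(10))
```

Both composites use only 10 m bands, so they can be displayed at the finest Sentinel-2 resolution without resampling.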

3. Methodology

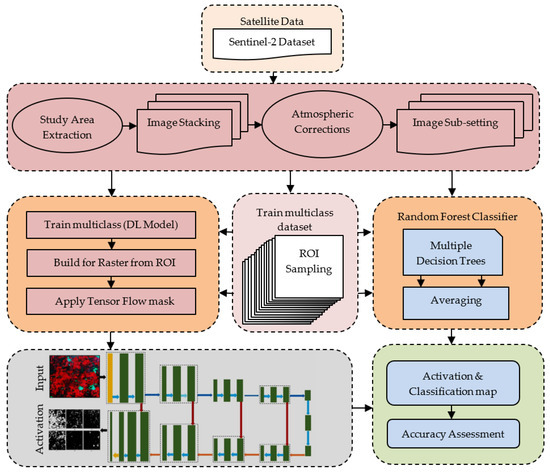

As shown in Figure 2, the proposed methodology involves the acquisition of Sentinel-2 satellite data of the ROI, preprocessing of the data to correct atmospheric and radiometric errors, implementation of the deep learning-based U-Net and the machine learning-based RF classifier to detect the different land use types in the ROI, and validation or accuracy assessment procedures. This framework addresses the difficulty of detecting agricultural land in small holdings (the average size of the landholdings in India was 1.08 hectares as per the Agriculture Census) with the help of deep learning-based U-Net [22,56]. It requires training the model using input label rasters that indicate known feature samples [36,57].

Figure 2.

An operational model for the mapping of agricultural land using ENVINet5-based deep learning.

3.1. Preprocessing of the Input Data

The Sentinel-2 data needs to be preprocessed to overcome atmospheric and radiometric errors. The Sen2Cor v2.9 module (for Sentinel-2 Level 2A, Sentinel application platform (SNAP) version) has been utilized to perform the atmospheric and radiometric corrections. In addition, it effectively corrects sun angle variations, daytime haze effects, and smaller haze effects, but it does not remove clouds. Hence, cloud-free images are recommended when processing the Sentinel-2 data via Sen2Cor. Once the data is preprocessed, deep learning or machine learning can be implemented to generate classified maps.

3.2. Deep Learning and Machine Learning

As an essential part of machine learning, deep learning models work on the principle of neural networks to learn and recognize data [58]. Deep learning approaches can be categorized as (a) supervised, (b) unsupervised, and (c) transfer-based [8,59,60]. A supervised learning process associates a target subject with a class affiliation explicitly; in an unsupervised learning process, data structures are used to associate class affiliation with the target concept [15]. In the third category, information is shared across different activities in a coordinated manner to increase efficiency [17]. The object-level detection accuracy of the model generally depends on the depth of the neural network, as in CNN and GNN [31,52]. The U-Net, which evolved from the CNN, has been utilized in many other applications, such as glacier research [58], biomedical imaging [61], sea-ice or ice shelf mapping [57], land boundaries [56], and big data remote sensing for next-generation mapping [62].

The U-Net, a supervised semantic segmentation network, has been implemented in the present work to map agricultural land use types [61]. To implement the U-Net, the deep learning module (Ver. 1.1) of ENVI v5.6 has been utilized. The architecture is encoder-decoder and mask-based, and it classifies the satellite data into different land use types. To perform the classification, the open-source TensorFlow library is utilized as the main part of the deep learning procedure. TensorFlow provides flexibility, portability, and optimization of performance [63]. As shown in Figure 2, there are two major parts of the U-Net architecture, i.e., downscaling (used to increase the robustness with respect to the distortion in the imagery) and upscaling (used for restoration and decoding of the object features with respect to the input imagery).
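To illustrate how the downscaling and upscaling parts fit together, the following sketch traces the spatial size and channel count of feature maps through a generic U-Net. It is not ENVI's implementation: the patch size, number of base filters, and depth are hypothetical defaults, and the sketch assumes padded convolutions, 2x2 pooling on the way down, and 2x upsampling with skip-connection concatenation on the way up.

```python
def trace_unet_shapes(patch_size=256, base_filters=32, depth=4):
    """Trace (stage, height/width, channels) through a generic U-Net.

    All three parameters are illustrative assumptions, not ENVI settings.
    """
    if patch_size % (2 ** depth) != 0:
        raise ValueError("patch_size must be divisible by 2**depth")

    stages = []
    skips = []
    size, channels = patch_size, base_filters

    # Downscaling (encoder): halve the spatial size, double the channels.
    for level in range(depth):
        stages.append((f"down{level}", size, channels))
        skips.append((size, channels))  # kept for the skip connection
        size //= 2
        channels *= 2

    stages.append(("bottleneck", size, channels))

    # Upscaling (decoder): double the spatial size, concatenate the matching
    # encoder features, then convolve back to the encoder's channel count.
    for level in reversed(range(depth)):
        size *= 2
        _, channels = skips[level]
        stages.append((f"up{level}", size, channels))

    return stages


if __name__ == "__main__":
    for stage, size, channels in trace_unet_shapes():
        print(f"{stage:>10}: {size} x {size} x {channels}")
```

The last decoder stage has the same spatial size as the input patch, which is what lets the network assign a class score to every pixel.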

In ENVI’s deep learning model, there are two options for classifying input data, i.e., ENVINet5 (single class classification) and ENVI Net-Multi (multiple classes categorization) [64]. To train the TensorFlow model, multiple samples have been selected for each class category, i.e., wheat, berseem, mustard, other vegetation, water, and buildup. In the training process, the TensorFlow model converts the spatial/spectral information in the input imagery into a class activation or thematic map. Once the model is trained, the georeferenced activation and classified maps are generated. The activation maps are the fractional maps generated for each class category, and they help improve the classified maps through the selection of manual or automatic threshold methods [6]. For the comparative analysis, a machine learning-based RF classifier has also been implemented. The RF classifier, a random decision forest, constructs multiple decision trees at training time and combines their outputs to classify the datasets.
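The idea behind RF, many trees trained on bootstrap samples and combined by majority vote, can be sketched in a few lines. The example below is a deliberately simplified illustration and not the classifier used in the study: each "tree" is a single-split stump rather than a full decision tree, and the reflectance values, feature order (NIR, SWIR), and function names are invented for demonstration.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def fit_stump(X, y, feature_ids):
    """Find the single (feature, threshold) split with the lowest weighted Gini."""
    best = None
    for f in feature_ids:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t,
                        Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:  # no valid split: always predict the majority class
        majority = Counter(y).most_common(1)[0][0]
        return (0, float("inf"), majority, majority)
    return best[1:]


def fit_forest(X, y, n_trees=25, seed=0):
    """Train stumps on bootstrap samples with a random feature subset each."""
    rng = random.Random(seed)
    n, n_features = len(X), len(X[0])
    k = max(1, int(n_features ** 0.5))
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        feats = rng.sample(range(n_features), k)
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict(forest, row):
    """Majority vote over all stumps."""
    votes = Counter(left if row[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Invented (NIR, SWIR) reflectances for two of the study's classes.
X = [(0.02, 0.01), (0.03, 0.02), (0.04, 0.02), (0.05, 0.03),
     (0.40, 0.15), (0.44, 0.17), (0.47, 0.18), (0.50, 0.20)]
y = ["water"] * 4 + ["wheat"] * 4

if __name__ == "__main__":
    forest = fit_forest(X, y)
    print(predict(forest, (0.01, 0.005)), predict(forest, (0.55, 0.25)))
```

A production workflow would normally rely on an established library implementation with full trees; the stump version is used here only to keep the bagging and voting steps visible.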

4. Results and Discussion

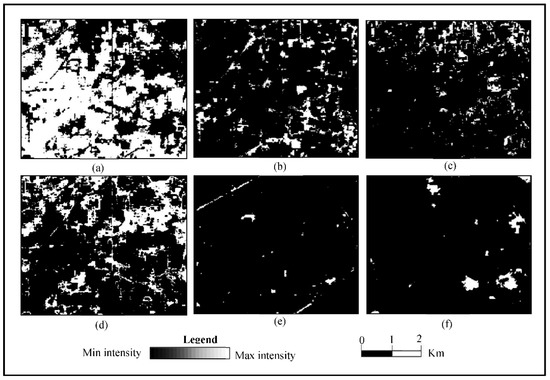

Initially, the Sentinel-2 data was acquired and preprocessed through the Sen2Cor v2.9 module to generate the reflectance imagery, as shown in Figure 1 [65,66]. To implement the deep learning classifier, the model needs to be trained for different class categories. A total of 30–35 polygons distributed throughout the image were used as training inputs for classifying rabi season crops with the deep learning and random forest classifiers [20,67]. Each polygon segment contained 100–150 pixels during the training process [7,68,69]. Afterwards, the activation (fractional) maps (i.e., wheat, berseem, mustard, other vegetation, water, and buildup area) were generated via ENVI’s U-Net-based deep learning, as shown in Figure 3. The intensity level of the activation maps varies from the maximum (represented by pure white) to the minimum (represented by pure black). Activation maps allow the reproduction of classified maps with improved accuracy while dealing with medium-resolution data. Furthermore, different threshold methods could also be tested to improve the accuracy of the classified maps in ENVI’s U-Net-based deep learning. Reference [6] highlighted the significance of activation maps in the detection of seasonal agricultural variations.

Figure 3.

Activation maps generated from deep learning classification for individual class category: (a) Wheat; (b) Berseem; (c) Mustard; (d) Other vegetation; (e) Water; and (f) Buildup area.

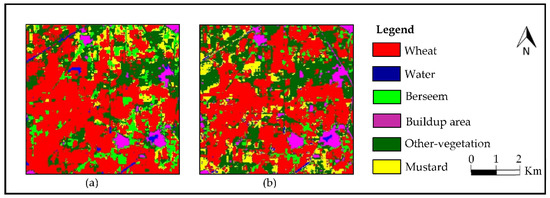

Figure 4 presents the classified outputs generated from U-Net-based deep learning and RF-based machine learning for visual interpretation. To perform the statistical analysis, several parameters have been computed, i.e., producer’s accuracy (PA), user’s accuracy (UA), overall accuracy (OA), kappa coefficient (k), reference total (RT), classified total (CT), and number correct (NC). Table 2 presents the accuracy assessment computed from U-Net-based deep learning and RF-based machine learning. It was observed that U-Net-based deep learning performed better (OA = 97.8% and k = 0.9691) than RF-based machine learning (OA = 96.2% and k = 0.9469).

Figure 4.

Classified output of (a) U-Net-based deep learning, (b) RF-based machine learning.

Table 2.

Accuracy assessment computed from U-Net and RF classification algorithms.
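As a reminder of how these quantities relate, the sketch below computes PA, UA, OA, and the kappa coefficient from a confusion matrix using the standard definitions. The two-class matrix and its counts are invented for illustration and are not the values of Table 2; the function name is hypothetical.

```python
def accuracy_report(matrix, class_names):
    """Compute OA, kappa, and per-class PA/UA from a confusion matrix.

    matrix[i][j] = number of samples classified as class i whose
    reference (ground-truth) class is j.
    """
    n = len(class_names)
    total = sum(sum(row) for row in matrix)
    correct = [matrix[i][i] for i in range(n)]                  # NC per class
    classified_total = [sum(matrix[i]) for i in range(n)]      # CT (row sums)
    reference_total = [sum(matrix[i][j] for i in range(n))     # RT (column sums)
                       for j in range(n)]

    overall = sum(correct) / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(c * r for c, r in zip(classified_total, reference_total)) / total ** 2
    kappa = (overall - expected) / (1 - expected)

    per_class = {
        name: {
            "PA": correct[k] / reference_total[k],   # 1 - omission error
            "UA": correct[k] / classified_total[k],  # 1 - commission error
        }
        for k, name in enumerate(class_names)
    }
    return overall, kappa, per_class


if __name__ == "__main__":
    # Invented counts for two classes, for demonstration only.
    toy = [[45, 5],
           [5, 45]]
    oa, kappa, per_class = accuracy_report(toy, ["wheat", "mustard"])
    print(f"OA = {oa:.3f}, kappa = {kappa:.3f}")
    print(per_class)
```

Because kappa discounts chance agreement, it is always lower than OA unless the classification is perfect, which is why both are reported together. The sketch assumes every class appears at least once in both the reference and the classified data; otherwise the per-class ratios are undefined.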

In a previous study [6], the authors assessed ENVI’s U-Net architecture in the detection of seasonal variation with limited class categories. As an objective of the present work, the performance of ENVI’s U-Net architecture was evaluated over agricultural land in the mapping of different vegetation class categories, i.e., wheat, berseem, mustard, and other vegetation, using medium-resolution Sentinel-2 satellite imagery. The results indicate that ENVI’s U-Net architecture has the potential to extract different vegetation class categories. Compared to many other versions of the U-Net model architecture, ENVI’s U-Net is easy to implement without any explicit programming [56,58]. It also includes a greater number of features for different applications, such as boundary mapping, threshold algorithms, and an easy user interface [64].

As ENVI’s U-Net (deep learning v1.1, ENVI v5.6) is in the initial stage of development, the major challenges involved in its use included the lack of full customization of various model parameters, the inability to classify the entire input raster when the minimum parameter values were selected, and a lack of accuracy in object-level detection. However, the accuracy of classification could be improved by applying the in-built manual or automatic threshold algorithms (e.g., Otsu, empirical, etc.) on the activation maps in ENVI’s U-Net. This procedure allows the regeneration of thematic maps without the re-computation of the entire deep learning model. Furthermore, the outcomes of ENVI’s U-Net could also be improved through more customization and integration with other models. As far as the cloud problem is concerned [70], microwave data can be utilized or fused with optical datasets to obtain accurate outcomes.
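The automatic thresholding step can be illustrated with a plain implementation of Otsu's method applied to one class's activation values. This is a generic sketch of the algorithm, not ENVI's in-built routine: it assumes activation values scaled to the range 0 to 1, and the sample values and function names are invented.

```python
def otsu_threshold(values, bins=256):
    """Return the Otsu threshold for activation values assumed to lie in [0, 1]."""
    hist = [0] * bins
    for v in values:
        hist[min(int(v * bins), bins - 1)] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_var, best_bin = -1.0, 0
    w_bg, sum_bg = 0, 0.0
    for i in range(bins):
        w_bg += hist[i]            # pixels at or below bin i (background)
        sum_bg += i * hist[i]
        if w_bg == 0:
            continue
        w_fg = total - w_bg        # pixels above bin i (foreground)
        if w_fg == 0:
            break
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        # Between-class variance (up to a constant factor).
        between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if between > best_var:
            best_var, best_bin = between, i

    # Upper edge of the best background bin, mapped back to [0, 1].
    return (best_bin + 1) / bins


def apply_threshold(values, threshold):
    """Binary class mask: True where the activation reaches the threshold."""
    return [v >= threshold for v in values]


if __name__ == "__main__":
    # Invented activations: half the pixels weakly, half strongly activated.
    activations = [0.1] * 50 + [0.9] * 50
    t = otsu_threshold(activations)
    mask = apply_threshold(activations, t)
    print(f"threshold = {t:.4f}, pixels kept = {sum(mask)}")
```

Since only the stored activation values are re-thresholded, a new thematic map can be produced without retraining or rerunning the network, which is the advantage the paragraph above describes.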

5. Conclusions

In this work, ENVI’s deep learning (U-Net) has been evaluated in the estimation and mapping of different vegetation class categories, i.e., wheat, berseem, mustard, and other vegetation, using medium-resolution Sentinel-2 satellite data. As part of the comparative analysis, a well-known and commonly used RF classifier has also been implemented, and it was concluded that U-Net-based deep learning performed marginally better than RF-based machine learning. Although it performed well in the automatic identification of land-use features, many challenges remain in ENVI’s deep learning, which need to be addressed with more flexibility in the selection of parameter values and integration with self-designed programming models. It also needs to be improved by the addition of features for different scientific domains. As far as agriculture is concerned, it is necessary to correctly identify the different seasonal crops on land plots in order to maintain an up-to-date inventory of crops across seasons, making agriculture policy and mapping more accurate. Further studies may apply deep learning to multisource data fusion for the cloud-free monitoring of agricultural land types.

Author Contributions

Conceptualization, methodology, validation, original draft preparation, investigation, G.S. (Gurwinder Singh); formal analysis, supervision, data curation, S.S.; review and supervision, G.S. (Ganesh Sethi); visualization, review and editing, V.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data can be downloaded from the United States Geological Survey (USGS) online portal (https://earthexplorer.usgs.gov/, accessed date: 15 September 2022).

Acknowledgments

The authors would like to thank the United States Geological Survey, National Aeronautics and Space Administration (NASA), and Centre National d’Etudes Spatiales (CNES) for providing the Sentinel-2 and Pléiades data, respectively.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nasiri, V.; Deljouei, A.; Moradi, F.; Sadeghi, S.M.M.; Borz, S.A. Land Use and Land Cover Mapping Using Sentinel-2, Landsat-8 Two Composition Methods. Remote Sens. 2022, 14, 1977.

- Sicre, C.M.; Fieuzal, R.; Baup, F. Contribution of multispectral (optical and radar) satellite images to the classification of agricultural surfaces. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101972.

- Hudait, M.; Patel, P.P. Crop-type mapping and acreage estimation in smallholding plots using Sentinel-2 images and machine learning algorithms: Some comparisons. Egypt. J. Remote Sens. Space Sci. 2022, 25, 147–156.

- Ahmadlou, M.; Al-Fugara, A.; Al-Shabeeb, A.R.; Arora, A.; Al-Adamat, R.; Pham, Q.B.; Al-Ansari, N.; Linh, N.T.T.; Sajedi, H. Flood susceptibility mapping and assessment using a novel deep learning model combining multilayer perceptron and autoencoder neural networks. J. Flood Risk Manag. 2021, 14, e12683.

- do Nascimento Bendini, H.; Fonseca, L.M.G.; Schwieder, M.; Körting, T.S.; Rufin, P.; Sanches, I.D.A.; Leitão, P.J.; Hostert, P. Detailed agricultural land classification in the Brazilian cerrado based on phenological information from dense satellite image time series. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101872.

- Singh, G.; Singh, S.; Sethi, G.K.; Sood, V. Detection and mapping of agriculture seasonal variations with deep learning–based change detection using Sentinel-2 data. Arab. J. Geosci. 2022, 15, 825.

- Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Comput. Electron. Agric. 2018, 151, 61–69.

- Baig, M.F.; Mustafa, M.R.U.; Baig, I.; Takaijudin, H.B.; Zeshan, M.T. Assessment of Land Use Land Cover Changes and Future Predictions Using CA-ANN Simulation for Selangor, Malaysia. Water 2022, 14, 402.

- Lu, D.; Mausel, P.; Brondízio, E.; Moran, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2407.

- Srivastav, A.L.; Kumar, A. An endeavor to achieve sustainable development goals through floral waste management: A short review. J. Clean. Prod. 2021, 283, 124669.

- Aznar-Sánchez, J.A.; Piquer-Rodríguez, M.; Velasco-Muñoz, J.F.; Manzano-Agugliaro, F. Worldwide research trends on sustainable land use in agriculture. Land Use Policy 2019, 87, 104069.

- Costache, R.; Arabameri, A.; Blaschke, T.; Pham, Q.B.; Pham, B.T.; Pandey, M.; Arora, A.; Linh, N.T.T.; Costache, I. Flash-flood potential mapping using deep learning, alternating decision trees and data provided by remote sensing sensors. Sensors 2021, 21, 280.

- Duro, D.C.; Franklin, S.E.; Dubé, M.G. A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272.

- Folberth, C.; Baklanov, A.; Balkovič, J.; Skalský, R.; Khabarov, N.; Obersteiner, M. Spatio-temporal downscaling of gridded crop model yield estimates based on machine learning. Agric. For. Meteorol. 2019, 264, 1–15.

- De Luca, G.; Silva, J.M.N.; Di Fazio, S.; Modica, G. Integrated use of Sentinel-1 and Sentinel-2 data and open-source machine learning algorithms for land cover mapping in a Mediterranean region. Eur. J. Remote Sens. 2022, 55, 52–70.

- Degife, A.W.; Zabel, F.; Mauser, W. Assessing land use and land cover changes and agricultural farmland expansions in Gambella Region, Ethiopia, using Landsat 5 and Sentinel 2a multispectral data. Heliyon 2018, 4, e00919.

- Ghassemi, B.; Dujakovic, A.; Żółtak, M.; Immitzer, M.; Atzberger, C.; Vuolo, F. Designing a European-Wide Crop Type Mapping Approach Based on Machine Learning Algorithms Using LUCAS Field Survey and Sentinel-2 Data. Remote Sens. 2022, 14, 541.

- Schaefer, M.; Thinh, N.X. Evaluation of Land Cover Change and Agricultural Protection Sites: A GIS and Remote Sensing Approach for Ho Chi Minh City, Vietnam. Heliyon 2019, 5, e01773.

- Mercier, A.; Betbeder, J.; Rumiano, F.; Baudry, J.; Gond, V.; Blanc, L.; Bourgoin, C.; Cornu, G.; Ciudad, C.; Marchamalo, M.; et al. Evaluation of Sentinel-1 and 2 Time Series for Land Cover Classification of Forest–Agriculture Mosaics in Temperate and Tropical Landscapes. Remote Sens. 2019, 11, 979.

- Shendryk, Y.; Rist, Y.; Ticehurst, C.; Thorburn, P. Deep learning for multi-modal classification of cloud, shadow and land cover scenes in PlanetScope and Sentinel-2 imagery. ISPRS J. Photogramm. Remote Sens. 2019, 157, 124–136.

- Dahiya, N.; Gupta, S.; Singh, S. A Review Paper on Machine Learning Applications, Advantages, and Techniques. ECS Trans. 2022, 107, 6137–6150.

- Singh, S.; Tiwari, R.K.; Gusain, H.S.; Sood, V. Potential Applications of SCATSAT-1 Satellite Sensor: A Systematic Review. IEEE Sens. J. 2020, 20, 12459–12471.

- Singh, S.; Tiwari, R.K.; Sood, V.; Kaur, R.; Prashar, S. The Legacy of Scatterometers: Review of applications and perspective. IEEE Geosci. Remote Sens. Mag. 2022, 10, 39–65.

- Khan, M.S.; Semwal, M.; Sharma, A.; Verma, R.K. An artificial neural network model for estimating Mentha crop biomass yield using Landsat 8 OLI. Precis. Agric. 2020, 21, 18–33.

- Ndikumana, E.; Ho, D.; Minh, T.; Baghdadi, N.; Courault, D.; Hossard, L. Deep Recurrent Neural Network for Agricultural Classification using multitemporal SAR Sentinel-1 for Camargue, France. Remote Sens. 2018, 10, 1217.

- Singh, S.; Tiwari, R.K.; Sood, V.; Gusain, H.S. Detection and validation of spatiotemporal snow cover variability in the Himalayas using Ku-band (13.5 GHz) SCATSAT-1 data. Int. J. Remote Sens. 2021, 42, 805–815.

- Singh, G.; Sethi, G.K.; Singh, S. Performance Analysis of Deep Learning Classification for Agriculture Applications Using Sentinel-2 Data. In Proceedings of the International Conference on Advanced Informatics for Computing Research, Gurugram, India, 18–19 December 2021; pp. 205–213.

- Shanmugapriya, P.; Rathika, S.; Ramesh, T.; Janaki, P. Applications of remote sensing in agriculture - A Review. Int. J. Curr. Microbiol. Appl. Sci. 2019, 8, 2270–2283.

- Sood, V.; Gupta, S.; Gusain, H.S.; Singh, S. Spatial and Quantitative Comparison of Topographically Derived Different Classification Algorithms Using AWiFS Data over Himalayas, India. J. Indian Soc. Remote Sens. 2018, 46, 1991–2002.

- Khelifi, L.; Mignotte, M. Deep Learning for Change Detection in Remote Sensing Images: Comprehensive Review and Meta-Analysis. IEEE Access 2020, 8, 126385–126400.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Dong, G.; Liao, G.; Liu, H.; Kuang, G. A Review of the Autoencoder and Its Variants: A Comparative Perspective from Target Recognition in Synthetic-Aperture Radar Images. IEEE Geosci. Remote Sens. Mag. 2018, 6, 44–68.

- Singh, G.; Sethi, G.K.; Singh, S. Survey on Machine Learning and Deep Learning Techniques for Agriculture Land. SN Comput. Sci. 2021, 2, 487.

- Bhosle, K.; Musande, V. Evaluation of Deep Learning CNN Model for Land Use Land Cover Classification and Crop Identification Using Hyperspectral Remote Sensing Images. J. Indian Soc. Remote Sens. 2019, 47, 1949–1958.

- Parikh, H.; Patel, S.; Patel, V. Classification of SAR and PolSAR images using deep learning: A review. Int. J. Image Data Fusion 2020, 11, 1–32.

- Hütt, C.; Koppe, W.; Miao, Y.; Bareth, G. Best accuracy land use/land cover (LULC) classification to derive crop types using multitemporal, multisensor, and multi-polarization SAR satellite images. Remote Sens. 2016, 8, 684.

- Jensen, J.R. Remote Sensing of the Environment: An Earth Resource Perspective 2/e; Pearson Education India: Noida, India, 2009.

- Zhou, Z.; Li, S.; Shao, Y. Crops Classification from Sentinel-2A Multi-spectral Remote Sensing Images Based on Convolutional Neural Networks. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 5300–5303.

- Tripathi, A.; Tiwari, R.K. Synergetic utilization of sentinel-1 SAR and sentinel-2 optical remote sensing data for surface soil moisture estimation for Rupnagar, Punjab, India. Geocarto Int. 2022, 37, 2215–2236.

- Omer, G.; Mutanga, O.; Abdel-Rahman, E.M.; Adam, E. Performance of Support Vector Machines and Artificial Neural Network for Mapping Endangered Tree Species Using WorldView-2 Data in Dukuduku Forest, South Africa. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4825–4840.

- Dyrmann, M.; Karstoft, H.; Midtiby, H.S. Plant species classification using deep convolutional neural network. Biosyst. Eng. 2016, 151, 72–80.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Ka, A.; Sa, A. Improved Landsat-8 Oli and Sentinel-2 Msi Classification in Mountainous Terrain Using Machine Learning on Google Earth Engine. In Proceedings of the Biennial Conference of the Society of South African Geographers, Bloemfontein, South Africa, 1–7 October 2018; pp. 632–645.

- Adagbasa, E.G.; Adelabu, S.A.; Okello, T.W. Application of deep learning with stratified K-fold for vegetation species discrimation in a protected mountainous region using Sentinel-2 image. Geocarto Int. 2019, 37, 142–162.

- Ienco, D.; Interdonato, R.; Gaetano, R.; Ho, D.; Minh, T. Combining Sentinel-1 and Sentinel-2 Satellite Image Time Series for land cover mapping via a multi-source deep learning architecture. ISPRS J. Photogramm. Remote Sens. 2019, 158, 11–22.

- Ganesh, P.; Volle, K.; Burks, T.F.; Mehta, S.S. Deep Orange: Mask R-CNN based Orange Detection and Segmentation. IFAC Pap. 2019, 52, 70–75.

- El Mendili, L.; Puissant, A.; Chougrad, M.; Sebari, I. Towards a multi-temporal deep learning approach for mapping urban fabric using sentinel 2 images. Remote Sens. 2020, 12, 423.

- Mazzia, V.; Khaliq, A.; Chiaberge, M. Improvement in land cover and crop classification based on temporal features learning from Sentinel-2 data using recurrent-Convolutional Neural Network (R-CNN). Appl. Sci. 2020, 10, 238.

- Campos-Taberner, M.; García-Haro, F.J.; Martínez, B.; Izquierdo-Verdiguier, E.; Atzberger, C.; Camps-Valls, G.; Gilabert, M.A. Understanding deep learning in land use classification based on Sentinel-2 time series. Sci. Rep. 2020, 10, 17188.

- Peterson, K.T.; Sagan, V.; Sloan, J.J. Deep learning-based water quality estimation and anomaly detection using Landsat-8/Sentinel-2 virtual constellation and cloud computing. GIScience Remote Sens. 2020, 57, 510–525.

- Interdonato, R.; Ienco, D.; Gaetano, R.; Ose, K. DuPLO: A DUal view Point deep Learning architecture for time series classificatiOn. ISPRS J. Photogramm. Remote Sens. 2019, 149, 91–104.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Viana-Soto, A.; Aguado, I.; Martínez, S. Assessment of post-fire vegetation recovery using fire severity and geographical data in the mediterranean region (Spain). Environments 2017, 4, 90.

- Keshtkar, H.; Voigt, W.; Alizadeh, E. Land-cover classification and analysis of change using machine-learning classifiers and multi-temporal remote sensing imagery. Arab. J. Geosci. 2017, 10, 154.

- Liu, J.; Huffman, T.; Shang, J.; Qian, B.; Dong, T.; Zhang, Y. Identifying Major Crop Types in Eastern Canada Using a Fuzzy Decision Tree Classifier and Phenological Indicators Derived from Time Series MODIS Data. Can. J. Remote Sens. 2016, 42, 259–273.

- Fetai, B.; Račič, M.; Lisec, A. Deep Learning for Detection of Visible Land Boundaries from UAV Imagery. Remote Sens. 2021, 13, 2077.

- Baumhoer, C.A.; Dietz, A.J.; Kneisel, C.; Kuenzer, C. Automated Extraction of Antarctic Glacier and Ice Shelf Fronts from Sentinel-1 Imagery Using Deep Learning. Remote Sens. 2019, 11, 2529.

- Tian, S.; Dong, Y.; Feng, R.; Liang, D.; Wang, L. Mapping mountain glaciers using an improved U-Net model with cSE. Int. J. Digit. Earth 2022, 15, 463–477.

- Scott, G.J.; England, M.R.; Starms, W.A.; Marcum, R.A.; Davis, C.H. Training Deep Convolutional Neural Networks for Land-Cover Classification of High-Resolution Imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 549–553.

- Bhosle, K.; Musande, V. Evaluation of CNN model by comparing with convolutional autoencoder and deep neural network for crop classification on hyperspectral imagery. Geocarto Int. 2020, 37, 813–827.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Comput. Sci. Dep. Univ. Freibg. 2015.

- Parente, L.; Taquary, E.; Silva, A.; Souza, C.; Ferreira, L. Next Generation Mapping: Combining Deep Learning, Cloud Computing, and Big Remote Sensing Data. Remote Sens. 2019, 11, 2881.

- Zhang, P.; Xu, C.; Ma, S.; Shao, X.; Tian, Y.; Wen, B. Automatic Extraction of Seismic Landslides in Large Areas with Complex Environments Based on Deep Learning: An Example of the 2018 Iburi Earthquake, Japan. Remote Sens. 2020, 12, 3992.

- Sood, V.; Tiwari, R.K.; Singh, S.; Kaur, R.; Parida, B.R. Glacier Boundary Mapping Using Deep Learning Classification over Bara Shigri Glacier in Western Himalayas. Sustainability 2022, 14, 13485.

- Janus, J.; Bozek, P. Land abandonment in Poland after the collapse of socialism: Over a quarter of a century of increasing tree cover on agricultural land. Ecol. Eng. 2019, 138, 106–117.

- Lark, T.J.; Mueller, R.M.; Johnson, D.M.; Gibbs, H.K. Measuring land-use and land-cover change using the US department of agriculture’s cropland data layer: Cautions and recommendations. Int. J. Appl. Earth Obs. Geoinf. 2017, 62, 224–235.

- Gaetano, R.; Ienco, D.; Ose, K.; Cresson, R. A Two-Branch CNN Architecture for Land Cover Classification of PAN and MS Imagery. Remote Sens. 2018, 10, 1746.

- Lucas, R.; Rowlands, A.; Brown, A.; Keyworth, S.; Bunting, P. Rule-based classification of multi-temporal satellite imagery for habitat and agricultural land cover mapping. ISPRS J. Photogramm. Remote Sens. 2007, 62, 165–185.

- Phiri, D.; Morgenroth, J. Developments in Landsat Land Cover Classification Methods: A Review. Remote Sens. 2017, 9, 967.

- Singh, S.; Tiwari, R.K.; Sood, V.; Gusain, H.S.; Prashar, S. Image Fusion of Ku-Band-Based SCATSAT-1 and MODIS Data for Cloud-Free Change Detection Over Western Himalayas. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).