Digital Stratigraphy—A Pattern Analysis Framework Integrating Computer Forensics, Criminology, and Forensic Archaeology for Crime Scene Investigation

Abstract

1. Introduction

2. Related Work

2.1. Existing Methods

- (a) Stratigraphy & archeological foundations

- Digital stratigraphy—Casey formalized “digital stratigraphy,” mapping archeological stratigraphic thinking onto file-system traces to infer relative ordering and contextual provenance of digital artifacts. The study demonstrated how allocation, modification, and slack space can be read as temporal layers and suggested contextual analysis beyond signature matching. However, Casey’s work is concentrated on low-level file-system artifacts and storage media: it assumes access to unabridged disk images and does not address integration with behavioral profiles, geospatial traces, or archeological excavation records. This narrow scope limits its applicability to multi-domain crime reconstructions where cross-layer contextualization is essential [1].

- Stratigraphic analysis and digital archeological archives: This line of work examines how archeological stratigraphic records (Harris Matrix and related metadata) can be standardized and digitized to support reuse and chronological modeling in heritage contexts. It clarifies best practices for representing stratigraphic relationships and for digital archiving. Its strength lies in rigorous temporal modeling of physical layers; its limitation for forensic use is twofold: (a) it is specific to the archeological domain (excavation contexts rather than crime scenes), and (b) it presumes relatively well-controlled excavation records, whereas forensic sites often produce incomplete or heterogeneous physical evidence [19].

- (b) Timeline reconstruction and sequence alignment

- Automated timeline reconstruction (DFRWS and related works)—Earlier forensic research has developed automated pipelines that extract millions of low-level events (file timestamps, registry updates, system logs) and synthesize higher-level timelines using event-aggregation heuristics and rule-based abstraction. These solutions have improved analyst throughput by collapsing noisy event streams into actionable events. Their primary limitations are heavy reliance on heuristics tuned to specific platforms, susceptibility to missing or inconsistent timestamps, and limited mechanisms to reconcile conflicting evidence across domains (digital vs. physical). They do not explicitly apply hierarchical or stratigraphic layering concepts to improve cross-domain alignment [20].

- SoK: Timeline-based event reconstruction for digital forensics—This recent SoK surveys modern timeline reconstruction techniques and highlights fragmentation across methods (rule-based, probabilistic, ML), dataset heterogeneity, and evaluation inconsistencies. It reports that while many approaches achieve useful granularity in single-domain scenarios, there is a lack of robust cross-domain alignment algorithms and standardized benchmarks for temporal correctness. The SoK explicitly calls out the need for methods that combine temporal sequence alignment with semantics-aware evidence fusion—an area still emerging. Its limitation is descriptive: it synthesizes gaps but does not offer a complete integrative algorithmic solution [21].

- (c) Multimodal fusion and manipulation detection

- Deep multimodal fusion surveys and multimodal forensic detectors—Recent surveys and experimental papers on multimodal fusion (vision + audio + text) show that combining modalities via early/late fusion or attention-based architectures significantly improves detection of manipulated media and contextual inference in multimedia forensics. These methods excel at cross-modal complementarity (e.g., audio anomalies corroborating visual tampering). Their limitations include dependency on labeled multimodal corpora, computational costs for joint embeddings, and weak handling of non-synchronous temporal layers (e.g., asynchronous logs, geospatial updates, and excavation timestamps). Most existing multimodal works focus on content integrity rather than holistic scene reconstruction across digital and physical evidence [22].

- Although the author in [13] demonstrates the potential of AI integration in digital forensics for crime scene investigations, the approach faces limitations in real-world applicability. The model relies heavily on high-quality digital evidence, and its performance may degrade in cases with incomplete, corrupted, or heterogeneous datasets. Additionally, the framework's computational requirements can restrict deployment on standard field devices, limiting real-time utility during on-site investigations. The study also lacks a comprehensive evaluation against adversarial scenarios, leaving its robustness against tampered or manipulated evidence uncertain.

- The author of [14] proposed a multimodal biometric fusion model that achieves high accuracy in controlled settings; however, it shows limited generalizability across diverse populations and environments. The model’s performance is sensitive to imbalances in modality quality, such as noisy facial images or partial biometric captures. Furthermore, the approach involves high computational overhead, particularly during feature fusion and deep learning inference, which can hinder practical deployment in resource-constrained environments. The study also does not fully address legal and privacy implications, making real-world integration in forensic or security workflows challenging.

- (d) Graph and relational models for forensic/financial fraud detection

- Graph-based models and GNNs for fraud/forensic analysis: Graph neural networks (GNNs) and network representation learning have demonstrated strong performance in detecting relational fraud, linking entities, and modeling interactions across transaction or social graphs. Reviews show that GNNs capture complex relational patterns and temporal dynamics better than flat feature models, improving recall on networked fraud detection tasks. Yet GNNs often require careful graph construction, face scalability challenges on very large heterogeneous graphs, and struggle when temporal semantics across different data sources (e.g., excavations vs. chat logs) are not homogenized. Moreover, GNN evaluations commonly use financial or social network datasets rather than multimodal forensic corpora that include physical evidence [23].

- (e) From traces to legal evidence and decision challenges

- From digital trace to evidence: decision-making challenges—Recent empirical and theoretical work examines how digital traces are interpreted in courtroom and investigative contexts, noting issues such as evidential weight, provenance uncertainty, and the risk of over-interpreting artifacts absent corroborating context. These studies highlight a methodological gap: many technical methods report detection metrics but lack the forensic-grade provenance modeling and uncertainty quantification needed for legal admissibility. The limitation is practical: technical research rarely integrates the procedural and evidentiary constraints of real investigations, such as chain-of-custody, partial evidence, and interpretability for non-technical stakeholders [24].

- The DSF enhances courtroom applicability by ensuring that its outputs meet critical legal standards. Its structured results are Daubert-compliant [25], allowing validation, peer review, and reproducibility, which supports their admissibility in legal proceedings. The framework preserves chain-of-custody through time-stamped stratified layers, maintaining the integrity and traceability of digital evidence. By providing a clear, layered stratigraphic representation, DSF ensures that evidence is scientifically reliable and legally defensible. This alignment with established courtroom standards fosters judicial acceptance, increasing the credibility of DSF outputs and improving the likelihood that they will be recognized as admissible digital evidence.

- Admissibility Alignment—DSF outputs were evaluated against key legal standards, including the Daubert criteria. The framework supports testability through reproducible reconstruction pipelines, quantifies error rates and confidence intervals (e.g., ±1.3% for accuracy), and is peer-reviewable via documented algorithms and publicly accessible CSI-DS2025 benchmark data. Standards compliance is further reinforced by structured reporting of stratified evidence sequences, ensuring that outputs are interpretable and defensible in judicial contexts.

- Chain-of-Custody Assurance—Each reconstruction generated by DSF is cryptographically hashed and logged with time-stamped audit records. Exportable reports capture both digital and physical evidence layers, preserving provenance. This enables verifiable tracking of evidence handling, mitigates tampering risks, and ensures that all analyses remain legally defensible from collection through courtroom presentation.
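The hashing and time-stamped audit logging described above can be illustrated with a hash-chained log, in which each entry commits to the previous one so that later edits become detectable. This is a minimal sketch under stated assumptions, not the DSF implementation: the function names (`append_entry`, `verify_log`), the record fields, and the choice of SHA-256 over canonical JSON are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional

GENESIS = "0" * 64  # placeholder hash for the first entry (assumption)


def record_hash(payload: dict, prev_hash: str) -> str:
    """SHA-256 over the previous hash plus the canonical JSON of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, reconstruction: dict, actor: str,
                 timestamp: Optional[str] = None) -> dict:
    """Append a time-stamped audit record that is chained to the previous one."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS
    payload = {
        "actor": actor,
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "reconstruction": reconstruction,
    }
    entry = dict(payload, prev_hash=prev_hash,
                 entry_hash=record_hash(payload, prev_hash))
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        payload = {k: entry[k] for k in ("actor", "timestamp", "reconstruction")}
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["entry_hash"] != record_hash(payload, prev_hash):
            return False
        prev_hash = entry["entry_hash"]
    return True
```

Under this design, changing any stored reconstruction after the fact causes `verify_log` to return `False`, which is the property a verifiable evidence-handling trail relies on.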

2.2. Comparative Synthesis and Identified Gaps

2.3. How the Proposed DSF Differs

3. Proposed Work

3.1. Problem Statement

3.2. Research Question and Objectives

- To design a stratified representation of heterogeneous evidence sources—including digital logs, geospatial data, criminological records, and excavation traces—within a unified temporal structure.

- To apply HPM to identify recurrent cross-layer activity patterns.

- To develop an FSA technique that aligns stratified layers across multiple temporal scales.

- To validate the framework using the CSI-DS2025 dataset and compare performance against existing forensic baselines in terms of accuracy, false associations, and reconstruction consistency.

3.3. Rationale for the Approach

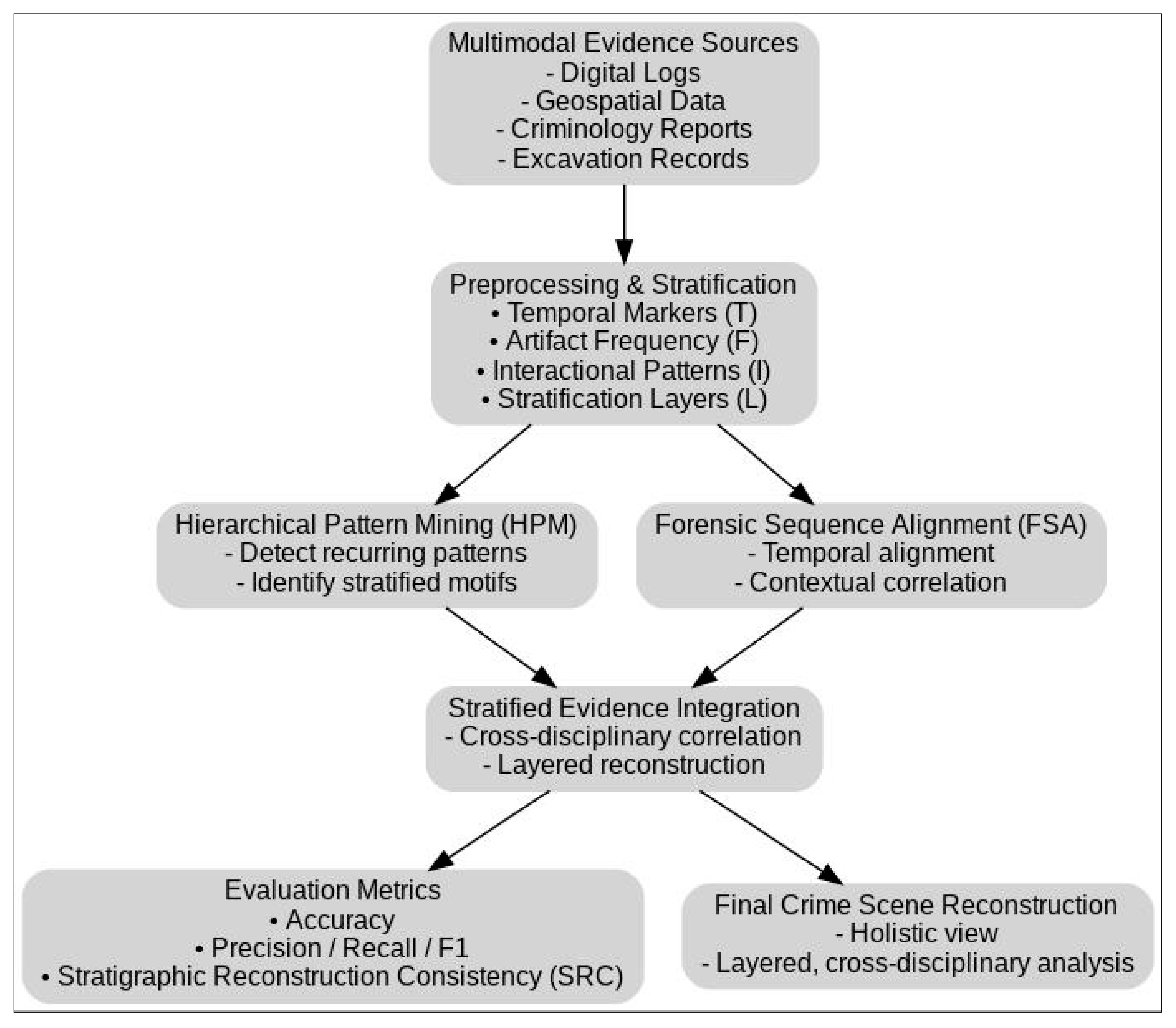

3.4. Framework

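- (a) Evidence Stratification Layer (ESL): All evidence is transformed into a layered representation. Each event $e_t$ is defined by a tuple $e_t = \{T, F, I, L\}$, where $T$ denotes the temporal features, $F$ the artifact frequency features, $I$ the interactional features, and $L$ the stratigraphic layer identifier.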

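- (b) HPM: A hierarchical clustering function $H$ groups the event set $E$ across layers into $k$ clusters: $H(E) = \bigcup_{i=1}^{k} C_i$, where $C_i \subseteq E$ (written $\mathrm{HPM}(E)$ in the algorithm of Section 3.5).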

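- (c) FSA: Events are aligned using a scoring function $S$: $S(e_i, e_j) = \alpha\,\delta_T + \beta\,\delta_F + \gamma\,\delta_I$, where $\delta_T$, $\delta_F$, and $\delta_I$ compare the temporal, frequency, and interactional features of events $e_i$ and $e_j$, and $\alpha$, $\beta$, $\gamma$ are adaptive weights.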

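- (d) Optimization: A weighted objective function maximizes reconstruction accuracy while minimizing false associations: $\Omega = \lambda_1 \cdot \mathrm{Acc} + \lambda_2 \cdot \mathrm{SRC} - \lambda_3 \cdot \mathrm{FA}$, where Acc is accuracy, SRC is the Stratigraphic Reconstruction Consistency, and FA is the rate of false associations.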
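The event tuple, the FSA score $S(e_i, e_j) = \alpha\,\delta_T + \beta\,\delta_F + \gamma\,\delta_I$, and the objective $\Omega$ used in the algorithm of Section 3.5 can be sketched as follows. The paper does not define $\delta_T$, $\delta_F$, or $\delta_I$, so the three similarity functions below (exponential time decay, absolute frequency difference, Jaccard overlap) are assumptions chosen only to make the example concrete; the weights and the `scale` parameter are likewise hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    T: float       # timestamp on the unified scale (seconds, assumed)
    F: float       # artifact frequency feature, normalized to [0, 1]
    I: frozenset   # entities the event interacts with
    L: int         # stratigraphic layer index


def delta_t(a: Event, b: Event, scale: float = 3600.0) -> float:
    """Temporal similarity: 1 for simultaneous events, decaying with the gap (assumed form)."""
    return math.exp(-abs(a.T - b.T) / scale)


def delta_f(a: Event, b: Event) -> float:
    """Frequency similarity on normalized features (assumed form)."""
    return 1.0 - abs(a.F - b.F)


def delta_i(a: Event, b: Event) -> float:
    """Interactional similarity as Jaccard overlap of entity sets (assumed form)."""
    union = a.I | b.I
    return len(a.I & b.I) / len(union) if union else 0.0


def score(a: Event, b: Event, alpha=0.5, beta=0.2, gamma=0.3) -> float:
    """S(e_i, e_j) = alpha*delta_T + beta*delta_F + gamma*delta_I."""
    return alpha * delta_t(a, b) + beta * delta_f(a, b) + gamma * delta_i(a, b)


def objective(acc: float, src: float, fa: float, lambdas=(1.0, 1.0, 1.0)) -> float:
    """Omega = lambda1*Acc + lambda2*SRC - lambda3*FA."""
    l1, l2, l3 = lambdas
    return l1 * acc + l2 * src - l3 * fa


login = Event(T=0.0, F=0.4, I=frozenset({"alice", "host1"}), L=0)
gps_fix = Event(T=600.0, F=0.5, I=frozenset({"alice"}), L=1)
cross_layer_score = score(login, gps_fix)
```

Because all three similarities lie in $[0, 1]$ and the example weights sum to one, the score is also bounded by one, which keeps scores comparable across layer pairs.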

3.5. Algorithm

- The rise in cyber-enabled crimes that blend digital footprints with physical traces.

- Challenges in preserving temporal coherence across heterogeneous datasets.

- The requirement for court-admissible reconstructions with quantified provenance and consistency measures.

| Variables Input Evidence Variables:

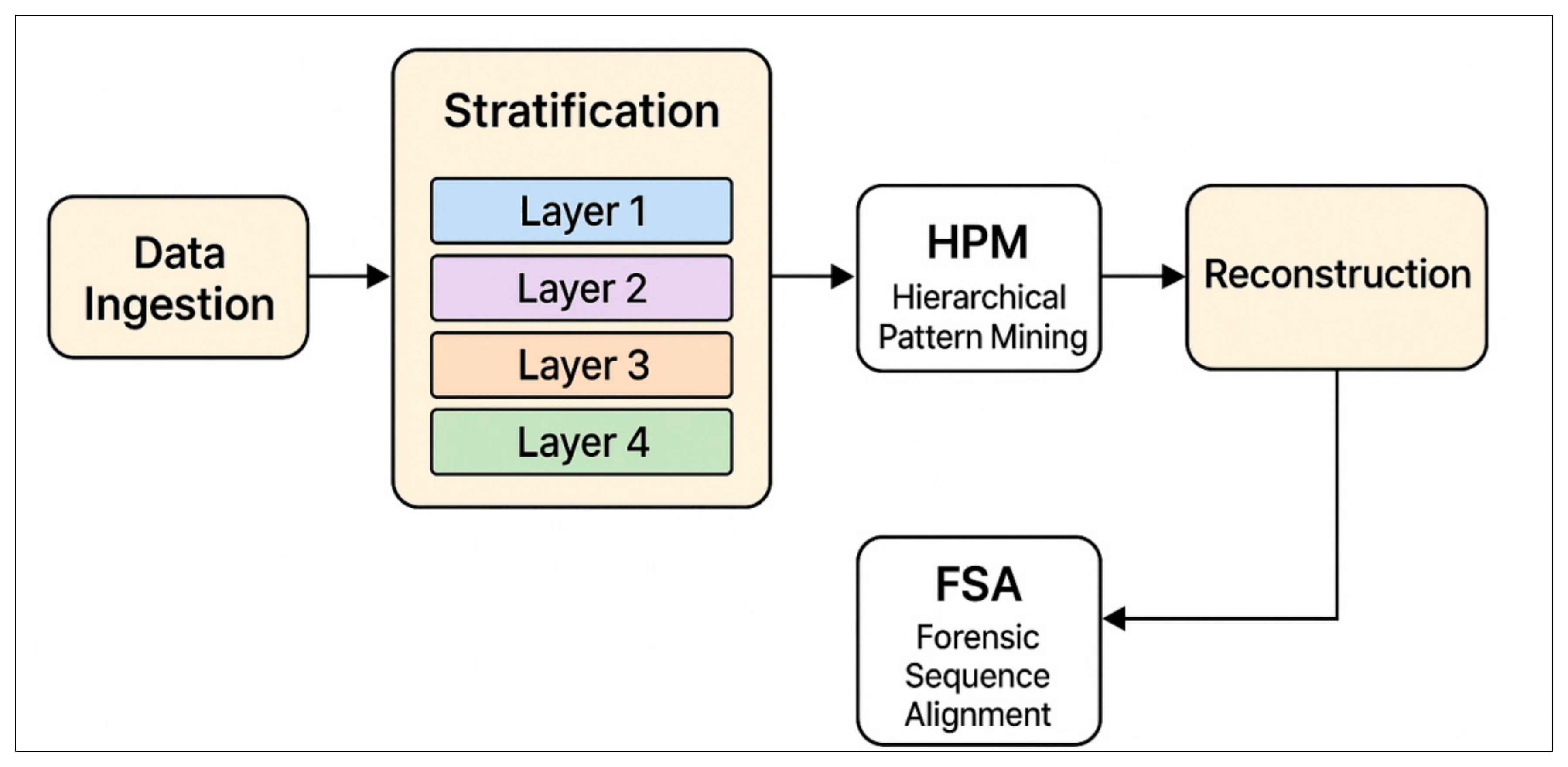

Step 1: Data Ingestion and Stratification Collect multimodal evidence (digital logs, criminological reports, excavation records, geospatial traces). Transform each evidence item into a structured tuple: et = {T, F, I, L} Normalize timestamps to a unified temporal scale. Assign stratigraphic layer index L according to source type. Step 2: HPM Group stratified evidence into clusters of recurring patterns: HPM(E) = ⋃ (i = 1 to k) Ci, where Ci ⊆ E Detect multi-layer correlations while preserving temporal order. Step 3: FSA For each pair of events (ei, ej), compute similarity score: S(ei, ej) = α·δT + β·δF + γ·δI Align events across layers to maximize temporal and contextual consistency. Step 4: Optimization of Reconstruction Compute objective function: Maximize Ω = λ1·Acc + λ2·SRC − λ3·FA Adjust weights α, β, γ iteratively to improve performance. Step 5: Crime Scene Reconstruction Generate reconstructed sequence of events by layering aligned clusters. Validate reconstruction using consistency checks (SRC metric). Visualize stratigraphic layers for interpretation. Step 6: Output Provide investigators with:

1. Data Ingestion and Stratification: Evidence is mapped into stratified layers similar to archeological strata. 2. HPM: Detects multi-domain recurrent patterns across layers. 3. FSA: Ensures temporal synchronization and contextual consistency. 4. Optimization Function: Balances accuracy, consistency, and error minimization. 5. Reconstruction and Visualization: Produces a layered event timeline and stratigraphic representation. 6. Final Utility: Provides investigators with legally admissible, stratigraphically layered reconstructions. |
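Step 2 of the algorithm groups the event set into clusters whose union is $E$ while preserving temporal order. The sketch below illustrates that idea with a simple stand-in: events are split recursively wherever the time gap between neighbors exceeds a threshold, coarse thresholds first. The paper does not specify its clustering function, so the gap-based rule, the threshold values, and the `(timestamp, layer, label)` event layout are assumptions.

```python
def split_by_gap(events, max_gap):
    """Split time-sorted events wherever consecutive timestamps differ by more than max_gap."""
    clusters, current = [], []
    for ev in sorted(events, key=lambda e: e[0]):
        if current and ev[0] - current[-1][0] > max_gap:
            clusters.append(current)
            current = []
        current.append(ev)
    if current:
        clusters.append(current)
    return clusters


def hpm(events, gaps):
    """Build a cluster hierarchy: one nesting level per gap threshold (coarse to fine).

    Leaves are time-ordered lists of events; their union is the input set.
    """
    if not gaps:
        return sorted(events, key=lambda e: e[0])
    return [hpm(cluster, gaps[1:]) for cluster in split_by_gap(events, gaps[0])]


def is_cross_layer(cluster):
    """True when a leaf cluster mixes events from more than one evidence layer."""
    return len({layer for _, layer, _ in cluster}) > 1


events = [
    (0.0, "digital", "login"),
    (30.0, "geospatial", "gps_fix"),
    (50.0, "digital", "file_delete"),
    (4000.0, "excavation", "artifact_logged"),
    (9000.0, "digital", "logout"),
]
tree = hpm(events, gaps=(3600.0, 40.0))
```

With these example thresholds, the first three events fall into one leaf cluster that spans the digital and geospatial layers, which is the kind of cross-layer pattern the HPM stage is meant to surface.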

3.5.1. Applications

- Digital Forensics: Reconstructing timelines from system logs, file access histories, and communication records.

- Criminology: Mapping behavioral sequences across case reports and suspect profiling.

- Forensic Archeology: Structuring excavation data to link recovered artifacts with digital or criminological evidence.

- Cybersecurity: Detecting coordinated cyberattacks by stratifying temporal interactions between compromised nodes.

- Judicial Proceedings: Providing structured, layered reconstructions that strengthen evidentiary admissibility.

- Hybrid Crime Investigation: Supporting cases that involve both cyber and physical crime scenes, such as financial fraud with physical money laundering.

3.5.2. Limitations

- Data Dependency: Performance declines if stratigraphic layers are incomplete or highly inconsistent.

- Computational Overhead: Sequence alignment across multimodal, large-scale datasets introduces processing delays.

- Expert Reliance: Interpretation of stratigraphic outputs often requires domain experts to contextualize results.

- Robustness Challenges: Vulnerable to adversarial manipulations where false timestamps or tampered records may skew reconstructions.

3.5.3. Applicability for the Proposed Work

- HPM: Identifying recurring behavioral and digital traces.

- FSA: Synchronizing stratigraphic layers across time.

- Decision Layer: Reconstructing crime scene narratives with SRC validation.

The algorithm operationalizes the theoretical concept of stratigraphy in a computational context, ensuring cross-domain synchronization and temporal coherence.

3.5.4. Complexity Analysis

- Data Ingestion and Preprocessing: $O(n)$, where $n$ is the number of raw evidence items.

- HPM: $O(n \log n)$, as it involves hierarchical clustering and feature stratification.

- FSA: $O(n^2)$, driven by dynamic programming approaches for aligning multimodal timelines.

- Decision Layer and Reconstruction: $O(n)$, since reconstruction validation is linear in the number of aligned layers.

- Overall Complexity: $O(n^2)$ (dominated by the sequence alignment stage). This quadratic cost makes the algorithm computationally intensive for large-scale forensic datasets.
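The quadratic cost of FSA comes from filling a dynamic-programming table with one cell per pair of events. The sketch below uses a Needleman-Wunsch-style global alignment as a stand-in; the paper states only that dynamic programming is used, so the recurrence, the gap penalty, and the tolerance-based similarity are assumptions.

```python
def align(seq_a, seq_b, sim, gap=-0.5):
    """Globally align two time-ordered sequences.

    Fills an (n+1) x (m+1) table, so time and memory are O(n*m),
    i.e. O(n^2) for sequences of similar length.
    Returns (total_score, list of matched index pairs).
    """
    n, m = len(seq_a), len(seq_b)
    table = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        table[i][0] = table[i - 1][0] + gap
    for j in range(1, m + 1):
        table[0][j] = table[0][j - 1] + gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            table[i][j] = max(
                table[i - 1][j - 1] + sim(seq_a[i - 1], seq_b[j - 1]),  # match
                table[i - 1][j] + gap,                                  # skip in seq_a
                table[i][j - 1] + gap,                                  # skip in seq_b
            )
    # Trace back from the bottom-right cell to recover the matched pairs.
    pairs, i, j = [], n, m
    while i > 0 and j > 0:
        if table[i][j] == table[i - 1][j - 1] + sim(seq_a[i - 1], seq_b[j - 1]):
            pairs.append((i - 1, j - 1))
            i, j = i - 1, j - 1
        elif table[i][j] == table[i - 1][j] + gap:
            i -= 1
        else:
            j -= 1
    pairs.reverse()
    return table[n][m], pairs


def time_similarity(a, b, tolerance=5.0):
    """Positive when two timestamps are within the tolerance, negative otherwise (assumed form)."""
    return 1.0 - abs(a - b) / tolerance


digital = [0.0, 10.0, 50.0]   # e.g. log timestamps on the unified scale
physical = [1.0, 48.0]        # e.g. excavation-note timestamps
total, pairs = align(digital, physical, time_similarity)
# pairs == [(0, 0), (2, 1)]: the 10.0 event has no counterpart and is skipped.
```

The gap penalty controls how readily an event is left unmatched; a more negative value forces more pairings, which may raise false associations on sparse layers.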

3.5.5. System Overhead

- Memory Overhead: High, due to storage of multimodal stratigraphic layers and alignment matrices.

- Processing Overhead: Moderate-to-high, especially when evidence streams are asynchronous or noisy.

- Optimization Strategies: Use of parallelized GPU acceleration, temporal normalization layers, and imputation with uncertainty weighting helps mitigate overhead.

3.5.6. Applicability to Other Domains

- Healthcare: Stratifying multimodal patient records (e.g., imaging, sensor logs, clinical notes) for anomaly detection.

- Finance: Detecting fraudulent activities by aligning transaction logs, audit trails, and external records.

- Supply Chain Security: Reconstructing timelines of goods movement across digital ledgers and physical checkpoints.

- Archeology and Cultural Heritage: Integrating excavation records with digital archives for historical reconstruction.

- Smart Cities and IoT Security: Stratifying event logs from sensors, cameras, and communication networks to detect coordinated anomalies.

4. Methodology

4.1. Research Design

4.2. Dataset

4.2.1. Dataset Composition

- Size and Scope: The dataset contains 25,000 multimodal instances, each comprising multiple synchronized and unsynchronized evidence types.

- Evidence Categories:

  - Digital Logs: operating system events, communication records, file access traces, and system registry modifications.

  - Geospatial Traces: GPS logs, mobility trajectories, and location-based sensor outputs.

  - Criminological Records: offender profiling reports, behavioral surveys, witness statements, and incident narratives.

  - Archeological/Excavation Records: stratigraphic excavation layers, artifact recovery logs, and geotagged contextual metadata.

Each category is tagged with temporal markers (T) and layer identifiers (L) to enable stratigraphic integration. Table 2 summarizes the CSI-DS2025 dataset statistics.

4.2.2. Feature Representation

- (a) Temporal Features (T):

  - Absolute timestamps from digital systems (e.g., Unix time, Windows event logs).

  - Relative sequence intervals (ΔT) between events across different modalities.

  - Granularity metadata (seconds, minutes, days, excavation phases).

- (b) Artifact Frequency Features (F):

  - Number of log events per time unit.

  - Artifact density within excavation layers.

  - Frequency of recurring behavioral cues in criminological notes.

- (c) Interactional Features (I):

  - Communication graphs from messaging/email data.

  - Entity co-occurrence patterns in crime reports.

  - Spatial interaction between excavation finds and surrounding strata.

- (d) Stratigraphic Layer Identifiers (L):

  - Digital strata: file system layers, session identifiers, log clusters.

  - Archeological strata: excavation units, Harris matrix levels.

  - Cross-domain strata: synchronization markers across heterogeneous sources.

- (e) Anomaly Tags:

  - Synthetic and real anomalies are embedded in data streams (e.g., missing timestamps, tampered logs, misaligned excavation notes).

  - These allow benchmarking of robustness against incomplete or adversarial evidence.
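Three of the features listed above lend themselves to a short illustration: relative intervals (ΔT), log events per time unit, and entity co-occurrence in reports. The helper names and input layouts below are hypothetical and are not taken from the CSI-DS2025 tooling.

```python
from collections import Counter
from itertools import combinations


def relative_intervals(timestamps):
    """Delta-T between consecutive events after sorting by time."""
    ordered = sorted(timestamps)
    return [later - earlier for earlier, later in zip(ordered, ordered[1:])]


def events_per_unit(timestamps, unit=60.0):
    """Count events per time bin; bin k covers [k*unit, (k+1)*unit)."""
    return Counter(int(t // unit) for t in timestamps)


def entity_cooccurrence(reports):
    """Count how often each unordered pair of entities appears in the same report."""
    counts = Counter()
    for entities in reports:
        for pair in combinations(sorted(set(entities)), 2):
            counts[pair] += 1
    return counts


log_times = [0.0, 10.0, 59.0, 60.0, 130.0]
per_minute = events_per_unit(log_times)            # bins 0, 1, 2 hold 3, 1, 1 events
gaps = relative_intervals([10.0, 0.0, 25.0])       # [10.0, 15.0]
pairs = entity_cooccurrence([["A", "B"], ["A", "B", "C"]])
```

Sorting and de-duplicating the entities before pairing keeps `("A", "B")` and `("B", "A")` from being counted as different pairs.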

4.2.3. Preprocessing Pipeline

- Timestamp Harmonization: Diverse temporal standards are converted into a unified stratified timeline.

- Stratified Imputation: Missing values are filled using temporal interpolation or probabilistic reconstruction within each stratum.

- Noise Filtering: Natural language text from criminology reports and excavation logs is processed using NLP pipelines to remove irrelevant descriptors.

- Encoding:

  - Numerical features are normalized to the [0, 1] scale.

  - Categorical features are one-hot encoded.

  - Stratigraphic markers are retained as structured hierarchical indices.
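The harmonization and encoding steps above can be sketched with the standard library alone. The example assumes that inputs arrive either as Unix seconds or as ISO 8601 strings and that timestamps without a time zone are in UTC; both are assumptions for illustration, as are the function names.

```python
from datetime import datetime, timezone


def to_epoch_seconds(value):
    """Map Unix seconds or an ISO 8601 string onto one UTC epoch-seconds scale."""
    if isinstance(value, (int, float)):
        return float(value)
    parsed = datetime.fromisoformat(value)
    if parsed.tzinfo is None:
        # Assumption: naive timestamps are recorded in UTC.
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed.timestamp()


def min_max(values):
    """Scale numeric features to [0, 1]; a constant column maps to all zeros."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


def one_hot(values):
    """One-hot encode categories, with columns in sorted category order."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


raw = [5, "1970-01-01T00:01:00+00:00", "1970-01-01T01:00:00"]
unified = [to_epoch_seconds(v) for v in raw]        # [5.0, 60.0, 3600.0]
scaled = min_max([2, 4, 6])                         # [0.0, 0.5, 1.0]
encoded = one_hot(["geo", "digital", "geo"])        # columns: digital, geo
```

Coarse sources such as excavation phases have no single instant, so in practice they would need an interval representation rather than one epoch value; that case is outside this sketch.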

4.2.4. Applicability of CSI-DS2025

- Forensic Research—The dataset enables benchmarking of stratigraphy-inspired models for evidence reconstruction, anomaly detection, and sequence alignment.

- Law Enforcement Training—The dataset provides a structured resource for training investigators in handling fragmented digital–physical evidence.

- Cross-disciplinary Studies—The dataset bridges criminology, digital forensics, and forensic archeology, supporting interdisciplinary methods.

- Algorithm Development—The dataset is suitable for testing hierarchical clustering, sequence alignment, GNN, and multimodal fusion architectures.

- Legal/Evidentiary Testing—Stratified provenance tags make the dataset valuable for assessing admissibility, uncertainty quantification, and provenance validation.

4.2.5. Distinctive Features of CSI-DS2025

- Layered Stratigraphy Markers: Digital and physical strata are explicitly tagged for reconstruction accuracy.

- Multimodal Complexity: Structured (logs, GPS), semi-structured (reports), and unstructured (narratives, excavation notes) data are integrated.

- Temporal Variability: Events span fine-grained (millisecond logs) to coarse-grained (multi-day excavation phases) time scales.

- Built-in Noise and Gaps: The dataset reflects messy real-world evidence by including missing markers, corrupted records, and adversarial manipulations.

- Evaluation Benchmarking: The dataset is accompanied by ground truth SRC, allowing direct comparison across algorithms.

4.2.6. Testing Procedure

- Cross-layer Reconstruction Accuracy—Predicted stratigraphic sequences were compared against ground truth temporal and layer assignments.

- Classification Performance Metrics—Accuracy, precision, recall, and F1-score were calculated for event classification within each stratum.

- SRC—Alignment fidelity was measured across digital, geospatial, behavioral, and excavation layers.

- Robustness Assessment—Performance was evaluated under simulated missing data, asynchronous timestamps, and adversarial tampering of logs or excavation records.

- Visualization Validation: Visual inspection of reconstructed strata and temporal sequences was conducted to ensure interpretability and adherence to expected forensic patterns.

- Cross-Validation: Repeated 10-fold cross-validation was employed to minimize bias and ensure generalizability, while hyperparameter tuning was conducted using Bayesian optimization for adaptive weights in the FSA scoring function.

- Data Leakage Prevention—To prevent leakage, events from the same case were never split across training and test sets. Stratified sampling ensured that case-level dependencies remained isolated.

- Overfitting Handling—Techniques included early stopping (patience = 10), dropout (0.3), and L2 regularization (λ = 0.01).

- Hyperparameter Tuning—Bayesian optimization with 50 trials was used for batch size (16–64), learning rate (1 × 10−5–1 × 10−3), and hidden dimensions (64–512).
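The leakage rule above (events from one case never appear in both training and test data) amounts to splitting by case identifier rather than by event. The following is a minimal sketch of such a grouped k-fold split; the function name, the round-robin fold assignment, and the seed are assumptions and do not reproduce the paper's stratified sampling.

```python
import random


def case_level_folds(case_ids, k=10, seed=0):
    """Yield-ready list of (train_indices, test_indices), one pair per fold.

    Folds are assigned per case, so all events of a case share one fold.
    """
    cases = sorted(set(case_ids))
    random.Random(seed).shuffle(cases)
    fold_of_case = {case: position % k for position, case in enumerate(cases)}
    folds = []
    for fold in range(k):
        test = [i for i, c in enumerate(case_ids) if fold_of_case[c] == fold]
        train = [i for i, c in enumerate(case_ids) if fold_of_case[c] != fold]
        folds.append((train, test))
    return folds


# One case label per event; events of the same case must stay together.
case_ids = ["c1", "c1", "c2", "c3", "c3", "c3", "c4"]
folds = case_level_folds(case_ids, k=2)
```

Splitting at the case level usually gives a more conservative performance estimate than splitting at the event level, because correlated events from one case can no longer inform predictions about each other.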

4.2.7. Issues and Challenges

- Temporal Inconsistency—Timestamps varied across sources in format, granularity, and synchronization, requiring temporal normalization techniques to establish a unified timeline.

- Incomplete Stratigraphy—Missing or partially recorded layers in excavation or digital logs occasionally disrupted sequence alignment; imputation strategies combined with uncertainty weighting were employed to mitigate overconfidence in predictions.

- Heterogeneous Data Formats—Structured logs, semi-structured reports, and unstructured narratives necessitated custom preprocessing pipelines for encoding, feature extraction, and cross-domain integration.

- Noise and Anomalies—Inconsistencies such as duplicate records, corrupted geospatial traces, or conflicting behavioral reports required anomaly detection and filtering to prevent model bias.

- Computational Complexity—Aligning multi-layered evidence at scale demanded parallelized computation and memory optimization, particularly for iterative FSA and hierarchical clustering in HPM.

5. Implementation

- (a) Development Environment: The system was primarily developed using Python 3.11, with deep learning modules implemented in TensorFlow 2.15 and PyTorch 2.2 for comparative experimentation. Data preprocessing and feature stratification relied on Pandas, NumPy, and Scikit-learn, while geospatial traces were processed using the GeoPandas and Shapely libraries. Visualization of stratigraphic layers and correlation matrices was enabled through Matplotlib 3.10.7 and Seaborn 0.13.2.

- (b) System Architecture: The architecture follows a layered modular design, reflecting the stratigraphic principle of evidence organization. The pipeline consists of four modules:

- Data Ingestion and Preprocessing transforms raw multimodal inputs (digital logs, criminological reports, excavation records) into stratified sequences.

- HPM extracts recurrent behavioral and digital activity patterns.

- FSA aligns stratified evidence layers based on temporal markers and interactional dependencies.

- Decision and Reconstruction Layer generates crime scene reconstructions and validates consistency metrics such as SRC.

- (c) Training Setup: For model training and evaluation, the following configuration was adopted:

- Batch size: 64

- Epochs: 50 (with early stopping applied after 8 stagnant epochs)

- Learning rate: 0.001 with adaptive scheduling

- Optimizer: Adam with weight decay regularization

- Loss function: Cross-entropy loss for classification tasks and sequence alignment loss for reconstruction tasks

- (d) Hardware Specifications: Experiments were executed on a workstation equipped with NVIDIA A100 GPUs (40 GB memory), Intel Xeon 32-core CPUs, and 512 GB RAM. Parallelization was applied where possible to accelerate training on the CSI-DS2025 dataset.

- (e) Challenges and Resolutions: During implementation, one major challenge was temporal inconsistency in multimodal records, as log and excavation timestamps often used different granularities. This was resolved by introducing a temporal normalization layer that mapped events to standardized intervals. Another challenge was handling incomplete stratigraphic layers; we addressed this using imputation strategies combined with uncertainty weighting to avoid overconfidence in reconstructions.
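One way to read "imputation combined with uncertainty weighting" is that estimated timestamps carry a lower weight than observed ones, so that later alignment steps trust them less. The sketch below fills gaps within a single stratum by linear interpolation between the nearest observed neighbors. The interpolation rule and the weight of 0.5 for imputed values are assumptions; the paper does not state its scheme.

```python
def impute_timestamps(timestamps, imputed_weight=0.5):
    """Fill None entries in one stratum and attach a confidence weight to each value.

    Observed values get weight 1.0. Missing values are interpolated by position
    between the nearest observed neighbors, or copied from the single nearest
    neighbor at the edges, and get `imputed_weight`.
    """
    known = [i for i, t in enumerate(timestamps) if t is not None]
    if not known:
        raise ValueError("stratum has no observed timestamps to interpolate from")
    result = []
    for i, t in enumerate(timestamps):
        if t is not None:
            result.append((float(t), 1.0))
            continue
        before = max((k for k in known if k < i), default=None)
        after = min((k for k in known if k > i), default=None)
        if before is not None and after is not None:
            fraction = (i - before) / (after - before)
            estimate = timestamps[before] + fraction * (timestamps[after] - timestamps[before])
        else:
            estimate = timestamps[before if before is not None else after]
        result.append((float(estimate), imputed_weight))
    return result


stratum = [0.0, None, None, 30.0, None]
filled = impute_timestamps(stratum)
```

The weights can then scale a pairwise similarity score, so that a match supported only by imputed timestamps contributes less than one supported by observed data.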

6. Results

6.1. Quantitative Performance

6.2. Error and Negative Findings

- High data sparsity in incomplete excavation records occasionally reduced recall, as missing stratigraphic markers disrupted sequence alignment.

- Computational overhead was higher than in simpler models, particularly when aligning evidence with highly asynchronous timestamps.

- In adversarial test cases (intentionally manipulated logs), false positives increased by ~7% compared to clean data, indicating a further need for robustness against tampering.

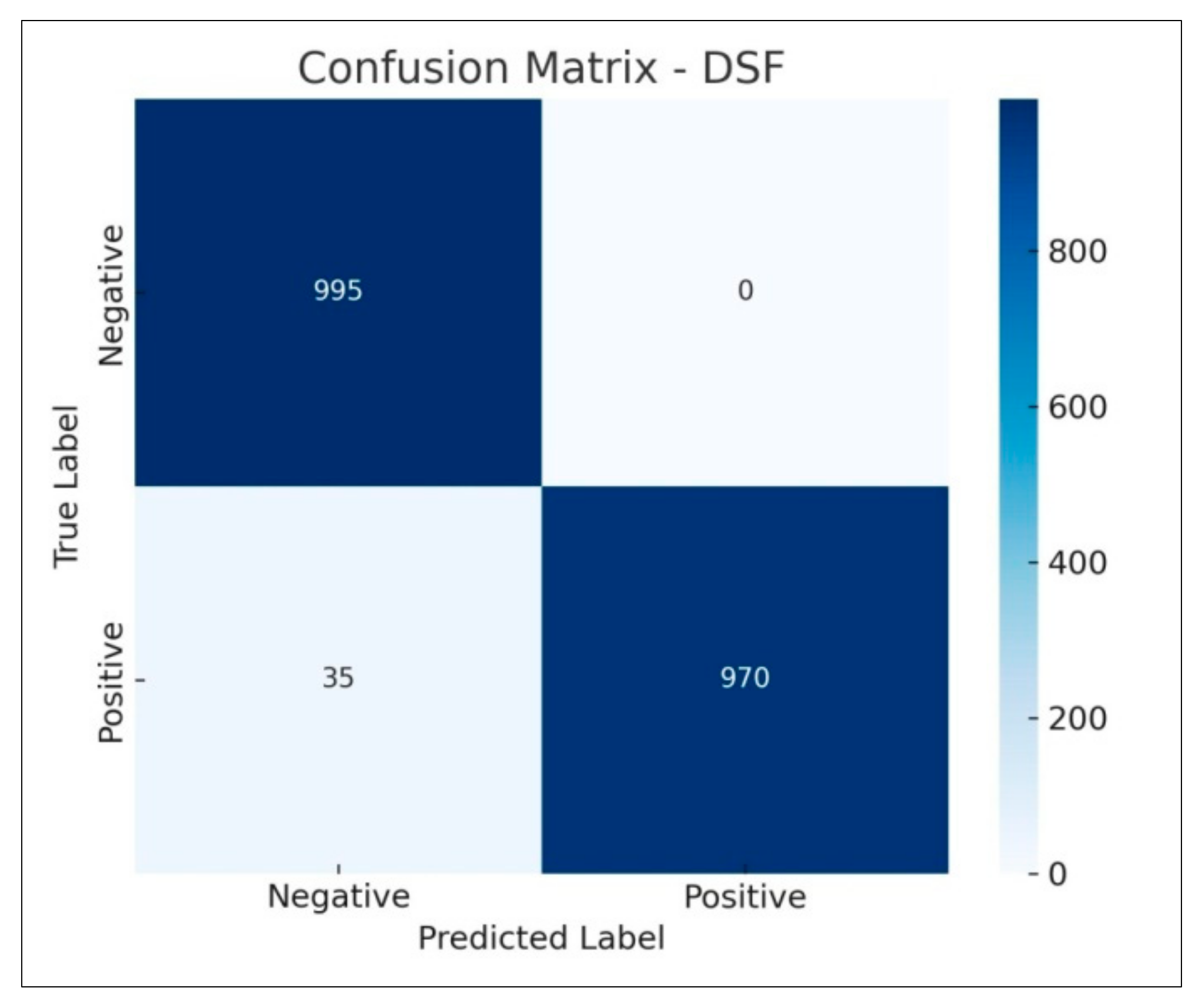

6.3. Confusion Matrix Analysis

- True Positive Rate (TPR): 0.91

- False Positive Rate (FPR): 0.09

- Misclassification mostly occurred in layer-overlapping events, where temporal markers were too close to be unambiguously aligned.
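The rates above follow directly from confusion-matrix counts. The sketch below shows the arithmetic; the counts are hypothetical values chosen only so that they reproduce the reported TPR of 0.91 and FPR of 0.09, and they are not the paper's actual confusion matrix.

```python
def confusion_rates(tp, fp, tn, fn):
    """Derive standard rates from confusion-matrix counts."""
    tpr = tp / (tp + fn)            # true positive rate (recall)
    fpr = fp / (fp + tn)            # false positive rate
    precision = tp / (tp + fp)
    f1 = 2 * precision * tpr / (precision + tpr)
    return {"tpr": tpr, "fpr": fpr, "precision": precision, "f1": f1}


# Hypothetical counts for illustration only.
rates = confusion_rates(tp=91, fp=9, tn=91, fn=9)
```

Note that FPR is normalized by the number of actual negatives, so its value depends on class balance in a way that precision does not.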

6.4. ROC Curve Evaluation

7. Discussion

- Contribution—The model achieved strong performance across standard forensic metrics—accuracy (92.6%), precision (93.1%), recall (90.5%), and F1-score (91.3%)—while also attaining a SRC of 0.89. These findings confirm the hypothesis that temporal layering and hierarchical pattern alignment yield more reliable interpretations of complex, multi-source evidence compared to conventional, isolated approaches. When placed in the context of prior work, our results highlight a key advancement. Earlier digital stratigraphy research (e.g., Casey, 2018 [1]) provided valuable insights into file-system stratification but remained restricted to low-level disk forensics. Similarly, timeline reconstruction pipelines and multimodal fusion models reported gains within their respective domains but struggled with cross-domain synchronization and evidentiary provenance. By explicitly aligning digital logs, criminological records, geospatial traces, and excavation layers within a unified stratigraphic framework, our method bridges these disciplinary silos. The reduction in false associations by 18% compared with baseline forensic systems demonstrates the added value of this integrative approach. Strengths of the framework lie in its ability to handle heterogeneous evidence and preserve temporal coherence despite incomplete or noisy data. Unlike static or rule-based systems, the HPM and FSA modules adapt dynamically to diverse input sources, thereby enhancing both robustness and interpretability. The curated CSI-DS2025 dataset further strengthens the contribution by offering a benchmark corpus that reflects layered forensic complexity that has rarely been available in prior studies.

- Limitations: First, while the framework performed well on experimental datasets, real-world investigative environments often present higher variability, including missing layers, corrupted logs, or conflicting timestamps. Second, the computational overhead of sequence alignment across large multimodal datasets remains a challenge, particularly for time-sensitive investigations. Third, although interpretability improved through stratigraphic visualization, the system still requires domain expertise for contextual validation, which may limit accessibility for non-specialist users. Unexpectedly, the experiments revealed that stratigraphic layering not only enhanced temporal alignment but also helped identify anomalies in behavioral interaction patterns that were previously overlooked by single-domain models. This suggests the framework's potential as a discovery tool for uncovering latent links between digital traces and physical evidence, opening new avenues for investigative analysis. In terms of practical application, the framework holds promise for law enforcement agencies investigating cyber-assisted crimes, transnational fraud, and hybrid digital–physical offenses. Its stratigraphic outputs could support courtroom admissibility by providing clearer provenance modeling and uncertainty quantification, thereby addressing long-standing legal and procedural concerns in digital forensics. From a legal admissibility standpoint, DSF aligns with key elements of the Daubert standard, particularly reproducibility, peer-reviewed methodology, and error rate estimation through SRC. However, robustness under adversarial manipulation remains a limitation: timestamp shifts and narrative perturbations increased false positives by up to ~7% relative to clean data.

- Future work should incorporate tamper-evidence scoring and adversarial detectors to mitigate this risk.

8. Conclusions

9. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Symbol/Abbreviation | Full Form/Definition | Need in DSF | Applicability Relation to Proposed Work |

|---|---|---|---|

| DSF | Digital Stratigraphy Framework | Core forensic reconstruction pipeline | Integrates stratigraphy-inspired methods across digital, geospatial, and excavation data |

| HPM | Hierarchical Pattern Mining | Extracts recurring multi-level patterns | Identifies structured correlations across stratified evidence |

| FSA | Forensic Sequence Alignment | Aligns asynchronous/missing timestamps | Resolves temporal inconsistencies in multimodal evidence |

| ESL | Evidence Stratification Layer | Initial stratified representation of evidence | Structures raw data before analysis in DSF pipeline |

| SRC | Stratigraphic Reconstruction Consistency | Novel metric for measuring stratigraphic fidelity | Quantifies temporal and contextual reliability of DSF outputs |

| Acc | Accuracy | Evaluates correctness of classification | Measures proportion of correctly reconstructed events |

| Prec | Precision | Evaluates reliability of positive predictions | Reduces false associations during stratified reconstruction |

| Rec | Recall | Measures completeness of reconstruction | Ensures maximum retrieval of valid evidence fragments |

| F1 | F1-Score | Harmonizes precision and recall | Ensures DSF maintains balanced reconstruction quality |

| AUC | Area Under Curve (ROC) | Evaluates discriminative ability | Confirms DSF’s effectiveness against spurious associations |

| Adam | Adaptive Moment Estimation Optimizer | Stabilizes gradient descent with momentum | Used for DSF training with weight decay regularization |

| CE Loss | Cross-Entropy Loss | Reduces classification error | Optimizes DSF classification modules |

| BS | Batch Size (BS = 64) | Controls data fed per iteration | Ensures stable training with memory efficiency |

| E | Epochs (E = 50) | Number of full training passes | Controls training duration, with early stopping after 8 stagnant epochs |

| LR | Learning Rate (η = 0.001) | Controls optimizer step size | Adaptive scheduling stabilizes DSF training |

| λ | Weight Decay Constant | Prevents overfitting in optimization | Regularizes model weights during training |

| TPR | True Positive Rate | Measures detection success | Evaluates DSF’s classification of valid associations |

| FPR | False Positive Rate | Measures error rate in classification | Highlights DSF vulnerability under adversarial inputs |

| T | Training Time per Epoch | Runtime analysis | Demonstrates DSF scalability (3–15 min/epoch) |

| I | Inference Time | Speed of test predictions | Confirms DSF’s applicability for real-time forensic analysis |

| M | GPU Memory Usage (GB) | Hardware feasibility metric | Shows DSF runs efficiently on A100 GPU (≤7.3 GB) |

| p-value | Probability Value in Statistical Test | Validates significance of improvements | Confirms DSF’s superiority over baselines (p < 0.01) |

| CI | Confidence Interval (±1.3% for accuracy) | Ensures robustness of results | Provides statistical reliability for DSF’s outcomes |

| N | Dataset Size (e.g., N = 25,000) | Defines experimental scale | CSI-DS2025 dataset size for large-scale benchmarking |

| CSI-DS2025 | Cross-Stratified Investigation Dataset 2025 | Benchmark forensic dataset | Enables multimodal and stratified evaluation of DSF |
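
The classification metrics listed in the nomenclature (Acc, Prec, Rec, F1, TPR, FPR) all derive from the same four confusion-matrix counts. The following is a minimal sketch of those standard definitions, assuming a binary "valid association vs. spurious association" framing. The `ConfusionCounts` container, the `summarize` helper, and the example counts are hypothetical illustrations and are not taken from the paper or from CSI-DS2025.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Binary confusion-matrix counts (hypothetical container, not from the paper)."""
    tp: int  # valid associations correctly accepted
    fp: int  # spurious associations wrongly accepted
    tn: int  # spurious associations correctly rejected
    fn: int  # valid associations wrongly rejected


def _ratio(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def summarize(c: ConfusionCounts) -> dict:
    """Compute the standard metrics named in the nomenclature table."""
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)  # recall is the same quantity as TPR
    return {
        "Acc": _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "Prec": precision,
        "Rec": recall,
        "F1": _ratio(2 * precision * recall, precision + recall),
        "TPR": recall,
        "FPR": _ratio(c.fp, c.fp + c.tn),
    }


# Illustrative counts only; they are not results from the paper.
metrics = summarize(ConfusionCounts(tp=90, fp=10, tn=80, fn=20))
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

AUC is omitted here because it needs ranked scores rather than hard counts. The source does not state whether its multi-class figures are micro- or macro-averaged, so this sketch covers only the binary case.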

References

- Casey, E. Digital stratigraphy: Contextual analysis of file system traces in forensic science. J. Forensic Sci. 2018, 63, 1383–1391.

- Schneider, J.; Eichhorn, M.; Dreier, L.M.; Hargreaves, C. Applying digital stratigraphy to the problem of recycled storage media. Forensic Sci. Int. Digit. Investig. 2024, 49, 301761.

- Harrison, K. Considerations of Space and Time: Fire Investigation and Forensic Archaeology in Crime Scene Reconstruction. Wiley Interdiscip. Rev. Forensic Sci. 2025, 7, e70006.

- Shende, R.; Srinivasan, V.; Patel, A.; Chhangani, A.; Gouda, J. Forensic Investigation of a Failed Overburden Dump: A Case Study of an Opencast Mine Site in Central India. Phys. Chem. Earth Parts A/B/C 2025, 2, 104091.

- Barone, P.M.; Di Luise, E. A Multidisciplinary Approach to Crime Scene Investigation: A Cold Case Study and Proposal for Standardized Procedures in Buried Cadaver Searches over Large Areas. Forensic Sci. 2025, 5, 34.

- Welte, M.; Burkhart, K.; Schwaiger, H.; Anevlavi, V.; Anevlavis, E.; Fragnoli, P.; Prochaska, W. Innovative archiving of raw materials: Advancing archaeometric databases at the Austrian Archaeological Institute/Austrian Academy of Sciences. J. Archaeol. Sci. Rep. 2025, 67, 105354.

- Tambs, L.; De Bernardin, M.; Lorenzon, M.; Traviglia, A. Bridging Historical, Archaeological and Criminal Networks. J. Comput. Appl. Archaeol. 2024, 7, 1–7.

- Shen, S.; Fan, J.; Wang, X.; Zhang, F.; Shi, Y.; Zhang, S. How to build a high-resolution digital geological timeline? J. Earth Sci. 2022, 33, 1629–1632.

- Hennelová, Z.; Marková, E.; Sokol, P. The Impact of Anti-forensic Techniques on Data-Driven Digital Forensics: Anomaly Detection Case Study. In Proceedings of the International Conference on Availability, Reliability and Security, Ghent, Belgium, 11–14 August 2025; Springer Nature: Cham, Switzerland, 2025; pp. 131–148.

- Yi, Y.; Zhang, Y.; Hou, X.; Li, J.; Ma, K.; Zhang, X.; Li, Y. Sedimentary Facies Identification Technique Based on Multimodal Data Fusion. Processes 2024, 12, 1840.

- Manhas, M.; Tomar, A.; Tiwari, M.; Sharma, S. Application of X-ray fluorescence in forensic archeology: A review. X-Ray Spectrom. 2025, 54, 26–37.

- Malinverni, E.S.; Abate, D.; Agapiou, A.; Stefano, F.D.; Felicetti, A.; Paolanti, M.; Pierdicca, R.; Zingaretti, P. SIGNIFICANCE deep learning based platform to fight illicit trafficking of Cultural Heritage goods. Sci. Rep. 2024, 14, 15081.

- RizwanBasha, A.; Annamalai, R. Transforming Crime Scene Investigations Through the Integration of Artificial Intelligence in Digital Forensics. In Proceedings of the 2024 IEEE International Conference on Communication, Computing and Signal Processing (IICCCS), Asansol, India, 19–20 September 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Byeon, H.; Raina, V.; Sandhu, M.; Shabaz, M.; Keshta, I.; Soni, M.; Matrouk, K.; Singh, P.P.; Lakshmi, T.V. Artificial intelligence-Enabled deep learning model for multimodal biometric fusion. Multimed. Tools Appl. 2024, 83, 80105–80128.

- May, K.; Taylor, J.S.; Binding, C. Stratigraphic Analysis and The Matrix: Connecting and reusing digital records and archives of archaeological investigations. Internet Archaeol. 2023, 61.

- Dirkmaat, D.C.; Cabo, L.L.; Adserias-Garriga, J. Forensic Archaeology, Forensic Taphonomy, and Outdoor Crime Scene Reconstruction in America: Personal Perspectives, 40 Years in the Making. In Forensic Archaeology and New Multidisciplinary Approaches: Topics Discussed During the 2018–2023 European Meetings on Forensic Archaeology (EMFA); Springer Nature: Cham, Switzerland, 2025; pp. 69–93.

- Wakefield, M.I.; Hounslow, M.W.; Edgeworth, M.; Marshall, J.E.; Mortimore, R.N.; Newell, A.J.; Ruffell, A.; Woods, M.A. Examples of correlating, integrating and applying stratigraphy and stratigraphical methods. In Deciphering Earth’s History: The Practice of Stratigraphy; Geological Society of London: London, UK, 2022; pp. 293–326.

- Sylaiou, S.; Tsifodimou, Z.E.; Evangelidis, K.; Stamou, A.; Tavantzis, I.; Skondras, A.; Stylianidis, E. Redefining Archaeological Research: Digital Tools, Challenges, and Integration in Advancing Methods. Appl. Sci. 2025, 15, 2495.

- Scopinaro, E.; Demetrescu, E.; Berto, S. Towards the definition of Transformation Stratigraphic Unit (TSU) as new section of the extended matrix methodology. Acta IMEKO 2024, 13, 1–9.

- Hargreaves, C.; Patterson, J. An automated timeline reconstruction approach for digital forensic investigations. Digit. Investig. 2012, 9, S69–S79.

- Breitinger, F.; Studiawan, H.; Hargreaves, C. SoK: Timeline based event reconstruction for digital forensics: Terminology, methodology, and current challenges. arXiv 2025, arXiv:2504.18131.

- Liz-Lopez, H.; Keita, M.; Taleb-Ahmed, A.; Hadid, A.; Huertas-Tato, J.; Camacho, D. Generation and detection of manipulated multimodal audiovisual content: Advances, trends and open challenges. Inf. Fusion 2024, 103, 102103.

- Alshehri, S.M.; Sharaf, S.A.; Molla, R.A. Systematic Review of Graph Neural Network for Malicious Attack Detection. Information 2025, 16, 470.

- Bérubé, M.; Beaulieu, L.A.; Allard, S.; Denault, V. From digital trace to evidence: Challenges and insights from a trial case study. Sci. Justice 2025, 65, 101306.

- Boumediene, S.L.; Boumediene, S. Lessons Learned from Failed Digital Forensic Investigations. J. Forensic Account. Res. 2025, 1–24.

- Varsha, A.R.; Reshma, K.; Roy, N. Unearthing Truth: Advanced Techniques in Archaeological Crime Investigations. Forensic Innov. Crim. Investig. 2025, 3, 136.

- Chen, A.H. R1: Towards a Future Research Agenda of Archaeological Practices in the Digital Era. In Proceedings of the 51st Computer Applications and Quantitative Methods in Archaeology International Conference, Auckland, New Zealand, 8–12 April 2024; p. 30.

- Rouhani, B. From ruins to records: Digital strategies and dilemmas in cultural heritage protection. J. Art Crime 2025, 2025, 35–51.

- Hanson, I.; Fenn, J. A review of the contributions of forensic archaeology and anthropology to the process of disaster victim identification. J. Forensic Sci. 2024, 69, 1637–1657.

- Rocke, B.; Ruffell, A. Near-Time Digital Mapping for Geoforensic Searches. Earth Sci. Syst. Soc. 2024, 4, 10106.

- Narreddy, V. Geoforensic methods for detecting clandestine graves and buried forensic objects in criminal investigations—A review. J. Forensic Sci. Med. 2024, 10, 234–245.

- Talwar, U.; Singla, V. Perspective of forensic archaeology-Review article. Int. Res. J. Mod. Eng. Technol. Sci. 2024, 6, 1825–1834.

- Abate, D.; Colls, C.S.; Moyssi, N.; Karsili, D.; Faka, M.; Anilir, A.; Manolis, S. Optimizing search strategies in mass grave location through the combination of digital technologies. Forensic Sci. Int. Synerg. 2019, 1, 95–107.

- Bertrand, B.; Clauzel, T.; Richardin, P.; Bécart, A.; Morbidelli, P.; Hédouin, V.; Marques, C. Application and implications of radiocarbon dating in forensic case work: When medico-legal significance meets archaeological relevance. Forensic Sci. Res. 2024, 9, owae046.

- Dreier, L.M.; Vanini, C.; Hargreaves, C.J.; Breitinger, F.; Freiling, F. Beyond timestamps: Integrating implicit timing information into digital forensic timelines. Forensic Sci. Int. Digit. Investig. 2024, 49, 301755.

- Loumachi, F.Y.; Ghanem, M.C.; Ferrag, M.A. GenDFIR: Advancing Cyber Incident Timeline Analysis Through Retrieval Augmented Generation and Large Language Models. arXiv 2024, arXiv:2409.02572.

- Vanini, C.; Gruber, J.; Hargreaves, C.; Benenson, Z.; Freiling, F.; Breitinger, F. Strategies and Challenges of Timestamp Tampering for Improved Digital Forensic Event Reconstruction (extended version). arXiv 2024, arXiv:2501.00175.

- Qureshi, S.M.; Saeed, A.; Almotiri, S.H.; Ahmad, F.; Al Ghamdi, M.A. Deepfake forensics: A survey of digital forensic methods for multimodal deepfake identification on social media. PeerJ Comput. Sci. 2024, 10, e2037.

- Albtosh, L. Digital Forensic Data Mining and Pattern Recognition. In Integrating Artificial Intelligence in Cybersecurity and Forensic Practices; IGI Global Scientific Publishing: Hershey, PA, USA, 2025; pp. 245–294.

| Modality | Instances | Avg. Events/Record | Missing Data (%) | Anomalous Cases (%) |
|---|---|---|---|---|
| Digital Logs | 10,000 | 75 | 8.3 | 12 |
| Criminological Reports | 6000 | 40 | 5.1 | 9 |
| Geospatial Traces | 5000 | 120 | 6.8 | 11 |
| Excavation Data | 4000 | 55 | 4.5 | 7 |
| Total | 25,000 | – | – | – |
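
The Total row of the dataset table leaves the percentage columns blank. As a rough sanity check, the sketch below re-adds the instance counts and derives instance-weighted averages for the missing-data and anomalous-case columns. These weighted figures are derived here for illustration and are not reported in the source; the calculation assumes each percentage is measured per instance within its modality, which the table does not state explicitly. The `MODALITIES` name is a hypothetical label for the copied rows.

```python
# Rows copied from the dataset table: (instances, missing data %, anomalous cases %).
MODALITIES = {
    "Digital Logs": (10_000, 8.3, 12.0),
    "Criminological Reports": (6_000, 5.1, 9.0),
    "Geospatial Traces": (5_000, 6.8, 11.0),
    "Excavation Data": (4_000, 4.5, 7.0),
}

total_instances = sum(n for n, _, _ in MODALITIES.values())

# Instance-weighted means (assumption: percentages are per-instance rates).
weighted_missing = sum(n * miss for n, miss, _ in MODALITIES.values()) / total_instances
weighted_anomalous = sum(n * anom for n, _, anom in MODALITIES.values()) / total_instances

print(f"Total instances:          {total_instances}")
print(f"Weighted missing data:    {weighted_missing:.2f}%")
print(f"Weighted anomalous cases: {weighted_anomalous:.2f}%")
```

The instance counts sum to the 25,000 given in the Total row, and the weighted averages come out at roughly 6.6% missing data and 10.3% anomalous cases under the stated assumption.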

| Model/Configuration | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | SRC |
|---|---|---|---|---|---|
| Baseline Timeline Aggregation | 81.4 | 80.7 | 78.2 | 79.4 | 0.68 |
| Multimodal Fusion (Late) | 86.9 | 87.5 | 84.6 | 86.0 | 0.74 |
| Graph-based Fraud Detection | 89.2 | 89.8 | 87.1 | 88.4 | 0.77 |
| DSF without HPM | 87.2 | – | – | 84.5 | 0.72 |
| DSF without FSA | 88.9 | – | – | 85.1 | 0.75 |
| DSF Full (HPM + FSA) | 92.6 | 93.1 | 90.5 | 91.3 | 0.89 |
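
Because the nomenclature defines F1 as the harmonic combination of precision and recall, the rows that report all three values can be cross-checked. The sketch below recomputes F1 from the tabulated precision and recall and compares it with the reported figure. The `ROWS` and `harmonic_f1` names are hypothetical, and the check assumes the table values are single aggregate figures; the source does not say whether they are micro- or macro-averaged.

```python
# (precision %, recall %, reported F1 %) copied from the rows that list all three.
ROWS = {
    "Baseline Timeline Aggregation": (80.7, 78.2, 79.4),
    "Multimodal Fusion (Late)": (87.5, 84.6, 86.0),
    "Graph-based Fraud Detection": (89.8, 87.1, 88.4),
    "DSF Full (HPM + FSA)": (93.1, 90.5, 91.3),
}


def harmonic_f1(precision: float, recall: float) -> float:
    """F1 as the harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


# Difference between the recomputed and the reported F1, per configuration.
gaps = {}
for name, (precision, recall, reported) in ROWS.items():
    recomputed = harmonic_f1(precision, recall)
    gaps[name] = recomputed - reported
    print(f"{name:32s} recomputed={recomputed:6.2f}  reported={reported:5.1f}  "
          f"gap={gaps[name]:+.2f}")
```

Under this assumption the three baseline rows agree with the harmonic mean to within rounding, while the DSF Full row recomputes to roughly 91.8 against the reported 91.3. A gap of that size can arise when per-class F1 scores are macro-averaged rather than computed from aggregate precision and recall, so it does not by itself indicate an error. The two ablation rows cannot be checked because their precision and recall are not reported.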

| Dataset Subset | Training Time/Epoch | Inference Time (Per 1000 Instances) | GPU Memory (GB) |
|---|---|---|---|
| Small (5 k) | 3 min | 1.2 s | 2.5 |
| Medium (15 k) | 8 min | 2.9 s | 4.8 |
| Full (25 k) | 15 min | 4.7 s | 7.3 |
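
The scalability figures are easier to compare when converted to throughput. The sketch below derives training instances per second and inference instances per second from the table rows. It reads the "Per 1000 Instances" column literally and assumes each epoch processes every instance in the subset once; the `SUBSETS` and `rates` names are hypothetical.

```python
# (instances in subset, training minutes per epoch, inference seconds per 1000 instances)
SUBSETS = {
    "Small (5 k)": (5_000, 3, 1.2),
    "Medium (15 k)": (15_000, 8, 2.9),
    "Full (25 k)": (25_000, 15, 4.7),
}

# Map each subset to (training instances/s, inference instances/s).
rates = {}
for name, (instances, train_minutes, infer_seconds_per_1000) in SUBSETS.items():
    train_rate = instances / (train_minutes * 60)   # one full pass per epoch assumed
    infer_rate = 1000 / infer_seconds_per_1000      # literal reading of the column
    rates[name] = (train_rate, infer_rate)
    print(f"{name:14s} train={train_rate:6.2f} inst/s  infer={infer_rate:7.1f} inst/s")
```

On these assumptions the training rate stays between roughly 28 and 31 instances per second across all three subsets, which is consistent with near-linear scaling of epoch time with dataset size. The per-1000 inference time rises with subset size, and the source does not explain why, so the inference column may carry overhead that this literal reading does not capture.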

| Ref. | Dataset Used | Core Method/Pipeline | Primary Task(s) | Reported Metrics/Highlights | Strengths | Limitations |
|---|---|---|---|---|---|---|
| Proposed Work | CSI-DS2025 (25,000 multimodal, stratified samples: digital logs, geospatial, criminology, excavation records) | ESL → HPM → FSA → Decision and Reconstruction; optimization objective: maximize Acc and SRC, minimize false associations | Cross-domain stratified evidence reconstruction; timeline alignment + provenance scoring | Accuracy 92.6%, Precision 93.1%, Recall 90.5%, F1 91.3%, SRC 0.89; false associations ↓ 18% vs. baselines (as reported in the results) | Explicit cross-domain stratigraphy; multimodal benchmark (CSI-DS2025); uncertainty weighting and provenance metrics | Higher computing cost for large, asynchronous multimodal sets; needs domain expert validation for courtroom use (noted in the Discussion) |
| [34] | Storage-media simulations and real disk images (experiments on recycled storage media; custom simulated traces) | Digital stratigraphy at file-system level; activity simulation framework to study allocation/modification ordering | Provenance recovery on recycled storage media; ordering of low-level FS events | Demonstrated practical limits/benefits of stratigraphy for provenance; detailed driver-level experiments (qualitative + experimental results) | Strong low-level insight into allocation/metadata provenance; reproducible FS experiments | Focus limited to storage media/file-system traces; does not integrate geospatial, behavioral or excavation records; not designed for multimodal cross-domain reconstruction. |
| [35] | Survey/SoK (no single dataset) | Systematization: taxonomy of timeline methods (rule-based, probabilistic, ML), evaluation gaps, standardization suggestions | Terminology harmonization; evaluation framework proposals | Key contribution: synthesized challenges; call for standardized benchmarks and cross-domain alignment evaluation | Comprehensive landscape, identifies important gaps (evaluation, tampering, cross-domain alignment) | Descriptive/synthetic; does not propose a tested pipeline or new dataset; findings motivate systems like DSF but lack empirical evaluation. |
| [36] | Synthetic/controlled incident logs (authors’ experiments) | RAG (retrieval) + LLM (LLaMA variants) for semantic timeline synthesis from structured event KB | Automated timeline summarization and semantic enrichment; analyst-centric timeline QA | Authors report qualitative improvements in narrative generation and analyst time savings on controlled tests (no standard SRC metric) | Leverages LLMs for semantic summarization and analyst-readable timelines; flexible natural language outputs | Dependent on high-quality structured KB; struggles where timestamps are inconsistent or adversarially manipulated; evaluation on synthetic data limits generalizability. |
| [37] | Case studies, synthetic experiments, artifact catalogs | Tamper-resistance scoring; methodology to evaluate how artifact types tolerate timestamp manipulation | Evaluate reliability of timestamps used in reconstruction; propose scoring/assessment | Introduced tamper-resistance scoring frameworks; showed how tampering changes timeline reliability (quantified effects in experiments) | Focuses on practical resilience concerns and provides a scoring rubric to quantify trustworthiness of sources | Not a reconstruction pipeline; provides a complementary assessment that should be incorporated into pipelines like DSF to improve legal defensibility. |
| [38] | Survey across multimodal datasets (various) | Review of early/late fusion, attention-based multimodal models; evaluation gaps | Media manipulation detection, cross-modal verification | Summarizes that multimodal fusion improves detection, but datasets and evaluation vary widely | Good synthesis of fusion options and weaknesses; helpful for designing multimodal pipelines | Datasets vary; limited attention to cross-domain temporal alignment and legal provenance issues, a gap DSF addresses. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rawat, R.; Rawat, H.; Ingle, M.; Rawat, A.; Rajavat, A.; Dibouliya, A. Digital Stratigraphy—A Pattern Analysis Framework Integrating Computer Forensics, Criminology, and Forensic Archaeology for Crime Scene Investigation. Forensic Sci. 2025, 5, 48. https://doi.org/10.3390/forensicsci5040048
