1. Introduction

Recommender systems (RS) have become essential components of modern digital platforms, helping users navigate vast information spaces by providing personalized content [1]. Among various recommendation approaches, Collaborative Filtering (CF) [2] remains one of the most effective techniques, relying on historical user-item interactions to infer preferences. CF has been successfully deployed in diverse domains such as e-commerce, streaming services, and social media [3,4]. However, CF models are highly sensitive to data quality. The presence of noisy, inconsistent, or malicious ratings can distort user similarity patterns and severely degrade recommendation performance [5,6].

To mitigate these challenges and enhance CF performance under adverse conditions, recent works have turned to Generative Adversarial Networks (GANs) as a means of modeling complex user-item interactions. GANs consist of a generator, which synthesizes data, and a discriminator, which distinguishes real from generated samples [7]. Their adversarial training setup enables the generation of realistic and diverse rating profiles, making them suitable for tasks such as simulating user behavior, improving latent representations, and augmenting sparse data [8,9]. For example, GANRS [10] introduced synthetic profile generation but struggled with mode collapse, a challenge later addressed in WGANRS [11] through Wasserstein loss. CFGAN [12] modeled user-item interactions in a latent space, while GANMF [13] combined matrix factorization with an autoencoder-based discriminator to enhance personalization. AACF [14] introduced attention and virtual items to improve training efficiency in sparse conditions. Despite these advancements, existing GAN-based models generally treat all ratings as equally reliable, without considering the varying degrees of uncertainty or credibility in user feedback. This assumption limits their ability to filter or correct misleading inputs, particularly in real-world CF recommender systems where rating reliability can vary significantly.

In parallel, Dempster–Shafer Theory (DST) has established itself as a powerful mathematical framework for uncertain reasoning and evidence fusion [15,16]. It has been successfully applied in diverse fields such as spatial object matching [17], hyperspectral image classification [18], groundwater potential mapping [19], and urban resilience assessment [20], where data imperfections, ambiguity, and conflicts are prevalent. These applications highlight DST’s versatility in representing uncertainty, aggregating heterogeneous evidence, and supporting robust decision-making in complex environments.

Within the realm of recommender systems, DST has been effectively applied to capture user preferences under incomplete or ambiguous conditions [21]. Prior works have used DST for trust estimation, multi-criteria recommendation [22], and multimodal fusion [23]. A recent approach [24] further enhances Evidential Collaborative Filtering by employing Deng entropy and the Best Worst Method to optimize the assessment of user reliability. By assigning belief masses instead of deterministic labels, DST provides a flexible mechanism for expressing varying levels of confidence, making it particularly well suited for environments with noisy or unreliable data.

Although both DST and GANs have shown individual success in the recommendation domain, their integration remains underexplored. In this paper, we present DST-AttentiveGAN, a novel confidence-aware architecture that embeds DST-derived trust signals into both the generator and the discriminator of a GAN framework for rating denoising. This unified approach allows the model to distinguish between reliable and unreliable user feedback more effectively during training and inference. The main contributions of this work are as follows:

We propose a novel DST-based framework for quantifying the reliability of ratings using multiple sources of evidence (item popularity, variance, and user activity).

We design a generator that employs a cross-attention mechanism to selectively focus on reliable rating components, guided by the DST-derived confidence scores.

We develop a discriminator conditioned on both rating values and DST-based trust levels, trained using a Wasserstein GAN loss with gradient penalty to ensure training stability.

We perform extensive evaluations on benchmark datasets under various noise settings, showing that DST-AttentiveGAN consistently outperforms existing GAN-based recommendation models in terms of robustness and accuracy.

The remainder of this paper is organized as follows. Section 2 details the proposed DST-AttentiveGAN architecture, including the construction of the confidence matrix, the cross-attentive generator, and the evidentially conditioned discriminator. Section 3 describes the experimental settings, including datasets, evaluation metrics, and implementation details, and presents a detailed performance analysis compared to the state-of-the-art baselines. Finally, Section 4 concludes the paper and outlines promising future research directions.

2. Method

Collaborative filtering recommender systems are often challenged by noisy, biased, or adversarial ratings that compromise prediction reliability. To address these issues, we propose DST-AttentiveGAN, a DST-guided Generative Adversarial Network (GAN) architecture that integrates evidence-based confidence modeling and cross-attention mechanisms. The model is designed to reconstruct denoised rating profiles while being guided by the estimated trustworthiness of each user-item interaction.

Figure 1 illustrates the overall architecture of our proposed DST-AttentiveGAN.

2.1. Frame of Discernment

To systematically characterize the reliability of user–item interactions, we define the frame of discernment as the hypothesis set formulated by Equation (1):

$$\Theta = \{\text{Reliable}, \text{Noisy}\} \quad (1)$$

where Reliable corresponds to the assumption that a rating is trustworthy and informative, while Noisy corresponds to the assumption that the rating is affected by bias, inconsistency, or adversarial manipulation.

2.2. Evidence Construction

Let $R \in \mathbb{R}^{N \times M}$ be the user-item rating matrix, where N is the number of users and M the number of items. To assess the reliability of each individual rating, we construct a confidence matrix based on three forms of evidence: item popularity, item rating variance, and user activity.

First, the popularity of an item i is defined as the proportion of users who have interacted with it, as shown in Equation (2):

$$\mathrm{Pop}(i) = \frac{1}{N} \sum_{u=1}^{N} \mathbb{1}_{u,i} \quad (2)$$

where N denotes the total number of users, and $\mathbb{1}_{u,i}$ is an indicator function that equals 1 if user u rated item i, and 0 otherwise.

Next, the variance of the ratings received by item i captures the level of disagreement among users, as defined in Equation (3):

Since this value is unbounded and can vary significantly across items, it is normalized using min-max scaling:

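To reflect user involvement, we define the activity of user u as the fraction of items they have rated, as shown in Equation (5):

$$\mathrm{Act}(u) = \frac{1}{M} \sum_{i=1}^{M} \mathbb{1}_{u,i} \quad (5)$$

where M is the total number of items, and $\mathbb{1}_{u,i}$ is an indicator function that returns 1 if user u rated item i, and 0 otherwise.

Although $\mathrm{Act}(u)$ is also bounded within $[0, 1]$, we apply min-max normalization for consistency across all evidence sources:

$$\widetilde{\mathrm{Act}}(u) = \frac{\mathrm{Act}(u) - \min_{u'} \mathrm{Act}(u')}{\max_{u'} \mathrm{Act}(u') - \min_{u'} \mathrm{Act}(u')} \quad (6)$$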

The three evidential signals are first expanded into full matrices P, V, and A, ensuring dimensional compatibility with the original rating matrix R. Together, they form the basis for a confidence matrix that captures the trust level of each user–item interaction. Since these signals provide complementary and sometimes conflicting perspectives on rating reliability, we adopt a fusion strategy guided by Dempster–Shafer Theory (DST).
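The three evidence signals described above can be sketched in plain Python as follows. This is a minimal illustration and not the authors' implementation: the function names `min_max` and `build_evidence`, the convention that 0 marks an unrated entry, and the use of population variance over observed ratings are all assumptions made for the example.

```python
from statistics import pvariance


def min_max(values):
    """Rescale a list of numbers to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def build_evidence(R):
    """Return matrices P, V, A with the same shape as R.

    Assumption: R is a list of N user rows with M entries, and 0 means "not rated".
    """
    n_users, n_items = len(R), len(R[0])

    # Item popularity: fraction of users who rated the item.
    popularity = [
        sum(1 for u in range(n_users) if R[u][i] != 0) / n_users
        for i in range(n_items)
    ]

    # Item variance over observed ratings (population variance is an assumption),
    # followed by min-max scaling across items.
    variance = []
    for i in range(n_items):
        observed = [R[u][i] for u in range(n_users) if R[u][i] != 0]
        variance.append(pvariance(observed) if len(observed) > 1 else 0.0)
    variance = min_max(variance)

    # User activity: fraction of items the user rated, then min-max scaled.
    activity = min_max([
        sum(1 for i in range(n_items) if R[u][i] != 0) / n_items
        for u in range(n_users)
    ])

    # Broadcast item-level and user-level signals to N x M matrices.
    P = [[popularity[i] for i in range(n_items)] for _ in range(n_users)]
    V = [[variance[i] for i in range(n_items)] for _ in range(n_users)]
    A = [[activity[u] for _ in range(n_items)] for u in range(n_users)]
    return P, V, A
```

Calling `build_evidence([[5, 0, 3], [4, 0, 0], [1, 2, 0]])` returns three 3 x 3 matrices; the first item, rated by every user with disagreeing scores, receives both the highest popularity and the highest normalized variance.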

DST is particularly well-suited here because it: (i) allows explicit modeling of uncertainty when evidence is incomplete, (ii) manages conflict between heterogeneous signals without forcing premature decisions, and (iii) is more flexible than classical probability theory, which generally assumes precise prior probabilities, while DST can handle situations where such priors are unavailable or only partially defined. This makes DST especially appropriate for recommender systems, where reliability signals are often noisy, incomplete, or contradictory.
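To make properties (i) and (ii) concrete, the sketch below applies the classical Dempster rule of combination to two mass functions over the frame {Reliable, Noisy}. It is only an illustration of textbook DST and is not the fusion used by the model, which relies on its own nonlinear aggregation. The helper `mass_from_score` and its `discount` parameter are hypothetical choices for turning an evidence score into a mass function.

```python
def mass_from_score(score, discount=0.8):
    """Turn an evidence score in [0, 1] into a mass function on {Reliable, Noisy}.

    Hypothetical mapping: `discount` is the share of mass committed to a single
    hypothesis; the remainder stays on the whole frame ("theta", i.e. ignorance).
    """
    return {
        "reliable": discount * score,
        "noisy": discount * (1.0 - score),
        "theta": 1.0 - discount,
    }


def dempster_combine(m1, m2):
    """Dempster's rule of combination for two masses on {Reliable, Noisy}."""
    # Conflict: mass the two sources assign to contradictory hypotheses.
    conflict = m1["reliable"] * m2["noisy"] + m1["noisy"] * m2["reliable"]
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    norm = 1.0 - conflict
    return {
        "reliable": (m1["reliable"] * m2["reliable"]
                     + m1["reliable"] * m2["theta"]
                     + m1["theta"] * m2["reliable"]) / norm,
        "noisy": (m1["noisy"] * m2["noisy"]
                  + m1["noisy"] * m2["theta"]
                  + m1["theta"] * m2["noisy"]) / norm,
        "theta": m1["theta"] * m2["theta"] / norm,
    }


fused = dempster_combine(mass_from_score(0.9), mass_from_score(0.8))
```

When both sources lean toward Reliable, the fused belief in Reliable exceeds either input, and the mass left on the whole frame shrinks, which is the behavior the paragraph above attributes to DST.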

In our framework, fusion is operationalized through a nonlinear aggregation that emphasizes agreements while penalizing conflicts, as shown in Equation (7):

This formulation serves as a practical instantiation of DST’s principles: the pairwise terms strengthen consistent signals, while the triple interaction term reduces the influence of contradictory evidence. The resulting confidence matrix encodes a trust-aware confidence score for each rating. This fused representation is subsequently provided as auxiliary guidance to both the generator and discriminator in the proposed DST-AttentiveGAN.

2.3. Generator Architecture with DST-Guided Cross-Attention

The generator G aims to reconstruct a denoised rating vector for each user u. It takes two inputs: the user’s normalized (and potentially noisy) rating vector, and a vector of DST-based confidence scores that quantify the reliability of each rating entry.

To effectively leverage this evidential guidance, we employ a cross-attention mechanism that enables the generator to selectively focus on trustworthy components of the rating vector. As shown in Equation (8), the input vectors are projected into a shared latent space using learnable linear transformations, where the projection weights are trainable matrices and d represents the dimensionality of the attention space. Here, the confidence vector derived from Dempster–Shafer Theory (DST) is used as the query, while the rating vector serves as the key and value for attention computation.

The core of the attention mechanism is the scaled dot-product operation (Equation (9)), which computes an attention-weighted combination of the rating components based on the confidence signals:

This operation allows each dimension of the user’s rating vector to focus on the most informative dimensions of the confidence vector, thereby prioritizing trustworthy entries while down-weighting uncertain ones.
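A small sketch of confidence-as-query cross-attention is given below. It illustrates scaled dot-product attention in general rather than the exact layer used in the model. Several assumptions are made: each item is treated as one token with a scalar feature, and the fixed vectors `w_q`, `w_k`, and `w_v` stand in for weights that would be learned during training.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(ratings, confidence, w_q, w_k, w_v):
    """Scaled dot-product attention with confidence as query, ratings as key/value.

    Assumption: every item is one token; its scalar feature is projected to a
    d-dimensional vector by multiplying with w_q, w_k, or w_v (each of length d).
    """
    d = len(w_q)
    queries = [[c * w for w in w_q] for c in confidence]
    keys = [[r * w for w in w_k] for r in ratings]
    values = [[r * w for w in w_v] for r in ratings]

    scale = math.sqrt(d)
    output = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qa * ka for qa, ka in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Attention-weighted sum of the value vectors.
        output.append([
            sum(w * v[j] for w, v in zip(weights, values)) for j in range(d)
        ])
    return output


attended = cross_attention(
    ratings=[1.0, 0.2, 0.8],
    confidence=[0.9, 0.1, 0.7],
    w_q=[1.0, 0.5], w_k=[0.5, 1.0], w_v=[1.0, 2.0],
)
```

With a zero confidence vector all queries vanish, the attention weights become uniform, and each output row reduces to the mean of the value vectors; non-zero confidence shifts the weights toward items whose keys align with the query.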

The attention output is then concatenated with the original rating vector, and the resulting representation is passed through a feedforward neural network to obtain the reconstructed rating vector, as defined in Equation (10):

The sigmoid activation ensures that the final output lies within the normalized rating range [0, 1]. This cross-attention structure allows the generator to dynamically incorporate evidential trust at each layer, guiding the denoising process in a personalized and reliability-aware manner.

Generator Loss Function. The generator is trained using a composite objective that combines adversarial and reconstruction losses. As shown in Equation (11), the total loss includes two components:

The first term is an adversarial loss derived from the Wasserstein GAN framework, which encourages the generator to produce denoised vectors that the discriminator cannot distinguish from real ratings. The second term, weighted by a hyperparameter, enforces closeness to the ground-truth ratings through a reconstruction penalty defined by mean squared error (Equation (12)):

The hybrid objective in Equation (11) ensures that the generator produces outputs that are both adversarially plausible and numerically accurate, while aligning with the DST-derived evidence during the attention and reconstruction processes.
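The composite objective can be sketched as follows. The adversarial term uses the standard Wasserstein generator loss (the negated mean critic score on generated samples), and the reconstruction term is a plain mean squared error. The function name, the argument names, and the choice to average the error over all entries rather than only observed ones are assumptions made for illustration.

```python
def generator_loss(critic_scores_fake, reconstructed, target, lam=1.0):
    """Adversarial (Wasserstein) term plus lam-weighted MSE reconstruction term.

    critic_scores_fake: critic outputs for generated vectors in the batch.
    reconstructed, target: flat lists of predicted and ground-truth ratings.
    lam: hypothetical name for the reconstruction weight.
    """
    # The generator wants the critic to score its outputs highly.
    adversarial = -sum(critic_scores_fake) / len(critic_scores_fake)
    # Mean squared error between reconstructed and reference ratings.
    mse = sum((p - t) ** 2 for p, t in zip(reconstructed, target)) / len(target)
    return adversarial + lam * mse


loss = generator_loss([0.5, 1.5], [0.2, 0.8], [0.0, 1.0], lam=10.0)
```

A larger `lam` pushes the generator toward faithful reconstruction, while a smaller value lets the adversarial signal dominate.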

2.4. Discriminator Architecture with Evidential Conditioning

The discriminator D is designed to assess the authenticity of user rating vectors by leveraging evidential trust cues derived from the Dempster-Shafer Theory (DST). Specifically, it receives as input a pair consisting of a normalized rating vector for user u, either real or generated (denoised), and the associated DST-based confidence vector.

To jointly process both rating values and their corresponding confidence scores, the two vectors are concatenated into a single input as shown in Equation (13):

This representation is passed through a sequence of fully connected layers with LeakyReLU activations, enabling the network to model non-linear dependencies and complex interactions between ratings and their associated trust signals. The final output is a scalar prediction (Equation (14)):

which reflects the discriminator’s confidence that the input pair corresponds to an authentic (real) rating profile. By integrating DST-based evidence into the decision process, the discriminator not only evaluates statistical realism but also learns to recognize evidential consistency.

The discriminator is trained using the Wasserstein GAN with Gradient Penalty (WGAN-GP) framework, which stabilizes adversarial learning and promotes meaningful gradients. The loss function is formulated as shown in Equation (15):

Here, the loss involves the distributions of real and generated rating vectors, and a regularization coefficient weights the gradient penalty. To satisfy the 1-Lipschitz constraint required by WGAN, the gradient penalty term is computed using interpolated samples as defined in Equation (16):

The penalty term itself is given by Equation (17):

Equation (15) ensures that the discriminator learns to distinguish real from generated profiles while incorporating trust-aware signals. The gradient regularization term in Equation (17) stabilizes training, and the interpolation defined in Equation (16) enables robust gradient estimation. Together, these formulations allow the discriminator to guide the generator toward producing outputs that are both statistically plausible and evidentially coherent.
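The structure of the WGAN-GP objective can be illustrated with a deliberately simplified critic. In the sketch below the critic is linear, so its gradient with respect to the input equals the weight vector at every point and the penalty can be written without automatic differentiation; the actual discriminator is a multilayer network and would need a framework with autograd. The names `critic`, `interpolate`, `gradient_penalty_linear`, and `critic_loss`, as well as the default `gp_weight=10.0`, are assumptions for this example.

```python
import math


def critic(x, w, b):
    """Toy linear critic: x is the concatenated [ratings, confidence] vector."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def interpolate(real, fake, eps):
    """Point on the segment between a real and a generated sample, eps in [0, 1]."""
    return [eps * r + (1.0 - eps) * f for r, f in zip(real, fake)]


def gradient_penalty_linear(w):
    """(||grad_x D(x)||_2 - 1)^2; for a linear critic the gradient is w everywhere,
    so the value does not depend on which interpolated point is used."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (norm - 1.0) ** 2


def critic_loss(real_batch, fake_batch, w, b, gp_weight=10.0):
    """Mean score on generated samples minus mean score on real samples,
    plus the weighted gradient penalty."""
    mean_real = sum(critic(x, w, b) for x in real_batch) / len(real_batch)
    mean_fake = sum(critic(x, w, b) for x in fake_batch) / len(fake_batch)
    return mean_fake - mean_real + gp_weight * gradient_penalty_linear(w)


x_hat = interpolate([1.0, 0.0], [0.0, 1.0], eps=0.25)
d_loss = critic_loss([[1.0, 0.0]], [[0.0, 1.0]], w=[0.6, 0.8], b=0.0)
```

Because the weight vector in the usage example has unit norm, the penalty vanishes and the loss reduces to the difference of the two mean scores; any other norm is penalized quadratically.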

The following Algorithm 1 summarizes the evidentially guided GAN framework for denoising user-item ratings.

| Algorithm 1 Evidentially-Conditioned GAN for Rating Denoising |
| --- |
| Require: Raw rating matrix |
| Ensure: Denoised rating matrix |
| 1: // Step 1: Evidence Construction |
| 2: for each item i do |
| 3: Compute item popularity ▹ Equation (2) |
| 4: Compute rating variance ▹ Equation (3) |
| 5: end for |
| 6: for each user u do |
| 7: Compute user activity ▹ Equation (5) |
| 8: end for |
| 9: Compute confidence matrix ▹ Equation (7) |
| 10: // Step 2: Generator Forward Pass |
| 11: for each user u do |
| 12: Input: rating vector and confidence vector of user u |
| 13: Project to latent space ▹ Equation (8) |
| 14: Compute attention ▹ Equation (9) |
| 15: Concatenate and generate ▹ Equation (10) |
| 16: end for |
| 17: // Step 3: Discriminator Forward Pass |
| 18: for each user u do |
| 19: Compute discriminator scores for the real and generated rating vectors using the MLP ▹ Equation (14) |
| 20: end for |
| 21: // Step 4: Adversarial Training Loop |
| 22: for each epoch do |
| 23: Sample minibatch of users u |
| 24: Discriminator Update ▹ Equation (15) |
| 25: Generator Update: minimize the generator loss ▹ Equation (11) |
| 26: end for |
| 27: return Denoised rating matrix |

3. Results and Discussion

This section presents the experimental results of DST-AttentiveGAN and provides a comprehensive discussion of its performance. We start by detailing the experimental setup. Then, we report and compare the model’s performance against several baseline methods. Finally, we analyze the impact of DST-AttentiveGAN on rating distributions to highlight its ability to denoise and structure user-item interactions more effectively.

3.1. Experimental Setup

The experimental setup includes the datasets used, evaluation metrics, and implementation details. These components provide the basis for assessing the performance and robustness of DST-AttentiveGAN in various recommendation scenarios.

3.1.1. Datasets

The experiments are conducted on three real-world datasets to validate the robustness of our DST-AttentiveGAN. Table 1 provides a detailed summary of their statistical properties.

MovieLens 1M (https://grouplens.org/datasets/movielens/1m/) (accessed on 16 June 2025): This dataset contains 1,000,209 ratings from 943 users on 1682 movies. Ratings range from 1 to 5. It is well-structured and widely adopted in collaborative filtering research.

Amazon Electronics (https://nijianmo.github.io/amazon/index.html) (accessed on 16 June 2025): This dataset includes 874,319 ratings from 5000 users on 4000 electronic products. Ratings are in the range of 1 to 5. It presents challenges such as high sparsity and implicit noise, making it suitable for evaluating robustness.

3.1.2. Evaluation Metrics

To evaluate recommendation quality, we adopt three widely used metrics: HR@K, Precision@K, and NDCG@K, each computed at .

As shown in Equations (19) and (20), these metrics allow us to assess not only whether the target item is retrieved, but also how well it is ranked in the recommendation list.
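For reference, the three ranking metrics can be computed as in the sketch below. It uses the common binary-relevance definitions, which may differ in detail from the formulation evaluated here; the function names and the representation of relevant items as a Python set are assumptions.

```python
import math


def hit_ratio_at_k(ranked, relevant, k):
    """1.0 if at least one relevant item appears in the top-k list, else 0.0."""
    return 1.0 if any(item in relevant for item in ranked[:k]) else 0.0


def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k recommended items that are relevant."""
    return sum(1 for item in ranked[:k] if item in relevant) / k


def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance NDCG: hits are discounted by log2 of their 1-based rank + 1."""
    dcg = sum(
        1.0 / math.log2(pos + 2)
        for pos, item in enumerate(ranked[:k])
        if item in relevant
    )
    # Ideal ranking places all relevant items (at most k of them) first.
    ideal_hits = min(len(relevant), k)
    idcg = sum(1.0 / math.log2(pos + 2) for pos in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0


ranked_items = ["a", "b", "c", "d"]
held_out = {"b"}
```

With the held-out item at rank 2, `hit_ratio_at_k(ranked_items, held_out, 3)` is 1.0, `precision_at_k` is 1/3, and `ndcg_at_k` is 1/log2(3), which shows how NDCG rewards a hit less the lower it is ranked.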

3.1.3. Hyperparameter Settings

All models were implemented using Python 3.10. For the proposed DST-AttentiveGAN, we adopted the Adam optimizer, tuning the learning rate in and the batch size in to select the best configuration. Early stopping was employed with a patience of 10 epochs to prevent overfitting.
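The early-stopping rule with a patience of 10 epochs can be sketched as follows. The monitored quantity is not specified above, so a per-epoch validation loss is assumed, and the function name `early_stopping_epoch` is hypothetical.

```python
def early_stopping_epoch(val_losses, patience=10):
    """Return the 0-based epoch at which training would stop.

    Training stops once the monitored loss has failed to improve on its best
    value for `patience` consecutive epochs; if that never happens, the last
    epoch index is returned.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1


stop_at = early_stopping_epoch([1.0, 0.9, 0.95, 0.95, 0.8], patience=2)
```

In the usage example the loss stalls for two epochs after epoch 1, so training stops at epoch 3 and the later improvement at epoch 4 is never reached, which is the usual trade-off of a small patience value.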

The top-K values used for evaluation were set to , consistent with standard practices in the literature. All baseline models were trained using their available implementations and hyperparameter settings reported in their respective original papers to ensure a fair comparison.

3.2. Empirical Results

To validate the effectiveness of our proposed DST-AttentiveGAN, we compare it against several strong baseline models. Each baseline is implemented with its best-reported hyperparameters and retrained on the same datasets for a fair comparison. The results are presented in Table 2, Table 3, and Table 4, which report the performance on the MovieLens, Amazon Electronics, and Netflix datasets, respectively.

3.3. Evaluation of Prediction Accuracy via MAE and MSE

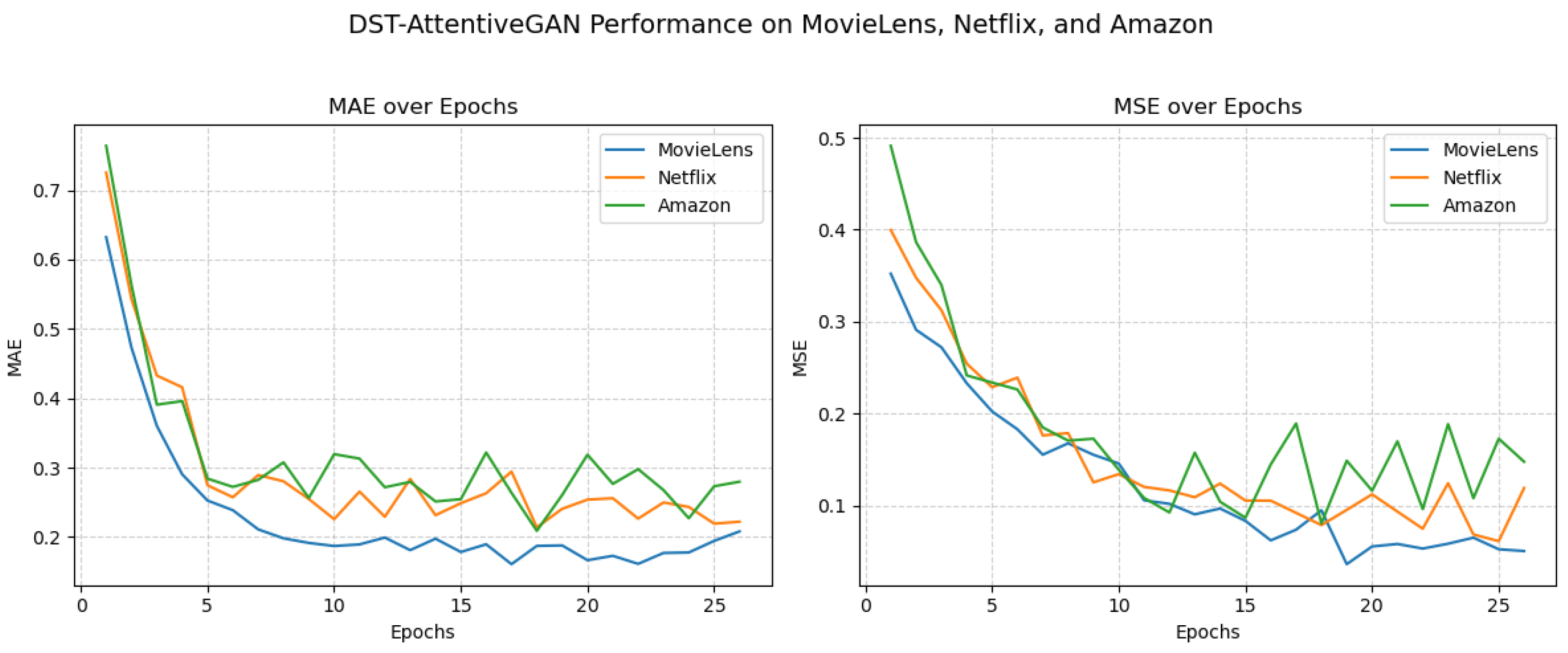

While HR@K, NDCG@K, and Precision@K are standard metrics for evaluating recommendation performance, they primarily capture ranking quality and do not directly reflect the model’s ability to predict rating values accurately. To complement these measures, we analyzed Mean Absolute Error (MAE) and Mean Squared Error (MSE) over training epochs across the MovieLens, Netflix, and Amazon datasets.

As shown in Figure 2, both MAE and MSE decrease consistently with training, indicating that the DST-AttentiveGAN effectively reconstructs user ratings while denoising noisy inputs. MovieLens exhibits the lowest errors, reflecting its higher density and lower sparsity, whereas Amazon presents higher and more variable errors due to data sparsity. Netflix falls in between, showing moderate error reduction.

These results highlight that, beyond ranking performance, the model achieves accurate rating prediction, with MAE and MSE providing complementary insights into the reliability and stability of the learned representations.
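The two error measures follow their standard definitions and can be computed as in the short sketch below; the function names are illustrative, and the inputs are assumed to be equal-length lists of predicted and reference ratings.

```python
def mae(predicted, actual):
    """Mean Absolute Error between two equal-length lists of ratings."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def mse(predicted, actual):
    """Mean Squared Error; penalizes large deviations more strongly than MAE."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)


example_mae = mae([3.0, 5.0], [4.0, 3.0])
example_mse = mse([3.0, 5.0], [4.0, 3.0])
```

For errors of 1 and 2 stars the MAE is 1.5 while the MSE is 2.5, which illustrates why the two curves can diverge when a few ratings are reconstructed poorly.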

3.4. Discussion and Analysis

In this subsection, we analyze the results obtained from our experiments and discuss the effectiveness of DST-AttentiveGAN across different datasets and conditions. We explore its impact on the structure of user-item rating distributions. These insights provide a deeper understanding of the model’s robustness and adaptability in real-world recommendation scenarios.

3.4.1. Performance Comparison

The experimental findings demonstrate that DST-AttentiveGAN consistently outperforms strong GAN-based baselines across all evaluation metrics (HR@K, NDCG@K, Precision@K). These improvements are both substantial and consistent across datasets of varying sparsity and noise characteristics, which confirms the model’s robustness and adaptability.

On the MovieLens dataset, which is relatively dense and less noisy, our model still achieves over 7% improvement in Precision@K, demonstrating its ability to leverage confidence signals even in stable rating environments. For the highly sparse and biased Amazon Electronics dataset, the model yields up to +9.59% in NDCG@10 and +8.01% in Precision@5, showcasing strong resilience to noisy feedback. In the Netflix dataset, where inconsistency in user ratings is common, DST-AttentiveGAN records consistent performance boosts across all ranks and metrics, indicating its generalization capability under varying rating behaviors.

These quantitative gains are attributed to the integration of Dempster-Shafer Theory and the cross-attention mechanism. The former provides a principled way to model belief and uncertainty in ratings, while the latter dynamically weighs informative cues from similar users and items. This synergy enables the generator to correct unreliable feedback and preserve high-confidence signals, resulting in more accurate and relevant recommendations.

3.4.2. Impact of DST-AttentiveGAN on Rating Distribution Refinement

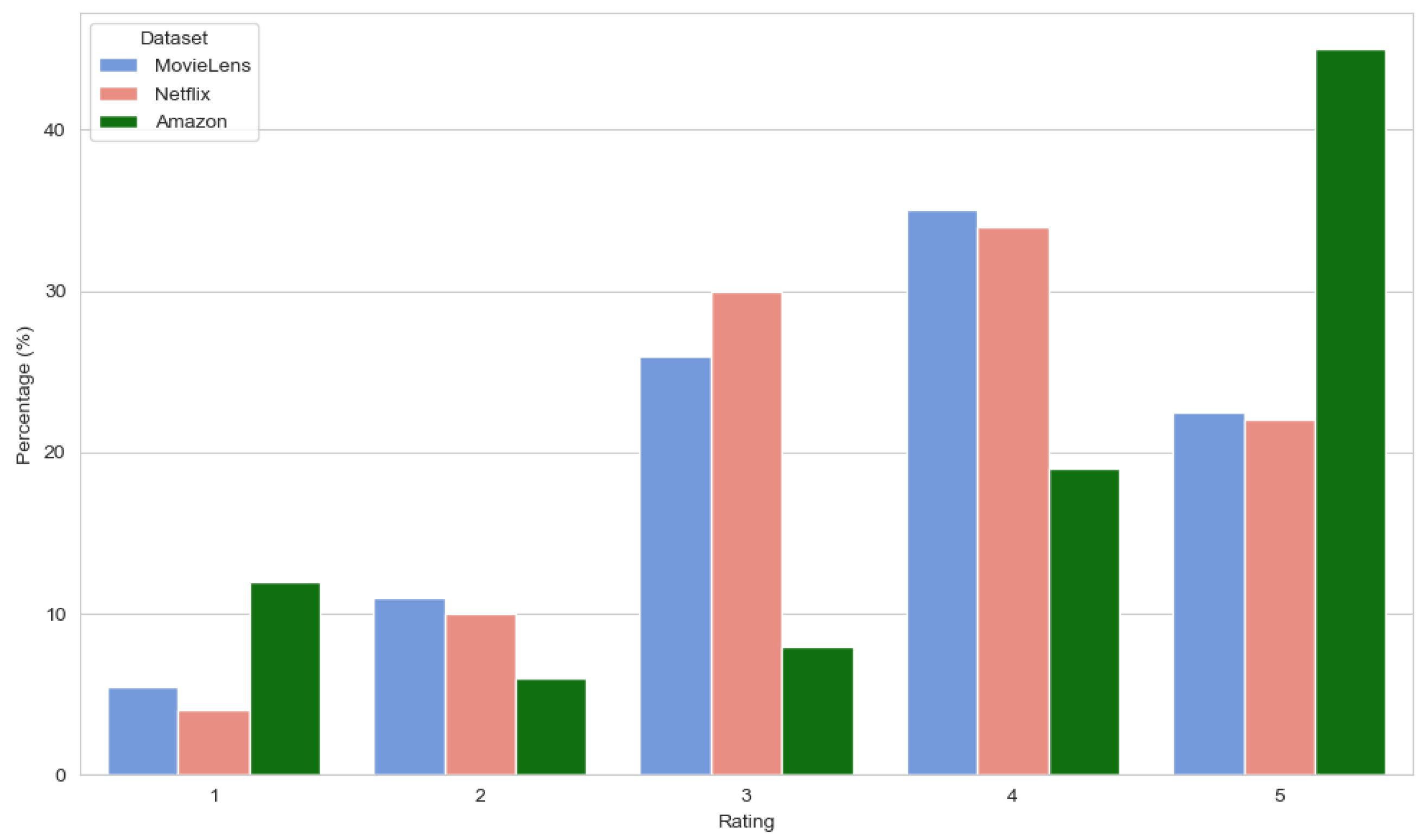

The effectiveness of DST-AttentiveGAN is highlighted by its ability to transform noisy ratings into a more structured and reliable distribution. Figure 3 demonstrates how the original ratings across the datasets, particularly in Amazon and Netflix, are dominated by noise. In Amazon, this is characterized by inflated 5-star ratings, leading to a skewed distribution, while Netflix exhibits ambiguity in midrange ratings, which reflect conflicting user sentiment or indecision. Such noisy distributions arise from various sources, including biased reviews, adversarial feedback, and platform-induced inflation, all of which obscure true user preferences and affect the reliability of recommendation models.

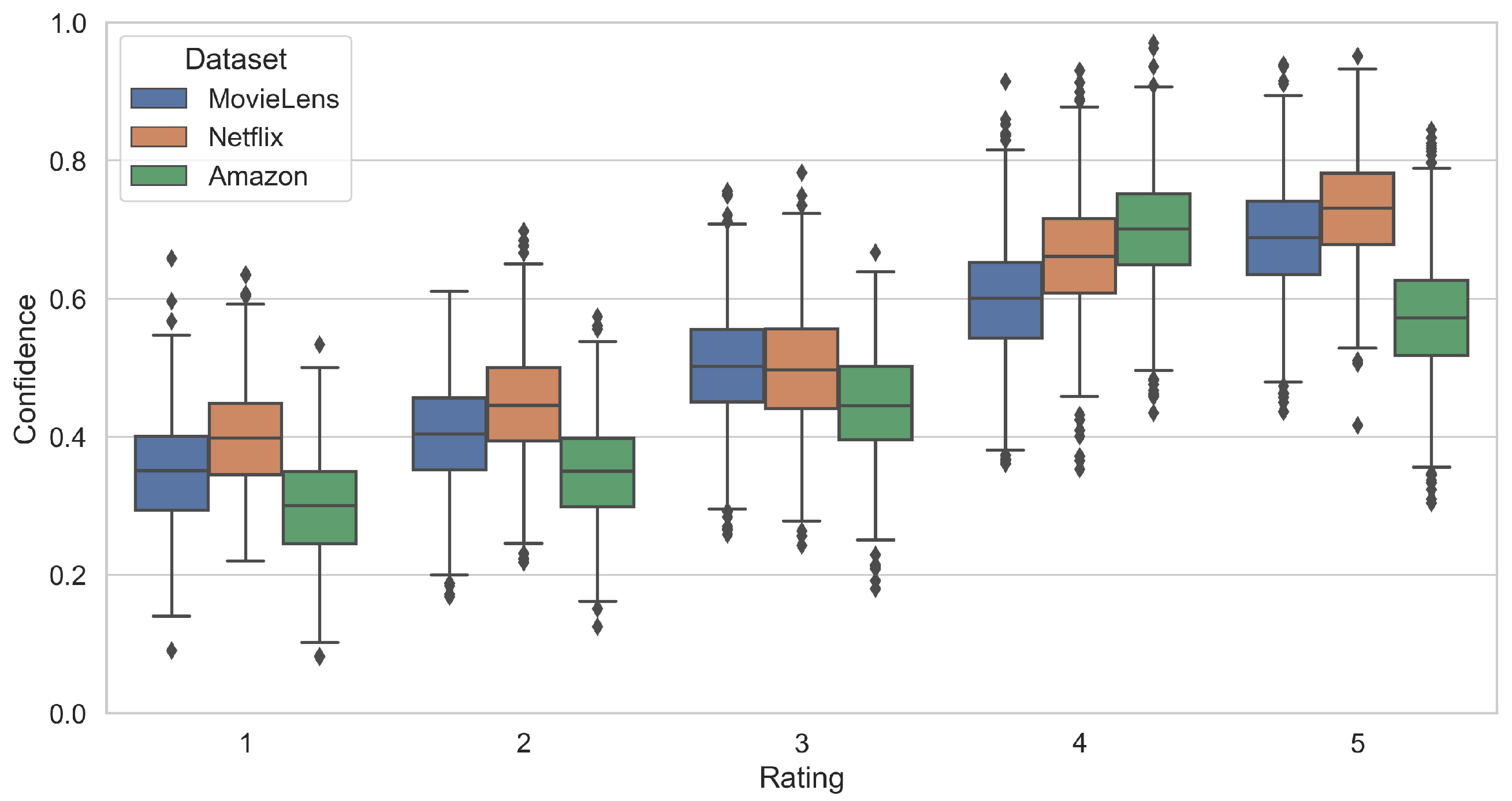

To address this, the model introduces confidence scores, as shown in Figure 4, which quantify the model’s trust in each rating. These scores are critical in distinguishing between reliable feedback and uncertain or biased ratings. High-confidence ratings indicate strong alignment with the user’s true preferences and are thus given more weight in the denoising process. In contrast, low-confidence ratings suggest ambiguity or inconsistency and are adjusted or filtered out. By calculating confidence scores based on the consistency and reliability of ratings, the model is able to systematically identify which ratings should be preserved and which should be downweighted.

Each box in Figure 4 illustrates the distribution of confidence values generated by our model for a given rating level. The horizontal line inside the box represents the median confidence, while the box boundaries indicate the interquartile range (IQR). The whiskers extend to values within 1.5 × IQR, and the points above or below the whiskers denote outlier confidence values that are significantly higher or lower than the main confidence distribution.
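The box-plot quantities described above can be reproduced with the standard library as in the sketch below. The helper name `box_stats` is hypothetical, and the quartiles use the inclusive interpolation method of `statistics.quantiles`, which may differ slightly from the method used to draw the figure.

```python
from statistics import quantiles


def box_stats(values):
    """Median, quartiles, whisker ends, and outliers for a box plot.

    Requires at least two values. Whiskers end at the most extreme data points
    that lie within 1.5 * IQR of the box; anything beyond is an outlier.
    """
    data = sorted(values)
    q1, median, q3 = quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in data if low_fence <= v <= high_fence]
    outliers = [v for v in data if v < low_fence or v > high_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "whiskers": (inside[0], inside[-1]),
        "outliers": outliers,
    }


stats = box_stats([0.1, 0.2, 0.3, 0.4, 0.5, 2.0])
```

In this toy sample the value 2.0 lies above the upper fence and is reported as an outlier, while the whiskers stop at 0.1 and 0.5, the most extreme points still inside the fences.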

Following this process, Figure 5 illustrates the impact of the denoising step, where the distribution of ratings is refined. The high-confidence ratings are reinforced, resulting in sharper, more prominent peaks, particularly for 4 and 5 stars. The 3-star ratings, which often represent conflicting or mixed sentiments, are smoothed or adjusted, reducing their impact on the final output. This transformation significantly improves the rating distribution, making it more interpretable and aligned with true user preferences.

The denoised distributions, especially in Amazon and Netflix, become clearer and less skewed, with more reliable feedback that is better suited for downstream recommendation systems. In datasets like MovieLens, which contains cleaner data, the denoising process is less aggressive, as the confidence scores naturally highlight the reliability of existing ratings without needing extensive adjustments. As a result, the model adapts to dataset-specific characteristics, ensuring that only necessary adjustments are made.

By integrating confidence scores, DST-AttentiveGAN ensures that the denoising process produces a structured, reliable distribution. This results in less biased feedback that is critical for building accurate user profiles in recommendation models, ultimately leading to better personalized recommendations.

3.4.3. Potential Extensions of DST Integration

To examine the effect of DST, we compare DST-AttentiveGAN with Self-AttentiveGAN, its version without DST signals. The results show consistent gains across datasets. On MovieLens, DST improves HR and NDCG by around +10%, confirming benefits even in relatively dense settings. Amazon, which is both sparse and noisy, shows larger improvements, with +16.7% on HR@10 and over +10% on NDCG, highlighting DST’s ability to handle uncertain feedback. Netflix achieves the most pronounced gains, with HR@5 and NDCG metrics improving by up to +18%. Overall, these results validate that DST-derived confidence strengthens robustness by reducing noise and emphasizing reliable user–item interactions.

Our experiments on DST-AttentiveGAN show that integrating DST-derived confidence effectively reduces noise and emphasizes reliable user–item interactions. These results suggest that the DST-based fusion strategy is not limited to this architecture and opens new directions for research in recommender systems. For other GAN variants such as GANMF [13], CFGAN [12], WGANRS [11], or AACF [14], DST can provide trust-aware guidance to both the generator and discriminator, enhancing robustness to sparse or noisy ratings, while its additional computational complexity remains manageable and could be further optimized in future work.

More broadly, DST can benefit a wide range of recommendation algorithms: in Matrix Factorization, it can weight interactions according to reliability; in Neural Collaborative Filtering, it can serve as an auxiliary signal guiding network training; in Graph Neural Networks, it can modulate message propagation based on confidence; and in Sequential models, it can adjust the influence of past interactions depending on certainty. By explicitly modeling uncertainty and managing conflicts among heterogeneous signals, DST provides a versatile mechanism to improve robustness and prediction quality across diverse recommender system architectures.

4. Conclusions

In conclusion, this work proposed the DST-AttentiveGAN, an innovative evidential adversarial framework for denoising inconsistent user ratings in collaborative filtering. By incorporating Dempster-Shafer theory into both the generator and discriminator, and integrating a cross-attention mechanism, the model effectively captures confidence signals and focuses on reliable user feedback while mitigating the impact of noise. The experimental results across various benchmark datasets demonstrate that DST-AttentiveGAN outperforms existing GAN-based methods, particularly under high-noise scenarios. The use of Wasserstein loss with gradient penalty contributes to more stable training, and the model consistently achieves superior performance in terms of Hit Ratio, NDCG, and especially Precision at lower values of top-K recommendations, where accurate ranking is most critical. These findings highlight the importance of explicitly modeling uncertainty in user ratings and incorporating it directly into the learning process.

In future work, we plan to incorporate multi-modal evidence, including textual reviews, temporal patterns, and item features, to improve uncertainty modeling in sparse or cold-start scenarios. We also intend to evaluate DST-AttentiveGAN under shilling and adversarial attack settings, in addition to testing on datasets from other domains such as social media and news articles, to better assess its robustness and generalizability across diverse recommendation environments. Furthermore, we aim to conduct experiments on integrating DST with GAN variants and other recommendation algorithms to evaluate its broader applicability. Finally, extending the model to sequential and interactive recommendation tasks will enable dynamic, context-aware personalization, where uncertainty is continuously refined through user interactions.