Using LLM to Identify Pillars of the Mind Within Physics Learning Materials

Abstract

1. Introduction

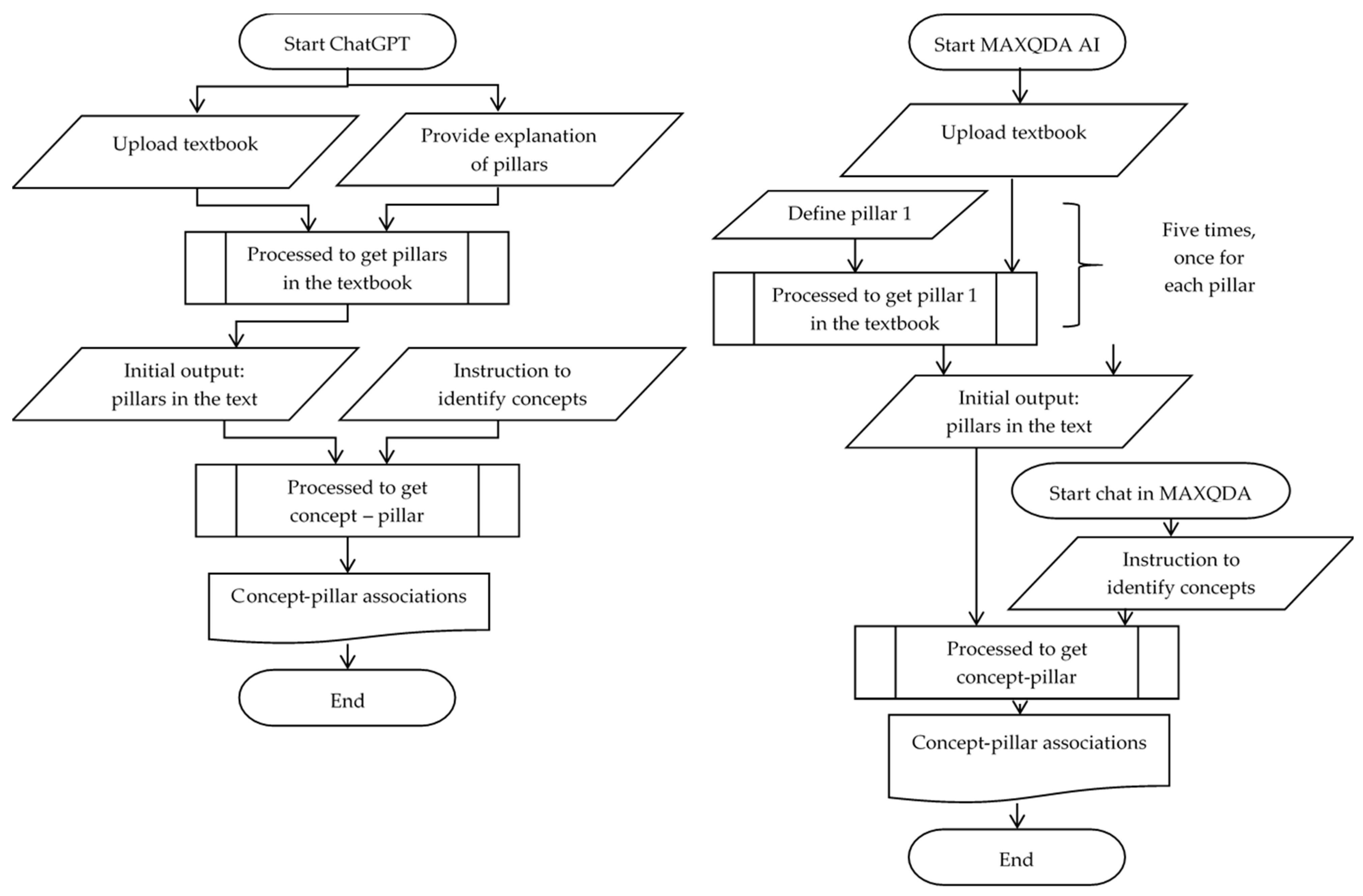

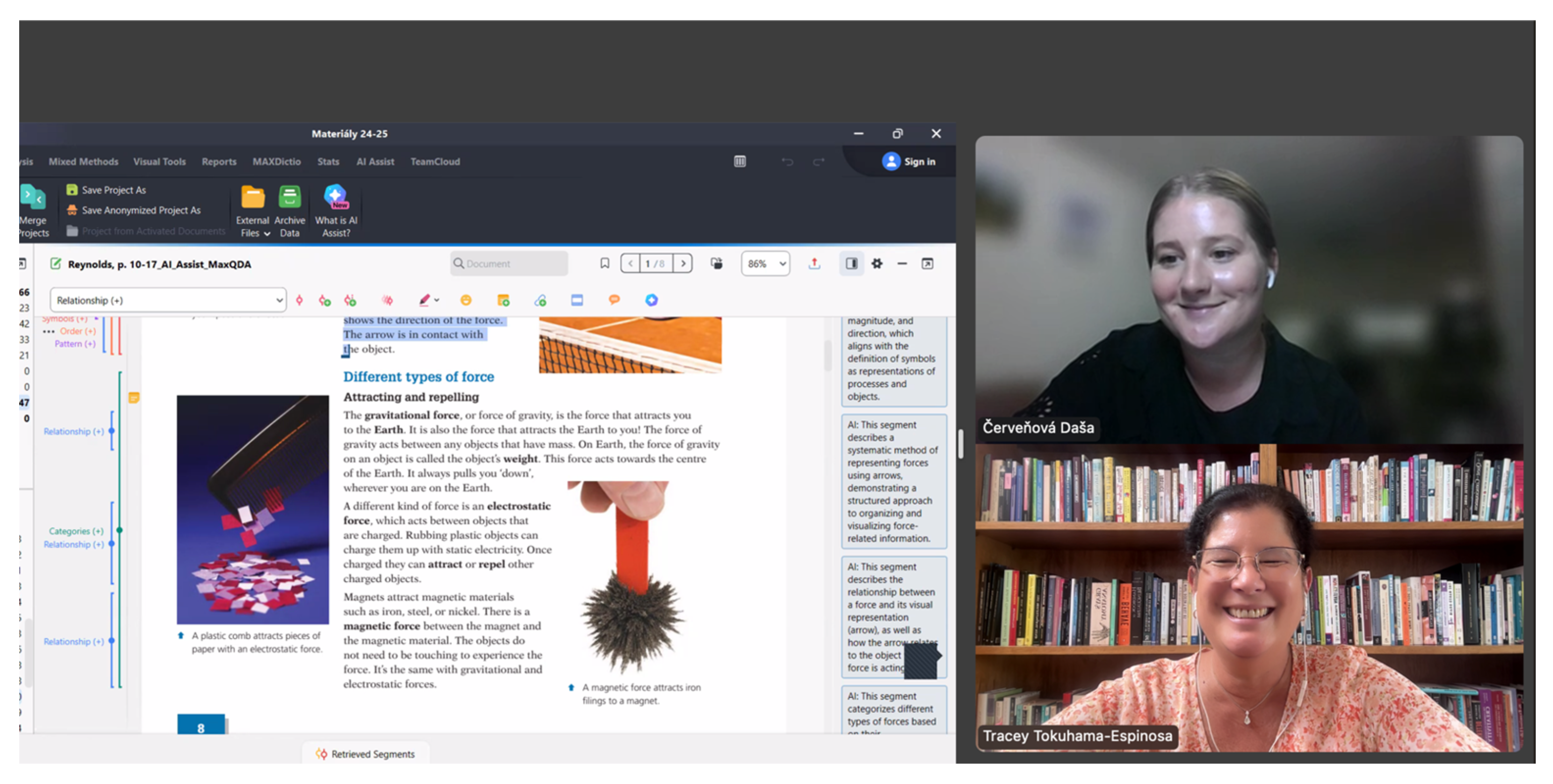

2. Methodology

2.1. Task Definition and Dataset Preparation

2.2. Evaluation Criteria and Comparative Framework

- (a) Physics concepts identified within a learning material.

- (b) Pillars assigned to the identified physics concepts.
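Criterion (a) can be scored by treating a tool's output and a manual reference as two sets of concept names and computing precision and recall. The sketch below is an illustration only: exact-name set matching is an assumption, and the function name `precision_recall` and both concept sets are hypothetical, not the study's data or procedure.

```python
def precision_recall(predicted, reference):
    """Return (precision, recall) for two collections of concept names."""
    predicted, reference = set(predicted), set(reference)
    true_positives = len(predicted & reference)
    # Precision: share of predicted concepts that also appear in the reference.
    precision = true_positives / len(predicted) if predicted else 0.0
    # Recall: share of reference concepts that the prediction recovered.
    recall = true_positives / len(reference) if reference else 0.0
    return precision, recall


# Hypothetical example data (not taken from the study).
authors = {"force", "weight", "friction", "mass"}
llm = {"force", "weight", "energy"}

p, r = precision_recall(llm, authors)
print(f"precision={p:.3f}, recall={r:.3f}")
```

In practice, concept names would likely need normalisation (case, synonyms) before comparison; that step is omitted here.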

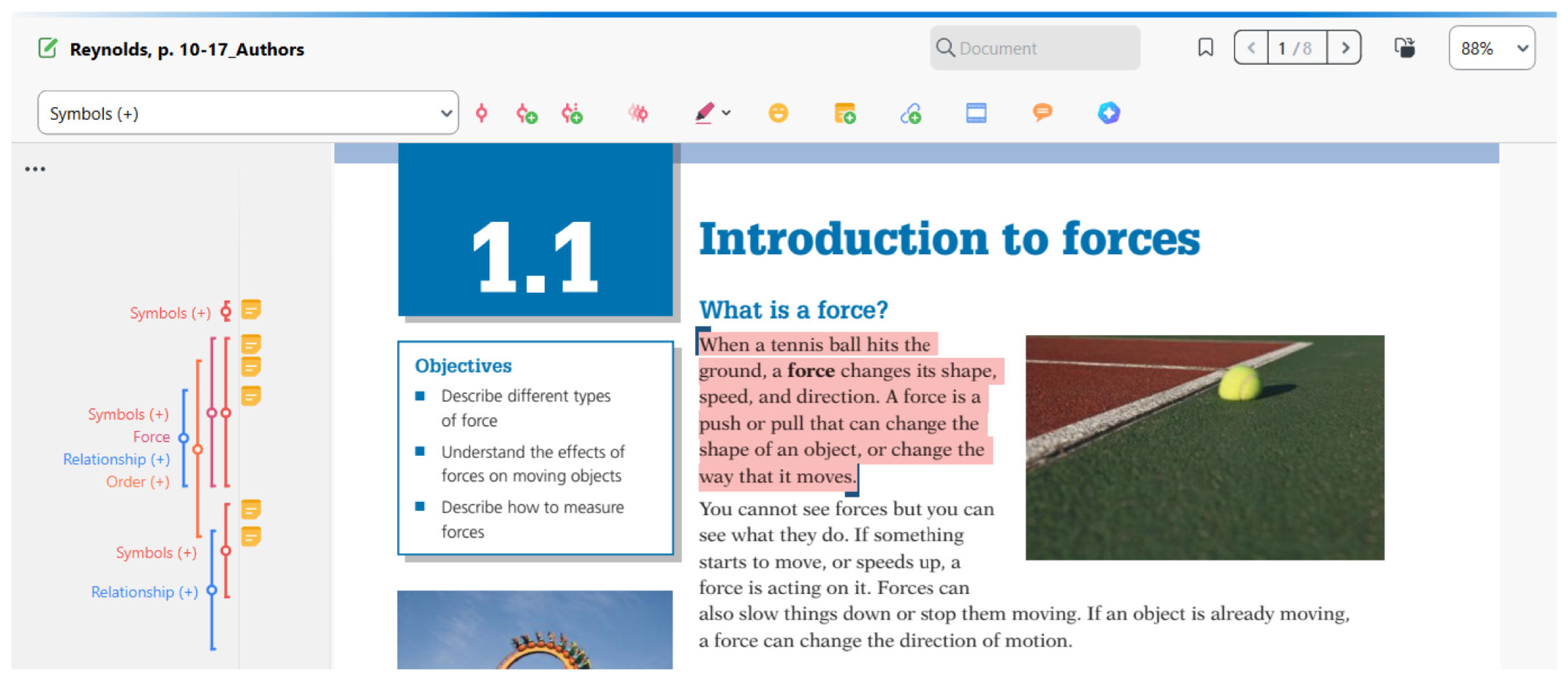

2.3. Authors’ Identification

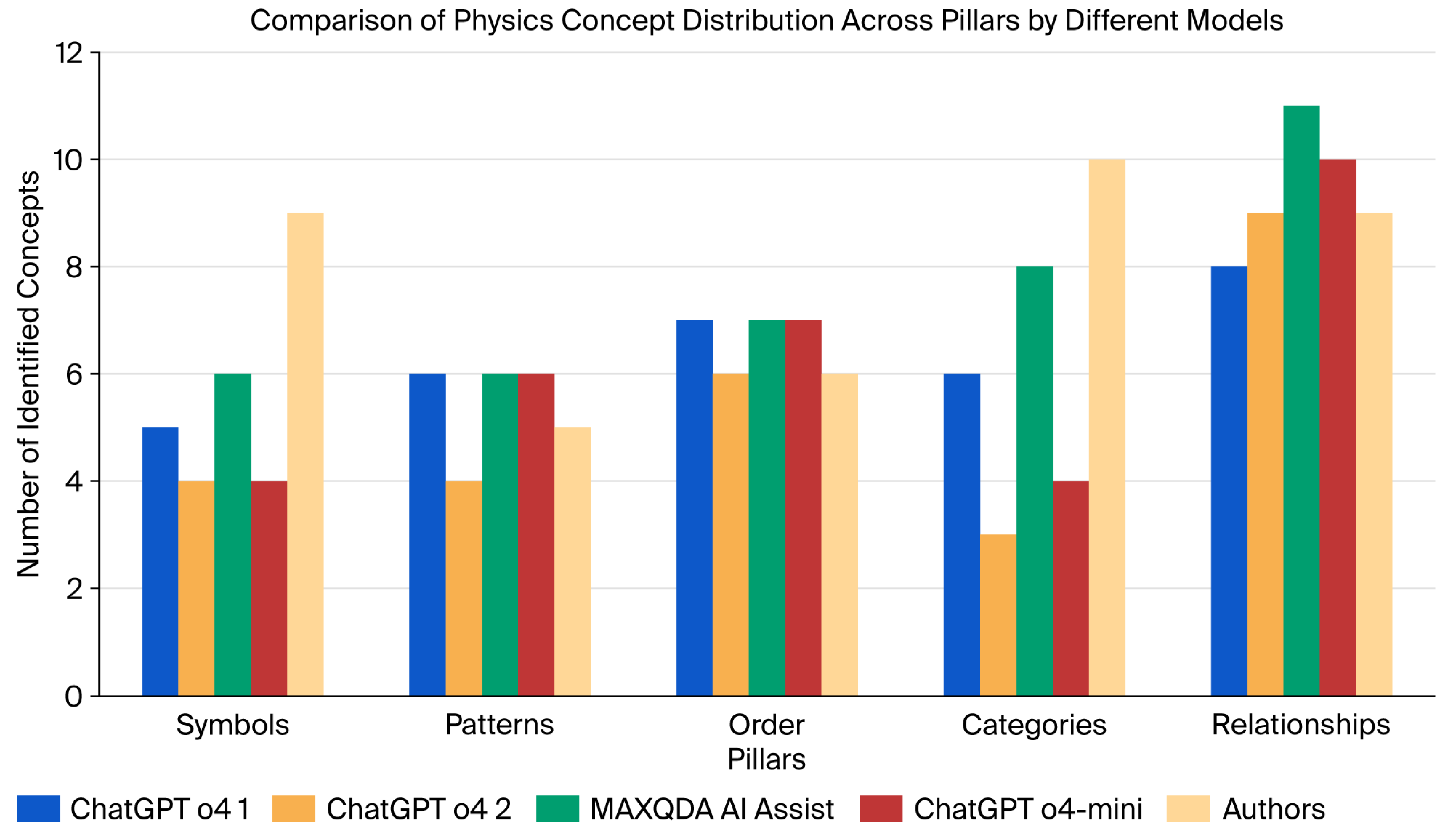

3. Results

- According to the LLM, Relationships is the most frequently developed pillar.

- Order is mostly identified in the structure of the learning material.

- The authors’ manual identification and the LLM’s identification are not consistent.

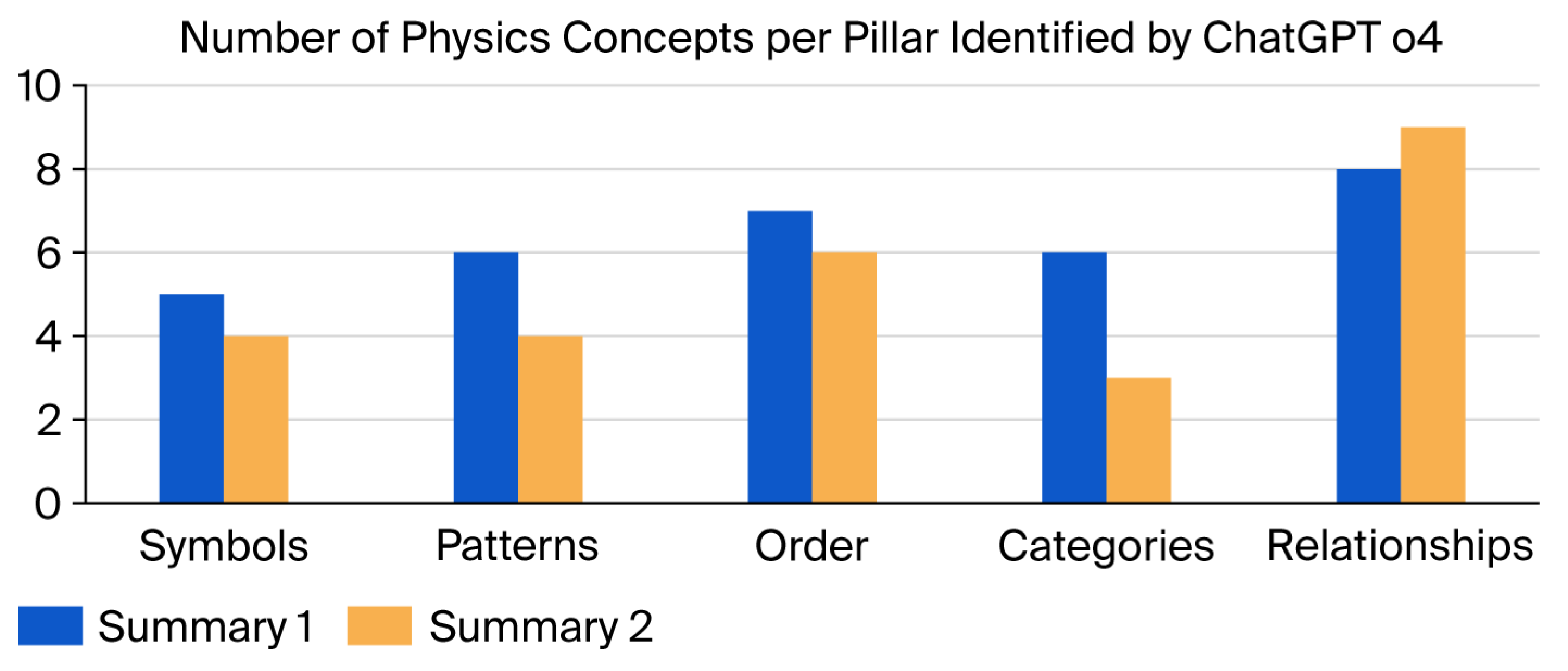

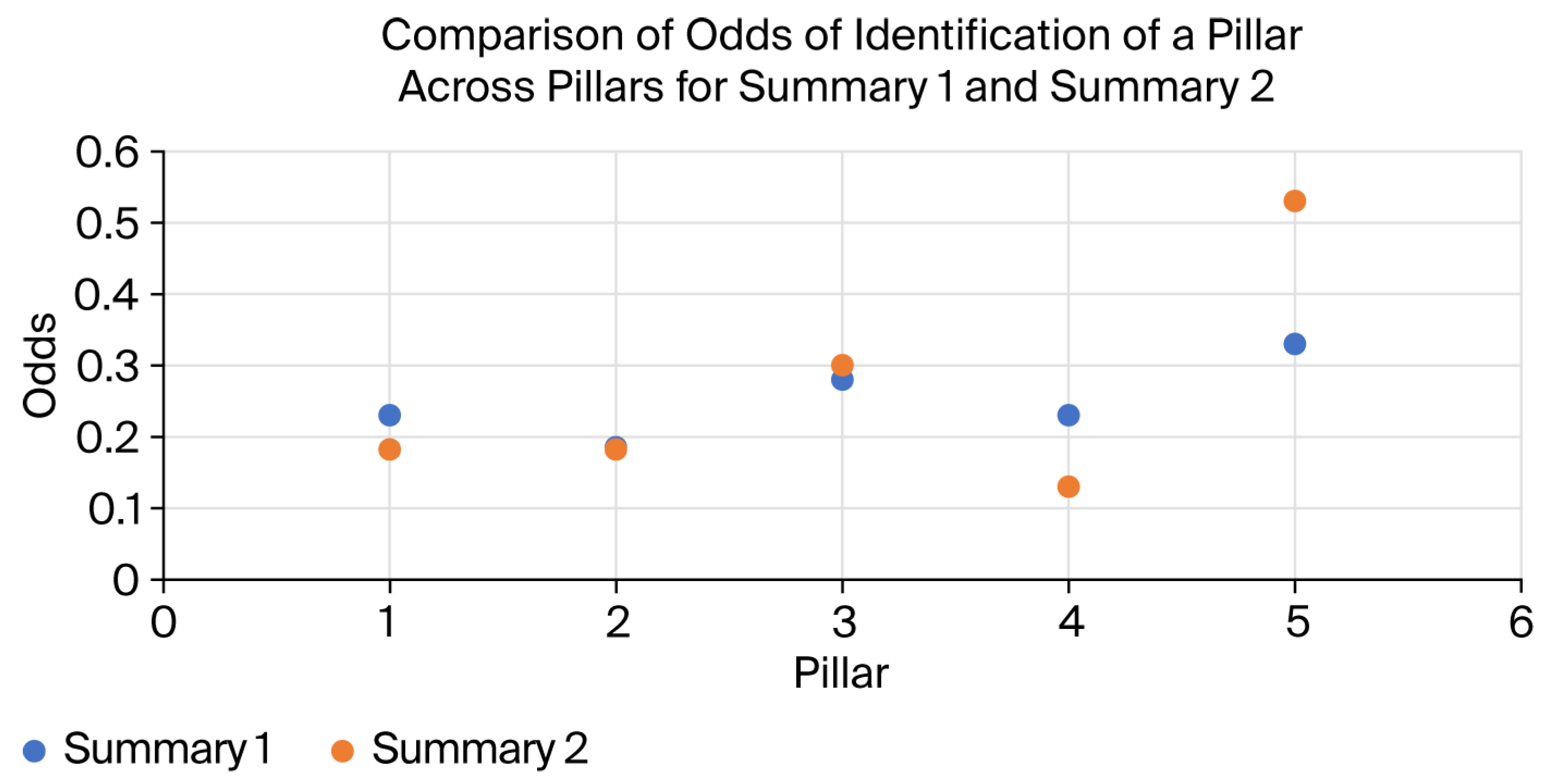

- ChatGPT is not consistent when performing the same task several times.

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


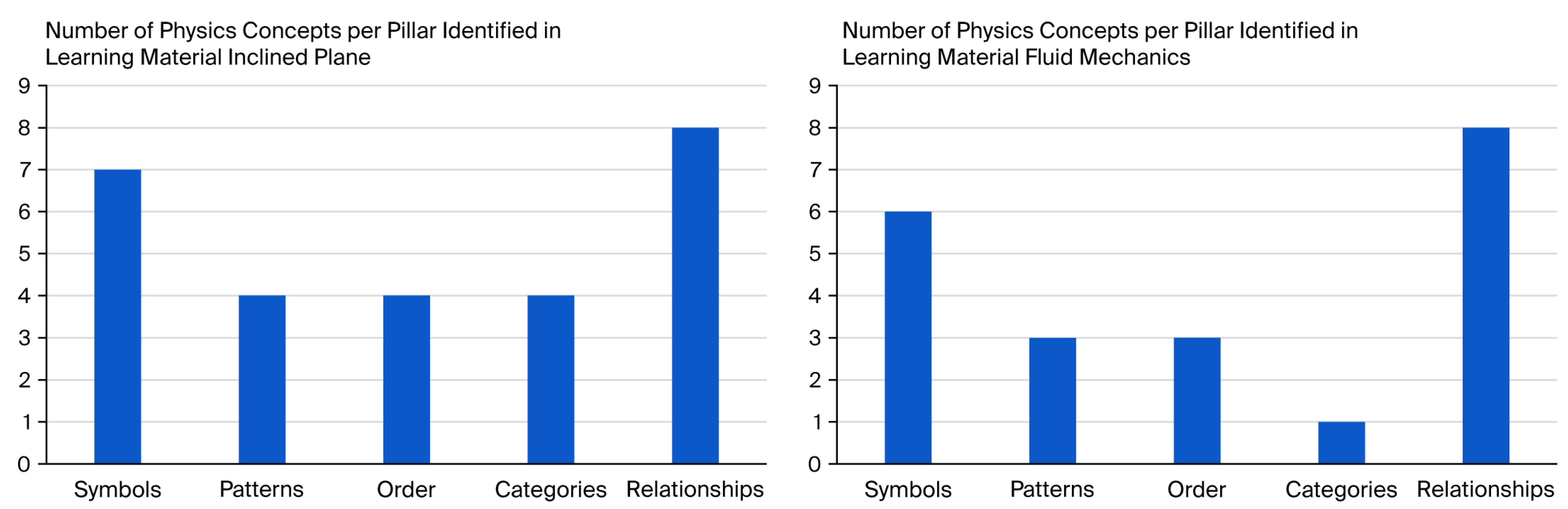

| Pillar | Discussed Topic | N (%) |
|---|---|---|
| Symbols | Inclined plane | 7 (50.0%) |
| Symbols | Fluid mechanics | 6 (54.5%) |
| Patterns | Inclined plane | 4 (28.6%) |
| Patterns | Fluid mechanics | 3 (27.3%) |
| Order | Inclined plane | 4 (28.6%) |
| Order | Fluid mechanics | 2 (18.2%) |
| Categories | Inclined plane | 4 (28.6%) |
| Categories | Fluid mechanics | 1 (9.1%) |
| Relationships | Inclined plane | 8 (57.0%) |
| Relationships | Fluid mechanics | 8 (72.3%) |

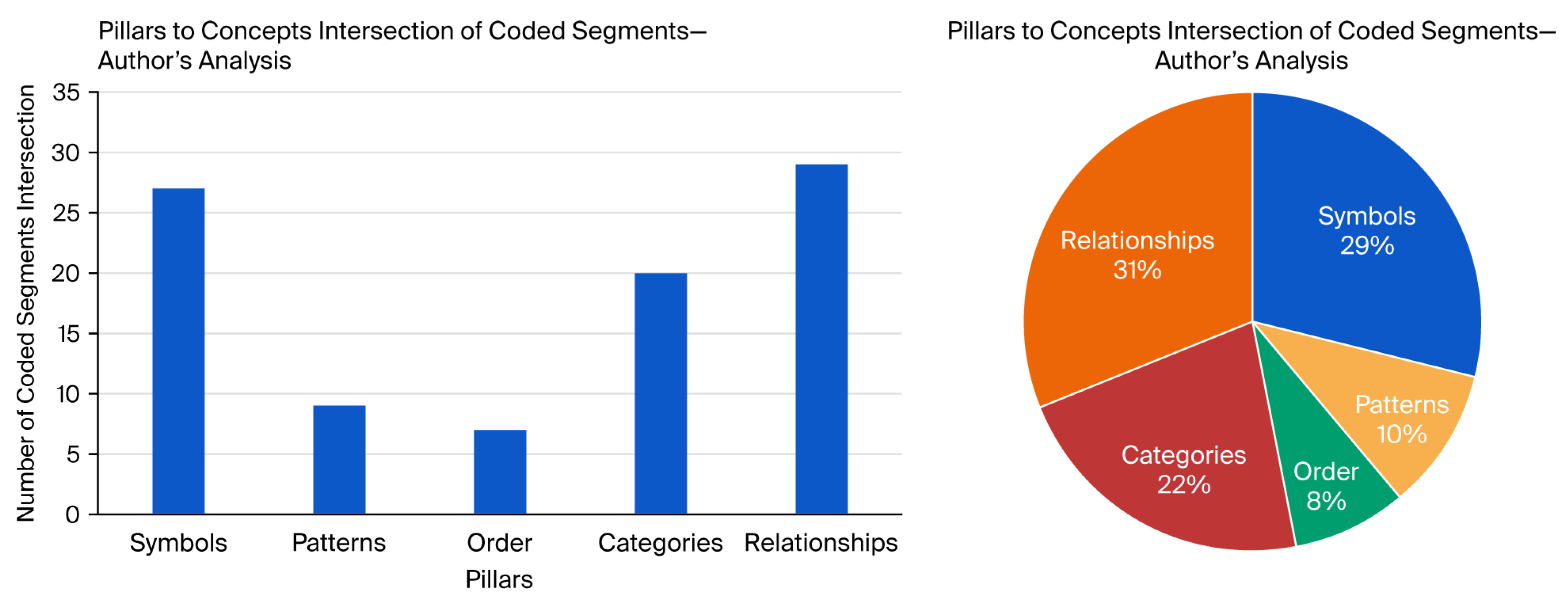

| Concept | Symbols | Patterns | Order | Categories | Relationships |
|---|---|---|---|---|---|
| Gravitational field strength | 2 | 0 | 0 | 1 | 3 |
| Gravitational field | 1 | 0 | 0 | 0 | 0 |
| Mass | 0 | 0 | 0 | 1 | 3 |
| Newton’s 3rd Law of Motion | 0 | 1 | 1 | 0 | 0 |
| Newton’s 2nd Law of Motion | 0 | 0 | 1 | 2 | 1 |
| Newton’s 1st Law of Motion | 4 | 4 | 1 | 3 | 4 |
| Measurement | 2 | 0 | 0 | 1 | 3 |
| Resistive forces | 2 | 1 | 0 | 2 | 0 |
| Friction | 1 | 0 | 2 | 3 | 1 |
| Weight | 3 | 0 | 1 | 1 | 4 |
| Gravitational force | 3 | 1 | 1 | 1 | 5 |
| Force | 9 | 2 | 0 | 5 | 5 |
| Sum | 27 | 9 | 7 | 20 | 29 |
| Proportion of total | 0.29 | 0.10 | 0.08 | 0.22 | 0.32 |
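The last row of the table appears to be each column sum divided by the grand total of all assignments (92). The short sketch below reproduces that arithmetic from the "Sum" row; the variable names are illustrative, and reading the row as a proportion of the grand total is an assumption inferred from the numbers.

```python
# Column sums copied from the "Sum" row of the table above.
sums = {
    "Symbols": 27,
    "Patterns": 9,
    "Order": 7,
    "Categories": 20,
    "Relationships": 29,
}

# Grand total of pillar assignments across all concepts.
total = sum(sums.values())

# Share of each pillar, rounded to two decimals as in the table.
proportions = {pillar: round(count / total, 2) for pillar, count in sums.items()}

for pillar, share in proportions.items():
    print(f"{pillar}: {share:.2f}")
```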

| Tool | P | R | F1-Score |
|---|---|---|---|
| ChatGPT 4o | 0.308 | 0.364 | 0.333 |
| ChatGPT o4-mini | 0.692 | 0.600 | 0.643 |
| MAXQDA AI Assist | 1.000 | 0.667 | 0.800 |
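Assuming P and R denote precision and recall and that the F1-score is their harmonic mean, $F_1 = 2PR/(P+R)$, the reported values can be checked for internal consistency. The sketch below does this with the rounded figures from the table, so small differences in the third decimal are expected; the function name `f1_score` and the tolerance are illustrative choices.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (P, R, reported F1) copied from the table above.
reported = {
    "ChatGPT 4o": (0.308, 0.364, 0.333),
    "ChatGPT o4-mini": (0.692, 0.600, 0.643),
    "MAXQDA AI Assist": (1.000, 0.667, 0.800),
}

# Tolerance allows for the inputs having been rounded to three decimals.
TOLERANCE = 0.005

consistent = {
    tool: abs(f1_score(p, r) - f1) < TOLERANCE
    for tool, (p, r, f1) in reported.items()
}
print(consistent)
```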

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Červeňová, D.; Demkanin, P. Using LLM to Identify Pillars of the Mind Within Physics Learning Materials. Digital 2025, 5, 47. https://doi.org/10.3390/digital5040047