Object Detection Models and Optimizations: A Bird’s-Eye View on Real-Time Medical Mask Detection

Abstract

1. Introduction

- A review and evaluation of state-of-the-art object detectors and an analysis of their speed/accuracy trade-off, using the same framework, dataset, and GPU. No other similar study has been published that includes YOLOv5 [15], whose performance has yet to be extensively tested.

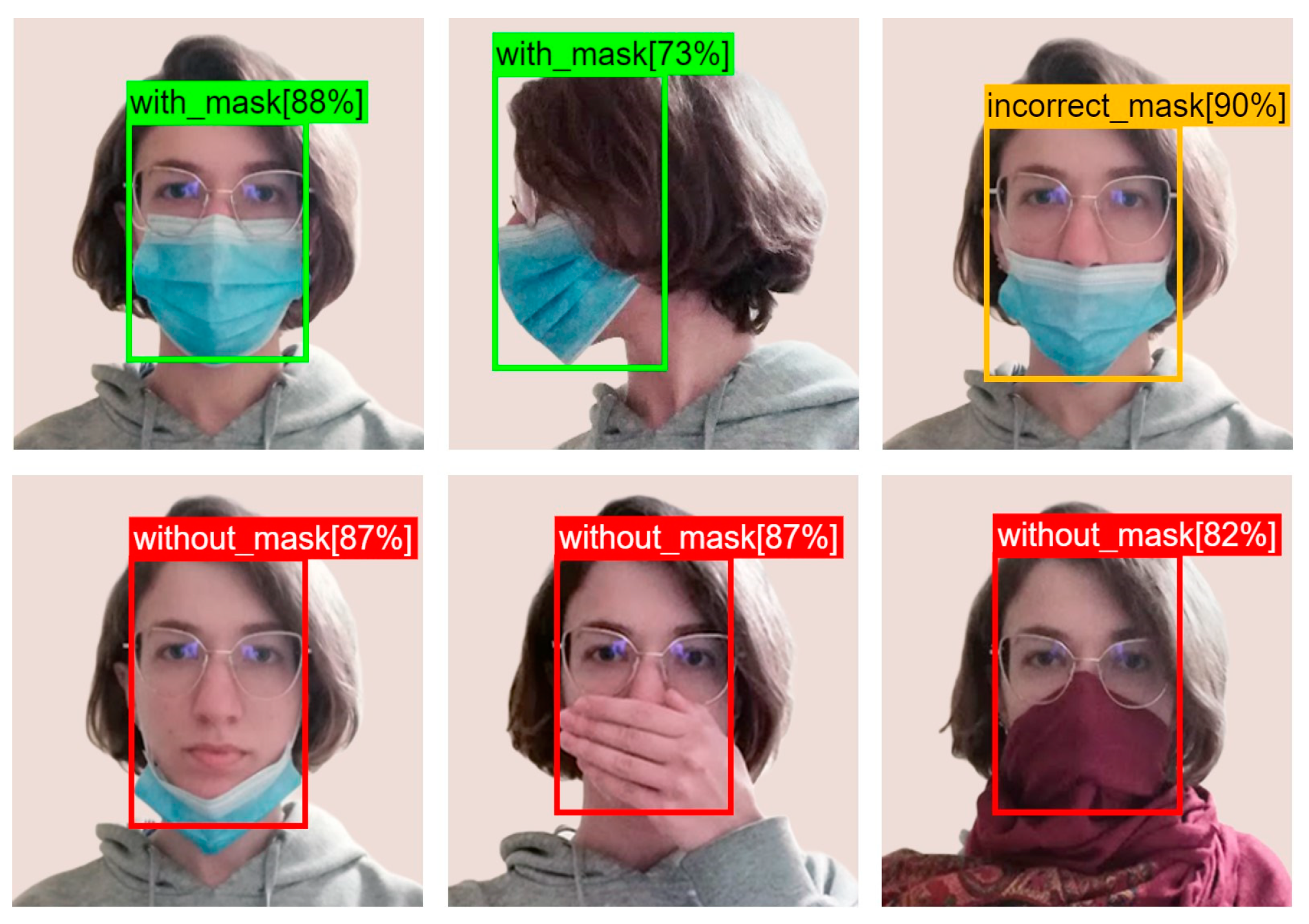

- The accuracy and speed of YOLOv5s are evaluated for the first time on the newly developed Properly Wearing Masked Faces Dataset (PWMFD) [16]. Furthermore, the effects of transfer learning, data augmentations, and attention mechanisms are assessed for medical mask detection.

- A real-time medical mask detection model based on YOLOv5 is proposed that is more than twice as fast (69 fps) as the state-of-the-art model SEYOLOv3 [16] on the PWMFD while maintaining the same level of mean Average Precision (mAP), at 67%. This speed-up leaves room for deploying the model on embedded devices with lower hardware capabilities while still achieving real-time detection.

- It presents new evaluation results of object detection models in terms of their accuracy and speed vs. computational performance (GFLOPs).

- It includes new results demonstrating the (detrimental) effect of augmenting the model with Transformer Encoder (TE) Attention blocks.

- It involves a more thorough description of the detection models reviewed and evaluated.

- It includes a new section on the characteristics, availability, and usage of datasets suitable for medical mask detection.

2. Related Work

2.1. Object Detection Models

2.2. Models Description

2.3. Speed/Accuracy Trade-Off of Object Detection Models

2.4. Medical Mask Detection

3. Materials and Methods

3.1. Evaluation Methodology of Object Detection Models

- Framework: All models are implemented in PyTorch and are offered in the Torchvision package. The only exceptions are YOLOv3, YOLOv4, and YOLOv5, which are implemented in GitHub repositories (https://github.com/ultralytics/yolov3 accessed on 1 June 2023; https://github.com/Tianxiaomo/pytorch-YOLOv4 accessed on 1 June 2023; https://github.com/ultralytics/yolov5 accessed on 1 June 2023).

- Dataset: The evaluation is performed on the val subset of the COCO2017 [25] dataset. COCO is widely treated as one of the de facto standards for measuring the accuracy and speed of modern object detection models, as it strikes a balance between manageable size and adequate image scene density. It contains 118,000 training examples and 5000 validation examples belonging to 80 classes of everyday objects. In addition, COCO pretraining has been used extensively as a basis for transfer learning on the specific problem of medical mask detection. No training phase is performed, as all models are already pretrained on the train subset. The data are loaded with a batch size of 1 to imitate the stream-like arrival of frames when detecting in real time.

- Environment: The code for the experiment is organized in two Jupyter notebooks (coco17 inference.ipynb, analysis.ipynb) and is executed through the Google Colab platform. We chose a local runtime, which uses the GPU of our own system (NVIDIA GeForce GTX 960, 4 GB). Every model goes through the same inference pipeline.

- Metrics: To estimate the memory usage of each model, we record the peak GPU memory that CUDA allocates to the program. To quantify computational cost, Giga Floating Point Operations (GFLOPs) are counted using the ptflops (https://github.com/sovrasov/flops-counter.pytorch accessed on 1 June 2023) Python package. The storage cost is the size of each model's weight file. We measure detection speed in frames (images) per second (fps). For accuracy, we use the mean Average Precision (mAP) over all classes. According to the COCO evaluation standard (https://cocodataset.org/#detection-eval accessed on 1 June 2023), Average Precision (AP) is the area under the P-R (Precision–Recall) curve, calculated using 101-point interpolation as follows:

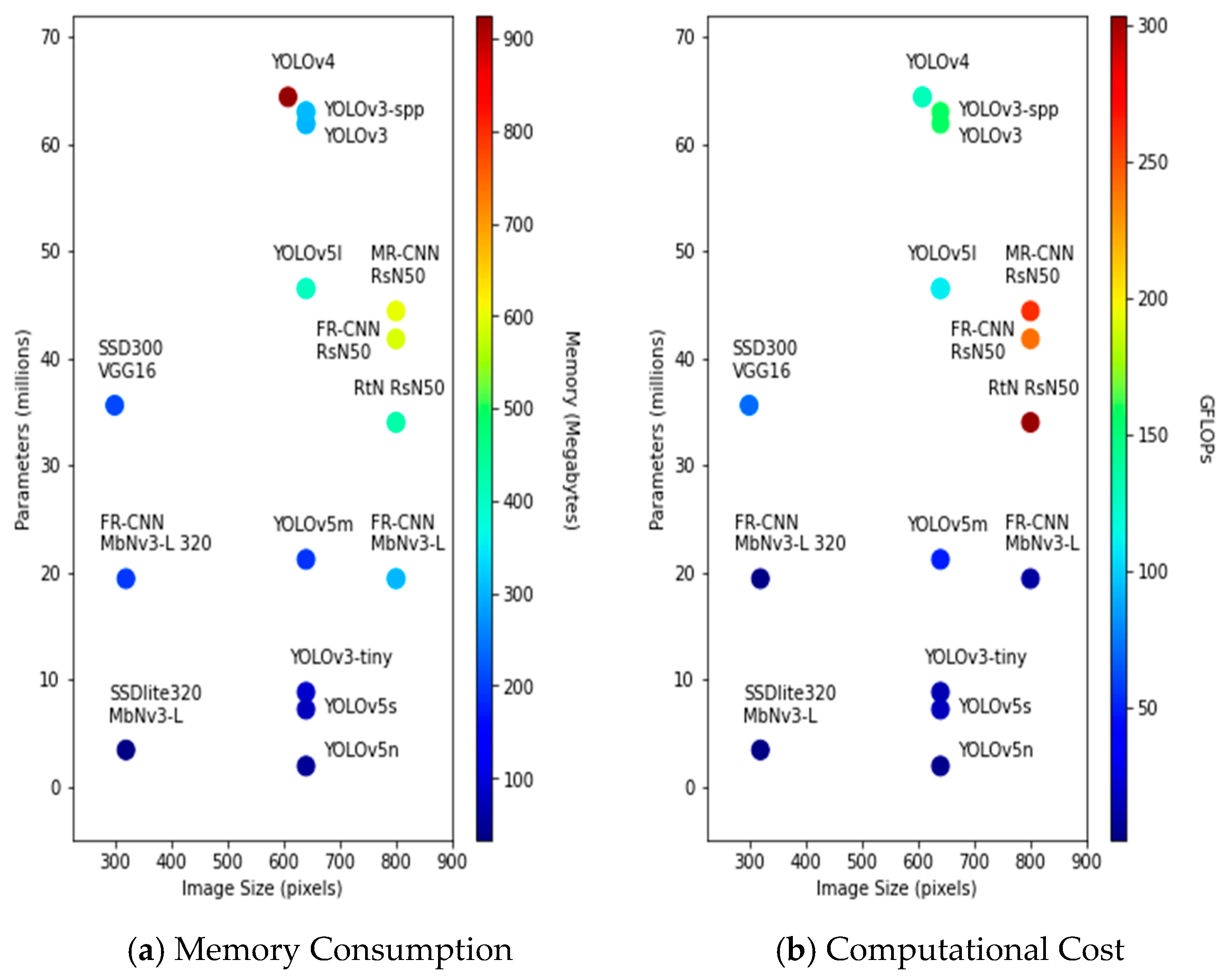
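As an illustration of the 101-point interpolation named in the Metrics item, the following is a minimal plain-Python sketch for a single class at a single IoU threshold. The function name `average_precision_101` and the input layout (parallel lists of recall and precision values, sorted by recall) are assumptions made for this example and are not taken from the authors' notebooks. The COCO toolkit additionally averages this quantity over IoU thresholds from 0.50 to 0.95 and over all classes to obtain mAP.

```python
def average_precision_101(recalls, precisions):
    """Approximate the area under a P-R curve with 101-point interpolation.

    recalls     -- recall values in non-decreasing order
    precisions  -- precision measured at the matching recall value
    """
    if len(recalls) != len(precisions):
        raise ValueError("recalls and precisions must have the same length")

    # Interpolated precision: for each point, the highest precision observed
    # at that recall or any larger recall (right-to-left running maximum).
    interp = list(precisions)
    for i in range(len(interp) - 2, -1, -1):
        interp[i] = max(interp[i], interp[i + 1])

    total = 0.0
    j = 0
    for k in range(101):              # recall thresholds 0.00, 0.01, ..., 1.00
        threshold = k / 100
        # Advance to the first measured point whose recall reaches the threshold.
        while j < len(recalls) and recalls[j] < threshold:
            j += 1
        if j < len(recalls):
            total += interp[j]
        # Thresholds beyond the highest achieved recall contribute 0.
    return total / 101


# Example: precision 1.0 up to recall 0.5, then 0.5 up to recall 1.0.
ap = average_precision_101([0.5, 1.0], [1.0, 0.5])
```

In this example, 51 thresholds (0.00 to 0.50) receive precision 1.0 and the remaining 50 receive 0.5, so `ap` equals 76/101.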

3.2. Memory Footprint

3.3. Computational Performance and Storage Costs

3.4. Accuracy and Speed
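Section 3.1 measures speed in frames per second with a batch size of 1. A minimal sketch of such a timing loop is given below, assuming a hypothetical `detect` callable that wraps one model's forward pass on a single image. The function name `measure_fps` and the warm-up count are illustrative choices and are not taken from the authors' notebooks.

```python
import time


def measure_fps(detect, images, warmup=5):
    """Return frames per second for `detect` called on one image at a time."""
    images = list(images)
    if not images:
        raise ValueError("at least one image is required")

    # Warm-up calls are excluded from timing so that one-off start-up costs
    # (lazy initialization, caches) do not distort the result.
    for image in images[:warmup]:
        detect(image)

    start = time.perf_counter()
    for image in images:          # batch size of 1: one image per call
        detect(image)
    elapsed = time.perf_counter() - start

    # Guard against a zero interval on extremely fast callables.
    return len(images) / elapsed if elapsed > 0 else float("inf")


# Usage with a stand-in detector that takes roughly 10 ms per image.
# With a real GPU model, `detect` should wait for the device to finish
# (for example via torch.cuda.synchronize()) before returning, because
# CUDA kernels are launched asynchronously.
fps = measure_fps(lambda image: time.sleep(0.01), range(10), warmup=2)
```

Timing the whole loop rather than individual calls keeps timer overhead out of the measurement, at the cost of hiding per-image variance.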

3.5. Accuracy and Speed vs. Computational Performance

4. Datasets for Medical Mask Detection

4.1. MAFA and MAFA-FMD

4.2. RMFD and SMRFD

4.3. MaskedFace-Net

4.4. PWMFD

5. Results of Optimizations for Medical Mask Detection

5.1. Configuration

5.2. Implementing Optimizations for Medical Mask Detection

5.3. Transfer Learning

5.4. Data Augmentations

5.5. Attention Mechanism with Squeeze-and-Excitation (SE) Block

5.6. Attention Mechanism with Transformer Encoder (TE) Block

5.7. Final Optimized Model

6. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. LeCun, Y.; Bengio, Y. Convolutional networks for images, speech and time series. In The Handbook of Brain Theory and Neural Networks; The MIT Press: Cambridge, MA, USA, 1995.

2. Zou, Z.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. arXiv 2019, arXiv:1905.05055.

3. Zhao, Z.; Zheng, P.; Xu, S.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

4. Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y.; Guadarrama, S.; et al. Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3296–3297.

5. Srivastava, A.; Nguyen, D.; Aggarwal, S.; Luckow, A.; Duffy, E.; Kennedy, K.; Ziolkowski, M.; Apon, A. Performance and memory trade-offs of deep learning object detection in fast streaming high-definition images. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 3915–3924.

6. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems 28 (NIPS 2015); Neural Information Processing Systems Foundation, Inc.: Montreal, QC, Canada, 2015; Volume 28.

7. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R.B. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

8. Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2014, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

9. Lin, T.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision 2017, Venice, Italy, 22–29 October 2017.

10. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In ECCV 2016: Computer Vision–ECCV 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

11. Redmon, J.; Divvala, K.; Girshick, R.B.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

12. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

13. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

14. Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

15. Jocher, G.; Stoken, A.; Chaurasia, A.; Borovec, J.; Kwon, Y.; Michael, K.; Changyu, L.; Fang, J.; Skalski, P.; Hogan, A.; et al. ultralytics/yolov5: v6.0-YOLOv5n ’Nano’ Models, Roboflow Integration, TensorFlow Export, OpenCV DNN Support. 2021. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 June 2023).

16. Jiang, X.; Gao, T.; Zhu, Z.; Zhao, Y. Real-time face mask detection method based on YOLOv3. Electronics 2021, 10, 837.

17. World Health Organization. Advice on the Use of Masks in the Context of COVID-19: Interim Guidance; World Health Organization: Geneva, Switzerland, 2020.

18. Gogou, I.C.; Koutsomitropoulos, D.A. A Review and Implementation of Object Detection Models and Optimizations for Real-time Medical Mask Detection during the COVID-19 Pandemic. In Proceedings of the 2022 International Conference on INnovations in Intelligent SysTems and Applications (INISTA), Biarritz, France, 8–12 August 2022; pp. 1–6.

19. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25 (NIPS 2012); Curran Associates, Inc.: New York, NY, USA, 2013; pp. 1097–1105.

20. Girshick, R.B. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

21. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

22. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

23. Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2019, Seoul, Republic of Korea, 27 October–2 November 2019.

24. Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.M.; Zisserman, A. The Pascal Visual Object Classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

25. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P. Microsoft COCO: Common Objects in Context. In ECCV 2014: Computer Vision–ECCV 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

26. Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region-based fully convolutional networks. In Advances in Neural Information Processing Systems 29 (NIPS 2016); Curran Associates, Inc.: New York, NY, USA, 2016.

27. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018.

28. Qin, B.; Li, D. Identifying facemask-wearing condition using image super-resolution with classification network to prevent COVID-19. Sensors 2020, 20, 5236.

29. Fan, X.; Jiang, M. RetinaFaceMask: A single stage face mask detector for assisting control of the COVID-19 pandemic. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 832–837.

30. Loey, M.; Manogaran, G.; Taha, M.H.N.; Khalifa, N.E.M. A hybrid deep transfer learning model with machine learning methods for face mask detection in the era of the COVID-19 pandemic. Measurement 2021, 167, 108288.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

32. Loey, M.; Manogaran, G.; Taha, M.H.N.; Khalifa, N.E.M. Fighting against COVID-19: A novel deep learning model based on YOLO-v2 with ResNet-50 for medical face mask detection. Sustain. Cities Soc. 2021, 65, 102600.

33. Chowdary, G.J.; Punn, N.S.; Sonbhadra, S.K.; Agarwal, S. Face mask detection using transfer learning of InceptionV3. In BDA 2020: Big Data Analytics; Springer: Cham, Switzerland, 2020; pp. 81–90.

34. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016.

35. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018.

36. Kumar, A.; Kalia, A.; Sharma, A.; Kaushal, M. A hybrid tiny YOLO v4-SPP module based improved face mask detection vision system. J. Ambient Intell. Human. Comput. 2023, 14, 6783–6796.

37. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

38. Ge, S.; Li, J.; Ye, Q.; Luo, Z. Detecting masked faces in the wild with LLE-CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2682–2690.

39. Wang, Z.; Huang, B.; Wang, G.; Yi, P.; Jiang, K. Masked face recognition dataset and application. IEEE Trans. Biom. Behav. Identity Sci. 2023, 5, 298–304.

40. Cabani, A.; Hammoudi, K.; Benhabiles, H.; Melkemi, M. MaskedFace-Net–A dataset of correctly/incorrectly masked face images in the context of COVID-19. Smart Health 2021, 19, 100144.

41. Smith, L.N.; Topin, N. Super-convergence: Very fast training of neural networks using large learning rates. In Proceedings of the Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications, Baltimore, MD, USA, 14–18 April 2019; pp. 369–386.

42. Tan, C.; Sun, F.; Kong, T.; Zhang, W.; Yang, C.; Liu, C. A survey on deep transfer learning. In ICANN 2018: Artificial Neural Networks and Machine Learning–ICANN 2018; Springer: Cham, Switzerland, 2018; pp. 270–279.

43. Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

44. Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Montreal, QC, Canada, 10–17 October 2021; pp. 2778–2788.

| Model | Backbone | Image Size a |
|---|---|---|
| Faster R-CNN [6] | MobileNetV3-Large [23] | 800 |
| Faster R-CNN | MobileNetV3-Large | 320 |
| Faster R-CNN | ResNet-50 [31] | 800 |
| Mask R-CNN [7] | ResNet-50 | 800 |
| RetinaNet [9] | ResNet-50 | 800 |
| SSD [10] | VGG16 [37] | 300 |
| SSDlite [25] | MobileNetV3-Large | 320 |
| YOLOv3 [13] | Darknet53 [13] | 640 |
| YOLOv3-spp [13] | Darknet53 | 640 |
| YOLOv3-tiny [13] | Darknet53 | 640 |
| YOLOv4 [14] | CSPDarknet53 [14] | 608 |
| YOLOv5l [15] | Modified CSPDarknet [15] | 640 |
| YOLOv5m [15] | Modified CSPDarknet | 640 |
| YOLOv5s [15] | Modified CSPDarknet | 640 |
| YOLOv5n [15] | Modified CSPDarknet | 640 |

| Configuration | mAP | mAP@50 | mAP@75 |
|---|---|---|---|
| No TL | 0.33 | 0.59 | 0.33 |
| TL + No Freeze | 0.35 | 0.60 | 0.39 |
| TL + Freeze Backbone | 0.38 | 0.63 | 0.43 |
| TL + Freeze All a | 0.03 | 0.10 | 0.01 |

| Configuration | mAP | mAP@50 | mAP@75 |
|---|---|---|---|
| Basic Transformations a | 0.38 | 0.63 | 0.43 |
| Basic Trans. + Mosaic | 0.67 | 0.92 | 0.81 |
| Basic Trans. + Mosaic + Mixup | 0.65 | 0.91 | 0.78 |

| Configuration | mAP | mAP@50 | mAP@75 | fps |
|---|---|---|---|---|
| No SE | 0.67 | 0.92 | 0.81 | 69 |
| SE | 0.61 | 0.93 | 0.74 | 68 |
| SE + Mixup | 0.58 | 0.90 | 0.68 | 69 |
| SE + Focal Loss | 0.38 | 0.63 | 0.45 | 71 |
| SE + Mixup + Focal Loss | 0.37 | 0.62 | 0.43 | 70 |

| Configuration | mAP | mAP@50 | mAP@75 | fps |
|---|---|---|---|---|
| No TE Block | 0.67 | 0.92 | 0.81 | 69 |
| TE Block (Backbone) | 0.66 | 0.90 | 0.78 | 68 |
| TE Block (Heads) | 0.62 | 0.92 | 0.76 | 56 |
| TE Block (Backbone + Heads) | 0.60 | 0.88 | 0.75 | 55 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Koutsomitropoulos, D.A.; Gogou, I.C. Object Detection Models and Optimizations: A Bird’s-Eye View on Real-Time Medical Mask Detection. Digital 2023, 3, 172-188. https://doi.org/10.3390/digital3030012
