Abstract

Online health communities (OHCs) are a common and highly frequented health resource. To create safer resources online, we must know how users think of credibility in these spaces. To understand how new visitors may use cues present within the OHC to establish source credibility, we conducted an online experiment (n = 373) using a fake OHC. We manipulated cues for perceptions of two primary dimensions of credibility, trustworthiness and expertise, by varying the presence of endorsement cues (i.e., likes) and of moderators’ health credentials (i.e., medical professional). Participants were predominantly male (60.4%) and Caucasian (74.1%). Our findings showed that moderators with health credentials had an effect on both dimensions of source credibility in OHCs; likes, however, did not. We also observed a correlation between the perceived social support within the community and both dimensions of source credibility, underscoring the value of supportive online health communities. Our findings can help developers ascertain areas of focus within their communities and help users understand how perceptions of credibility could help or hinder their own assessments of OHC credibility.

1. Introduction

Long-term health management often lands in the lap of individuals who have to largely self-manage their health conditions. Online health communities (OHCs) provide users with guidance, information, and support they might need through self-management (Nambisan 2011; Nambisan et al. 2016). However, information available online, especially in the context of OHCs, is often user generated, and thus, determining the credibility of this information becomes important. Most online communities have some type of moderation within them, but in OHCs with a large volume of users, moderation for each post prior to publication might not be feasible. A lack of this type of oversight, however, could lead to unreliable or misleading information being shared at a higher rate than otherwise. In the absence of approval for every post, one way to prevent the spread of inaccuracies in OHCs is through the presence of community-level moderators (Huh et al. 2016a). Traditionally, OHC moderators have played different roles across different communities. Some identify misinformation and correct it, whereas others make the smooth functioning of the community possible. Just as their roles within the community differ, so do their qualifications. While some OHC moderators are health professionals, others are staff administrators with no identifiable health expertise (i.e., have health credentials vs. no health credentials).

The credentials of moderators can serve as cues and be noticed by new users through cognitive heuristics (Sundar 2008; Metzger and Flanagin 2013). Therefore, moderator credentials could provide a sense of the moderators’ expertise to users and affect underlying credibility perceptions (Hovland et al. 1953; Hovland and Weiss 1951; Hu and Shyam Sundar 2010; Metzger and Flanagin 2013; Tormala and Petty 2004).

In addition to the moderators within the community, we also look at endorsement cues such as likes that provide validation from other users within the OHC. Borrowing credibility through ratings and reviews provided by other users has been observed in past work (Kanthawala et al. 2018), and likes in online platforms are akin to ratings or reviews in commercial platforms (like the App Store, for example). These one-click endorsement cues, provided through the technological affordances of the platform, affect its source credibility (Sundar 2008).

In order to understand how first-time users determine the source credibility of an OHC in such cases of quick participation (that could possibly lead to long-term use), a 2 × 2 experimental study was conducted. We manipulated the credentials of OHC moderators and the presence of endorsement cues (in the form of likes). The findings of this study can assist developers and designers with building more credible communities. Furthermore, since the ease of accessing health information online puts the burden of identifying what information can be trusted and what cannot on the user, these findings help users determine if their perceptions of credibility are warranted or not.

2. Literature Review

2.1. Online Health Communities

The internet provides a space for people to seek information about their health conditions. Self-managing health issues might fall on the shoulders of patients and caregivers, who turn to online resources for various reasons. These include gathering additional information about medication or learning from others’ personal experiences (Kanthawala et al. 2016). Online communities are spaces where people with similar interests congregate to discuss common topics. Those focused on health, often referred to as online health communities, run on a similar principle but focus on health-specific topics. For instance, specific communities or sub-communities might be dedicated to individual health issues, like diabetes, weight loss, specific types of cancer, etc. The discussions within these spaces are dedicated to the health condition in question, and people’s long or short-term experiences with it. People are separated geographically and may even be located on different continents, but having a shared space online allows them to discuss things asynchronously. For example, for many years one of the most common and largest OHCs was the WebMD community where different sub-communities focus on different health conditions. Their message boards have transitioned to a new space; however, people can still access discussions from the past (https://exchanges.webmd.com, accessed on 24 June 2021). Appendix A shows screenshots from pre-existing discussions on WebMD (non-moderator usernames are hidden for privacy reasons). In addition to WebMD, many other OHCs perform similar functions. For instance, Patient (https://patient.info/forums, accessed on 24 June 2021) hosts discussion posts dedicated to health conditions. Appendix A also has images from Patient. Other popular OHCs include Patients Like Me, HealthBoards, MedHelp, HealthUnlocked, Med.Over.Net (Slovenian OHC), etc.

Among credibility actions taken by these platforms, WebMD (and other OHCs) use moderators within their communities as oversight (as evidenced by the presence of moderators in Appendix A). Such monitoring of content, alongside the ratings of posts and the presence of user profiles, affects the credibility of OHCs (Hajli et al. 2015). Additionally, due to the complex nature of online credibility, Sundar’s (2008) MAIN Model proposed that people focus on cues which trigger heuristics and lead to credibility judgments (e.g., authority cues and bandwagon cues).

An important and attractive resource of OHCs is the social support people get from them. This is evident from the current landscape of OHC research. For instance, Gui et al. (2017) explored how pregnant women use specific communities to garner peer support to overcome limited access or dissatisfaction with healthcare, and lack of information and social support. Other research has found that all forms of social support positively affect belongingness, which in turn affects information sharing and advocacy behaviors, among other things (Liu et al. 2020), and that linguistic signals such as readability, post length, and spelling also impact the amount of social support people receive (Chen et al. 2020).

Other research in the area focuses on the types of people within these communities and the information shared between them. For instance, Yang et al. (2019) categorize users of OHCs into specific behavioral roles they fulfill in the community. Rueger et al. (2021) analyzed OHC data over twelve years to show how users valued peer or lay advice from people with similar interests, diverse health expertise, and people with the latest knowledge. The danger of misinformation is always present when discussing user-generated content, and four types of misinformation have been identified in OHCs: advertising, propaganda, misleading information, and unrelated information (Zhao et al. 2021). Reciprocity and altruism affect knowledge sharing intentions in OHCs (Zhang et al. 2017). Finally, Carron-Arthur et al. (2015) conducted a systematic review to understand how people participate in and contribute to OHCs. Their findings indicated that there was no consistent participatory style across different OHCs; however, they acknowledged that the research included in their review was relatively recent at the time.

As evidenced, much research has been conducted in the OHC space, but with evolving media landscapes and users, newer questions are constantly being raised. This work attempts to tackle two vital aspects of OHC functioning—a potential source for social support, and the presence of experts within the community.

2.2. Perceived Source Credibility

Research on online credibility has explored how different elements of online affordances might play a role in credibility perceptions among users. Metzger and colleagues (Metzger et al. 2003) explicated three primary forms of online credibility in their work, namely message credibility, medium credibility, and source credibility.

Message credibility refers to a message’s characteristics specifically, and how they affect people’s perceptions of the message itself. They also outline four different dimensions of message credibility, namely message structure (i.e., how the message is structured for the audience’s consumption), message content (the actual information contained in the message), language intensity (how opinionated a message is), and message delivery (how the message is presented or delivered to audiences). Despite this explication, message credibility has been one of the more challenging forms of credibility to measure. This is possibly because of the high levels of variability in message characteristics that can make it difficult to measure them.

Medium credibility refers to the media channel through which a message is shared with audiences. It has been studied for legacy media, such as newspapers, and later radio and television, as well as for new media, such as the internet. The two primary factors affecting medium credibility are technological features and structural features. Medium credibility has been measured by prior research, and with the media landscape evolving rapidly, new research continues to explore the credibility of newer forms of media (Baum and Rahman 2021; Hmielowski et al. 2020; Li and Wang 2019; Martin and Hassan 2020).

Source credibility, the most frequently studied form of credibility, is the one that most users seem to focus on when developing credibility judgments (Austin and Dong 1994; Xie et al. 2011; Luo et al. 2013; Sparks et al. 2013). In a research context, source credibility is a multi-dimensional and complex construct. Most researchers, however, agree that at least two dimensions, (1) expertise and (2) trustworthiness, underlie it. Furthermore, this is a perceived construct, i.e., it is dependent upon the interpretation of the user (for instance, perceived expertise, one of its underlying dimensions, could differ from the actual expertise of the source) (Hovland et al. 1953; Hovland and Weiss 1951; Hu and Shyam Sundar 2010; Metzger and Flanagin 2013; Tormala and Petty 2004).

Traditional credibility judgment studies focus on the source of information, such as an author (Hu and Shyam Sundar 2010; Luo et al. 2013; Spence et al. 2013). However, with the evolution of the internet, this construct has become ambiguous and imprecise, especially due to the multiple layers of sources in the online transmission of information (Fogg et al. 2001; Sundar 2008; Yamamoto and Tanaka 2011). For example, an article written by one person could be shared by another on one website and then re-shared on another, thus diluting the focus of the source.

It is this ambiguity of online source credibility that this research aimed to explore. In addition to the complexity presented by the layers of sources explicated above, in OHCs, a user could determine the credibility of a specific post or discussion by looking at the author’s credentials, or gauge based on the post’s content if the individual has any medical credentials or authority on the matter. However, determining the expertise of the whole OHC with multiple authors is different. To explore this, expertise is manipulated at the community level, as opposed to the post level, in this study.

Sundar (2008) argued that due to the large volumes of information on the internet and because of the multiplicity of sources embedded in the numerous layers of online diffusion of content, assessment of credibility becomes difficult. Therefore, users are more likely to evaluate information based on cues, which provide mental shortcuts for effortlessly assessing the believability of information being received. An authority heuristic is triggered when a “topic expert or official authority is identified as the source of content” (p. 84). If an interface, such as a website, identifies itself as an authority even simply through “social presence”, it bestows “importance, believability, and pedigree to the content provided by that source” (p. 84), therefore, positively impacting its credibility (Sundar 2008). In fact, information from health professional moderators is viewed by users as that from an expert whom users view as an authority (Vennik et al. 2014). Additionally, manipulation of source expertise through credentials in OHCs has also been used for credibility research to study the effect of attractiveness of OHC comments (Jung et al. 2018).

In the same discussion about technological affordances, Sundar (2008) also stated that interaction cues present on an interface solicit a user’s input and trigger the interaction heuristic. This heuristic indicates that users have the option to specify their needs and preferences in the technology on an ongoing basis. Additionally, endorsements through likes can also trigger the bandwagon heuristic by acting as solicitors of interaction, engagement, and endorsement of content. They encourage users to participate in content being discussed online and lead to quick, one-click engagement among users of a platform. This signals interactivity and ongoing conversations, and can even signal the presence of social support, an important element of OHCs (Carr et al. 2016; Hayes et al. 2016a, 2016b; Wohn et al. 2016). Therefore, these cues might play a role in credibility determination among new users.

2.3. Moderator Credentials and Expertise

One of the underlying dimensions of source credibility is expertise. Given the complex “source” in the case of OHCs, moderators act as authority figures that view and regulate the community. Moderators with health-related credentials would provide ‘expertise’ within an OHC framework. The lack of expertise in an OHC can lead to the spread of misinformation among its users (Huh et al. 2016a). Health professional moderators can reduce this potentially problematic issue in OHCs by helping users distinguish between valuable and misleading medical information, and by providing them with clinical expertise (Atanasova et al. 2017). New users may view their credentials as an indication of this expertise, thereby increasing credibility perceptions. Furthermore, past research indicates that users want health professionals as moderators. Huh et al. (2016) discovered that even in communities with health professional moderators, like WebMD, users complained if, having been promised an expert within the community, they did not encounter them. This happened in situations where explicit signatures for the professional moderators were lacking, indicating that users pay attention to the professionals’ signatures (or something equivalent that clarifies their professional training). This suggests that users attend to cues signaling expertise more than to the actual presence of expertise. Another study conducted by Vennik et al. (2014) found that patients actually prefer responses from health professionals due to their ‘expert status’ and expertise in healthcare.

As discussed above, credibility evaluation often occurs online through cues, which in turn trigger heuristics (Metzger and Flanagin 2013; Metzger et al. 2010). In this case, the presence of credentials (such as ‘Dr.’ or ‘RN’) indicating moderators’ expertise in an OHC would potentially make it seem more credible. In fact, the “Dr” versus “Mr” argument has been recognized in research (Crisci and Kassinove 1973; Fogg et al. 2001; Sundar 2008), where participants tend to accept recommendations more when the source is identified as “Dr” rather than “Mr”. Additionally, users tend to equate expertise to credibility, making a generalization based on their past experiences (Sundar 2008). For these reasons, having credentialed moderators might affect the expertise dimension of OHCs. Thus, we propose the following:

Hypothesis 1 (H1).

Presence of credentialed moderators in an OHC will lead to higher perceived expertise within the community among new users as compared to uncredentialed moderators.

In this study we hypothesize an effect of moderator credentials on the expertise dimension of source credibility. However, the question of whether moderator credentials affect trustworthiness (the other dimension of source credibility) can also be raised. For instance, Jessen and Jørgensen (2012) state that in assessing credibility, online users would look at who wrote the information to determine whether the author is trustworthy and an authority on the matter, and if yes, strong credentials would serve as credibility cues. It is possible that this argument could also apply to OHCs where health experts moderate the community and having these topic experts makes the community trustworthy. It would thus be worthwhile to explore if the presence of health expert credentials affects the trustworthiness dimension of credibility. Therefore, the following is posed:

RQ1:

Do moderator credentials in OHCs have an effect on perceived trustworthiness within the community among new users?

2.4. Endorsement Cues and Trustworthiness

The second dimension underlying source credibility is trustworthiness. In keeping with the goal of determining how new visitors of OHCs might come to credibility decisions, we focused on endorsement cues available to users. Engagement tools, such as ‘likes’ or ‘favorites’ that show a user’s preference for a post, are commonplace on online platforms. While Facebook has expanded their engagement tools to include many different reactions (such as love, funny, anger, etc.), most platforms, including OHCs, use a single form of engagement. These forms of engagement signal to users of a platform that others endorse specific posted content. Additionally, likes on Facebook that cue endorsement or liking “serve as important signals of trustworthiness, because they indicate the extent to which the profile owner is liked, approved of, supported, and socially integrated” (Toma 2014, p. 497). Further, the more likes a user receives, the more trustworthy they are perceived to be. This observation is notable because perceived trustworthiness is determined by a third party viewing liked posts, and not by the person who created the post. The endorsement itself could imply different things. For instance, the like provides social feedback (Sutcliffe et al. 2011), and acts as an indicator of online popularity (Jin et al. 2015). Additionally, features like the Facebook like and comments have been linked to bridging social capital (Ellison et al. 2014), purchase intentions, brand evaluation (Naylor et al. 2012), quality relationships, enhanced social presence (Hajli et al. 2015; Liang and Turban 2011; Naylor et al. 2012) and social support (Ellison et al. 2014; Liang and Turban 2011). More importantly, the likes provide endorsement that can be viewed as the most trusted source of influence (Nielsen 2015).

These technological tools help users engage in “lightweight acts of communication” (p. 172) and have been conceptualized as paralinguistic digital affordances (PDAs) by Hayes et al. (2016b). They help users communicate without using specific language. Thus, providing a like to a post is akin to stating an agreement with or endorsement of a post. In addition to straightforward endorsement of a post, the number of PDAs received on a post was found to be a significant predictor of perceived social support across different social media platforms (Wohn et al. 2016). This is a notable finding when viewed through the context of OHCs because of the importance of social support exchanged in OHCs (Huh et al. 2016; Kanthawala et al. 2016; Nambisan 2011; Nambisan et al. 2016).

Jessen and Jørgensen (2012) explicate that social elements such as likes promote an “aggregated trustworthiness”. They state that feedback of others in online contexts plays an important role when assessing credibility (Ljung and Wahlforss 2008; Weinschenk 2009). By evaluating other individuals’ assessments (such as likes, upvotes, etc.) users are exposed to a kind of collective judgment that helps with their own evaluations (Weinschenk 2009). Therefore, features such as endorsement cues or PDAs play a key role in large-scale feedback systems and guide users’ evaluation of credibility. These features provide a broader spectrum of validation, and this kind of social validation shows that the more people acknowledge a certain piece of information, the more trustworthy it is perceived to be (Jessen and Jørgensen 2012). It is important to note that the number of endorsements received by a post has been a cause for concern for users’ mental health on some platforms. Facebook and Instagram, for instance, will allow users to choose to hide like counts on their posts as well as the posts they see on their feeds. This action, however, is unlikely to bring about actual change in users’ mental health (Heilweil 2021). Moreover, such disabling of likes has not occurred in OHCs (or most other forum-like platforms), where likes remain present.

Through the above arguments we can see how endorsement cues might promote trustworthiness. In order to emulate real OHCs and determine if endorsement cues on these platforms do promote trustworthiness, we hypothesize:

Hypothesis 2 (H2).

Endorsement cues in an OHC will lead to a higher perceived trustworthiness within the community among new users than an OHC without endorsement cues.

Although virtually no literature is available regarding the relationship between endorsement cues and perceived expertise, it is worthwhile to explore whether these cues also affect the other dimension of credibility. Previous studies have often focused on credibility as a whole. By delving into expertise, this study looks at source credibility dimensionally and provides insights into which dimension the PDAs affect. Therefore, the following research question is posed:

RQ2:

Do endorsement cues in OHCs have an effect on perceived expertise within the community among new users?

3. Materials and Methods

3.1. Study Design

For the purposes of this study, a fake OHC, which we called Health Connect, was created on a Drupal platform. A fake discussion forum was created emulating a number of real OHCs observed through internet searches. A discussion forum (or forum) refers to the specific space on the OHC where posts occurred (i.e., where questions and responses were posted). The terms OHC and discussion forum are sometimes used interchangeably, but here we make this distinction for the sake of clarity (where the community at large is the OHC and the “forums” are where the posts are). The forum was filled with a small sample of publicly available discussion posts from WebMD’s weight loss community that were scraped and saved to a SQL server. A weight loss community was selected for this study due to the popularity of OHCs in this sphere and the higher likelihood of participants being familiar with weight loss topics.

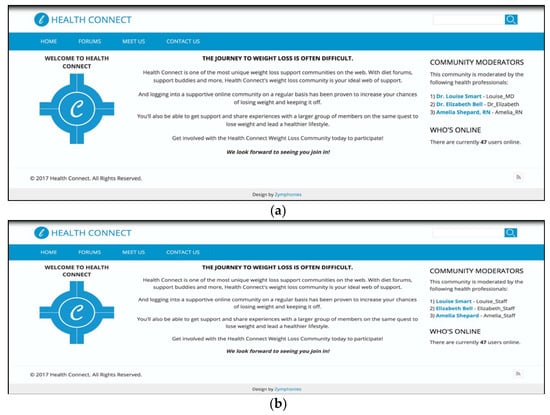

Three additional copies of Health Connect were created, giving four versions in total (one for each condition of the 2 × 2 design). The same selected discussion threads (question + responses) were presented across all conditions to keep information within the communities constant. Each version of Health Connect had a homepage containing a description of Health Connect and a list of the moderators of the community (along with their health credentials such as Dr. or RN or stating that they were simply staff moderators). These were real moderators identified from the WebMD dataset, and their names were highlighted on the homepage (Figure 1a,b shows screenshots of the homepage).

Figure 1.

Health Connect stimuli: (a) Health Connect with credentialed moderators; (b) Health Connect with staff moderators.

The second page of Health Connect was the ‘forum’. Two out of four conditions contained likes on the questions and responses (number of likes assigned according to explanation below), whereas the other two did not. The third page was a standard ‘contact us’ form. In order to fine tune these stimuli, they were pre-tested by having people (N = 20; recruited from the local community and compensated with $20 for their participation) come into a physical lab space and complete a think-aloud exercise (Lewis 1982). In addition to determining if the stimuli were successful, the pre-test also allowed us to determine if the participants believed that Health Connect could be a real, small, and new OHC. Based on participants’ feedback, an additional page was added to Health Connect to include the moderators’ biographies. We reviewed biographies of real moderators in OHCs (three with professional credentials and three without) and edited their usernames to match those in our study.

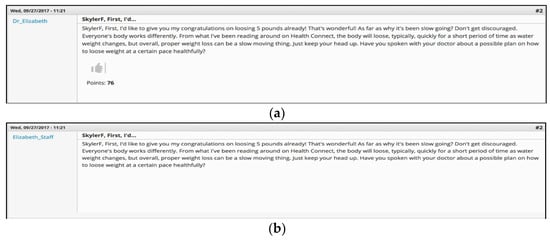

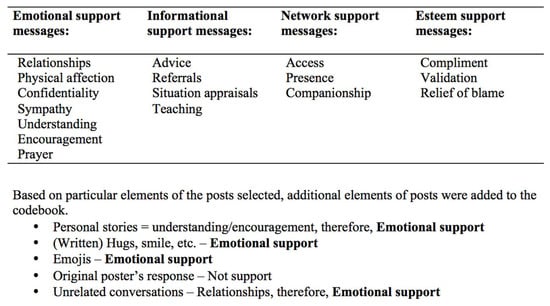

Since the original posts from WebMD did not include likes on them, we had to decide what posts should have likes and how many. Since this was a manual procedure, the number of discussion threads had to be kept at a manageable number (instead of including all the WebMD discussion posts). To do this, we first selected all posts with 5 responses to the original question posed. This number was selected so as to have the flexibility to manage the discussion posts while at the same time allowing for the perception of existing conversations within the OHC. From these posts, 10 randomly selected threads were loaded into the communities. We had to take care not to assign likes to posts that might give out seemingly unhelpful information. Therefore, instead of arbitrarily assigning likes to posts we deemed “good” or “helpful”, a decision was made to assign likes according to the social support these posts provided (since social support is an important and common element of OHCs and is also affected by the number of likes on a post, as discussed above). Braithwaite et al. (1999) categorized elements of social support posts in an online platform. A codebook developed based on these codes can be found in Appendix B. The first author coded each post manually for how many types of social support it provided. The coded posts were reviewed by both authors to resolve any disagreements. Next, posts with one type of social support were assigned anywhere between 1–50 likes, posts with two types of social support were assigned 50–75 likes, and posts with three types of social support were assigned 75–100 likes. The conditions without endorsement cues had no like button. Figure 2a,b shows screen captures of posts with vs. without PDAs.
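The like-assignment rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the text gives the ranges per number of social support types but not how a value was picked within a range, so uniform random sampling, the seed, the post identifiers, and the handling of posts with no coded support type are all hypothetical.

```python
import random

# Like ranges per number of social support types, as given in the text.
# Drawing uniformly at random within a range is an assumption of this sketch.
LIKE_RANGES = {1: (1, 50), 2: (50, 75), 3: (75, 100)}


def assign_likes(num_support_types, rng):
    """Return a like count for a post coded with the given number of support types."""
    if num_support_types not in LIKE_RANGES:
        # Assumption: posts outside the three coded categories receive no likes.
        return 0
    low, high = LIKE_RANGES[num_support_types]
    return rng.randint(low, high)  # inclusive on both ends


rng = random.Random(42)  # fixed seed so the illustration is repeatable
coded_posts = {"post_a": 1, "post_b": 2, "post_c": 3}  # hypothetical coding results
likes = {post: assign_likes(n, rng) for post, n in coded_posts.items()}
print(likes)
```

Note that the stated ranges share their boundary values (50 and 75), so a post with 50 likes could belong to either of two categories; the sketch keeps the ranges exactly as reported.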

Figure 2.

(a) Health Connect post with PDAs; (b) Health Connect with no PDAs.

Once the final versions of Health Connect were created, the study was launched on Amazon’s Mechanical Turk (MTurk) where a Qualtrics link randomly assigned participants to one of the four study conditions. Participants for this study were located in the United States, were above the age of 18, and were compensated with $2 for their participation. The inclusion criteria were that participants (1) were currently, or had been in the past, interested in weight management and (2) had used any online communities at any time in the last 5 years. In total, 373 participants took part in the study. Among them, 60.4% were male and 74.1% were Caucasian. A more detailed description of participant demographics can be found in Table 1. Additionally, 72.2% said they used social media sites (such as Facebook) every day. Participants were moderately interested in weight management (M = 2.89, SD = 0.42), and used social media and online communities about once a week (M = 4.60, SD = 1.07).

Table 1.

Participant demographics.

3.2. Measures

Participants were first prompted to explore and interact with the community, and then respond to the questions in the survey measured as explained below.

Perceived credibility. Ohanian (1990) developed 7-point semantic differential scales for perceived trustworthiness and expertise and established their reliability and validity. Additionally, based on the idea that different website features might lead to an overall perception of trustworthiness and expertise, and therefore, source credibility, Flanagin and Metzger (2007) developed a 22-item scale adapted from past source credibility scales. Items from both these scales were adapted to create one scale measuring perceived OHC expertise and trustworthiness, with items rated on a 7-point Likert scale ranging from 1 (strongly disagree) to 7 (strongly agree).

A reliability test was run on both dimensions of the credibility scale. Cronbach’s alpha for the expertise dimension was 0.93. Three items were dropped to improve the reliability of the trustworthiness dimension (α = 0.92). A confirmatory factor analysis was also conducted to validate the measure. The model fit indices for the credibility scale made up of two dimensions, expertise and trustworthiness, were as follows: chi-squared was 500.327, degrees of freedom was 118, and the p value associated with the chi-squared test was <0.001. The comparative fit index was 0.92, and the root mean square error of approximation was 0.095. The fit indices indicate that the model fit the data adequately.
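For readers unfamiliar with the reliability statistic reported above, the following is a minimal sketch of how Cronbach’s alpha is computed from item-level responses. It uses the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of summed scores). The response data are hypothetical and are not the study’s data; the function name is ours.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of k lists, each holding one item's scores
    for the same respondents, in the same order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Summed scale score per respondent.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(col) for col in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical 7-point responses from five respondents to three items.
responses = [
    [5, 6, 4, 7, 3],
    [5, 7, 4, 6, 3],
    [6, 6, 5, 7, 2],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Dropping an item and recomputing alpha on the remaining items is the usual way to see whether removal improves reliability, which is the kind of step described for the trustworthiness dimension.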

Perceived social support. Because the likes were operationalized through social support, perceived social support was measured as a manipulation check. Items from a validated perceived social support scale (α = 0.96) were adapted to the requirements of this study (Nambisan et al. 2016). These items were measured on a 7-point Likert scale ranging from 1 (extremely unlikely) to 7 (extremely likely).

Demographics. Demographic information was collected, including age, gender, social media and other Internet use (such as forums, Q&A websites, blogs, etc., asked on a 7-point scale from 1 = never to 7 = daily), and weight management involvement (interest in health, exercise, and fitness asked as three separate items on a 4-point scale where 1 = not at all interested, 2 = slightly interested, 3 = moderately interested, and 4 = very interested).

4. Results

We had two forms of manipulation checks in our survey. First, we checked whether participants had noticed the moderators’ health credentials in the relevant conditions and found that the sample included 55 respondents who answered opposite to what condition they were in. Eliminating these participants did not lead to a change in the results and therefore, instead of treating this pool of participants as individuals who failed a manipulation check, we recognize that users might sometimes misinterpret what a ‘moderator’ or ‘credentials’ could be in a between-subjects experiment since they do not have a frame of reference. They could have interpreted the presence of staff moderators as those with credentials, for instance.

To test H1, we ran a one-way between-subjects ANOVA to compare the effect of moderators with health credentials on the expertise dimension of perceived credibility. We found a significant effect of health credentials on perceived expertise, F(1, 371) = 56.14, p < 0.001, η² = 0.131. However, because the assumption of homogeneity of variance was not met for these data, we used Welch’s adjusted F ratio, which was statistically significant, F(1, 341.99) = 55.74, p < 0.001. Thus, H1 was supported.
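To make the Welch adjustment concrete, the sketch below computes Welch’s F for the two-group case, where it equals the square of Welch’s t statistic and the denominator degrees of freedom follow the Welch–Satterthwaite approximation. The scores are hypothetical and the function name `welch_f_two_groups` is our own; this is an illustration of the technique, not a reproduction of our analysis.

```python
from statistics import mean, variance

def welch_f_two_groups(a, b):
    """Welch's adjusted F (numerator df = 1) and its denominator df for two groups."""
    # Squared standard error of each group mean, using each group's own variance
    se_a = variance(a) / len(a)
    se_b = variance(b) / len(b)
    # With two groups, Welch's F is the squared Welch t statistic
    f_stat = (mean(a) - mean(b)) ** 2 / (se_a + se_b)
    # Welch-Satterthwaite approximation for the denominator degrees of freedom
    df2 = (se_a + se_b) ** 2 / (
        se_a ** 2 / (len(a) - 1) + se_b ** 2 / (len(b) - 1)
    )
    return f_stat, df2

# Hypothetical expertise ratings with clearly unequal spread (illustration only)
credentialed = [6, 6, 5, 6, 7, 6]
staff = [2, 7, 4, 6, 3, 5, 1, 6]
f_stat, df2 = welch_f_two_groups(credentialed, staff)
print(f"Welch F(1, {df2:.2f}) = {f_stat:.2f}")
```

Because the groups’ variances are not pooled, the denominator degrees of freedom are generally non-integer and smaller than in the classical ANOVA, which is why the adjusted tests report values such as 341.99 rather than 371.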

We also ran a one-way between-subjects ANOVA to compare the effect of moderators with health credentials on the trustworthiness dimension of perceived credibility. We found a significant effect of health credentials on trustworthiness, F(1, 371) = 15.71, p < 0.001, η² = 0.041. Since the assumption of homogeneity of variance was not met for these data, we used Welch’s adjusted F ratio, which was statistically significant, F(1, 344.14) = 15.61, p < 0.001. Therefore, we see that moderators’ health credentials have an effect on the trustworthiness dimension of source credibility too, thereby answering RQ1 (Table 2).
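The reported effect sizes can be recovered from the unadjusted F ratios and their degrees of freedom, since for a one-way design η² = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies this identity to the values reported above; the function name `eta_squared_from_f` is our own.

```python
def eta_squared_from_f(f_stat, df_effect, df_error):
    """Eta squared for a one-way ANOVA, recovered from F and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)

# F ratios and degrees of freedom as reported for the two one-way ANOVAs
eta_expertise = eta_squared_from_f(56.14, 1, 371)
eta_trustworthiness = eta_squared_from_f(15.71, 1, 371)

print(round(eta_expertise, 3), round(eta_trustworthiness, 3))
```

Rounded to three decimals, these values agree with the η² = 0.131 and η² = 0.041 reported for expertise and trustworthiness, respectively.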

Table 2.

Results of One-Way ANOVA tests.

Next, we checked perceived social support that users felt from Health Connect. An independent-samples t-test was conducted to compare perceived social support in endorsement cues and no endorsement cues conditions. We found there was no significant difference for endorsement cues (M = 5.47, SD = 0.87) and no endorsement cues (M = 5.48, SD = 0.88) conditions; t(371) = 0.077, p = 0.93. This indicated that the social support was not elicited successfully through the endorsement cues (i.e., likes). Therefore H2 was not supported and RQ2 could not be successfully answered. The means and standard deviations of all measures across conditions are in Table 3.

Table 3.

Means and standard deviations. M—Mean; SD—Standard Deviation; Condition 1—Moderators with health credentials + PDAs; Condition 2—Moderators with no health credentials + PDAs; Condition 3—Moderators with health credentials + no PDAs; Condition 4—Moderators with no health credentials + no PDAs.

Finally, we ran post-hoc correlations between perceived social support, perceived credibility, and covariates. There was a positive correlation between perceived social support and perceived expertise, r = 0.623, n = 373, p < 0.001, as well as between perceived social support and perceived trustworthiness, r = 0.721, n = 373, p < 0.001.
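For reference, the significance of a Pearson correlation is commonly assessed by converting r to a t statistic with n − 2 degrees of freedom, t = r√(n − 2) / √(1 − r²), and r² gives the proportion of shared variance. The sketch below applies these standard formulas to the coefficients reported above; the function name `correlation_t` is our own.

```python
import math

def correlation_t(r, n):
    """t statistic (df = n - 2) for testing a Pearson correlation against zero."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)

n = 373
r_expertise = 0.623         # social support with perceived expertise
r_trustworthiness = 0.721   # social support with perceived trustworthiness

t_expertise = correlation_t(r_expertise, n)
t_trustworthiness = correlation_t(r_trustworthiness, n)

# Proportion of variance shared between the two measures
shared_expertise = r_expertise ** 2
shared_trustworthiness = r_trustworthiness ** 2

print(f"t({n - 2}) = {t_expertise:.2f}, shared variance = {shared_expertise:.3f}")
print(f"t({n - 2}) = {t_trustworthiness:.2f}, shared variance = {shared_trustworthiness:.3f}")
```

Both t statistics are far above conventional critical values at 371 degrees of freedom, consistent with the reported p < 0.001.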

5. Discussion

We conducted an experiment to determine how two specific types of cues present in OHCs affect new users’ perceptions of credibility of the OHC. By manipulating the credentials of the moderators, we aimed to examine its effect on the expertise dimension of credibility. We also manipulated the presence of endorsement cues to examine its effect on the trustworthiness dimension of credibility. Our findings are unique since, to our knowledge, this is the only study that has created a simulated version of a functional OHC. Even past studies that emulate OHCs have generally provided screenshots or similar stimuli to participants to solicit opinions.

We found that the presence of professional credentials had an effect on the expertise dimension of credibility, thereby supporting H1. These credentials also had an effect on the trustworthiness dimension of credibility, thereby answering RQ1 in the affirmative.

Effect sizes (partial eta squared) between conditions are important to note. Moderators’ credentials had a near-large effect of 13.1% (large effect size = 0.137) (Cohen 1988) on the perceived expertise dimension of credibility in OHCs. Credentials also led to a near-medium effect of 4.1% on perceived trustworthiness (medium effect size = 0.053) (Cohen 1988), indicating that expert moderators do play a moderate role in the perceived trustworthiness dimension of credibility in OHCs. These effect sizes are comparable to or higher than those in other studies that focus on credibility research within the field (Srivastava 2013; Xu 2013; Jung et al. 2016; Hawkins et al. 2021). Despite this, we acknowledge that there is much discussion within the field about the meaningfulness of effect sizes (Schäfer and Schwarz 2019) and calls for updating the benchmark values (Bakker et al. 2019). While the cut-offs for small, medium, and large effect sizes might be subjective, the effects do fall under the higher ranges of acceptability. Furthermore, while it is outside the scope of this work to re-define effect size benchmarks, we note that moderator credentials have an effect on both major dimensions of credibility. We therefore extrapolate that this variable has an effect on the perceived source credibility of OHCs.
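One way to place these effects on a common scale is to convert η² to Cohen’s f, using f = √(η² / (1 − η²)), and compare the result with Cohen’s (1988) conventional f values of 0.10 (small), 0.25 (medium), and 0.40 (large). The sketch below is illustrative only; the function name `cohens_f` is our own, and the benchmark labels are conventions rather than fixed thresholds.

```python
import math

def cohens_f(eta_sq):
    """Convert eta squared to Cohen's f."""
    return math.sqrt(eta_sq / (1 - eta_sq))

# Cohen's (1988) conventional benchmarks for f
BENCHMARKS = {"small": 0.10, "medium": 0.25, "large": 0.40}

f_expertise = cohens_f(0.131)        # effect of credentials on expertise
f_trustworthiness = cohens_f(0.041)  # effect of credentials on trustworthiness

for label, f_value in [("expertise", f_expertise),
                       ("trustworthiness", f_trustworthiness)]:
    print(f"{label}: f = {f_value:.3f}")
```

On this scale, the expertise effect falls just below the conventional large benchmark and the trustworthiness effect falls between the small and medium benchmarks, in line with the near-large and near-medium descriptions above.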

Moderators play an important role in online communities in general (Coulson and Shaw 2013; Huh 2015; Huh et al. 2013, 2016; Park et al. 2016). However, in OHCs there is the added danger of misinformed or incorrect health information. It is unlikely that any moderator would be able to read every post unless the community requires moderator approval prior to posting. However, we hypothesized that the presence of medically trained moderators would still lend a sense of perceived credibility to the community as a whole. This means that, while we manipulated only the credentials of the moderators, the manipulation carried over to the credibility of the OHC as a whole.

Thus, it is possible that OHCs have two levels at which an individual can determine credibility: the content level, i.e., determining if specific content in the community is credible, and the community level, i.e., determining if the community as a whole is credible. This finding has two implications. First, it builds on past literature that explicates the role of expertise in the determination of credibility. Whereas most of the work on source credibility, even on online platforms, has looked at more traditional website platforms (Eastin 2001; Hu and Shyam Sundar 2010; Morahan-Martin 2004; Spence et al. 2013), this work focuses on OHC-type platforms with discussion forums where most of the content is user-generated. This finding is notable because it can have implications beyond OHCs. Wherever there is oversight on a platform, especially in the health context, it is possible that the presence of credentialed experts raises the credibility of the whole platform.

Second, for a platform such as an OHC, with primarily user-generated content, expertise cues (in this case, moderator credentials), lend it authority (Sundar 2008). Where past research has focused on source credibility online by defining the source as an individual that creates the content that is disseminated among an audience (a one-to-many approach), OHCs have a large number of content creators (users of the community), many of whom create information for anyone to consume (many-to-many approach). Thus, our finding can be applied not only to different types of OHCs, but also different platforms that deal with similar user-generated content. For example, the largest type of user-generated platforms are social media platforms. It would be difficult to have moderators for such a platform, especially considering their size and variation in topics of discussion. However, novel approaches for equivalent moderation should be considered. Some platforms already have moderation in place. For example, Twitch, a livestreaming platform where people broadcast their live videos and interact with viewers via a chat function, has different “layers” of moderation, including crowdsourced, algorithmic, and human moderation, on their platform (Wohn 2019). Therefore, providing oversight that lends expertise to a platform can increase credibility. This role of providing topic expertise in such existing spaces and delving into how this can be applied to other online platforms should be explored. Furthermore, past research has shown that credibility perceptions lead to behavioral outcomes. This is especially important in the health context and providing users with credentialed oversight can only help improve the quality of information exchanged in these platforms and increase their sense of comfort within this space.

As discussed above, endorsement cues play various roles in online platforms. Our findings indicated no difference between the conditions with likes vs. no likes. While this could be viewed as a limitation, we consider it to be a valuable finding that helps determine that the presence of likes in OHCs does not play a role in determining perceived trustworthiness and by extension, credibility perceptions within the community.

Therefore, while endorsement cues might provide social validation, depict signals of endorsement, and serve as signs of trustworthiness for pieces of information or of how much a profile owner may be liked (Jessen and Jørgensen 2012; Toma 2014), their absence does not play a role in credibility determination. It is possible that the commonplace nature of these cues in and around social media platforms has made users immune to their presence. Based on these findings, we can say that H2 is not supported. Additionally, endorsement cues do not have an effect on the expertise dimension of credibility either, thereby answering RQ2.

Due to the important role played by social support in OHCs and the fact that likes have been known to indicate social support, we conducted a post-hoc analysis to determine if there was a correlation between social support and the dimensions of perceived source credibility. We found a positive correlation between perceived social support and both dimensions of perceived credibility, indicating that these variables vary together (i.e., a user who perceives social support within the OHC also tends to perceive the OHC as credible). This indicates that social support is positively related to perceived credibility, thereby demonstrating the importance of having a supportive community. Social support is an already well-established component of OHCs. Thus, it is possible that the social support exchanged through the content of the community plays a strong enough role to affect credibility. Our work adds to the literature by showing that, despite not being the recipients of the social support, people still viewed it being exchanged and perceived its presence.

Therefore, developers should strive to create a supportive environment within the OHC since such an environment can attest to positive credibility perceptions of the community. Additionally, future studies can attempt to parse the different types of social support in an effort to determine if one type plays a larger role in relation to credibility or not.

Thus, our findings are important to OHC designers and developers who now have more insights into what users want and what helps them feel safe and would make them trust an OHC. Additionally, our findings could also help users themselves get better insights into what affects their own perceptions of credibility. Finally, we add to the knowledge of social support within OHCs and demonstrate that simply perceiving social support within the community correlates to higher credibility perceptions, though this perception is not due to likes within an OHC. This especially adds to past work that has found that PDAs, such as likes, result in social support when the like is received on a message one posted themselves (Carr et al. 2016; Hayes et al. 2016a, 2016b; Wohn et al. 2016). Here we note that observing social support within the community (i.e., a third-person perspective) also has an important role in OHCs.

6. Conclusions

Despite our best efforts, we acknowledge some limitations of this study. Since this study focuses specifically on cues that assist with credibility determination, it could be argued that simply including false or inaccurate credentials assigned to moderators can raise a platform’s credibility. However, in today’s media landscape filled with fake news and misinformation, this can occur anywhere. Our findings provide insight into the design and affordances that such platforms ideally should provide to better serve their users, rather than guide misusers.

One of our manipulations did not produce the intended outcome. Future research could further manipulate PDAs in OHCs to better understand the role they play in affecting perceived credibility within OHCs. Despite this failure, our participants did feel the presence of social support within the community (across both conditions). Thus, it is likely that this perception of social support for first timers comes organically from the content itself (i.e., the discussions among existing users of the OHC) rather than PDA cues and is therefore processed more in-depth, such as through HSM’s systematic route or ELM’s central processing. Future research should manipulate the content of these posts in order to determine the role of social support on credibility.

The participants for this study were recruited through MTurk. While this participant pool was selected because of their familiarity with online platforms such as OHCs, it is possible that this sample affected our results: these participants could be overly familiar with the PDAs employed in the study and therefore ignored them. Future studies can employ these manipulations on different populations (e.g., those less active on social media) to see if different outcomes are obtained. While this is a limitation of the study, it is also important to note that more frequent users of the Internet and social media are more likely to visit platforms such as Health Connect.

Finally, our data were collected from a US sample. Future research should expand this focus to users in different countries to better understand what people in different countries focus on as important in terms of the credibility of OHCs. These communities are especially attractive since they can connect people across the planet, and future work should take this globalization into account.

We conducted an online experiment to test the effects of interface cues on the trustworthiness and expertise dimensions of source credibility in OHCs. We found that having moderators with professional health credentials has an effect on both the expertise and trustworthiness dimensions of source credibility, whereas the presence of PDAs does not affect credibility perceptions in an OHC. Finally, our post-hoc analyses showed that perceived social support was positively related to perceived credibility. This established the interconnected nature of social support and credibility, indicating that a socially supportive community would be more likely to be perceived as credible, and vice versa, which is something OHC developers should keep in mind.

Overall, OHCs are an established and still growing platform for people with a multitude of illnesses, their caretakers, and healthy people who wish to manage their well-being. While this study focuses specifically on a weight loss OHC, future research may examine whether the findings can be extended to OHCs focusing on different health conditions. By understanding how users determine the credibility of OHCs, we can better learn what specific elements are important when developing these OHCs. While some factors, such as social support, cannot be created and added to a community, technical affordances can be crafted to create an atmosphere where users could organically begin to support one another. Other factors, such as moderators with health credentials, can be specifically added to the community. It is possible that what users perceive as credible is something they might choose to use in the future.

Author Contributions

Conceptualization, S.K. and W.P.; methodology, S.K. and W.P.; formal analysis, S.K. and W.P.; writing—original draft preparation, S.K. and W.P.; writing—review and editing, S.K. and W.P. Both authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Institutional Review Board of Michigan State University (IRB# x17-505eD and date of approval 4 April 2017).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All the datasets are available through the corresponding author.

Acknowledgments

This material is based upon work supported by the National Science Foundation under Grant No. 1350253.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

WebMD Communities example post.

Figure A2.

Patient example page.

Appendix B

Figure A3.

Codebook for types of social support based on Braithwaite et al. (1999).

References

- Atanasova, Sara, Tanja Kamin, and Gregor Petrič. 2017. Exploring the benefits and challenges of health professionals’ participation in online health communities: Emergence of (dis)empowerment processes and outcomes. International Journal of Medical Informatics 98: 13–21.

- Austin, Erica Weintraub, and Qingwen Dong. 1994. Source v. content effects on judgments of news believability. Journalism & Mass Communication Quarterly 71: 973–83.

- Bakker, Arthur, Jinfa Cai, Lyn English, Gabriele Kaiser, Vilma Mesa, and Wim Van Dooren. 2019. Beyond small, medium, or large: Points of consideration when interpreting effect sizes. Educational Studies in Mathematics 102: 1–8.

- Baum, Julia, and Rasha Abdel Rahman. 2021. Emotional news affects social judgments independent of perceived media credibility. Social Cognitive and Affective Neuroscience 16: 280–91.

- Braithwaite, Dawn O., Vincent R. Waldron, and Jerry Finn. 1999. Communication of social support in computer-mediated groups for people with disabilities. Health Communication 11: 123–51.

- Carr, Caleb T., D. Yvette Wohn, and Rebecca A. Hayes. 2016. As social support: Relational closeness, automaticity, and interpreting social support from paralinguistic digital affordances in social media. Computers in Human Behavior 62: 385–93.

- Carron-Arthur, Bradley, Kathina Ali, John Alastair Cunningham, and Kathleen Margaret Griffiths. 2015. From help-seekers to influential users: A systematic review of participation styles in online health communities. Journal of Medical Internet Research 17: e271.

- Chen, Langtao, Aaron Baird, and Detmar Straub. 2020. A linguistic signaling model of social support exchange in online health communities. Decision Support Systems 130: 113233.

- Cohen, Jacob. 1988. Statistical Power Analysis for the Behavioral Sciences, 2nd ed. Hillsdale: Erlbaum.

- Coulson, Neil S., and Rachel L. Shaw. 2013. Nurturing health-related online support groups: Exploring the experiences of patient moderators. Computers in Human Behavior 29: 1695–701.

- Crisci, Richard, and Howard Kassinove. 1973. Effect of perceived expertise, strength of advice, and environmental setting on parental compliance. The Journal of Social Psychology 89: 245–50.

- Eastin, Matthew S. 2001. Credibility assessments of online health information: The effects of source expertise and knowledge of content. Journal of Computer-Mediated Communication 6.

- Ellison, Nicole B., Jessica Vitak, Rebecca Gray, and Cliff Lampe. 2014. Cultivating social resources on social network sites: Facebook relationship maintenance behaviors and their role in social capital processes. Journal of Computer-Mediated Communication 19: 855–70.

- Flanagin, Andrew J., and Miriam J. Metzger. 2007. The role of site features, user attributes, and information verification behaviors on the perceived credibility of web-based information. New Media & Society 9: 319–42.

- Fogg, Brian J., Jonathan A. Marshall, Othman Laraki, Alex Osipovich, Chris Varma, Nicholas Fang, Jyoti Paul, Akshay Rangnekar, John Shon, Preeti Swani, et al. 2001. What makes web sites credible? A report on a large quantitative study. SIGCHI Conference on Human Factors in Computing Systems, 61–68.

- Gui, Xinning, Yu Chen, Yubo Kou, Katie Pine, and Yunan Chen. 2017. Investigating support seeking from peers for pregnancy in online health communities. Proceedings of the ACM on Human-Computer Interaction 1: 1–19.

- Hajli, M. Nick, Julian Sims, Mauricio Featherman, and Peter E. D. Love. 2015. Credibility of information in online communities. Journal of Strategic Marketing 23: 238–53.

- Hawkins, Lily, Claire Farrow, and Jason M. Thomas. 2021. Does exposure to socially endorsed food images on social media influence food intake? Appetite 165: 105424.

- Hayes, Rebecca A., Caleb T. Carr, and D. Yvette Wohn. 2016a. It’s the audience: Differences in social support across social media. Social Media + Society 2.

- Hayes, Rebecca A., Caleb T. Carr, and D. Yvette Wohn. 2016b. One click, many meanings: Interpreting paralinguistic digital affordances in social media. Journal of Broadcasting & Electronic Media 60: 171–87.

- Heilweil, Rebecca. 2021. Facebook’s empty promise of hiding “Likes”. Vox. Available online: https://www.vox.com/recode/2021/5/26/22454869/facebook-instagram-likes-follower-count-social-media (accessed on 24 June 2021).

- Hmielowski, Jay D., Sarah Staggs, Myiah J. Hutchens, and Michael A. Beam. 2020. Talking Politics: The Relationship Between Supportive and Opposing Discussion with Partisan Media Credibility and Use. Communication Research.

- Hovland, Carl I., and Walter Weiss. 1951. The influence of source credibility on communication effectiveness. Public Opinion Quarterly 15: 635–50.

- Hovland, Carl I., Irving L. Janis, and Harold H. Kelley. 1953. Communication and persuasion: Psychological studies of opinion change. American Sociological Review 19: 355.

- Hu, Yifeng, and S. Shyam Sundar. 2010. Effects of online health sources on credibility and behavioral intentions. Communication Research 37: 105–32.

- Huh, Jina. 2015. Clinical questions in online health communities: The case of “see your doctor” Threads. Paper presented at 18th ACM Conference on Computer Supported Cooperative Work & Social Computing—CSCW’15, Vancouver, BC, Canada, March 14–18; pp. 1488–99.

- Huh, Jina, David W. Mcdonald, Andrea Hartzler, and Wanda Pratt. 2013. Patient moderator interaction in online health communities. Proceedings of American Medical Informatics Association 2013: 627–36.

- Huh, Jina, Rebecca Marmor, and Xiaoqian Jiang. 2016. Lessons learned for online health community moderator roles: A mixed-methods study of moderators resigning from WebMD communities. Journal of Medical Internet Research 18: e247.

- Jessen, Johan, and Anker Helms Jørgensen. 2012. Aggregated trustworthiness: Redefining online credibility through social validation. First Monday 17.

- Jin, Seunga Venus, Joe Phua, and Kwan Min Lee. 2015. Telling stories about breastfeeding through Facebook: The impact of user-generated content (UGC) on pro-breastfeeding attitudes. Computers in Human Behavior 46: 6–17.

- Jung, Eun Hwa, Kim Walsh-Childers, and Hyang-Sook Kim. 2016. Factors influencing the perceived credibility of diet-nutrition information web sites. Computers in Human Behavior 58: 37–47.

- Jung, Wan S., Mun-Young Chung, and E. Soo Rhee. 2018. The Effects of Attractiveness and Source Expertise on Online Health Sites. Health Communication 33: 962–71.

- Kanthawala, Shaheen, Amber Vermeesch, Barbara Given, and Jina Huh. 2016. Answers to health questions: Internet search results versus online health community responses. Journal of Medical Internet Research 18: e95.

- Kanthawala, Shaheen, Eunsin Joo, Anastasia Kononova, Wei Peng, and Shelia Cotten. 2018. Folk theorizing the quality and credibility of health apps. Mobile Media and Communication 7: 175–94.

- Lewis, Clayton. 1982. Using the “Thinking Aloud” Method in Cognitive Interface Design. IBM Research Report RC-9265. Yorktown Heights: IBM TJ Watson Research Center.

- Li, You, and Ye Wang. 2019. Brand disclosure and source partiality affect native advertising recognition and media credibility. Newspaper Research Journal 40: 299–316.

- Liang, Ting-Peng, and Efraim Turban. 2011. Introduction to the special issue social commerce: A research framework for social commerce. International Journal of Electronic Commerce 16: 5–13.

- Liu, Shan, Wenyi Xiao, Chao Fang, Xing Zhang, and Jiabao Lin. 2020. Social support, belongingness, and value co-creation behaviors in online health communities. Telematics and Informatics 50: 101398.

- Ljung, Alexander, and Eric Wahlforss. 2008. People, Profiles and Trust: On Interpersonal Trust in Web-Mediated Social Spaces. Berlin: Lulu.com.

- Luo, Chuan, Xin Robert Luo, Laurie Schatzberg, and Choon Ling Sia. 2013. Impact of informational factors on online recommendation credibility: The moderating role of source credibility. Decision Support Systems 56: 92–102.

- Martin, Justin D., and Fouad Hassan. 2020. News Media Credibility Ratings and Perceptions of Online Fake News Exposure in Five Countries. Journalism Studies 21: 2215–33.

- Metzger, Miriam J., and Andrew J. Flanagin. 2013. Credibility and trust of information in online environments: The use of cognitive heuristics. Journal of Pragmatics 59: 210–20.

- Metzger, Miriam J., Andrew J. Flanagin, Karen Eyal, Daisy R. Lemus, and Robert M. McCann. 2003. Credibility for the 21st century: Integrating perspectives on source, message, and media credibility in the contemporary media environment. Annals of the International Communication Association 27: 293–335.

- Metzger, Miriam J., Andrew J. Flanagin, and Ryan B. Medders. 2010. Social and heuristic approaches to credibility evaluation online. Journal of Communication 60: 413–39.

- Morahan-Martin, Janet M. 2004. How internet users find, evaluate, and use online health information: A cross-cultural review. Cyberpsychology & Behavior: The Impact of the Internet, Multimedia and Virtual Reality on Behavior and Society 7: 497–510.

- Nambisan, Priya. 2011. Information seeking and social support in online health communities: Impact on patients’ perceived empathy. Journal of the American Medical Informatics Association 18: 298–304.

- Nambisan, Priya, David H. Gustafson, Robert Hawkins, and Suzanne Pingree. 2016. Social support and responsiveness in online patient communities: Impact on service quality perceptions. Health Expectations 19: 87–97.

- Naylor, Rebecca Walker, Cait Poynor Lamberton, and Patricia M. West. 2012. Beyond the “like” button: The impact of mere virtual presence on brand evaluations and purchase intentions in social media settings. Journal of Marketing 76: 105–20.

- Nielsen. 2015. Global Trust in Advertising: Winning Strategies for an Evolving Media Landscape. Available online: http://www.nielsen.com/content/dam/nielsenglobal/apac/docs/reports/2015/nielsen-global-trust-in-advertising-report-september-2015.pdf (accessed on 24 June 2021).

- Ohanian, Roobina. 1990. Construction and validation of a scale to measure celebrity endorsers’ perceived expertise, trustworthiness, and attractiveness. Journal of Advertising 19: 39–52.

- Park, Deokgun, Simranjit Sachar, Nicholas Diakopoulos, and Niklas Elmqvist. 2016. Supporting comment moderators in identifying high quality online news comments. Paper presented at Conference on Human Factors in Computing Systems, San Jose, CA, USA, May 7–12; pp. 1114–25.

- Rueger, Jasmina, Wilfred Dolfsma, and Rick Aalbers. 2021. Perception of peer advice in online health communities: Access to lay expertise. Social Science & Medicine 277: 113117.

- Schäfer, Thomas, and Marcus A. Schwarz. 2019. The meaningfulness of effect sizes in psychological research: Differences between sub-disciplines and the impact of potential biases. Frontiers in Psychology 10: 813.

- Sparks, Beverley A., Helen E. Perkins, and Ralf Buckley. 2013. Online travel reviews as persuasive communication: The effects of content type, source, and certification logos on consumer behavior. Tourism Management 39: 1–9.

- Spence, Patric R., Kenneth A. Lachlan, David Westerman, and Stephen A. Spates. 2013. Where the gates matter less: Ethnicity and perceived source credibility in social media health messages. The Howard Journal of Communications 24: 1–16.

- Srivastava, Jatin. 2013. Media multitasking performance: Role of message relevance and formatting cues in online environments. Computers in Human Behavior 29: 888–95.

- Sundar, S. Shyam. 2008. The MAIN model: A heuristic approach to understanding technology effects on credibility. In Digital Media, Youth, and Credibility. Cambridge: The MIT Press, pp. 73–100.

- Sutcliffe, Alistair G., V. Gonzalez, Jens Binder, and G. Nevarez. 2011. Social mediating technologies: Social affordances and functionalities. International Journal of Human-Computer Interaction 27: 1037–65.

- Toma, Catalina Laura. 2014. Counting on friends: Cues to perceived trustworthiness in facebook profiles. Paper presented at Eighth International AAAI Conference on Weblogs and Social Media Counting, Ann Arbor, MI, USA, June 1–4; pp. 495–504.

- Tormala, Zakary L., and Richard E. Petty. 2004. Source credibility and attitude certainty: A metacognitive analysis of resistance to persuasion. Journal of Consumer Psychology 14: 427–42.

- Vennik, Femke D., Samantha A. Adams, Marjan J. Faber, and Kim Putters. 2014. Expert and experiential knowledge in the same place: Patients’ experiences with online communities connecting patients and health professionals. Patient Education and Counseling 95: 265–70.

- Weinschenk, Susan M. 2009. Neuro Web Design: What Makes Them Click? Available online: http://dl.acm.org/citation.cfm?id=1538269 (accessed on 20 December 2017).

- Wohn, D. Yvette. 2019. Volunteer moderators in twitch micro communities: How they get involved, the roles they play, and the emotional labor they experience. Paper presented at Conference on Human Factors in Computing Systems, Glasgow, UK, May 4–9; pp. 1–13.

- Wohn, D. Yvette, Caleb T. Carr, and Rebecca A. Hayes. 2016. How affective is a “like”?: The effect of paralinguistic digital affordances on perceived social support. Cyberpsychology, Behavior, and Social Networking 19: 562–66.

- Xie, Jimmy Hui, Li Miao, Pei-Jou Kuo, and Bo-Youn Lee. 2011. Consumers’ responses to ambivalent online hotel reviews: The role of perceived source credibility and pre-decisional disposition. International Journal of Hospitality Management 30: 178–83.

- Xu, Qian. 2013. Social recommendation, source credibility, and recency: Effects of news cues in a social bookmarking website. Journalism & Mass Communication Quarterly 90: 757–75.

- Yamamoto, Yusuke, and Katsumi Tanaka. 2011. Enhancing credibility judgment of web search results. Paper presented at SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, May 7–12; pp. 1235–44.

- Yang, Diyi, Robert E. Kraut, Tenbroeck Smith, Elijah Mayfield, and Dan Jurafsky. 2019. Seekers, providers, welcomers, and storytellers: Modeling social roles in online health communities. Paper presented at 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, May 4–9; pp. 1–14.

- Zhang, Xing, Shan Liu, Zhaohua Deng, and Xing Chen. 2017. Knowledge sharing motivations in online health communities: A comparative study of health professionals and normal users. Computers in Human Behavior 75: 797–810.

- Zhao, Yuehua, Jingwei Da, and Jiaqi Yan. 2021. Detecting health misinformation in online health communities: Incorporating behavioral features into machine learning based approaches. Information Processing & Management 58: 102390.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).