Abstract

This study investigates the application of several machine learning models in PyCaret to forecast monthly electricity consumption demand. We analyze historical monthly consumption readings for the Cuajone Mining Unit of the company Minera Southern Peru Copper Corporation, recorded between 2008 and 2018 in the electricity yearbooks of the decentralized office of the Ministry of Energy and Mines in the Moquegua region. We evaluated the performance of the 27 machine learning models available in PyCaret for forecasting monthly electricity consumption and selected the three most effective: Exponential Smoothing, AdaBoost with Conditional Deseasonalize and Detrending, and ETS (Error-Trend-Seasonality). These models were assessed with eight metrics: MASE, RMSSE, MAE, RMSE, MAPE, SMAPE, R2, and computation time. Among the analyzed models, Exponential Smoothing demonstrated the best performance, with a MASE of 0.8359, an MAE of 4012.24, and an RMSE of 5922.63, followed by AdaBoost with Conditional Deseasonalize and Detrending, while ETS also provided competitive results. Forecasts for 2018 were compared with actual data, confirming the high accuracy of these models. These findings provide a robust framework for energy management and planning and highlight the potential of machine learning methodologies to optimize electricity consumption forecasting.

1. Introduction

Prediction has always fascinated people: Thales of Miletus predicted the solar eclipse of 28 May 585 BC.

Since the invention of the electric light bulb in the 19th century, electric energy has been used for home lighting [1]. As a strategic and valuable resource, electric energy decisively shapes a chain of economic variables that influence the economy of a region and, therefore, of a country. For strategic companies in the country's regions, forecasting electricity consumption is especially valuable because it allows more precise and efficient management [2].

The data available for research in this area are recorded at the regional level, which makes it possible to make projections for this valuable resource, whose efficient management is becoming increasingly necessary.

Electricity consumption forecasting has been the subject of extensive research, with numerous models and methodologies developed over the years. Traditional statistical methods, such as Exponential Smoothing and ARIMA, have been widely used for their simplicity and effectiveness in capturing linear patterns in time series data [3]. However, with the advent of machine learning, more sophisticated models have emerged, offering the potential to capture complex, non-linear relationships in the data.

Numerous machine learning models have been used to predict electricity consumption in recent years, each with advantages and disadvantages. For example, decision trees and random forests have received praise for their interpretability and robustness [4], while neural networks and support vector machines are known for their ability to handle large amounts of data and capture complex patterns [5].

PyCaret, an open-source, low-code machine learning library for Python, automates machine learning workflows [6] and provides models such as Random Forest [7], Gradient Boosting [8], Light Gradient Boosting [9], Polynomial Trend Forecaster [10], Extreme Gradient Boosting [11], Theta Forecaster, ARIMA [12], Seasonal Naive Forecaster [13], Lasso Least Angular Regressor [14], Decision Tree with Conditional Deseasonalize and Detrending [15], Extra Trees Regressor [16], Gradient Boosting Regressor [17], Random Forest Regressor [18], Light Gradient Boosting Machine [19], Naive Forecaster [20], Croston [21], Auto ARIMA [22], and Bayesian Ridge [23]. Using PyCaret, this study systematically evaluates and compares 27 machine learning models for forecasting monthly electricity consumption. We assess the models with eight evaluation metrics: MASE, RMSSE, MAE, RMSE, MAPE, SMAPE, R2, and computation time (TT) [6,24,25].

PyCaret offers a wide variety of modules, such as regression, classification, anomaly detection, clustering, natural language processing, and time series forecasting, which cover many machine learning tasks. For time series, PyCaret provides 27 machine learning models; Table 1 describes each method, including its creator and the relevant bibliographic references.

Table 1.

Overview of Machine Learning Methods for Electricity Consumption Forecasting in PyCaret.

The objectives of this research are:

- To evaluate the performance of 27 machine learning models available in PyCaret for forecasting monthly electricity consumption.

- To identify the three most effective models based on a comprehensive analysis of eight evaluation metrics.

2. Materials and Methods

2.1. Study Area

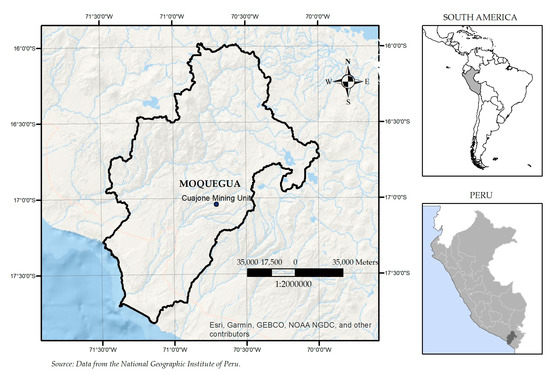

The study was carried out in the Mariscal Nieto province of the department of Moquegua, using the monthly electrical energy consumption of the Cuajone Mining Unit of the mining and metallurgical company Southern Peru Copper Corporation, as reported in the 2008–2018 electrical yearbooks of the decentralized office of the Ministry of Energy and Mines of the Moquegua Region.

Southern Copper Corporation (Grupo México) continues to add larger volumes of copper to national production, supporting Peru's path toward becoming the world's leading copper producer amid the growing demand for copper driven by the energy transition toward renewable sources [46]. The location of the mining company Southern Peru Copper Corporation can be seen in Figure 1.

Figure 1.

Location of Moquegua.

2.2. Methodology

We used Python 3.11.7 (Anaconda distribution, Anaconda, Inc., Austin, TX, USA; 64-bit Windows build, MSC v.1916, dated 15 December 2023) and PyCaret 3.3.2, an open-source library for processing and forecasting time series that is distributed through the Python Package Index (PyPI).

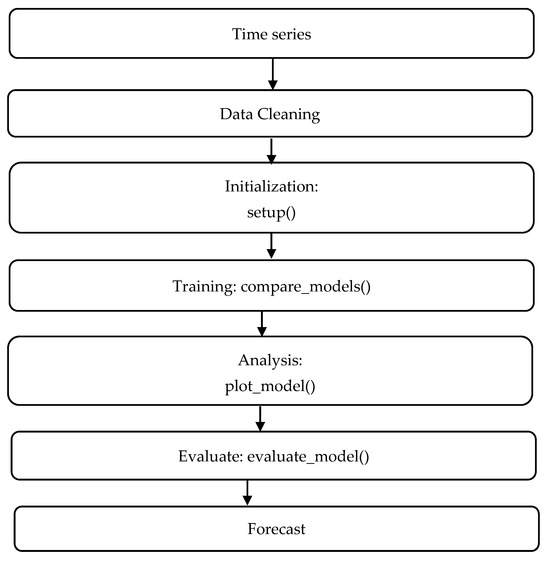

The study uses monthly electricity consumption data from the Southern Peru Copper Corporation from 2008 to 2017. The data for 2018 were used for validation and for comparison with the forecasted values. The methodology comprises the following steps, summarized as a PyCaret workflow in Figure 2:

Figure 2.

Workflow of the methodology in PyCaret.

1. Data Collection: We extracted historical monthly electricity consumption data from electrical yearbooks.

2. Data Preprocessing: We handled missing values and normalized the data. We also applied seasonal decomposition to understand underlying patterns.

3. Feature Engineering: We created lag features and rolling statistics to capture temporal dependencies.

4. Model Selection: Various models were selected, including Exponential Smoothing, Adaptive Boosting with Conditional Deseasonalization and Detrending, and ETS.

5. Model Training and Tuning: We trained the models using PyCaret and tuned the hyperparameters using GridSearchCV.

6. Evaluation Metrics: Models were evaluated based on MASE, RMSSE, MAE, RMSE, MAPE, SMAPE, R2, and computation time.
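The feature-engineering step (lag features and rolling statistics) can be illustrated with a minimal sketch in plain Python. It is not the study's code: the function name `make_lag_features`, the choice of 1- and 12-month lags, and the 3-month rolling window are hypothetical choices made for illustration, and the toy series is invented.

```python
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def make_lag_features(
    series: Sequence[float], lags: Tuple[int, ...] = (1, 12), window: int = 3
) -> List[Dict[str, float]]:
    """Turn a monthly series into rows of lagged values plus a rolling mean.

    Each row predicts series[t] ("target") only from values strictly before t,
    so no future information leaks into the features.
    """
    start = max(max(lags), window)  # first t with every lag and a full window
    rows = []
    for t in range(start, len(series)):
        row = {f"lag_{k}": series[t - k] for k in lags}
        # Mean of the `window` observations immediately preceding month t.
        row[f"rolling_mean_{window}"] = mean(series[t - window:t])
        row["target"] = series[t]
        rows.append(row)
    return rows


# Hypothetical toy series (not the study's data): 15 consecutive months.
toy = [float(v) for v in range(1, 16)]
rows = make_lag_features(toy)
print(len(rows), rows[0])
```

Rows begin only once the longest lag is available, so with a 12-month lag the first year of data serves purely as history.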

PyCaret's functions make the model selection process easier. They cover initialization, model comparison, training, model analysis, and model evaluation. The setup() function in PyCaret initializes the preprocessing and ML workflow: it receives the dataset and the target variable, and it must be called before any other function.

PyCaret trains multiple models, evaluates them with performance metrics, and ranks them from best to worst. The compare_models() function ranks the models by their metrics and also reports the time each model takes, which varies with the size and type of the data. The plot_model() function visualizes the performance of the trained machine learning models.
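The ranking step can be pictured with the following sketch. In PyCaret, `compare_models()` sorts the candidates by the metric passed as its `sort` argument and returns the top entries when `n_select` is greater than 1; the code below only mimics that idea in plain Python. The `ModelScore` class, the tie-break on training time, and all scores are hypothetical and are not the study's results.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class ModelScore:
    name: str
    mase: float        # cross-validated MASE (lower is better)
    tt_seconds: float  # training time in seconds


def rank_models(scores: Sequence[ModelScore], n_select: int = 3) -> List[ModelScore]:
    """Sort models from best to worst by MASE and keep the top n_select.

    Ties on MASE are broken by the shorter training time (an assumption
    made for illustration).
    """
    ranked = sorted(scores, key=lambda s: (s.mase, s.tt_seconds))
    return ranked[:n_select]


# Hypothetical scores for illustration only.
candidates = [
    ModelScore("model_a", mase=1.20, tt_seconds=0.5),
    ModelScore("model_b", mase=0.90, tt_seconds=2.0),
    ModelScore("model_c", mase=0.90, tt_seconds=0.1),
    ModelScore("model_d", mase=1.50, tt_seconds=0.05),
]
top3 = rank_models(candidates)
print([m.name for m in top3])
```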

2.2.1. Data

The scope of the study includes the monthly consumption readings recorded in the electricity yearbooks of the decentralized office of the Ministry of Energy and Mines in the Moquegua region between 2008 and 2018, in megawatt-hours (MWh) [47].

2.2.2. Application of Metrics

The metrics that allow the analysis and selection of the best model are:

1. Mean Absolute Scaled Error (MASE):

MASE is a measure of forecast accuracy that scales the mean absolute error of the forecast by the in-sample mean absolute error of a naïve forecast (usually the value of the previous period). It is particularly useful for comparing forecast accuracy across data of different scales.

$$\mathrm{MASE} = \frac{\frac{1}{n}\sum_{t=1}^{n} \lvert y_t - \hat{y}_t \rvert}{\frac{1}{T-1}\sum_{t=2}^{T} \lvert y_t - y_{t-1} \rvert}$$

where $y_t$ is the actual value, $\hat{y}_t$ is the forecasted value, $n$ is the number of observations, and $T$ is the number of in-sample observations used to compute the naïve scaling term.

2. Root Mean Squared Scaled Error (RMSSE):

RMSSE is similar to MASE but uses squared errors instead of absolute errors, scaled by the in-sample mean squared error of the naïve forecast. It measures the average magnitude of the forecast errors, penalizing larger errors more heavily.

3. Mean Absolute Error (MAE):

MAE measures the average magnitude of the errors in a set of forecasts without considering their direction. It is the average of the absolute differences between forecasted and actual observations over the test sample.

4. Root Mean Squared Error (RMSE):

RMSE is the square root of the average of the squared differences between forecasted and actual values. Compared with MAE, it is more sensitive to large errors.

5. Mean Absolute Percentage Error (MAPE):

MAPE expresses forecast accuracy as a percentage. It is the average of the absolute percentage errors of the forecasts. It is scale-independent, but it becomes unstable when actual values are close to zero and is undefined when an actual value equals zero.

6. Symmetric Mean Absolute Percentage Error (SMAPE):

SMAPE is an alternative to MAPE that treats over- and under-forecasts symmetrically. It is more robust than MAPE when actual values are low.

7. Coefficient of Determination (R2):

R2 indicates the proportion of the variance in the dependent variable that is predictable from the independent variables. Its maximum value is 1, and higher values indicate better model performance; it becomes negative when the forecasts fit the data worse than the mean of the actual values.

$$R^2 = 1 - \frac{\sum_{t=1}^{n} (y_t - \hat{y}_t)^2}{\sum_{t=1}^{n} (y_t - \bar{y})^2}$$

where $\bar{y}$ represents the mean of the actual values.

8. Computation Time (TT):

Computation Time (TT) is the time the model takes to train and generate forecasts. It is an important metric in practical applications where time efficiency matters.

Measurement: TT is measured in seconds (s), and we record it using system functions that track the duration of the forecasting process.
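The eight metrics can be made concrete with a plain-Python sketch of their textbook definitions. It is not PyCaret's implementation: PyCaret computes these metrics internally, and details such as whether MAPE is reported as a fraction or a percentage, or which seasonal period is used in the MASE/RMSSE naïve scale, may differ. The function names, the `sp` (naïve lag) parameter, the `timed` helper, and the toy numbers are hypothetical.

```python
import math
import time
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")


def _pairs(actual: Sequence[float], forecast: Sequence[float]):
    if len(actual) != len(forecast) or len(actual) == 0:
        raise ValueError("actual and forecast must be non-empty and of equal length")
    return list(zip(actual, forecast))


def mae(actual, forecast):
    pairs = _pairs(actual, forecast)
    return sum(abs(a - f) for a, f in pairs) / len(pairs)


def rmse(actual, forecast):
    pairs = _pairs(actual, forecast)
    return math.sqrt(sum((a - f) ** 2 for a, f in pairs) / len(pairs))


def mape(actual, forecast):
    # Returned as a fraction (multiply by 100 for %); fails if an actual value is 0.
    pairs = _pairs(actual, forecast)
    return sum(abs((a - f) / a) for a, f in pairs) / len(pairs)


def smape(actual, forecast):
    # Absolute error divided by the mean of |actual| and |forecast|.
    pairs = _pairs(actual, forecast)
    return sum(2 * abs(a - f) / (abs(a) + abs(f)) for a, f in pairs) / len(pairs)


def _naive_diffs(train: Sequence[float], sp: int = 1):
    # In-sample errors of the naive forecast y_t ~ y_{t-sp}.
    return [train[t] - train[t - sp] for t in range(sp, len(train))]


def mase(actual, forecast, train, sp=1):
    diffs = _naive_diffs(train, sp)
    return mae(actual, forecast) / (sum(abs(d) for d in diffs) / len(diffs))


def rmsse(actual, forecast, train, sp=1):
    diffs = _naive_diffs(train, sp)
    return rmse(actual, forecast) / math.sqrt(sum(d * d for d in diffs) / len(diffs))


def r2(actual, forecast):
    pairs = _pairs(actual, forecast)
    mean = sum(actual) / len(actual)
    ss_res = sum((a - f) ** 2 for a, f in pairs)
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def timed(fn: Callable[[], T]) -> Tuple[T, float]:
    """Run fn() and return (result, elapsed seconds), a stand-in for TT."""
    start = time.perf_counter()
    result = fn()
    return result, time.perf_counter() - start


# Hypothetical toy data. In practice, timed() would wrap model fitting and
# forecasting; here it only wraps the metric computation for brevity.
train = [8.0, 9.0, 10.0, 11.0]    # in-sample history
actual = [10.0, 12.0, 14.0]       # held-out observations
forecast = [11.0, 12.0, 13.0]     # model forecasts
scores, tt = timed(lambda: {
    "MASE": mase(actual, forecast, train),
    "RMSSE": rmsse(actual, forecast, train),
    "MAE": mae(actual, forecast),
    "RMSE": rmse(actual, forecast),
    "MAPE": mape(actual, forecast),
    "SMAPE": smape(actual, forecast),
    "R2": r2(actual, forecast),
})
print(scores, f"TT={tt:.6f}s")
```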

3. Results and Discussion

3.1. Data Cleaning and Initialization

Data cleaning and imputation were automatic and fast for the entire series. For setup(), the following settings were used: the target is consumption; the dataset contains 132 monthly observations, of which 120 (2008–2017) are used for training and the last 12 are held out for testing, i.e., for validating the forecast for 2018; cross-validation uses three folds (k = 3); and all other options keep the standard PyCaret configuration.
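The split described above can be sketched in plain Python. PyCaret's time-series `setup()` takes the forecast horizon and the number of folds as `fh` and `fold`; the layout below assumes an expanding-window scheme in which three consecutive 12-month validation windows sit at the end of the 120 training months, which may differ from PyCaret's exact fold placement. The function name `expanding_window_folds` is hypothetical.

```python
from typing import List, Tuple

N_TOTAL, FH, N_FOLDS = 132, 12, 3  # 11 years of months, 12-month horizon, 3 folds

# Chronological hold-out: the last FH months (2018) are never seen in training.
train_idx = range(0, N_TOTAL - FH)
test_idx = range(N_TOTAL - FH, N_TOTAL)


def expanding_window_folds(n_train: int, fh: int, n_folds: int) -> List[Tuple[range, range]]:
    """Each fold trains on all months before its cutoff and validates on the next fh."""
    folds = []
    for k in range(n_folds, 0, -1):
        cutoff = n_train - k * fh
        if cutoff <= 0:
            raise ValueError("not enough training observations for the requested folds")
        folds.append((range(0, cutoff), range(cutoff, cutoff + fh)))
    return folds


folds = expanding_window_folds(len(train_idx), FH, N_FOLDS)
for i, (tr, va) in enumerate(folds, start=1):
    print(f"fold {i}: train months 1-{len(tr)}, validate months {va.start + 1}-{va.stop}")
```

Unlike shuffled k-fold, every validation window lies after its training window, so no fold uses future months to predict the past.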

3.2. Model Comparison

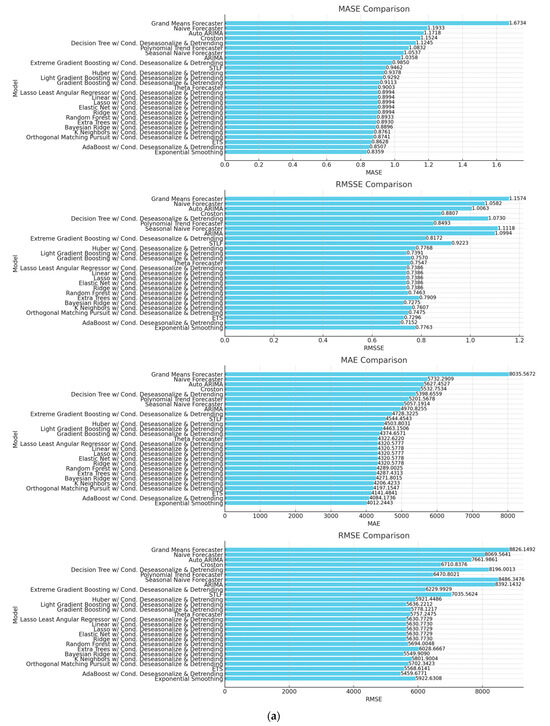

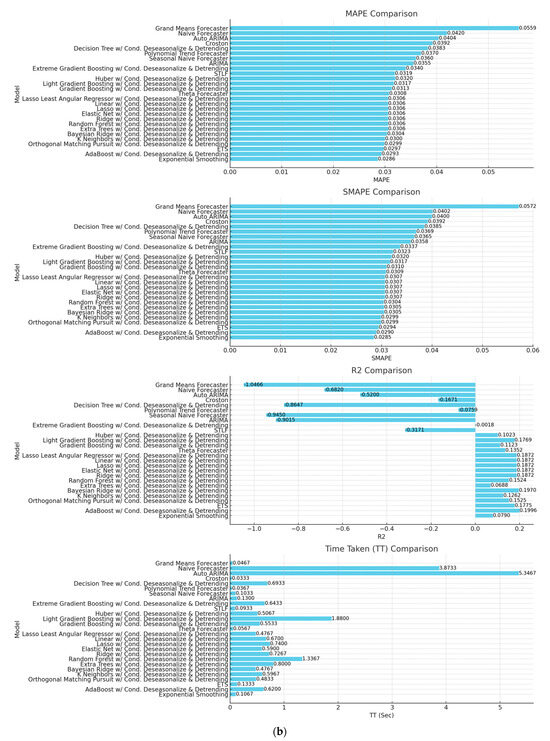

PyCaret selects the best model with the compare_models() function, which reports all eight metrics for every candidate model. Figure 3a,b compares the performance metrics of the different models and summarizes the best-performing models based on the combined metrics.

Figure 3.

(a) Comparison of eight metrics. (b) Comparison of eight metrics.

The bar charts above compare the performance of the different models on each metric. The key observations are summarized below.

MASE and RMSSE:

- Top models: Exponential Smoothing, AdaBoost with Conditional Deseasonalize and Detrending, ETS.
- Observation: Exponential Smoothing shows the lowest MASE and RMSSE values, indicating that it performs well on these error metrics.

MAE and RMSE:

- Top models: Exponential Smoothing, AdaBoost with Conditional Deseasonalize and Detrending, ETS.
- Observation: Exponential Smoothing has the lowest MAE and RMSE, making it a strong candidate for minimizing absolute and squared errors.

MAPE and SMAPE:

- Top models: Exponential Smoothing, AdaBoost with Conditional Deseasonalize and Detrending, ETS.
- Observation: These models consistently show lower MAPE and SMAPE values, indicating better performance on percentage error metrics.

R2:

- Top models: AdaBoost with Conditional Deseasonalize and Detrending, ETS.
- Observation: AdaBoost with Conditional Deseasonalize and Detrending shows the highest R2 value, indicating that it explains the most variance in the data.

TT (s):

- Top models (least time): Exponential Smoothing, ETS.
- Observation: Exponential Smoothing and ETS are among the fastest models, making them efficient in terms of computation time.

Recommendations:

- Best overall model: Exponential Smoothing. Reason: it performs consistently well across all error metrics and has a low computation time.
- Runner-up models:
  - AdaBoost with Conditional Deseasonalize and Detrending: shows a high R2 and good performance across the other metrics.
  - ETS: offers a good balance between performance and computation time.

These models are recommended for forecasting monthly electricity consumption demand because of their strong performance across multiple metrics and their computational efficiency.

3.3. Model Creation and Training

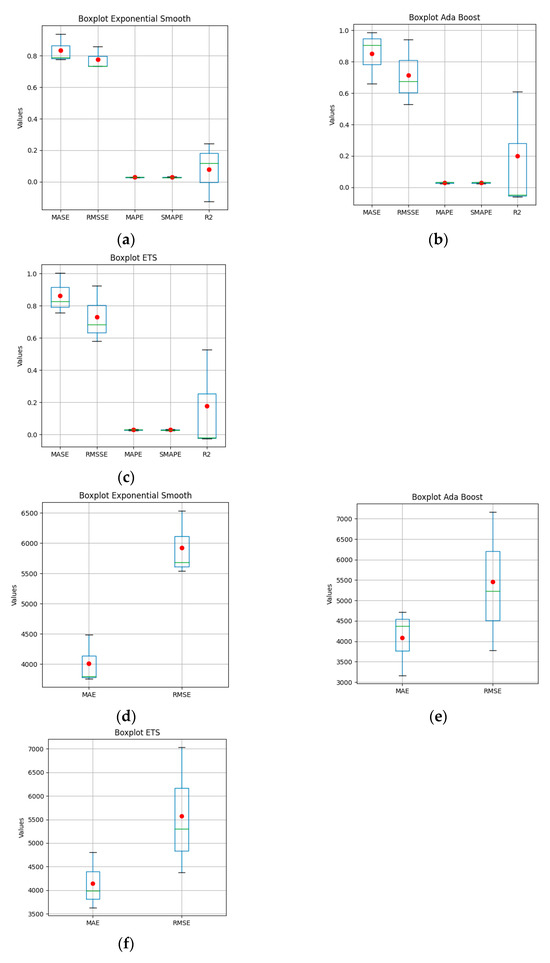

When the models were created, their hyperparameters were tuned by cross-validation with three folds (k = 3). The results for the three models are shown in Table 2 and Figure 4.
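Tuning by cross-validation can be sketched as a grid search that scores each candidate hyperparameter on time-ordered validation folds and keeps the best. To keep the mechanics visible, this sketch swaps in simple exponential smoothing with a single smoothing parameter α; PyCaret's Exponential Smoothing model and its `tune_model()` search can involve more settings (such as trend and seasonal components). The function names, the α grid, and the toy series are hypothetical.

```python
from typing import List, Sequence, Tuple


def ses_forecast(history: Sequence[float], alpha: float, horizon: int) -> List[float]:
    """Simple exponential smoothing: flat forecast equal to the last smoothed level."""
    level = history[0]
    for y in history[1:]:
        level = alpha * y + (1 - alpha) * level
    return [level] * horizon


def tune_alpha(
    series: Sequence[float], fh: int, n_folds: int, grid: Sequence[float]
) -> Tuple[float, float]:
    """Grid-search alpha by the mean MAE over expanding-window validation folds."""
    best_alpha, best_score = grid[0], float("inf")
    for alpha in grid:
        fold_maes = []
        for k in range(n_folds, 0, -1):
            cutoff = len(series) - k * fh  # train on [0, cutoff), validate the next fh
            if cutoff < 1:
                raise ValueError("series too short for the requested folds")
            forecast = ses_forecast(series[:cutoff], alpha, fh)
            actual = series[cutoff:cutoff + fh]
            fold_maes.append(sum(abs(a - f) for a, f in zip(actual, forecast)) / fh)
        score = sum(fold_maes) / n_folds
        if score < best_score:  # strict comparison: earlier grid values win ties
            best_alpha, best_score = alpha, score
    return best_alpha, best_score


# Hypothetical toy series with a level shift near the end of the training window.
toy = [0.0] * 20 + [10.0] * 16
best_alpha, best_score = tune_alpha(toy, fh=12, n_folds=1, grid=[0.1, 0.5, 0.9])
print(best_alpha, best_score)
```

A large α reacts quickly to the level shift, so it yields the lowest validation error on this toy series.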

Table 2.

Cross-validation for the three best-performing models.

Figure 4.

Cross-validation box-and-whisker plots of the three best models for the metrics MASE, RMSSE, MAPE, SMAPE, and R2, with values between −0.1246 and 1.00481 (a–c), and for the metrics MAE and RMSE, with values between 3162.6573 and 7167.3498 (d–f).

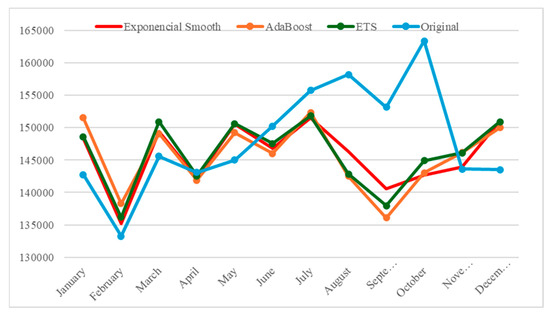

3.4. Model Comparison

Table 3.

Actual and forecast readings of electricity consumption demand for 2018.

Figure 5.

Actual and forecast readings of electricity consumption demand for 2018.

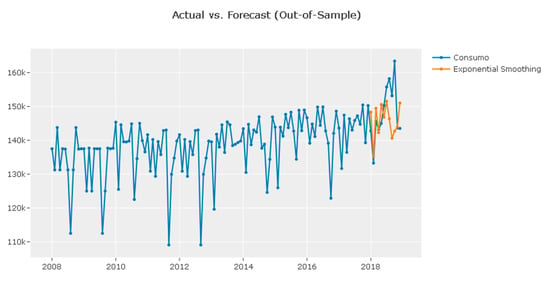

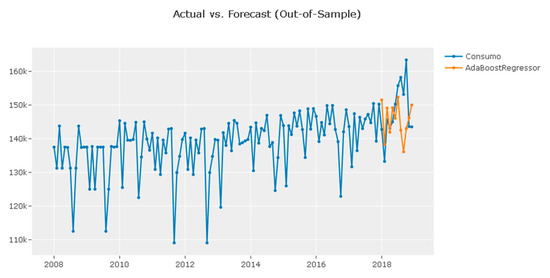

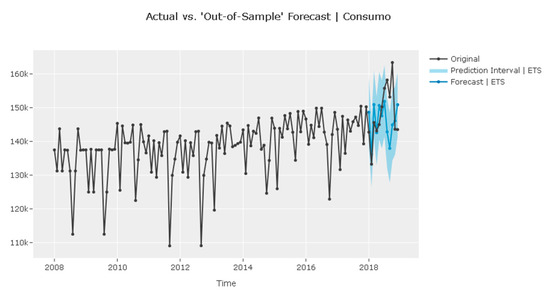

3.5. Forecast Validation

Table 4 and Figures 6–8 compare the actual and forecast monthly readings for 2018 using the evaluation metrics.

Table 4.

Metrics of the forecasting readings.

Figure 6.

Dataset and its forecast, Exponential Smoothing.

Figure 7.

Dataset and its forecast, AdaBoost Regressor.

Figure 8.

Dataset and its forecast, ETS.

Table 4 and Figure 6 show the electricity consumption prediction metrics for the year 2018. The Exponential Smoothing model has the lowest values for all evaluation metrics (MASE, RMSSE, MAE, RMSE, MAPE, SMAPE) and the least negative R2. Therefore, it is the best-performing model among those listed.
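A negative R2 on the 2018 test window has a specific meaning: on those 12 months, R2 compares the forecast with the mean of the same months, so a forecast that tracks the month-to-month values less closely than that mean scores below zero. The sketch below shows this with hypothetical values (not the study's data); `r2` follows the formula defined in Section 2.2.2.

```python
def r2(actual, forecast):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - f) ** 2 for a, f in zip(actual, forecast))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


actual = [100.0, 110.0, 90.0, 100.0]  # hypothetical test-period values (mean = 100)
flat_at_mean = [100.0] * 4            # forecasting the test mean gives R2 = 0
flat_offset = [105.0] * 4             # a flat forecast 5 units off gives R2 < 0
print(r2(actual, flat_at_mean), r2(actual, flat_offset))
```

Here the offset forecast has a residual sum of squares of 300 against a total sum of squares of 200, so R2 = 1 − 300/200 = −0.5, even though its MAE (7.5) is small relative to the level of the series.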

4. Conclusions

This study demonstrates that PyCaret's machine learning models, particularly Exponential Smoothing and AdaBoost with conditional deseasonalization and detrending, can effectively forecast monthly electricity consumption in industrial environments. These models provide accurate predictions and can be critical for energy planning and management. Future research should explore integrating additional external factors and advanced deep learning models to improve forecast accuracy.

Author Contributions

A.C.F.Q. and N.C.L.C.: software, formal analysis, writing—original draft, visualization. J.O.Q.Q.: conceptualization, methodology, resources, writing—review and editing. O.C.T.: conceptualization, methodology, resources, writing—review and editing, supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chong, M.; Aguilar, R. Proyección de Series de Tiempo para el Consumo de la Energía Eléctrica a Clientes Residenciales en Ecuador. Rev. Tecnológica ESPOL-RTE 2016, 29, 56–76.

- Mariño, M.D.; Arango, A.; Lotero, L.; Jimenez, M. Modelos de series temporales para pronóstico de la demanda eléctrica del sector de explotación de minas y canteras en Colombia. Rev. EIA 2021, 18, 35007.

- Hyndman, R.J.; Khandakar, Y. Automatic Time Series Forecasting: The forecast Package for R. J. Stat. Softw. 2008, 27, 1–22.

- Manzella, F.; Pagliarini, G.; Sciavicco, G.; Stan, I.E. The voice of COVID-19: Breath and cough recording classification with temporal decision trees and random forests. Artif. Intell. Med. 2023, 137, 102486.

- Ersin, M.; Emre, N.; Eczacioglu, N.; Eker, E.; Yücel, K.; Bekir, H. Enhancing microalgae classification accuracy in marine ecosystems through convolutional neural networks and support vector machines. Mar. Pollut. Bull. 2024, 205, 116616.

- Westergaard, G.; Erden, U.; Mateo, O.A.; Lampo, S.M.; Akinci, T.C.; Topsakal, O. Time Series Forecasting Utilizing Automated Machine Learning (AutoML): A Comparative Analysis Study on Diverse Datasets. Information 2024, 15, 39.

- Arnaut, F.; Kolarski, A.; Srećković, V.A. Machine Learning Classification Workflow and Datasets for Ionospheric VLF Data Exclusion. Data 2024, 9, 17.

- Kilic, K.; Ikeda, H.; Adachi, T.; Kawamura, Y. Soft ground tunnel lithology classification using clustering-guided light gradient boosting machine. J. Rock Mech. Geotech. Eng. 2023, 15, 2857–2867.

- Jose, R.; Syed, F.; Thomas, A.; Toma, M. Cardiovascular Health Management in Diabetic Patients with Machine-Learning-Driven Predictions and Interventions. Appl. Sci. 2024, 14, 2132.

- Effrosynidis, D.; Spiliotis, E.; Sylaios, G.; Arampatzis, A. Time series and regression methods for univariate environmental forecasting: An empirical evaluation. Sci. Total Environ. 2023, 875, 162580.

- Malounas, I.; Lentzou, D.; Xanthopoulos, G.; Fountas, S. Testing the suitability of automated machine learning, hyperspectral imaging and CIELAB color space for proximal in situ fertilization level classification. Smart Agric. Technol. 2024, 8, 100437.

- Gupta, R.; Yadav, A.K.; Jha, S.K.; Pathak, P.K. Long term estimation of global horizontal irradiance using machine learning algorithms. Optik 2023, 283, 170873.

- Arunraj, N.S.; Ahrens, D. A hybrid seasonal autoregressive integrated moving average and quantile regression for daily food sales forecasting. Int. J. Prod. Econ. 2015, 170, 321–335.

- Packwood, D.; Nguyen, L.T.H.; Cesana, P.; Zhang, G.; Staykov, A.; Fukumoto, Y.; Nguyen, D.H. Machine Learning in Materials Chemistry: An Invitation. Mach. Learn. Appl. 2022, 8, 100265.

- Moreno, S.R.; Seman, L.O.; Stefenon, S.F.; dos Santos Coelho, L.; Mariani, V.C. Enhancing wind speed forecasting through synergy of machine learning, singular spectral analysis, and variational mode decomposition. Energy 2024, 292, 130493.

- Slowik, A.; Moldovan, D. Multi-Objective Plum Tree Algorithm and Machine Learning for Heating and Cooling Load Prediction. Energies 2024, 17, 3054.

- Abdu, D.M.; Wei, G.; Yang, W. Assessment of railway bridge pier settlement based on train acceleration response using machine learning algorithms. Structures 2023, 52, 598–608.

- Muqeet, M.; Malik, H.; Panhwar, S.; Khan, I.U.; Hussain, F.; Asghar, Z.; Khatri, Z.; Mahar, R.B. Enhanced cellulose nanofiber mechanical stability through ionic crosslinking and interpretation of adsorption data using machine learning. Int. J. Biol. Macromol. 2023, 237, 124180.

- Xin, L.; Yu, H.; Liu, S.; Ying, G.G.; Chen, C.E. POPs identification using simple low-code machine learning. Sci. Total Environ. 2024, 921, 171143.

- Lynch, C.J.; Gore, R. Application of one-, three-, and seven-day forecasts during early onset on the COVID-19 epidemic dataset using moving average, autoregressive, autoregressive moving average, autoregressive integrated moving average, and naïve forecasting methods. Data Br. 2021, 35, 106759.

- Prestwich, S.D.; Tarim, S.A.; Rossi, R. Intermittency and obsolescence: A Croston method with linear decay. Int. J. Forecast. 2021, 37, 708–715.

- Nguyen, H.V.; Naeem, M.A.; Wichitaksorn, N.; Pears, R. A smart system for short-term price prediction using time series models. Comput. Electr. Eng. 2019, 76, 339–352.

- Adam, M.L.; Moses, O.A.; Mailoa, J.P.; Hsieh, C.Y.; Yu, X.F.; Li, H.; Zhao, H. Navigating materials chemical space to discover new battery electrodes using machine learning. Energy Storage Mater. 2024, 65, 103090.

- Yang, Y.; Yang, Y. Hybrid method for short-term time series forecasting based on EEMD. IEEE Access 2020, 8, 61915–61928.

- Tolios, G. Simplifying Machine Learning with PyCaret A Low-Code Approach for Beginners and Experts! Leanpub: Victoria, BC, Canada, 2022.

- Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice; OTexts: Melbourne, Australia, 2018.

- Freund, Y.; Schapire, R.E. A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Pati, Y.C.; Rezaiifar, R.; Krishnaprasad, P.S. Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In Proceedings of the 27th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, USA, 1–3 November 1993; Volume 1, pp. 40–44.

- Fix, E.; Hodges, J.L. Discriminatory Analysis. Nonparametric Discrimination: Consistency Properties. Int. Stat. Rev./Rev. Int. Stat. 1989, 57, 238–247.

- Hoerl, A.E.; Kennard, R.W. Ridge Regression: Biased Estimation for Nonorthogonal Problems. Technometrics 1970, 12, 55–67.

- Geurts, P.; Ernst, D.; Wehenkel, L. Extremely randomized trees. Mach. Learn. 2006, 63, 3–42.

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification And Regression Trees, 1st ed.; Routledge: New York, NY, USA, 2017; ISBN 9781315139470.

- Tibshirani, R. Regression Shrinkage and Selection via the Lasso. J. R. Stat. Soc. Ser. B Methodol. 1996, 58, 267–288.

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B Stat. Methodol. 2005, 67, 301–320.

- Draper, N.R.; Smith, H. Applied Regression Analysis, 3rd ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1998.

- Efron, B.; Hastie, T.; Johnstone, I.; Tibshirani, R.; Ishwaran, H.; Knight, K.; Loubes, J.M.; Massart, P.; Madigan, D.; Ridgeway, G.; et al. Least angle regression. Ann. Stat. 2004, 32, 407–499.

- Assimakopoulos, V.; Nikolopoulos, K. The theta model: A decomposition approach to forecasting. Int. J. Forecast. 2000, 16, 521–530.

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A highly efficient gradient boosting decision tree. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Collins, J.R. Robust Estimation of a Location Parameter in the Presence of Asymmetry. Ann. Stat. 1976, 4, 68–85.

- Cleveland, R.B.; Cleveland, W.S.; McRae, J.E.; Terpenning, I. STL: A Seasonal-Trend Decomposition Procedure Based on Loess. J. Off. Stat. 1990, 6, 3–73.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Box, G. Box and Jenkins: Time Series Analysis, Forecasting and Control. In A Very British Affair; Palgrave Macmillan: London, UK, 2013; ISBN 978-1-349-35027-8.

- Croston, J.D. Forecasting and Stock Control for Intermittent Demands. Oper. Res. Q. 1972, 23, 289–303.

- Aiolfi, M.; Capistrán, C.; Timmermann, A. Forecast Combinations. In The Oxford Handbook of Economic Forecasting; Oxford Academic: Oxford, UK, 2012; p. 35. ISBN 9780199940325.

- Quinde, B. Southern: Perú puede convertirse en el primer productor mundial de cobre. Available online: https://www.rumbominero.com/peru/peru-productor-mundial-de-cobre/ (accessed on 28 September 2023).

- Tabares Muñoz, J.F.; Velásquez Galvis, C.A.; Valencia Cárdenas, M. Comparison of Statistical Forecasting Techniques for Electrical Energy Demand. Rev. Ing. Ind. 2014, 13, 19–31.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).