Abstract

We evaluated the capability of U-Net models applied to aerial orthoimagery to identify tree crown mortality in study areas in Bavaria, Germany, and investigated aspects such as model transferability. The models were trained with imagery acquired between May and September in the years 2019–2023. One goal was to differentiate between damaged crowns of deciduous, coniferous, and pine trees. The results from a validation area containing an independent dataset showed best average F1-scores of 68%, 52%, and 66% for deciduous, coniferous, and pine trees, respectively. This study highlights the potential of U-Net methods for detecting tree mortality in large areas.

1. Introduction

Due to the increased occurrence of climate-induced forest disturbances, the automatic monitoring of large forest areas has become increasingly important. In this context, optical imagery provided by satellites [1,2,3,4,5], aerial multispectral sensors, and unmanned aerial vehicles (UAVs) [6,7,8] has been extensively used in research and in operational scenarios for forest damage monitoring. In particular, aerial imagery allows information to be captured at high spatial resolution (10–60 cm) for extensive areas at moderate cost, which enables the detection of damage at the tree crown level. Using deciduous and coniferous trees as a target, several studies have successfully exploited aerial images to detect standing deadwood and tree damage [9,10,11,12,13,14,15,16,17,18,19]. However, they mostly focus on detecting damage to individual species rather than differentiating damage to different groups of tree species.

Methods used for tree damage and tree mortality detection with high-resolution optical imagery range from conventional pixel-based machine learning approaches such as Random Forest and Support Vector Machine [12,20] to more sophisticated methods such as Convolutional Neural Networks (CNNs) [16,21,22,23]. In recent years, CNNs have gained popularity in remote sensing applications due to their ability to exploit both structural patterns and spectral information to identify target objects [24,25]. In particular, standard CNN architectures such as U-Net [26] are very popular in the fields of semantic segmentation and remote sensing [27,28,29,30]. U-Net models assign a class to each image pixel, providing precise localization and delineation of the classified objects [24].

The aim of this work is to evaluate U-Net architectures for detecting advanced damage in tree crowns using aerial orthoimagery. Special emphasis is placed on differentiating damage in various tree groups such as deciduous, pine, and other conifers. Equally important is developing robust classification models that can be transferred to other datasets and that are invariant to factors such as heterogeneous illumination in the images and different phenological stages of the forest trees.

2. Materials and Methods

2.1. Study Areas

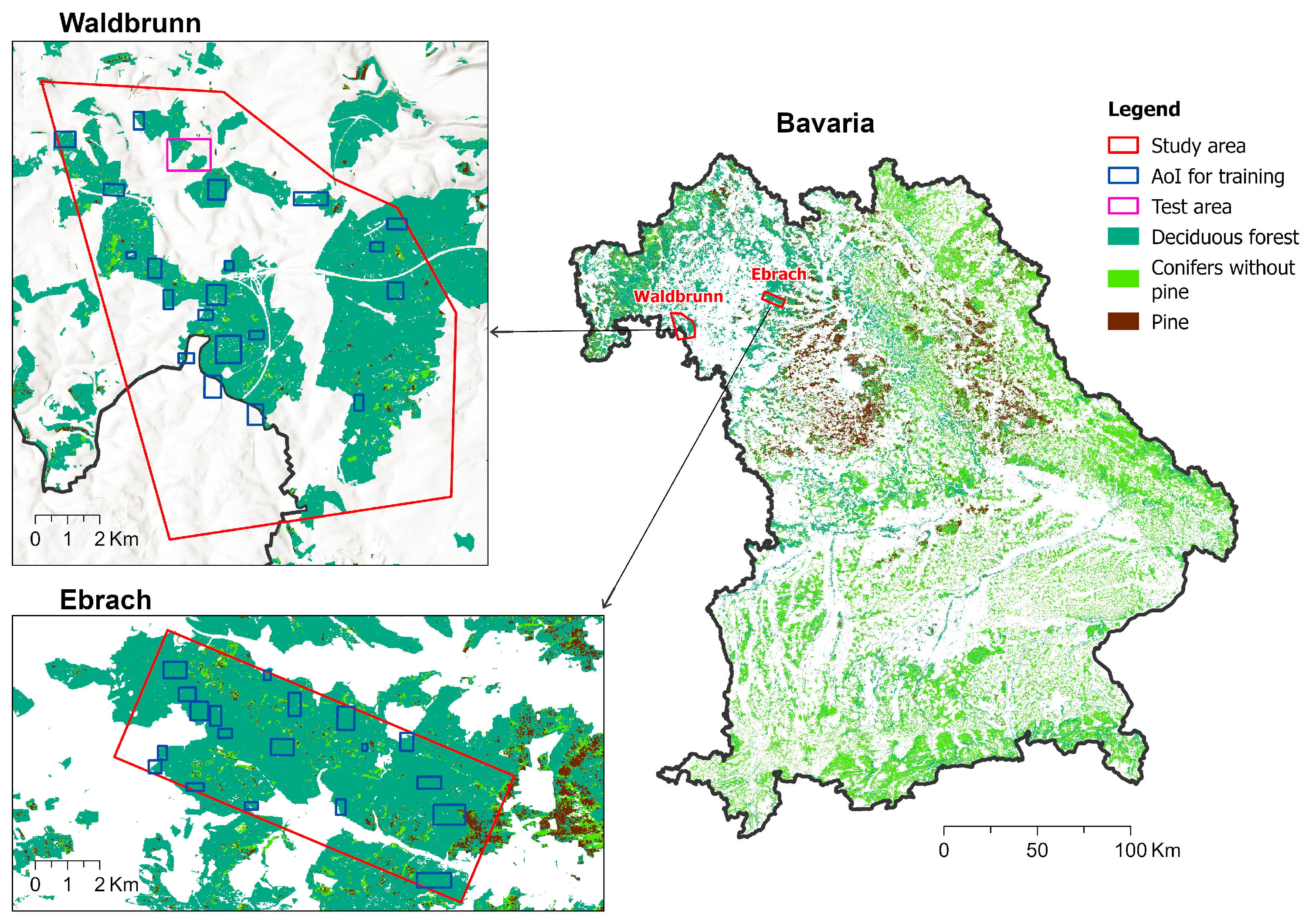

This study comprises two sites situated in the northern part of the German state of Bavaria and covers around 125 km² in Waldbrunn and 50 km² in Ebrach (Figure 1). Elevations vary from 225 m to 476 m above sea level over a hilly terrain. Both areas are covered predominantly by deciduous forests but also contain agricultural and developed areas. The results of forest inventories reveal that the species composition of tree individuals with a minimum diameter at breast height (DBH) of 10 cm in the area is 45% beech (Fagus sylvatica), 21% oak (Quercus robur), 7% spruce (Picea abies), and 7% pine (Pinus sylvestris). The effects of the severe droughts, which occurred in Central Europe in 2018 and the following years [31,32,33], are evident in the study areas through extended wilting and high mortality of beech individuals. Other tree species that were affected to a lesser extent include spruce, larch, Douglas fir, pine, and oak.

Figure 1. The study areas in Waldbrunn and Ebrach. The blue rectangles represent areas of interest (AOI) from which training data was extracted. The test area in Waldbrunn used for validation is shown in pink.

2.2. Orthomosaics and Reference Data

We used 20 cm aerial orthomosaics from five acquisition dates: June 2019, August 2019, May 2020, September 2021, and May 2023. Each orthomosaic includes the blue (B), green (G), red (R), and infrared (I) channels. Imagery from August 2019 and May 2020 was acquired from commissioned flights, while the other three datasets were obtained from the Bavarian Agency for Digitization, High-Speed Internet and Surveying (LDBV) [34].

Reference data for training and validation was generated by manually digitizing polygons encircling dead parts of tree crowns and partial dieback. We considered a crown portion to be dead if visible branches or twigs did not exhibit any foliage. All polygons (n = 28,835) were labeled according to three categories: damaged deciduous trees, damaged pines, and other damaged conifers, which comprised 83.9%, 6.4%, and 9.7% of all training polygons, respectively. The class “other damaged conifers” included primarily spruce but also less common species such as European larch and Douglas fir.

2.3. Methods

We implemented a semantic segmentation approach using modifications of the original U-Net architecture. In our case, semantic segmentation allows the generation of a seamless map of damaged crowns at the original pixel resolution (20 cm). Input training data for U-Net models takes the form of image patches and their corresponding label masks, which delineate all the classes present within the patches. We extracted non-overlapping image patches of 256 × 256 pixels from 39 areas of interest (AOI) of different sizes, distributed across forest areas exhibiting high tree mortality (Figure 1).
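The patch extraction described above can be illustrated with a minimal NumPy sketch. The function name `extract_patches`, the array layout (height, width, channels), and the choice to drop incomplete patches at the right and bottom edges are assumptions made for illustration; the study does not state how AOI edges were handled.

```python
import numpy as np

def extract_patches(image, mask, patch_size=256):
    """Cut an image (H, W, C) and its label mask (H, W) into non-overlapping
    square patches. Incomplete patches at the right and bottom edges are
    dropped (an assumption, not a detail taken from the study)."""
    rows = image.shape[0] // patch_size
    cols = image.shape[1] // patch_size
    image_patches, mask_patches = [], []
    for r in range(rows):
        for c in range(cols):
            y, x = r * patch_size, c * patch_size
            image_patches.append(image[y:y + patch_size, x:x + patch_size])
            mask_patches.append(mask[y:y + patch_size, x:x + patch_size])
    return np.stack(image_patches), np.stack(mask_patches)

# Hypothetical AOI of 600 x 800 pixels with four channels (IRGB)
# and an all-background label mask.
aoi_image = np.zeros((600, 800, 4), dtype=np.uint8)
aoi_mask = np.zeros((600, 800), dtype=np.uint8)
patches, labels = extract_patches(aoi_image, aoi_mask)
print(patches.shape, labels.shape)
```

With these hypothetical dimensions, two rows and three columns of complete patches fit into the AOI, so six image patches and six matching label masks are returned.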

The class label masks were generated in the following scenarios:

- Binary: Background and damaged trees.

- Three-Class: Background, damaged deciduous trees, and damaged coniferous trees.

- Multiclass: Similar classes as in the previous case, but the damaged conifer class was split into pine and other conifers.
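The relationship between the three labeling scenarios can be sketched as a simple remapping of a multiclass mask. The integer class codes and the function names below are hypothetical and chosen only for illustration; the study does not specify how the masks were encoded.

```python
import numpy as np

# Hypothetical class codes for the multiclass mask (not taken from the study).
BACKGROUND, DECIDUOUS, PINE, OTHER_CONIFER = 0, 1, 2, 3

def to_three_class(multiclass_mask):
    """Merge pine and other conifers into one damaged coniferous class (code 2)."""
    lookup = np.array([0, 1, 2, 2], dtype=np.uint8)
    return lookup[multiclass_mask]

def to_binary(multiclass_mask):
    """Collapse all damaged tree classes into a single damaged class (code 1)."""
    return (multiclass_mask != BACKGROUND).astype(np.uint8)

multiclass = np.array([[0, 1, 2],
                       [3, 0, 1]], dtype=np.uint8)
three_class = to_three_class(multiclass)
binary = to_binary(multiclass)
print(three_class)
print(binary)
```

Deriving the coarser masks from the most detailed one keeps the three scenarios spatially consistent, since every scenario labels exactly the same pixels as damaged.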

We trained several U-Net variants including the Attention U-Net model [35], and U-Net models incorporating backbone networks such as ResNet34, ResNet101 [36], EfficientNet-B7 [37], and InceptionV3 [38]. The models were implemented in Python version 3.9.15 using the libraries Keras version 2.9.0 [39] and TensorFlow version 2.9.1 [40]. The final training configuration comprised the Adam optimizer with a learning rate of 0.0001, a batch size of 5, a maximum of 50 training epochs, and a categorical focal Jaccard loss as the loss function. During model fitting, 80% of the data was used for training and 20% for validation.
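To make the loss function more concrete, the following NumPy sketch shows one common way of combining a categorical focal loss with a Jaccard loss as a plain sum. It is an illustration under stated assumptions, not the implementation used in the study: the function names, the focal parameters (alpha = 0.25, gamma = 2), the smoothing constant, and the equal weighting of both terms are all assumed.

```python
import numpy as np

def categorical_focal_loss(y_true, y_pred, alpha=0.25, gamma=2.0, eps=1e-7):
    """Focal loss for one-hot labels; well-classified pixels are down-weighted."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    per_class = -alpha * y_true * (1.0 - y_pred) ** gamma * np.log(y_pred)
    return per_class.sum(axis=-1).mean()  # sum over classes, mean over pixels

def jaccard_loss(y_true, y_pred, smooth=1e-5):
    """One minus the soft intersection over union, averaged over classes."""
    axes = tuple(range(y_true.ndim - 1))  # every axis except the class axis
    intersection = (y_true * y_pred).sum(axis=axes)
    union = y_true.sum(axis=axes) + y_pred.sum(axis=axes) - intersection
    iou = (intersection + smooth) / (union + smooth)
    return 1.0 - iou.mean()

def focal_jaccard_loss(y_true, y_pred):
    """Assumed combination: unweighted sum of both terms."""
    return categorical_focal_loss(y_true, y_pred) + jaccard_loss(y_true, y_pred)

# Hypothetical 2 x 2 patch with four classes, one-hot encoded.
class_ids = np.array([[0, 1], [2, 3]])
y_true = np.eye(4)[class_ids]
perfect_loss = focal_jaccard_loss(y_true, y_true)
uniform_loss = focal_jaccard_loss(y_true, np.full_like(y_true, 0.25))
print(perfect_loss, uniform_loss)
```

The focal term concentrates the gradient on hard pixels, which is useful when the background dominates, while the Jaccard term rewards overlap per class regardless of class size. A perfect prediction yields a loss close to zero, and an uninformative uniform prediction yields a clearly larger value.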

For the final classifications we used all available image channels (IRGB) because preliminary tests showed that the inclusion of the infrared channel slightly improved accuracy values. Due to the strong imbalances in the class distribution within the training data, we applied image augmentation with the albumentations package to generate more samples for underrepresented classes such as conifers. The augmentation process consisted of horizontal and vertical image flipping, image rotation, and random changes in image brightness and contrast.
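The augmentation steps listed above can be sketched as follows. This is a NumPy stand-in written for illustration and does not use the albumentations API; the function name `augment`, the restriction to rotations by multiples of 90 degrees, the probability of 0.5 for each flip, and the brightness and contrast ranges are assumptions.

```python
import numpy as np

def augment(image, mask, rng):
    """Randomly flip and rotate an image patch together with its label mask,
    then change brightness and contrast of the image only.
    Expects a square float image (H, W, C) scaled to [0, 1] and a mask (H, W)."""
    if rng.random() < 0.5:  # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:  # vertical flip
        image, mask = image[::-1], mask[::-1]
    k = int(rng.integers(0, 4))  # rotation by k * 90 degrees
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    contrast = rng.uniform(0.8, 1.2)      # assumed range
    brightness = rng.uniform(-0.1, 0.1)   # assumed range
    image = np.clip(contrast * image + brightness, 0.0, 1.0)
    return image, mask.copy()

rng = np.random.default_rng(0)
patch = rng.random((256, 256, 4))            # hypothetical IRGB patch
patch_mask = rng.integers(0, 4, (256, 256))  # hypothetical multiclass mask
aug_patch, aug_mask = augment(patch, patch_mask, rng)
print(aug_patch.shape, aug_mask.shape)
```

The geometric transforms are applied identically to the image and the mask so that labels stay aligned with the pixels they describe, whereas the radiometric changes affect the image only and leave the class labels untouched.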

To validate the models, a single test area of 1.3 km², which was not used for training, was set aside in Waldbrunn (Figure 1). This area contains numerous examples of dead trees of all assessed classes and is characterized by the presence of different land covers. The entire area was classified for all datasets used in training (August 2019, May 2020, September 2021, and May 2023) as well as for the June 2019 dataset, which was not used in training. We calculated the F1-score at pixel level for the test area to assess model performance and generalization.
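The pixel-level F1-score is the harmonic mean of precision and recall, which for a class with TP true positive, FP false positive, and FN false negative pixels equals 2·TP / (2·TP + FP + FN). A minimal sketch of the per-class computation is given below; the function name and the small example arrays are hypothetical.

```python
import numpy as np

def f1_per_class(reference, predicted, n_classes):
    """Pixel-level F1-score for each class, computed from two label maps."""
    scores = []
    for c in range(n_classes):
        tp = np.sum((reference == c) & (predicted == c))
        fp = np.sum((reference != c) & (predicted == c))
        fn = np.sum((reference == c) & (predicted != c))
        denom = 2 * tp + fp + fn
        # A class absent from both maps has no defined F1-score.
        scores.append(2 * tp / denom if denom > 0 else float("nan"))
    return scores

# Hypothetical 2 x 4 label maps with classes 0 (background), 1, and 2.
reference = np.array([[0, 0, 1, 1],
                      [0, 2, 2, 2]])
predicted = np.array([[0, 1, 1, 1],
                      [0, 2, 2, 0]])
scores = f1_per_class(reference, predicted, n_classes=3)
print(scores)
```

In this toy example each class has two correctly classified pixels; class 0 has one false positive and one false negative, while classes 1 and 2 each have a single error, giving F1-scores of about 0.67, 0.8, and 0.8.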

3. Results

Table 1, Table 2 and Table 3 summarize the F1-score values obtained for the three evaluated scenarios. In general, detection accuracy decreases when more damaged tree classes are involved in the classification. Thus, the highest detection rate of damaged trees attained 71.8% for the binary, 69% for the three-class, and 62.3% for the multiclass cases. F1-scores for the background class were always above 99%. The best results were obtained with Attention U-Net, closely followed by U-Net-ResNet34 models.

Table 1. Average F1-score (%) of binary semantic segmentation over all test areas.

Table 2. Average F1-score (%) of three-class semantic segmentation over all test areas.

Table 3. Average F1-score (%) of multiclass semantic segmentation over all test areas.

Table 4 and Table 5 show the F1-scores for the three-class and multiclass scenarios and for different datasets using Attention U-Net. Detection rates of the damaged deciduous and damaged coniferous categories varied significantly depending on the input image set. As a trend, damaged conifers exhibited slightly higher detection values than damaged deciduous trees across most of the methods evaluated. When applying the best model (Attention U-Net) to previously unseen data (June 2019), the F1-score reached 67% for damaged deciduous trees, which was in the same range as the F1 values for some training years (2020 and 2021) and even higher than the value for 2023 (55%). However, the detection of damaged conifers was less successful for the unseen June 2019 dataset, with an F1-score of 59%.

Table 4. F1-score (%) for detection of damaged deciduous and coniferous trees using Attention U-Net across different years.

Table 5. F1-score (%) for detection of damaged deciduous, damaged pine, and damaged coniferous trees using Attention U-Net across different years.

Splitting damaged conifers into pine and other conifer classes led to lower detection rates for conifers (F1 values of 40–67%) than for deciduous trees (F1 values of 57–77%), as shown in Table 5. In contrast, the Attention U-Net performed well in detecting mortality in pine, with an average F1 value of 66% (55–78%).

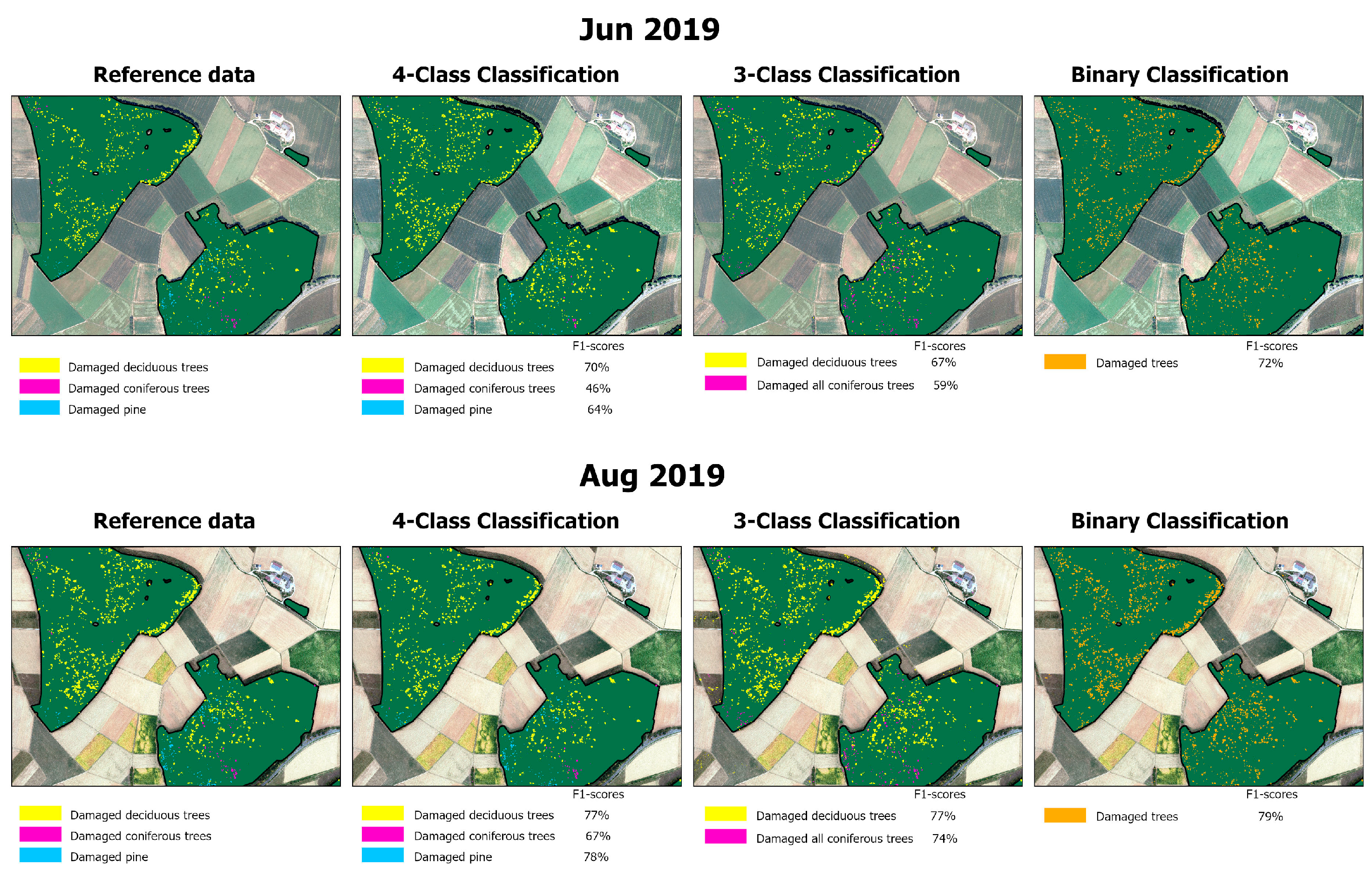

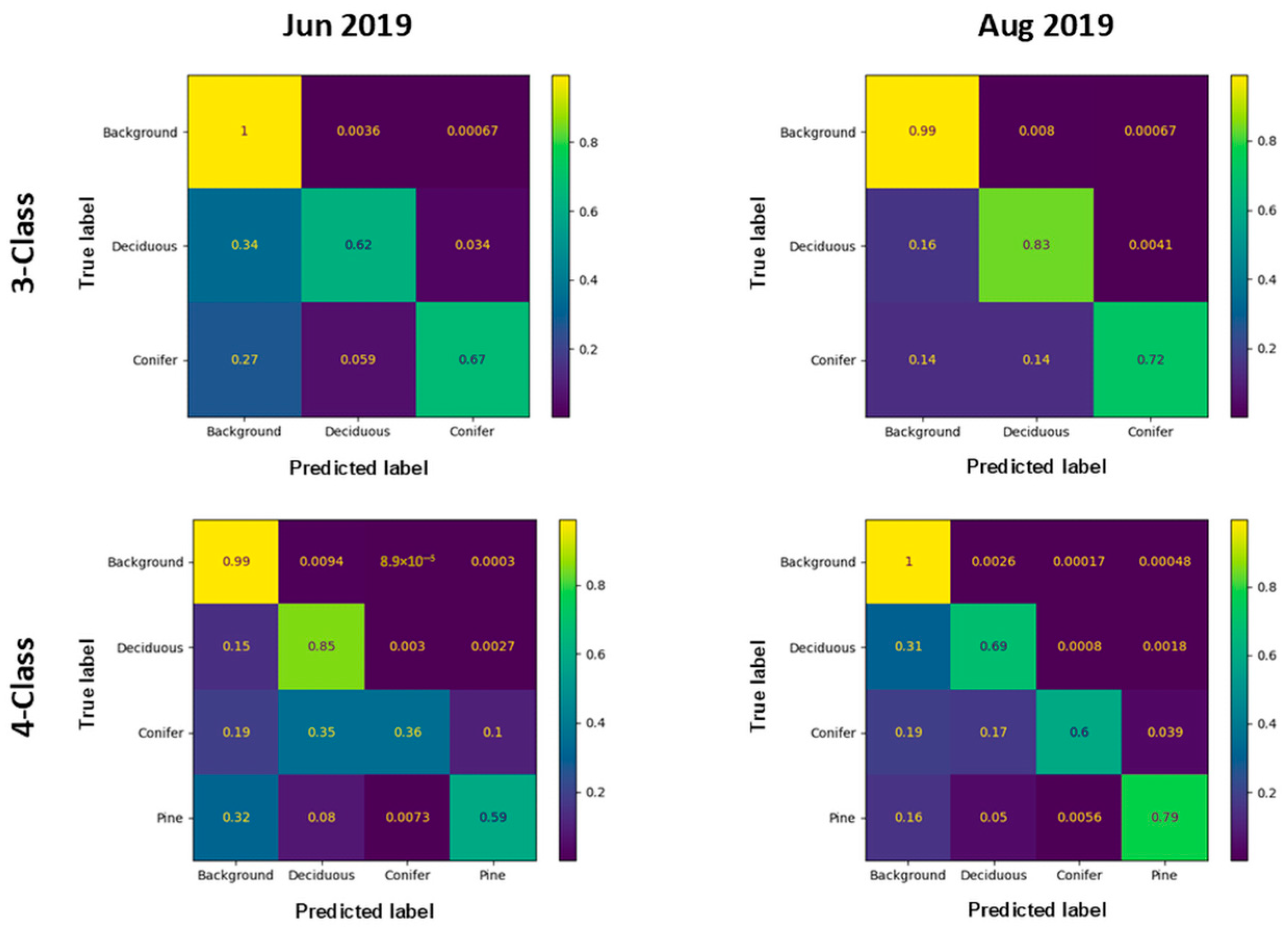

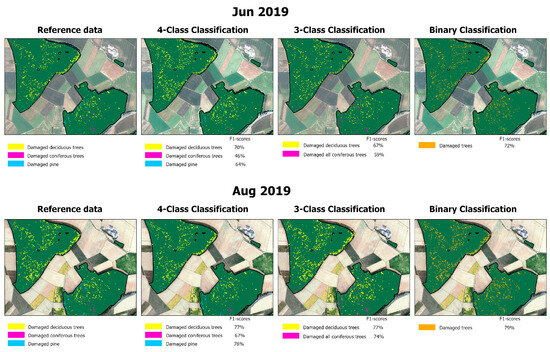

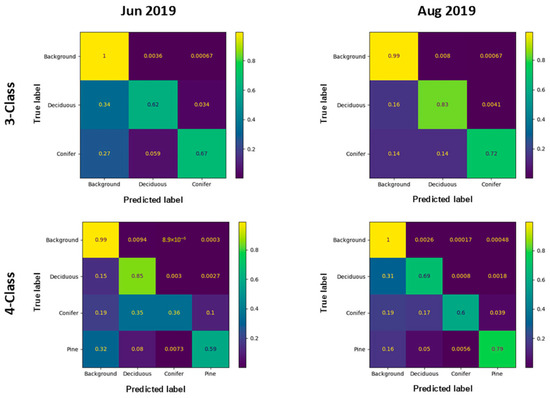

The classification of the test dataset of August 2019 (Figure 2) showed the highest F1-scores across all evaluated scenarios and datasets, with general damaged tree detection reaching 79%. Possible reasons for this are the presence of compact and well-structured damaged crowns and the excellent image quality. For comparison, the classification of the unseen test dataset corresponding to June 2019 is also shown. In this case, there is a reduction in the overall detection of damage to 72% and the uncertainty among the damaged tree classes was greater than in August 2019, resulting in much lower F1 values in both deciduous and coniferous classes. The confusion matrices for the same datasets are shown in Figure 3 and illustrate the overall lower detection rates for the evaluated classes in the June 2019 dataset.

Figure 2. Classification of test area with Attention U-Net based on datasets of June (above) and August (below) 2019.

Figure 3. Confusion matrices show the producer’s accuracy of the three-class (top row) and multiclass segmentation (bottom row) of the test area using Attention U-Net and the June 2019 (left column) and August 2019 (right column) datasets.
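Producer’s accuracy expresses, for each reference class, the share of its pixels assigned to each predicted class, so the diagonal corresponds to per-class recall. The following sketch shows how such a row-normalized confusion matrix can be derived from two label maps; the function names and the example arrays are hypothetical and do not reproduce the values in Figure 3.

```python
import numpy as np

def confusion_matrix(reference, predicted, n_classes):
    """Pixel counts with reference classes as rows and predicted classes as columns."""
    index = reference.ravel() * n_classes + predicted.ravel()
    counts = np.bincount(index, minlength=n_classes ** 2)
    return counts.reshape(n_classes, n_classes)

def producers_accuracy(matrix):
    """Normalize each row by the number of reference pixels of that class."""
    row_totals = matrix.sum(axis=1, keepdims=True)
    result = np.zeros(matrix.shape, dtype=float)
    # Rows without reference pixels are left at zero to avoid division by zero.
    return np.divide(matrix, row_totals, out=result, where=row_totals > 0)

# Hypothetical 2 x 4 label maps with classes 0 (background), 1, and 2.
reference = np.array([[0, 0, 1, 1],
                      [0, 2, 2, 2]])
predicted = np.array([[0, 1, 1, 1],
                      [0, 2, 2, 0]])
cm = confusion_matrix(reference, predicted, n_classes=3)
pa = producers_accuracy(cm)
print(cm)
print(pa.round(2))
```

Off-diagonal entries in a row show where the pixels of a reference class were lost, for example to the background or to another damage class, which is the kind of class confusion discussed for the June 2019 dataset.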

4. Discussion

Our results show the ability of U-Net models to successfully capture crown damage across orthomosaics that exhibit great heterogeneity in illumination conditions.

This research addresses the following aspects:

4.1. Damage Detection

Crown damage detection is a common research topic in remote sensing. Reported detection rates are typically high but difficult to compare due to differences in class definition and number of classes, image spatial and spectral resolution, classification methods, and evaluation metrics and methods.

In this study, the use of aerial imagery with 20 cm spatial resolution in combination with U-Net approaches not only resulted in high detection rates but also delineated damaged crown portions and entire crowns well. Detection success, with F1 values of around 70%, is in accordance with values reported in other classification studies. For example, reference [19] reports F1-scores of 68% using aerial imagery to detect tree mortality; reference [22] attained best F1 values of 67% using DeepLab v3+ and 70% using SegFormer models applied to 60 cm aerial images; and reference [10] attained a maximum F1 value of 75% using UAV images with 2 cm spatial resolution for the detection of a deadwood class along with nine classes of tree species in the green stage. Better results were obtained by [20], with F1 values of 86–93% in forests of Finland using three different years and 50 cm aerial orthophotos. Reference [17] also reached an average F1-score of 87% over 20 test datasets from the Bavarian Forest National Park, but using Mask R-CNN models.

4.2. Class Differentiation

The distinction between damaged trees belonging to deciduous and coniferous species showed promising results. When comparing the general F1-scores of these two groups (67.2% for deciduous vs. 69.2% for coniferous) using Attention U-Net, there is no significant difference, even though conifers were markedly underrepresented in the training data. In contrast, reference [19] obtained much higher detection rates of dead trees in conifers (F1 values of 76% vs. 47%) and attributed this to the predominance of conifers in the training dataset, the better-defined radial structure of conifer crowns, and their more homogeneous spectral appearance across the images.

In our study, the separate detection of damaged pine crowns worked well only in some datasets. One possible cause for this is the low representation of this class in the training data.

4.3. Model Transferability

Studies attempting model generalization are relatively scarce in the literature [19]. Most of the reported results are based on the classification of data used in training. Our study represents an attempt to test the transferability of generalized classification models, developed from various heterogeneous datasets, to a new dataset. The orthoimagery used here for training showed strong striping and mosaicking effects in many cases. Furthermore, the images were obtained in different months of the year, which adds more variability in relation to image shadow orientation and vegetation phenology.

The performance of our U-Net models on unseen test data suggests that U-Net models could be successfully transferred to other regions. However, our research was only validated in a relatively small area. In this context, examples of the successful application of CNN methods on a large scale have only recently emerged in the literature. For example, reference [19] successfully attempted to generalize EfficientUNet++ models at the country scale of Luxembourg, using official 20 cm aerial imagery from the years 2017 and 2019 and additionally predicting mortality for the years 2018 and 2020. In this case, F1-scores were slightly lower (3.2–6.4%) for those prediction years without training data. Reference [20] trained custom U-Net models for the entirety of Finland for different years and achieved a relatively constant performance. Reference [18] conducted a deep-learning-based multi-year mapping of dead trees for California based on aerial imagery from the year 2020. Reference [21] proposed a global, multiscale transformer-based segmentation model for mapping both dead tree crowns and partial canopy dieback with F1-scores between 34% and 57% across biomes. These results are impressive given the variability of image resolutions (1–28 cm) and image sources used as well as the number of biomes and forest types analyzed.

The observed discrepancies in detection rates between the different datasets can be attributed to several factors. They include aspects related to image characteristics such as image quality, orthomosaic generation effects, and artifacts [21,41], and biological aspects such as damage extension, crown compactness, and the development of secondary crowns in some species (e.g., beech). In this sense, references [19,41] underline aspects such as image quality, poor lighting conditions, and the yearly variation in the shadows cast by trees as limiting factors for the generalization of classification models.

In this study we used classical U-Net methods because they have a relatively low computational complexity, high transferability, and show similar results in some classification tasks in comparison to other DL architectures [7,17]. Although U-Net architecture is still widely used in semantic segmentation studies, architectures like SegFormer have gained popularity in recent years due to their superior performance compared with conventional CNN architectures. For example, reference [19] described a better boundary definition and more accurate segmentation of damaged trees among its advantages. Future work should provide better insights into whether modern architectures can provide higher performance and improved transferability of the classification models to new datasets, as suggested by several studies [19,41,42].

5. Conclusions

This paper presents the results of the semantic segmentation of high-resolution aerial imagery using U-Net models to detect standing damaged trees. Our generalized models showed robust performance across all image datasets, which is remarkable considering that the images were acquired at different times of the year across different years. Moreover, the results are promising in the sense that U-Net models can be applied to new aerial orthoimages with little or no performance loss. Despite the potential observed in this study, further investigation is needed into the transferability of these models to large areas at the state or national level and using other sources of aerial imagery.

The attempt to distinguish between multiple types of damaged trees was successful in some datasets, showing the potential of this technique to capture the distinct damaged crown structures exhibited by tree family groups. The impact of factors such as crown compactness and image quality and resolution on classification results requires further analysis.

Author Contributions

Conceptualization, J.F.G. and A.W.; methodology, J.F.G.; software, J.F.G.; validation, J.F.G.; formal analysis, J.F.G.; investigation, J.F.G. and A.W.; resources, A.W.; data curation, J.F.G.; writing—original draft preparation, J.F.G.; writing—review and editing, A.W.; visualization, J.F.G.; supervision, A.W.; project administration, A.W.; funding acquisition, A.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the German Federal Ministry of Food and Agriculture (BMEL) and the German Federal Ministry of Environment, Nature Conservation, Nuclear Safety and Consumer Protection (BMUV) based on a resolution of the German Bundestag [number 2220WK81C4].

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding authors as they are part of an ongoing research project and restrictions apply.

Acknowledgments

The research is part of the collaborative project ForstEO, “Use of earth observation to record climate-induced damage to forests in Germany”. We thank our colleagues Yan Zhang and Vincent Richter for their support in digitizing the training data, and Daniel Morovitz for his help in editing this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| AOI | Area of Interest |
| DBH | Diameter at Breast Height |
| CNN | Convolutional Neural Network |
| IRGB | Infrared, Red, Green, Blue |
| R-CNN | Region-based Convolutional Neural Network |
| UAV | Unmanned Aerial Vehicle |

References

- Waser, L.; Jütte, K.; Küchler, M.; Stampfer, T. Evaluating the potential of WorldView-2 data to classify tree species and different levels of ash mortality. Remote Sens. 2014, 6, 4515–4545.

- Abdullah, H.; Skidmore, A.K.; Darvishzadeh, R.; Heurich, M. Sentinel-2 accurately maps green-attack stage of European spruce bark beetle (Ips typographus, L.) compared with Landsat-8. Remote Sens. Ecol. Conserv. 2019, 5, 87–106.

- Zhan, Z.; Yu, L.; Li, Z.; Ren, L.; Gao, B.; Wang, L.; Youqing, L. Combining GF-2 and Sentinel-2 images to detect tree mortality caused by red turpentine beetle during the early outbreak stage in North China. Forests 2020, 11, 172.

- Thonfeld, F.; Gessner, U.; Holzwarth, S.; Kriese, J.; Da Ponte, E.; Huth, J.; Kuenzer, C. A first assessment of canopy cover loss in Germany’s forests after the 2018–2020 drought years. Remote Sens. 2022, 14, 562.

- Reinosch, E.; Backa, J.; Adler, P.; Deutscher, J.; Eisnecker, P.; Hoffmann, K.; Langner, N.; Puhm, M.; Rüetschi, M.; Straub, C.; et al. Detailed validation of large-scale Sentinel-2-based forest disturbance maps across Germany. For. Int. J. For. Res. 2025, 98, 437–453.

- Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV-Based photogrammetry and hyperspectral imaging for mapping bark beetle damage at tree-level. Remote Sens. 2015, 7, 15467–15493.

- Schiefer, F.; Kattenborn, T.; Frick, A.; Frey, J.; Schall, P.; Koch, B.; Schmidtlein, S. Mapping forest tree species in high resolution UAV-based RGB-imagery by means of convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 170, 205–215.

- Ecke, S.; Stehr, F.; Frey, J.; Tiede, D.; Dempewolf, J.; Klemmt, H.-J.; Endres, E.; Seifert, T. Towards operational UAV-based forest health monitoring: Species identification and crown condition assessment by means of deep learning. Comput. Electron. Agric. 2024, 219, 108785.

- Meddens, A.J.H.; Hicke, J.A.; Vierling, L.A. Evaluating the potential of multispectral imagery to map multiple stages of tree mortality. Remote Sens. Environ. 2011, 115, 1632–1642.

- Polewski, P.; Yao, W.; Heurich, M.; Krzystek, P.; Stilla, U. Detection of single standing dead trees from aerial color infrared imagery by segmentation with shape and intensity priors. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 181–188.

- Sylvain, J.-D.; Drolet, G.; Brown, N. Mapping dead forest cover using a deep convolutional neural network and digital aerial photography. ISPRS J. Photogramm. Remote Sens. 2019, 156, 14–26.

- Krzystek, P.; Serebryanyk, A.; Schnörr, C.; Červenka, J.; Heurich, M. Large-scale mapping of tree species and dead trees in Šumava national park and Bavarian Forest national park using lidar and multispectral imagery. Remote Sens. 2020, 12, 661.

- Chiang, C.-Y.; Barnes, C.; Angelov, P.; Jiang, R. Deep learning-based automated forest health diagnosis from aerial images. IEEE Access 2020, 8, 144064–144076.

- Sani-Mohammed, A.; Yao, W.; Heurich, M. Instance segmentation of standing dead trees in dense forest from aerial imagery using deep learning. ISPRS Open J. Photogramm. Remote Sens. 2022, 6, 100024.

- Cheng, Y.; Oehmcke, S.; Brandt, M.; Rosenthal, L.; Das, A.; Vrieling, A.; Saatchi, S.; Wagner, F.; Mugabowindekwe, M.; Verbrugge, W.; et al. Scattered tree death contributes to substantial forest loss in California. Nat. Commun. 2024, 15, 641.

- Schwarz, S.; Werner, C.; Fassnacht, F.E.; Ruehr, N.K. Forest canopy mortality during the 2018–2020 summer drought years in Central Europe: The application of a deep learning approach on aerial images across Luxembourg. For. Int. J. For. Res. 2024, 97, 376–387.

- Junttila, S.; Blomqvist, M.; Laukkanen, V.; Heinaro, E.; Polvivaara, A.; O’Sullivan, H.; Yrttimaa, T.; Vastaranta, M.; Peltola, H. Significant increase in forest canopy mortality in boreal forests in Southeast Finland. For. Ecol. Manag. 2024, 565, 122020.

- Möhring, J.; Kattenborn, T.; Mahecha, M.D.; Cheng, Y.; Schwenke, M.B.; Cloutier, M.; Denter, M.; Frey, J.; Gassilloud, M.; Göritz, A.; et al. Global, multi-scale standing deadwood segmentation in centimeter-scale aerial images. TechRxiv 2025.

- Joshi, D.; Witharana, C. Vision transformer-based unhealthy tree crown detection in mixed northeastern US forests and evaluation of annotation uncertainty. Remote Sens. 2025, 17, 1066.

- Immitzer, M.; Atzberger, C. Early detection of bark beetle infestation in Norway spruce (Picea abies, L.) using WorldView-2 data. Photogramm. Fernerkund. Geoinf. 2014, 5, 351–367.

- Safonova, A.; Tabik, S.; Alcaraz-Segura, D.; Rubtsov, A.; Maglinets, Y.; Herrera, F. Detection of fir trees (Abies sibirica) damaged by the bark beetle in Unmanned Aerial Vehicle images with deep learning. Remote Sens. 2019, 11, 643.

- Schiefer, F.; Schmidtlein, S.; Frick, A.; Frey, J.; Klinke, R.; Zielewska-Büttner, K.; Junttila, S.; Uhl, A.; Kattenborn, T. UAV-based reference data for the prediction of fractional cover of standing deadwood from Sentinel time series. ISPRS Open J. Photogramm. Remote Sens. 2023, 8, 100034.

- Anwander, J.; Brandmeier, M.; Paczkowski, S.; Neubert, T.; Paczkowska, M. Evaluating different deep learning approaches for tree health classification using high-resolution multispectral UAV data in the Black Forest, Harz region, and Göttinger Forest. Remote Sens. 2024, 16, 561.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Dimitrovski, I.; Kitanovski, I.; Kocev, D.; Simidjievski, N. Current trends in deep learning for Earth Observation: An open-source benchmark arena for image classification. ISPRS J. Photogramm. Remote Sens. 2023, 197, 18–35.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Deigele, W.; Brandmeier, M.; Straub, C. A hierarchical deep-learning approach for rapid windthrow detection on Planetscope and high-resolution aerial image data. Remote Sens. 2020, 12, 2121.

- Kislov, D.E.; Korznikov, K.A.; Altman, J.; Vozmishcheva, A.S.; Krestov, P.V. Extending deep learning approaches for forest disturbance segmentation on very high-resolution satellite images. Remote Sens. Ecol. Conserv. 2021, 7, 355–368.

- Minařík, R.; Langhammer, J.; Lendzioch, T. Detection of bark beetle disturbance at tree level using UAS multispectral imagery and deep learning. Remote Sens. 2021, 13, 4768.

- Zhang, J.; Cong, S.; Zhang, G.; Ma, Y.; Zhang, Y.; Huang, J. Detecting pest-infested forest damage through multispectral satellite imagery and improved UNet++. Sensors 2022, 22, 7440.

- Brun, P.; Psomas, A.; Ginzler, C.; Thuiller, W.; Zappa, M.; Zimmermann, N.E. Large-scale early-wilting response of Central European forests to the 2018 extreme drought. Glob. Change Biol. 2020, 26, 7021–7035.

- Schuldt, B.; Buras, A.; Arend, M.; Vitasse, Y.; Beierkuhnlein, C.; Damm, A.; Gharun, M.; Grams, T.E.E.; Hauck, M.; Hajek, P.; et al. A first assessment of the impact of the extreme 2018 summer drought on Central European forests. Basic Appl. Ecol. 2020, 45, 86–103.

- West, E.; Morley, P.J.; Jump, A.S.; Donoghue, D.N.M. Satellite data track spatial and temporal declines in European beech forest canopy characteristics associated with intense drought events in the Rhön Biosphere Reserve, Central Germany. Plant Biol. 2022, 24, 1120–1131.

- LDBV. Landesamt für Digitalisierung, Breitband und Vermessung (Agency for Digitisation, High-Speed Internet and Surveying). Available online: https://www.ldbv.bayern.de/produkte/luftbilder/orthophotos.html (accessed on 20 May 2025).

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 20 May 2025).

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th Symposium on Operating Systems Design and Implementation (OSDI ’16), Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

- Mosig, C.; Vajna-Jehle, J.; Mahecha, M.D.; Cheng, Y.; Hartmann, H.; Montero, D.; Junttila, S.; Horion, S.; Schwenke, M.B.; Adu-Bredu, S.; et al. Deadtrees.earth - An open-access and interactive database for centimeter-scale aerial imagery to uncover global tree mortality dynamics. bioRxiv 2024.

- Li, P.; Tao, H.; Zhou, H.; Zhou, P.; Deng, Y. Enhanced Multiview attention network with random interpolation resize for few-shot surface defect detection. Multimed. Syst. 2025, 31, 36.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).