1. Introduction

A total of 72,000 traffic-related accidents, or 4% of all road accidents, are caused by drowsy drivers [1], as driving performance declines with drowsiness [2]. In the Philippines, 38 children die in road crashes daily. The Metropolitan Manila Development Authority (MMDA) reported 65,032 accidents in the region, averaging 156.65 per day [1]. Cameras are essential for detecting drowsiness [3], as they are used to monitor the driver’s eyes for signs of sleepiness [4]. However, challenges remain in drowsiness detection systems, particularly in accurately detecting facial features across different skin tones and for individuals wearing glasses. When datasets include biased data, inaccurate results are obtained because the model struggles to distinguish facial features. Additionally, poor lighting conditions negatively impact detection accuracy.

Therefore, we developed an optimized drowsiness detection model using the NTHU-DDD dataset, a long short-term memory (LSTM) deep learning algorithm, and the behavioral features eye aspect ratio (EAR), mouth aspect ratio (MAR), and head pose angles (yaw, pitch, and roll) for real-time prediction. We also developed a hardware device to capture live image frames. In the system combining the device and the model, the camera position was adjusted to the optimal distance, height, and angle to capture the faces of drivers inside the car. The developed system was evaluated for all behavioral features in different conditions. The developed system is beneficial as it increases a driver’s awareness while driving. Whenever the system classifies the driver as drowsy or as losing focus while driving, it issues an alert to wake the driver.

The performance of the developed drowsiness detection system was evaluated. Drowsiness was detected by analyzing eye and mouth features, as well as head pose angles, for individuals with or without glasses in various lighting conditions. Conditions causing irregular body movements, such as Tourette syndrome (TS), were also considered by the system. The behaviors of drivers included in the NTHU-DDD dataset were used in the system. The algorithm was developed using Python version 3.13.2 and MediaPipe version 0.10.7. The system’s effectiveness was evaluated in a controlled environment during stationary and slow-paced driving scenarios.

2. Related Works

Sleep is an essential human function, and deprivation reduces responsiveness and causes a loss of alertness, visual impairment, information processing difficulties, and impaired short-term memory. These symptoms, known as drowsiness or fatigue, significantly increase the risk of fatal accidents while driving [2]. Behavioral-based systems show comparable or superior accuracy to non-behavioral systems [5,6,7].

2.1. Machine Learning (ML)

ML, a subset of artificial intelligence (AI), is used to learn patterns in data without explicit programming [8]. We used two key techniques in image classification: data augmentation and class imbalance handling. Data augmentation is used to improve the model’s generalization by offering diverse image scenarios for enhanced information extraction [9].
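As one illustration of class imbalance handling, inverse-frequency class weights can be computed from per-class frame counts. The sketch below is an assumption about how such weighting might be done, not the procedure used in this study. The function name `balanced_class_weights` is hypothetical, and the frame counts are the ones reported for the evaluation dataset in Section 3.2.5.

```python
def balanced_class_weights(counts):
    """Return inverse-frequency weights: total / (n_classes * class_count).

    Rarer classes receive weights above 1 and more frequent classes
    receive weights below 1, so each class contributes equally in total.
    """
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


# Frame counts quoted in Section 3.2.5 (used here only as an illustration).
frame_counts = {"drowsy": 25797, "non_drowsy": 23901}
weights = balanced_class_weights(frame_counts)

for label, weight in weights.items():
    print(f"{label}: {weight:.4f}")
```

Weights of this kind are typically passed to the loss function during training so that errors on the smaller class are penalized slightly more.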

2.2. Deep Learning (DL)

DL is used to extract abstract features from large datasets using hierarchical neural networks [10,11]. It uses trainable and non-trainable parameters. Convolutional neural networks (CNNs), implemented using TensorFlow version 2.14.0 and MediaPipe version 0.10.7, and recurrent neural networks (RNNs) are DL architectures used to enhance computer vision [12]. CNNs process images, videos, time series, and natural language data quickly [13].

2.3. Facial Recognition

In facial recognition, facial features are extracted to detect drowsiness. Multiple features are detected at once using ML [14].

2.4. Drowsiness Detection

Different behaviors are detected to determine drowsiness. However, environmental factors including brightness affect DL and the reliability of the system [15,16,17,18].

2.5. EAR

For drowsiness detection, behavioral features such as eye and mouth movement and the positioning of the head are monitored. The most common feature is eye movement, wherein EAR is used [19].
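EAR is commonly computed from six landmarks around one eye as the sum of two vertical eyelid distances divided by twice the horizontal eye width. The sketch below assumes this widely used six-point formulation and a landmark ordering p1..p6 (p1 and p4 being the eye corners); the source does not state the exact landmark indices used, and the coordinates are illustrative only.

```python
import math


def euclidean(p, q):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def eye_aspect_ratio(eye):
    """EAR from six eye landmarks ordered p1..p6.

    p1 and p4 are the horizontal eye corners; (p2, p6) and (p3, p5)
    are the two upper/lower eyelid pairs (assumed ordering).
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = euclidean(p2, p6) + euclidean(p3, p5)
    horizontal = euclidean(p1, p4)
    return vertical / (2.0 * horizontal)


# Illustrative coordinates only (not taken from the dataset).
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

ear_open = eye_aspect_ratio(open_eye)
ear_closed = eye_aspect_ratio(closed_eye)
print(f"open: {ear_open:.3f}, closed: {ear_closed:.3f}")
```

A low EAR sustained over consecutive frames suggests closed eyes, whereas a brief dip is more consistent with a normal blink.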

2.6. MAR

In the analysis of mouth openness, eight points are used to assess the degree of mouth openness. To determine mouth openness, the distance between the top and bottom lip is also calculated. An increase in MAR indicates a widened mouth [19].
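One common eight-point formulation of MAR sums three vertical lip distances and divides by twice the mouth width. The sketch below assumes that formulation and an ordering p1..p8 running around the mouth (p1 and p5 being the mouth corners); the exact points and normalization used in this study are not given in the text, and the coordinates are illustrative only.

```python
import math


def mouth_aspect_ratio(mouth):
    """MAR from eight mouth landmarks ordered p1..p8 (assumed ordering).

    p1 and p5 are the mouth corners; (p2, p8), (p3, p7), and (p4, p6)
    are upper/lower lip pairs.
    """
    p1, p2, p3, p4, p5, p6, p7, p8 = mouth
    vertical = math.dist(p2, p8) + math.dist(p3, p7) + math.dist(p4, p6)
    horizontal = math.dist(p1, p5)
    return vertical / (2.0 * horizontal)


# Illustrative coordinates only (not taken from the dataset).
resting_mouth = [(0, 0), (1, 0.2), (2, 0.3), (3, 0.2),
                 (4, 0), (3, -0.2), (2, -0.3), (1, -0.2)]
yawning_mouth = [(0, 0), (1, 1.0), (2, 1.5), (3, 1.0),
                 (4, 0), (3, -1.0), (2, -1.5), (1, -1.0)]

mar_rest = mouth_aspect_ratio(resting_mouth)
mar_yawn = mouth_aspect_ratio(yawning_mouth)
print(f"resting: {mar_rest:.3f}, yawning: {mar_yawn:.3f}")
```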

2.7. Head Pose Estimation

In estimating the head pose of the driver, 3D facial landmarks are used as key points to present distinct features of the face [20].
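Once a head rotation matrix has been estimated from the 3D landmarks, yaw, pitch, and roll can be read from it as Euler angles. The sketch below assumes the convention R = Rz(roll) · Ry(yaw) · Rx(pitch), with pitch about the x-axis, yaw about the y-axis, and roll about the z-axis; the source does not specify its convention, so the axis assignment here is an assumption. The helper names are hypothetical.

```python
import math


def rotation_matrix(pitch, yaw, roll):
    """Build R = Rz(roll) @ Ry(yaw) @ Rx(pitch); angles in radians."""
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cr * cy, cr * sy * sp - sr * cp, cr * sy * cp + sr * sp],
        [sr * cy, sr * sy * sp + cr * cp, sr * sy * cp - cr * sp],
        [-sy, cy * sp, cy * cp],
    ]


def euler_angles(R):
    """Recover (pitch, yaw, roll) in degrees; valid while |yaw| < 90 degrees."""
    pitch = math.atan2(R[2][1], R[2][2])
    yaw = math.asin(max(-1.0, min(1.0, -R[2][0])))  # clamp against rounding
    roll = math.atan2(R[1][0], R[0][0])
    return tuple(math.degrees(a) for a in (pitch, yaw, roll))


# Round trip with illustrative angles: nodding down, turned left, slight tilt.
expected = (10.0, -20.0, 5.0)
R = rotation_matrix(*(math.radians(a) for a in expected))
recovered = euler_angles(R)
print(recovered)
```

The round trip only checks internal consistency of the chosen convention; a different axis order would need different extraction formulas.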

2.8. Model Evaluation Metrics

Several metrics are used to measure the accuracy of drowsiness detection and evaluate the model performance [21,22].
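The text does not name the specific metrics, so the sketch below assumes the usual set derived from a binary confusion matrix: accuracy, precision, recall, and F1 score, with "drowsy" treated as the positive class. The counts are hypothetical and are not results from this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute common binary metrics from confusion-matrix counts.

    tp/fn: drowsy samples predicted drowsy / non-drowsy.
    fp/tn: non-drowsy samples predicted drowsy / non-drowsy.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for illustration only.
metrics = classification_metrics(tp=45, fp=5, fn=10, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```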

3. Methodology

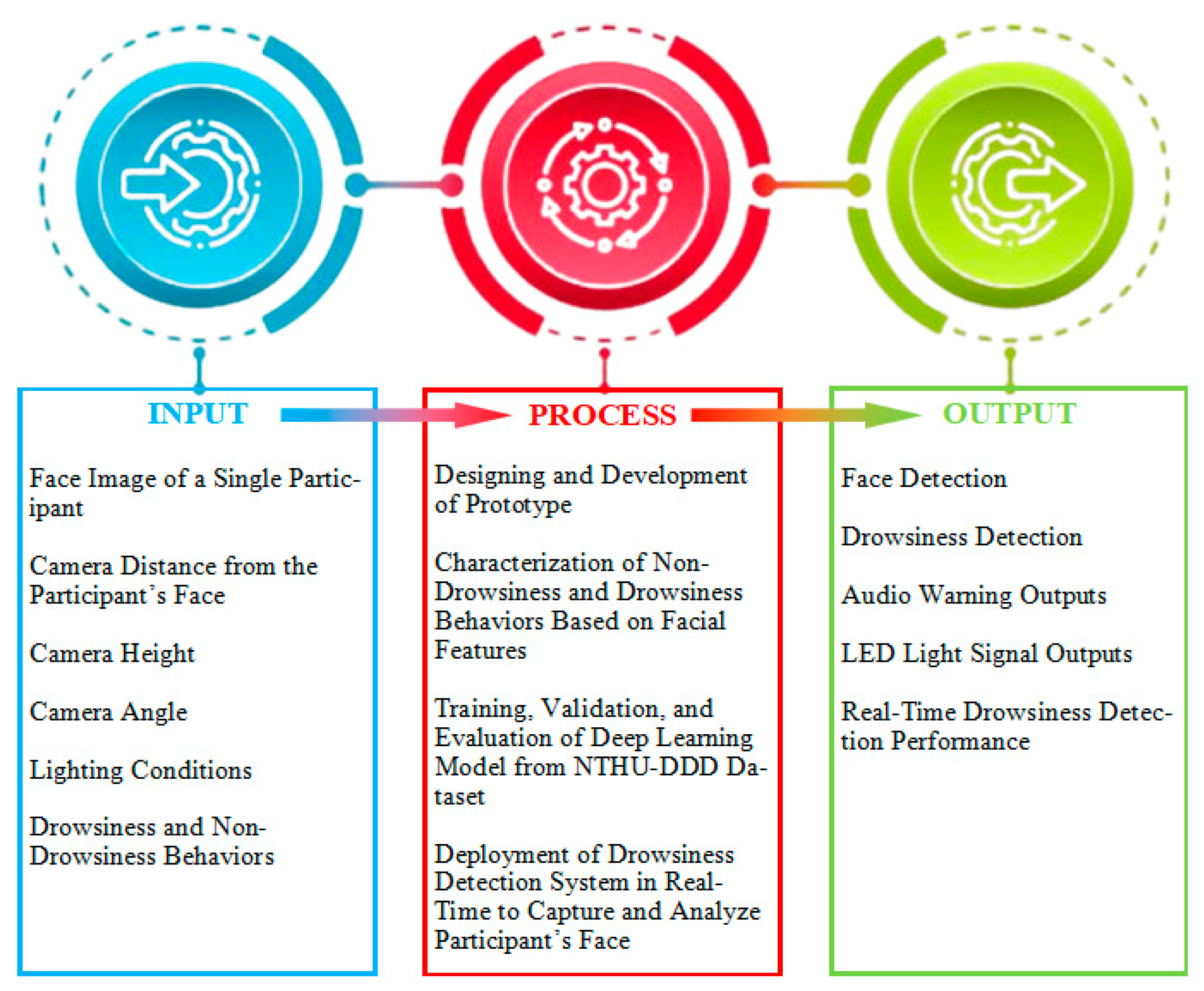

The conceptual framework of the study is shown in Figure 1, including the input, process, and output of the developed system.

3.1. Methodology Flowchart

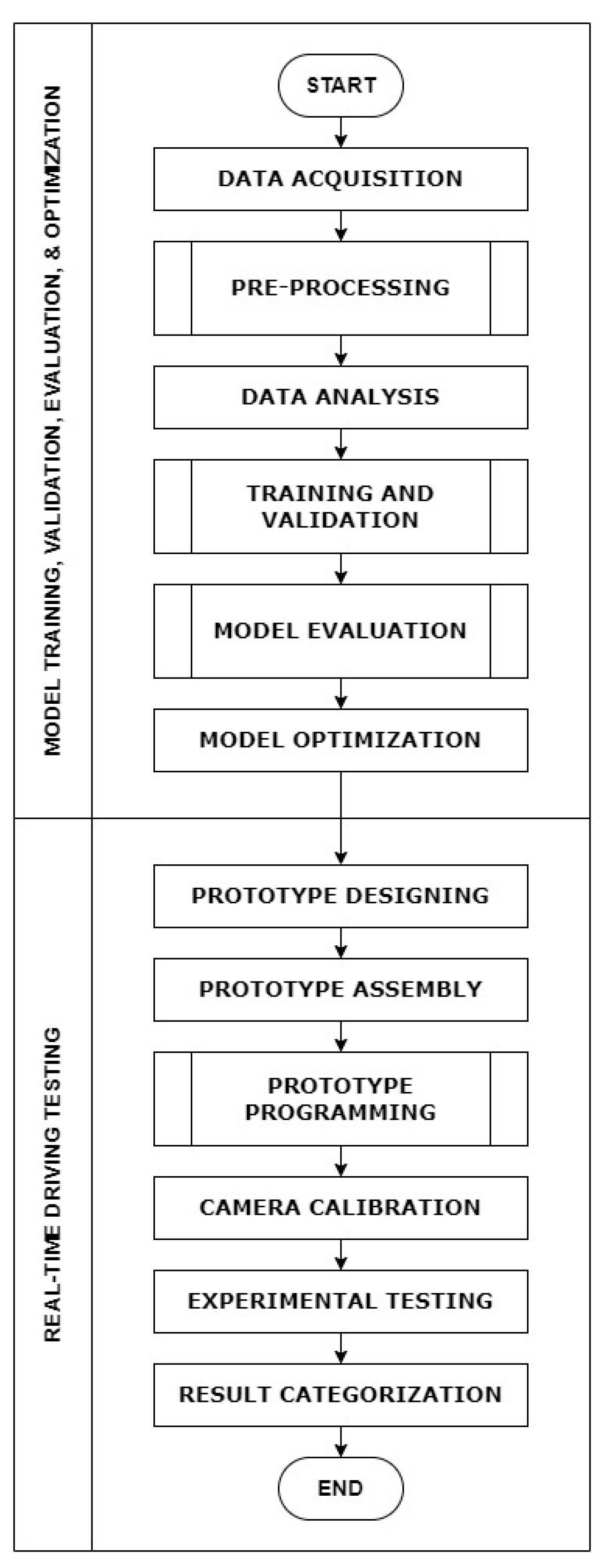

Figure 2 shows the methodology of this study, especially the training and testing of the developed model.

3.2. Model Training, Validation, Evaluation, and Optimization

3.2.1. Database

The dataset for training, validation, and evaluation was obtained from NTHU-DDD. Data processing was conducted for face detection and feature extraction [23].

3.2.2. Pre-Processing

Pre-processing was performed for face and facial landmark detection using MediaPipe. The pre-computed EAR, MAR, yaw, pitch, and roll features were used for data analysis to determine the processing parameters before the actual training, validation, evaluation, or implementation of the model.

3.2.3. Data Analysis

The data were pre-processed to extract features using box plots of each image. Optimal processing parameters were obtained, and data were compared before and after data processing.
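The statistics behind a box plot (quartiles and whisker bounds) can also be computed directly, which is one way to turn a visual inspection into numeric processing parameters. The sketch below assumes the conventional 1.5 × IQR whisker rule and uses it to separate outlying feature values; the source does not state which parameters were derived from its box plots, and the EAR samples are illustrative only.

```python
import statistics


def box_plot_bounds(values, whisker=1.5):
    """Quartiles and whisker limits as drawn in a standard box plot."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "lower": q1 - whisker * iqr,
        "upper": q3 + whisker * iqr,
    }


def split_outliers(values, whisker=1.5):
    """Separate values inside the whisker limits from those outside."""
    bounds = box_plot_bounds(values, whisker)
    inliers = [v for v in values if bounds["lower"] <= v <= bounds["upper"]]
    outliers = [v for v in values if v < bounds["lower"] or v > bounds["upper"]]
    return inliers, outliers


# Illustrative EAR samples with one implausible value (e.g., a bad landmark fit).
ear_samples = [0.28, 0.30, 0.31, 0.29, 0.32, 0.27, 0.30, 0.95]
inliers, outliers = split_outliers(ear_samples)
print(box_plot_bounds(ear_samples))
print("outliers:", outliers)
```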

3.2.4. Training and Validation

Video data were downsampled to a target processing speed of 10 FPS for the training, evaluation, and simulation of the model using the parameters. The model architecture, comprising the “Sleepy_Model” and “NonSleepy_Model”, was used to mitigate the complexity of drowsiness detection. LSTM layers were used to avoid overfitting, which is a common issue in DL models with many layers.
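Downsampling to 10 FPS and grouping consecutive feature vectors into fixed-length sequences for an LSTM can be sketched as follows. The source frame rate, window length, and stride are assumptions chosen for illustration (the text specifies only the 10 FPS target), and the function names are hypothetical.

```python
import math


def downsample_indices(n_frames, source_fps, target_fps=10.0):
    """Indices of the frames kept when reducing source_fps to target_fps."""
    if target_fps >= source_fps:
        return list(range(n_frames))
    step = source_fps / target_fps
    n_kept = math.ceil(n_frames / step)
    return [int(k * step) for k in range(n_kept)]


def sliding_windows(rows, window, stride=1):
    """Group consecutive rows into overlapping fixed-length sequences."""
    last_start = len(rows) - window
    return [rows[i:i + window] for i in range(0, last_start + 1, stride)]


# One second of 30 FPS video reduced to 10 frames.
kept = downsample_indices(n_frames=30, source_fps=30.0)
print(kept)

# Each row stands for one frame's (EAR, MAR, yaw, pitch, roll); values are dummies.
feature_rows = [(0.30, 0.20, 0.0, 0.0, 0.0)] * len(kept)
sequences = sliding_windows(feature_rows, window=5, stride=1)
print(len(sequences), "sequences of", len(sequences[0]), "frames")
```

Each resulting sequence has the shape (window, 5), which is the kind of input an LSTM layer expects once the sequences are stacked into a batch.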

3.2.5. Model Evaluation

The trained model was evaluated using the evaluation dataset. On the dataset with 25,797 drowsy frames and 23,901 non-drowsy frames, the model demonstrated accuracies of 0.9573 and 0.9181 in day and night conditions, respectively.

3.2.6. Model Optimization

The model comprised three LSTM layers, each followed by dropout layers to prevent overfitting. The first LSTM layer contained 32 units, followed by a dropout layer with 64 units and another dropout layer.

3.3. Simulated Driving Test

3.3.1. System Architecture

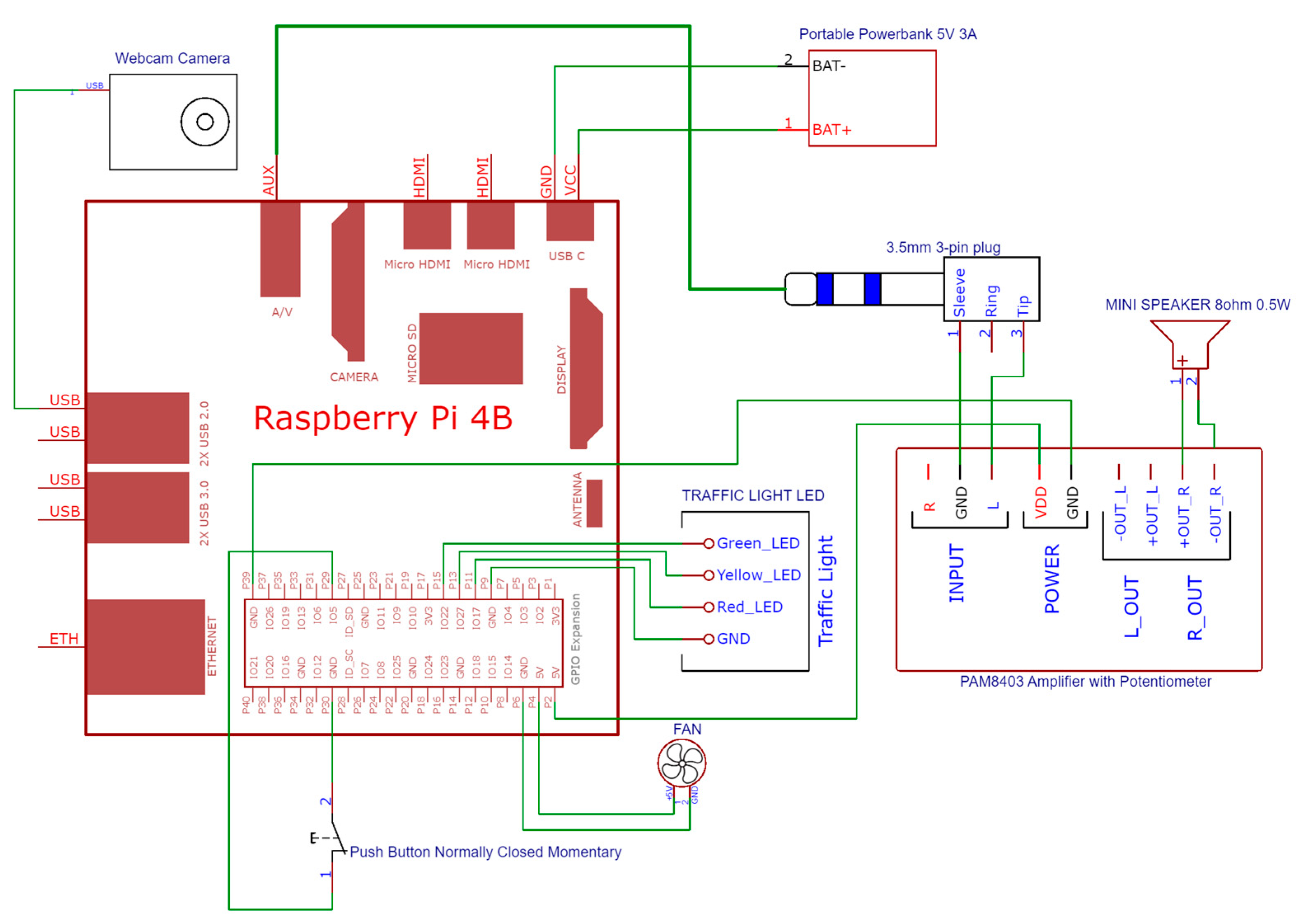

The system consisted of a Raspberry Pi 4 single-board computer, a web camera, a portable power bank, an LED module, an audio amplifier, a speaker, and an audio jack, as shown in Figure 3. Every component was soldered except the connections to and from the Raspberry Pi, which were made by USB, audio jack, or GPIO pins. Each pin was assigned based on the function requirement. The power was supplied from the portable power bank. Images were captured by the web camera. The outputs were light signals from the LED module and audio signals from the mini speaker. Components were sourced from MakerLab, Quezon City, Philippines.
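The link between per-frame predictions and the LED and speaker outputs can be sketched as a small state machine that raises an alert only after several consecutive drowsy predictions. This is a hardware-agnostic illustration under assumed behavior, not the firmware used in this study: the class name, the consecutive-frame threshold, and the callbacks (which on the device would drive the LED module and speaker) are all hypothetical.

```python
class DrowsinessAlert:
    """Trigger callbacks when drowsiness persists for a number of frames."""

    def __init__(self, frames_to_trigger, on_alert, on_clear):
        self.frames_to_trigger = frames_to_trigger
        self.on_alert = on_alert    # e.g., switch LED and speaker on
        self.on_clear = on_clear    # e.g., switch LED and speaker off
        self.active = False
        self._streak = 0

    def update(self, is_drowsy):
        """Feed one per-frame prediction; return whether the alert is active."""
        self._streak = self._streak + 1 if is_drowsy else 0
        should_alert = self._streak >= self.frames_to_trigger
        if should_alert and not self.active:
            self.active = True
            self.on_alert()
        elif not should_alert and self.active:
            self.active = False
            self.on_clear()
        return self.active


# Record events in a list instead of touching real hardware.
events = []
alert = DrowsinessAlert(
    frames_to_trigger=3,
    on_alert=lambda: events.append("alert"),
    on_clear=lambda: events.append("clear"),
)
predictions = [True, True, False, True, True, True, True, False]
states = [alert.update(p) for p in predictions]
print(states)
print(events)
```

Requiring a streak trades a short delay for fewer false alarms from isolated misclassified frames.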

3.3.2. Setup Calibration

The camera height, distance, and angle were adjusted to maximize the system’s detection performance. Various positions were tested to find the best angle for capturing the entire facial region of drivers with minimal distortion (Figure 4). The distance between the camera and a driver was measured for each position, and camera angles were adjusted based on the measured height and distance.
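As a geometric starting point for such adjustments, the tilt needed to aim the camera at the driver’s face follows from the height difference and the horizontal distance. The sketch below is a simplified assumption (a pinhole camera aimed along its optical axis, heights measured from the same reference), not the calibration procedure used in this study; the function name and the measurements are hypothetical.

```python
import math


def camera_tilt_deg(camera_height_cm, face_height_cm, distance_cm):
    """Tilt in degrees needed to aim at the face; positive means tilt up."""
    rise = face_height_cm - camera_height_cm
    return math.degrees(math.atan2(rise, distance_cm))


# Hypothetical measurements for three candidate mounting positions.
positions = {
    "level with face": (110.0, 110.0, 60.0),
    "below face": (90.0, 120.0, 30.0),
    "above face": (130.0, 110.0, 50.0),
}
for name, (cam_h, face_h, dist) in positions.items():
    print(f"{name}: {camera_tilt_deg(cam_h, face_h, dist):.1f} degrees")
```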

Ambient illumination was measured before testing to assess its impact on drowsiness detection. In the calibration process, visual inspections and adjustments were performed iteratively until the optimal setup was obtained. This ensured that the system operated in the best conditions, minimizing obstruction of the view while simulating driving.

3.3.3. Experimental Setup

The model’s performance was evaluated in an actual driving environment. Three participants were recruited for data collection. The camera was set up with ambient light levels measured for day and night. The camera’s height and distance were calibrated to optimize the system performance. Data on drowsy and non-drowsy behaviors were recorded multiple times per participant. Data were collected to create a confusion matrix and calculate performance metrics, ensuring a thorough evaluation of the model.
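Building the confusion matrix from recorded trials amounts to tallying each pair of actual and predicted labels. The sketch below assumes two labels, "drowsy" and "non_drowsy", with "drowsy" as the positive class; the trial data are made up for illustration and are not the recordings from this study.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive="drowsy"):
    """Tally true/false positives and negatives from paired labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    counts = Counter()
    for a, p in zip(actual, predicted):
        if a == positive:
            counts["tp" if p == positive else "fn"] += 1
        else:
            counts["fp" if p == positive else "tn"] += 1
    return {key: counts[key] for key in ("tp", "fn", "fp", "tn")}


# Made-up trial outcomes for illustration only.
actual = ["drowsy", "drowsy", "drowsy", "non_drowsy", "non_drowsy", "non_drowsy"]
predicted = ["drowsy", "drowsy", "non_drowsy", "non_drowsy", "drowsy", "non_drowsy"]

cm = confusion_counts(actual, predicted)
accuracy = (cm["tp"] + cm["tn"]) / len(actual)
print(cm, f"accuracy={accuracy:.4f}")
```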

4. Results and Discussion

4.1. Training, Validation, and Evaluation

The developed drowsiness detection system with an LSTM-based model was trained on the NTHU-DDD dataset, which includes data from different drivers in different conditions. The dataset was pre-processed to extract features such as EAR, MAR, and head poses (yaw, pitch, roll). The results showed a 90.87% accuracy for drowsiness and a 99.54% accuracy for non-drowsiness. The overall accuracy was 95.23%, demonstrating high detection capabilities. For drivers with glasses, the drowsiness detection accuracy was 94.04%. The results highlighted the excellent performance of the LSTM-based model. While previous models showed high accuracies, they still lacked real-time applicability. The developed system in this study showed the potential for real-time drowsiness detection in simulated driving.

4.2. Real-Time Detection

Real-time drowsiness detection performance was evaluated in a simulated driving environment with three live drivers. The simulation was conducted in day and night conditions, highlighting the significance of camera calibration for optimal detection. Three cameras were placed near the A-pillar, clipped under the rear-view mirror, and above the dashboard. The camera height, distance, and angle were adjusted to maximize facial detection and minimize obstruction. The impact of lighting on calibration and detection accuracy was evaluated with daytime illumination levels ranging from 200 to 260 lux and nighttime levels from 0 to 20 lux. These lighting conditions were used to calibrate the camera positions for effective drowsiness detection.

The performance metrics for stationary vehicles are presented in Table 1. Detection accuracy varied with the presence of glasses. Without glasses, the detection accuracy was higher as unobstructed visibility enabled reliable detection. Wearing glasses reduced the number of detectable features, demonstrating how obstructions hindered the system’s ability to accurately assess facial expressions.

In driving, the dynamics of a vehicle complicate the detection of eye and mouth behaviors, decreasing accuracy. However, by integrating head poses and angles, drowsiness detection accuracy can be enhanced. While motions complicate drowsiness detection, a combination of features enhances the system’s effectiveness, underscoring the importance of a multi-faceted approach in real-time drowsiness detection in various environments.

The accuracy was influenced by varying conditions and features in moving vehicles (Table 2). During the daytime without glasses, the combination of eye, mouth, and head pose features increased the effectiveness of drowsiness detection. While eye movement, head poses, and angles each contribute positively, their synergy enhances detection reliability. In comparing the performance of the model during the day and night, the features play an important role in low-light scenarios. Accuracy at night without glasses decreased because of the reduced lighting. Glasses at night further obstructed detection, decreasing accuracy and highlighting the difficulties of drowsiness detection in low lighting and with obstructions. Multiple features in various scenarios helped maintain high accuracy and robustness in real-time drowsiness detection. Their integration is crucial for optimizing detection performance in various conditions.

5. Conclusions

We developed and evaluated a robust drowsiness detection model utilizing multiple facial behavioral cues, including EAR, MAR, and head pose angles (yaw, pitch, and roll). Leveraging the NTHU-DDD dataset for training, the model achieved a high accuracy of 95.23% in training and validation, reflecting its capability to recognize drowsiness in varied conditions. The ensemble LSTM-based model was optimized for class imbalance and performance, demonstrating accuracies ranging from 91.81 to 95.82% with an average of 94.04%.

The model’s accuracy varied significantly based on features and conditions. In a moving vehicle, the accuracy varied from 51.85 to 85.71%. The calibration of camera angles was crucial to ensure optimal camera positioning and enhance drowsiness detection accuracy in varying conditions. Accuracies were higher in stationary vehicles, highlighting the influence of vehicle motion on detection performance. The combined features (EAR, MAR, yaw, pitch, and roll) enabled a high accuracy in a moving vehicle (80.95 to 85.71%). With a single feature, the accuracy was lower, ranging from 51.85 to 72.22%, while with dual features, the accuracy improved, ranging from 64.44 to 77.78%. The model maintained an average frame rate of 10 FPS, aligning with the deployment specification, although further optimization is needed to enhance performance consistency and accuracy. Prediction delays were evident, with longer detection times when transitioning from non-drowsy to drowsy states, while sustained drowsiness was detected rapidly in 5 s.

The observed lower accuracy in certain conditions, particularly at night or with glasses, was attributed to low-light conditions and the obstruction of facial features, which complicated the detection process. These factors increased variability in performance, resulting in more false positives or missed detections. To address these issues, advanced feature extraction techniques and lighting adjustments are required. Enhancing the model’s robustness to varying environmental factors is also crucial for reliable performance and effective drowsiness detection in diverse real-world scenarios.

To enhance the effectiveness and applicability of the drowsiness detection model, improvements are needed. Given the variable accuracy in a moving vehicle, the model’s architecture and feature sets must be optimized using edge computing. By exploring advanced model quantization, computational efficiency can be enhanced while maintaining high accuracy in different conditions. Additionally, it is necessary to refine feature extraction algorithms and integrate adaptive learning methods so that the model can dynamically adjust to diverse environmental conditions and individual user characteristics. This will improve overall robustness and accuracy. The dataset must also be expanded to encompass a broader range of demographic profiles, environmental scenarios, and lighting conditions for effective evaluation and generalizability. Incorporating additional features such as heart rate variability, auditory cues, and visual indicators could enhance the model’s capability to detect drowsiness in various conditions. To address the challenges posed by low-light conditions and obstructions, advanced feature extraction techniques and adaptive algorithms need to be developed to improve detection accuracy.

The variability in performance and prediction delays must be addressed. As the model’s performance was inconsistent, particularly when transitioning from non-drowsy to drowsy states, the model’s algorithms and processing strategies must be refined for consistent accuracy and timely detection. By incorporating real-time feedback mechanisms based on human-in-the-loop systems and improving user engagement and usability, safer driving practices can be promoted and drowsiness-related accidents can be reduced. Drowsiness detection technologies need to be developed further to ensure safety and a good user experience in real-world applications.

Author Contributions

Conceptualization, J.D.S.C., M.J.R.M. and J.B.G.I.; methodology, J.D.S.C. and M.J.R.M.; software, M.J.R.M.; formal analysis, M.J.R.M.; investigation, J.D.S.C.; data curation, J.D.S.C.; writing—original draft preparation, J.D.S.C. and M.J.R.M.; writing—review and editing, J.D.S.C., M.J.R.M., and J.B.G.I.; visualization, M.J.R.M.; supervision, J.B.G.I.; funding acquisition, J.B.G.I. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The Article Processing Charge (APC) was covered by the conference committee as part of their agreement with the journal.

Institutional Review Board Statement

Ethical review and approval were waived for this study due to the nature of the study involving low-risk driving in a controlled, safe environment.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. However, the NTHU-DDD video dataset must be requested directly from the licensed provider, National Tsing Hua University.

Acknowledgments

The researchers express sincere gratitude to their advisor, Joseph Bryan Ibarra, for his invaluable guidance and support throughout the research. They are also deeply thankful to their families for their unwavering love and encouragement. Special thanks go to the participants, including Symon, Denise, Akki, and others, for their generous contributions of time and insights. Lastly, the researchers acknowledge Mapua University for providing the resources and environment necessary for this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Freedom of Information (FOI). Metropolitan Manila Development Authority. 2020. Available online: https://mmda.gov.ph/2-uncategorised/3345-freedom-of-information-foi.html (accessed on 17 June 2023).

2. Adochiei, I.R.; Știrbu, O.I.; Adochiei, N.I.; Pericle-Gabriel, M.; Larco, C.M.; Mustata, S.M.; Costin, D. Drivers’ Drowsiness Detection and Warning Systems for Critical Infrastructures. In Proceedings of the 2020 International Conference on e-Health and Bioengineering (EHB), Iasi, Romania, 29–30 October 2020; pp. 1–4.

3. Ayachi, R.; Afif, M.; Said, Y.; Abdelali, A.B. Drivers Fatigue Detection Using EfficientDet In Advanced Driver Assistance Systems. In Proceedings of the 2021 18th International Multi-Conference on Systems, Signals & Devices (SSD), Monastir, Tunisia, 22–25 March 2021; pp. 738–742.

4. Ananthi, S.; Sathya, R.; Vaidehi, K.; Vijaya, G. Drivers Drowsiness Detection using Image Processing and I-Ear Techniques. In Proceedings of the 2023 7th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 17–19 May 2023; pp. 1326–1331.

5. Awasthi, A.; Nand, P.; Verma, M.; Astya, R. Drowsiness detection using behavioral-centered technique-A Review. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), Noida, India, 28–29 January 2021; pp. 1008–1013.

6. Josephin, J.S.F.; Lakshmi, C.; James, S.J. A review on the measures and techniques adapted for the detection of driver drowsiness. IOP Conf. Ser. Mater. Sci. Eng. 2020, 993, 012101.

7. Ramzan, M.; Khan, H.U.; Awan, S.M.; Ismail, A.; Ilyas, M.; Mahmood, A. A Survey on State-of-the-Art Drowsiness Detection Techniques. IEEE Access 2019, 7, 61904–61919.

8. Thompson, A.C.; Jammal, A.A.; Medeiros, F.A. A Review of Deep Learning for Screening, Diagnosis, and Detection of Glaucoma Progression. Transl. Vis. Sci. Technol. 2020, 9, 42.

9. Kumar, T.; Mileo, A.; Brennan, R.; Bendechache, M. Image Data Augmentation Approaches: A Comprehensive Survey and Future directions. arXiv 2023, arXiv:2301.02830.

10. Jin, S.; Zeng, X.; Xia, F.; Huang, W.; Liu, X. Application of deep learning methods in biological networks. Brief. Bioinform. 2020, 22, 1902–1917.

11. Shamshirband, S.; Fathi, M.; Dehzangi, A.; Chronopoulos, A.T.; Alinejad-Rokny, H. A review on deep learning approaches in healthcare systems: Taxonomies, challenges, and open issues. J. Biomed. Inform. 2020, 113, 103627.

12. Xie, D.; Zhang, L.; Bai, L. Deep Learning in Visual Computing and Signal Processing. Appl. Comput. Intell. Soft Comput. 2017, 2017, 1320780.

13. Balaban, S. Deep learning and face recognition: The state of the art. In Proceedings of the Biometric and Surveillance Technology for Human and Activity Identification XII, Baltimore, MD, USA, 15 May 2015.

14. Filali, H.; Riffi, J.; Mahraz, A.M.; Tairi, H. Multiple face detection based on machine learning. In Proceedings of the 2018 International Conference on Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 2–4 April 2018; pp. 1–8.

15. Abad, M.; Genavia, J.C.; Somcio, J.L.; Vea, L. An Innovative Approach on Driver’s Drowsiness Detection through Facial Expressions using Decision Tree Algorithms. In Proceedings of the 2021 IEEE 12th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 1–4 December 2021; pp. 0571–0576.

16. Srivastava, S. On the Physiological Measures based Driver’s Drowsiness Detection. In Proceedings of the 2021 5th International Conference on Information Systems and Computer Networks (ISCON), Mathura, India, 22–23 October 2021; pp. 1–4.

17. Bekhouche, S.E.; Ruichek, Y.; Dornaika, F. Driver drowsiness detection in video sequences using hybrid selection of deep features. Knowl.-Based Syst. 2022, 252, 109436.

18. Tabal, K.M.; Caluyo, F.S.; Ibarra, J.B. Microcontroller-implemented artificial neural network for electrooculography-based wearable drowsiness detection system. In Advanced Computer and Communication Engineering Technology; Springer International Publishing: Cham, Switzerland, 2015; pp. 461–472.

19. Albadawi, Y.; AlRedhaei, A.; Takruri, M. Real-Time Machine Learning-Based Driver Drowsiness Detection Using Visual Features. J. Imaging 2023, 9, 91.

20. Vezzetti, E.; Marcolin, F. 3D human face description: Landmarks measures and geometrical features. Image Vis. Comput. 2012, 30, 698–712.

21. Parel, E.L.P.; Miranda, G.J.Y.; Vergara, E. Smart Vehicle Safety System with Alcohol and Drowsiness Detection, Eye Tracking, and SMS Alert System. In Proceedings of the 2023 IEEE 5th Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 27–29 October 2023; pp. 254–258.

22. Vezzetti, E.; Moos, S.; Marcolin, F.; Stola, V. A pose-independent method for 3D face landmark formalization. Comput. Methods Programs Biomed. 2012, 108, 1078–1096.

23. Weng, C.-H.; Lai, Y.-H.; Lai, S.-H. Driver Drowsiness Detection via a Hierarchical Temporal Deep Belief Network. In Proceedings of the Computer Vision—ACCV 2016 Workshops, Taipei, Taiwan, 20–24 November 2016; pp. 117–133.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).