Abstract

Pansharpening is a remote sensing image fusion technique that combines a high-resolution (HR) panchromatic (PAN) image with a low-resolution (LR) multispectral (MS) image to produce an HR MS image. The primary challenge in pansharpening lies in preserving the spatial details of the PAN image while maintaining the spectral integrity of the MS image. To address this challenge, this article presents a generative adversarial network (GAN)-based approach to pansharpening, in which the GAN discriminator helps match the intensity of the generated image to the HR PAN image while preserving the spectral characteristics of the LR MS image. The quality of the generated images was evaluated using the peak signal-to-noise ratio (PSNR). For the experiment, the original LR MS and HR PAN satellite images were partitioned into smaller patches, and the GAN model was validated using an 80:20 training-to-testing data ratio. The super-resolution images generated by the super-resolution GAN (SRGAN) model achieved a PSNR of 31 dB, demonstrating the model’s ability to reconstruct geometric, textural, and spectral information from the images.

1. Introduction

Remote sensing technology has become increasingly popular across various fields, including business, agriculture, environmental monitoring, disaster management, land management, and natural resource utilization. However, satellite imagery is costly, and usage fees depend on the user’s data requirements, such as the imaging area, resolution, and cloud cover. Refs. [1,2,3,4] review the image sizes and resolutions of different cameras and note that high-resolution satellite images are more expensive and cover smaller areas.

Multispectral (MS) imagery, which typically comprises several bands covering visible light and other parts of the spectrum, has a lower spatial resolution. Panchromatic (PAN) imagery is captured in a single band that spans the visible range, which yields a higher spatial resolution. PAN imagery is often combined with MS imagery to leverage the advantages of both. However, PAN and MS images are priced differently, with PAN images typically being more expensive, depending on the specifications. In pansharpening, a high-resolution single-band image is combined with a lower-resolution multiband image to improve the overall image quality: the high-resolution single-band image guides the upsampling of the lower-resolution multiband image, improving its spatial detail and visual interpretability [3,4].

With the advancement of artificial intelligence (AI) technology, super-resolution approaches are often used to generate high-resolution images. Super-resolution techniques enhance image quality through upsampling, and many such approaches are available today. Many researchers have adopted generative adversarial networks (GANs) [1,2,3,4,5,6,7,8,9,10], a deep learning architecture in which two neural networks are trained in opposition to each other. SRGAN uses a deep residual network (ResNet) trained with a mean squared error (MSE) loss function as its base. An adversarial loss is used to train the discriminator, and a content loss computed on visual geometry group (VGG) network features measures the similarity of object features. Super-resolution ResNet (SRResNet) uses only the generator network, whereas SRGAN uses both the generator and discriminator networks.

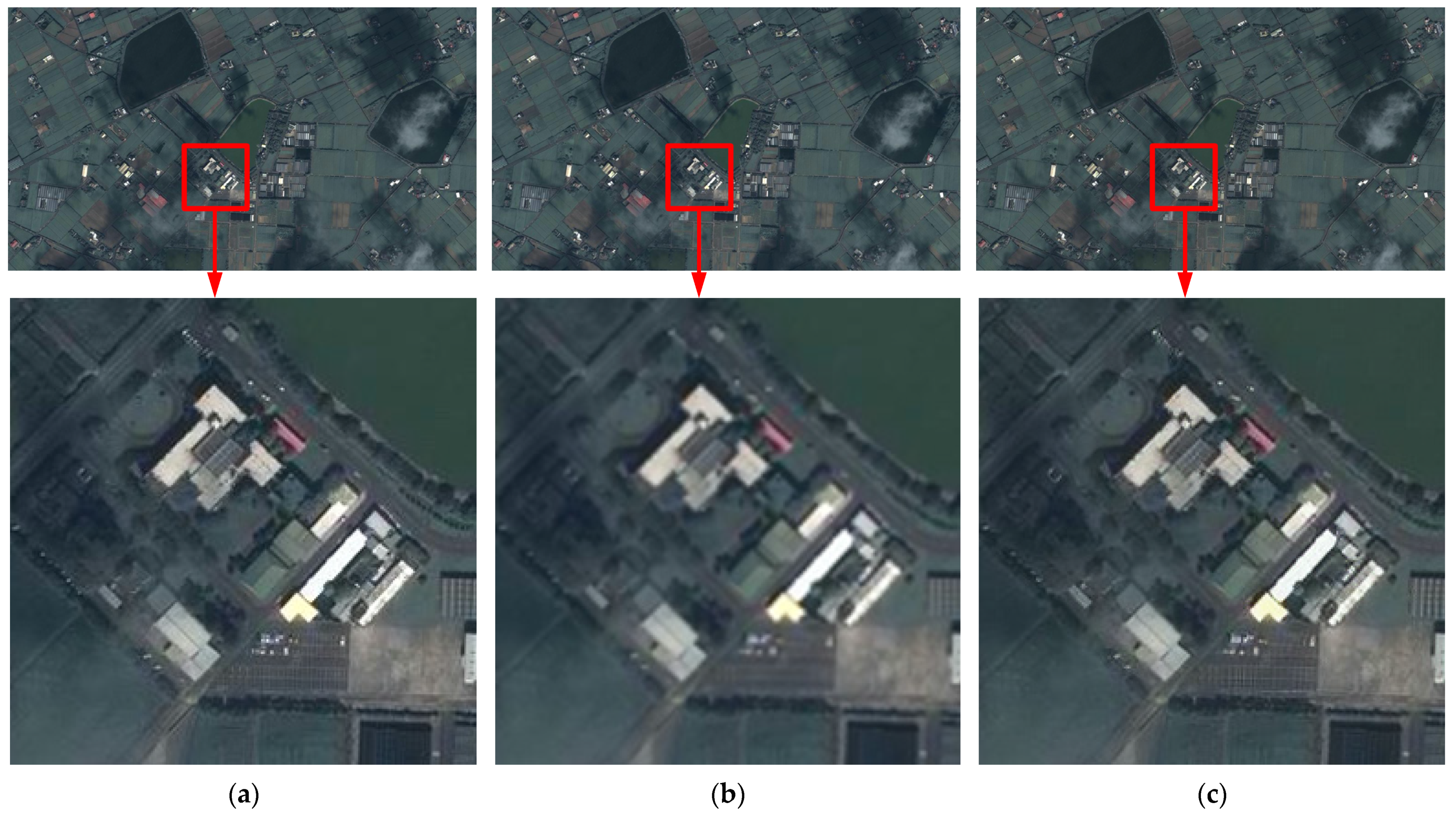

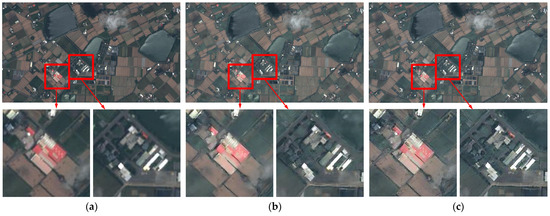

In the training stage, an HR image is used as the input and downsampled to create an LR image, and the original HR image and the downsampled LR image are used together to train the model. In the execution stage, the original HR image is again used as the input: it is first downsampled and then upsampled using the trained SRGAN model, and the resulting image is compared with the original HR image to validate the model. Figure 1 illustrates the results of applying this approach to satellite images. This article examines how much the image data are altered and notes that the differences arise during the downsampling process and the subsequent upsampling process.

Figure 1.

(a) The pansharpened image (as the ground truth) [11]; (b) a downsampled image from (a); (c) an SRGAN image from (b).
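The degrade-then-restore procedure described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: 2 × 2 block averaging stands in for the downsampling step, pixel replication stands in for the trained SRGAN generator (which is not reproduced here), and the mean squared error quantifies how much the data are altered. The names `downsample2x`, `upsample2x`, and `altered_amount` are hypothetical.

```python
import numpy as np

def downsample2x(img: np.ndarray) -> np.ndarray:
    """Halve height and width by averaging each 2 x 2 block (assumed degradation)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2  # drop an odd last row/column
    img = img[:h, :w].astype(np.float64)
    # Split rows and columns into (blocks, 2) and average over each 2 x 2 block.
    return img.reshape(h // 2, 2, w // 2, 2, *img.shape[2:]).mean(axis=(1, 3))

def upsample2x(img: np.ndarray) -> np.ndarray:
    """Placeholder for the trained SRGAN generator: replicate each pixel into a 2 x 2 block."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def altered_amount(hr: np.ndarray) -> float:
    """MSE between an HR image and its downsample-then-upsample reconstruction."""
    hr = hr.astype(np.float64)
    restored = upsample2x(downsample2x(hr))
    h, w = restored.shape[:2]
    return float(np.mean((hr[:h, :w] - restored) ** 2))

rng = np.random.default_rng(0)
example_hr = rng.integers(0, 256, size=(64, 64, 3))
print(altered_amount(example_hr))  # larger values mean more detail was lost
```

In the actual pipeline, `upsample2x` would be replaced by a forward pass of the trained generator; the comparison step stays the same.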

2. Methodology

2.1. The Ground Truth from Pansharpening

In this article, a pansharpening program was developed based on the theories in Refs. [10,12] to obtain HR color images as the ground truth. Most satellite sensors capture both PAN and MS images, which provide complementary information about the same scene. The goal of pansharpening is to fuse the PAN and MS images into a single image with both high spatial and high spectral resolution.

First, the LR color MS image is resized to match the dimensions of the HR black-and-white PAN image. The resized MS image is then converted from the RGB color space into the YCrCb color space according to (1)–(3).

where the Y channel represents the luminance component, the Cr channel represents the red-difference chroma component, and the Cb channel represents the blue-difference chroma component.

Next, the Y channel of the resized MS image is replaced with the HR PAN image, which serves as the new luminance. Finally, the resulting image is converted back from the YCrCb color space into RGB according to (4)–(6).

where R, G, and B are the red, green, and blue values of each pixel of the image.

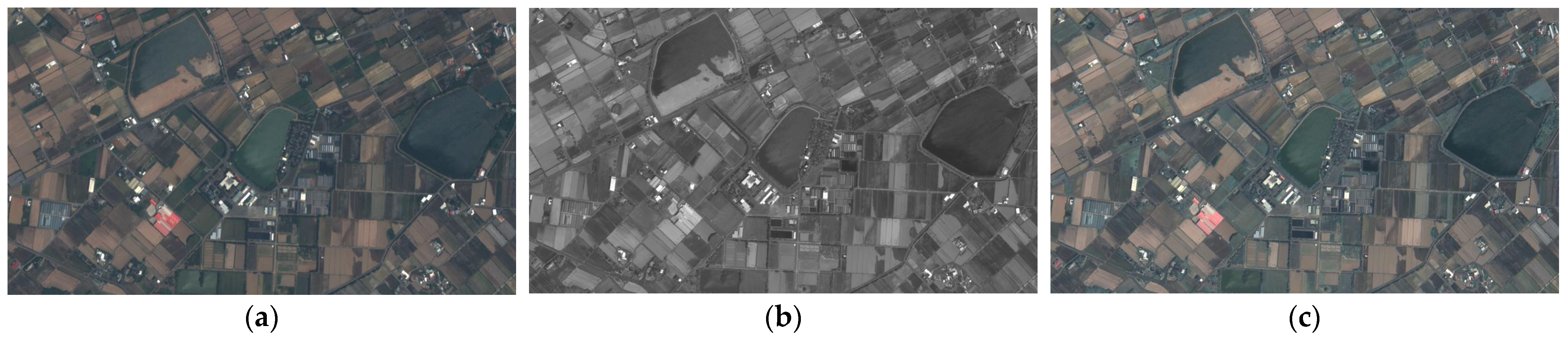

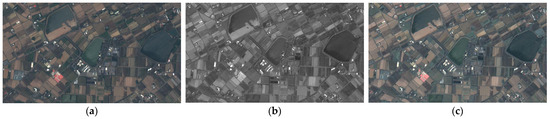

Using the above approach, the ground truth for this research was obtained. Figure 2 illustrates the original MS image, the original PAN image, and the pansharpened image, which combines the original MS image with the PAN image.
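Equations (1)–(6) are not reproduced in this text, so the sketch below assumes the common full-range ITU-R BT.601 (JPEG) YCrCb convention, the one documented for OpenCV's `cvtColor`; the authors' exact constants may differ. It also assumes an exact 2:1 size ratio and uses nearest-neighbour pixel replication for the resize step. The function names are hypothetical.

```python
import numpy as np

DELTA = 128.0  # chroma offset for 8-bit images (assumed full-range BT.601/JPEG convention)

def rgb_to_ycrcb(rgb: np.ndarray) -> np.ndarray:
    """Forward transform, the role of (1)-(3): Y is luminance, Cr/Cb are chroma differences."""
    r, g, b = (rgb[..., i].astype(np.float64) for i in range(3))
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cr = (r - y) * 0.713 + DELTA
    cb = (b - y) * 0.564 + DELTA
    return np.stack([y, cr, cb], axis=-1)

def ycrcb_to_rgb(ycrcb: np.ndarray) -> np.ndarray:
    """Inverse transform, the role of (4)-(6)."""
    y, cr, cb = ycrcb[..., 0], ycrcb[..., 1] - DELTA, ycrcb[..., 2] - DELTA
    r = y + 1.403 * cr
    g = y - 0.714 * cr - 0.344 * cb
    b = y + 1.773 * cb
    return np.stack([r, g, b], axis=-1)

def pansharpen_ycrcb(ms_rgb: np.ndarray, pan: np.ndarray, scale: int = 2) -> np.ndarray:
    """Resize MS to the PAN grid, swap its Y channel for PAN, and convert back to RGB."""
    ms_up = ms_rgb.repeat(scale, axis=0).repeat(scale, axis=1)  # nearest-neighbour resize (assumption)
    if pan.shape != ms_up.shape[:2]:
        raise ValueError("PAN image must be exactly `scale` times the MS image size")
    ycrcb = rgb_to_ycrcb(ms_up)
    ycrcb[..., 0] = pan  # HR PAN intensity becomes the new luminance
    return np.clip(np.rint(ycrcb_to_rgb(ycrcb)), 0, 255).astype(np.uint8)
```

For example, `pansharpen_ycrcb(ms, pan)` with a 524 × 932 × 3 MS array and a 1048 × 1864 PAN array returns a 1048 × 1864 × 3 color image. Keeping Cr and Cb from the MS image is what carries its spectral information into the result.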

Figure 2.

(a) The MS image [11], (b) the PAN image [11], and (c) the pansharpened image, which is a combination of (a) and (b).

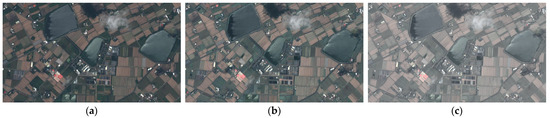

2.2. The Ground Truth from Adobe Photoshop

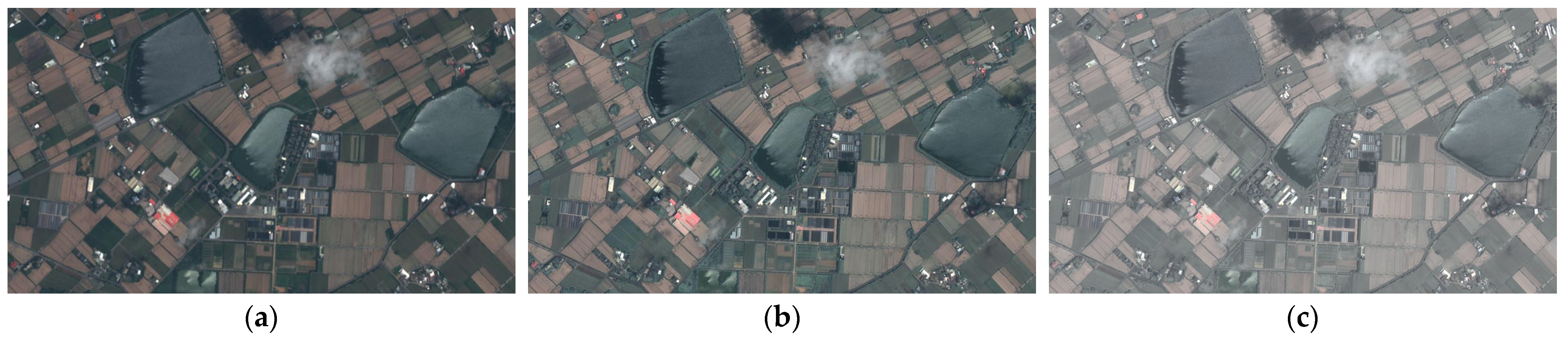

A second ground truth was obtained using Adobe Photoshop [13]. First, the LR color image is resized to match the dimensions of the HR black-and-white image. The LR color image is placed in the upper layer, and the HR black-and-white image is placed in the lower layer. Then, each layer is decomposed from red, green, and blue (RGB) into hue (H), saturation (S), and luminance (L). Next, H and S are taken from the upper layer, and L is taken from the lower layer. Finally, the new HSL values are converted back into RGB to generate the HR color image. Figure 3 illustrates the original MS image, the pansharpened image derived from the MS and PAN images, and the image processed using Adobe Photoshop.

Figure 3.

(a) Original MS image [11], (b) pansharpened image, and (c) Adobe Photoshop image.
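The H/S/L recombination described in Section 2.2 can be sketched per pixel with Python's standard `colorsys` module, whose `rgb_to_hls` and `hls_to_rgb` functions implement the HSL model (in the order hue, lightness, saturation). This is a sketch under assumptions, not a reproduction of Photoshop: Photoshop's blend modes may compute luminance differently from HSL lightness, and the MS image is assumed to be already resized to the PAN grid. The function names are hypothetical.

```python
import colorsys

def hsl_pansharpen_pixel(ms_rgb, pan_value):
    """Keep hue and saturation of the MS pixel; take lightness from the PAN pixel (0-255)."""
    r, g, b = (c / 255.0 for c in ms_rgb)
    h, _lightness, s = colorsys.rgb_to_hls(r, g, b)  # colorsys order: (hue, lightness, saturation)
    r2, g2, b2 = colorsys.hls_to_rgb(h, pan_value / 255.0, s)
    return tuple(round(c * 255) for c in (r2, g2, b2))

def hsl_pansharpen(ms_up, pan):
    """ms_up: rows of (R, G, B) tuples on the PAN grid; pan: rows of 0-255 intensities."""
    return [
        [hsl_pansharpen_pixel(px, p) for px, p in zip(ms_row, pan_row)]
        for ms_row, pan_row in zip(ms_up, pan)
    ]

print(hsl_pansharpen_pixel((200, 50, 50), 100))  # reddish pixel, darkened to PAN lightness
```

A pure-Python loop keeps the logic explicit; for full 1864 × 1048 images a vectorized HSL conversion would be much faster.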

2.3. The Network Architecture

Traditionally, when HR images are restored using upsampling approaches, the MSE is used as the loss function. Although MSE yields higher scores on evaluation metrics such as PSNR, it does not significantly improve visual quality from a human perspective, because it causes the loss of high-frequency signals in the image. To address this challenge, this article uses the perceptual loss, which combines a content loss and an adversarial loss [7]. The SRResNet model is adopted as the backbone and paired with a discriminator network to form the SRGAN architecture (Figures 1 and 2 in Ref. [5], Figure 4 in Ref. [6]). Because no HR MS dataset is available, the pansharpening program [10,12] is used to synthesize one. The resolution of the synthesized HR dataset must match that of the LR dataset according to the scaling factor, and any slight size discrepancies must be adjusted to an exact 2:1 ratio. After the two datasets are imported, the step of obtaining LR data through downsampling is skipped; instead, the existing LR data are used directly and are related to the HR data through the scaling factor. Crop sizes are chosen so that each LR crop and its HR counterpart align with the scaling factor, which ensures that corresponding blocks of the two images are fed into the model together for training.
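For reference, Ref. [6] defines the SRGAN perceptual loss as a weighted sum of a VGG-based content loss and an adversarial loss, as written below. The weight $10^{-3}$ and the VGG19 feature map $\phi_{i,j}$ are the choices reported in Ref. [6]; they are not confirmed to be the settings used in this study.

$$
\begin{aligned}
l^{SR} &= l^{SR}_{\mathrm{VGG}/i,j} + 10^{-3}\, l^{SR}_{\mathrm{Gen}}, \\
l^{SR}_{\mathrm{VGG}/i,j} &= \frac{1}{W_{i,j}H_{i,j}} \sum_{x=1}^{W_{i,j}} \sum_{y=1}^{H_{i,j}} \Big( \phi_{i,j}\big(I^{HR}\big)_{x,y} - \phi_{i,j}\big(G(I^{LR})\big)_{x,y} \Big)^2, \\
l^{SR}_{\mathrm{Gen}} &= \sum_{n=1}^{N} -\log D\big(G(I^{LR}_n)\big),
\end{aligned}
$$

where $\phi_{i,j}$ is the feature map obtained by the $j$-th convolution (after activation) before the $i$-th max-pooling layer of the pretrained VGG19 network, $W_{i,j}$ and $H_{i,j}$ are its dimensions, and $N$ is the number of training samples.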

2.4. The Loss Function

In GANs [9], training is a minimax game between the generator G and the discriminator D, with the objective function V(G,D) defined in (7).

where $I^{HR}$ is the real HR image and $I^{LR}$ is the LR image. The discriminator D distinguishes real HR images from the super-resolution images produced by the generator, so it is trained to minimize both the loss on real images and the loss on generated super-resolution images. The discriminator loss (DLoss) is the sum of these two losses, as given in (8).

where y is the label for the real image (a value between 0 and 1), D(x) is the probability, predicted by the discriminator, that a real image x is real, and D(G(x)) is the probability, predicted by the discriminator, that the super-resolution image generated by the generator is real.
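Assuming that (8) takes the usual binary cross-entropy form, the discriminator loss can be sketched in plain Python as below: discriminator outputs on real HR images are scored against the label y (1.0 by default, or a smoothed value below 1), and outputs on generated super-resolution images against the label 0. The function names and the clipping constant `eps` are assumptions for illustration.

```python
import math

def bce(p: float, y: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy of one predicted probability p against target label y."""
    p = min(max(p, eps), 1.0 - eps)  # clip so log() never receives 0
    return -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake, real_label: float = 1.0) -> float:
    """DLoss = mean BCE on real HR images + mean BCE on generated SR images (label 0)."""
    loss_real = sum(bce(p, real_label) for p in d_real) / len(d_real)
    loss_fake = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return loss_real + loss_fake

print(discriminator_loss([0.9, 0.8], [0.2, 0.1]))  # confident, correct discriminator: small loss
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # undecided discriminator: 2 * ln 2
```

The loss is smallest when D outputs values near 1 for real images and near 0 for generated ones, which is what the discriminator is trained toward.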

3. Results and Discussion

3.1. The Dataset

The dataset was provided by the Center for Space and Remote Sensing Research (CSRSR), National Central University [11]. It consists of 12 MS satellite images and 12 PAN satellite images in JPG format with slight size differences. The MS satellite images range from 932 × 524 to 936 × 528 pixels, while the PAN images range from 1864 × 1048 to 1872 × 1056 pixels. As acquired, several of these image pairs did not have an exact 2:1 size ratio.

Model training requires two datasets: an HR dataset and an LR dataset. The HR dataset consists of the ground truth images generated by pansharpening, while the LR dataset consists of the original MS satellite images. The relative image sizes are set by the scaling factor, which is 2 in this study, so the paired images must have an exact 2:1 size ratio. However, the size ratio of the paired images is not always 2:1; for instance, an MS satellite image of 932 × 524 pixels might correspond to a PAN satellite image of 1872 × 1048 pixels. If the images are not scaled according to this factor, pansharpening of the ground truth images fails and the model learns incorrect parameters during training. Therefore, in this study, all MS satellite images are scaled to 932 × 524 pixels, and all PAN satellite images are scaled to 1864 × 1048 pixels.
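The size alignment can be sketched as below: every MS image is resized to 932 × 524 pixels and every PAN image to exactly twice that, 1864 × 1048 pixels. The text does not specify the interpolation method, so plain nearest-neighbour indexing in NumPy is used here as a stand-in; the constant and function names are hypothetical.

```python
import numpy as np

SCALE = 2                                    # HR:LR scaling factor used in this study
MS_TARGET = (524, 932)                       # (height, width) for every MS image
PAN_TARGET = (MS_TARGET[0] * SCALE, MS_TARGET[1] * SCALE)  # (1048, 1864)

def resize_nearest(img: np.ndarray, out_hw) -> np.ndarray:
    """Nearest-neighbour resize by integer index mapping (interpolation choice is an assumption)."""
    h, w = img.shape[:2]
    rows = np.arange(out_hw[0]) * h // out_hw[0]
    cols = np.arange(out_hw[1]) * w // out_hw[1]
    return img[rows[:, None], cols]          # works for grayscale (H, W) and color (H, W, C)

def align_pair(ms: np.ndarray, pan: np.ndarray):
    """Resize an MS/PAN pair so that the PAN image is exactly SCALE times the MS image."""
    ms_r = resize_nearest(ms, MS_TARGET)
    pan_r = resize_nearest(pan, PAN_TARGET)
    assert pan_r.shape[:2] == (SCALE * ms_r.shape[0], SCALE * ms_r.shape[1])
    return ms_r, pan_r
```

For example, a 936 × 528 MS image paired with a 1872 × 1048 PAN image comes out as 932 × 524 and 1864 × 1048, an exact 2:1 pair.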

Given the limited amount of data, data augmentation is applied to enrich the dataset. Specifically, different portions of the images are randomly cropped, and the brightness and contrast are adjusted to simulate various conditions, such as day and night, winter and summer, or sunny and rainy weather, enabling the model to learn more diverse parameters. The randomly cropped LR and HR images must maintain the scaling factor ratio, and the crop positions must be adjusted accordingly. The eight training image pairs are decomposed into a total of 120,000 patch pairs for training, and the remaining four image pairs are reserved as a test dataset to evaluate the model’s performance.
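Paired random cropping with a brightness and contrast adjustment can be sketched as follows. The LR crop origin (y, x) maps to (scale·y, scale·x) in the HR image, so both patches cover the same ground area. Applying the same linear intensity change to both patches is our assumption, made so that the pair stays consistent; the patch size (48 LR pixels) and the contrast and brightness ranges are hypothetical values, not settings reported in this study.

```python
import numpy as np

def paired_random_crop(lr, hr, lr_patch, scale=2, rng=None):
    """Crop an LR patch and the HR patch that covers the same ground area."""
    rng = np.random.default_rng() if rng is None else rng
    y = int(rng.integers(0, lr.shape[0] - lr_patch + 1))
    x = int(rng.integers(0, lr.shape[1] - lr_patch + 1))
    lr_crop = lr[y:y + lr_patch, x:x + lr_patch]
    hr_crop = hr[y * scale:(y + lr_patch) * scale, x * scale:(x + lr_patch) * scale]
    return lr_crop, hr_crop

def adjust_brightness_contrast(img, alpha, beta):
    """out = alpha * img + beta (alpha: contrast gain, beta: brightness offset), kept in 0-255."""
    return np.clip(np.rint(alpha * img.astype(np.float64) + beta), 0, 255).astype(np.uint8)

def augment_pair(lr, hr, lr_patch=48, scale=2, rng=None):
    """Random aligned crop plus one shared brightness/contrast change for both patches."""
    rng = np.random.default_rng() if rng is None else rng
    lr_crop, hr_crop = paired_random_crop(lr, hr, lr_patch, scale, rng)
    alpha = rng.uniform(0.7, 1.3)   # hypothetical contrast range
    beta = rng.uniform(-40.0, 40.0)  # hypothetical brightness range
    return (adjust_brightness_contrast(lr_crop, alpha, beta),
            adjust_brightness_contrast(hr_crop, alpha, beta))
```

Calling `augment_pair` repeatedly on the eight training pairs is one way to produce a large set of aligned LR/HR patch pairs.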

3.2. The Performance Evaluation

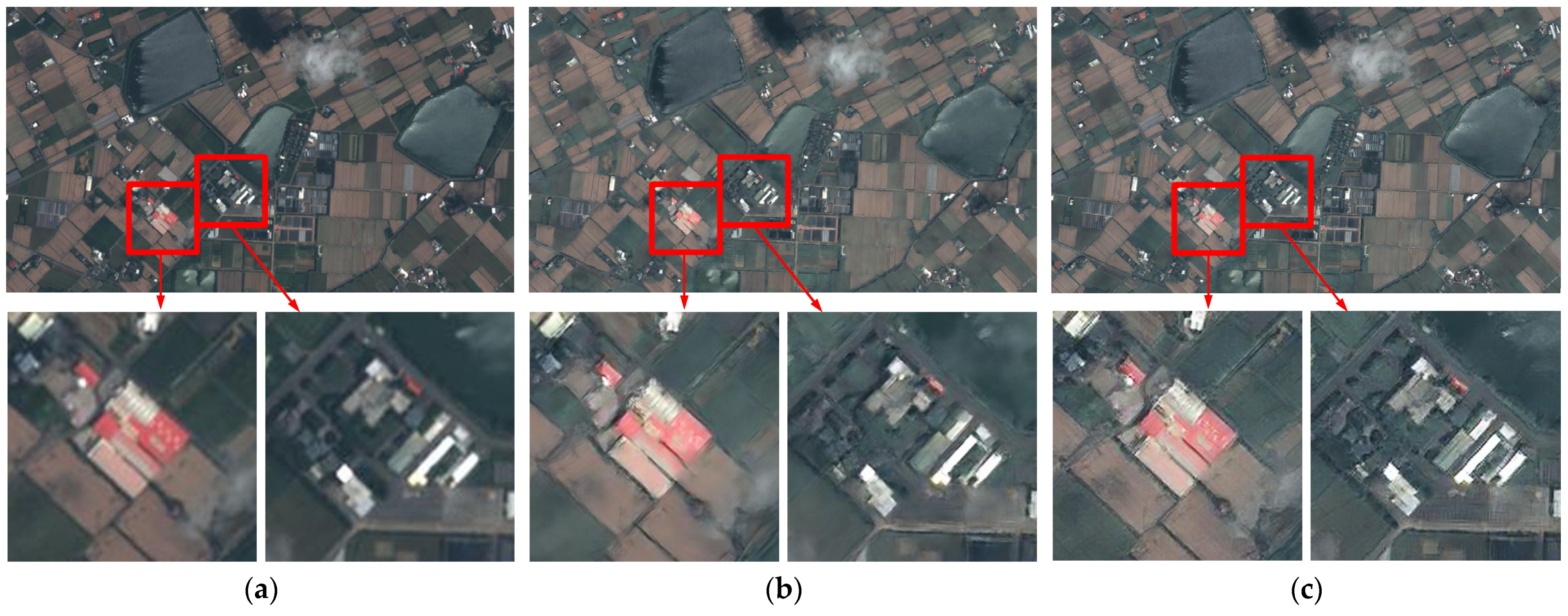

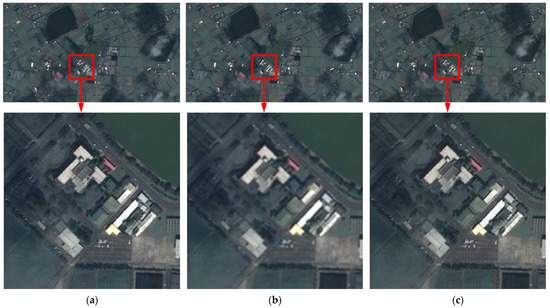

In the execution stage, the input image bypasses the downsampling process and undergoes super-resolution processing directly, and the resulting image is compared with the ground truth image to calculate the peak signal-to-noise ratio (PSNR). Figure 4a shows the original MS satellite image resized to twice its size, Figure 4b shows the original MS image upsampled using SRResNet, and Figure 4c shows the original MS image upsampled using SRGAN. For comparison, certain areas, such as houses and parking lots, are highlighted with red boxes and shown enlarged. Because of the limited data, an 8:4 training-to-testing split (eight image pairs for training and four for testing) is used for validation.

Figure 4.

(a) Original MS image [11], (b) SRResNet image from (a), and (c) SRGAN image from (a).
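The PSNR used in the evaluation can be computed as $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$. The sketch below assumes 8-bit images (MAX = 255) and a single PSNR over all pixels and RGB channels; the text does not state whether PSNR was computed per channel or on luminance only. The function name `psnr` is ours.

```python
import numpy as np

def psnr(reference, test, max_value: float = 255.0) -> float:
    """PSNR in dB between a ground truth image and a reconstructed image of the same shape."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(test, dtype=np.float64)
    if ref.shape != out.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

Because PSNR is a monotone function of MSE, a model trained purely on MSE (such as SRResNet) is expected to score well on it even when its output looks smoother than that of SRGAN.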

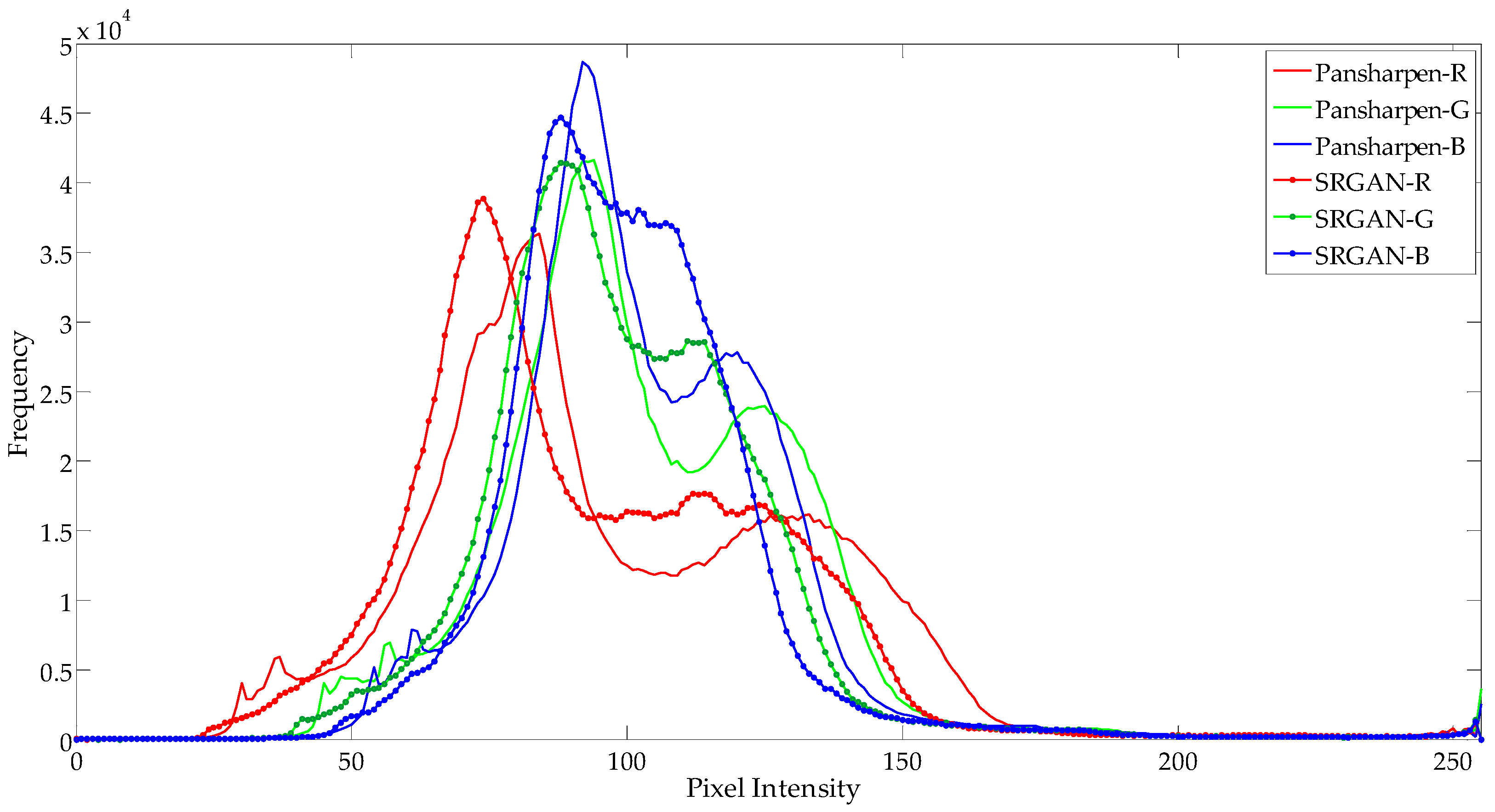

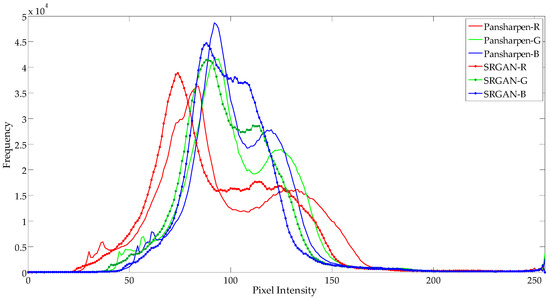

In addition, an image histogram is created to record the number of pixels at each RGB level. The horizontal axis represents the intensity, ranging from 0 to 255, and the vertical axis represents the number of pixels at each intensity. The per-level pixel counts of the RGB channels of the SRGAN-generated image shown in Figure 4c are compared with those of the pansharpened ground truth shown in Figure 2c. Figure 5 illustrates the comparison, showing that the color-channel distributions of the two images are very similar.
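The per-channel histograms can be reproduced with NumPy's `bincount`. The text compares the histograms visually; the normalized histogram intersection below is an additional, hypothetical summary score (1.0 for identical intensity distributions, 0.0 for no overlap) and is not a metric used in this study.

```python
import numpy as np

def rgb_histograms(img) -> np.ndarray:
    """Return a (3, 256) array of pixel counts per intensity level for R, G, and B (8-bit input)."""
    img = np.asarray(img, dtype=np.uint8)
    return np.stack([np.bincount(img[..., c].ravel(), minlength=256)[:256] for c in range(3)])

def histogram_intersection(img_a, img_b) -> np.ndarray:
    """Per-channel overlap of the normalized histograms, each value in [0, 1] (assumed metric)."""
    ha, hb = rgb_histograms(img_a), rgb_histograms(img_b)
    pa = ha / ha.sum(axis=1, keepdims=True)
    pb = hb / hb.sum(axis=1, keepdims=True)
    return np.minimum(pa, pb).sum(axis=1)
```

Normalizing by the pixel count lets images of different sizes be compared, for instance an SRGAN output and a ground truth crop.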

Table 1 compares the images generated by SRResNet and SRGAN with the ground truth images produced by pansharpening and by Adobe Photoshop in terms of PSNR, and Table 2 lists the execution times of the two algorithms. The results indicate that the resolution of the original MS satellite image is significantly improved, with the PSNR increasing to 28 dB relative to the original image. In addition, in the PSNR comparison against the ground truth, SRResNet scores higher than SRGAN. This result is consistent with the SRGAN article [6], in which SRResNet achieves higher PSNR scores, which may make it preferable for machine interpretation. SRGAN, on the other hand, tends to produce more realistic results in terms of visual perception. In Ref. [6], the mean opinion score (MOS) is used as an alternative metric, and SRGAN scores significantly higher than SRResNet in terms of visual quality. Ref. [6] discusses the MOS methodology and the corresponding data charts in detail and shows that SRGAN’s MOS scores more closely approximate those of the original HR images.

Table 1.

The performance in terms of the PSNR of SRResNet and SRGAN.

Table 2.

Execution times of SRResNet and SRGAN.

4. Conclusions

This article employed GAN approaches to approximate the pansharpening of remote sensing images. The data input approach for training was modified by implementing a deep ResNet with a perceptual loss function, using adversarial and content losses to train the discriminator and generator. Instead of inputting an HR dataset and downsampling it to obtain the LR dataset, the HR and LR datasets were input simultaneously. This approach clearly defined the initial and target states of the image, facilitating the learning of optimal parameters. To assess the influence of the ground truth, the differences between the two ground truths (pansharpening and Adobe Photoshop) were compared. Finally, the super-resolution results for satellite imagery were compared between models trained with the original SRGAN data input approach and those trained with the modified approach to determine which yielded superior results.

Author Contributions

Conceptualization, Y.-S.C. and F.T.; data curation, B.-H.C. and J.-H.J.; formal analysis, Y.-S.C., B.-H.C., J.-H.J., M.-J.S. and F.T.; funding acquisition, Y.-S.C.; investigation, Y.-S.C., B.-H.C., J.-H.J. and M.-J.S.; methodology, Y.-S.C., B.-H.C., J.-H.J. and F.T.; project administration, Y.-S.C.; resources, Y.-S.C. and F.T.; software, B.-H.C., J.-H.J., and M.-J.S.; supervision, Y.-S.C.; writing—original draft, Y.-S.C., B.-H.C. and J.-H.J.; writing—review & editing, Y.-S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Science and Technology, Taiwan, under Grant No. MOST 111-2121-M-033-001, and by the National Science and Technology Council, Taiwan, under Grant No. NSTC 112-2121-M-033-001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created.

Acknowledgments

This research was supported partly by the Ministry of Science and Technology, Taiwan, and by the National Science and Technology Council, Taiwan. The remote sensing images were provided by the Center for Space and Remote Sensing Research (CSRSR), National Central University, Taiwan [11].

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Chen, Y.-C.; Chiou, Y.-S.; Shih, M.-J. Interpretation of transplanted positions based on image super-resolution approaches for rice paddies. In Proceedings of the 2023 Sixth International Symposium on Computer, Consumer and Control (IS3C), Taichung, Taiwan, 30 June–3 July 2023; pp. 358–361.

2. Shih, M.-J.; Chiou, Y.-S.; Chen, Y.-C. Detection and interpretation of transplanted positions using drone’s eye-view images for rice paddies. In Proceedings of the 2021 IEEE 3rd Eurasia Conference on IOT, Communication and Engineering (ECICE), Yunlin, Taiwan, 29–31 October 2021; pp. 1–5.

3. Gastineau, A.; Aujol, J.-F.; Berthoumieu, Y.; Germain, C. Generative adversarial network for pansharpening with spectral and spatial discriminators. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4401611.

4. Liu, Q.; Zhou, H.; Xu, Q.; Liu, X.; Wang, Y. PSGAN: A generative adversarial network for remote sensing Image Pan-Sharpening. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10227–10241.

5. Li, W.; He, D.; Liu, Y.; Wang, F.; Huang, F. Super-resolution reconstruction, recognition, and evaluation of laser confocal images of hyperaccumulator Solanum nigrum endocytosis vesicles based on deep learning: Comparative study of SRGAN and SRResNet. Front. Plant Sci. 2023, 14, 1146485.

6. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114.

7. Johnson, J.; Alahi, A.; Li, F.F. Perceptual losses for real-time style transfer and super-resolution. arXiv 2016, arXiv:1603.08155. Available online: https://arxiv.org/abs/1603.08155 (accessed on 29 September 2024).

8. Bruna, J.; Sprechmann, P.; LeCun, Y. Super-resolution with deep convolutional sufficient statistics. arXiv 2016, arXiv:1511.05666. Available online: https://arxiv.org/abs/1511.05666 (accessed on 29 September 2024).

9. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 3, 1–9.

10. Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image super-resolution via sparse representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

11. Center for Space and Remote Sensing Research, National Central University. Available online: https://www.csrsr.ncu.edu.tw/ (accessed on 29 September 2024).

12. Lakhwani, K.; Murarka, P.D.; Chauhan, N.S. Color space transformation for visual enhancement of noisy color image. Int. J. ICT Manag. 2015, 3, 9–13.

13. Saravanan, G.; Yamuna, G.; Nandhini, S. Real time implementation of RGB to HSV/HSI/HSL and its reverse color space models. In Proceedings of the 2016 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 6–8 April 2016; pp. 462–466.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).