A Review of the Image Classification Models Used for the Prediction of Bed Defects in the Selective Laser Sintering Process

Abstract

1. Introduction

- To replicate the commonly used VGG-16 model and apply it to the SLS powder bed defects (PBDs) dataset [4].

- To build and test an EfficientNet_v2 [5] model on the same dataset.

- To compare the accuracy and sensitivity of the VGG-16 and EfficientNet_v2 models.

- To identify, based on this comparison, any improvement gained by using the EfficientNet_v2 model for defect detection.
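The two comparison metrics named in the objectives are not defined in this section. Assuming the usual definitions, with the defective class (DEF) treated as positive, TP counts defective images classified as DEF, TN counts OK images classified as OK, FP counts OK images classified as DEF, and FN counts defective images classified as OK:

$$
\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad
\mathrm{Sensitivity} = \frac{TP}{TP + FN}
$$

Sensitivity therefore measures the share of genuinely defective bed images that a model flags, whereas accuracy weighs both classes together.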

2. Method

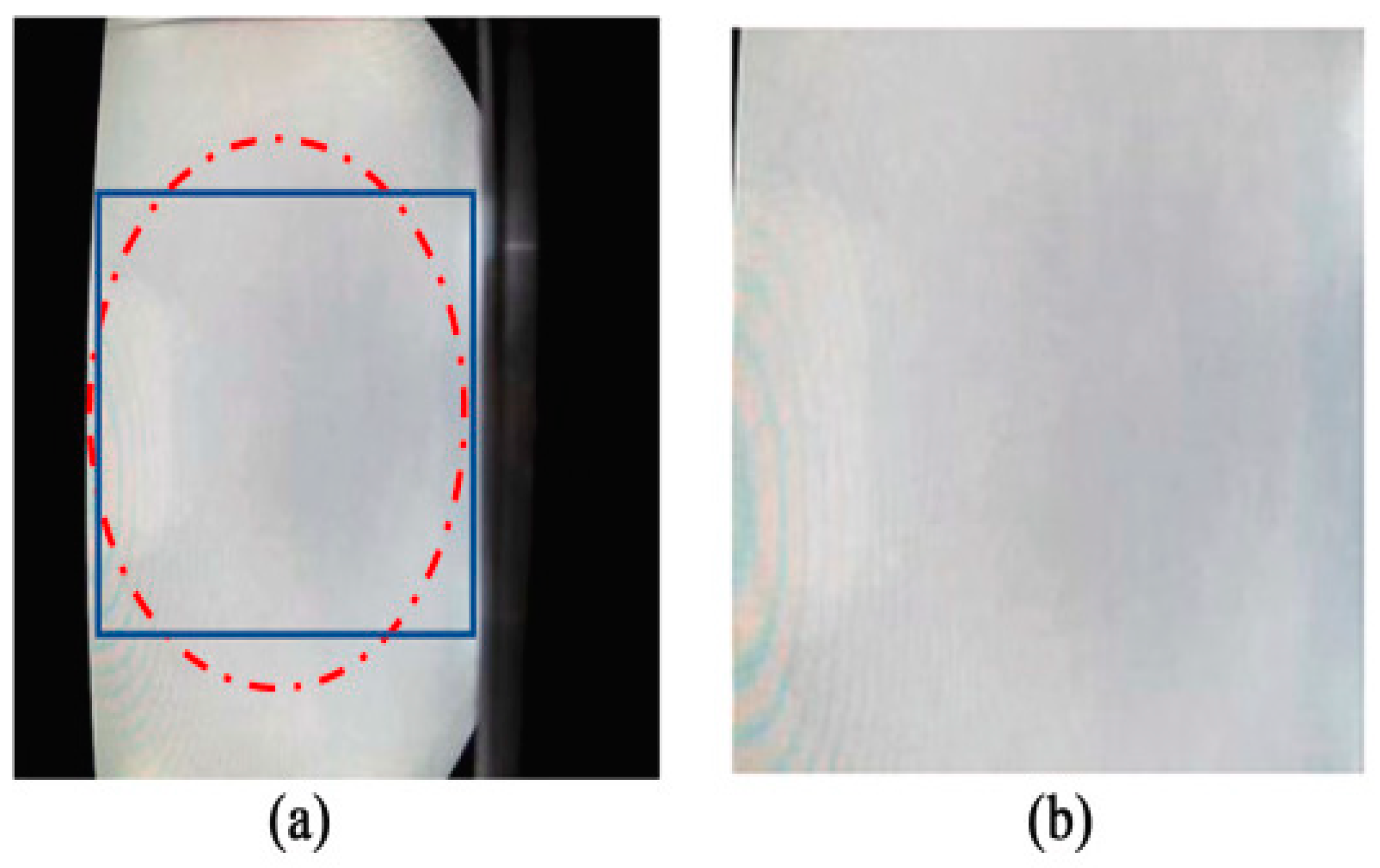

2.1. Dataset and Pre-Processing

2.2. Modelling, Hyperparameters and Performance Metrics

3. Results

3.1. Model Accuracy and Sensitivity

3.2. Confusion Matrix

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Malekipour, E.; El-Mounayri, H. Common defects and contributing parameters in powder bed fusion AM process and their classification for online monitoring and control: A review. Int. J. Adv. Manuf. Technol. 2017, 95, 527–550.

2. Pulsar. Available online: https://www.pulsarplatform.com/blog/2018/brief-history-computer-vision-vertical-ai-image-recognition/ (accessed on 12 July 2023).

3. Builtin. Available online: https://builtin.com/data-science/transfer-learning (accessed on 12 July 2023).

4. Westphal, E.; Seitz, H. A machine learning method for defect detection and visualization in selective laser sintering based on convolutional neural networks. Addit. Manuf. 2021, 41, 101965.

5. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning (ICML 2019), Long Beach Convention & Entertainment Center, Long Beach, CA, USA, 9–15 June 2019.

6. Aggarwal, C.C. Neural Networks and Deep Learning: A Textbook, 1st ed.; Springer: Cham, Switzerland, 2018; pp. 315–371.

| Cost Function | Learning Rate | Optimiser | Epochs | Batch Size | LR Decay | Early Stopping |
|---|---|---|---|---|---|---|
| Binary cross-entropy | 0.001 | Adam (β1 = 0.9, β2 = 0.999) | 120 | 16 | Patience = 5 | Patience = 20 |
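The two patience entries in the table imply a plateau-style learning-rate schedule plus early stopping, but the table does not say which quantity is monitored or by how much the learning rate is reduced. The sketch below is a plain-Python simulation under stated assumptions (validation loss is monitored, decay factor 0.1, strict improvement only); `simulate_schedule` is a hypothetical helper, not the authors' code.

```python
"""Hypothetical sketch of the patience-based LR decay and early stopping in the table.

Assumptions not stated in the table: validation loss is the monitored quantity,
an epoch only counts as an improvement if it beats the best loss so far, and the
learning rate is multiplied by 0.1 when the LR-decay patience runs out.
"""


def simulate_schedule(val_losses, lr=0.001, lr_patience=5, stop_patience=20,
                      decay_factor=0.1, max_epochs=120):
    """Replay a validation-loss curve and return (per-epoch LRs, epochs run)."""
    best = float("inf")
    wait_lr = 0    # epochs since the last improvement or the last LR decay
    wait_stop = 0  # epochs since the last improvement
    lrs = []
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        lrs.append(lr)  # learning rate used during this epoch
        if loss < best:
            best = loss
            wait_lr = wait_stop = 0
            continue
        wait_lr += 1
        wait_stop += 1
        if wait_lr >= lr_patience:
            lr *= decay_factor  # takes effect from the next epoch
            wait_lr = 0
        if wait_stop >= stop_patience:
            return lrs, epoch  # early stop at the end of this epoch
    return lrs, len(lrs)


if __name__ == "__main__":
    # Illustrative, made-up curve: improves for 3 epochs, then plateaus.
    curve = [1.0, 0.9, 0.8] + [0.85] * 30
    lrs, epochs = simulate_schedule(curve)
    print(f"stopped after {epochs} epochs; final LR {lrs[-1]:.0e}")
```

If the models were trained in Keras (the framework is not stated here), the table would roughly map to `tf.keras.optimizers.Adam(learning_rate=0.001, beta_1=0.9, beta_2=0.999)`, `loss="binary_crossentropy"`, `tf.keras.callbacks.ReduceLROnPlateau(patience=5)` and `tf.keras.callbacks.EarlyStopping(patience=20)`. Those callbacks carry further defaults (for example `min_delta` and `cooldown` on `ReduceLROnPlateau`) that this simplified sketch ignores.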

| Predicted value | VGG-16: actual OK | VGG-16: actual DEF | EfficientNet_v2: actual OK | EfficientNet_v2: actual DEF |
|---|---|---|---|---|
| OK | 460 | 20 | 479 | 7 |
| DEF | 38 | 478 | 19 | 491 |
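The headline metrics can be recomputed directly from the confusion-matrix counts. This sketch assumes the rows are predicted labels and the columns actual labels (each column sums to 498 for both models, consistent with a single shared evaluation set of 996 images) and treats DEF as the positive class; `CONFUSION` and `metrics` are illustrative names.

```python
"""Accuracy and sensitivity recomputed from the confusion-matrix counts.

Assumption: counts are indexed [predicted][actual], and DEF (defective) is the
positive class.
"""

# counts[predicted][actual], copied from the table
CONFUSION = {
    "VGG-16": {"OK": {"OK": 460, "DEF": 20}, "DEF": {"OK": 38, "DEF": 478}},
    "EfficientNet_v2": {"OK": {"OK": 479, "DEF": 7}, "DEF": {"OK": 19, "DEF": 491}},
}


def metrics(cm):
    """Return accuracy and sensitivity for one [predicted][actual] matrix."""
    tp = cm["DEF"]["DEF"]  # defective image, predicted defective
    tn = cm["OK"]["OK"]    # OK image, predicted OK
    fp = cm["DEF"]["OK"]   # OK image, predicted defective (false alarm)
    fn = cm["OK"]["DEF"]   # defective image, predicted OK (missed defect)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),
    }


if __name__ == "__main__":
    for model, cm in CONFUSION.items():
        m = metrics(cm)
        print(f"{model}: accuracy={m['accuracy']:.4f}, "
              f"sensitivity={m['sensitivity']:.4f}")
```

Under these assumptions VGG-16 gives an accuracy of 938/996 ≈ 0.942 and a sensitivity of 478/498 ≈ 0.960, while EfficientNet_v2 gives 970/996 ≈ 0.974 and 491/498 ≈ 0.986. If the table's orientation were the other way round, the sensitivity values would change.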

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Colville, M.; Kerr, E.; Nikam, S. A Review of the Image Classification Models Used for the Prediction of Bed Defects in the Selective Laser Sintering Process. Eng. Proc. 2024, 65, 3. https://doi.org/10.3390/engproc2024065003
