Sensor Virtualization for Anomaly Detection of Turbo-Machinery Sensors—An Industrial Application

Abstract

1. Introduction

1.1. Problem Statement

- Early detection is required: only prompt action makes it possible to avoid the potentially high costs of unnecessary shutdowns.

- Up to a few thousand sensors need to be checked daily.

- Recall is key: anomalies flagged by the tool are checked by operators, whereas if no alert is raised, the anomaly may remain undetected.

- Precision must be kept under control: too many false positives would enlarge the set of signals to be checked and could cancel out the benefits of the tool.
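The trade-off between recall and precision described above can be made concrete with a minimal sketch. It assumes that each checked signal receives a binary alert from the tool and a binary ground-truth label (anomalous or not); the function `alert_metrics` and the toy data are hypothetical and not part of the paper.

```python
def alert_metrics(alerts: list[bool], anomalies: list[bool]) -> tuple[float, float]:
    """Return (precision, recall) of binary alerts against ground-truth anomaly labels.

    Hypothetical helper for illustration; a zero denominator yields 0.0 by convention.
    """
    if len(alerts) != len(anomalies):
        raise ValueError("alerts and anomalies must have the same length")
    pairs = list(zip(alerts, anomalies))
    tp = sum(a and y for a, y in pairs)        # alerts raised on real anomalies
    fp = sum(a and not y for a, y in pairs)    # false alarms operators must dismiss
    fn = sum(y and not a for a, y in pairs)    # anomalies that would go unnoticed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Ten signals, three truly anomalous; the tool raises four alerts covering all three.
alerts = [True, True, True, True, False, False, False, False, False, False]
labels = [True, True, True, False, False, False, False, False, False, False]
print(alert_metrics(alerts, labels))
```

Here the call returns `(0.75, 1.0)`: no anomaly is missed (full recall), at the cost of one false positive that an operator has to check and dismiss.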

1.2. Related Works

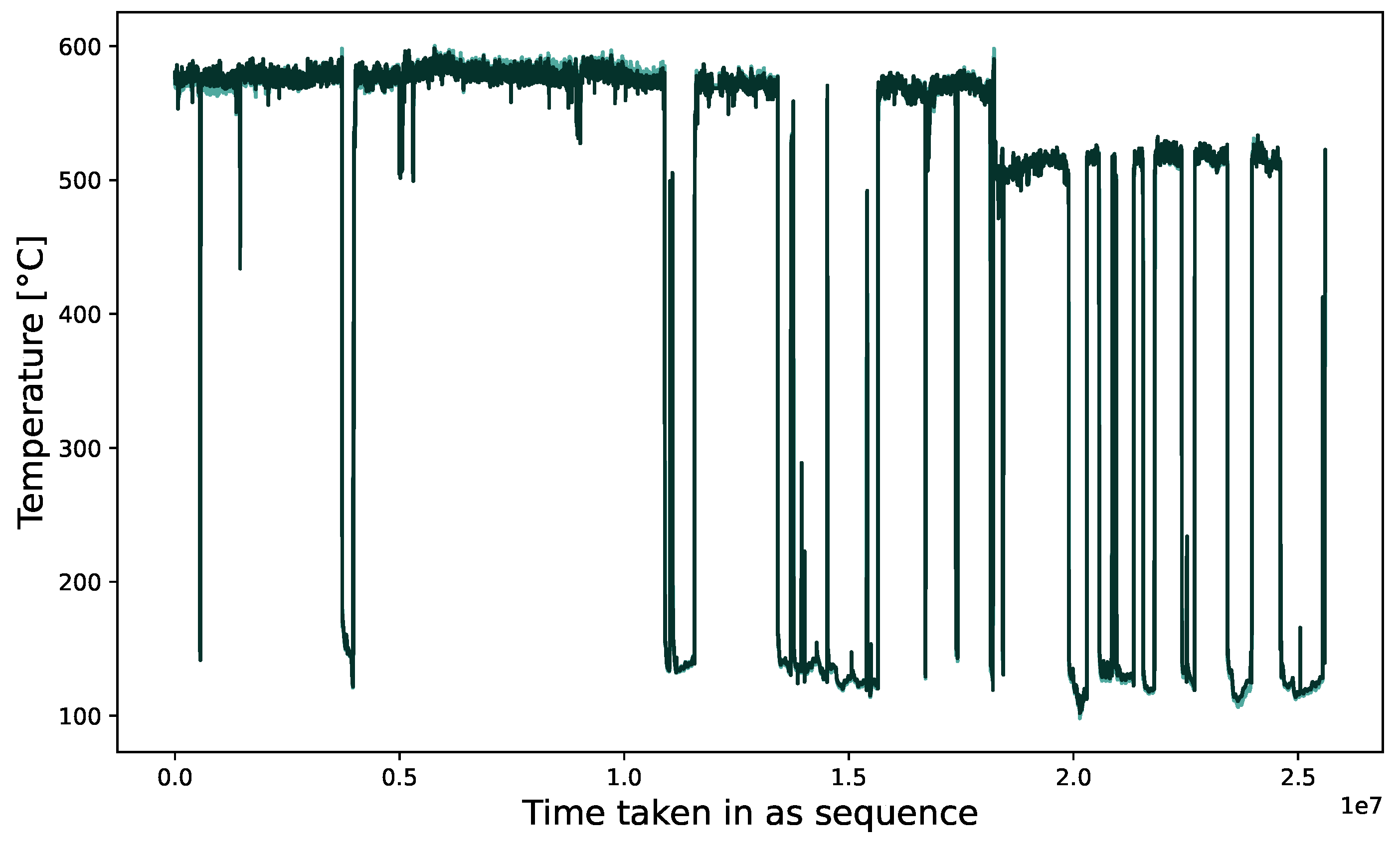

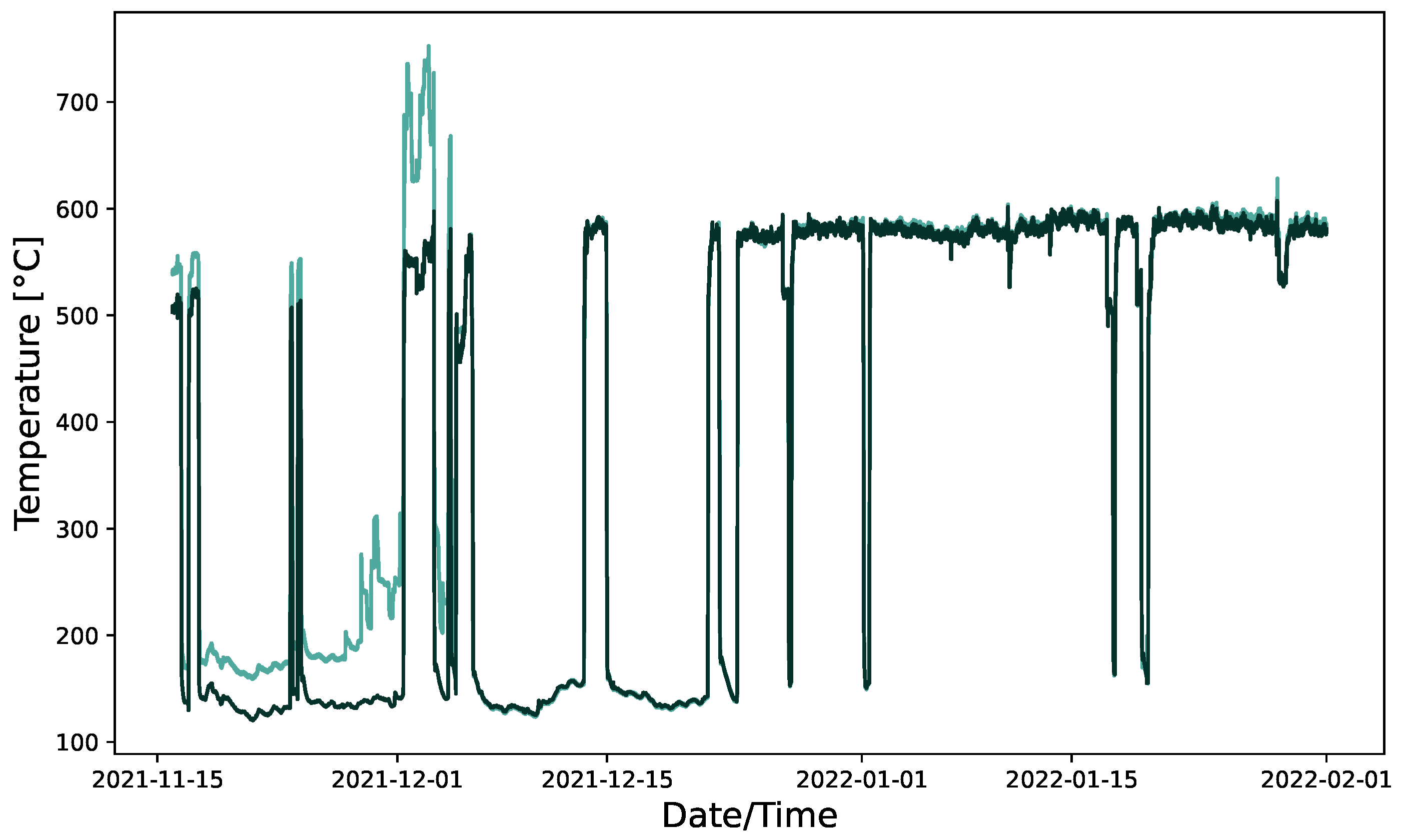

2. The Dataset

3. The Model

3.1. Selection of Input Sensors

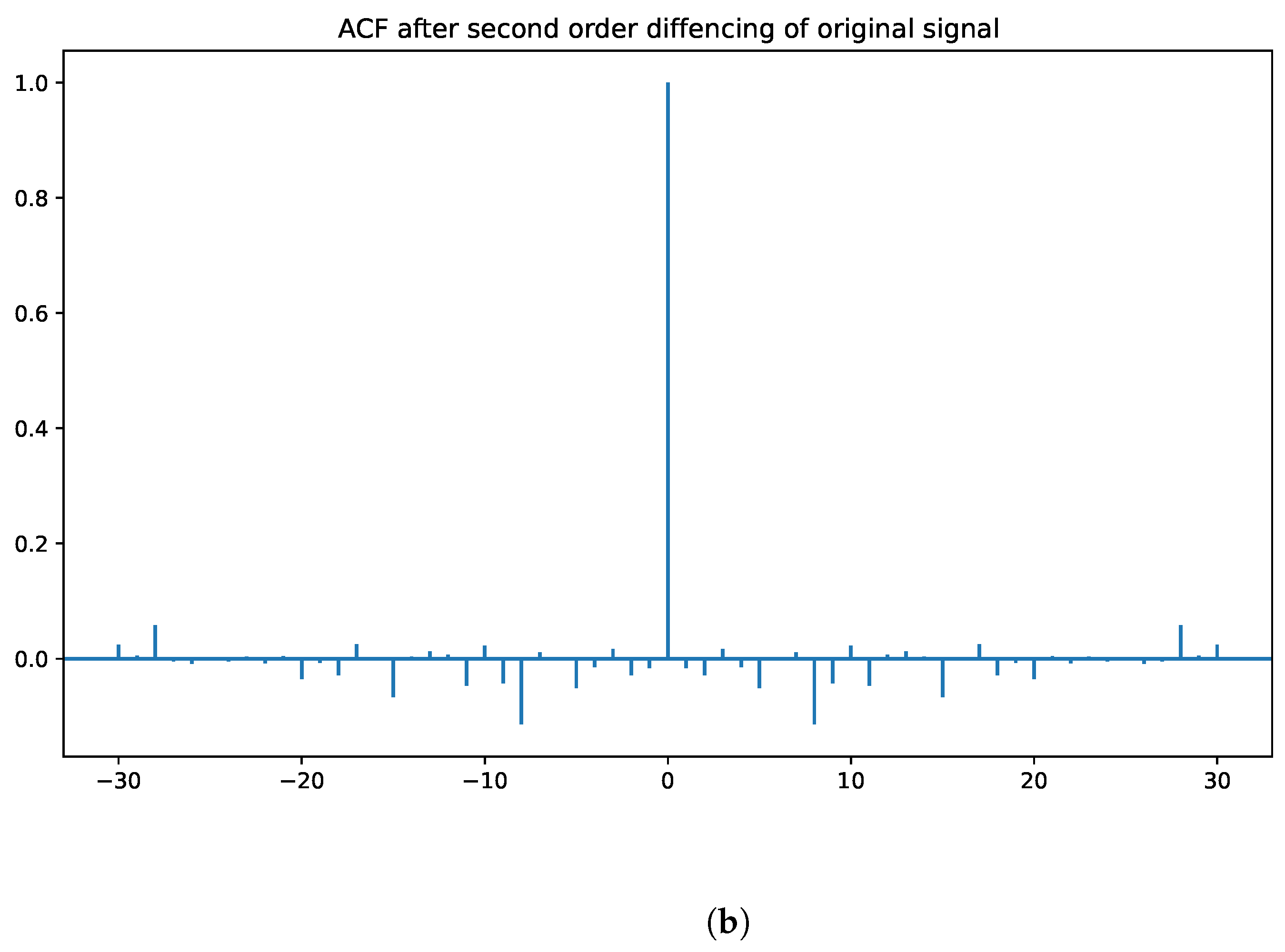

3.2. Selection of Lookback Window

3.3. Model Training

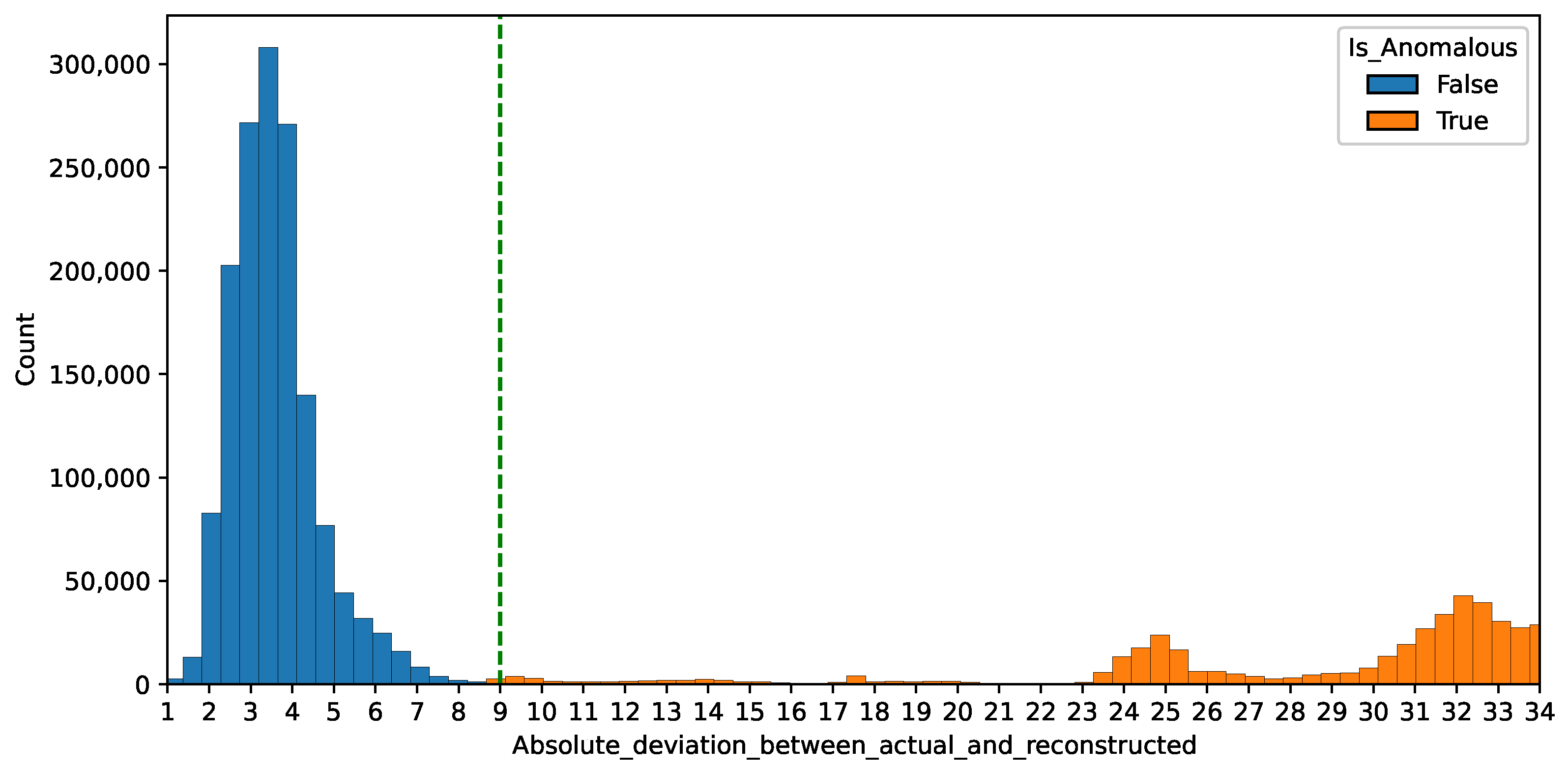

3.4. Inference Logic

4. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | ME | MAPE | P90 |
|---|---|---|---|
| Training set | 0.12 | 0.61 | 5.06 |
| Validation set (non-anomalous samples only) | 1.45 | 1.61 | 5.97 |
| Test set (non-anomalous samples only) | 1.89 | 0.65 | 6.52 |
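As a hedged illustration of how error figures like those in the table could be computed for one virtual sensor, the sketch below assumes that ME is the mean signed error between measured and virtual readings, MAPE the mean absolute percentage error, and P90 the 90th percentile of the absolute error in sensor units. These definitions are assumptions, not taken from the paper, and the function name `virtual_sensor_errors` is hypothetical.

```python
from statistics import mean, quantiles


def virtual_sensor_errors(measured: list[float], virtual: list[float]) -> tuple[float, float, float]:
    """Return (ME, MAPE, P90) of a virtual sensor against the physical reading.

    Assumed definitions (hypothetical, not from the paper):
      ME   = mean of (measured - virtual), in sensor units
      MAPE = mean of |measured - virtual| / |measured|, in %
      P90  = 90th percentile of |measured - virtual|, in sensor units
    """
    if len(measured) != len(virtual) or len(measured) < 2:
        raise ValueError("need two equally long series with at least two samples")
    # Positive error means the virtual sensor under-predicts the measurement.
    errors = [m - v for m, v in zip(measured, virtual)]
    abs_errors = [abs(e) for e in errors]
    me = mean(errors)
    # Percentage error is relative to the measured value, which must be non-zero.
    mape = 100.0 * mean(abs(e) / abs(m) for e, m in zip(errors, measured))
    # With n=10 the cut points are the deciles; the last one is the 90th percentile
    # (the "inclusive" method interpolates linearly between sorted samples).
    p90 = quantiles(abs_errors, n=10, method="inclusive")[-1]
    return me, mape, p90


print(virtual_sensor_errors([100.0, 200.0, 300.0, 400.0, 500.0],
                            [101.0, 198.0, 300.0, 404.0, 495.0]))
```

For errors of -1, +2, 0, -4 and +5 units, the sketch gives ME = 0.4, MAPE of about 0.8 % and P90 = 4.6. Because signed errors partly cancel in ME, ME alone understates the spread that MAPE and P90 capture.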

| Dataset | Precision | Recall |
|---|---|---|
| Full test set | 96% | 100% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shetty, S.; Gori, V.; Bagni, G.; Veneri, G. Sensor Virtualization for Anomaly Detection of Turbo-Machinery Sensors—An Industrial Application. Eng. Proc. 2023, 39, 96. https://doi.org/10.3390/engproc2023039096
