Design of the Speech Emotion Recognition Model †

Abstract

1. Introduction

2. Previous Research

2.1. Excitation Source Features

2.2. Prosodic Features

2.3. Spectral Features

2.4. Joint Features

3. Design of the Speech Emotion Recognition Model

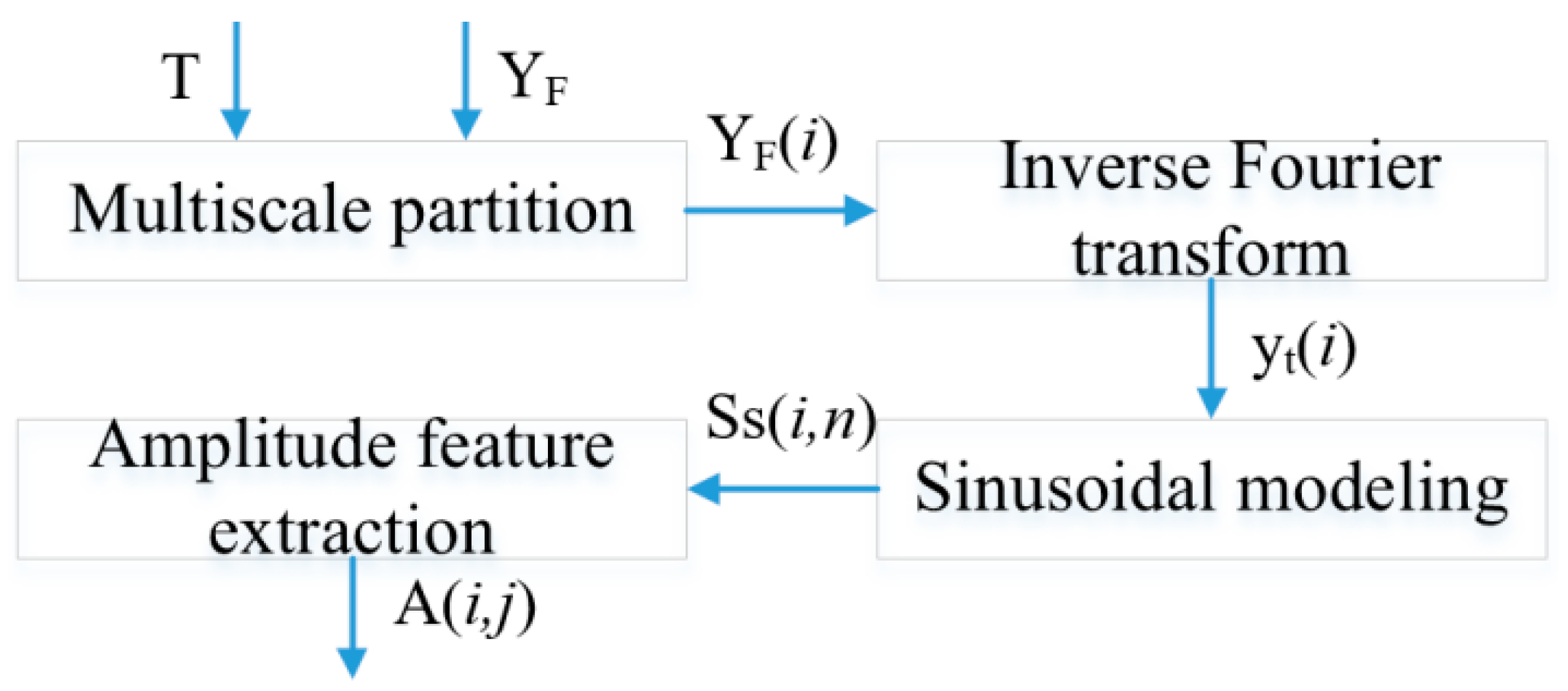

3.1. Multi-Scale Spectral Feature Extraction Model

3.2. Speech Emotion Recognition System

3.3. Feature Analysis

3.4. Feature Extraction

3.5. Emotion Recognition

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ke, H.; Luo, F.; Shi, M. Design of the Speech Emotion Recognition Model. Eng. Proc. 2023, 38, 86. https://doi.org/10.3390/engproc2023038086
