Deep Learning for Detecting Dangerous Objects in X-rays of Luggage

Abstract

1. Introduction

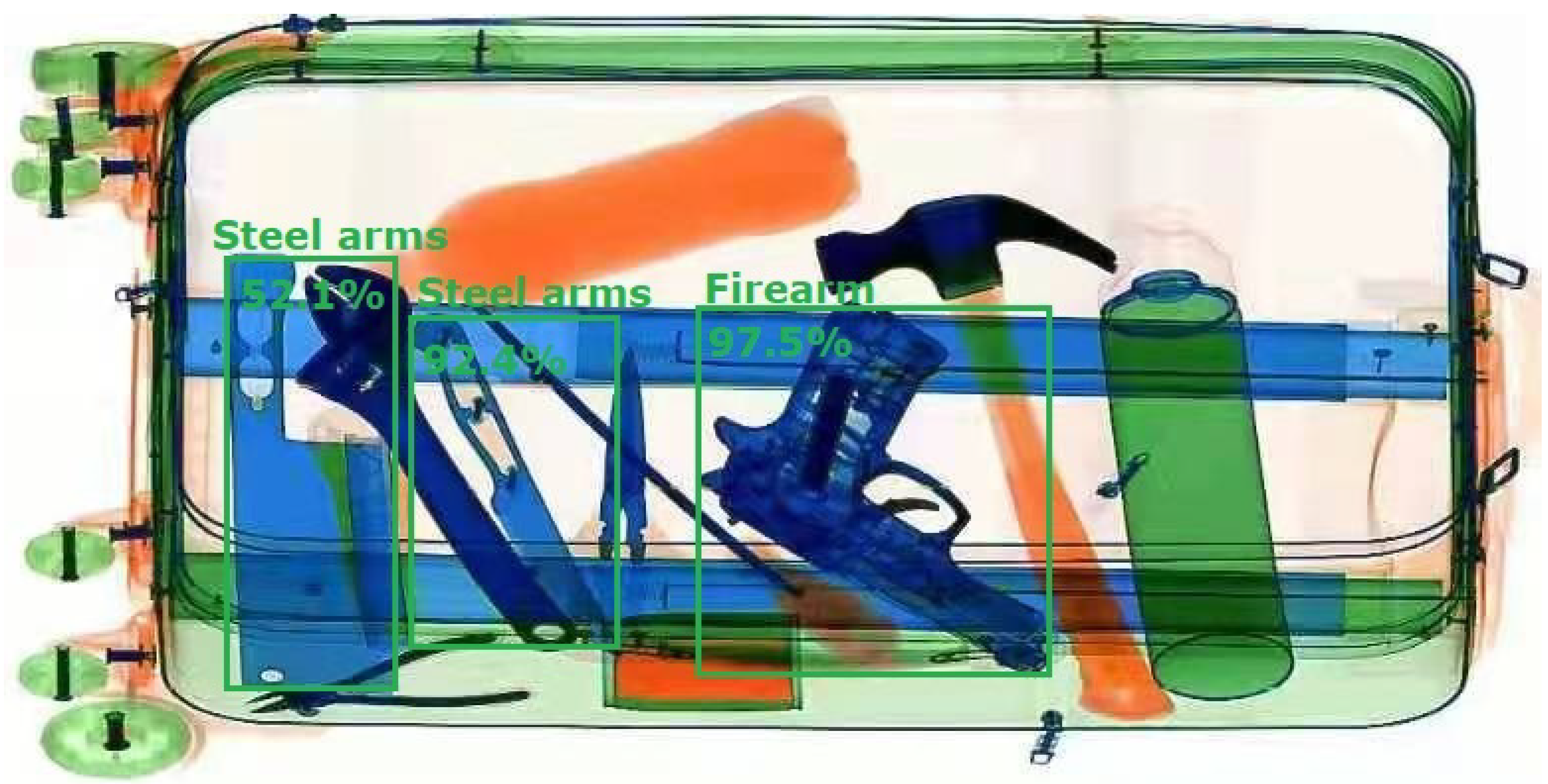

2. Description of the Baggage and Hand Luggage Images Set

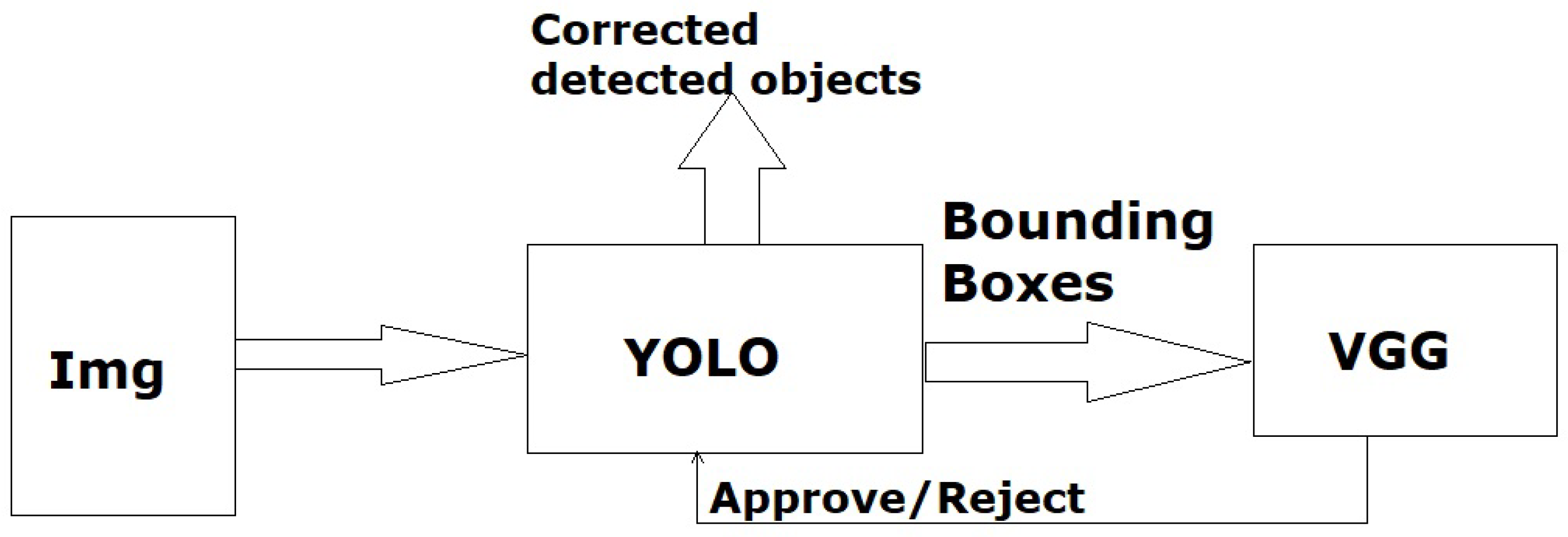

3. Methods for Detecting Dangerous Objects in Images

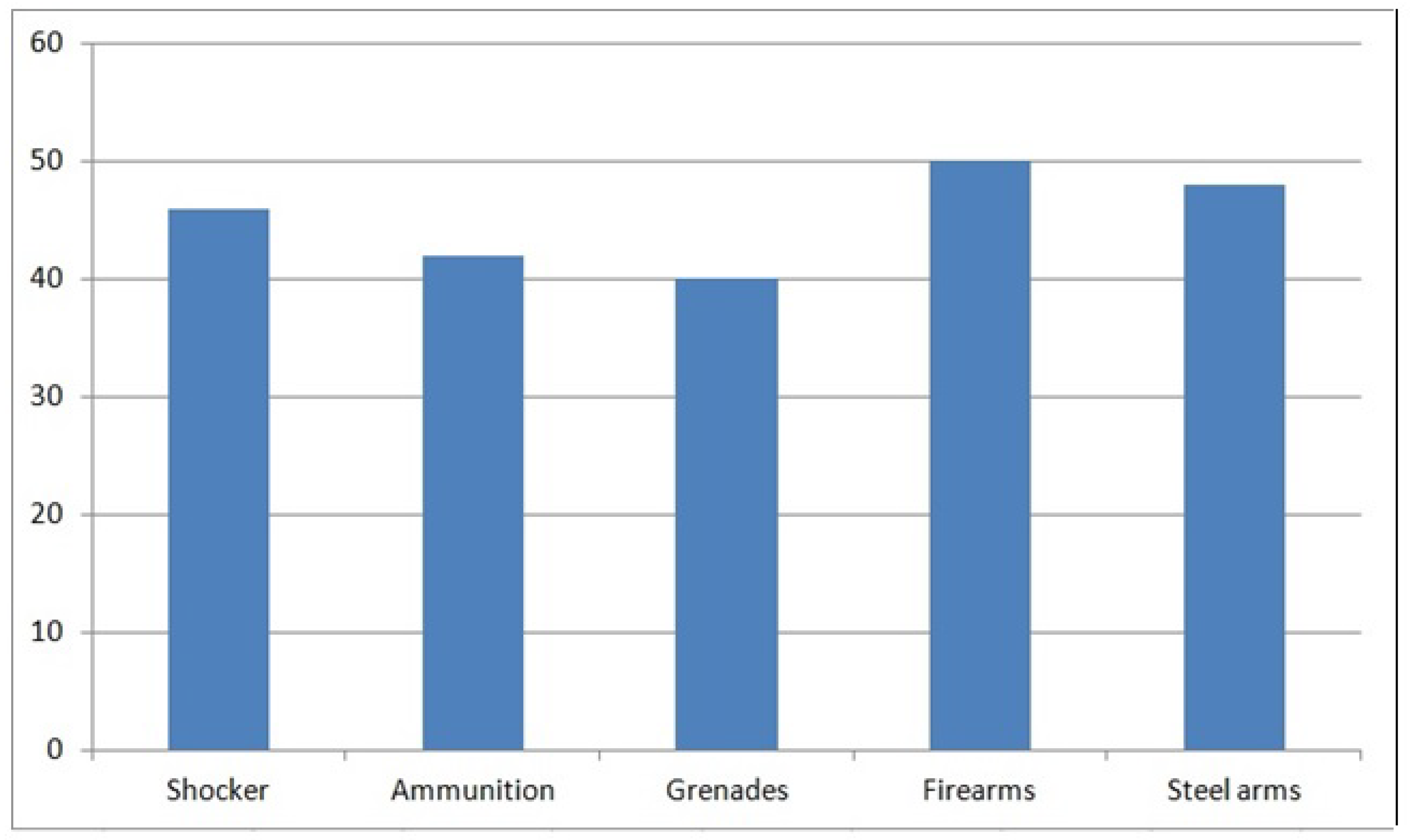

4. Results and Discussion

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Janssen, S.; van der Sommen, R.; Dilweg, A.; Sharpanskykh, A. Data-Driven Analysis of Airport Security Checkpoint Operations. Aerospace 2020, 7, 69.

- Kim, M.H.; Park, J.W.; Choi, Y.J. A Study on the Effects of Waiting Time for Airport Security Screening Service on Passengers’ Emotional Responses and Airport Image. Sustainability 2020, 12, 10634.

- Asmer, L.; Popa, A.; Koch, T.; Deutschmann, A.; Hellman, M. Secure rail station—Research on the effect of security checks on passenger flow. J. Rail Transp. Plan. Manag. 2019, 10, 9–22.

- Janssen, S.; Berg, A.; Sharpanskykh, A. Agent-based vulnerability assessment at airport security checkpoints: A case study on security operator behavior. Transp. Res. Interdiscip. Perspect. 2020, 5, 1–14.

- Andriyanov, N.A.; Dementiev, V.E.; Tashlinskii, A.G. Detection of objects in the images: From likelihood relationships towards scalable and efficient neural networks. Comput. Opt. 2022, 46, 139–159.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020, arXiv:2005.12872. Available online: https://arxiv.org/abs/2005.12872 (accessed on 27 July 2022).

- Andriyanov, N.A.; Volkov, A.K.; Volkov, A.K.; Gladkikh, A.A. Research of recognition accuracy of dangerous and safe X-ray baggage images using neural network transfer learning. IOP Conf. Ser. Mater. Sci. Eng. 2020, 1061, 012002.

- Hättenschwiler, N.; Michel, S.; Kuhn, M. A first exploratory study on the relevance of everyday object knowledge and training for increasing efficiency in airport security X-ray screening. IEEE ICCSTAt 2015, 49, 12–25.

- Andriyanov, N.A.; Volkov, A.K.; Volkov, A.K.; Gladkikh, A.A.; Danilov, S.D. Automatic X-ray image analysis for aviation security within limited computing resources. IOP Conf. Ser. Mater. Sci. Eng. 2020, 862, 052009.

- Hassan, T.; Shafay, M.; Akçay, S.; Khan, S.; Bennamoun, M.; Damiani, E.; Werghi, N. Meta-Transfer Learning Driven Tensor-Shot Detector for the Autonomous Localization and Recognition of Concealed Baggage Threats. Sensors 2020, 20, 6450.

- Computer Vision Annotation Toolbox. Available online: https://cvat.ai/ (accessed on 28 July 2022).

- Roboflow. Available online: https://app.roboflow.com/ (accessed on 28 July 2022).

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556. Available online: https://arxiv.org/abs/1409.1556 (accessed on 29 July 2022).

- YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 29 July 2022).

| Class | Train (N = 1200 samples) | Test (N = 300 samples) |
|---|---|---|
| Shocker | 122 | 34 |
| Ammunition | 109 | 28 |
| Grenades | 135 | 31 |
| Firearms | 180 | 46 |
| Steel arms | 166 | 37 |

| Model | Mean Average Recall (mAR) |
|---|---|
| YOLOv5 | 0.843 |
| SSD | 0.672 |
| DETR | 0.697 |
| Ours | 0.871 |
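Of the three baselines, YOLOv5 scores highest, and the proposed model improves on it by 0.871 − 0.843 = 0.028 in absolute mAR; SSD and DETR trail by a much wider margin. The short Python sketch below ranks the models and computes that gap directly from the table values (the variable names are illustrative).

```python
# mAR values copied from the table above.
mar = {"YOLOv5": 0.843, "SSD": 0.672, "DETR": 0.697, "Ours": 0.871}

# Strongest baseline, i.e. the best model other than the proposed one.
baselines = {name: score for name, score in mar.items() if name != "Ours"}
best_name = max(baselines, key=baselines.get)
gap = mar["Ours"] - baselines[best_name]

# Models ordered from highest to lowest mAR.
ranking = [name for name, _ in sorted(mar.items(), key=lambda kv: kv[1], reverse=True)]

if __name__ == "__main__":
    for name in ranking:
        print(f"{name:<7} {mar[name]:.3f}")
    print(f"best baseline: {best_name}, absolute gain: {gap:.3f}")
```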

| Class | Average Recall |
|---|---|
| Shocker | 0.912 |
| Ammunition | 0.714 |
| Grenades | 0.839 |
| Firearms | 1.000 |
| Steel arms | 0.892 |
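These per-class values are consistent with the "Ours" entry in the mAR table: their unweighted mean is 4.357 / 5 ≈ 0.871. The Python sketch below reproduces that figure, assuming mAR is the macro average over the five classes (the definition is not stated in this section), and identifies Ammunition as the class with the lowest recall. It uses `statistics.fmean` from the standard library (Python 3.8+); the variable names are illustrative.

```python
from statistics import fmean

# Per-class average recall copied from the table above.
class_recall = {
    "Shocker": 0.912,
    "Ammunition": 0.714,
    "Grenades": 0.839,
    "Firearms": 1.000,
    "Steel arms": 0.892,
}

# Assumption: mAR is the unweighted (macro) mean over classes,
# so every class counts equally regardless of its sample count.
mean_average_recall = fmean(class_recall.values())

# Class the detector misses most often.
weakest = min(class_recall, key=class_recall.get)

if __name__ == "__main__":
    print(f"mAR = {mean_average_recall:.3f}")
    print(f"lowest-recall class: {weakest}")
```

A macro average is used here because it matches the reported 0.871; a mean weighted by the test counts would give a different value.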

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Andriyanov, N. Deep Learning for Detecting Dangerous Objects in X-rays of Luggage. Eng. Proc. 2023, 33, 20. https://doi.org/10.3390/engproc2023033020