Generative Adversarial Networks for the Synthesis of Chest X-ray Images †

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Data

3.2. Image Pre-Processing

3.3. Deep Convolutional Generative Adversarial Network

3.4. Wasserstein Generative Adversarial Networks with Gradient Penalty (WGAN-GP)

3.5. Experiment Methodology with GANs for Image Generation

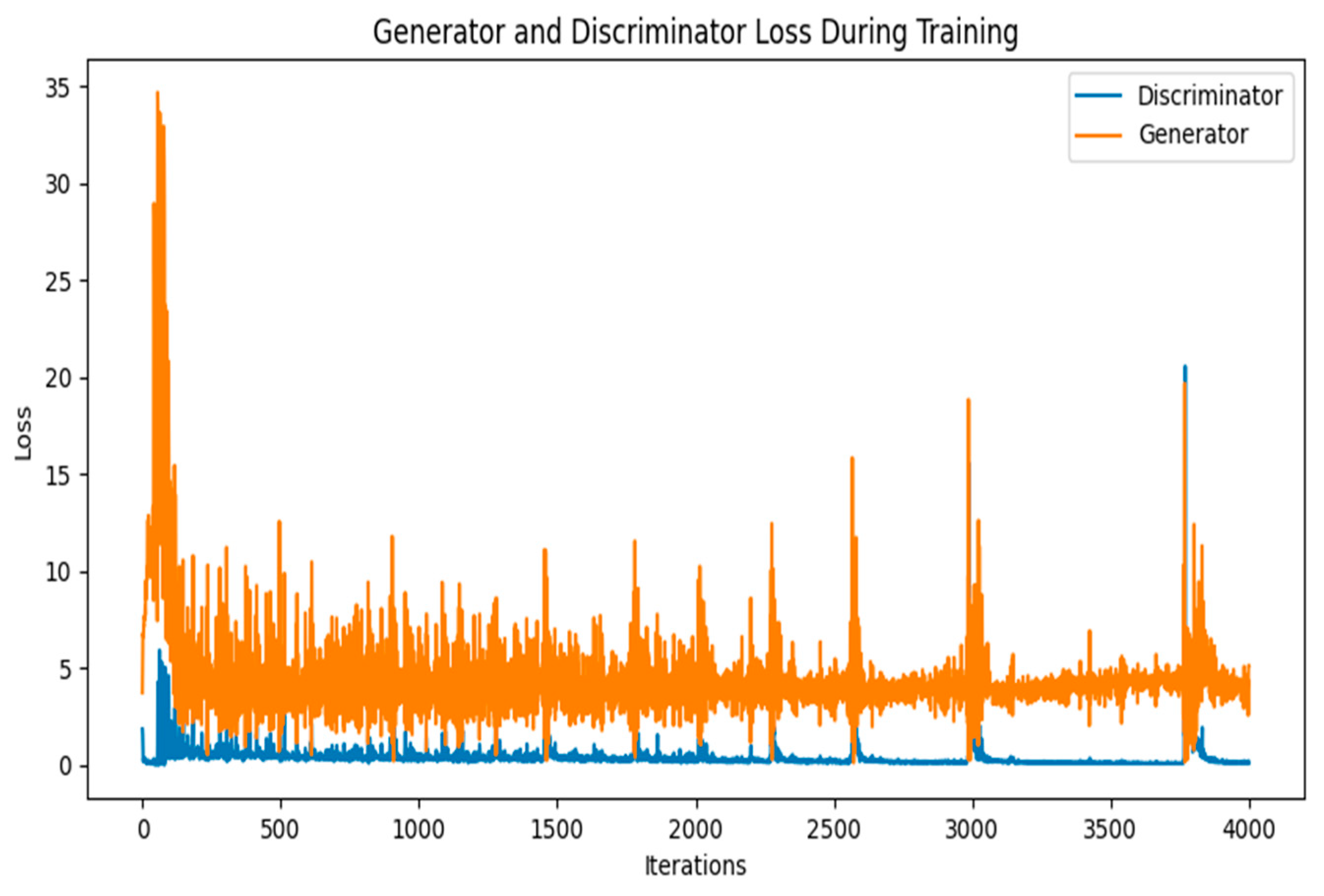

4. Comparison and Results of the DCGAN and the WGAN-GP

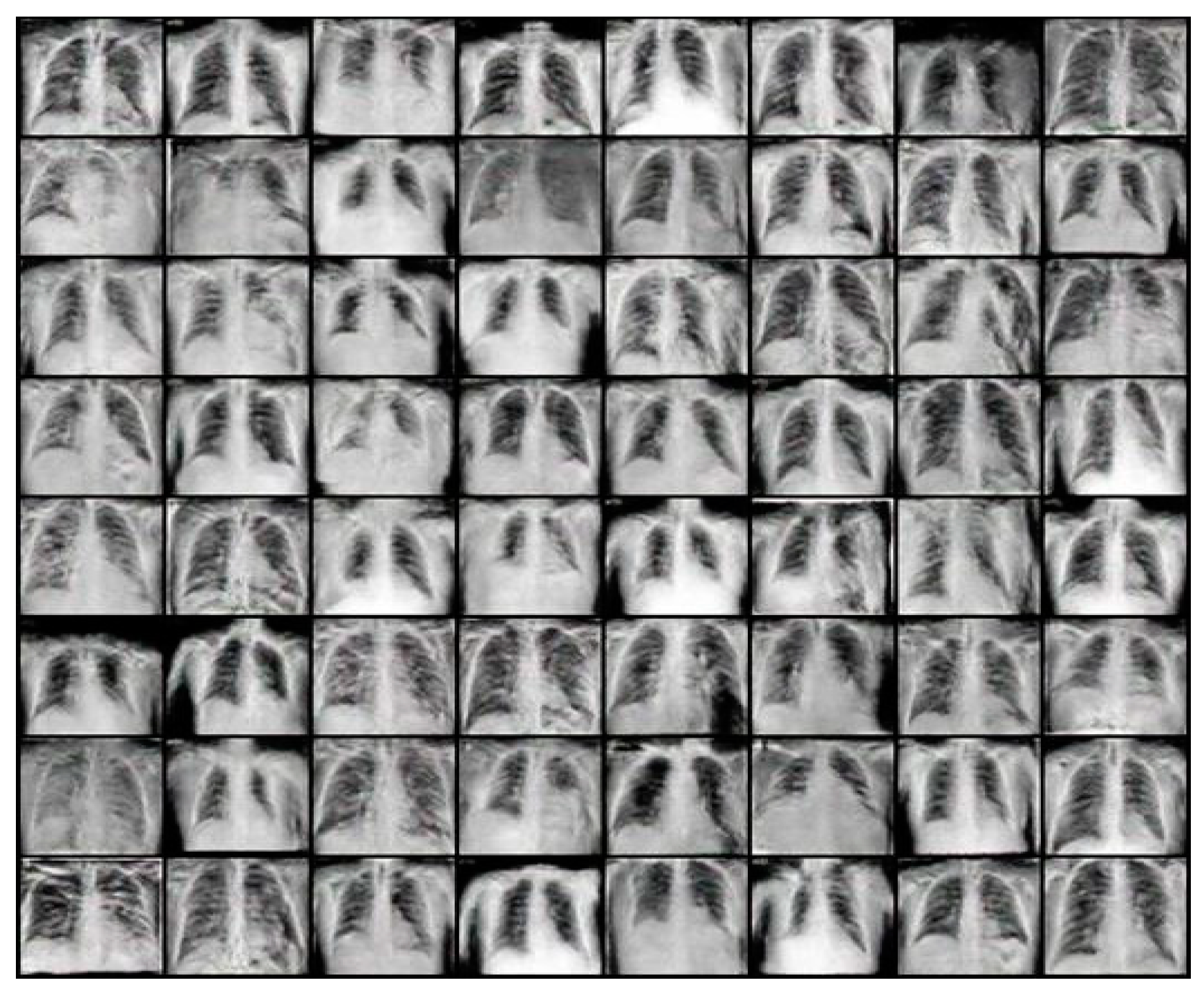

4.1. Results of the DCGAN

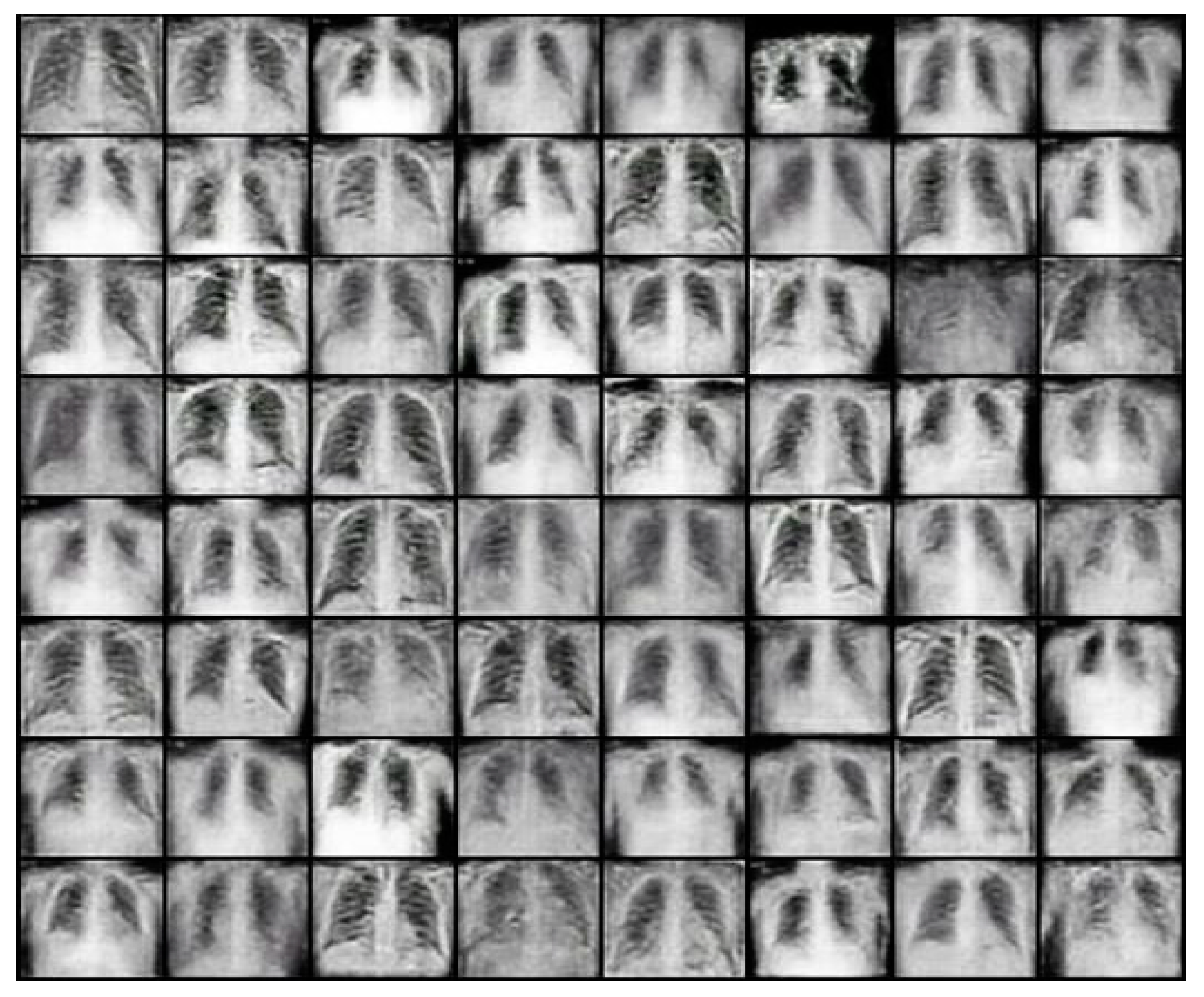

4.2. Results for the WGAN-GP

4.3. Comparison of the DCGAN and WGAN-GP

5. Limitations of GANs

6. Conclusions

7. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661.

- Albahli, S. Efficient GAN-Based Chest Radiographs (CXR) Augmentation to Diagnose Coronavirus Disease Pneumonia. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7330663/ (accessed on 27 February 2022).

- Venu, S.K.; Ravula, S. Evaluation of Deep Convolutional Generative Adversarial Networks for Data Augmentation of Chest X-ray Images. Available online: https://www.mdpi.com/1999-5903/13/1/8 (accessed on 1 March 2022).

- Sharmila, V.J. Deep Learning Algorithm for COVID-19 Classification Using Chest X-ray Images. NCBI. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8594989/ (accessed on 4 March 2022).

- Mann, P.; Jain, S.; Mittal, S.; Bhat, A. Generation of COVID-19 Chest CT Scan Images using Generative Adversarial Networks. arXiv 2021, arXiv:2105.11241.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. arXiv 2015, arXiv:1512.00567.

- Rehman, N.U.; Zia, M.S.; Meraj, T.; Rauf, H.T.; Damaševičius, R.; El-Sherbeeny, A.M.; El-Meligy, M.A. A self-activated CNN approach for multi-class chest-related COVID-19 detection. Appl. Sci. 2021, 11, 9023.

- Khan, M.A.; Alhaisoni, M.; Tariq, U.; Hussain, N.; Majid, A.; Damaševičius, R.; Maskeliūnas, R. COVID-19 case recognition from chest CT images by deep learning, entropy-controlled firefly optimization, and parallel feature fusion. Sensors 2021, 21, 7286.

- Allioui, H.; Mohammed, M.A.; Benameur, N.; Al-Khateeb, B.; Abdulkareem, K.H.; Garcia-Zapirain, B.; Damaševičius, R.; Maskeliūnas, R. A multi-agent deep reinforcement learning approach for enhancement of COVID-19 CT image segmentation. J. Pers. Med. 2022, 12, 309.

- Chowdhury, M.E.; Rahman, T.; Khandakar, A.; Mazhar, R.; Kadir, M.A.; Mahbub, Z.B.; Islam, K.R.; Khan, M.S.; Iqbal, A.; Emadi, N.A.; et al. Can AI help in screening viral and COVID-19 pneumonia? IEEE Access 2020, 8, 132665–132676.

- Rahman, T.; Khandakar, A.; Qiblawey, Y.; Tahir, A.; Kiranyaz, S.; Abul Kashem, S.B.; Islam, M.T.; Al Maadeed, S.; Zughaier, S.M.; Khan, M.S.; et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 2021, 132, 104319.

- Histograms—2: Histogram Equalization. OpenCV. Available online: https://docs.opencv.org/3.4/d5/daf/tutorial_py_histogram_equalization.html (accessed on 4 March 2022).

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015, arXiv:1511.06434.

- Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A. Improved Training of Wasserstein GANs. arXiv 2017, arXiv:1704.00028.

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. arXiv 2017, arXiv:1706.08500.

- DCGAN Tutorial. DCGAN Tutorial—PyTorch Tutorials 1.10.1+cu102 Documentation. Available online: https://pytorch.org/tutorials/beginner/dcgan_faces_tutorial.html (accessed on 4 March 2022).

- Tejanirla. Image_classification/transfer_learning.ipynb at Master Tejanirla/Image_Classification. GitHub. Available online: https://github.com/tejanirla/image_classification/blob/master/transfer_learning.ipynb (accessed on 4 March 2022).

- Seitzer, M. pytorch-fid: FID Score for PyTorch. Available online: https://github.com/mseitzer/pytorch-fid (accessed on 4 March 2022).

- Keras Documentation: Inceptionv3. Keras. Available online: https://keras.io/api/applications/inceptionv3/ (accessed on 4 March 2022).

- Aladdinpersson. Machine-Learning-Collection/ml/pytorch/gans/4.WGAN-GP at Aladdinpersson/Machine-Learning-Collection. GitHub. Available online: https://github.com/aladdinpersson/Machine-Learning-Collection (accessed on 4 March 2022).

| Dataset Size (Number of Actual COVID-19 Positive Images) | FID Score of the Generated Chest X-ray Images |
|---|---|
| 500 | 1.763 |
| 1000 | 1.494 |
| 1500 | 1.405 |
| 2000 | 1.249 |

| Epoch | FID Score |
|---|---|
| 500 | 1.451 |
| 600 | 1.415 |
| 700 | 1.399 |
| 800 | 1.640 |

| GAN Type | FID Score |
|---|---|
| DCGAN | 1.399 |
| WGAN-GP | 1.583 |

| Dataset | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Only COVID-19 positive | 0.96 | 0.94 | 0.97 | 0.95 |
| COVID-19 positive + images generated from DCGAN | 0.98 | 0.97 | 1.00 | 0.98 |
| COVID-19 positive + images generated from WGAN-GP | 0.98 | 0.99 | 0.98 | 0.98 |
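The F1 scores in the classification table above can be cross-checked against the reported precision and recall. The following is a minimal sketch, assuming the standard definition of F1 as the harmonic mean of precision and recall; the table does not state how the authors computed or averaged these metrics, and the function name `f1_score` and the `rows` dictionary are illustrative only.

```python
def f1_score(precision: float, recall: float) -> float:
    """Return the harmonic mean of precision and recall (standard F1)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
# Row labels are shortened for readability.
rows = {
    "Only COVID-19 positive": (0.94, 0.97, 0.95),
    "COVID-19 positive + DCGAN images": (0.97, 1.00, 0.98),
    "COVID-19 positive + WGAN-GP images": (0.99, 0.98, 0.98),
}

for name, (precision, recall, reported) in rows.items():
    computed = f1_score(precision, recall)
    print(f"{name}: computed F1 = {computed:.2f}, reported F1 = {reported:.2f}")
```

Under this assumption, the computed values agree with the reported F1 scores to two decimal places for all three rows.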

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ng, M.F.; Hargreaves, C.A. Generative Adversarial Networks for the Synthesis of Chest X-ray Images. Eng. Proc. 2023, 31, 84. https://doi.org/10.3390/ASEC2022-13954