Deep Learning for the Prediction of Temperature Time Series in the Lining of an Electric Arc Furnace for Structural Health Monitoring at Cerro Matoso (CMSA) †

Abstract

1. Introduction

2. Materials and Methods

2.1. Data

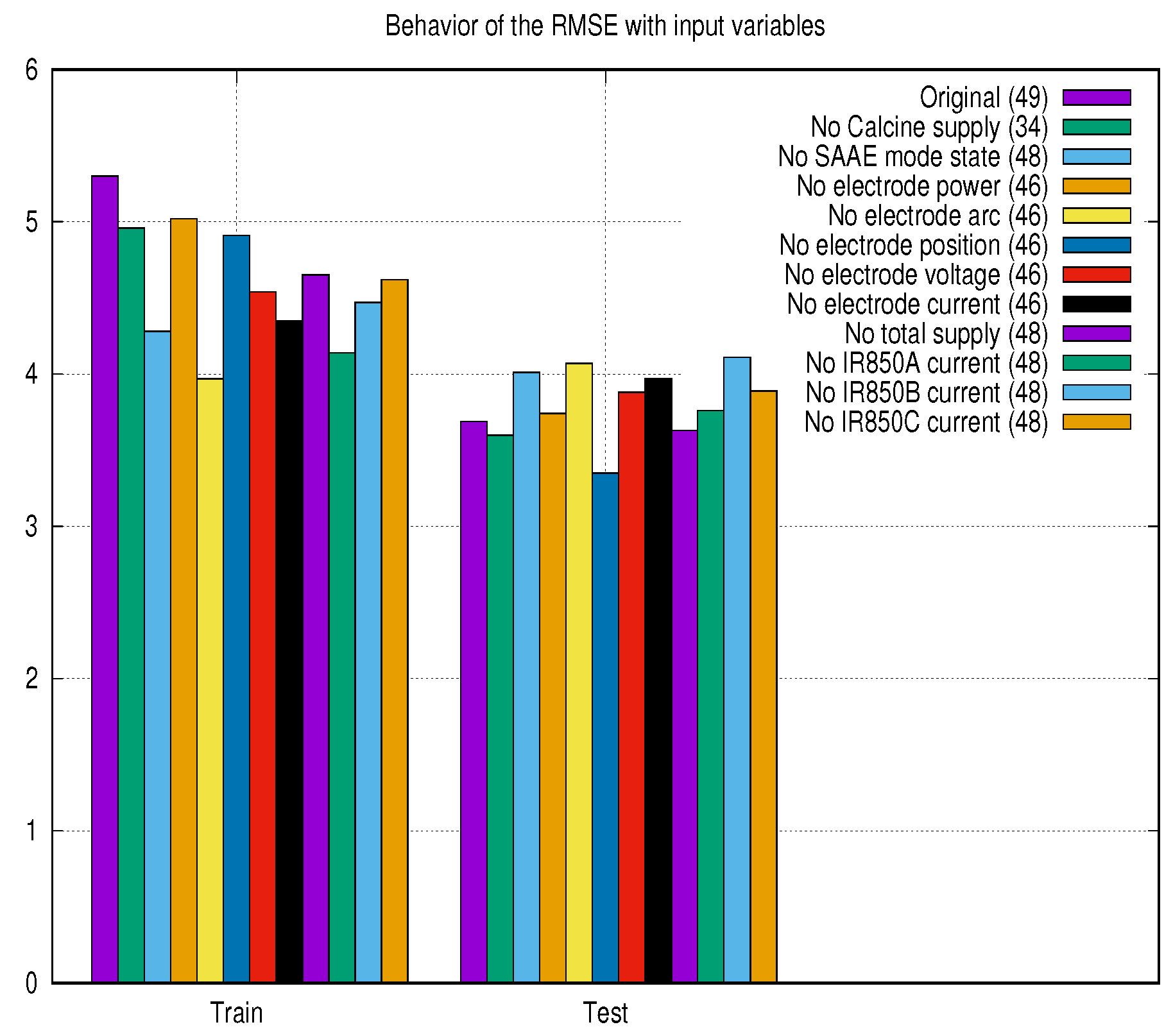

- Input variables: 49 input variables, comprising electrode current, electrode voltage, electrode relative position, electrode arc, electric furnace power, electrode power, total calcine feed per hour, calcine chemical composition, and thermocouple temperatures by furnace sector and position.

- Sampling period: Each input variable was sampled in 15-min windows.

- Output variables: 16 output variables, corresponding to 16 thermocouples in the furnace lining. The thermocouples form four groups spaced 90° apart around the furnace, and the four thermocouples in each group sit at four different heights of the lining.
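Recurrent and 1-D convolutional models such as those compared in the results typically consume windows of consecutive past samples rather than single rows. As a minimal sketch, assuming the data are stored as one row per 15-min sample (49 input values and 16 thermocouple targets per row), a sliding window can pair each run of past input rows with the target row that follows it. The helper `make_windows`, the window length `lookback` and the forecast `horizon` are hypothetical; the source does not state the values the authors used.

```python
from typing import List, Sequence, Tuple

N_INPUTS = 49        # input variables listed above
N_OUTPUTS = 16       # lining thermocouples to predict
SAMPLE_MINUTES = 15  # assumed: one row per 15-min window


def make_windows(
    inputs: Sequence[Sequence[float]],
    targets: Sequence[Sequence[float]],
    lookback: int,
    horizon: int = 1,
) -> Tuple[List[List[List[float]]], List[List[float]]]:
    """Pair `lookback` consecutive input rows with the target row `horizon` steps later.

    `lookback` and `horizon` are hypothetical parameters chosen for illustration.
    """
    if len(inputs) != len(targets):
        raise ValueError("inputs and targets must have the same number of rows")
    xs: List[List[List[float]]] = []
    ys: List[List[float]] = []
    # Window covers rows end-lookback .. end-1; its target is row end+horizon-1.
    for end in range(lookback, len(inputs) - horizon + 1):
        xs.append([list(row) for row in inputs[end - lookback:end]])
        ys.append(list(targets[end + horizon - 1]))
    return xs, ys


if __name__ == "__main__":
    rows = 10
    X = [[float(t)] * N_INPUTS for t in range(rows)]   # synthetic placeholder data
    Y = [[float(t)] * N_OUTPUTS for t in range(rows)]
    lookback = 4  # hypothetical: 4 samples x 15 min = 1 hour of history
    xs, ys = make_windows(X, Y, lookback=lookback)
    print(len(xs), "windows covering", lookback * SAMPLE_MINUTES / 60, "h each")
```

With 10 rows, `lookback=4` and `horizon=1` this yields 6 windows of shape 4 × 49, each paired with a 16-value target row.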

2.2. Predictive Methods

2.3. Development of the Deep Learning Models

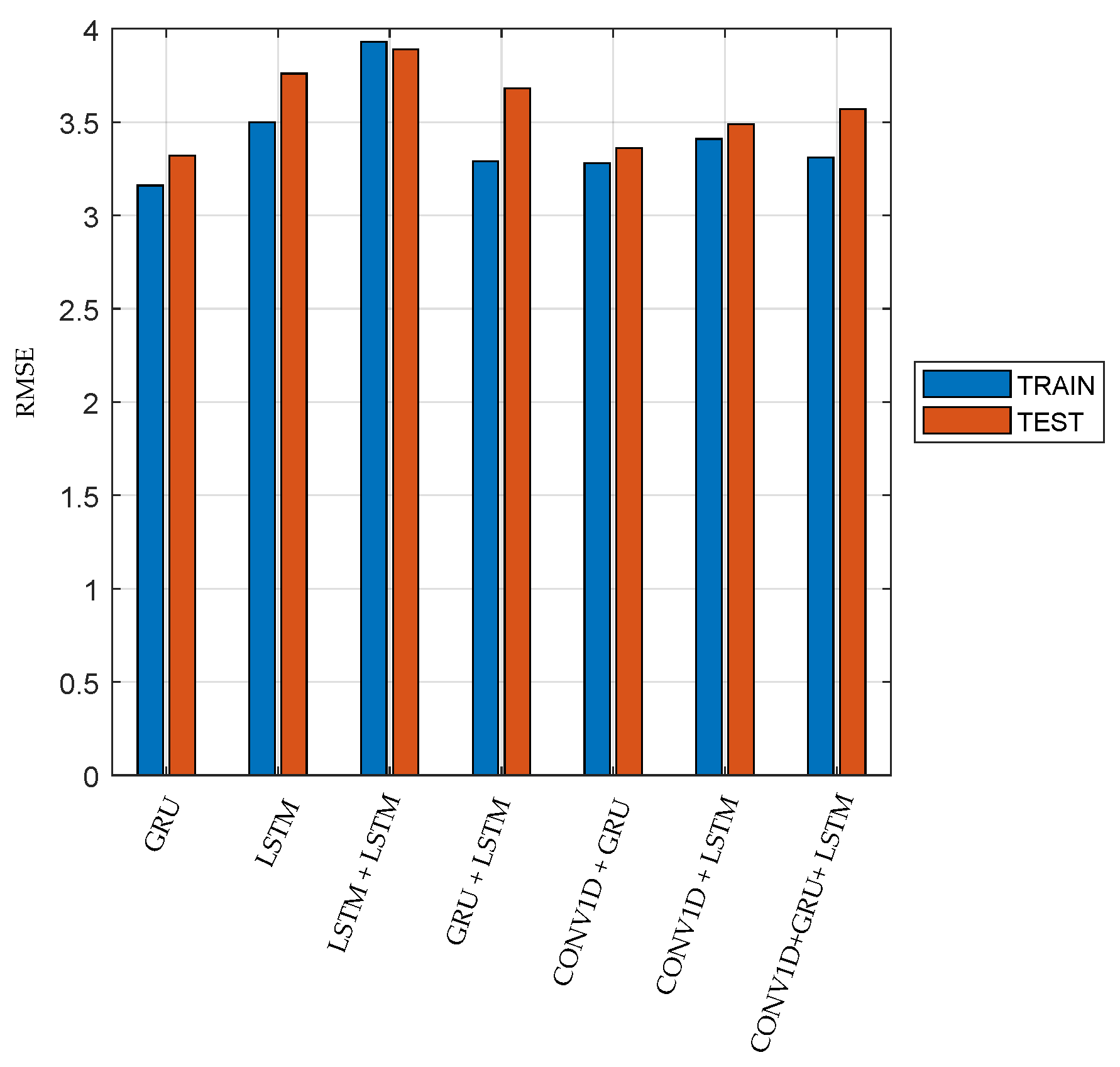

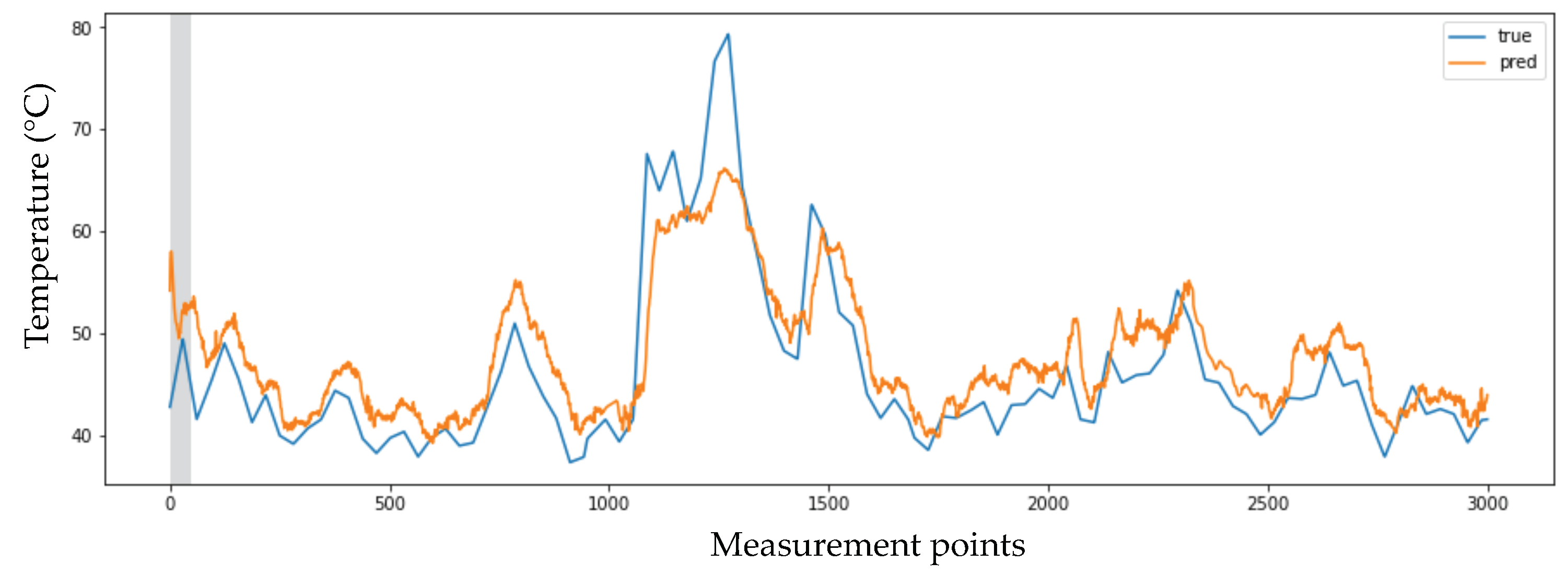
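The architectures compared in the results table stack GRU, LSTM and 1-D convolutional (CONV1D) layers, but their layer sizes are not recoverable from this text. The following plain-Python sketch shows what a single GRU layer followed by a linear output head computes for one window of the 49 inputs, producing one value per thermocouple (16 outputs). It assumes one common GRU formulation, in which the update gate `z` weights the previous state and the reset gate `r` scales the state before the recurrent product. It also assumes an arbitrary hidden size of 8, a 4-step window and small random weights. `init_gru`, `gru_step` and `gru_forecast` are hypothetical helpers, not the authors' code, and training is omitted.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product on nested lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def init_gru(n_in: int, n_hidden: int, seed: int = 0, scale: float = 0.1) -> Dict[str, list]:
    """Hypothetical uniform initialisation; real frameworks use other schemes."""
    rng = random.Random(seed)

    def mat(rows: int, cols: int) -> Matrix:
        return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

    params: Dict[str, list] = {}
    for gate in ("z", "r", "h"):  # update gate, reset gate, candidate state
        params["W" + gate] = mat(n_hidden, n_in)      # input weights
        params["U" + gate] = mat(n_hidden, n_hidden)  # recurrent weights
        params["b" + gate] = [0.0] * n_hidden         # biases
    return params


def gru_step(x: Vector, h: Vector, p: Dict[str, list]) -> Vector:
    """One GRU update: h_new = z * h + (1 - z) * candidate."""
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["Wz"], x), matvec(p["Uz"], h), p["bz"])]
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["Wr"], x), matvec(p["Ur"], h), p["br"])]
    reset_h = [ri * hi for ri, hi in zip(r, h)]  # reset gate scales the old state
    cand = [math.tanh(a + b + c)
            for a, b, c in zip(matvec(p["Wh"], x), matvec(p["Uh"], reset_h), p["bh"])]
    return [zi * hi + (1.0 - zi) * ci for zi, hi, ci in zip(z, h, cand)]


def gru_forecast(window: List[Vector], p: Dict[str, list], head_w: Matrix, head_b: Vector) -> Vector:
    """Run the GRU over the window and map the last hidden state to the outputs."""
    h = [0.0] * len(p["bz"])
    for x in window:
        h = gru_step(x, h, p)
    return [a + b for a, b in zip(matvec(head_w, h), head_b)]


if __name__ == "__main__":
    N_IN, N_HIDDEN, N_OUT, LOOKBACK = 49, 8, 16, 4  # N_HIDDEN and LOOKBACK are arbitrary
    params = init_gru(N_IN, N_HIDDEN)
    rng = random.Random(1)
    head_w = [[rng.uniform(-0.1, 0.1) for _ in range(N_HIDDEN)] for _ in range(N_OUT)]
    head_b = [0.0] * N_OUT
    window = [[rng.random() for _ in range(N_IN)] for _ in range(LOOKBACK)]
    print(len(gru_forecast(window, params, head_w, head_b)))  # 16 outputs, one per thermocouple
```

Because the new state is a convex combination of the old state and a `tanh` candidate, a GRU started from zeros keeps every hidden value strictly inside (-1, 1). The stacked variants in the table (for example GRU+LSTM or CONV1D+GRU) would feed one layer's output sequence into the next before the output head.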

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Thermocouple (T) | GRU Train | GRU Test | LSTM Train | LSTM Test | LSTM+LSTM Train | LSTM+LSTM Test | GRU+LSTM Train | GRU+LSTM Test | CONV1D+GRU Train | CONV1D+GRU Test | CONV1D+LSTM Train | CONV1D+LSTM Test | CONV1D+GRU+LSTM Train | CONV1D+GRU+LSTM Test |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T1 (L4,NW) (42.05 ± 5.18) | 2.14 | 4.03 | 2.43 | 5.21 | 2.42 | 5.07 | 2.15 | 4.50 | 2.08 | 4.19 | 2.29 | 4.98 | 2.15 | 4.44 |
| T2 (L4,SW) (47.73 ± 6.82) | 2.73 | 3.70 | 3.13 | 4.22 | 3.16 | 4.05 | 2.73 | 4.12 | 2.86 | 3.82 | 3.04 | 4.21 | 2.81 | 3.80 |
| T3 (L4,SE) (45.19 ± 3.23) | 2.25 | 2.51 | 2.40 | 3.05 | 2.52 | 3.04 | 2.23 | 2.88 | 2.23 | 2.46 | 2.27 | 2.69 | 2.23 | 2.63 |
| T4 (L4,NE) (41.88 ± 2.82) | 1.46 | 1.23 | 1.66 | 1.35 | 1.75 | 1.58 | 1.47 | 1.31 | 1.46 | 1.26 | 1.64 | 1.21 | 1.53 | 1.55 |
| T5 (L3,NW) (48.86 ± 9.71) | 3.45 | 4.65 | 3.89 | 5.85 | 3.76 | 5.68 | 3.36 | 5.05 | 3.21 | 4.47 | 3.63 | 5.33 | 3.41 | 4.47 |
| T6 (L3,SW) (54.08 ± 10.33) | 3.72 | 4.70 | 4.67 | 5.24 | 4.56 | 4.98 | 3.87 | 5.51 | 3.98 | 5.56 | 4.23 | 4.99 | 4.08 | 5.77 |
| T7 (L3,SE) (46.65 ± 4.69) | 2.57 | 3.81 | 2.76 | 4.53 | 2.89 | 4.15 | 2.47 | 3.68 | 2.61 | 3.73 | 2.71 | 3.70 | 2.57 | 3.57 |
| T8 (L3,NE) (42.23 ± 3.37) | 1.66 | 1.41 | 1.83 | 1.55 | 1.90 | 1.75 | 1.72 | 1.40 | 1.64 | 1.51 | 1.73 | 1.32 | 1.67 | 1.54 |
| T9 (L2,NW) (50.13 ± 6.92) | 2.40 | 2.49 | 2.77 | 2.82 | 2.80 | 3.20 | 2.41 | 2.53 | 2.31 | 2.28 | 2.59 | 2.65 | 2.40 | 2.54 |
| T10 (L2,SW) (53.70 ± 6.92) | 2.59 | 2.34 | 3.29 | 2.93 | 3.08 | 2.78 | 2.64 | 2.55 | 2.73 | 2.50 | 2.85 | 2.38 | 2.86 | 2.84 |
| T11 (L2,SE) (49.32 ± 4.96) | 2.52 | 3.02 | 2.91 | 3.62 | 2.90 | 3.55 | 2.52 | 3.18 | 2.65 | 3.19 | 2.80 | 3.43 | 2.59 | 3.61 |
| T12 (L2,NE) (44.72 ± 3.65) | 1.70 | 1.77 | 1.94 | 1.52 | 1.87 | 2.00 | 1.70 | 1.78 | 1.69 | 1.87 | 1.77 | 1.85 | 1.71 | 1.99 |
| T13 (L1,NW) (75.21 ± 18.32) | 7.58 | 8.64 | 8.76 | 9.52 | 8.29 | 9.02 | 7.68 | 10.11 | 7.56 | 7.96 | 8.14 | 9.40 | 7.54 | 8.70 |
| T14 (L1,SW) (81.17 ± 18.72) | 6.84 | 7.24 | 8.66 | 9.30 | 8.09 | 7.98 | 6.96 | 7.40 | 7.03 | 7.37 | 7.50 | 7.14 | 7.39 | 7.95 |
| T15 (L1,SE) (64.96 ± 9.44) | 4.24 | 6.07 | 5.35 | 7.08 | 5.20 | 6.81 | 4.37 | 6.37 | 4.62 | 5.96 | 4.70 | 6.74 | 4.49 | 6.60 |
| T16 (L1,NE) (58.86 ± 7.39) | 2.92 | 3.80 | 3.62 | 3.01 | 3.40 | 4.34 | 3.06 | 4.26 | 3.05 | 4.62 | 3.19 | 3.84 | 3.14 | 4.57 |
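The table reports train and test errors of seven architectures for each of the 16 thermocouples, but the surviving text does not name the error metric. The following sketch compares the architectures at a glance. It averages each model's test column over T1 to T16 and lists the lowest-error model per thermocouple. The values are transcribed from the table. The unweighted mean and the helper names `mean_error_by_model` and `best_model_per_thermocouple` are choices made for this illustration, not a ranking given by the authors.

```python
from statistics import mean
from typing import Dict, List

# Test-set error values for T1..T16, transcribed column by column from the table above.
# The metric is not named in the text, so the numbers are treated as generic errors.
TEST_ERRORS: Dict[str, List[float]] = {
    "GRU": [4.03, 3.70, 2.51, 1.23, 4.65, 4.70, 3.81, 1.41, 2.49, 2.34, 3.02, 1.77, 8.64, 7.24, 6.07, 3.80],
    "LSTM": [5.21, 4.22, 3.05, 1.35, 5.85, 5.24, 4.53, 1.55, 2.82, 2.93, 3.62, 1.52, 9.52, 9.30, 7.08, 3.01],
    "LSTM+LSTM": [5.07, 4.05, 3.04, 1.58, 5.68, 4.98, 4.15, 1.75, 3.20, 2.78, 3.55, 2.00, 9.02, 7.98, 6.81, 4.34],
    "GRU+LSTM": [4.50, 4.12, 2.88, 1.31, 5.05, 5.51, 3.68, 1.40, 2.53, 2.55, 3.18, 1.78, 10.11, 7.40, 6.37, 4.26],
    "CONV1D+GRU": [4.19, 3.82, 2.46, 1.26, 4.47, 5.56, 3.73, 1.51, 2.28, 2.50, 3.19, 1.87, 7.96, 7.37, 5.96, 4.62],
    "CONV1D+LSTM": [4.98, 4.21, 2.69, 1.21, 5.33, 4.99, 3.70, 1.32, 2.65, 2.38, 3.43, 1.85, 9.40, 7.14, 6.74, 3.84],
    "CONV1D+GRU+LSTM": [4.44, 3.80, 2.63, 1.55, 4.47, 5.77, 3.57, 1.54, 2.54, 2.84, 3.61, 1.99, 8.70, 7.95, 6.60, 4.57],
}


def mean_error_by_model(table: Dict[str, List[float]]) -> Dict[str, float]:
    """Unweighted mean over the thermocouples (a summary added here, not from the paper)."""
    return {model: mean(errors) for model, errors in table.items()}


def best_model_per_thermocouple(table: Dict[str, List[float]]) -> List[str]:
    """Model with the lowest test error for each thermocouple (first listed wins ties)."""
    n = len(next(iter(table.values())))
    return [min(table, key=lambda m: table[m][i]) for i in range(n)]


if __name__ == "__main__":
    means = mean_error_by_model(TEST_ERRORS)
    for model in sorted(means, key=means.get):
        print(f"{model:<16} {means[model]:.3f}")
    for i, model in enumerate(best_model_per_thermocouple(TEST_ERRORS), start=1):
        print(f"T{i}: {model}")
```

On these numbers, GRU has the lowest unweighted mean test error (about 3.84), followed by CONV1D+GRU (about 3.92). However, the best model differs from one thermocouple to the next. An unweighted mean also gives equal weight to every sensor. T13 and T14 (level L1) have the largest spread and the largest errors, so the per-thermocouple breakdown should be read alongside the average.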

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Leon-Medina, J.X.; Vargas, R.C.G.; Gutierrez-Osorio, C.; Jimenez, D.A.G.; Cardenas, D.A.V.; Torres, J.E.S.; Camacho-Olarte, J.; Rueda, B.; Vargas, W.; Esmeral, J.S.; et al. Deep Learning for the Prediction of Temperature Time Series in the Lining of an Electric Arc Furnace for Structural Health Monitoring at Cerro Matoso (CMSA). Eng. Proc. 2020, 2, 23. https://doi.org/10.3390/ecsa-7-08246


Chicago/Turabian Style: Leon-Medina, Jersson X., Ricardo Cesar Gomez Vargas, Camilo Gutierrez-Osorio, Daniel Alfonso Garavito Jimenez, Diego Alexander Velandia Cardenas, Julián Esteban Salomón Torres, Jaiber Camacho-Olarte, Bernardo Rueda, Whilmar Vargas, Jorge Sofrony Esmeral, et al. 2020. "Deep Learning for the Prediction of Temperature Time Series in the Lining of an Electric Arc Furnace for Structural Health Monitoring at Cerro Matoso (CMSA)" Engineering Proceedings 2, no. 1: 23. https://doi.org/10.3390/ecsa-7-08246

APA Style: Leon-Medina, J. X., Vargas, R. C. G., Gutierrez-Osorio, C., Jimenez, D. A. G., Cardenas, D. A. V., Torres, J. E. S., Camacho-Olarte, J., Rueda, B., Vargas, W., Esmeral, J. S., Restrepo-Calle, F., Burgos, D. A. T., & Bonilla, C. P. (2020). Deep Learning for the Prediction of Temperature Time Series in the Lining of an Electric Arc Furnace for Structural Health Monitoring at Cerro Matoso (CMSA). Engineering Proceedings, 2(1), 23. https://doi.org/10.3390/ecsa-7-08246