A Data Cleaning Approach for a Structural Health Monitoring System in a 75 MW Electric Arc Ferronickel Furnace †

Abstract

:1. Introduction

2. Materials and Methods

2.1. Dataset

2.2. Data Cleaning Process

- When observing values outside the operational range, a general premise of data quality is determined, which establishes that those data should not be taken into account.

- There is only one categorical variable, which represents the furnace’s operating mode: manual or automatic. Thus, the other variables must be numerical.

- The existence of negative values in the temperature data should not be considered, since the ferronickel smelting process is carried out at high temperatures, and nowhere there are values below 0 C. Hence, a negative temperature can be seen as an instrumentation failure. Similarly, peaks of positive values that are above the normal operating range can also be considered in the same way (outliers) and should be discarded.

- When sensors fail and provide erroneous data, false alarms occur for that reason. Hence, some variables have been manually manipulated within the data, remaining at a fixed value during some time, while the damaged sensor is repaired.

- Due to the heat transfer process present in the furnace, especially in the refrigeration system, it is not possible to have large temperature variations in short periods of time. Therefore, data with high variability correspond to possible faults in the instrumentation, and they do not represent the real behavior of the furnace.

- In order to know the normal behavior of the data, together with experts, time windows were defined where the furnace had a stable operation. This permitted us to select small subsets of data, where it was possible to extract the normal behaviour of the variables.

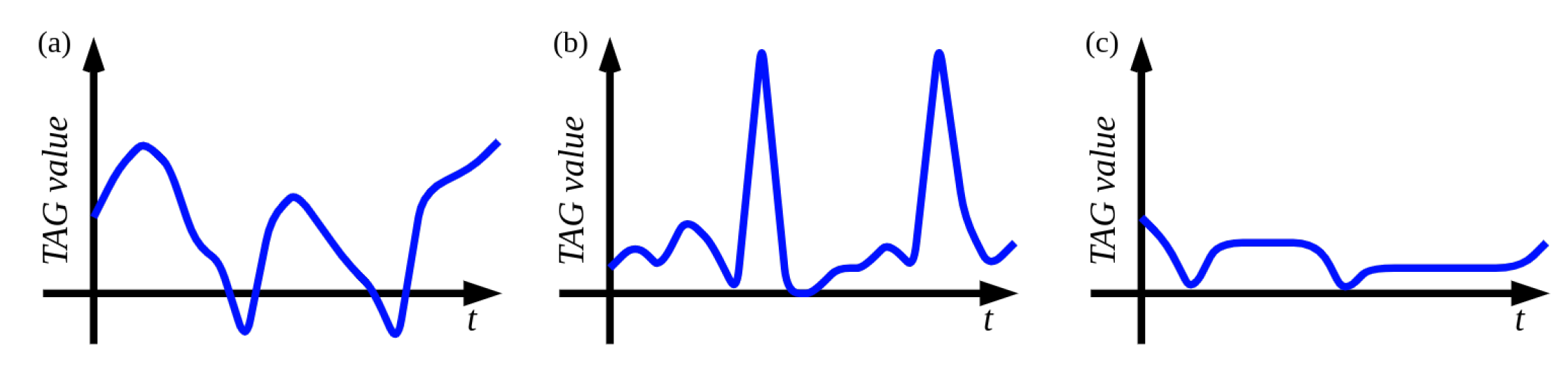

- Remove duplicates: the first rule consists of removing duplicated variables (see Figure 3a).

- Empty and null values: ehen a variable (TAG) presents more than 98% of missing values, it means that the data collected is from a short period of time compared to the total. Thus, these TAGs should be discarded (see Figure 3b).

- Unique values:TAGs that remain at the same value throughout the time do not provide any useful information. Hence, all of the variables that present a single value in the 175,297 records should be eliminated (see Figure 3c).

- Strings: given that there are non-numeric values in the dataset, all the strings found are extracted to identify with the experts their relevance. It is decided to encode them with numerical values and, in some cases, to remove them and reuse the Empty and null values rule (see Figure 3d).

- Negative temperatures: the normal operating ranges of temperatures in the furnace do not include values below zero and, for this reason, the TAGs that present this problem are removed (Figure 4a).

- High variance: for extreme values occurring between normal operating values of the TAGs, it is applied a univariate rule for quality measurement, which is made based on percentage variations. The procedure to find these values is:

- (a)

- Calculation of percentage variations, as follows:where is the observation at an instant t and is the previous one.

- (b)

- Calculation of interquartile ranges: .

- (c)

- From the interquartile range, a factor of is defined as a threshold.

- (d)

- TAGs having more than 10% of data above the defined threshold are eliminated (Figure 4b).

- Zero variance: through the percentage variations, it is also possible to identify those values that remain constant over time. This occurs when the percentage variation is zero. The criterion consists on removing the TAG if it has more than 50% of the data without variance (Figure 4c).

3. Results and Discussion

- Remove duplicates: 80 duplicated TAGs were identified and eliminated, reducing the total amount of TAGs to 1104: 945 with decimal numbers, six of integer data, and 153 with categorical values. Table 1 shows TAGs types and their corresponding variable counts.

- Empty and null values: applying this rule, two TAGs were identified with more than 98% of the empty data, they were eliminated and a dataset with 1102 TAGs was obtained.

- Unique values: using this rule, 60 TAGs were found, which were variables that remain unchanged over time and, for this reason, were eliminated, leaving a total of 1042 TAGs.

- Strings: extracting all of the string data, 13 common strings were found. Most of them (11) were due to failures in the data acquisition. Thus, they were replaced by nulls. The remaining valid strings were found in only one TAG, representing the manual or automatic furnace operation. In this case, string modes were replaced by bool values “1” and “0”. After this, the rule Empty and null values was applied once again, resulting in another five TAGs being eliminated. Finally, a dataset with a total of 1037 TAGs was obtained, with a numeric dataset exclusively.

- Negative temperatures: finding the temperatures that present negative values, 74 TAGs were eliminated, for which a characterization was also carried out to know their location in the furnace. As indicated in Table 2, upper zones of the furnace presented more erroneous values than the lower ones. A dataset with 963 TAGs was obtained after applying this rule.

- High variance: identifying the variables that present abrupt changes in the data in at least 10%, 97 TAGs are identified and eliminated finishing with a dataset with 866 TAGs.

- Zero variance: through the percentage variations, 22 TAGs are found, which remain at the same value for more than half of the time window. They were also removed.

4. Conclusions

Funding

Conflicts of Interest

References

- Chu, X.; Ilyas, I.F.; Krishnan, S.; Wang, J. Data Cleaning: Overview and Emerging Challenges. In Proceedings of the 2016 International Conference on Management of Data (SIGMOD ’16), San Francisco, CA, USA, 26 June–1 July 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 2201–2206. [Google Scholar] [CrossRef]

- Pearson, R.K. Data cleaning for dynamic modeling and control. In Proceedings of the 1999 European Control Conference (ECC), Karlsruhe, Germany, 31 August–3 September 1999; pp. 2584–2589. [Google Scholar] [CrossRef]

- Tang, N. Big RDF data cleaning. In Proceedings of the 2015 31st IEEE International Conference on Data Engineering Workshops, Seoul, Korea, 13–17 April 2015; pp. 77–79. [Google Scholar] [CrossRef]

- Wang, X.; Wang, C. Time Series Data Cleaning with Regular and Irregular Time Intervals. arXiv 2020, arXiv:2004.08284. [Google Scholar]

- Wang, X.; Wang, C. Time Series Data Cleaning: A Survey. IEEE Access 2020, 8, 1866–1881. [Google Scholar] [CrossRef]

- Hu, K.; Li, L.; Hu, C.; Xie, J.; Lu, Z. A dynamic path data cleaning algorithm based on constraints for RFID data cleaning. In Proceedings of the 2014 11th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), Xiamen, China, 19–21 August 2014; pp. 537–541. [Google Scholar] [CrossRef]

- Lin, J.; Sheng, G.; Yan, Y.; Zhang, Q.; Jiang, X. Online Monitoring Data Cleaning of Transformer Considering Time Series Correlation. In Proceedings of the 2018 IEEE/PES Transmission and Distribution Conference and Exposition (T&D), Denver, CO, USA, 16–19 April 2018; pp. 1–9. [Google Scholar] [CrossRef]

- Dai, J.; Song, H.; Sheng, G.; Jiang, X. Cleaning Method for Status Monitoring Data of Power Equipment Based on Stacked Denoising Autoencoders. IEEE Access 2017, 5, 22863–22870. [Google Scholar] [CrossRef]

- Ge, C.; Gao, Y.; Miao, X.; Yao, B.; Wang, H. A Hybrid Data Cleaning Framework Using Markov Logic Networks. IEEE Trans. Knowl. Data Eng. 2020. [Google Scholar] [CrossRef]

- Lv, Z.; Deng, W.; Zhang, Z.; Guo, N.; Yan, G. A Data Fusion and Data Cleaning System for Smart Grids Big Data. In Proceedings of the 2019 IEEE Intl Conf on Parallel & Distributed Processing with Applications (ISPA/BDCloud/SocialCom/SustainCom), Xiamen, China, 16–18 December 2019; pp. 802–807. [Google Scholar] [CrossRef]

- Alipour-Langouri, M.; Zheng, Z.; Chiang, F.; Golab, L.; Szlichta, J. Contextual Data Cleaning. In Proceedings of the IEEE 34th Int Conf Data Engineering Workshops (ICDEW), Paris, France, 16–20 April 2018; pp. 21–24. [Google Scholar] [CrossRef]

| Tag Type | Frequency | Tag Type | Frequency |

|---|---|---|---|

| Temperature | 801 | Voltage | 6 |

| Heat flux | 220 | Position | 6 |

| Concentration | 61 | Impedance | 4 |

| Weight | 43 | Pressure | 3 |

| Power | 11 | Flow | 3 |

| Current | 11 | Vibration | 2 |

| Operation | 9 |

| Location | Initial TAGs | Removed TAGs |

|---|---|---|

| Refractory lining 1 | 180 | 15 |

| Refractory lining 2 | 144 | 18 |

| Bottom lining | 26 | 1 |

| Inferior sidewall | 8 | 3 |

| Superior sidewall | 16 | 7 |

| Refractory roof | 30 | 30 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Camacho-Olarte, J.; Torres, J.E.S.; Jimenez, D.A.G.; Medina, J.X.L.; Vargas, R.C.G.; Cardenas, D.A.V.; Gutierrez-Osorio, C.; Rueda, B.; Vargas, W.; Burgos, D.A.T.; et al. A Data Cleaning Approach for a Structural Health Monitoring System in a 75 MW Electric Arc Ferronickel Furnace. Eng. Proc. 2020, 2, 21. https://doi.org/10.3390/ecsa-7-08245

Camacho-Olarte J, Torres JES, Jimenez DAG, Medina JXL, Vargas RCG, Cardenas DAV, Gutierrez-Osorio C, Rueda B, Vargas W, Burgos DAT, et al. A Data Cleaning Approach for a Structural Health Monitoring System in a 75 MW Electric Arc Ferronickel Furnace. Engineering Proceedings. 2020; 2(1):21. https://doi.org/10.3390/ecsa-7-08245

Chicago/Turabian StyleCamacho-Olarte, Jaiber, Julián Esteban Salomón Torres, Daniel A. Garavito Jimenez, Jersson X. Leon Medina, Ricardo C. Gomez Vargas, Diego A. Velandia Cardenas, Camilo Gutierrez-Osorio, Bernardo Rueda, Whilmar Vargas, Diego Alexander Tibaduiza Burgos, and et al. 2020. "A Data Cleaning Approach for a Structural Health Monitoring System in a 75 MW Electric Arc Ferronickel Furnace" Engineering Proceedings 2, no. 1: 21. https://doi.org/10.3390/ecsa-7-08245

APA StyleCamacho-Olarte, J., Torres, J. E. S., Jimenez, D. A. G., Medina, J. X. L., Vargas, R. C. G., Cardenas, D. A. V., Gutierrez-Osorio, C., Rueda, B., Vargas, W., Burgos, D. A. T., Bonilla, C. A. P., Esmeral, J. S., & Restrepo-Calle, F. (2020). A Data Cleaning Approach for a Structural Health Monitoring System in a 75 MW Electric Arc Ferronickel Furnace. Engineering Proceedings, 2(1), 21. https://doi.org/10.3390/ecsa-7-08245