1. Introduction

Due to economic progress and population growth, global electricity consumption has increased in recent years. According to the Energy Report published in 2019, global power demand will grow by 2.1% per year, twice the growth rate of primary energy in the stated policies scenario [1]. Because electricity is consumed at the moment it is generated, it is vital to estimate demand ahead of time to ensure a regular supply. Forecasting electricity consumption is, however, a difficult time-series problem. The data collected by smart sensors may contain redundancies, outliers, missing values and uncertainties. In addition, the electrical load has irregular trend components, which makes it very difficult to predict demand for electrical energy using simple forecasting techniques. Consequently, various strategies for forecasting electricity usage have recently been developed. These methodologies can be divided into two categories: traditional techniques and artificial-intelligence techniques [2].

Historically, statistical techniques were mainly used to forecast power demand. In [3], the seasonal autoregressive integrated moving average (SARIMA) model and a fuzzy neural model were compared for predicting electricity consumption. The hourly and daily electricity use of a studied household was analyzed using linear and quadratic regression models [4]. In [5], a multiple regression approach based on a genetic algorithm was proposed to forecast the daily power consumption of an administrative building. Significant drawbacks of both models include the lack of occupancy data and the fact that neither model was evaluated for predicting the energy demand of comparable homes. In another study, the authors used a bootstrap-aggregated autoregressive integrated moving average (ARIMA) model and an exponential smoothing method to predict energy demand in different countries [6]. In general, statistical methods have shown weaknesses in capturing the long-term non-linear behavior of energy consumption data. Furthermore, these approaches have limited predictive capacity because of the non-stationary trends and sharp patterns in energy consumption. As a result, machine-learning approaches have been used to test a variety of prediction models in order to improve predictive quality [7,8,9]. For example, Liu et al. [10] developed a support vector machine (SVM) model to predict and evaluate the energy consumption of public buildings.

Chen et al. [11] proposed a model that predicts power consumption as a function of ambient temperature, exploiting the strong nonlinear regression capability of support vector regression. Pinto et al. [12] proposed an ensemble-learning approach that combines three machine-learning methods. Nevertheless, because of the dynamic correlations between variables and data characteristics that change over time, existing machine-learning algorithms suffer greatly from overfitting, and an overfitted model is difficult to deploy durably and reliably. Deep sequential-learning neural networks have likewise been applied to power-consumption prediction. A recurrent neural network model was used to estimate energy demand profiles for commercial and residential buildings with one-hour resolution forecasts [13]. A pooling methodology based on a recurrent neural network was developed to address overfitting by increasing the amount and diversity of the data [14]. In [15], an RNN architecture with LSTM cells was developed to predict power consumption. Individual household power usage patterns are frequently unpredictable because of factors such as weather conditions and holidays. It is therefore unreliable to predict power consumption using methods based solely on energy consumption data. In this article, we show that deep-learning algorithms are not always reliable and accurate for power-consumption prediction.

2. Deep-Learning Algorithms

2.1. Long Short-Term Memory Neural Network (LSTM)

Long short-term memory neural networks (LSTMs) are an advanced form of recurrent neural networks (RNNs) in which the simple recurrent neurons are replaced by memory cells. The LSTM inherits the key feature of the RNN, treating the input as a connected time series. In addition, the gated structure of the LSTM cell mitigates the vanishing-gradient problem. An LSTM cell has four key elements: the input gate, the cell state, the forget gate and the output gate. The forget, input and output gates control which information is removed from, written to, and read from the cell state.

The forget gate, as the name implies, decides which information from the previous step to discard or retain; this is done by a first sigmoid layer. The next step determines which new information should be stored in the cell state. A sigmoid layer, the input gate, decides which values to update, and a tanh layer generates a vector of new candidate values that can be added to the state. These two are then combined to update the state. The cell state acts as the memory of the LSTM, which is why the LSTM outperforms vanilla RNNs on longer input sequences. At each time step, the previous cell state is multiplied by the forget-gate output to decide which information to keep, and the result is added to the input-gate-scaled candidate values to form the new cell state. Finally, the LSTM cell produces its output: the cell state is passed through the hyperbolic tangent (tanh), which squashes its values into the range −1 to 1, and the result is multiplied by the output of a sigmoid output gate so that only selected parts of the state are emitted.
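The gate computations described above can be sketched in NumPy as one forward step of a single LSTM cell. This is a minimal illustration of the standard LSTM formulation; the stacked weight layout (forget, input, candidate, output), the sizes and the random initialization are assumptions made for the example, not the configuration used in this work.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One forward step of a standard LSTM cell.

    W: (4*H, D) input weights, U: (4*H, H) recurrent weights, b: (4*H,) biases,
    stacked in the (assumed) order: forget, input, candidate, output.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    f = sigmoid(z[0:H])          # forget gate: what to keep from c_prev
    i = sigmoid(z[H:2 * H])      # input gate: how much of the candidate to write
    g = np.tanh(z[2 * H:3 * H])  # candidate values in (-1, 1)
    o = sigmoid(z[3 * H:4 * H])  # output gate: which part of the state to expose
    c_t = f * c_prev + i * g     # new cell state (the LSTM's memory)
    h_t = o * np.tanh(c_t)       # hidden state / output, bounded in (-1, 1)
    return h_t, c_t

rng = np.random.default_rng(0)
D, H = 3, 4                      # illustrative input and hidden sizes
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(5, D)):   # run over a short 5-step sequence
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (4,) (4,)
```

Frameworks such as Keras fuse these four matrix products into one for speed, as done here with the stacked `W` and `U`; the per-gate slicing keeps the correspondence with the description readable.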

2.2. Gated Recurrent Unit (GRU)

The internal structure of an LSTM cell has three gates (input, forget and output), whereas a GRU cell has only two: a reset gate and an update gate [16]. The update gate determines how much of the previous hidden state is carried forward and how much is replaced by new candidate information, while the reset gate determines how much of the previous hidden state is used when computing that candidate from the current input. Compared with the LSTM, the GRU merges the input and forget gates into the single update gate and merges the cell state with the hidden state, so the reset gate acts directly on the hidden state and no separate output gate is needed.
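A GRU step can be sketched in the same style as a minimal NumPy example of the standard formulation. The gate order in the stacked weights and the sizes are assumptions; papers and libraries also differ in whether the update gate weights the old state or the candidate, and this sketch uses the convention `h_t = (1 - z) * h_prev + z * h_tilde`.

```python
import numpy as np

def sigmoid(v):
    return 1.0 / (1.0 + np.exp(-v))

def gru_step(x_t, h_prev, W, U, b):
    """One forward step of a standard GRU cell (assumed gate order: update, reset, candidate)."""
    H = h_prev.shape[0]
    Wz, Wr, Wh = W[:H], W[H:2 * H], W[2 * H:]
    Uz, Ur, Uh = U[:H], U[H:2 * H], U[2 * H:]
    bz, br, bh = b[:H], b[H:2 * H], b[2 * H:]
    z = sigmoid(Wz @ x_t + Uz @ h_prev + bz)              # update gate
    r = sigmoid(Wr @ x_t + Ur @ h_prev + br)              # reset gate
    h_tilde = np.tanh(Wh @ x_t + Uh @ (r * h_prev) + bh)  # candidate built from reset-scaled memory
    # Interpolate between the old state and the candidate; no separate cell state or output gate
    return (1.0 - z) * h_prev + z * h_tilde

rng = np.random.default_rng(1)
D, H = 3, 4                      # illustrative input and hidden sizes
W = rng.normal(scale=0.1, size=(3 * H, D))
U = rng.normal(scale=0.1, size=(3 * H, H))
b = np.zeros(3 * H)
h = np.zeros(H)
for x_t in rng.normal(size=(5, D)):
    h = gru_step(x_t, h, W, U, b)
print(h.shape)  # (4,)
```

With three weight blocks instead of the LSTM's four, a GRU layer has roughly three quarters of the parameters of an LSTM layer with the same hidden size.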

2.3. Convolutional Neural Network (CNN)

A convolutional neural network (CNN) is a type of neural network originally designed for image-classification problems involving 2D data. A CNN can also process 1D data in time-series tasks. Because the same filter weights are shared across all positions of the input, a CNN can extract local patterns with relatively few parameters, which helps on difficult problems such as time-series and power-demand forecasting. Applying convolution filters to the input data produces feature maps. A pooling layer is then applied after the convolution layer to downsample these feature maps into a more compact, abstract representation.
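The convolution-then-pooling pipeline can be illustrated on a toy 1D load profile. This is a hand-written sketch, not the CNN used in this work: a single hypothetical two-tap filter `[-1, 1]` acts as a step detector, its weights are shared across every position, and non-overlapping max pooling of size 2 halves the feature map.

```python
import numpy as np

def conv1d_valid(x, kernel, bias=0.0):
    """'Valid' 1D convolution (cross-correlation, as implemented in deep-learning libraries)."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) + bias for i in range(len(x) - k + 1)])

def relu(v):
    return np.maximum(v, 0.0)

def max_pool1d(v, size=2):
    """Non-overlapping max pooling; trailing values that do not fill a window are dropped."""
    n = len(v) // size
    return v[:n * size].reshape(n, size).max(axis=1)

series = np.array([1.0, 1.0, 1.0, 4.0, 4.0, 4.0, 1.0, 1.0])  # toy load with a step up and down
kernel = np.array([-1.0, 1.0])   # the same two weights are applied at every position
fmap = relu(conv1d_valid(series, kernel))
pooled = max_pool1d(fmap, size=2)
print(fmap)    # [0. 0. 3. 0. 0. 0. 0.]
print(pooled)  # [0. 3. 0.]
```

The feature map responds only where consumption rises (the ReLU suppresses the drop), and pooling keeps that response while shortening the sequence, which is the "more abstract" representation passed to later layers.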

3. Results and Discussion

Accurate forecasting of electricity consumption improves energy utilization rates and helps building managers make better energy-management decisions, thereby saving significant amounts of energy and money. However, because of the dynamics and random noise in the data, accurate prediction of power consumption is a difficult goal. In this paper, we designed a three-step framework for short-term power-demand prediction: data processing, training, and evaluation. First, the incoming data are preprocessed to remove outliers, missing values and redundant values, and the input dataset is normalized to a given range using a common scaling technique. Next, the processed data are used to train and test the CNN, LSTM, GRU and 3-layer LSTM models. Finally, the models are evaluated using the mean squared error (MSE) and the root mean squared error (RMSE), both of which measure the difference between the predicted and actual values: the MSE is the mean of the squared differences, and the RMSE is its square root, expressed in the same units as the data. The performance of the models was validated using the IHEPC (Individual Household Electric Power Consumption) dataset available from the UCI repository [17]. IHEPC is a freely accessible household dataset from the UCI machine-learning repository containing electricity consumption measurements from 2006 to 2010, recorded at a 1-min sampling rate over nearly four years. It contains more than 2 million values, of which around 26,000 are missing; these missing values account for 1.25% of the data and are handled during the preprocessing step. We divided the data into a training set and a test set: each model is fitted on the training set and then predicts the output values for the unseen data in the test set. This method is appropriate for home applications and saves time during simulation. We used 75% of the data for training and the remaining 25% for testing. For comparison, the four deep-learning models (LSTM, CNN, GRU and 3-layer LSTM) were each trained for up to 25 epochs.
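The preprocessing and splitting steps described above can be sketched as follows. This is a minimal illustration under several assumptions not stated in the text: missing readings are already encoded as NaN and are dropped rather than imputed, outlier and duplicate removal are not shown, the 75/25 split is chronological, min-max scaling (fitted on the training part only) stands in for the unnamed scaling technique, and inputs are built as sliding windows of hypothetical length `window` predicting the next value.

```python
import numpy as np

def preprocess(values, train_frac=0.75):
    """Drop missing readings, split chronologically, and min-max scale with training statistics."""
    values = np.asarray(values, dtype=float)
    values = values[~np.isnan(values)]          # remove missing readings (NaN)
    n_train = int(len(values) * train_frac)     # 75% train / 25% test, kept in time order
    train, test = values[:n_train], values[n_train:]
    lo, hi = train.min(), train.max()           # fit the scaler on the training part only

    def scale(v):
        return (v - lo) / (hi - lo)             # assumes the training data are not constant

    return scale(train), scale(test)

def make_windows(series, window):
    """Turn a 1-D series into (samples, window, 1) inputs and next-step targets."""
    X = np.stack([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]
    return X[..., np.newaxis], y

raw = np.array([2.0, 2.5, np.nan, 3.0, 4.0, 3.5, np.nan, 2.0, 1.0, 1.5])
train, test = preprocess(raw)
X, y = make_windows(train, window=3)
print(X.shape, y.shape)  # (3, 3, 1) (3,)
```

Fitting the scaler on the training part alone avoids leaking test statistics into training; as a consequence, scaled test values can fall slightly outside [0, 1], as happens with the toy series above.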

The models were trained on an HP Omen PC with an Intel Core i5 processor and 16 GB of RAM. They were implemented in Python 3 using Keras with the TensorFlow backend and trained with the Adam optimizer.

In this article, we studied several deep-learning models to find an optimal model for short-term power-consumption forecasting. We tested four deep-learning models: CNN, LSTM, GRU and 3-layer LSTM. The simulation results show that the 3-layer LSTM model performs best among the four.

Table 1 lists the results: the CNN achieved an MSE of 0.05 and an RMSE of 0.23, the LSTM 0.04 and 0.21, the GRU 0.04 and 0.22, and the 3-layer LSTM 0.04 and 0.19. The three recurrent models tie on MSE at two-decimal precision, and the 3-layer LSTM is distinguished by the lowest RMSE.

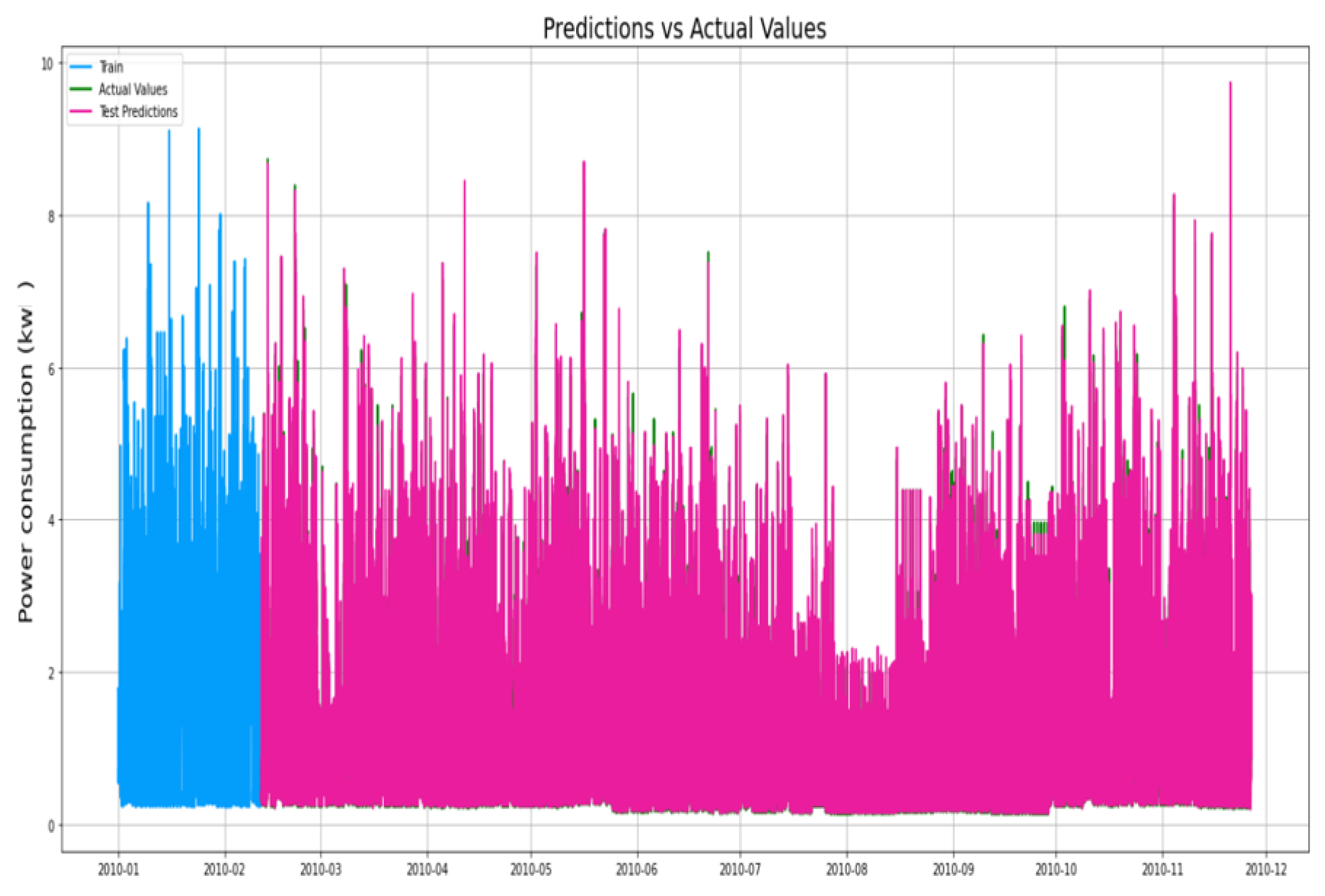
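Because the RMSE is the square root of the MSE, the two columns of Table 1 are not independent: an MSE of 0.04 corresponds to an RMSE of about 0.20, and RMSE values from 0.19 (0.036 squared) to 0.21 (0.044 squared) all round to an MSE of 0.04, which is why the RMSE column separates the models more finely. A minimal sketch of both metrics, using made-up values purely for illustration:

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error: average of the squared residuals."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean((y_true - y_pred) ** 2))

def rmse(y_true, y_pred):
    """Root mean squared error: square root of the MSE, in the units of the target."""
    return float(np.sqrt(mse(y_true, y_pred)))

y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 8.0]   # a single large miss of 4 units
print(mse(y_true, y_pred), rmse(y_true, y_pred))  # 4.0 2.0
```

Squaring makes both metrics sensitive to large individual errors, such as missed consumption peaks.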

Figure 1 presents the prediction performance of the 3-layer LSTM model on the IHEPC dataset. The model tracks even the difficult values associated with strong variations in electricity consumption, so at this resolution its power-consumption predictions are precise and reliable.

Figure 2 presents the architecture of the 3-layer LSTM model used in our work. The first LSTM layer has 128 units with a tanh activation function. The second LSTM layer has 128 units with a linear activation function and a dropout rate of 0.25. The third LSTM layer has 64 units with a ReLU activation function and a dropout rate of 0.25. The results above correspond to predictions at a 1-min time step. In real applications, however, coarser time steps such as 15 min or 60 min are used, because these are the intervals at which smart meters operate. For this reason, we tested the best model, the 3-layer LSTM, at time steps of 5 min, 15 min and 60 min to assess its reliability and accuracy. The simulation results show that prediction performance degrades significantly as the time step increases, which greatly affects the reliability of the 3-layer LSTM model.
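The layer configuration described above can be expressed as a Keras model. This is a sketch, not the authors' code: the look-back length `WINDOW`, the univariate input (`N_FEATURES = 1`), the placement of dropout as separate `Dropout` layers after the second and third LSTM layers, the single-output `Dense` head and the MSE loss are all assumptions; only the unit counts, activations, dropout rate and Adam optimizer come from the text.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

WINDOW = 60      # assumed look-back length in time steps (not stated in the text)
N_FEATURES = 1   # assumed univariate input, e.g. global active power

def build_three_layer_lstm(window=WINDOW, n_features=N_FEATURES):
    model = keras.Sequential([
        keras.Input(shape=(window, n_features)),
        # Layer 1: 128 units, tanh; return the full sequence for the next LSTM layer
        layers.LSTM(128, activation="tanh", return_sequences=True),
        # Layer 2: 128 units, linear activation, then 25% dropout (placement assumed)
        layers.LSTM(128, activation="linear", return_sequences=True),
        layers.Dropout(0.25),
        # Layer 3: 64 units, ReLU activation, then 25% dropout (placement assumed)
        layers.LSTM(64, activation="relu"),
        layers.Dropout(0.25),
        # Assumed regression head: next-step consumption value
        layers.Dense(1),
    ])
    model.compile(optimizer=keras.optimizers.Adam(), loss="mse",
                  metrics=[keras.metrics.RootMeanSquaredError()])
    return model

model = build_three_layer_lstm()
X_dummy = np.zeros((2, WINDOW, N_FEATURES), dtype="float32")
print(model.predict(X_dummy, verbose=0).shape)  # (2, 1)
```

In Keras, the `activation` argument of `LSTM` replaces the tanh applied to the candidate values and the cell state, while the gates keep their sigmoid `recurrent_activation`. Training would then be a call such as `model.fit(X_train, y_train, epochs=25)` on windowed data.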

Table 2 shows the results for each time step.

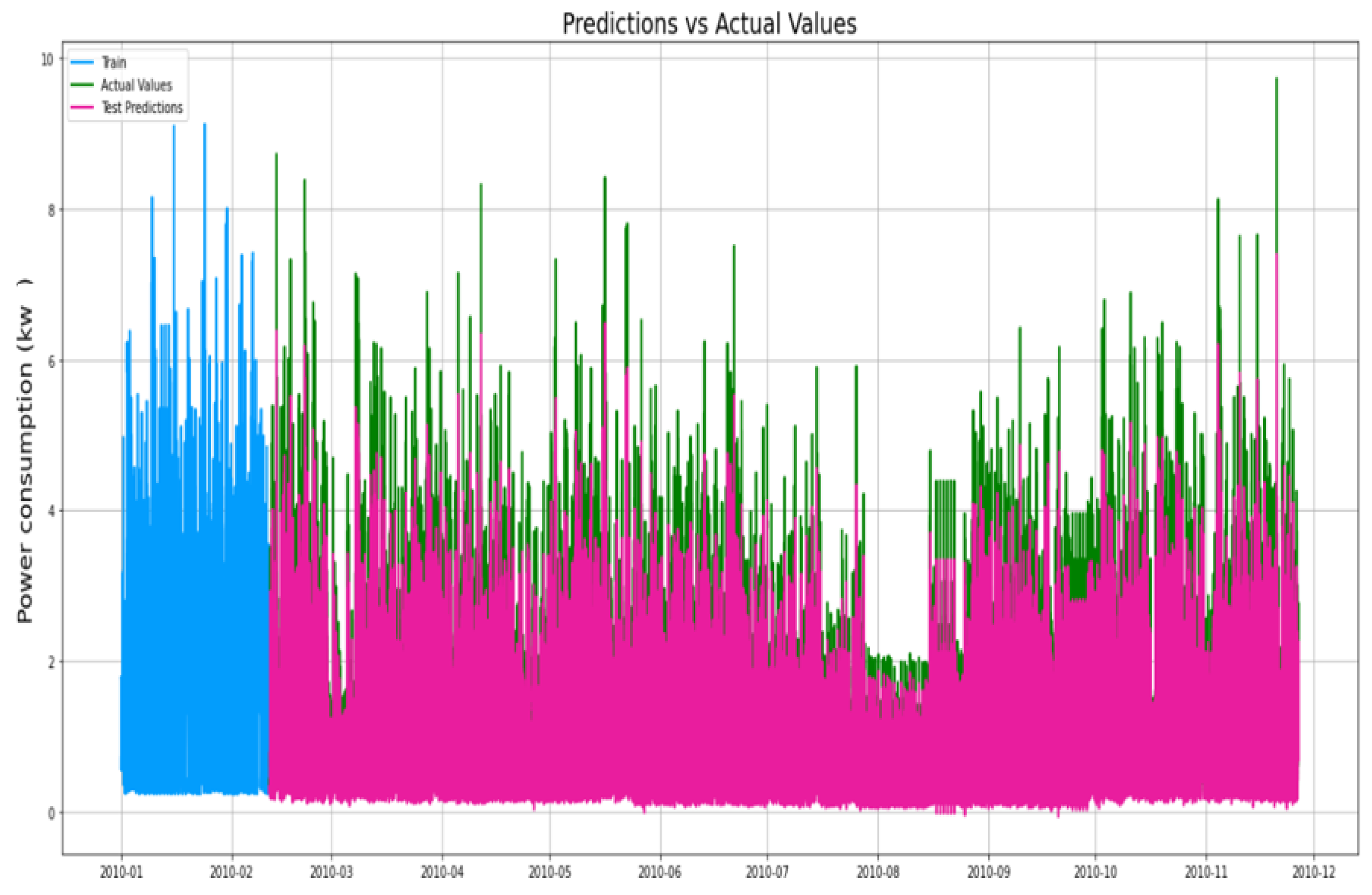

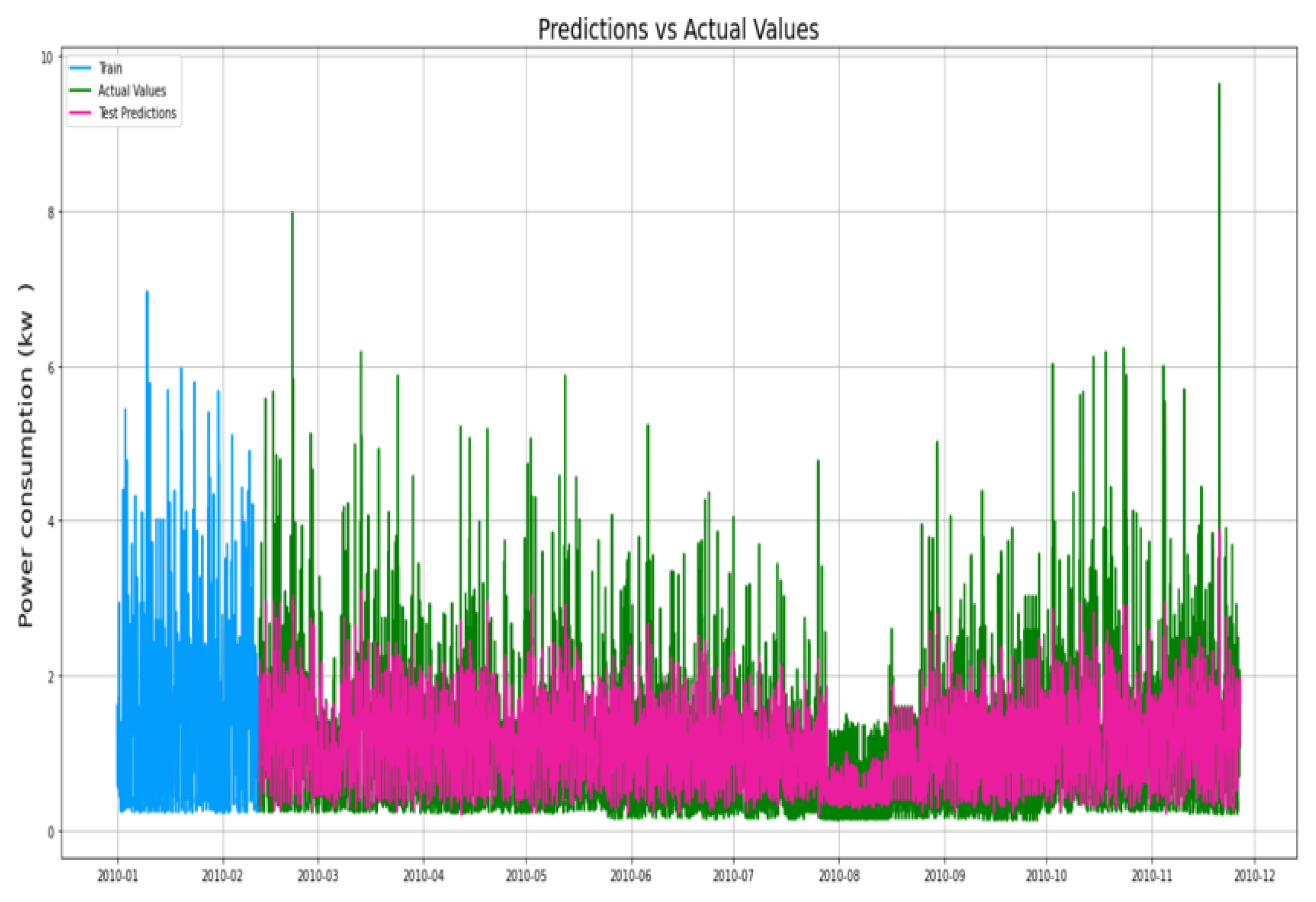

Figure 3 and Figure 4 present the prediction results at the 15-min and 60-min time steps. When there is a sudden change or a peak in consumption, the model fails to predict the exact value. These results show that deep-learning algorithms are not always reliable for predicting power consumption. Several factors affect prediction performance, such as the reduced amount of data available to the model at coarser time steps. In a real application, we would not be able to use 2 million data points at a 15-min or 60-min time step, because collecting 2 million points at a 15-min step would require nearly 60 years of recordings. Therefore, an algorithm is needed that can efficiently predict power consumption for any dataset and at any time step.
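The data-volume argument can be checked with a short calculation, together with a sketch of how a 1-min series is aggregated to coarser time steps. The use of the interval mean (appropriate for power in kW; energy would be summed instead), the synthetic day of readings, and the 365.25-day year are assumptions for illustration.

```python
import numpy as np
import pandas as pd

MINUTES_PER_YEAR = 365.25 * 24 * 60

def years_needed(n_points, step_minutes):
    """Years of continuous recording needed to collect n_points samples at a given step."""
    return n_points * step_minutes / MINUTES_PER_YEAR

for step in (1, 5, 15, 60):
    print(f"{step:>2} min: {years_needed(2_000_000, step):6.1f} years")

# One day of synthetic 1-min readings (kW); in practice this would be the IHEPC power column.
idx = pd.date_range("2007-01-01", periods=24 * 60, freq="min")
power = pd.Series(np.random.default_rng(0).random(len(idx)), index=idx)
for rule in ("5min", "15min", "60min"):
    coarse = power.resample(rule).mean()   # average power over each interval (assumed aggregation)
    print(rule, len(coarse))               # points per day at this time step
```

Two million samples correspond to about 3.8 years at 1 min, but about 19, 57 and 228 years at 5, 15 and 60 min. Conversely, resampling the same recording reduces each day from 1440 points to 288, 96 and 24, so a coarser time step leaves the model far fewer training examples.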