Abstract

Nano quadcopters are small, agile, and cost-effective Internet of Things platforms that are especially well suited to narrow and cluttered environments. We developed a model-free control policy combined with FlowDep, an efficient optical-flow-based algorithm that estimates object depth from vision. FlowDep was deployed on a Bitcraze Crazyflie 2.1 (weighing ~34 g) and used its monocular camera for obstacle avoidance. FlowDep computes depth information from images, and the control policy uses this information in a multizone scheme. Successful obstacle avoidance is demonstrated. The developed policy shows potential for future applications in exploring complex environments, enhancing the autonomous flight and perception abilities of drones.

1. Introduction

Safe and reliable navigation of autonomous aerial systems in narrow, cluttered, global positioning system (GPS)-denied, and unknown environments is one of the main open challenges in robotics. Because of their small size and agility, micro air vehicles (MAVs) are well suited to this task [1,2]. Nano quadcopters are a class of MAVs characterized by minimal weight (typically below ~100 g) and size (typically a rotor-to-rotor distance of about 10 cm). Despite their small size, nano quadcopters have shown impressive performance in exploration [3] and gas source seeking [4]. The traditional approach to passive depth estimation is stereo vision, which computes depth from the disparity between images taken by two precisely calibrated cameras. However, this method incurs higher cost (two cameras) and requires a physical separation (baseline) between the cameras. On a nano quadcopter, the rotor-to-rotor distance, and hence the largest possible baseline, is on the order of 10 cm, which limits the useful depth range of stereo vision to a few meters. We therefore turn our attention to optical flow, one of the most important monocular visual cues for navigation. Optical-flow-based navigation has been demonstrated on MAVs with higher payload capacity [5,6] and on nano quadcopters [7]. De Croon’s group developed NanoFlowNet, which computes real-time dense optical flow on a nano quadcopter using a lightweight convolutional neural network [7]. Motivated by the low-resolution but efficient motion-detection mechanisms of insects, we developed FlowDep, an efficient depth estimation algorithm [8,9]. We validated FlowDep by deploying it on a Bitcraze Crazyflie, a 30 g nano quadcopter, for obstacle avoidance with a single monocular camera.
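
To make the baseline argument concrete, the sketch below uses the standard pinhole stereo relation Z = fB/d (depth Z, focal length f in pixels, baseline B, disparity d) and its first-order error dZ ≈ Z² dd/(fB). The focal length of 300 px and the 1 px disparity error are assumed illustrative values, not parameters of any camera used in this work.

```python
def stereo_depth(f_px, baseline_m, disparity_px):
    """Pinhole stereo depth: Z = f * B / d, in meters."""
    return f_px * baseline_m / disparity_px


def stereo_depth_error(f_px, baseline_m, depth_m, disparity_err_px=1.0):
    """First-order depth uncertainty: dZ ~= Z**2 * dd / (f * B), in meters."""
    return depth_m ** 2 * disparity_err_px / (f_px * baseline_m)


if __name__ == "__main__":
    F_PX = 300.0  # assumed focal length in pixels (illustrative, not a measured value)
    for baseline in (0.10, 0.30):  # ~nano-quadcopter baseline vs. a wider hypothetical one
        for depth in (1.0, 3.0, 5.0):
            err = stereo_depth_error(F_PX, baseline, depth)
            print(f"B = {baseline:.2f} m, Z = {depth:.1f} m -> dZ ~ {err:.2f} m per px")
```

With these assumed numbers, a 10 cm baseline gives roughly 0.3 m of depth uncertainty per pixel of disparity error at 3 m and about 0.8 m at 5 m. Because the error grows with Z², stereo depth on such a short baseline degrades quickly beyond a few meters.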

2. Materials and Methods

2.1. Nano Quadcopter Platform and Test Environment

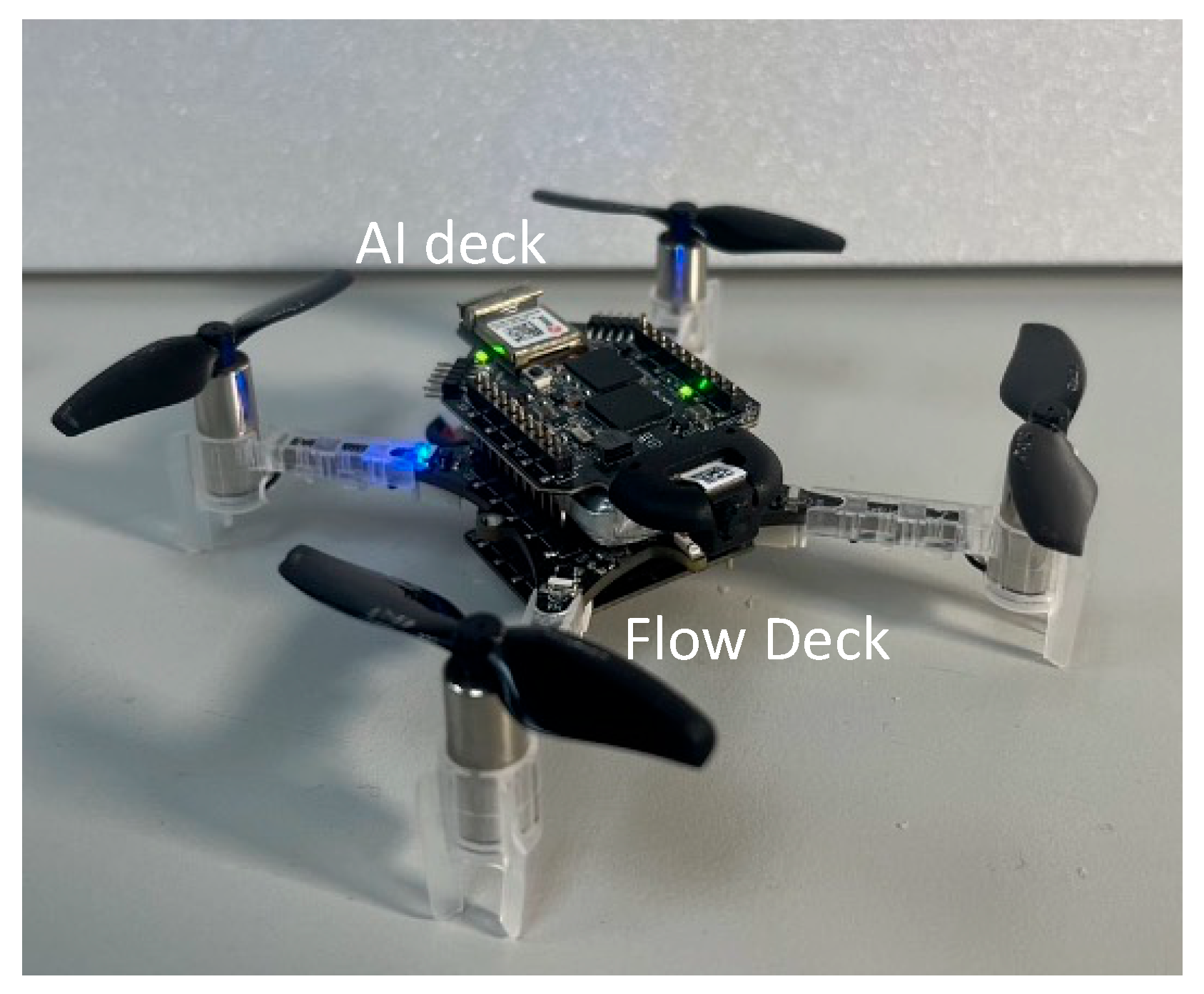

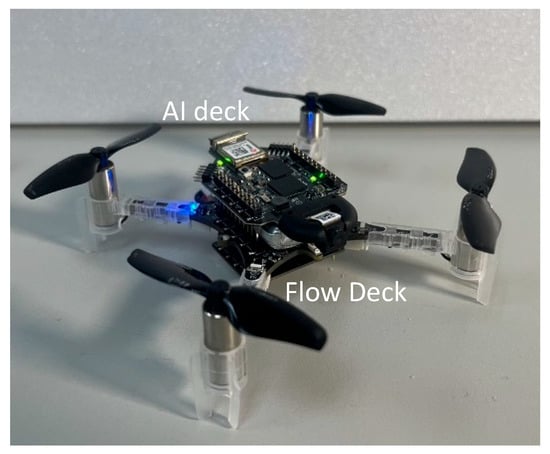

We deployed FlowDep on a Crazyflie 2.x equipped with the AI deck and the Flow deck for vision-based obstacle avoidance (Figure 1). High-level flight commands were computed on a laptop running the control strategy and sent back to the nano quadcopter. FlowDep uses image, inertial measurement unit (IMU), and position data to estimate depth [8,9]; the optical flow itself is estimated with the dense inverse search (DIS) algorithm [10]. For the obstacle avoidance experiments, we constructed a cluttered indoor environment containing obstacles, including textured poles.
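
FlowDep's exact formulation is given in [8,9]. As a generic illustration only, and not the FlowDep algorithm itself, the sketch below shows how depth can be recovered from optical flow once the camera motion is known, which is the role the IMU and position data play. It uses the standard pinhole motion-field equations: the gyroscope rate (wx, wy, wz) removes the rotational flow component, and the remaining translational flow scales as 1/Z given the camera velocity (tx, ty, tz). Pixel coordinates are measured from the principal point, f is the focal length in pixels, and all function names are hypothetical; the IMU and camera frames are assumed to be already aligned.

```python
import math


def rotational_flow(x, y, f, wx, wy, wz):
    """Flow (px/s) caused only by camera rotation (rad/s) at pixel (x, y).

    (x, y) are measured from the principal point; f is the focal length in px.
    Standard pinhole motion-field model in a right-handed camera frame (z forward).
    """
    u = (x * y / f) * wx - (f + x * x / f) * wy + y * wz
    v = (f + y * y / f) * wx - (x * y / f) * wy - x * wz
    return u, v


def translational_flow_times_depth(x, y, f, tx, ty, tz):
    """Translational flow multiplied by depth Z; divide by Z to get px/s."""
    return x * tz - f * tx, y * tz - f * ty


def depth_from_flow(x, y, u, v, f, t, w, min_flow=1e-3):
    """Estimate depth Z (m) at pixel (x, y) from measured flow (u, v) in px/s.

    t = (tx, ty, tz): camera velocity in m/s (e.g. from position estimates).
    w = (wx, wy, wz): camera angular rate in rad/s (e.g. from the IMU gyro).
    Returns None where the translational flow is too small to be informative.
    """
    ur, vr = rotational_flow(x, y, f, *w)
    ut, vt = u - ur, v - vr  # derotated, translation-only flow
    a, b = translational_flow_times_depth(x, y, f, *t)
    residual = math.hypot(ut, vt)
    if residual < min_flow or math.hypot(a, b) == 0.0:
        return None
    # |translational flow| = |(a, b)| / Z  =>  Z = |(a, b)| / |(ut, vt)|
    return math.hypot(a, b) / residual
```

Dense flow estimators such as DIS usually report displacement in pixels per frame, so the flow should be divided by the frame interval before it is passed in. Near the focus of expansion (the image center during pure forward flight) the translational flow vanishes and depth is ill-conditioned, which is why the function returns None there.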

Figure 1.

Nano quadcopter platform: a Crazyflie equipped with the AI deck and with the Flow deck mounted on the bottom.

2.2. Control Policy

The control strategy refines the four-zone control scheme reported in the literature [11,12]. We defined six zones: ceiling and ground zones that keep the MAV from flying too close to either surface, danger and caution zones in the center of the field of view, and left and right zones.
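
The exact geometry of the six zones is not specified here. As a hypothetical illustration only, the sketch below assigns each pixel of a depth map to one zone (top and bottom bands for ceiling and ground; the middle band split into left, caution, danger, caution, and right columns) and records the nearest valid depth per zone. The band and window fractions, the function names, and the treatment of missing or non-positive depths as invalid are all assumptions.

```python
import math

ZONES = ("ceiling", "ground", "left", "right", "caution", "danger")


def classify_pixel(r, c, h, w, band=0.2, center=0.5, danger=0.2):
    """Hypothetical six-zone layout for pixel (row r, column c) of an h x w image.

    Top/bottom bands -> ceiling/ground; the middle band is split into
    left | caution | danger | caution | right by horizontal distance from center.
    """
    if r < band * h:
        return "ceiling"
    if r >= (1 - band) * h:
        return "ground"
    dx = abs((c + 0.5) - w / 2) / w  # normalized horizontal offset from image center
    if dx < danger / 2:
        return "danger"
    if dx < center / 2:
        return "caution"
    return "left" if c < w / 2 else "right"


def zone_min_depths(depth):
    """Minimum valid depth (m) per zone; inf if a zone has no valid pixels."""
    h, w = len(depth), len(depth[0])
    out = {z: math.inf for z in ZONES}
    for r, row in enumerate(depth):
        for c, z in enumerate(row):
            if z is None or not math.isfinite(z) or z <= 0:
                continue  # skip missing or invalid depth estimates
            zone = classify_pixel(r, c, h, w)
            out[zone] = min(out[zone], z)
    return out
```

Reducing each zone to its nearest obstacle keeps the controller input to six numbers per frame, regardless of the depth map resolution.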

A decision tree maps the depth information in these zones to flight commands.
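
The rules of the decision tree are not listed here. The sketch below is a hypothetical example of how such a tree could map per-zone minimum depths to velocity commands, checking vertical clearance first, then the center of the field of view, then the sides. All thresholds, command labels, the lateral speed, and the left-positive sign convention are assumptions; only the 0.18 m/s cruise speed is taken from the tests in Section 3.

```python
from dataclasses import dataclass

# All thresholds below are hypothetical placeholders, in meters.
DANGER_DIST = 0.5     # obstacle this close ahead: stop forward motion
CAUTION_DIST = 1.0    # obstacle this close ahead: slow down and veer
SIDE_DIST = 0.4       # obstacle this close on a side: push away from it
VERTICAL_DIST = 0.3   # minimum clearance to ceiling/ground zones
CRUISE = 0.18         # forward speed used in the reported tests (m/s)
LATERAL = 0.2         # hypothetical lateral speed (m/s)


@dataclass
class Command:
    vx: float   # forward velocity (m/s)
    vy: float   # lateral velocity (m/s); positive = left (assumed convention)
    vz: float   # vertical velocity (m/s); positive = up
    label: str


def open_side(zones):
    """+1.0 (left) if the left zone is more open than the right, else -1.0 (right)."""
    return 1.0 if zones["left"] > zones["right"] else -1.0


def decide(zones):
    """Map per-zone minimum depths (m) to a velocity command, checked in priority order."""
    # 1. Vertical safety first: keep away from ground and ceiling.
    if zones["ground"] < VERTICAL_DIST:
        return Command(0.0, 0.0, 0.1, "climb")
    if zones["ceiling"] < VERTICAL_DIST:
        return Command(0.0, 0.0, -0.1, "descend")
    # 2. Imminent obstacle ahead: stop and sidestep toward the more open side.
    if zones["danger"] < DANGER_DIST:
        s = open_side(zones)
        return Command(0.0, s * LATERAL, 0.0, "evade_left" if s > 0 else "evade_right")
    # 3. Obstacle ahead at moderate range: slow down and veer toward the open side.
    if zones["caution"] < CAUTION_DIST:
        s = open_side(zones)
        return Command(CRUISE / 2, s * LATERAL / 2, 0.0, "veer_left" if s > 0 else "veer_right")
    # 4. Side obstacles: keep flying forward but push away from the closer wall.
    if zones["left"] < SIDE_DIST:
        return Command(CRUISE, -LATERAL / 2, 0.0, "shift_right")
    if zones["right"] < SIDE_DIST:
        return Command(CRUISE, LATERAL / 2, 0.0, "shift_left")
    # 5. Path is clear: cruise forward.
    return Command(CRUISE, 0.0, 0.0, "forward")
```

Because the checks run in a fixed priority order, each control cycle costs only a handful of comparisons, which fits the low computational load discussed in Section 3.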

3. Results and Discussion

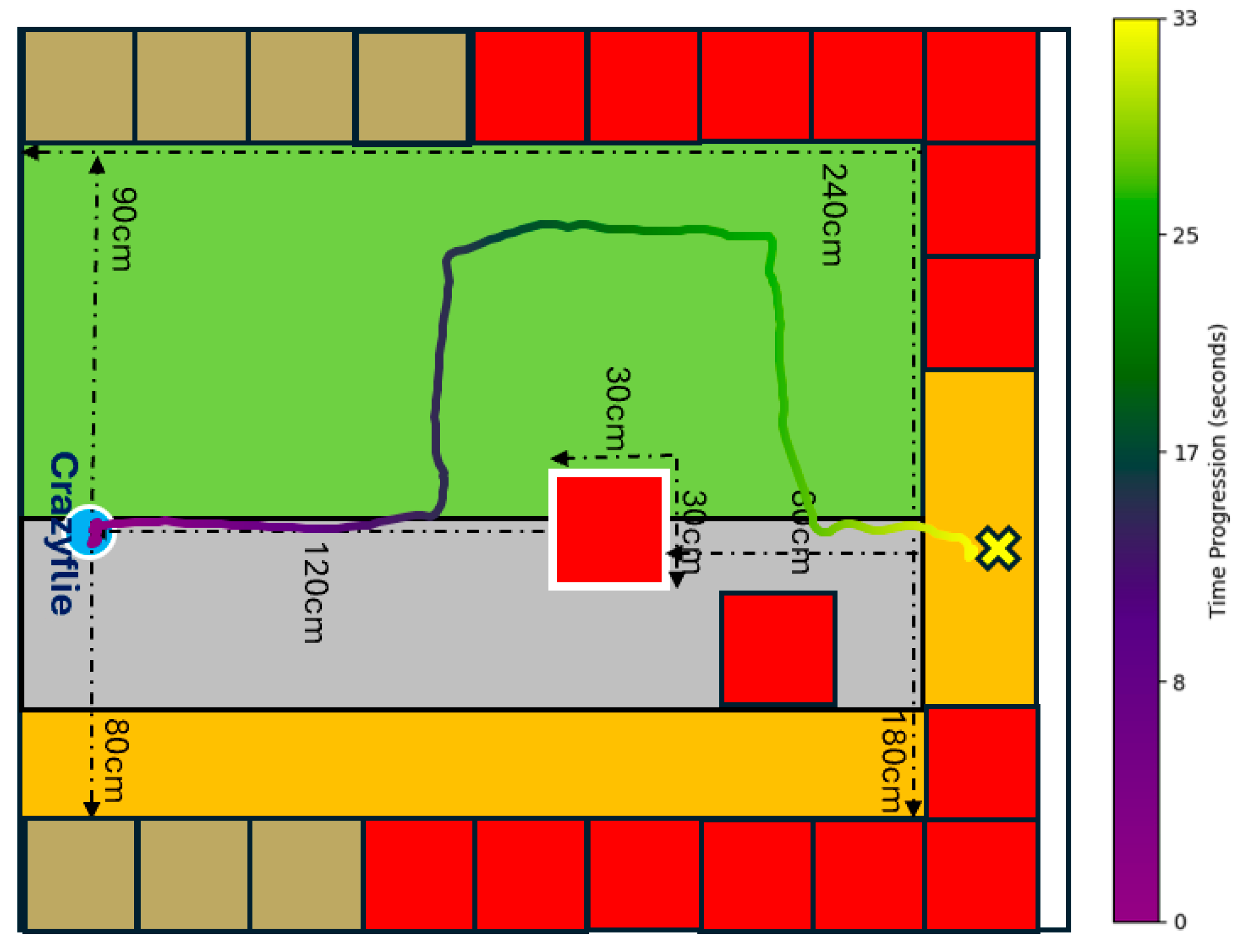

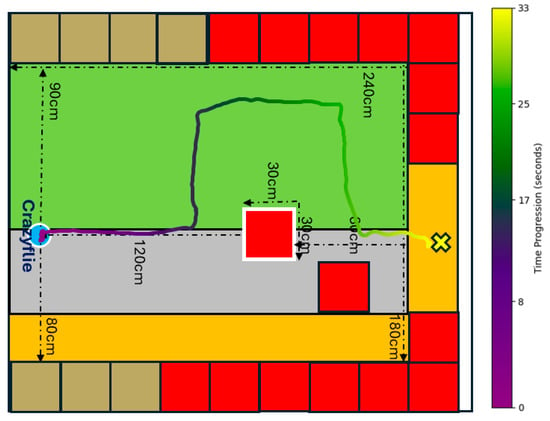

Successful obstacle avoidance was demonstrated in a two-dimensional test environment (Figure 2). The flight trajectory is color-coded by time. In all tests, the nano quadcopter maintained an altitude of 25 cm and a forward velocity of 0.18 m/s. The decision-tree-based control policy offers several advantages. First, it imposes minimal computational load when implemented on a microcontroller, because the algorithm relies on a small set of rules derived from distance measurements. Second, it can be applied to inspection and surveying of narrow indoor spaces, since users can monitor the real-time video stream while the nano quadcopter operates autonomously. Finally, the policy can be extended to dynamic obstacle avoidance and integrated with object recognition to support multi-modal operations.

Figure 2.

Flight trajectory in the two-dimensional test environment.

Author Contributions

Conceptualization, Y.-T.Y. and C.-C.L.; software, J.-J.L., S.-Q.L., F.-K.H. and C.-F.Y.; validation, J.-L.L., J.-H.L., and C.-F.Y.; formal analysis, Y.-T.Y. and C.-C.L.; investigation, J.-J.L.; writing—original draft preparation, Y.-T.Y.; writing—review and editing, J.-J.L. and Y.-T.Y.; funding acquisition, Y.-T.Y. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to acknowledge funding support from the National Science and Technology Council under grant number 113-2218-E-007-019 and from National Tsing Hua University under grant number 113Q2703E1.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are available upon email communication with the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Floreano, D.; Wood, R.J. Science, technology and the future of small autonomous drones. Nature 2015, 521, 460–466.

- Bodin, B.; Wagstaff, H.; Saeedi, S.; Nardi, L.; Vespa, E.; Mawer, J.; Nisbet, A.; Luján, M.; Furber, S.; Davison, A.J.; et al. SLAMBench2: Multi-Objective Head-to-Head Benchmarking for Visual SLAM. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, Australia, 21–25 May 2018; pp. 3637–3644.

- McGuire, K.N.; De Wagter, C.; Tuyls, K.; Kappen, H.J.; de Croon, G.C.H.E. Minimal navigation solution for a swarm of tiny flying robots to explore an unknown environment. Sci. Robot. 2019, 4, eaaw9710.

- Duisterhof, B.P.; Li, S.; Burgues, J.; Reddi, V.J.; de Croon, G.C.H.E. Sniffy Bug: A Fully Autonomous Swarm of Gas-Seeking Nano Quadcopters in Cluttered Environments. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Prague, Czech Republic, 15–19 July 2021; pp. 9099–9106.

- Gao, P.; Zhang, D.; Fang, Q.; Jin, S. Obstacle avoidance for micro quadrotor based on optical flow. In Proceedings of the 29th Chinese Control and Decision Conference, CCDC, Chongqing, China, 28–30 May 2017; pp. 4033–4037.

- Sanket, N.J.; Singh, C.D.; Ganguly, K.; Fermuller, C.; Aloimonos, Y. GapFlyt: Active vision based minimalist structureless gap detection for quadrotor flight. IEEE Robot. Autom. Lett. 2018, 3, 2799–2806.

- Bouwmeester, R.J.; Paredes-Vallés, F.; de Croon, G.C.H.E. NanoFlowNet: Real-time Dense Optical Flow on a Nano Quadcopter. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 1996–2003.

- Yeh, C.F.; Tang, C.Y.; Chen, T.C.; Chang, M.F.; Hsieh, C.C.; Liu, R.S.; Tang, K.T.; Lo, C.C. FlowDep—An efficient and optical-flow-based algorithm of obstacle detection for autonomous mini-vehicles. TechRxiv 2024, 03.

- Yeh, C.-F.; Lai, J.-J.; Li, S.-Q.; Hsiao, F.-K.; Yu, K.-C.; Jhang, J.-M.; Lo, C.-C.; Yang, Y.-T. Implementation of an efficient and optical-flow-based algorithm of depth estimation on autonomous nano quadcopters for obstacle avoidance. In Proceedings of the 2025 11th International Conference on Control, Automation and Robotics ICCAR, Kyoto, Japan, 18–20 April 2025.

- Kroeger, T.; Timofte, R.; Dai, D.; Van Gool, L. Fast Optical Flow using Dense Inverse Search. arXiv 2016, arXiv:1603.03590. Available online: http://arxiv.org/abs/1603.03590 (accessed on 3 September 2025).

- Cho, G.; Kim, J.; Oh, H. Vision-Based Obstacle Avoidance Strategies for MAVs Using Optical Flows in 3-D Textured Environments. Sensors 2019, 19, 2523.

- Müller, H.; Niculescu, V.; Polonelli, T.; Magno, M.; Benini, L. Robust and Efficient Depth-Based Obstacle Avoidance for Autonomous Miniaturized UAVs. IEEE Trans. Robot. 2023, 39, 4935–4951.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).