Lightweight Model for Weather Prediction †

Abstract

1. Introduction

2. Preliminary Studies

- SqueezeNet is a lightweight convolutional neural network architecture designed to achieve high accuracy with a drastically reduced model size. Its Fire modules squeeze the channel count with 1 × 1 convolutions before expanding it again with a mix of 1 × 1 and 3 × 3 convolutions, which cuts the number of parameters while retaining AlexNet-level accuracy. This makes it well suited to embedded systems and Internet of Things (IoT) applications, where memory and computational resources are limited [5].

- ResNet-50 is a 50-layer deep convolutional neural network from the ResNet family. It uses residual learning to address the vanishing-gradient problem in deep networks: its layers learn residual functions, and shortcut connections that skip one or more layers add each block's input to its output. This makes much deeper networks trainable [6].

- EfficientNet-b0 is the baseline model of the EfficientNet family. Whereas conventional CNNs scale depth, width, or resolution arbitrarily, the EfficientNet family uses a compound scaling method that balances all three dimensions systematically, and its larger variants are scaled up from b0. EfficientNet-b0 is well suited to real-world applications in which speed and resource usage are critical, offering a balance between speed, size, and accuracy [7].
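The parameter-saving and gradient-flow arguments in the three descriptions above can be checked with back-of-the-envelope arithmetic. The sketch below is illustrative only and is not the authors' code; all function names are hypothetical. It assumes the channel sizes of the `fire2` module of SqueezeNet v1.0 from [5] (96 input channels, 16 squeeze filters, 64 + 64 expand filters) and compares them with a plain 3 × 3 convolution of the same width; it models a 50-layer stack as a chain of scalar maps, a toy rather than ResNet-50 itself; and it uses the compound-scaling coefficients α = 1.2, β = 1.1, γ = 1.15 reported in [7].

```python
def conv_params(in_ch: int, out_ch: int, kernel: int, bias: bool = True) -> int:
    """Weights plus optional biases of a standard 2-D convolution with a square kernel."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)


def fire_params(in_ch: int, squeeze: int, expand1: int, expand3: int) -> int:
    """Parameters of a SqueezeNet Fire module: a 1x1 squeeze layer feeding
    parallel 1x1 and 3x3 expand layers whose outputs are concatenated."""
    return (conv_params(in_ch, squeeze, 1)
            + conv_params(squeeze, expand1, 1)
            + conv_params(squeeze, expand3, 3))


def chain_gradient(layer_grad: float, depth: int, residual: bool) -> float:
    """Toy scalar model: each layer is f(x) = w * x with local derivative w.
    A plain stack multiplies w through every layer; with an identity shortcut
    y = x + f(x), each layer contributes 1 + w instead."""
    per_layer = 1.0 + layer_grad if residual else layer_grad
    return per_layer ** depth


def compound_scale(phi: float, alpha: float = 1.2, beta: float = 1.1,
                   gamma: float = 1.15) -> tuple[float, float, float, float]:
    """EfficientNet compound scaling: depth, width and resolution multipliers,
    plus the approximate FLOPs multiplier (alpha * beta^2 * gamma^2) ** phi."""
    flops = (alpha * beta ** 2 * gamma ** 2) ** phi
    return alpha ** phi, beta ** phi, gamma ** phi, flops


if __name__ == "__main__":
    fire = fire_params(96, 16, 64, 64)   # assumed fire2 sizes (SqueezeNet v1.0)
    plain = conv_params(96, 128, 3)      # plain 3x3 conv with the same 128 outputs
    print(f"Fire module: {fire} params; plain 3x3 conv: {plain} params")
    print(f"50-layer gradient, plain:    {chain_gradient(0.01, 50, False):.3e}")
    print(f"50-layer gradient, residual: {chain_gradient(0.01, 50, True):.3f}")
    d, w, r, f = compound_scale(1)
    print(f"phi=1: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, FLOPs x{f:.2f}")
```

With these sizes the Fire module needs 11,920 parameters versus 110,720 for the plain 3 × 3 convolution, roughly 9× fewer. In the toy chain, the plain 50-layer product shrinks to about 1e-100, while the residual product stays near 1.6. Setting φ = 1 raises FLOPs by about 1.92×, close to the 2× target of the compound-scaling constraint.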

3. Method

3.1. Training and Testing

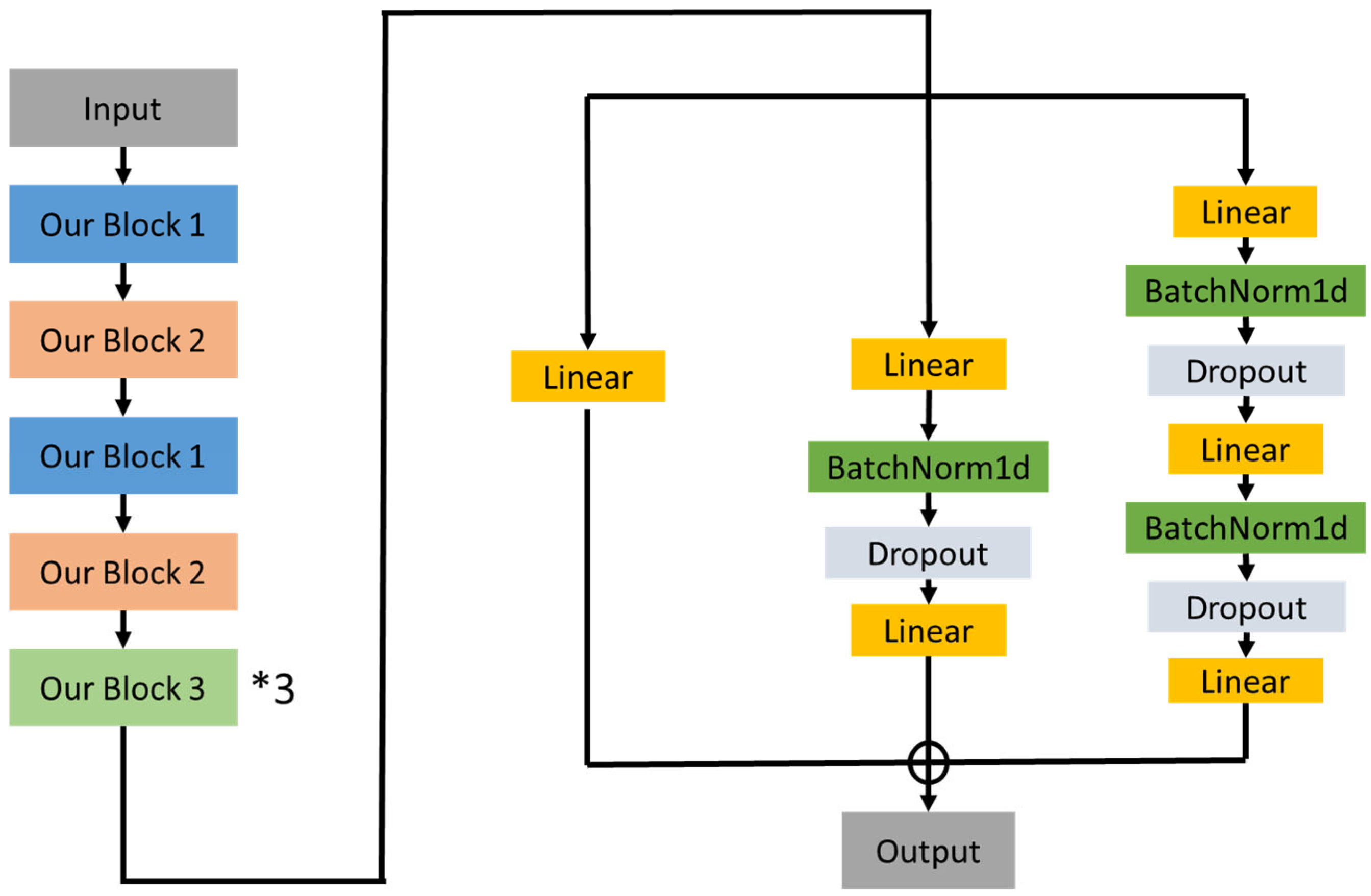

3.2. Architecture of Neural Network

4. Results and Discussions

- Original datasets: MCWRD2018 [2] and DAWN2020 [3], covering seven weather categories (Figure 3).

- Data augmentation: each original image yields three samples (the image itself, a horizontally flipped copy, and a copy cropped by 10% on each of the four sides).

- Training and testing datasets: We split the augmented dataset into training (80%) and testing (20%) sets. To ensure a fair evaluation, the two sets are mutually exclusive.

- Input resolution: 224 × 224.

- Epochs: 300.

- Batch size: 16.

- Learning rate: 0.0001.

- Loss function: CrossEntropyLoss.

- Optimizer: Adam.
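The augmentation rule listed above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: an image is assumed to be a list of pixel rows, the 10% margin is assumed to be measured on the height (top and bottom) and on the width (left and right) and rounded down, and the names `hflip`, `crop_margins`, and `augment` are hypothetical. The subsequent resize of every sample to 224 × 224 is omitted.

```python
from typing import List

Image = List[List[int]]  # a grayscale image stored as rows of pixel values


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def crop_margins(img: Image, fraction: float = 0.1) -> Image:
    """Remove `fraction` of the height from the top and the bottom and
    `fraction` of the width from the left and the right (rounded down)."""
    h, w = len(img), len(img[0])
    dy, dx = int(h * fraction), int(w * fraction)
    return [row[dx:w - dx] for row in img[dy:h - dy]]


def augment(img: Image) -> List[Image]:
    """Expand one original image into three samples: original, flipped, cropped."""
    return [img, hflip(img), crop_margins(img)]


if __name__ == "__main__":
    image = [[10 * r + c for c in range(10)] for r in range(10)]  # 10x10 toy image
    original, flipped, cropped = augment(image)
    print(flipped[0])                      # first row, reversed
    print(len(cropped), len(cropped[0]))   # -> 8 8
```

Because each original contributes exactly three samples, the augmented dataset is three times the size of the combined MCWRD2018 and DAWN2020 data before the 80/20 split.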

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Al-Haija, Q.A.; Gharaibeh, M.; Odeh, A. Detection in Adverse Weather Conditions for Autonomous Vehicles via Deep Learning. AI 2022, 3, 303–317.

- Multi-Class Weather Dataset for Image Classification—Mendeley Data. Available online: https://data.mendeley.com/datasets/4drtyfjtfy/1 (accessed on 8 April 2025).

- DAWN—Mendeley Data. Available online: https://data.mendeley.com/datasets/766ygrbt8y/3 (accessed on 8 April 2025).

- Ooi, Y.-M.; Chang, C.-C.; Su, Y.-M.; Chang, C.-M. Vision-Based UAV Localization on Various Viewpoints. IEEE Access 2025, 13, 38317–38324.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2020, arXiv:1905.11946.

- Hwang, K. Cloud Computing for Machine Learning and Cognitive Applications; The MIT Press: Cambridge, MA, USA, 2017.

- Welcome to Python.org. Available online: https://www.python.org/ (accessed on 8 April 2025).

- PyTorch. Available online: https://pytorch.org/ (accessed on 8 April 2025).

Procedure: Training and testing procedures in our weather perception approach.

- Input: (01) data: a combination of MCWRD2018 and DAWN2020.

- Variables: (02) augmenting-data: a dataset; (03) training-data: a dataset; (04) testing-data: a dataset; (05) model: our machine learning model; (06) benchmarks: the models used in [1].

- Initial phase: (07) augmenting-data ← augment data; (08) training-data ← 80% of augmenting-data; (09) testing-data ← the remaining 20% of augmenting-data.

- Training phase: (10) train model on training-data; (11) train benchmarks on training-data.

- Evaluating phase: (12) evaluate model on testing-data; (13) evaluate benchmarks on testing-data.
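The procedure above maps onto a short driver loop. The sketch below is hypothetical: `augment`, `train`, and `evaluate` are caller-supplied placeholders rather than functions from the paper, the 80/20 split is assumed to be a seeded random shuffle of the augmented samples (the paper does not state how the 20% was drawn), and the toy usage replaces real networks with trivial stand-ins so that only the control flow is exercised.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple, TypeVar

S = TypeVar("S")  # one sample, e.g. an (image, label) pair; kept abstract here


def split_80_20(samples: Sequence[S], seed: int = 0) -> Tuple[List[S], List[S]]:
    """Shuffle the sample indices once and cut them 80/20, so the training
    and testing sets are mutually exclusive."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    cut = len(idx) * 8 // 10
    return [samples[i] for i in idx[:cut]], [samples[i] for i in idx[cut:]]


def run_procedure(
    data: Sequence[S],
    augment: Callable[[S], List[S]],
    models: Dict[str, object],
    train: Callable[[object, List[S]], None],
    evaluate: Callable[[object, List[S]], float],
) -> Dict[str, float]:
    """Run the initial, training and evaluating phases for every model."""
    # Initial phase: augment every original sample, then split 80/20.
    augmented = [out for sample in data for out in augment(sample)]
    training, testing = split_80_20(augmented)
    # Training phase: the developed model and the benchmarks see the same data.
    for model in models.values():
        train(model, training)
    # Evaluating phase: score every model on the held-out testing set.
    return {name: evaluate(model, testing) for name, model in models.items()}


if __name__ == "__main__":
    # Toy stand-ins: integers as "images" and dicts as "models".
    scores = run_procedure(
        list(range(100)),
        augment=lambda s: [s, -s - 1, s + 1000],  # three variants per sample
        models={"developed": {}, "benchmark": {}},
        train=lambda m, d: m.update(n_train=len(d)),
        # dummy "score": the share of augmented samples used for training
        evaluate=lambda m, d: m["n_train"] / (m["n_train"] + len(d)),
    )
    print(scores)
```

Sharing one split across all models keeps the comparison fair, because the developed model and the benchmarks are trained and tested on identical samples.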

| Model | Epoch | Accuracy (%) | Parameters (M) | GFLOPs |
|---|---|---|---|---|
| Developed model | 290 | 98.37 | 0.3972 | 0.0955 |
| EfficientNet-b0 | 282 | 98.61 | 4.0165 | 0.4139 |
| ResNet-50 | 297 | 96.75 | 23.5224 | 4.1317 |
| SqueezeNet | 140 | 73.82 | 1.2554 | 0.8191 |
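The efficiency gap in the table above can be quantified with simple ratios. The sketch below only re-uses the reported accuracy, parameter, and GFLOPs values; the dictionary layout and the function name `ratios_vs` are illustrative choices, not part of the paper.

```python
# (accuracy %, parameters in millions, GFLOPs) copied from the table above
RESULTS = {
    "Developed model": (98.37, 0.3972, 0.0955),
    "EfficientNet-b0": (98.61, 4.0165, 0.4139),
    "ResNet-50": (96.75, 23.5224, 4.1317),
    "SqueezeNet": (73.82, 1.2554, 0.8191),
}


def ratios_vs(reference: str, other: str) -> tuple[float, float, float]:
    """Return (accuracy gap in percentage points, parameter ratio, GFLOPs ratio)
    of `other` relative to `reference`."""
    acc_r, par_r, flo_r = RESULTS[reference]
    acc_o, par_o, flo_o = RESULTS[other]
    return acc_o - acc_r, par_o / par_r, flo_o / flo_r


for name in ("EfficientNet-b0", "ResNet-50", "SqueezeNet"):
    gap, params, flops = ratios_vs("Developed model", name)
    print(f"{name}: {gap:+.2f} pp accuracy, {params:.1f}x params, {flops:.1f}x GFLOPs")
```

For example, EfficientNet-b0 is 0.24 percentage points more accurate than the developed model but uses about 10.1× as many parameters and 4.3× as many GFLOPs, while ResNet-50 is 1.62 points less accurate with roughly 59× the parameters.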

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, P.-T.; Tsai, T.-Y.; Chang, C.-C. Lightweight Model for Weather Prediction. Eng. Proc. 2025, 108, 18. https://doi.org/10.3390/engproc2025108018

Wu P-T, Tsai T-Y, Chang C-C. Lightweight Model for Weather Prediction. Engineering Proceedings. 2025; 108(1):18. https://doi.org/10.3390/engproc2025108018

Chicago/Turabian Style: Wu, Po-Ting, Ting-Yu Tsai, and Che-Cheng Chang. 2025. "Lightweight Model for Weather Prediction" Engineering Proceedings 108, no. 1: 18. https://doi.org/10.3390/engproc2025108018

APA Style: Wu, P.-T., Tsai, T.-Y., & Chang, C.-C. (2025). Lightweight Model for Weather Prediction. Engineering Proceedings, 108(1), 18. https://doi.org/10.3390/engproc2025108018