1. Introduction

Diabetes is a chronic metabolic disease characterized by high blood glucose levels, which result from inadequate insulin production, impaired insulin action, or a combination of the two. Uncontrolled diabetes can lead to major and frequently fatal complications such as heart disease, renal failure, neuropathy, retinopathy (which can cause blindness), and even the loss of a limb because of nerve damage. According to the World Health Organization (WHO), millions of people worldwide, spanning all age groups and socioeconomic backgrounds, suffer from diabetes, making it one of the most common non-communicable diseases. Rapid urbanization, shifting dietary patterns, sedentary lifestyles, elevated stress levels, and unhealthy behavioral patterns are all contributing to the alarming rise in the prevalence of diabetes and of other chronic lifestyle-related diseases. As the global health burden increases, healthcare systems everywhere are increasingly turning to solutions that go beyond conventional clinic-based treatment. Remote patient monitoring (RPM) can effectively address the limitations of traditional diabetes management techniques. Using digital tools such as wearable sensors, smartphone apps, and cloud-based platforms, RPM systems allow continuous tracking of patient health data. By enabling real-time monitoring, early detection of abnormal glucose fluctuations, and prompt interventions, RPM greatly lessens the need for frequent hospital stays and minimizes complications. RPM also improves overall quality of life, patient engagement, and compliance by offering individualized, data-driven care. Conventional approaches to diabetes risk detection frequently depend on manual score estimations or static rule-based algorithms, which are insufficiently flexible and accurate for changing patient circumstances.
In contrast, machine learning (ML) approaches are well suited to spotting intricate patterns in patient data, which improves the ability to forecast the course of a disease and customize treatments. Recent developments in machine learning, especially in feature engineering and deep learning, have opened new avenues for the intelligent diagnosis and treatment of diabetes. Examples of such intelligent systems include mobile apps for self-managed insulin therapy, AI-driven virtual coaching programs, automated short message service (SMS) alerts for risk notification, and wearable continuous glucose monitors (CGMs). The literature has repeatedly shown that combining RPM technologies with machine learning improves glycemic control while also increasing patient autonomy and satisfaction. Proactive healthcare practices and routine self-monitoring have been shown to lower average blood glucose levels and improve patient outcomes. Nevertheless, a number of difficulties remain in spite of these developments. One of the main drawbacks of the systems in use today is that predictive models are built in isolation and do not integrate smoothly with real-time patient data or personalized health records. Furthermore, standardized, vendor-neutral platforms that can integrate various healthcare data streams and devices are lacking. This fragmentation presents significant obstacles to scalability and usability in actual healthcare settings. To fill these gaps, a thorough, intelligent, and integrated remote monitoring framework that supports real-time data collection, sophisticated analytics, and customized feedback mechanisms is urgently needed. This study proposes a framework that uses wearable technology, smartphones, and machine learning to detect diabetes early, monitor it continuously, and manage it effectively.

2. Literature Review

The authors of the study "Comparative Performance Analysis of Quantum Machine Learning with Deep Learning for Diabetes" [1] employed a Multi-Layer Perceptron (MLP) architecture with four hidden layers of 16, 32, 8, and 2 neurons, respectively, to build a deep learning (DL) model for predicting diabetes. This configuration was chosen because it performed better. With an accuracy of 95%, the DL model performed exceptionally well. The authors of "A Remote Healthcare Monitoring Framework for Diabetes Prediction Using Machine Learning" [2] employed a support vector machine (SVM) model to assess diabetes risk. Using tenfold stratified cross-validation, the SVM model achieved an accuracy of 83.20%. With a sensitivity of 87.20% and a specificity of 79%, it performs well in determining diabetes risk. To support better management and early identification of diabetes, this framework facilitates communication between the patient and the physician. The study "Pima Indians Diabetes Mellitus Classification Based on Machine Learning (ML) Algorithms" [3] employed a number of machine learning models to classify diabetes using the Pima Indians dataset. The Bayes classifier was reported to perform well in binary classification tasks, especially when optimized with particular features. In addition, the random forest classifier achieved an accuracy of 94%, even though maintaining performance is challenging when analyzing a large feature set. With an accuracy of 94.44%, the J48 decision tree model demonstrated good performance. The paper emphasizes the importance of testing models with metrics such as accuracy, precision, sensitivity, and specificity. It demonstrates the significant contribution that machine learning algorithms, particularly the J48 decision tree, make to improving diabetes diagnosis. Deep neural networks (DNNs) were highlighted in the systematic review "Machine learning and deep learning prediction models for type 2 diabetes" [4], which examined models to evaluate their ability to predict type 2 diabetes. Although DNNs handled big datasets, with some instances achieving accuracy levels of 90%, they had a low average accuracy compared with other techniques. In classification tasks, traditional machine learning methods such as gradient boosting trees (GBTs), random forests (RFs), and support vector machines (SVMs) produced reliable and consistent results. The study highlights both deep learning and conventional machine learning techniques, along with the need for precise comparisons between models, including those for type 2 diabetes prognosis.

Machine learning models for diabetes prediction were examined in the research paper "Retracted: A Novel Diabetes Healthcare Disease Prediction Framework Using Machine Learning Techniques" [5]. The authors employed four models: logistic regression (LR), random forest (RF), support vector machine (SVM), and K-Nearest Neighbors (KNN). Prior to model training, the dataset was carefully preprocessed by eliminating outliers and handling missing values. With an accuracy of 83%, the random forest and support vector machine models demonstrated strong performance. The accuracy of the logistic regression model increased by about 3% following hyperparameter tuning, although the precise figure is not specified. The study demonstrated the potential of machine learning techniques, with the RF and SVM models being accurate in this framework. The models were evaluated using accuracy, precision, recall, and F1 scores, which gave a thorough assessment of their performance. The authors of the study "Machine Learning Based Diabetes Classification and Prediction for Healthcare Applications" [6] employed both long short-term memory (LSTM) and multilayer perceptron (MLP) models to classify and forecast diabetes. They were able to classify diabetes with a residual of 1%. The LSTM model for predicting blood glucose levels performed even better, with an accuracy of 87.26%. The PIMA Indian diabetes database was used to compare the two models with other approaches. The results showed that MLP, LSTM, and newer machine learning techniques have the potential to support diabetes management through accurate classification and prediction, with LSTM achieving very high accuracy. Because the Hidden Markov Model (HMM) can track the progression of diabetes over time from repeated clinical measurements in electronic medical record (EMR) data, the author of the study [7] chose it to validate the Framingham Diabetes Risk Score Model (FDRSM) for predicting the 8-year risk of diabetes. The accuracy of the HMM model was evaluated using the area under the receiver operating characteristic curve (AROC). In a sample of 911 people, the AROC value was 86.9%, which is better than the earlier AROC values of 78.6% and 85%. The HMM therefore outperformed the models from other studies that used FDRSM to predict the risk of diabetes. Given its high predictive accuracy, the HMM model is a useful tool for determining who is at risk of developing diabetes in the future. The study "Predicting Diabetes Mellitus With Machine Learning Techniques" [8] examined a number of machine learning models for diabetes prediction. When tested on the Luzhou dataset, the J48 decision tree performed well, achieving an accuracy greater than 0.76. Even though the random forest (RF) model was less effective, it produced accurate diabetes prediction results. When blood glucose levels were the only features used, the neural network model did not perform well, suggesting that it is not the best option for this dataset. Across the prediction techniques, the random forest model obtained the highest accuracy, 0.8084, which is a strong result.

In the study [9], the authors used the Extreme Gradient Boosting (XGBoost) model to classify diabetes-related conditions based on electrocardiogram (ECG) data. The model was trained to predict three groups: "no diabetes", "prediabetes", and "diabetes type 2". The XGBoost model showed the best performance, achieving 97.1% precision, 96.2% recall, and 96.8% overall accuracy. Its F1 score, which combines precision and recall, is 96.6%. These results indicate that the model is highly accurate and reliable in the diagnosis of diabetes and prediabetes. The calibrated model also had a low mean measurement error of 0.06, further confirming its effectiveness. The study presents XGBoost as a powerful tool for diagnosing diabetes from ECG data. A separate research study [10] forecast the changes in three-month fasting blood glucose and glycated hemoglobin levels in patients diagnosed with Type 2 Diabetes Mellitus, using various machine learning algorithms. The information for this study was sourced from the PHS System and the MRHM System of Health. The paper [11] introduces a method to explain machine learning predictions. It addresses the challenge of understanding how these models work in healthcare applications. This approach ensures that predictions remain accurate while also providing clear explanations. The method was tested on predicting a diagnosis of type 2 diabetes within a year, and it provided explanations for 87.4% of the patients whose outcomes were correctly predicted. The study [12], "Predicting Type 2 Diabetes Using Logistic Regression and Machine Learning Approaches," reports an accuracy of 78.26%. A classification tree is used to validate the logistic regression results. The study highlighted glucose, BMI, and age as key predictors of diabetes. It also reported a cross-validation error rate of 21.74%, reflecting reasonable accuracy. Additionally, the study found that a specific logistic regression model, which included four interaction terms, performed better than other combinations, indicating the model's robustness.

The paper [13] discusses various methods for diabetes prediction that use machine learning and deep learning techniques. One study it covers presents a remote healthcare monitoring framework for diabetes risk prediction. This system uses machine learning and integrates multiple healthcare devices to collect data, and it achieved competitive performance in diabetes prediction. Another study focuses on early detection of diabetes risk factors. It employs the SMOTE-ENN method to balance the dataset and uses K-Nearest Neighbors (KNN), achieving a high accuracy of 98.38%. The paper [14] introduces a method to explain machine learning predictions. It addresses the challenge of understanding how these models work in healthcare applications. This approach ensures that predictions remain accurate while also providing clear explanations. The method was tested on predicting a diagnosis of type 2 diabetes within a year, and it provided explanations for 87.4% of the patients whose outcomes were correctly predicted. A recent article [15] discusses the use of various classifiers to detect type 2 diabetes. The article reports that the highest accuracy achieved in its experiments was 90.91%, using the Generalized boosting regression classifier.

3. Methodology

This section describes the methodical process used to conduct this study. The data were analyzed using Python 3.10.0. The subsections that follow cover the remaining steps. Data gathering. The data were collected from the Behavioral Risk Factor Surveillance System (BRFSS) and made available on Kaggle [20].

Table 1 displays a sample of the dataset with a description of its attributes.

The data acquired represent the BRFSS in a clean form: a total of 253,680 records that reflect the actual responses to the CDC's BRFSS 2015 survey. The dataset includes nine attributes related to the class label. The class variable (Diabetes binary) is a binary variable that indicates whether a case has diabetes. The entire feature set was used in this work to train the proposed models.

Data preparation. Preparing data in a form that supports reliable outcomes is one of the most time-consuming steps in building prediction models, particularly for healthcare decision support systems. Real-world scenarios typically yield raw data that are unreliable, imbalanced, and unclean. Various pre-processing techniques must therefore be applied to improve the quality of the data before training a model on real-world data. ML offers a variety of data preparation techniques; for example, imputers can be used to deal with missing values. Several approaches were taken in this study to address dataset inconsistencies. In an imbalanced data scenario, one class has far fewer records than the others. Research typically focuses on the minority class in order to balance the classes in a dataset.
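
The two preparation steps mentioned above, filling missing values and balancing the classes, can be illustrated with a minimal standard-library sketch. It is not the study's actual pipeline: the column names (`BMI`, `Diabetes_binary`) and the toy records are assumptions made for illustration, and plain random oversampling stands in for more elaborate balancing methods such as SMOTE.

```python
import random
from collections import Counter
from statistics import median


def impute_median(rows, column):
    """Replace None in one column with the median of the observed values."""
    observed = [r[column] for r in rows if r[column] is not None]
    fill = median(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]


def oversample_minority(rows, label, seed=0):
    """Randomly duplicate minority-class rows until both classes are equal in size."""
    rng = random.Random(seed)
    counts = Counter(r[label] for r in rows)
    minority, minority_size = min(counts.items(), key=lambda kv: kv[1])
    majority_size = max(counts.values())
    minority_rows = [r for r in rows if r[label] == minority]
    extra = [rng.choice(minority_rows) for _ in range(majority_size - minority_size)]
    return rows + extra


# Toy records; column names and values are hypothetical.
records = [
    {"BMI": 27.0, "Diabetes_binary": 0},
    {"BMI": None, "Diabetes_binary": 0},
    {"BMI": 31.0, "Diabetes_binary": 0},
    {"BMI": 24.0, "Diabetes_binary": 0},
    {"BMI": 35.0, "Diabetes_binary": 1},
]

clean = impute_median(records, "BMI")
balanced = oversample_minority(clean, "Diabetes_binary")
class_counts = Counter(r["Diabetes_binary"] for r in balanced)
print(class_counts)
```

In practice, balancing is usually applied only to the training split so that evaluation still reflects the original class distribution.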

3.1. Machine Learning

The following ML algorithms were applied to our dataset using RapidMiner 9.10.

KNN

KNN is a machine learning algorithm that bases its prediction on how close a new sample is to the training samples in feature space. The classifier predicts the class label of a new sample by finding the k training samples that are most similar to it. To make it easier to determine a class majority, k is chosen to be an odd number. To achieve better accuracy and other evaluation metrics, k is set to 3 (where k is the number of nearest neighbors considered when making a prediction).
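
The majority vote among the k nearest neighbors can be sketched in a few lines of Python. This is an illustrative sketch rather than the RapidMiner operator used in the study; the two features (BMI and age) and all values are invented, and Euclidean distance is assumed.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Return the majority label among the k training points nearest to query."""
    # Pair every training point's Euclidean distance to the query with its label.
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Toy training data: (BMI, age) pairs with a binary diabetes label (hypothetical).
train_X = [(22.0, 30.0), (24.0, 35.0), (23.0, 28.0),
           (33.0, 55.0), (35.0, 60.0), (31.0, 58.0)]
train_y = [0, 0, 0, 1, 1, 1]

prediction = knn_predict(train_X, train_y, (32.0, 57.0), k=3)
print(prediction)
```

With an odd k and two classes, the vote can never tie, which is the reason for the odd-k convention described above. Features on different scales are usually normalized first so that no single feature dominates the distance.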

Logistic Regression

This supervised machine learning algorithm uses the logistic function to estimate the probability of an outcome. Logistic regression is used for binary classification, and the output takes the form of 0 or 1.
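
As a brief illustration of how the logistic function turns a weighted sum of features into a probability and then into a 0 or 1 label, consider the sketch below. The weights, bias, and feature values are hypothetical and are not fitted to the study's data; a 0.5 decision threshold is assumed.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(features, weights, bias):
    """Probability of the positive class for one sample."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def predict(features, weights, bias, threshold=0.5):
    """Binary output: 1 if the probability reaches the threshold, else 0."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0


# Hypothetical coefficients for two features, (BMI, age).
weights = [0.08, 0.03]
bias = -4.0

p = predict_proba([32.0, 57.0], weights, bias)
label = predict([32.0, 57.0], weights, bias)
print(round(p, 3), label)
```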

Naive Bayes

Naive Bayes classifiers predict the class of a data instance using Bayes' theorem. These classifiers operate under the assumption that all features in the data are independent of each other. This simplifies the model and makes it computationally more efficient. Naive Bayes classifiers are commonly used in natural language processing, email filtering, and text classification tasks due to their speed, accuracy, and simplicity.
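
The independence assumption means the class score is just the prior multiplied by one likelihood per feature. The sketch below shows this for the Gaussian variant of Naive Bayes, which is an assumption here since the text does not state which variant was used; the toy data are invented.

```python
import math
from collections import defaultdict
from statistics import mean, pvariance


def fit_gaussian_nb(X, y):
    """Estimate the class prior and per-feature mean and variance for each class."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        columns = list(zip(*rows))
        model[label] = {
            "prior": len(rows) / len(X),
            "means": [mean(c) for c in columns],
            # A small constant avoids division by zero for constant features.
            "vars": [pvariance(c) + 1e-9 for c in columns],
        }
    return model


def log_gaussian(x, mu, var):
    """Log of the normal density with mean mu and variance var, evaluated at x."""
    return -0.5 * math.log(2 * math.pi * var) - (x - mu) ** 2 / (2 * var)


def predict_nb(model, row):
    """Pick the class with the highest log prior plus summed log likelihoods."""
    scores = {
        label: math.log(p["prior"])
        + sum(log_gaussian(x, m, v) for x, m, v in zip(row, p["means"], p["vars"]))
        for label, p in model.items()
    }
    return max(scores, key=scores.get)


# Toy data: (BMI, age) with a binary label (hypothetical values).
X = [(22.0, 30.0), (24.0, 35.0), (23.0, 28.0),
     (33.0, 55.0), (35.0, 60.0), (31.0, 58.0)]
y = [0, 0, 0, 1, 1, 1]

model = fit_gaussian_nb(X, y)
print(predict_nb(model, (32.0, 57.0)))
```

Summing log likelihoods rather than multiplying raw probabilities is a common design choice that avoids numerical underflow when many features are involved.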

Random Forest

Random Forest is a popular supervised machine learning algorithm that works well for both classification and regression tasks. It is an ensemble learning algorithm that combines several decision trees to generate predictions. Each decision tree in the forest is trained on a different bootstrap sample of the dataset.
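
The two ideas behind Random Forest, training each tree on a bootstrap sample and combining the trees by majority vote, can be shown with a deliberately simplified sketch. To keep it short, single-split decision stumps stand in for full decision trees and each stump sees one randomly chosen feature; a real Random Forest grows deeper trees and samples features at every split. All names and data are illustrative.

```python
import random
from collections import Counter


def fit_stump(X, y, feature):
    """Find the threshold on one feature that misclassifies the fewest samples."""
    best = None
    for threshold in sorted({row[feature] for row in X}):
        for left_label, right_label in ((0, 1), (1, 0)):
            errors = sum(
                (left_label if row[feature] <= threshold else right_label) != label
                for row, label in zip(X, y)
            )
            if best is None or errors < best[0]:
                best = (errors, feature, threshold, left_label, right_label)
    return best[1:]


def stump_predict(stump, row):
    feature, threshold, left_label, right_label = stump
    return left_label if row[feature] <= threshold else right_label


def fit_forest(X, y, n_trees=25, seed=0):
    """Train n_trees stumps, each on its own bootstrap sample and random feature."""
    rng = random.Random(seed)
    n, n_features = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # sample rows with replacement
        feature = rng.randrange(n_features)         # random feature for this stump
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feature))
    return forest


def forest_predict(forest, row):
    """Majority vote over the predictions of all stumps."""
    votes = Counter(stump_predict(s, row) for s in forest)
    return votes.most_common(1)[0][0]


# Toy data: (BMI, age) with a binary label (hypothetical values).
X = [(22.0, 30.0), (24.0, 35.0), (23.0, 28.0),
     (33.0, 55.0), (35.0, 60.0), (31.0, 58.0)]
y = [0, 0, 0, 1, 1, 1]

forest = fit_forest(X, y)
print(forest_predict(forest, (40.0, 70.0)), forest_predict(forest, (20.0, 25.0)))
```

Because every stump is trained on a slightly different resample, individual stumps can be wrong in different ways, and the vote averages those errors out.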

3.2. Framework

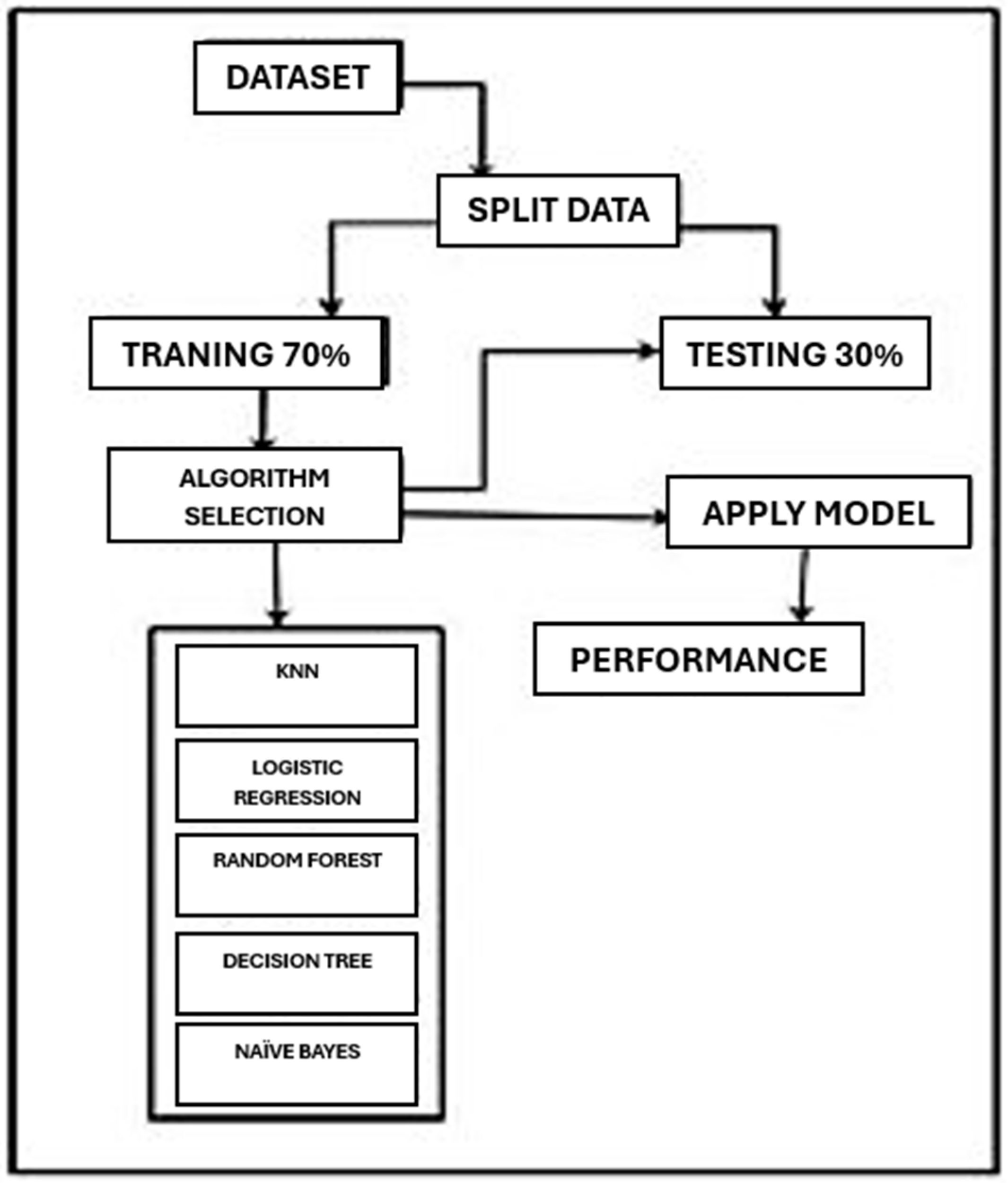

We now discuss the framework used to apply the different algorithms and obtain results. The framework of our process is shown in Figure 1.

4. Results

This section presents a detailed analysis of the results obtained after applying multiple machine learning techniques. The confusion matrix is shown in Table 2.

Table 3 shows all our results for the different algorithms. Accuracy, precision, recall, and F1 score have been calculated.
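
For reference, the four reported metrics can all be derived from the entries of a binary confusion matrix. The sketch below uses invented counts, not the values from Table 2, and treats the diabetic class as the positive class (an assumption).

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: true positives, false positives, false negatives, true negatives.
metrics = classification_metrics(tp=80, fp=20, fn=10, tn=90)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

On imbalanced data, accuracy alone can look high even when few positive cases are found, which is why precision, recall, and F1 are reported alongside it.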

This segment gives a brief overview of our findings for the model, as shown in Figure 2. We applied various algorithms to the dataset using RapidMiner and compared their predictions in terms of accuracy, recall, precision, F-measure, and confusion matrix. The K-Nearest Neighbor algorithm had an accuracy of 76.61%. In contrast, the Decision Tree algorithm achieved 94.89% accuracy, the Random Forest algorithm 95.12%, Logistic Regression 95.05%, and Naïve Bayes 94.81%. Random Forest had the highest accuracy, 95.12%, even without the use of SMOTE, and can therefore be used to predict type 2 diabetes for this dataset. These are the algorithmic performances that we recorded; in the future, we will try to improve them. Table 3 compares the results of the different algorithms and reports precision, recall, and F1 measure for the dataset. Random Forest achieved the highest accuracy, precision, recall, and F1 score. Various methods and techniques can be applied to improve the results.

5. Conclusions

This paper investigated and applied different tools and methods for the early-stage prediction of type 2 diabetes, a chronic illness that still affects millions of people worldwide. Type 2 diabetes is caused by the development of insulin resistance in the body's cells or by insufficient insulin production by the pancreas, and it is characterized by high and uncontrolled blood sugar levels. Early detection of those who are at risk of developing this illness can greatly improve quality of life, preventative care, and health outcomes. By acting before the condition worsens, it becomes feasible to manage the illness more effectively and possibly prevent its onset entirely. To address this pressing healthcare issue, we used machine learning techniques to create and train a predictive model that predicts the likelihood of type 2 diabetes. The Logistic Regression algorithm was selected for our main model because of its ease of use, interpretability, and effectiveness in binary classification tasks. The dataset used for training and assessment was carefully selected according to WHO guidelines to ensure the accuracy and applicability of the features. Several other machine learning algorithms, such as K-Nearest Neighbors (KNN), Random Forest, Decision Tree, Naïve Bayes, and Linear Regression, were also used to ensure a reliable and comparable analysis. The ability of each of these models to predict type 2 diabetes was assessed. The Random Forest algorithm performed better than the others, attaining the highest accuracy of 95.12%. This demonstrates how well the model captures intricate data relationships and generates precise predictions, which makes it a strong fit for this application. The study's findings highlight the potential of machine learning and data-driven strategies to transform established healthcare systems.
Healthcare providers can move from reactive to proactive disease management by incorporating these predictive models into remote patient monitoring and real-world clinical frameworks. In addition to advancing scholarly knowledge of diabetes prediction, this study opens the door to intelligent, easily accessible, and scalable healthcare solutions in the future. In summary, machine learning-based early detection of type 2 diabetes presents encouraging prospects for lowering the disease's worldwide burden. Future research can concentrate on improving model performance using deep learning and hybrid approaches, integrating real-time patient data, and creating user-friendly applications for wider accessibility. If they continue to advance, such predictive systems have the potential to become valuable instruments in the fight against chronic illnesses and the advancement of global health.