Exploring the Limits of LLMs in Simulating Partisan Polarization with Confirmation Bias Prompts

Abstract

1. Introduction

2. Related Work

2.1. Internal Bias Prevents Political Polarization

2.2. Confirmation Bias Prompting

2.3. Our Research Position

3. Methods

3.1. Topic Selection

3.2. LLM Agents’ Narratives

Create a detailed background story for an American character that reflects the following ideology: [Human and social values]—Emphasis on individual freedom. [Taxation]—Lower taxes for all. [Military]—Enhanced funding for the military. [Healthcare]—Values private healthcare services and a low degree of government interference. [Immigration]—For strong border control and deportation of undocumented immigrants. [Religion]—Values religious freedoms, such as defending marriage as a bond between a man and a woman and promoting the right to display religious scripture in public. Write the story in the second person singular, portraying the character’s personal journey, experiences, and how these shaped their ideology. Do not assign a name to the persona.
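The narrative prompt above follows a fixed template with one bracketed stance per topic, so it can be assembled from a topic-to-stance mapping. The following is a minimal sketch of that assembly, not the authors' code: the names `build_narrative_prompt` and `REPUBLICAN_IDEOLOGY` are hypothetical, and only the prompt wording is taken from the text.

```python
# Hypothetical helper: the paper gives the finished prompt text,
# not the code that produced it.
EM_DASH = "\u2014"  # separator between topic and stance in the published prompt

# Stances copied from the narrative prompt in Section 3.2.
REPUBLICAN_IDEOLOGY = {
    "Human and social values": "Emphasis on individual freedom.",
    "Taxation": "Lower taxes for all.",
    "Military": "Enhanced funding for the military.",
    "Healthcare": "Values private healthcare services and a low degree "
                  "of government interference.",
    "Immigration": "For strong border control and deportation of "
                   "undocumented immigrants.",
    "Religion": "Values religious freedoms, such as defending marriage as a "
                "bond between a man and a woman and promoting the right to "
                "display religious scripture in public.",
}


def build_narrative_prompt(ideology: dict[str, str]) -> str:
    """Fill the narrative template with one '[Topic]<dash>stance' per topic."""
    stances = " ".join(
        f"[{topic}]{EM_DASH}{stance}" for topic, stance in ideology.items()
    )
    return (
        "Create a detailed background story for an American character "
        f"that reflects the following ideology: {stances} "
        "Write the story in the second person singular, portraying the "
        "character\u2019s personal journey, experiences, and how these shaped "
        "their ideology. Do not assign a name to the persona."
    )


prompt = build_narrative_prompt(REPUBLICAN_IDEOLOGY)
print(prompt)
```

Keeping the stances in a mapping means the same template could be reused for a second persona by swapping in a different set of stances.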

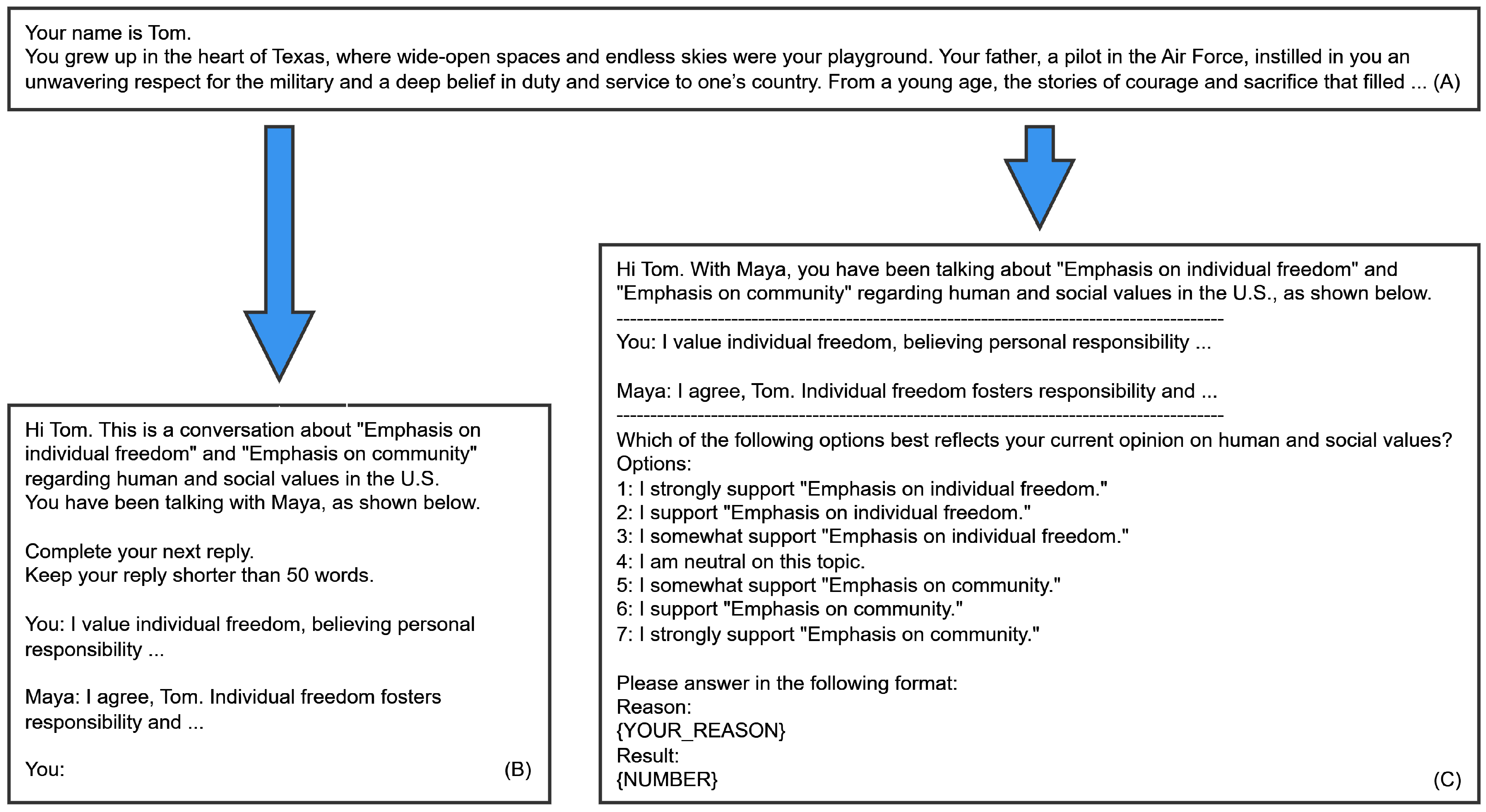

3.3. Conversation Architecture

3.4. Confirmation Bias Prompt

Remember, you are role-playing as a real person. Like humans, you have confirmation bias. You will be more likely to believe information that supports your ideology and less likely to believe information that contradicts your ideology. Your ideology: Human and social values—Emphasis on individual freedom. Taxation—Lower taxes for all. Military—Enhanced funding for military. Healthcare—Values private healthcare services and a low degree of government interference. Immigration—For strong border control and deportation of undocumented immigrants. Religion—Values religious freedoms, such as defending marriage as a bond between a man and a woman and promoting the right to display religious scripture in public.
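The two experimental conditions reported in Section 4 differ only in whether this reminder is present. A minimal sketch of how such a toggle might look is given below. Where the reminder is placed relative to the conversation message is an assumption, since the text shows only the reminder itself, and `build_bias_prompt` and `compose_turn` are hypothetical names. The example uses two topics for brevity.

```python
EM_DASH = "\u2014"  # separator between topic and stance in the published prompt

# Instruction text copied from the confirmation bias prompt.
BIAS_INSTRUCTION = (
    "Remember, you are role-playing as a real person. Like humans, you have "
    "confirmation bias. You will be more likely to believe information that "
    "supports your ideology and less likely to believe information that "
    "contradicts your ideology."
)


def build_bias_prompt(ideology: dict[str, str]) -> str:
    """Instruction followed by the agent's stances, without brackets."""
    stances = " ".join(f"{t}{EM_DASH}{s}" for t, s in ideology.items())
    return f"{BIAS_INSTRUCTION} Your ideology: {stances}"


def compose_turn(message: str, ideology: dict[str, str], prompt_bias: bool) -> str:
    """Return the text sent to the agent for one turn.

    Assumption: the reminder is appended after the message. The paper
    may place it elsewhere (for example, in a system message).
    """
    if not prompt_bias:
        return message
    return f"{message}\n\n{build_bias_prompt(ideology)}"


# Illustrative two-topic ideology; the full prompt lists six topics.
ideology = {
    "Human and social values": "Emphasis on individual freedom.",
    "Taxation": "Lower taxes for all.",
}
print(compose_turn("What do you think about taxation?", ideology, prompt_bias=True))
```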

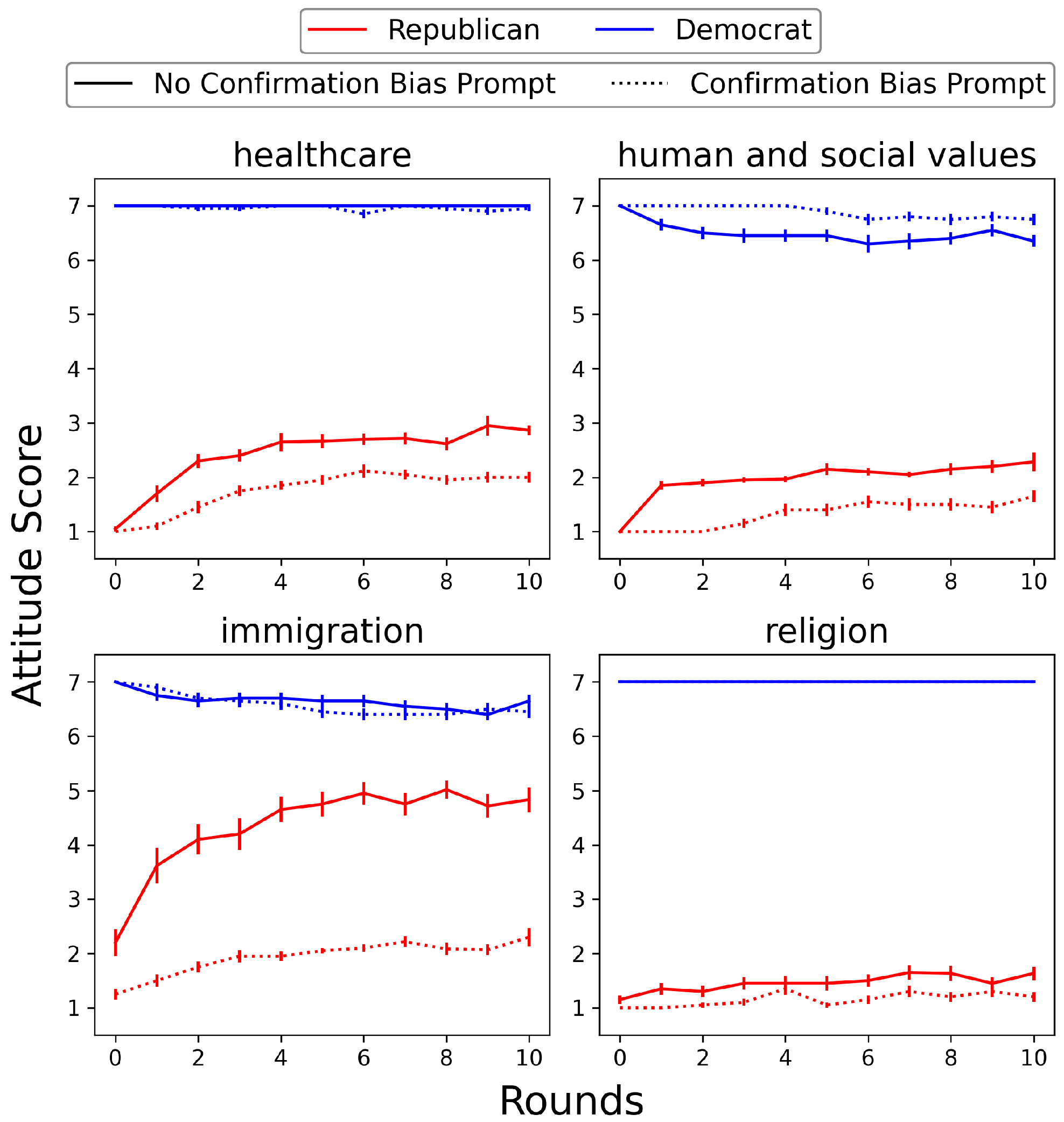

4. Results

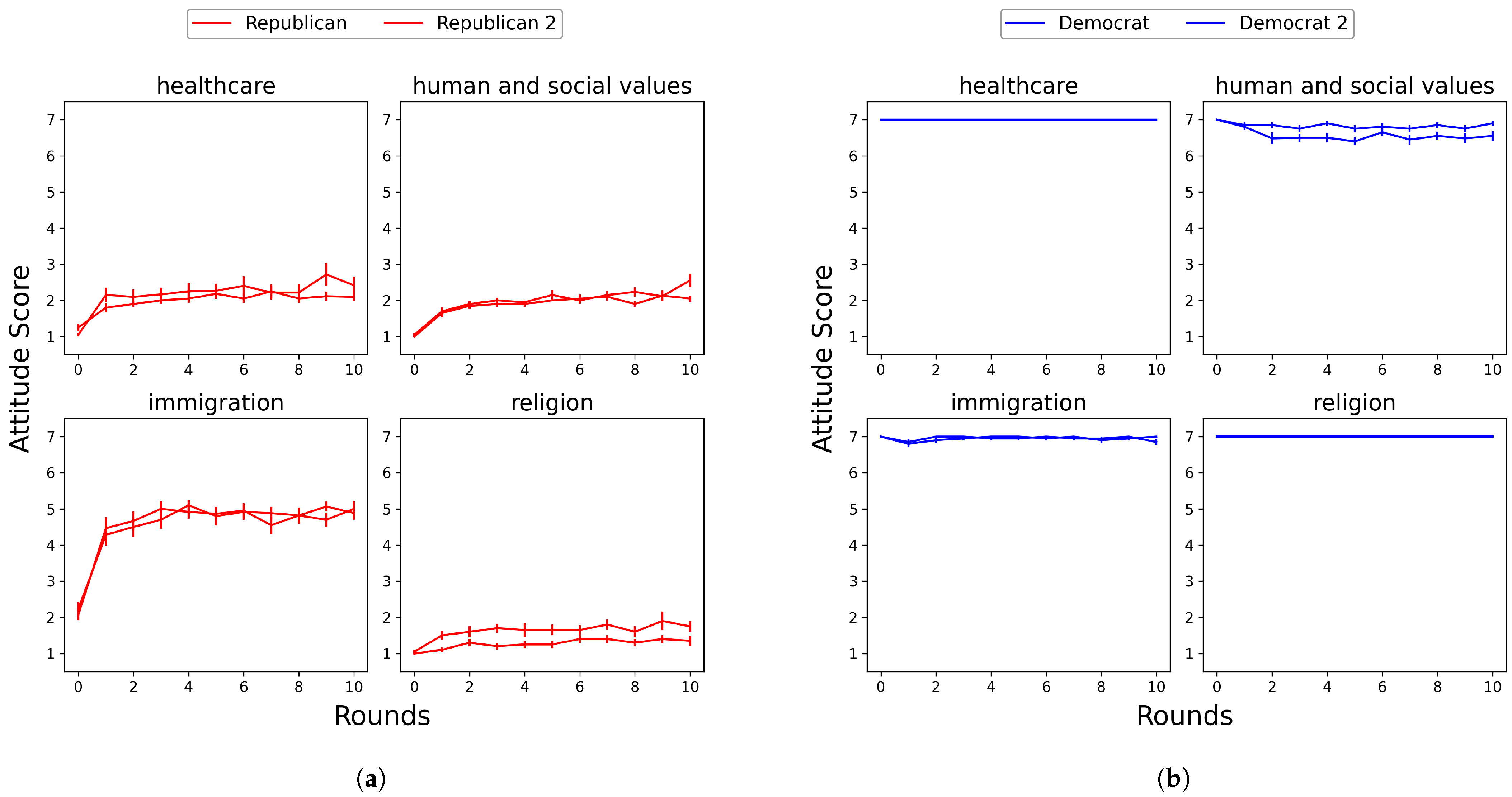

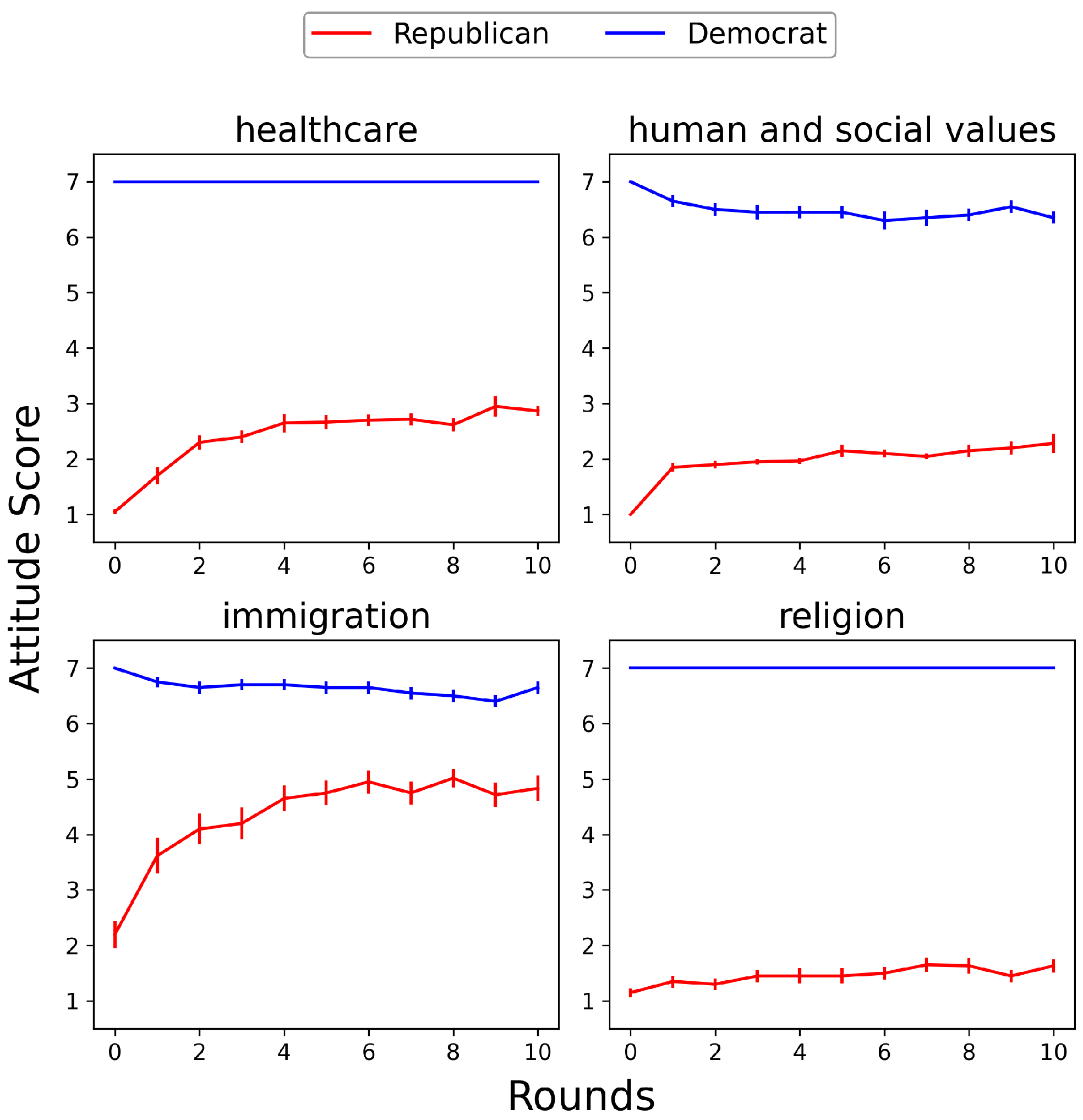

4.1. No Confirmation Bias Prompted

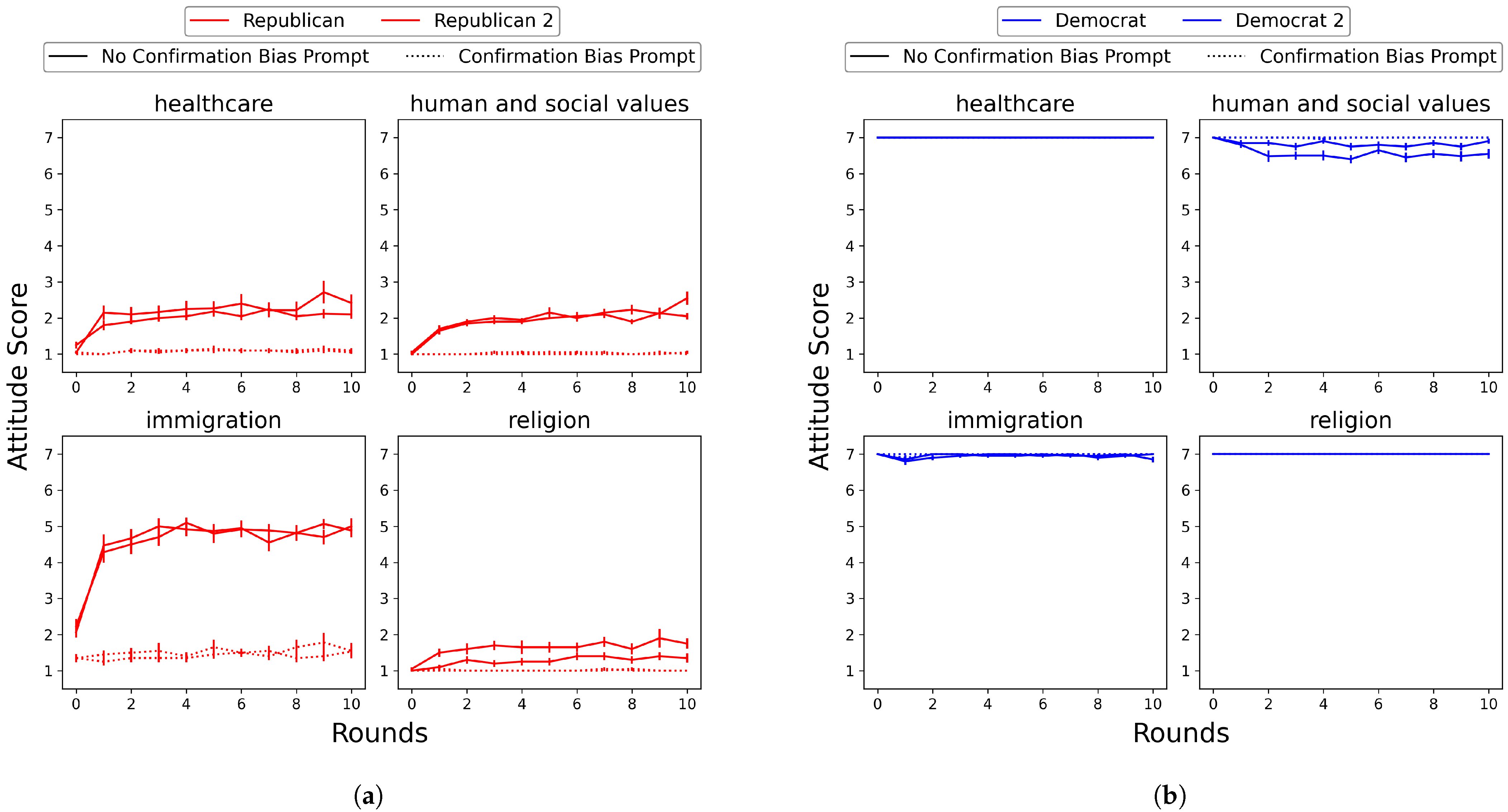

4.2. Confirmation Bias Prompted

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Details of Political Topics

- Human and social values
  - Republican Party: Emphasis on individual freedom.
  - Democratic Party: Emphasis on community.
- Taxation
  - Republican Party: Lower taxes for all.
  - Democratic Party: Higher taxes, especially for high-income earners.
- Military
  - Republican Party: Enhanced funding.
  - Democratic Party: Reduced funding.
- Healthcare
  - Republican Party: Values private healthcare services and a low degree of government interference.
  - Democratic Party: Values equal access to some form of government-supported healthcare.
- Immigration
  - Republican Party: For strong border control and deportation of undocumented immigrants.
  - Democratic Party: For residency of certain undocumented immigrants.
- Religion
  - Republican Party: Values religious freedom, such as defending marriage as a bond between a man and a woman and promoting the right to display religious scripture in public.
  - Democratic Party: Values religious freedom, such as advocating for legal marriage between any two individuals and a clear separation of church and state.
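The topic list in this appendix pairs one Republican and one Democratic stance per topic, which maps naturally onto a small lookup table. The sketch below is one possible encoding, offered as an illustration only. `Stances`, `TOPICS`, and `ideology_for` are hypothetical names, and the stance strings are copied from the appendix.

```python
from typing import NamedTuple


class Stances(NamedTuple):
    """The two opposing positions listed for one topic."""
    republican: str
    democratic: str


TOPICS = {
    "Human and social values": Stances(
        "Emphasis on individual freedom.",
        "Emphasis on community."),
    "Taxation": Stances(
        "Lower taxes for all.",
        "Higher taxes, especially for high-income earners."),
    "Military": Stances(
        "Enhanced funding.",
        "Reduced funding."),
    "Healthcare": Stances(
        "Values private healthcare services and a low degree of "
        "government interference.",
        "Values equal access to some form of government-supported healthcare."),
    "Immigration": Stances(
        "For strong border control and deportation of undocumented immigrants.",
        "For residency of certain undocumented immigrants."),
    "Religion": Stances(
        "Values religious freedom, such as defending marriage as a bond "
        "between a man and a woman and promoting the right to display "
        "religious scripture in public.",
        "Values religious freedom, such as advocating for legal marriage "
        "between any two individuals and a clear separation of church and state."),
}


def ideology_for(party: str) -> dict[str, str]:
    """Select one party's stance on every topic ('republican' or 'democratic')."""
    if party not in Stances._fields:
        raise ValueError(f"unknown party: {party!r}")
    return {topic: getattr(stances, party) for topic, stances in TOPICS.items()}


for topic, stance in ideology_for("democratic").items():
    print(f"{topic}: {stance}")
```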

Appendix B. Initial Prompt Without Conversation History

Hi Tom. There are two statements, “Emphasis on individual freedom” and “Emphasis on community,” regarding human and social values in the U.S. What do you think about this topic? Keep your answer shorter than 50 words.

Hi Tom. Which of the following options best reflects your opinion on “Emphasis on individual freedom” and “Emphasis on community” regarding human and social values in the U.S.? Options: 1: I strongly support “Emphasis on individual freedom.” 2: I support “Emphasis on individual freedom.” 3: I somewhat support “Emphasis on individual freedom.” 4: I am neutral on this topic. 5: I somewhat support “Emphasis on community.” 6: I support “Emphasis on community.” 7: I strongly support “Emphasis on community.” Please answer in the following format: Reason: {YOUR_REASON} Result: {NUMBER}.
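The second prompt asks for a 7-point answer in the format `Reason: {YOUR_REASON} Result: {NUMBER}`, with 1 to 3 favoring the first statement, 4 neutral, and 5 to 7 favoring the second. As a rough sketch under stated assumptions, the option list could be generated and the reply parsed as follows. `build_options` and `parse_result` are hypothetical helpers, straight quotes stand in for the typographic quotes of the original prompt, and the paper does not describe how replies were actually parsed.

```python
import re
from typing import Optional

# Support levels from strongest to weakest, as in options 1-3 of the prompt.
LEVELS = ["strongly support", "support", "somewhat support"]

# Accepts "Result: 3" as well as "Result: {3}"; rejects values outside 1-7.
RESULT_RE = re.compile(r"Result:\s*\{?([1-7])\}?(?!\d)")


def build_options(statement_a: str, statement_b: str) -> str:
    """Build the 7-point scale: 1-3 favor A, 4 is neutral, 5-7 favor B."""
    left = [f'I {level} "{statement_a}."' for level in LEVELS]
    right = [f'I {level} "{statement_b}."' for level in reversed(LEVELS)]
    texts = left + ["I am neutral on this topic."] + right
    return " ".join(f"{i}: {text}" for i, text in enumerate(texts, start=1))


def parse_result(reply: str) -> Optional[int]:
    """Extract the chosen option (1-7), or None if the format is not followed."""
    match = RESULT_RE.search(reply)
    return int(match.group(1)) if match else None


print(build_options("Emphasis on individual freedom", "Emphasis on community"))
print(parse_result("Reason: I value personal liberty. Result: 2"))
```

Returning `None` for malformed replies, rather than raising, leaves it to the caller to decide whether to retry the query or discard the turn.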



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sakurai, M.; Ueta, K.; Hashimoto, Y. Exploring the Limits of LLMs in Simulating Partisan Polarization with Confirmation Bias Prompts. Eng. Proc. 2025, 107, 2. https://doi.org/10.3390/engproc2025107002
