Heart Attack Prediction Using Machine Learning Models: A Comparative Study of Naive Bayes, Decision Tree, Random Forest, and K-Nearest Neighbors

Abstract

1. Introduction

2. Literature Review

3. Methodology

3.1. Data Overview

3.2. Data Preprocessing

- Age;

- Chest Pain Type (cp), Maximum Heart Rate (thalach), ST Depression (oldpeak), and Number of Major Vessels (ca).

3.3. Model Selection

3.3.1. K-Nearest Neighbors (KNN)

3.3.2. Random Forest

3.3.3. Decision Tree

3.3.4. Naïve Bayes

3.4. Model Evaluation

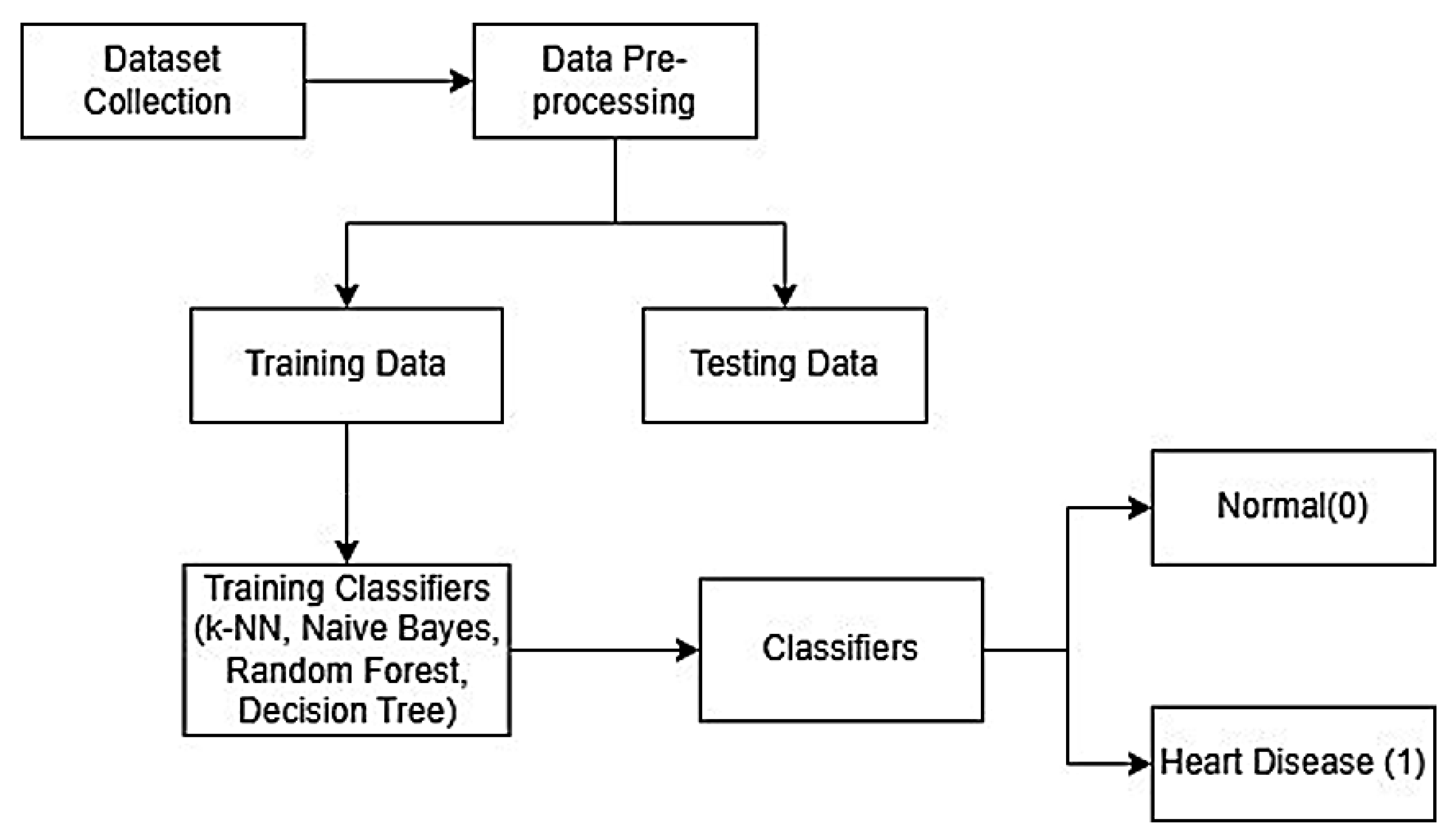

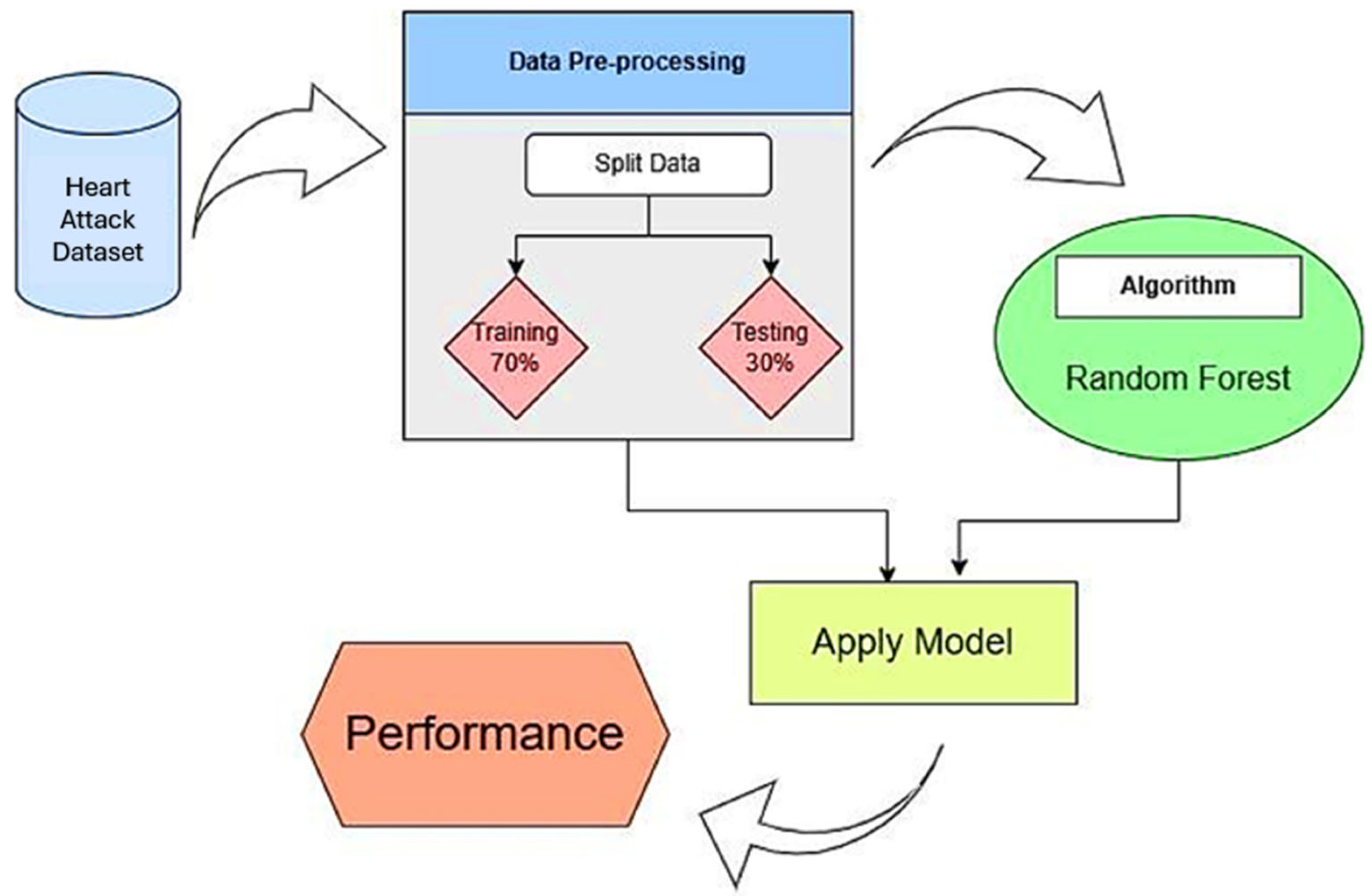

3.5. Proposed Workflow

- Data Collection: We collect 14 features related to cardiovascular health from the Kaggle heart attack dataset.

- Data Preprocessing: We encode the categorical variables, normalize the continuous features, and identify the most relevant features using correlation analysis.

- Model Training: Four machine learning models (KNN, Random Forest, Decision Tree, and Naïve Bayes) are trained on the preprocessed dataset.

- Model Evaluation: We evaluate the accuracy of each model. To make the estimates more robust and to guard against overfitting, we use cross-validation.

- Comparison of Results: We compare the performance of the models and identify the best-performing ones.
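
The workflow steps above can be illustrated with a minimal sketch. It is not the implementation used in this study: it relies only on the Python standard library, covers just one of the four models (KNN), and runs on a small synthetic dataset invented for the example. The function names, the two feature columns, the choice of k = 3, and the five folds are all assumptions made for illustration.

```python
import math
import random
from collections import Counter


def min_max_normalize(rows):
    """Scale every column of `rows` to the [0, 1] range."""
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0
         for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k training points closest to `query`."""
    nearest = sorted(
        zip(train_x, train_y),
        key=lambda pair: math.dist(pair[0], query),
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_val_accuracy(xs, ys, folds=5, k=3, seed=0):
    """Mean KNN accuracy over `folds` shuffled, disjoint test folds."""
    indices = list(range(len(xs)))
    random.Random(seed).shuffle(indices)
    scores = []
    for f in range(folds):
        test_idx = indices[f::folds]          # every `folds`-th shuffled index
        test_set = set(test_idx)
        train_idx = [i for i in indices if i not in test_set]
        train_x = [xs[i] for i in train_idx]
        train_y = [ys[i] for i in train_idx]
        hits = sum(
            knn_predict(train_x, train_y, xs[i], k) == ys[i] for i in test_idx
        )
        scores.append(hits / len(test_idx))
    return sum(scores) / len(scores)


# Synthetic stand-in data (NOT the Kaggle dataset, no clinical meaning):
# two numeric columns loosely named after the age and thalach features.
rng = random.Random(42)
features, labels = [], []
for _ in range(60):
    label = rng.randint(0, 1)
    age = rng.gauss(60 if label else 45, 5)
    thalach = rng.gauss(130 if label else 165, 10)
    features.append([age, thalach])
    labels.append(label)

scaled = min_max_normalize(features)                               # preprocessing
correlations = [abs(pearson(col, labels)) for col in zip(*scaled)]  # relevance
accuracy = cross_val_accuracy(scaled, labels)                       # evaluation
print("abs. correlation with label:", correlations)
print("mean cross-validated accuracy:", accuracy)
```

For brevity the sketch scales the whole dataset once before splitting it. A stricter variant would compute the scaling parameters on each training fold only, so that no information from the test fold leaks into preprocessing.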

4. Result Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Rajdhan, A.; Agarwal, A.; Sai, M.; Ravi, D.; Ghuli, D.P. Heart disease prediction using machine learning. Int. J. Eng. Res. Technol. 2020, 9, 659–662.

2. Khateeb, N.; Usman, M. Efficient heart disease prediction system using K-nearest neighbor classification technique. In Proceedings of the International Conference on Big Data and Internet of Thing, London, UK, 20–22 December 2017; pp. 21–26.

3. Fatima, M.; Pasha, M. Survey of Machine Learning Algorithms for Disease Diagnostic. J. Intell. Learn. Syst. Appl. 2017, 9, 1–16.

4. Shen, D.; Wu, G.; Suk, H.-I. Deep Learning in Medical Image Analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

5. Mondal, A.; Mondal, B.; Chakraborty, A.; Kar, A.; Biswas, A.; Majumder, A.B. Heart disease prediction using support vector machine and artificial neural network. Artif. Intell. Appl. 2023, 2, 45–51.

6. Rajkomar, A.; Oren, E.; Chen, K.; Dai, A.M.; Hajaj, N.; Hardt, M.; Liu, P.J.; Liu, X.; Marcus, J.; Sun, M.; et al. Scalable and Accurate Deep Learning with Electronic Health Records. npj Digit. Med. 2018, 1, 18.

7. Diwaker, C.; Tomar, P.; Solanki, A.; Nayyar, A.; Jhanjhi, N.Z.; Abdullah, A.; Supramaniam, M. A New Model for Predicting Component-Based Software Reliability Using Soft Computing. IEEE Access 2019, 7, 147191–147203.

8. Kok, S.H.; Abdullah, A.; Jhanjhi, N.Z.; Supramaniam, M. A Review of Intrusion Detection System Using Machine Learning Approach. Int. J. Eng. Res. Technol. 2019, 12, 8–15.

9. Airehrour, D.; Gutierrez, J.; Kumar Ray, S. GradeTrust: A Secure Trust Based Routing Protocol for MANETs. In Proceedings of the 25th International Telecommunication Networks and Applications Conference (ITNAC), Sydney, Australia, 18–20 November 2015; pp. 65–70.

| Authors | Models | Accuracy |
|---|---|---|
| Kumar [1] | Logistic Regression, Decision Tree, Random Forest | 75% (Decision Tree), 82% (Random Forest) |
| Gupta [2] | K-Nearest Neighbors (KNN) | 72% |
| Fatima [3] | Naive Bayes, K-Nearest Neighbors | 70% (Naive Bayes), 72% (KNN) |
| Shen [4] | Convolutional Neural Networks (CNN) | 85% |
| Rajkomar [6] | Recurrent Neural Networks (RNN), LSTM | 88% (LSTM) |

| Model | Accuracy |
|---|---|
| KNN | 53.00% |
| Random Forest | 65.08% |
| Decision Tree | 65.03% |
| Naïve Bayes | 53.40% |
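
For reference, accuracy figures such as those in the table are conventionally the share of correctly classified samples. The expression below is the standard textbook definition, given here on the assumption that the study uses it; TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives.

```latex
\[
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
\]
```

As a purely hypothetical illustration, a model that classifies 130 of 200 test samples correctly has an accuracy of 130/200 = 65%.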


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Haider, M.; Hussain, M.; Insany, G.P. Heart Attack Prediction Using Machine Learning Models: A Comparative Study of Naive Bayes, Decision Tree, Random Forest, and K-Nearest Neighbors. Eng. Proc. 2025, 107, 121. https://doi.org/10.3390/engproc2025107121
