Abstract

The eye, as the dominant sense organ, can suffer disorders such as myopia (nearsightedness) as a result of exposure to radiation from visual display units (VDUs). One symptom often caused by excessive VDU use is eye strain, which is usually marked by increased sensitivity of the eyes to light. Eye strain can be identified by comparing the pupil diameter of a normal eye with that of a strained eye, so people can guard against this disorder by monitoring changes in the pupil's diameter relative to the iris. In this study, the iris and pupil are located with the Hough transformation, which detects their circular shape, and a perceptron neural network is trained to recognize the resulting patterns. As a tool for VDU users, the eye strain detection application can determine the condition of the user's eyes. Using the Hough transformation, iris and pupil detection accuracy was 100% for brown irises, 50% for blue irises, and 33.3% for green irises; accuracy was 100% for irises similar in color to the pupil but only 28.6% for pupils similar in color to the iris. Accuracy also varied with the camera used: the smartphone camera achieved 100% for the iris and 28.6% for the pupil, the SLR camera 100% for the iris and 71.4% for the pupil, and the digital camera 14.28% for the iris and 0% for the pupil. The perceptron recognized eye strain patterns with 70% accuracy on 20 sets of test data.

1. Introduction

Since the beginning of the 21st century, technological development has been accelerating, especially in information and communication technology (ICT), making this one of the most significant eras of discovery in the history of technology [1]. Important inventions of this period include personal computers (PCs) and laptops, as well as smartphones and tablets used for communication, entertainment, and office work. Ease of communication, work, and entertainment are among the benefits of ICT, and these benefits are most evident when the technology is used routinely.

The rapid advance of ICT in the 21st century [2] has made computers, laptops, smartphones, and tablets part of everyday communication, entertainment, and work. However, excessive use can harm health, especially eye health. Prolonged screen time can cause eye fatigue, an early symptom of serious eye disorders such as myopia and glaucoma [3]. It is estimated that one-third of the world’s population will suffer from myopia by the end of the decade.

Early detection of eye fatigue is important to prevent further complications. Image processing technology can be used to detect signs of fatigue, such as blinking [4]. One useful method is the Hough transformation, which identifies key areas in the eye—specifically the iris and pupil—as Regions of Interest (ROI) [5]. In this study, eye fatigue is measured based on increased sensitivity to light, observed from a change in the distance between the pupil and iris under normal and fatigued conditions [6,7].

To recognize patterns of eye fatigue, an artificial neural network (ANN) algorithm is used. The combination of Hough transformation and ANN is expected to provide accurate fatigue detection [8]. This approach is still rarely explored and offers a potential solution for monitoring eye health and controlling screen exposure.

2. Eye Strain Detection Method

The eye focuses light and sends visual signals to the brain through the optic nerve [9]. Too much screen time can cause eye strain, also called Computer Vision Syndrome (CVS). Common symptoms include tired eyes, dry eyes, headaches, and blurred vision. One sign of eye strain is a change in pupil size due to increased light sensitivity [10].

To detect this, the Hough transformation can be used to find the iris and pupil in eye images. It measures pupil size changes between normal and tired eyes. Then, artificial neural networks (ANNs) help analyze these patterns and classify whether the eye is normal or strained. This method works well with images from webcams or phones and can help prevent serious eye problems by warning users early [11].

3. Hough Transformation

The Hough transformation was first introduced by Paul Hough in 1962 to detect straight lines. It is an image transformation technique that can isolate an object in an image by finding its boundary (boundary detection) [12]. Because the aim of the transformation is to extract more specific features, the classical Hough transformation is commonly used to detect objects whose shape can be described by a parametric curve, such as lines, circles, ellipses, or parabolas. Its main advantage is its ability to detect boundary features while remaining relatively unaffected by noise. Each shape is described by a mathematical equation, and the transformation searches the space of that equation's parameters.
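As an illustration of the voting idea, the following minimal pure-Python sketch implements the classical line case: each edge point (x, y) votes for every line ρ = x cos θ + y sin θ that passes through it, and the accumulator cell with the most votes is reported as the detected line. The function name `hough_lines`, the bin sizes, and the sample points are hypothetical; this is not the authors' code.

```python
import math
from collections import Counter


def hough_lines(edge_points, n_theta=180, rho_step=1.0):
    """Vote in (rho, theta) space and return the strongest line.

    Every edge point (x, y) lies on each line rho = x*cos(theta) + y*sin(theta),
    so collinear points pile their votes into the same accumulator cell.
    """
    acc = Counter()
    for x, y in edge_points:
        for k in range(n_theta):
            theta = math.pi * k / n_theta          # theta sampled in [0, pi)
            rho = x * math.cos(theta) + y * math.sin(theta)
            acc[(round(rho / rho_step), k)] += 1   # quantize rho into bins
    (rho_bin, k), votes = acc.most_common(1)[0]
    return rho_bin * rho_step, math.degrees(math.pi * k / n_theta), votes


# Ten points on the vertical line x = 5 plus two off-line noise points.
points = [(5, y) for y in range(10)] + [(1, 7), (8, 2)]
result = hough_lines(points)
print(result)
```

With coarse bins, several neighboring angles can collect the same number of votes; practical implementations apply non-maximum suppression or finer bins.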

If the object being sought is a circle, the circle Hough transformation is used. The procedure is the same as for lines, applied to the object outline, but the parameter space is three-dimensional, (x0, y0, r), where x0 and y0 are the coordinates of the center of the circle and r is its radius, as in Equation (1) [13].

$$(x - x_0)^2 + (y - y_0)^2 = r^2 \qquad (1)$$
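For the circle case, Equation (1) can be rewritten in polar form to show how voting works: for a candidate radius r, an edge point (x, y) is consistent with every center (x0, y0) lying on a circle of radius r around it. This is the standard parameterization, given here as a worked step rather than taken from the source.

$$x_0 = x - r\cos\theta, \qquad y_0 = y - r\sin\theta, \qquad \theta \in [0, 2\pi)$$

Substituting back gives $(x - x_0)^2 + (y - y_0)^2 = r^2(\cos^2\theta + \sin^2\theta) = r^2$, so each such center satisfies Equation (1). If an accumulator $A(x_0, y_0, r)$ is incremented for every θ and every edge point, the true circle appears as the cell where the votes from many edge points coincide.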

The circle Hough transformation consists of three basic steps:

- Edge detection. This step reduces the number of points in the search space: the Hough transformation performs its calculations only at the edge points found by the edge detector. In this study, edge detection was performed with the Canny, Roberts Cross, and Sobel operators, with the aim of maximizing the signal-to-noise ratio and minimizing error.

- Circle drawing. For each edge point, the circle Hough transformation draws a circle of radius r centered on that point.

- Center selection. After the circles have been drawn for all edge points, the location where the most circles intersect is identified and taken as the center of the detected circle.
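The three steps above can be sketched in pure Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses only a Sobel operator for step 1 (the study also used Canny and Roberts Cross), and the synthetic image, the gradient threshold of 200, the radius range, and the function names `sobel_edges` and `hough_circles` are all hypothetical. Libraries such as OpenCV bundle the same steps in `cv2.HoughCircles` with the `cv2.HOUGH_GRADIENT` method.

```python
import math
from collections import Counter


def sobel_edges(img, thresh):
    """Step 1: return (x, y) pixels whose Sobel gradient magnitude exceeds thresh."""
    h, w = len(img), len(img[0])
    edges = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            if math.hypot(gx, gy) > thresh:
                edges.append((x, y))
    return edges


def hough_circles(edges, radii, n_angles=90):
    """Steps 2-3: every edge point draws a circle of candidate centers for each
    radius r; the (x0, y0, r) cell hit most often is returned with its votes."""
    acc = Counter()
    for x, y in edges:
        for r in radii:
            centers = set()  # count each center once per edge point and radius
            for k in range(n_angles):
                t = 2 * math.pi * k / n_angles
                centers.add((round(x - r * math.cos(t)), round(y - r * math.sin(t))))
            for x0, y0 in centers:
                acc[(x0, y0, r)] += 1
    return acc.most_common(1)[0]


# Synthetic 40x40 image: a dark disc (a stand-in "pupil") of radius 8 centered
# at (20, 18) on a bright background.
img = [[0 if (x - 20) ** 2 + (y - 18) ** 2 <= 64 else 255 for x in range(40)]
       for y in range(40)]
(cx, cy, r), votes = hough_circles(sobel_edges(img, thresh=200), radii=range(5, 12))
print(f"center=({cx}, {cy}) radius={r} votes={votes}")
```

Each edge point contributes at most one vote per candidate (x0, y0, r) cell, so the winning cell is the center and radius shared by the largest number of edge points.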

4. Perceptron

An artificial neural network (ANN) is an information processing method inspired by how the human brain works, in which learning happens through examples [14]. ANNs are used in tasks such as pattern recognition and classification, adjusting their structure through a learning process. One simple type of ANN is the perceptron, a single-layer network introduced by Rosenblatt in 1958 and later analyzed by Minsky and Papert [15,16]. The structure of a simple perceptron is illustrated in Figure 1.

Figure 1.

The architecture of the perceptron.

4.1. Perceptron Training Algorithms

4.1.1. Initialize All Weights and the Bias (Initial Value = 0)

Set the learning rate α (0 < α ≤ 1); for simplicity, it can be set equal to 1. The threshold θ is used within the activation function.

4.1.2. For Each Training Sample, Do:

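- Set the activation of each input unit: $x_i = s_i$.

- Compute the net input: $y_{in} = b + \sum_i x_i w_i$.

- Apply the activation function:

$$y = \begin{cases} 1 & \text{if } y_{in} > \theta \\ 0 & \text{if } -\theta \le y_{in} \le \theta \\ -1 & \text{if } y_{in} < -\theta \end{cases}$$

- Compare the output $y$ with the target $t$. If $y \ne t$, update the weights and bias: $w_i(\text{new}) = w_i(\text{old}) + \alpha \, t \, x_i$ and $b(\text{new}) = b(\text{old}) + \alpha \, t$.

- If $y = t$, the weights and bias are unchanged: $w_i(\text{new}) = w_i(\text{old})$ and $b(\text{new}) = b(\text{old})$.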

Repeat these steps until every output matches its target. When all outputs equal their targets, the network has recognized the patterns and training stops.

The perceptron training algorithm is used for bipolar or binary inputs with a bias, and the threshold θ can be set as required. One pass over all of the training data is called one epoch [14,15,16].
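The training steps in Sections 4.1.1 and 4.1.2 can be sketched as follows. This is a minimal pure-Python illustration assuming the standard three-valued step activation with threshold θ (output 1 above θ, −1 below −θ, 0 in between) and bipolar targets; the function names, the default θ = 0.2, and the epoch limit are illustrative choices, and the bipolar AND problem is only a toy data set, not the eye data.

```python
def activation(y_in, theta):
    """Three-valued step function: 1 above theta, -1 below -theta, else 0."""
    if y_in > theta:
        return 1
    if y_in < -theta:
        return -1
    return 0


def train_perceptron(samples, targets, alpha=1.0, theta=0.2, max_epochs=100):
    """Train a single-layer perceptron; return (weights, bias, epochs used)."""
    w = [0.0] * len(samples[0])   # 4.1.1: all weights start at 0 ...
    b = 0.0                       # ... and so does the bias
    for epoch in range(1, max_epochs + 1):
        changed = False
        for s, t in zip(samples, targets):
            x = s                                            # x_i = s_i
            y_in = b + sum(xi * wi for xi, wi in zip(x, w))  # net input
            y = activation(y_in, theta)
            if y != t:                                       # update only on error
                w = [wi + alpha * t * xi for wi, xi in zip(w, x)]
                b += alpha * t
                changed = True
        if not changed:          # every output equals its target: stop
            return w, b, epoch
    return w, b, max_epochs


# Toy example (not eye data): the bipolar AND function.
X = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
T = [1, -1, -1, -1]
w, b, epochs = train_perceptron(X, T)
print(w, b, epochs)  # [1.0, 1.0] -1.0 2
```

Training stops as soon as an entire epoch passes without any weight change, which matches the stopping rule described above; `max_epochs` caps the loop for data that are not linearly separable.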

5. The Design of the System

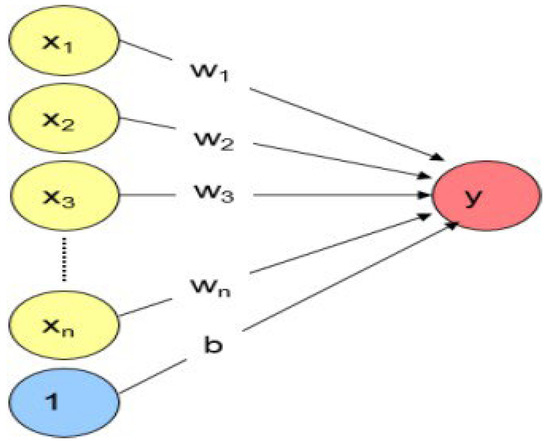

Eye strain detection using the Hough transformation helps users control their PC usage. The system combines a Haar cascade classifier to detect the eyes, the Hough transformation to locate the iris and pupil, and an artificial neural network (ANN) to analyze eye conditions [17]. The Hough transformation is key to selecting the pupil and iris areas in both the normal and eye strain data. The system workflow is shown in Figure 2.

Figure 2.

Tired-eye detection system (AI-generated by the authors using ChatGPT/DALL·E, 2025).

The system starts by detecting the user’s eyes, then selects the iris and pupil as training data. It compares new data with existing training data and prompts if data needs updating. After training, the ANN classifies the current eye condition using test data extracted by Hough transformation. The results provide information and recommendations for ICT use, with reminders to check eye condition regularly via notifications.

6. Detection Measures

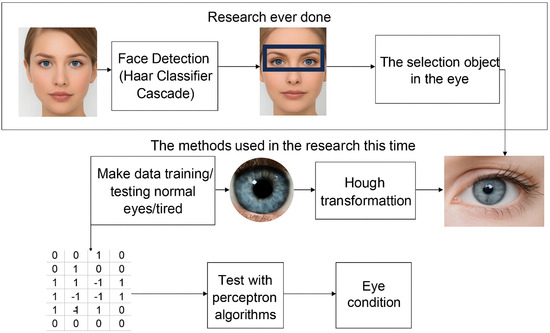

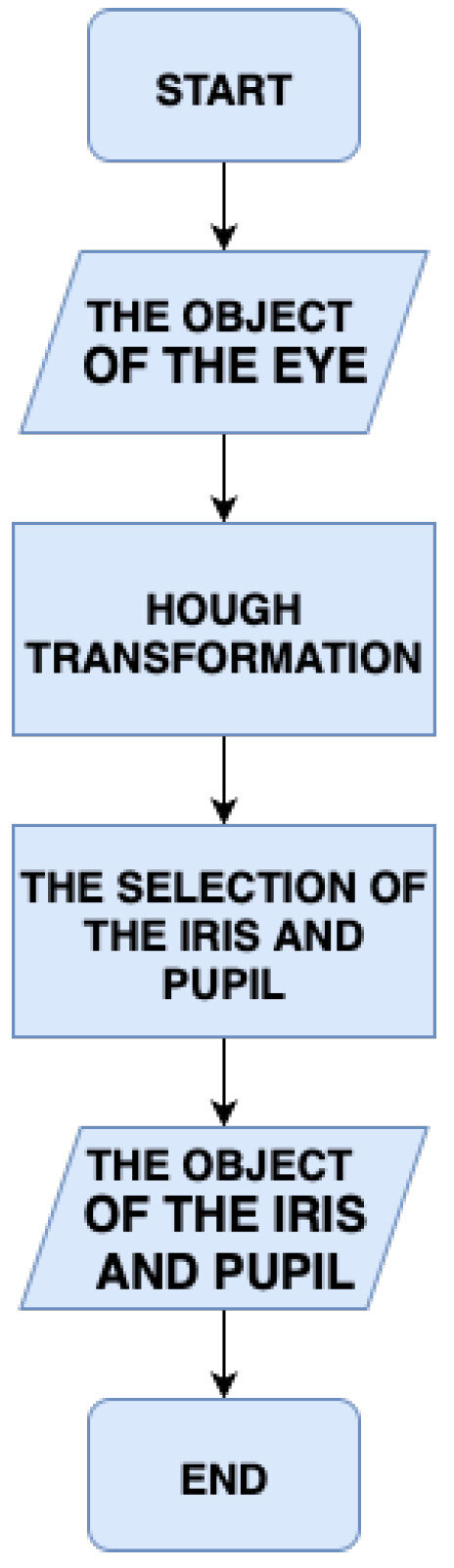

Several stages are involved in obtaining iris and pupil training data for analyzing whether the eye is normal or strained [4]. These stages include (1) eye detection, (2) iris and pupil selection, (3) training data creation, (4) training data analysis, (5) neural network design, and (6) eye condition detection. The Haar cascade classifier detects eyes on the face, then selects the iris and pupil as the Regions of Interest (ROI), as shown in Figure 3.

Figure 3.

Object selection flowchart for iris and pupil.

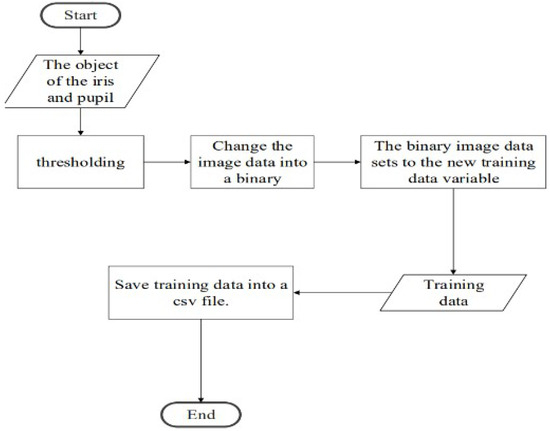

Training data is created by thresholding the iris and pupil images to convert them into binary values that are input into the neural network. The data are categorized as detected, fault detected, or not detected, and saved in Excel for retraining to improve accuracy. The training data consist of normal and eye strain categories, where eye strain is indicated by pupil dilation, i.e., a change in the pupil–iris distance caused by increased light sensitivity [18]. The training data process is shown in Figure 4.
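A minimal sketch of the thresholding step, assuming a grayscale region of interest stored as nested lists and the convention that pixels darker than the threshold become 1. The thresholds 125 (iris) and 50 (pupil) are the values reported in Section 7.1; the patch values and the function name `to_binary` are hypothetical.

```python
def to_binary(gray, threshold):
    """Return 1 where a pixel is darker than `threshold`, else 0.

    The "darker = 1" convention is an assumption for illustration.
    """
    return [[1 if px < threshold else 0 for px in row] for row in gray]


# Hypothetical 3x3 grayscale patch (0 = black, 255 = white).
patch = [[200, 110, 40],
         [90, 30, 120],
         [210, 130, 45]]
iris_mask = to_binary(patch, 125)   # iris threshold from Section 7.1
pupil_mask = to_binary(patch, 50)   # pupil threshold from Section 7.1
print(iris_mask)   # [[0, 1, 1], [1, 1, 1], [0, 0, 1]]
print(pupil_mask)  # [[0, 0, 1], [0, 1, 0], [0, 0, 1]]
```

Because the pupil is darker than the iris, the lower threshold keeps only the darkest pixels, while the higher one also captures the surrounding iris.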

Figure 4.

Training data flowchart.

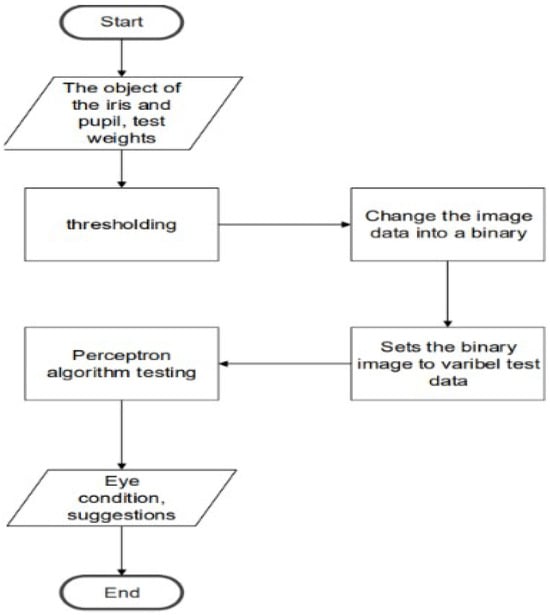

A single-layer perceptron neural network is used with 400 binary inputs, suitable for classifying two eye conditions. During detection, the perceptron uses weights from training to test eye images converted to binary. The eye condition detection process and PC usage recommendations are as shown in Figure 5.
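At detection time the trained weights are applied to a new binary eye image. The sketch below assumes the 400 inputs come from a 20 × 20 grid (the text gives only the input count), resamples the mask to that grid by nearest neighbor, and applies a three-valued step activation with threshold θ. The grid shape, the label mapping, the weights, and the function names are hypothetical.

```python
def resize_nearest(mask, size=20):
    """Nearest-neighbor resample of a 2-D mask to size x size pixels."""
    h, w = len(mask), len(mask[0])
    return [[mask[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def classify(mask, weights, bias, theta=0.2):
    """Single-layer perceptron forward pass on a 20x20 (= 400-input) mask."""
    x = [v for row in resize_nearest(mask) for v in row]  # flatten to 400 inputs
    if len(x) != len(weights):
        raise ValueError("expected one weight per input")
    y_in = bias + sum(xi * wi for xi, wi in zip(x, weights))
    if y_in > theta:
        return 1
    if y_in < -theta:
        return -1
    return 0


# Hypothetical label mapping and trained parameters, for illustration only.
LABELS = {1: "eye strain: take a break from the screen", -1: "normal", 0: "undecided"}
mask = [[1] * 40 for _ in range(40)]      # a 40x40 all-ones test mask
print(LABELS[classify(mask, [0.01] * 400, bias=-1.0)])
```

In the described system, the label would be followed by the PC-use recommendation shown to the user.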

Figure 5.

Eye condition detection flowchart.

The perceptron outputs the classification of the test eye data together with a recommendation for PC use. Eye condition detection can be carried out only after the perceptron has been trained.

7. Result and Discussion

The eye strain detection system was implemented according to the design above and used to test a set of eye images in order to measure the accuracy of the detection method.

7.1. Testing Hough Transformation

Initial testing was carried out to detect irises and pupils in 14 images, divided into two groups: 7 images with different iris colors and 7 images with nearly identical iris and pupil colors. The iris threshold was set at 125 and the pupil threshold at 50; these values gave the best results in experiments on the existing training data [10].

The results of iris and pupil detection testing on the images with different iris colors were as follows:

- Iris detection: five irises detected correctly and two incorrect detections.

- Pupil detection: four pupils detected correctly, three incorrect detections, and two not detected.

In tests with nearly identical iris and pupil colors, the results were as follows:

- Iris detection: seven irises detected correctly.

- Pupil detection: two pupils detected correctly, five incorrect detections, and one not detected.

Further testing was carried out with three different camera models (smartphone, SLR camera, and digital camera), producing the following results:

- Smartphone (Xiaomi Mi 4, 13 MP):

  - Irises detected, one not detected.

  - Incorrect pupil detections, with two not detected.

- SLR camera (Nikon E5700, 16 MP):

  - Irises detected; five pupils correctly detected, one incorrect detection, and one not detected.

- Digital camera (Kodak EasyShare Z981, 10 MP):

  - Two irises detected, one incorrect detection, and four not detected.

  - Three incorrect pupil detections and four not detected.
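Several of the percentages reported for these tests are consistent with accuracy defined as the share of correctly handled images in each group of seven; the text does not define the metric explicitly, so this is an assumption. A quick check of the arithmetic:

```python
def accuracy(correct, total):
    """Percentage of test images handled correctly."""
    return 100.0 * correct / total


print(f"{accuracy(5, 7):.1f}%")    # SLR camera, pupils: 5 of 7 -> 71.4%
print(f"{accuracy(2, 7):.1f}%")    # similar iris/pupil color, pupils: 2 of 7 -> 28.6%
print(f"{accuracy(14, 20):.1f}%")  # perceptron: 70% of 20 test sets is 14 correct
```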

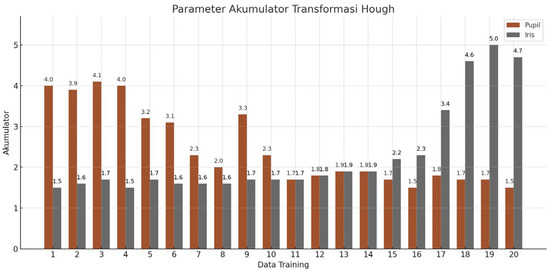

Figure 6 plots the Hough accumulator parameters for the irises and pupils in the training data: the larger the accumulator value, the smaller the resulting selection area.

Figure 6.

Hough transform accumulator parameters.

7.2. Testing Neural Network

In this section, artificial neural network (ANN) testing is carried out to detect eye conditions (normal or tired) based on the detection results of the iris and pupil obtained using Hough Transform. This testing process aims to measure the accuracy and precision of the application in detecting the level of eye fatigue after prolonged use of information and communication technology (ICT) [19].

Bipolar Conversion Process

The data used in artificial neural network testing is the result of iris and pupil detection that has been converted into bipolar format. The iris is given a value of 1, the pupil is given a value of −1, and the sclera is given a value of 0. This data is then stored in a matrix that will be used as an input for the artificial neural network.
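A minimal sketch of the bipolar conversion, assuming the Hough step returns the pupil and iris as circles (center x, center y, radius) in pixel coordinates and that every pixel outside the iris is treated as sclera. The function name `bipolar_matrix` and the 5 × 5 example are hypothetical.

```python
def bipolar_matrix(size, pupil, iris):
    """Label each pixel of a size x size grid: pupil -1, iris 1, sclera 0.

    `pupil` and `iris` are (cx, cy, r) circles, e.g. from the Hough step.
    """
    def inside(x, y, circle):
        cx, cy, r = circle
        return (x - cx) ** 2 + (y - cy) ** 2 <= r ** 2

    matrix = []
    for y in range(size):
        row = []
        for x in range(size):
            if inside(x, y, pupil):
                row.append(-1)   # pupil
            elif inside(x, y, iris):
                row.append(1)    # iris
            else:
                row.append(0)    # sclera (everything outside the iris)
        matrix.append(row)
    return matrix


m = bipolar_matrix(5, pupil=(2, 2, 1), iris=(2, 2, 2))
for row in m:
    print(row)
```

The pupil test runs first, so pixels inside both circles are labeled −1, and the flattened matrix can be fed directly to the perceptron.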

This conversion process is important for preparing data before inputting it into an artificial neural network for training, as shown in Table 1, which presents the bipolar conversion results.

Table 1.

Bipolar conversion results.

7.3. Artificial Neural Network Training

Artificial neural network training is carried out using a single-layer perceptron algorithm. The data [20], converted into bipolar format, are used to train the model to recognize whether an eye is normal or tired from the results of iris and pupil detection.

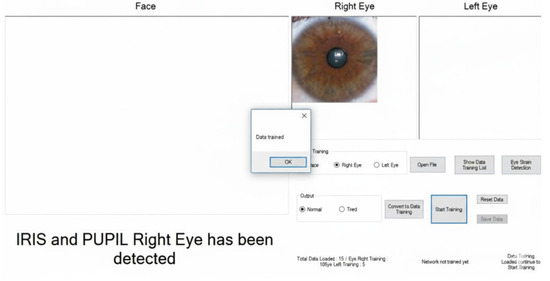

The training process runs for up to five iterations (epochs). In each epoch, the model adjusts its weights to classify the eye conditions correctly; training stops after five epochs, or earlier once weights that classify all training samples correctly are found, as illustrated in Figure 7.

Figure 7.

Conducting artificial neural network training.

7.4. Binary Conversion of Eye Images

After the artificial neural network has been trained, testing is carried out using image data that has been converted to bipolar format. This test data covers normal and tired eye conditions, and the detection results are compared with the actual conditions. This testing process aims to determine how accurate the model is in detecting eye conditions based on the given input image.

The following are the test results on several sets of test data, which include input image patterns, detection results, and actual eye conditions, as shown in Table 2.

Table 2.

Test data.

The following graph shows the distribution of eye detection results between normal and tired eye conditions.

7.5. Testing Results Analysis

7.5.1. Test Results

The test results show that the artificial neural network detects eye conditions with fairly good accuracy. On the test image data, the model classifies eye conditions as normal or tired with an adequate level of success, although some samples are misclassified.

This test also shows that image quality, lighting, and capture conditions can influence iris and pupil detection. The thresholds and other parameters used in iris and pupil detection therefore need to be tuned carefully to obtain more accurate results.

From the tests carried out, this eye fatigue detection application using artificial neural networks is quite effective in detecting eye conditions based on iris and pupil detection. Although the accuracy rate obtained was around 70% in tests with 20 sets of test data, these results indicate that this model can be a good basis for the development of better eye fatigue detection applications in the future.

7.5.2. Testing and Detection Results

The research on eye strain detection using Hough transformation leads to several important findings. The Region of Interest (ROI) selection process is critical for the accurate detection of eye strain. Using the Haar Cascade Classifier method, the face and eyes are identified in the image. Once the eyes are detected, Hough transformation processes the image to extract circular areas of the iris and pupil, enabling focused analysis of the regions that indicate eye strain.

Eye fatigue detection is accomplished with an artificial neural network (ANN), which takes the iris and pupil selections converted into bipolar numbers and classifies eye conditions based on changes in the shape of the pupil and iris. Combining the Hough transformation and an ANN effectively captures both geometric features and patterns related to eye strain. The classifier was a single-layer perceptron evaluated on 20 test data samples. For the Hough-based detection, iris detection accuracy was 100% for brown irises, 50% for blue irises, and 33.3% for green irises. Irises similar in color to the pupil were detected with 100% accuracy, but pupil detection accuracy dropped to 28.6% when the pupil and iris were similar in color. This highlights the difficulty of differentiating these features under certain conditions.

Testing with various camera models revealed differences in accuracy. The smartphone camera detected the iris with 100% accuracy but only detected the pupil with 28.6% accuracy. The SLR camera showed 100% accuracy for the iris and 71.4% for the pupil. The digital camera, however, had much lower performance, with only 14.28% for iris detection and 0% for the pupil. These findings stress the importance of using higher-quality imaging equipment for better performance.

The perceptron algorithm’s accuracy in recognizing eye strain patterns was 70% with 20 test data samples. Although this is a decent result, further refinement of the system is needed to improve detection accuracy across a wider range of individuals and conditions. Expanding the training data set, including more varied eye conditions, and applying advanced machine learning models could help enhance accuracy.

Hough transformation and ANN present a promising approach to detecting eye strain, with room for improvement. The results form a solid foundation for developing a reliable eye strain detection system, which can prevent more severe eye conditions like myopia and glaucoma. Future improvements can include real-time monitoring, the use of alternative imaging techniques, and more advanced algorithms for better detection capabilities.

Author Contributions

Conceptualization, A.S. and R.R.; Methodology, A.S.; Software, R.R.; Validation, S. and A.M.; Formal Analysis, A.S.; Investigation, R.R.; Resources, S.; Data Curation, A.M.; Writing—Original Draft Preparation, A.S. and R.R.; Writing—Review and Editing, A.S. and A.M.; Visualization, R.R.; Supervision, A.S.; Project Administration, A.S.; Funding Acquisition, R.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study are openly available as the Iris of Eye Dataset by Mokhtar [20], which can be accessed at https://www.kaggle.com/datasets/mohmedmokhtar/iris-of-eye-dataset (accessed on 2 May 2025).

Acknowledgments

During the preparation of this manuscript, the authors used OpenAI’s ChatGPT (GPT-4, 2025) and DALL·E 3 for generating and refining Figure 2. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Palagolla, W.; Wickramarachchi, R. Effective Integration of ICT to Facilitate the Secondary Education in Sri Lanka. arXiv 2019, arXiv:1901.00181.

- Riis, U. ICT Literacy: An Imperative of the Twenty-First Century. Found. Sci. 2017, 22, 575–583.

- Buabbas, A.J.; Hasan, H.; Shehab, A.A. Parents’ attitudes toward school students’ overuse of smartphones and its detrimental health impacts: Qualitative study. JMIR Pediatr. Parent. 2021, 4, e24196.

- Renuga Devi, S.; Gopalakrishnan, B.; Gayathri, K. Detection of Eye Strain using Retina Medical Images through CNN. In Proceedings of the 2021 Smart Technology, Communication and Robotics (STCR), Kuala Lumpur, Malaysia, 3–4 July 2021; pp. 1–5.

- Srikrishna, M.; Nirmala, G. Realization of Human Eye Pupil Detection System using Canny Edge Detector and Circular Hough Transform Technique. In Proceedings of the 2023 2nd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Chennai, India, 16–17 March 2023; pp. 861–865.

- Abdurahman; Sutarno; Passarella, R.; Prihanto, Y.; Gultom, R. System Design of Iris Ring Detection Using Circular Hough Algorithm for Iris Localization. In Proceedings of the Sriwijaya International Conference on Information Technology and Its Applications (SICONIAN 2019), Palembang, Indonesia, 13–14 November 2019.

- Gokulavani, D.; Professor, E. A Performance Analysis of Different Detection Techniques of Human Eye Pupils. In Proceedings of the 2023 International Conference on Sustainable Computing and Smart Systems (ICSCSS), Chennai, India, 6–7 April 2023; pp. 824–827.

- Tanazri, A.; Kusuma, P.; Setianingsih, C. Detection of Pterygium Disease Using Hough Transform and Forward Chaining. In Proceedings of the 2021 1st International Conference on Cyber Management and Engineering (CyMaEn), Jakarta, Indonesia, 6–7 March 2021; pp. 1–6.

- Gupta, S.; Thakur, S.; Gupta, A. An enhancive Watershed transformation approach to segment Optic Disk from Smartphone Fundus Images. In Proceedings of the 2021 International Conference on Technological Advancements and Innovations (ICTAI), Pune, India, 22–23 October 2021; pp. 188–191.

- Kaur, R.; Guleria, A. Digital Eye Strain Detection System Based on SVM. In Proceedings of the 2021 5th International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 3–5 June 2021; pp. 1114–1121.

- Yang, Y.; Wang, J.; Xue, Y. Iris boundary localization based on Hough transform and the quadratic circle data compensation. Int. J. Imaging Syst. Technol. 2021, 31, 1357–1365.

- Chen, D.; Zheng, P.; Chen, Z.; Lai, R.; Luo, W.; Liu, H. Privacy-Preserving Hough Transform and Line Detection on Encrypted Cloud Images. In Proceedings of the 2021 IEEE 20th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Shenyang, China, 22–24 October 2021; pp. 486–493.

- Sutarno; Abdurahman; Passarella, R.; Prihanto, Y.; Gultom, R.A.G. Mathematical Implementation of Circle Hough Transformation Theorem Model Using C# For Calculation Attribute of Circle. In Proceedings of the Sriwijaya International Conference on Information Technology and Its Applications (SICONIAN 2019), Palembang, Indonesia, 13–14 November 2019.

- Chai, J. Optimizing neural network training with genetic algorithms. Appl. Comput. Eng. 2024, 42, 220–224.

- Rosenblatt, F. The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain. Psychol. Rev. 1958, 65, 386–408.

- Minsky, M.; Papert, S. Perceptrons: An Introduction to Computational Geometry; MIT Press: Cambridge, MA, USA, 1969; ISBN 978-0262630221.

- Shamil, H.; Kindy, B.; Abbas, A. Detection of Iris Localization in Facial Images Using Haar Cascade Circular Hough Transform. J. Southwest Jiaotong Univ. 2020, 55, 4.

- Tang, Q.; Wei, S.; He, X.; Zheng, X.; Tao, F.; Tu, P.; Gao, B. Lutein-rich beverage alleviates visual fatigue in the hyperglycemia model of Sprague–Dawley rats. Metabolites 2023, 13, 1110.

- Wang, G.; Cui, Y. Meta-Analysis of Visual Fatigue Based on Visual Display Terminals. BMC Ophthalmol. 2024, 24, 489.

- Mokhtar, M. Iris of Eye Dataset; Kaggle: 2020. Available online: https://www.kaggle.com/datasets/mohmedmokhtar/iris-of-eye-dataset (accessed on 2 May 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).