Approximation of Dynamic Systems Using Deep Neural Networks and Laguerre Functions †

Abstract

1. Introduction

2. Material and Methods

2.1. Problem Statement

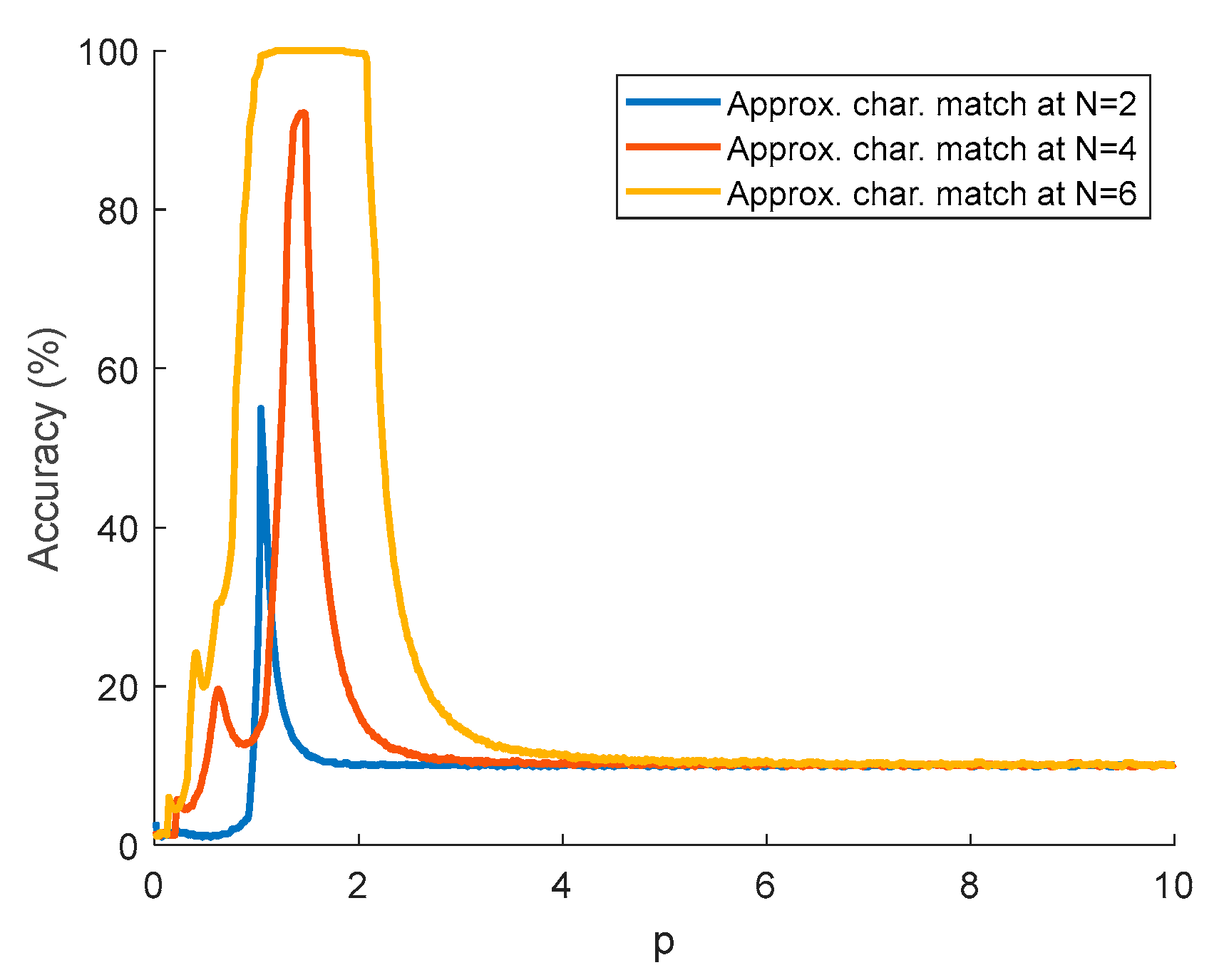

- Selection of the time scaling factor: although the influence of this parameter on orthonormal functions has been well studied, there is no universal method for determining it, especially in the context of approximation tasks.

- Determining the number of orthonormal functions: although a definition of completeness for the set of orthonormal functions exists [35], it assumes a finite number of functions for approximation. Increasing this number excessively, however, raises the computational complexity significantly. The task is to find the smallest number of functions that is sufficient for an accurate representation of a system of a given order.

- Calculation of the decomposition coefficients: this process is computationally intensive, especially when implementing MPC with a long prediction horizon, using adaptive basis functions, or dealing with plants that exhibit parametric uncertainty and noise. In such cases, the coefficients must be recalculated continuously, which complicates the application of the method. The problem is further exacerbated by the fact that Laguerre orthonormal functions cannot directly approximate the transient characteristics of open systems.
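The three issues listed above can be made concrete with a short numerical sketch. The Python code below is not the author's implementation. It assumes the standard continuous-time Laguerre functions l_i(t) = sqrt(2p) · exp(−pt) · L_{i−1}(2pt), where p is the time scaling factor and L_k is the k-th Laguerre polynomial, and it uses a hypothetical impulse response h(t) = exp(−t) − exp(−2t) chosen purely for illustration. The function names `laguerre_functions`, `simpson` and `approximation_error` are likewise illustrative.

```python
import numpy as np

def laguerre_functions(t, p, n_funcs):
    """Rows are l_1..l_N on the grid t: l_i(t) = sqrt(2p) * exp(-p*t) * L_{i-1}(2*p*t)."""
    x = 2.0 * p * t
    polys = np.empty((n_funcs, t.size))
    polys[0] = 1.0
    if n_funcs > 1:
        polys[1] = 1.0 - x
    for k in range(1, n_funcs - 1):
        # Three-term recurrence: (k+1) L_{k+1} = (2k+1-x) L_k - k L_{k-1}
        polys[k + 1] = ((2 * k + 1 - x) * polys[k] - k * polys[k - 1]) / (k + 1)
    return np.sqrt(2.0 * p) * np.exp(-p * t) * polys

def simpson(y, dx):
    """Composite Simpson's rule along the last axis (uniform grid, odd sample count)."""
    if y.shape[-1] % 2 == 0:
        raise ValueError("Simpson's rule needs an odd number of samples")
    return dx / 3.0 * (y[..., 0] + y[..., -1]
                       + 4.0 * y[..., 1:-1:2].sum(axis=-1)
                       + 2.0 * y[..., 2:-1:2].sum(axis=-1))

def approximation_error(h, t, p, n_funcs):
    """L2 error of the n_funcs-term Laguerre expansion of h sampled on t."""
    dt = t[1] - t[0]
    basis = laguerre_functions(t, p, n_funcs)
    coeffs = simpson(basis * h, dt)      # c_i = integral of h(t) * l_i(t) dt
    h_hat = coeffs @ basis               # truncated expansion of h
    return np.sqrt(simpson((h - h_hat) ** 2, dt))

t = np.linspace(0.0, 30.0, 3001)         # odd number of samples for Simpson's rule
h = np.exp(-t) - np.exp(-2.0 * t)        # hypothetical impulse response (assumption)

# Error for several scaling factors p (rows) and numbers of functions N (columns)
for p in (0.5, 1.5, 10.0):
    errors = [approximation_error(h, t, p, n) for n in (2, 4, 6, 8)]
    print(f"p = {p:4.1f}:", "  ".join(f"{e:.2e}" for e in errors))
```

In this example, a scaling factor close to the system's own time constants reaches a small error with few functions, whereas a poorly matched p needs many more terms for the same accuracy. Every change of p or of the response h requires the integrals to be evaluated again.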

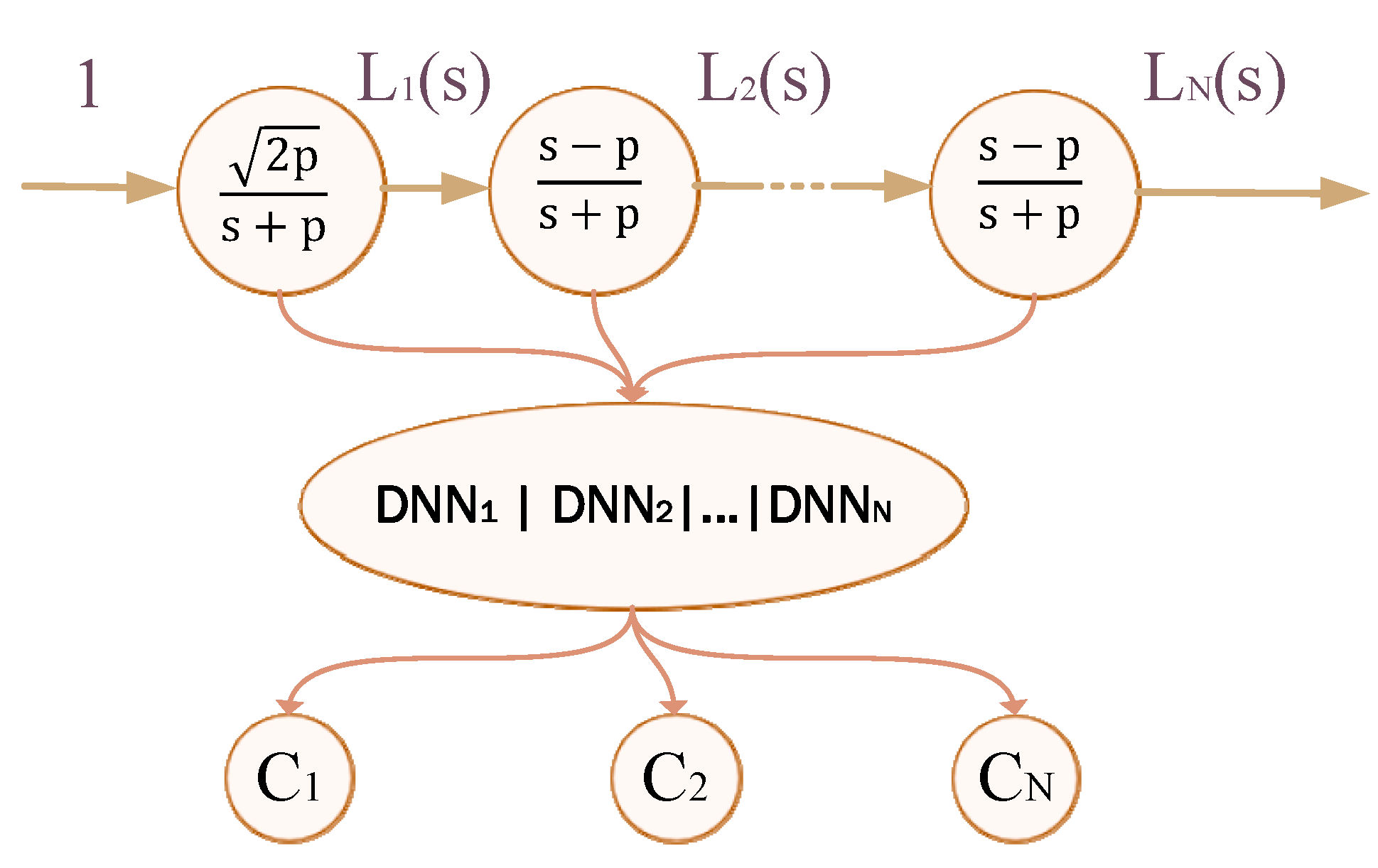

2.2. Laguerre Orthonormal Functions

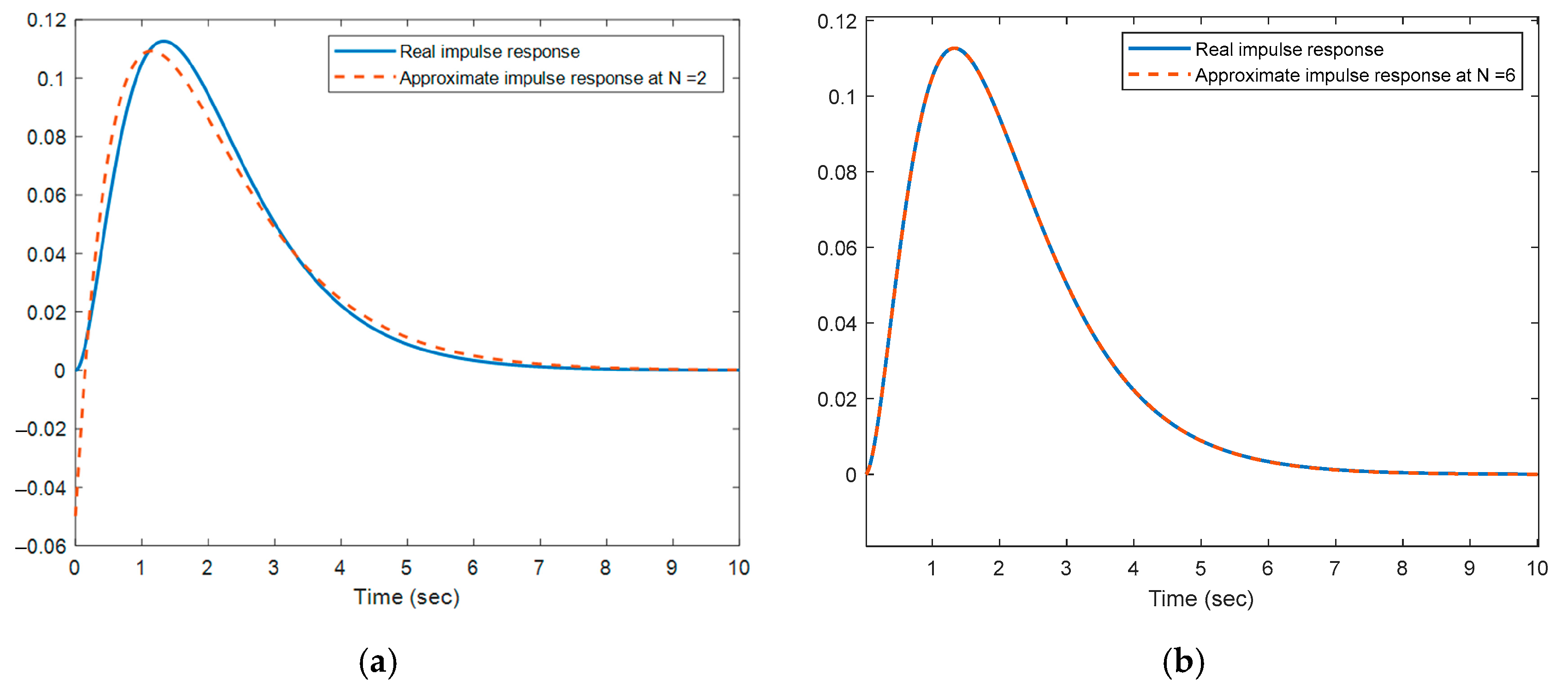

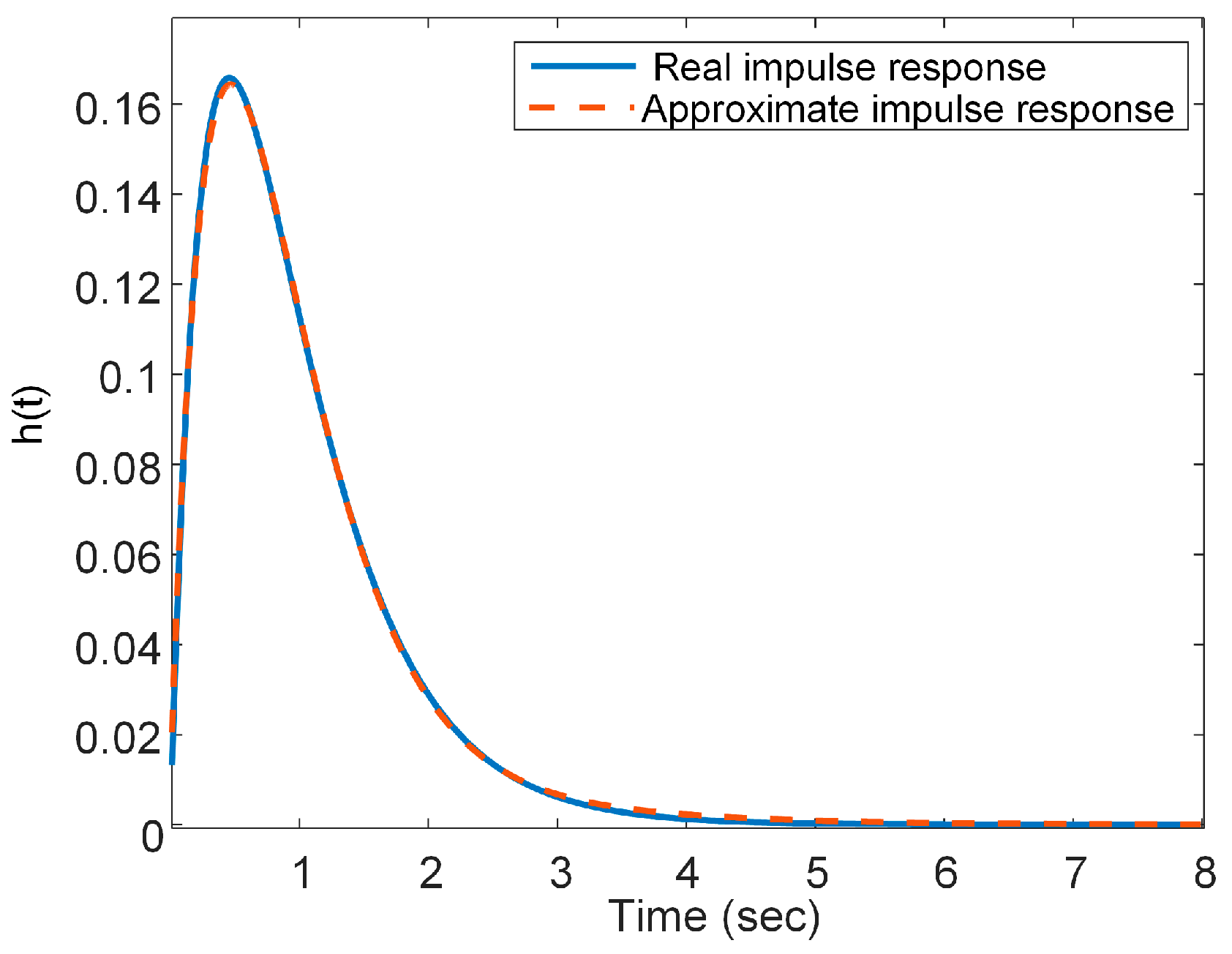

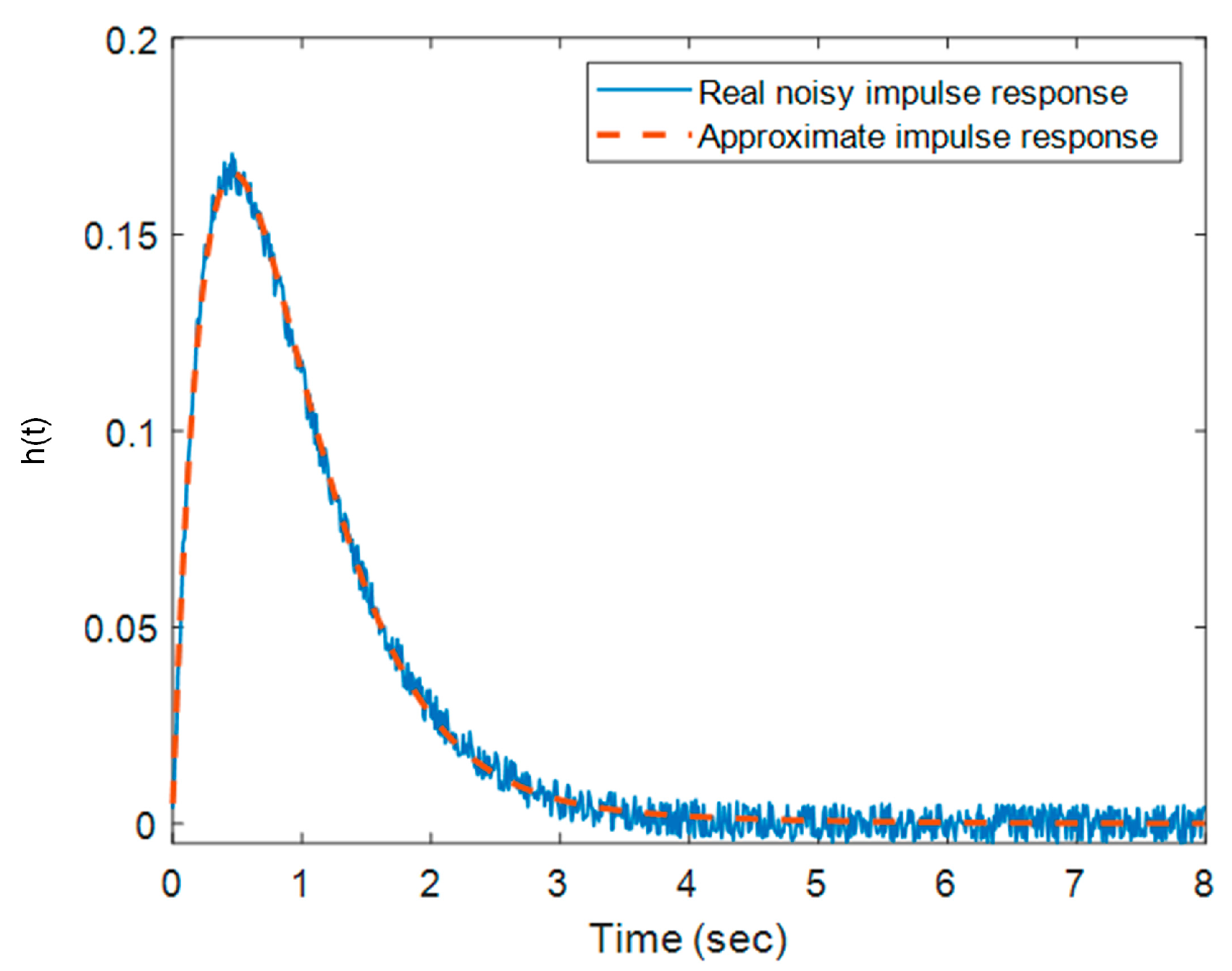

2.3. Approximation of Systems Using Laguerre Functions

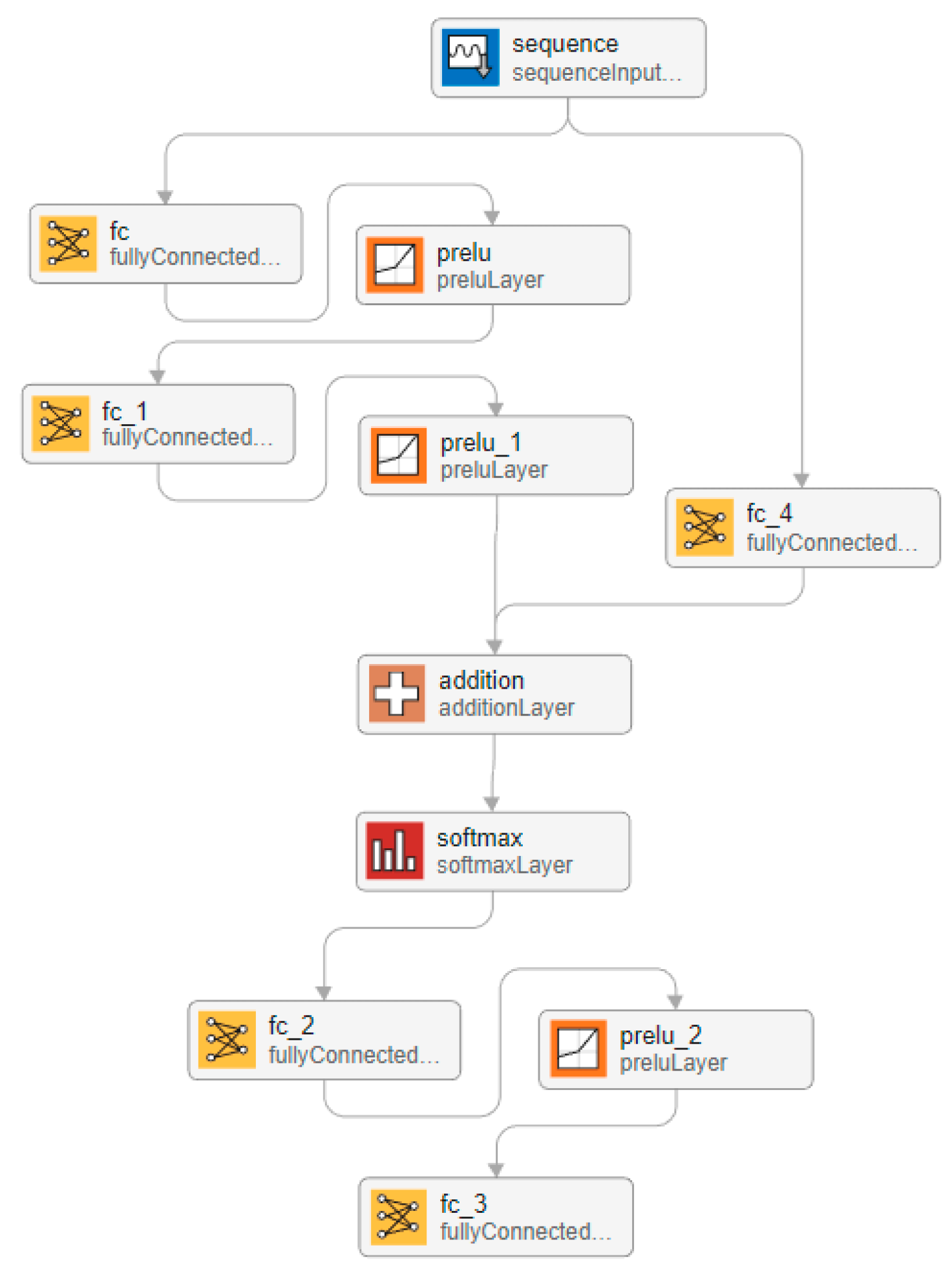

2.4. Approximating Systems Using Laguerre Functions and DNN

3. Simulation Results and Analyses

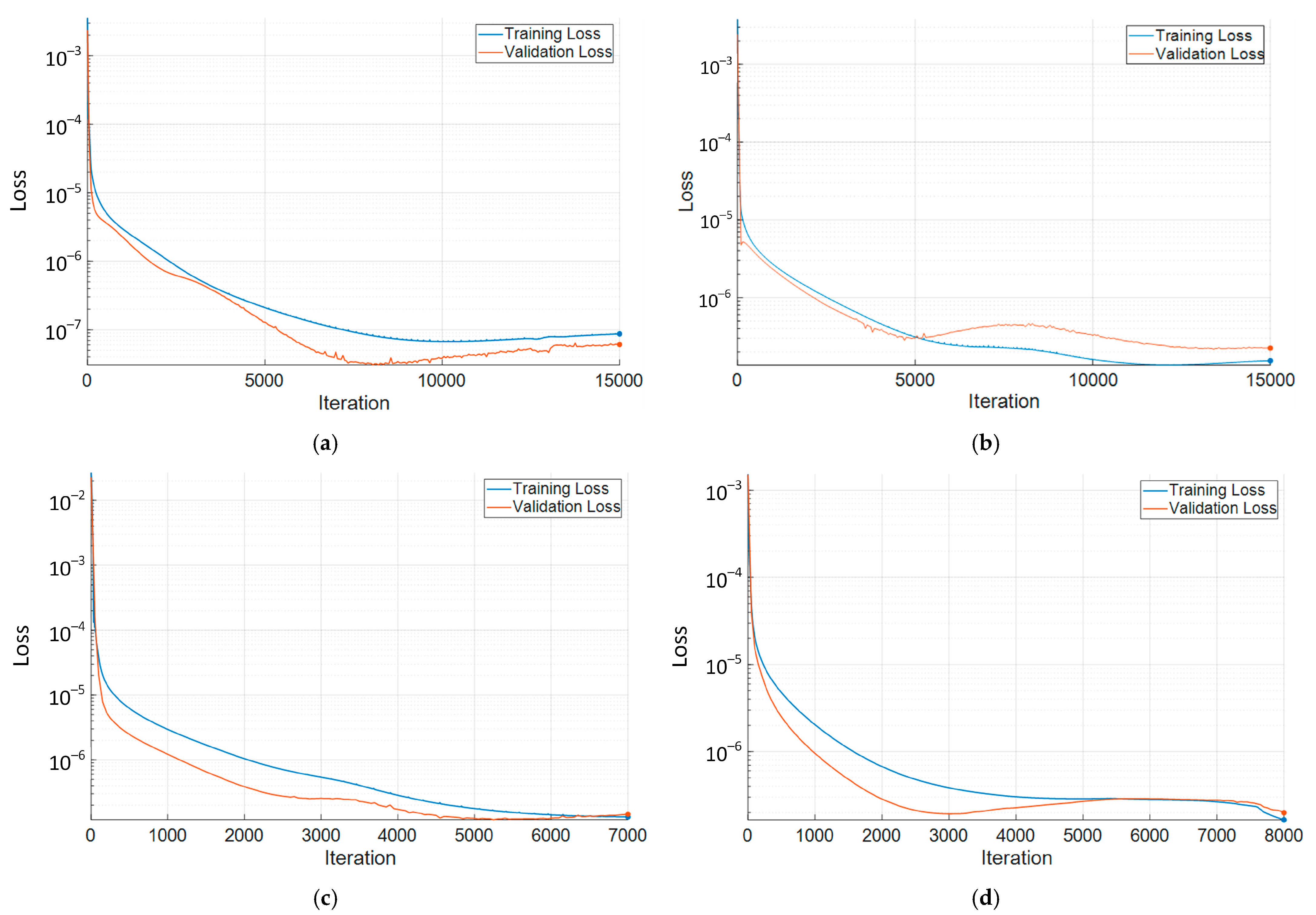

- Generation of Laguerre functions: up to 10 functions are usually sufficient, depending on the order of the approximated system.

- Calculation of the impulse responses of the selected system: their number depends on the number of parameters, the degree of the assumed parametric uncertainty, and the step with which each parameter is varied. A set of responses is generated from all possible combinations of the uncertain parameter values. The procedure is then repeated with noise added at different levels.

- Formation of the DNN training data: the definite integrals are computed with Simpson’s rule, which gives better accuracy than other simplified methods. N input data arrays are formed, whose size is given by the number of values of the function and the number of training samples obtained. For the desired result, a one-dimensional array with the decomposition coefficients is likewise formed.

- Training of the neural networks: the networks are trained using the Adam optimizer. The maximum number of epochs is 4500, InitialLearnRate = 1 × 10⁻⁵, and GradientThreshold = 0.001. These values keep the training time short while stabilizing training, which is very important when approximating complex functions, and they prevent exploding gradients, which is especially useful in DNNs. The data are split into a training part (fed to the DNN during training) and a validation part (used to decide when to stop training); here, 85% of the data is used for training and 15% for validation.
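The data-preparation steps listed above could look roughly as follows in Python. This is an illustrative sketch rather than the author's code. The second-order test system, its nominal parameters, the ±20% uncertainty range, the noise levels and all function names are assumptions, and Simpson's rule is written out by hand so that only NumPy is needed. The targets are computed from the noise-free responses and stored as one row of coefficients per sample; the source does not specify either choice.

```python
import itertools
import numpy as np

def laguerre_functions(t, p, n_funcs):
    """Rows are l_1..l_N on the grid t: l_i(t) = sqrt(2p) * exp(-p*t) * L_{i-1}(2*p*t)."""
    x = 2.0 * p * t
    polys = np.empty((n_funcs, t.size))
    polys[0] = 1.0
    if n_funcs > 1:
        polys[1] = 1.0 - x
    for k in range(1, n_funcs - 1):
        polys[k + 1] = ((2 * k + 1 - x) * polys[k] - k * polys[k - 1]) / (k + 1)
    return np.sqrt(2.0 * p) * np.exp(-p * t) * polys

def simpson(y, dx):
    """Composite Simpson's rule along the last axis (uniform grid, odd sample count)."""
    if y.shape[-1] % 2 == 0:
        raise ValueError("Simpson's rule needs an odd number of samples")
    return dx / 3.0 * (y[..., 0] + y[..., -1]
                       + 4.0 * y[..., 1:-1:2].sum(axis=-1)
                       + 2.0 * y[..., 2:-1:2].sum(axis=-1))

def impulse_response(t, gain, omega, zeta):
    """Impulse response of gain*omega^2 / (s^2 + 2*zeta*omega*s + omega^2), zeta < 1."""
    wd = omega * np.sqrt(1.0 - zeta ** 2)
    return gain * omega ** 2 / wd * np.exp(-zeta * omega * t) * np.sin(wd * t)

def build_dataset(t, p, n_funcs, nominal, spread, steps, noise_levels, rng):
    """Return inputs (samples x time points) and targets (samples x coefficients)."""
    dt = t[1] - t[0]
    basis = laguerre_functions(t, p, n_funcs)
    # Each parameter is varied on a grid around its nominal value
    grids = [np.linspace(v * (1 - spread), v * (1 + spread), steps) for v in nominal]
    inputs, targets = [], []
    for params in itertools.product(*grids):      # all parameter combinations
        h = impulse_response(t, *params)
        coeffs = simpson(basis * h, dt)           # decomposition coefficients
        for sigma in noise_levels:                # repeat with added noise
            inputs.append(h + sigma * rng.standard_normal(t.size))
            targets.append(coeffs)
    return np.array(inputs), np.array(targets)

t = np.linspace(0.0, 20.0, 2001)                  # odd number of samples
rng = np.random.default_rng(0)
X, Y = build_dataset(t, p=2.0, n_funcs=8, nominal=(1.0, 2.0, 0.5),
                     spread=0.2, steps=5, noise_levels=(0.0, 0.01, 0.05), rng=rng)

# 85% / 15% split into training and validation data
order = rng.permutation(len(X))
n_train = int(0.85 * len(X))
X_train, Y_train = X[order[:n_train]], Y[order[:n_train]]
X_val, Y_val = X[order[n_train:]], Y[order[n_train:]]
print(X_train.shape, Y_train.shape, X_val.shape, Y_val.shape)
```

The number of samples grows as the number of grid steps raised to the number of uncertain parameters, multiplied by the number of noise levels, so the step of change directly controls the size of the training set.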

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, Y.; Tang, L.; Wen, J.; Zhan, Q. Recognition of concrete microcrack images under fluorescent excitation based on attention mechanism deep recurrent neural networks. Case Stud. Constr. Mater. 2024, 20, e03160.

- Yu, Y.; Zhang, Y.; Cheng, Z.; Song, Z.; Tang, C. MCA: Multidimensional collaborative attention in deep convolutional neural networks for image recognition. Eng. Appl. Artif. Intell. 2023, 126, 107079.

- Zhao, M.; Li, S.; Chen, H.; Ling, M.; Chang, H. Distributed solar photovoltaic power prediction algorithm based on deep neural network. J. Eng. Res. 2024; in press.

- Xu, Z.-Q.J.; Zhang, L.; Cai, W. On understanding and overcoming spectral biases of deep neural network learning methods for solving PDEs. arXiv 2025, arXiv:2501.09987.

- Chen, J.; Mei, J.; Hu, J.; Yang, Z. Deep neural networks-prescribed performance optimal control for stochastic nonlinear strict-feedback systems. Neurocomputing 2024, 610, 128633.

- Aydogmus, O.; Gullu, B. Implementation of singularity-free inverse kinematics for humanoid robotic arm using Bayesian optimized deep neural network. Measurement 2024, 229, 114471.

- Wang, M.; He, J.; Zheng, L.; Alkhalifah, T.; Marzouki, R. Optimizing Energy Capacity, and Vibration Control Performance of Multi-Layer Smart Silicon Solar Cells Using Mathematical Simulation and Deep Neural Networks. Aerosp. Sci. Technol. 2025, 159, 109983.

- Balaji, T.S.; Srinivasan, S. Detection of safety wearable’s of the industry workers using deep neural network. Mater. Today Proc. 2023, 80, 3064–3068.

- Cadoret, A.; Goy, E.D.; Leroy, J.M.; Pfister, J.L.; Mevel, L. Linear time periodic system approximation based on Floquet and Fourier transformations for operational modal analysis and damage detection of wind turbine. Mech. Syst. Signal Process. 2024, 212, 111157.

- Discacciati, N.; Hesthaven, J.S. Model reduction of coupled systems based on non-intrusive approximations of the boundary response maps. Comput. Methods Appl. Mech. Eng. 2024, 420, 116770.

- Moya, A.A. Low-frequency approximations to the finite-length Warburg diffusion impedance: The reflexive case. J. Energy Storage 2024, 97, 112911.

- Liu, X. Approximating smooth and sparse functions by deep neural networks: Optimal approximation rates and saturation. J. Complex. 2023, 79, 101783.

- Noguer, I.A. Dimensional Constraints and Fundamental Limits of Neural Network Explainability: A Mathematical Framework and Analysis. 2025. Available online: https://ssrn.com/abstract=5095275 (accessed on 13 January 2025).

- Lin, S.-B. Limitations of shallow nets approximation. Neural Netw. 2017, 94, 96–102.

- Berner, J.; Grohs, P.; Kutyniok, G.; Petersen, P. The modern mathematics of deep learning. arXiv 2021, arXiv:2105.04026 78.

- Siegel, J.W.; Xu, J. Characterization of the variation spaces corresponding to shallow neural networks. Constr. Approx. 2023, 57, 1109–1132.

- Fefferman, C.; Mitter, S.; Narayanan, H. Testing the manifold hypothesis. J. Am. Math. Soc. 2016, 29, 983–1049.

- Shaham, U.; Cloninger, A.; Coifman, R.R. Provable approximation properties for deep neural networks. Appl. Comput. Harmon. Anal. 2018, 44, 537–557.

- Han, Z.; Yu, S.; Lin, S.-B.; Zhou, D.-X. Depth Selection for Deep ReLU Nets in Feature Extraction and Generalization. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1853–1868.

- Lin, S.-B. Generalization and expressivity for deep nets. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 1392–1406.

- Chui, C.K.; Lin, S.-B.; Zhou, D.-X. Deep neural networks for rotation-invariance approximation and learning. Anal. Appl. 2019, 17, 737–772.

- Yan, Y.; Tang, M.; Wang, W.; Zhang, Y.; An, B. Trajectory tracking control of wearable upper limb rehabilitation robot based on Laguerre model predictive control. Robot. Auton. Syst. 2024, 179, 104745.

- Abdelwahed, I.B.; Bouzrara, K. Control of pH neutralization process using NMPC based on discrete time NARX-Laguerre model. Comput. Chem. Eng. 2024, 189, 108802.

- Zhang, G.; Gao, L.; Yang, H.; Mei, L. A novel method of model predictive control on permanent magnet synchronous machine with Laguerre functions. Alex. Eng. J. 2021, 60, 5485–5494.

- Mihalev, G.; Yordanov, S.; Ormandzhiev, K.; Stoycheva, H.; Todorov, T. Synthesis of Model Predictive Control for an Electrohydraulic Servo System Using Orthonormal Functions. In Proceedings of the 5th International Conference on Communications, Information, Electronic and Energy Systems (CIEES), Veliko Tarnovo, Bulgaria, 20–22 November 2024; pp. 1–6.

- Artioli, M.; Dattoli, G.; Zainab, U. Theory of Hermite and Laguerre Bessel function from the umbral point of view. Appl. Math. Comput. 2025, 488, 129103.

- Zhang, Y.; Xiang, S.; Kong, D. On optimal convergence rates of Laguerre polynomial expansions for piecewise functions. J. Comput. Appl. Math. 2023, 425, 115053.

- Wang, X.; Xu, K.; Li, L. Model order reduction for discrete time-delay systems based on Laguerre function expansion. Linear Algebra Its Appl. 2024, 692, 160–184.

- Manngård, M.; Toivonen, H.T. Identification of low-order models using Laguerre basis function expansions. IFAC-PapersOnLine 2018, 51, 72–77.

- Zaidi, D.; Talib, I.; Riaz, M.B.; Alam, M.N. Extending spectral methods to solve time fractional-order Bloch equations using generalized Laguerre polynomials. Partial. Differ. Equ. Appl. Math. 2025, 13, 101049.

- Wang, C.; Zhang, H.; Ma, P. Wind power forecasting based on singular spectrum analysis and a new hybrid Laguerre neural network. Appl. Energy 2020, 259, 114139.

- Nizami, T.K.; Chakravarty, A. Laguerre neural network driven adaptive control of DC-DC step down converter. IFAC-PapersOnLine 2020, 53, 13396–13401.

- Chen, Y.; Yu, H.; Meng, X.; Xie, X.; Hou, M.; Chevallier, J. Numerical solving of the generalized Black-Scholes differential equation using Laguerre neural network. Digit. Signal Process. 2021, 112, 103003.

- Ye, J.; Xie, L.; Ma, L.; Bian, Y.; Xu, X. A novel hybrid model based on Laguerre polynomial and multi-objective Runge–Kutta algorithm for wind power forecasting. Int. J. Electr. Power Energy Syst. 2023, 146, 108726.

- Peachap, A.B.; Tchiotsop, D. Epileptic seizures detection based on some new Laguerre polynomial wavelets, artificial neural networks and support vector machines. Inform. Med. Unlocked 2019, 16, 100209.

- Agarwal, H.; Mishra, D.; Kumar, A. A deep-learning approach for turbulence correction in free space optical communication with Laguerre–Gaussian modes. Opt. Commun. 2024, 556, 130249.

- Zhu, Y.; Zhao, H.; Bhattacharjee, S.S.; Christensen, M.G. Quantized information-theoretic learning based Laguerre functional linked neural networks for nonlinear active noise control. Mech. Syst. Signal Process. 2024, 213, 111348.

- Wang, L. Model Predictive Control System Design and Implementation Using MATLAB; Springer: London, UK, 2009; Volume 3.

- Zill, D.G. Advanced Engineering Mathematics; Jones & Bartlett Learning: Burlington, MA, USA, 2020.

- Petkov, P.; Slavov, T.; Kralev, J. Design of Embedded Robust Control Systems using MATLAB®/Simulink®; Control, Robotics and Sensors; Institution of Engineering and Technology: Lucknow, India, 2018; Volume 113.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mihalev, G. Approximation of Dynamic Systems Using Deep Neural Networks and Laguerre Functions. Eng. Proc. 2025, 104, 22. https://doi.org/10.3390/engproc2025104022
