Wearable EEG-Based Brain–Computer Interface for Stress Monitoring

Abstract

1. Introduction

1.1. Quantifying Stress with a BCI System

1.2. Novelty and Contributions

2. Materials and Methods

2.1. Inducing Stress

2.2. Cognitive Tasks

2.2.1. Cognitive Vigilance Task (CVT)

2.2.2. Multi-Modal Integration Task (MMIT)

2.3. Self-Report of Stress

2.4. Task Performance Metrics

2.5. Design of Experimental Sessions

2.5.1. Sequence of Events

2.5.2. Experimental Timeline and Participants

2.6. Recording and Processing ECG Signals

- Remove outliers from the list of R-R intervals (intervals shorter than 300 ms or longer than 2000 ms), leaving NaN in their place.

- Replace the NaN values via linear interpolation.

- Remove ectopic beats using the Malik method [54]. This yields the N-N (normal-to-normal) intervals, in which each removed ectopic beat is marked as NaN.

- Replace these NaN values via linear interpolation.
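
The four preprocessing steps above can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, and the ectopic-beat step uses a simplified Malik-style rule (an interval is rejected if it differs by more than 20% from the last accepted interval), which is an assumption about how the cited method [54] is parameterised. NaNs at either end of a recording are filled with the nearest valid interval.

```python
import numpy as np


def remove_outliers(rr_ms, low_ms=300.0, high_ms=2000.0):
    """Step 1: mark R-R intervals outside [low_ms, high_ms] as NaN."""
    rr = np.asarray(rr_ms, dtype=float).copy()
    rr[(rr < low_ms) | (rr > high_ms)] = np.nan
    return rr


def interpolate_nans(x):
    """Steps 2 and 4: fill NaNs by linear interpolation over beat index.

    np.interp holds the first/last valid value constant, so NaNs at
    either end are filled with the nearest valid interval.
    """
    x = np.asarray(x, dtype=float).copy()
    missing = np.isnan(x)
    if missing.all():
        raise ValueError("no valid intervals to interpolate from")
    idx = np.arange(x.size)
    x[missing] = np.interp(idx[missing], idx[~missing], x[~missing])
    return x


def remove_ectopic_malik(rr_ms, rule=0.2):
    """Step 3: simplified Malik-style rule (the 20 % threshold is assumed).

    An interval is flagged as ectopic (set to NaN) if it differs from the
    last accepted interval by more than `rule` times that interval.
    The first interval is assumed to be valid.
    """
    rr = np.asarray(rr_ms, dtype=float)
    nn = rr.copy()
    reference = rr[0]
    for i in range(1, rr.size):
        if abs(rr[i] - reference) > rule * reference:
            nn[i] = np.nan          # ectopic: leave a gap for step 4
        else:
            reference = rr[i]       # accepted: becomes the new reference
    return nn


def preprocess_rr(rr_ms):
    """Outliers -> interpolate -> ectopic removal -> interpolate."""
    rr = interpolate_nans(remove_outliers(rr_ms))
    nn = remove_ectopic_malik(rr)
    return interpolate_nans(nn)


if __name__ == "__main__":
    rr = [800, 810, 250, 790, 805, 2500, 800, 500, 1100, 800]
    print(preprocess_rr(rr))
```

Interpolating after the first step matters: a NaN left in the series would make every later comparison in the ectopic check evaluate to False, silently disabling it. Comparing against the last accepted interval also means that a genuine, sustained heart-rate change of more than 20% would be rejected, which is a limitation of this simplified rule.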

2.7. Recording and Processing EEG Signals

2.8. EEG Feature Extraction

2.8.1. Sample Entropy
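
Sample entropy, as defined by Richman and Moorman (listed in the references), counts the pairs of length-m templates whose Chebyshev distance is within a tolerance r (B), repeats the count for length m + 1 (A), and reports SampEn = -ln(A/B). The sketch below is illustrative only and assumes the common defaults m = 2 and r = 0.2 times the signal's standard deviation; the parameters used in this study are not stated in this section.

```python
import numpy as np


def sample_entropy(x, m=2, r=None):
    """Sample entropy (Richman & Moorman) of a 1-D signal.

    m: template length (assumed default 2).
    r: tolerance; defaults to 0.2 * standard deviation (an assumption).
    Returns np.inf when no matches are found, because -ln(0) is undefined.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    if n <= m + 1:
        raise ValueError("signal too short for the chosen m")
    if r is None:
        r = 0.2 * np.std(x)

    def count_matches(length):
        # The same n - m starting points are used for lengths m and m + 1,
        # so A and B are counted over the same set of templates.
        templates = np.array([x[i:i + length] for i in range(n - m)])
        matches = 0
        for i in range(len(templates) - 1):
            # Chebyshev (max-abs) distance to all later templates;
            # self-matches are excluded by starting at i + 1.
            dist = np.max(np.abs(templates[i + 1:] - templates[i]), axis=1)
            matches += int(np.sum(dist <= r))
        return matches

    b = count_matches(m)      # pairs matching over m points
    a = count_matches(m + 1)  # pairs still matching over m + 1 points
    if a == 0 or b == 0:
        return np.inf
    return float(-np.log(a / b))
```

Lower values indicate a more regular signal: an alternating sequence such as 1, 2, 1, 2, ... gives 0, because every pair that matches over m points also matches over m + 1, whereas white noise gives a much larger value.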

2.8.2. Fractal Dimension
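
The reference list cites Higuchi's method, so the sketch below assumes that is the fractal-dimension estimator meant here. For each delay k it averages the normalised curve length L(k) over the k possible start offsets; because L(k) scales as k^(-D), the dimension D is the negative slope of ln L(k) against ln k. The maximum delay k_max = 10 is an assumed value, not one taken from the paper.

```python
import numpy as np


def higuchi_fd(x, k_max=10):
    """Higuchi fractal dimension of a 1-D signal.

    Assumes Higuchi's estimator is the FD used in this section;
    k_max = 10 is an assumed default.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    if n <= k_max:
        raise ValueError("signal must be longer than k_max")
    ks = np.arange(1, k_max + 1)
    mean_lengths = []
    for k in ks:
        lengths = []
        for m in range(k):                    # start offsets 0 .. k-1
            n_incr = (n - 1 - m) // k         # increments in the subsequence
            if n_incr < 1:
                continue
            sub = x[m::k][: n_incr + 1]       # x[m], x[m+k], x[m+2k], ...
            dist = np.sum(np.abs(np.diff(sub)))
            norm = (n - 1) / (n_incr * k)     # Higuchi's normalisation factor
            lengths.append(dist * norm / k)
        mean_lengths.append(np.mean(lengths))
    # L(k) ~ k**(-D), so D is minus the slope of ln L(k) versus ln k.
    slope, _intercept = np.polyfit(np.log(ks), np.log(mean_lengths), 1)
    return float(-slope)
```

A straight line gives D = 1 and white noise gives a value close to 2; Higuchi estimates for one-dimensional signals such as single-channel EEG typically fall between these two extremes.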

2.8.3. Hjorth Activity and Hjorth Complexity
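
Hjorth's parameters (Hjorth, 1970, in the reference list) are computed from the variances of a signal and its successive derivatives: activity is the variance of the signal, mobility is the square root of var(x′)/var(x), and complexity is the mobility of the first derivative divided by the mobility of the signal. Mobility is included in the sketch below because complexity depends on it. Derivatives are approximated here with first differences, an assumption that makes mobility a per-sample quantity.

```python
import numpy as np


def hjorth_parameters(x):
    """Return Hjorth (activity, mobility, complexity) of a 1-D signal.

    Derivatives are approximated by first differences (an assumption), so
    mobility is in units of 1/sample; multiply by the sampling rate for 1/s.
    """
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)          # approximate first derivative
    ddx = np.diff(dx)        # approximate second derivative
    var_x, var_dx, var_ddx = np.var(x), np.var(dx), np.var(ddx)
    activity = var_x
    mobility = np.sqrt(var_dx / var_x)
    complexity = np.sqrt(var_ddx / var_dx) / mobility
    return float(activity), float(mobility), float(complexity)
```

A pure sinusoid gives a complexity close to 1, while a broadband signal such as white noise gives a larger value (about sqrt(3/2), roughly 1.22, in expectation with first differences).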

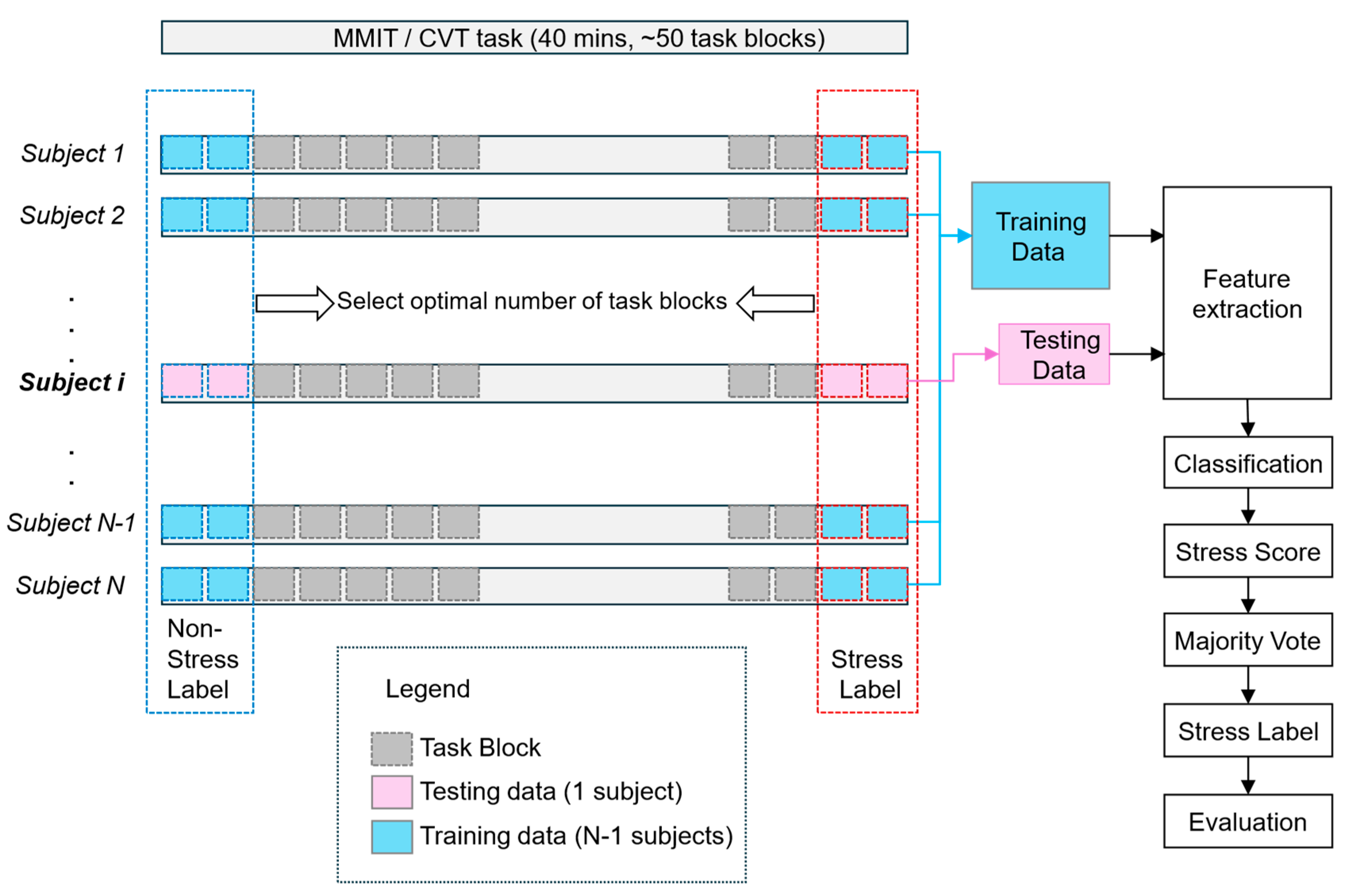

2.9. Computational Models for Stress Classification

3. Results

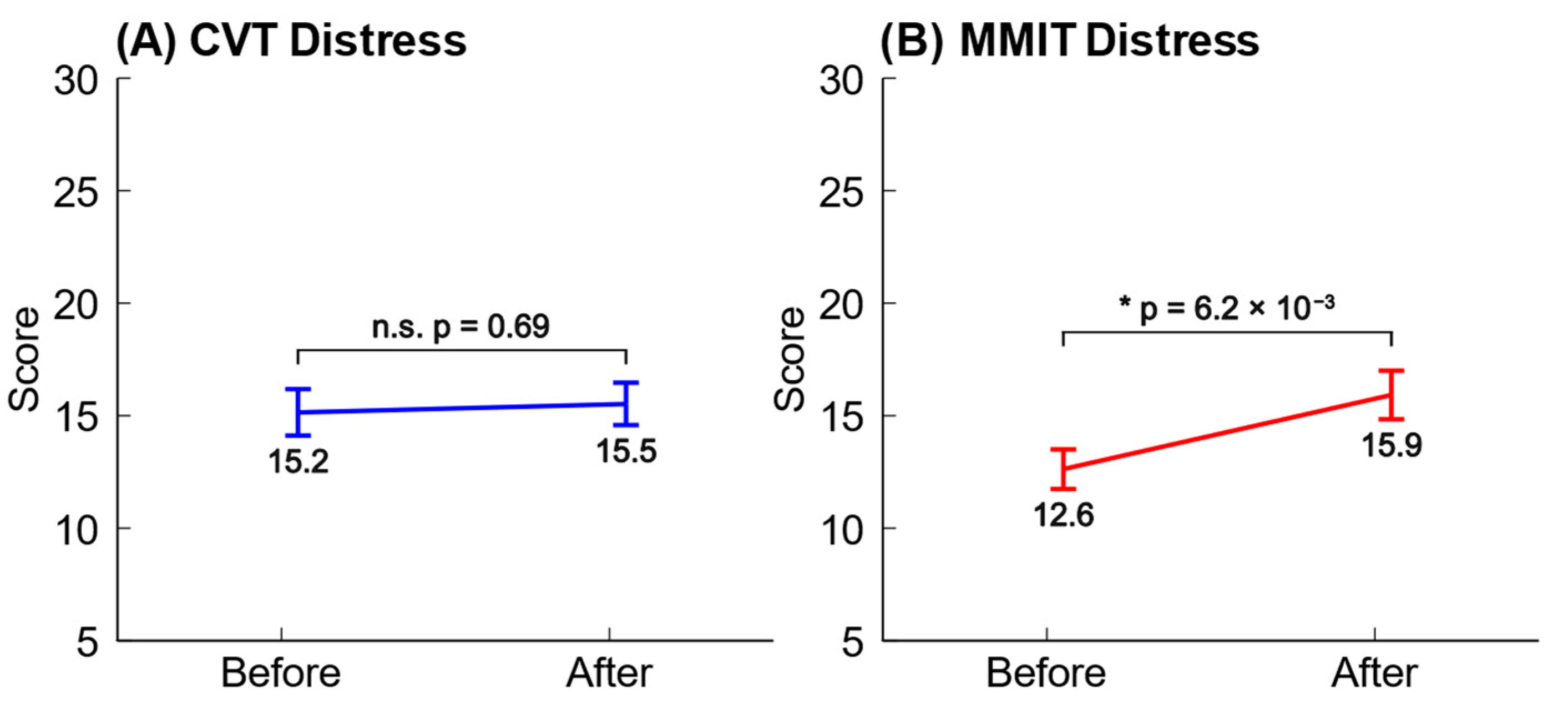

3.1. Analysis of Dundee Stress State Questionnaire (DSSQ) Responses

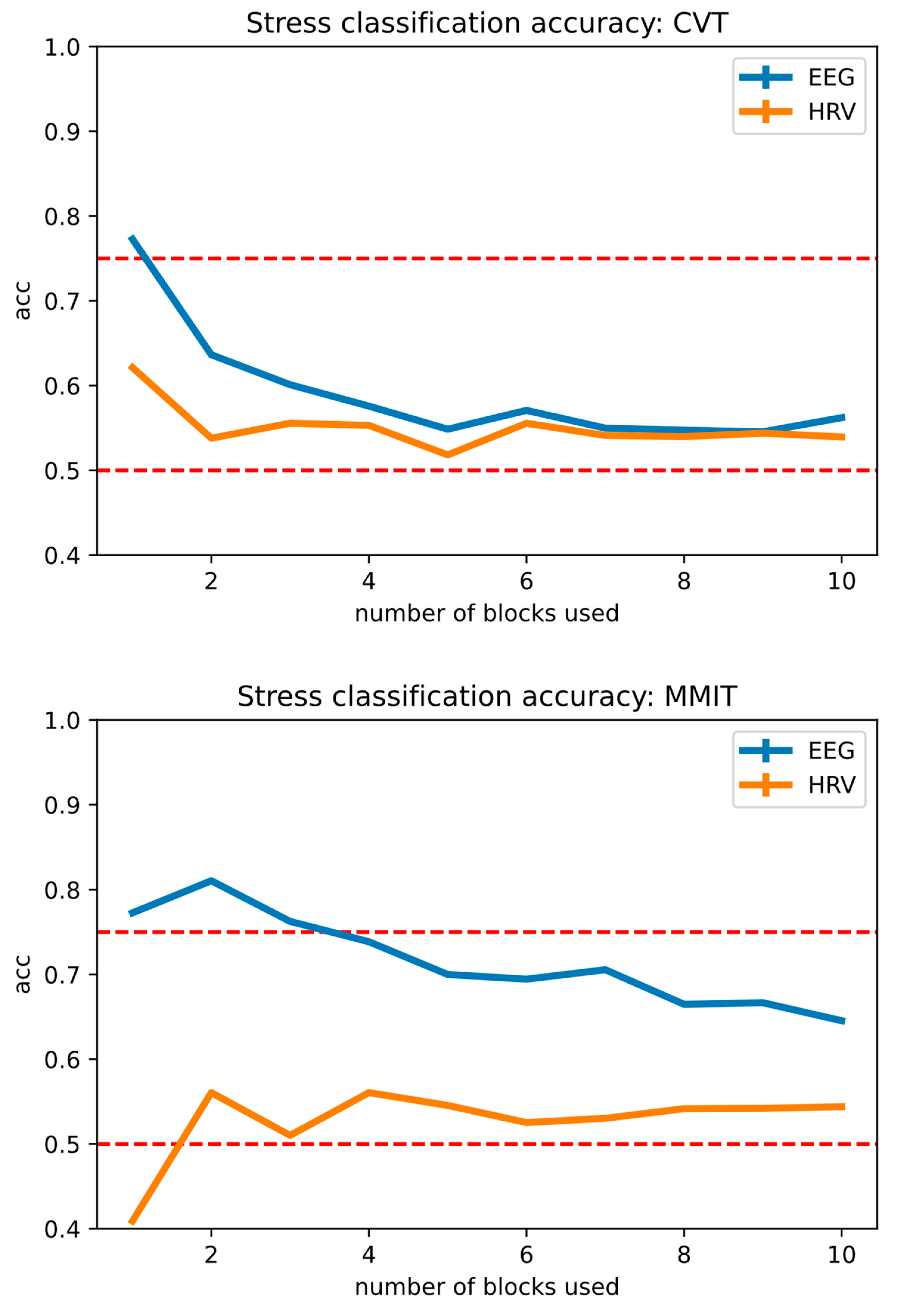

3.2. Stress Level Predictions

3.3. Performance of Stress Classification Models

4. Discussion

4.1. Inducing Stress with Cognitive Tasks

4.2. Decoding Stress with EEG Data

4.3. Decoding Stress with HRV Data

4.4. Limitations and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Borghini, G.; Bandini, A.; Orlandi, S.; Di Flumeri, G.; Arico, P.; Sciaraffa, N.; Ronca, V.; Bonelli, S.; Ragosta, M.; Tomasello, P.; et al. Stress Assessment by Combining Neurophysiological Signals and Radio Communications of Air Traffic Controllers. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; Volume 2020, pp. 851–854.

2. Joseph, M.; Ray, J.M.; Chang, J.; Cramer, L.D.; Bonz, J.W.; Yang, T.J.; Wong, A.H.; Auerbach, M.A.; Evans, L.V. All Clinical Stressors Are Not Created Equal: Differential Task Stress in a Simulated Clinical Environment. AEM Educ. Train. 2022, 6, e10726.

3. Kjellberg, A.; Toomingas, A.; Norman, K.; Hagman, M.; Herlin, R.-M.; Tornqvist, E.W. Stress, Energy and Psychosocial Conditions in Different Types of Call Centres. Work 2010, 36, 9–25.

4. Arora, S.; Sevdalis, N.; Nestel, D.; Woloshynowych, M.; Darzi, A.; Kneebone, R. The Impact of Stress on Surgical Performance: A Systematic Review of the Literature. Surgery 2010, 147, 318–330.e1-6.

5. Luers, P.; Schloeffel, M.; Prüssner, J.C. Working Memory Performance Under Stress. Exp. Psychol. 2020, 67, 132–139.

6. Riddell, C.; Yonelinas, A.P.; Shields, G.S. When Stress Enhances Memory Encoding: The Beneficial Effects of Changing Context. Neurobiol. Learn. Mem. 2023, 205, 107836.

7. Juster, R.-P.; McEwen, B.S.; Lupien, S.J. Allostatic Load Biomarkers of Chronic Stress and Impact on Health and Cognition. Neurosci. Biobehav. Rev. 2010, 35, 2–16.

8. Yaribeygi, H.; Panahi, Y.; Sahraei, H.; Johnston, T.P.; Sahebkar, A. The Impact of Stress on Body Function: A Review. EXCLI J. 2017, 16, 1057–1072.

9. Tomei, G.; Cinti, M.E.; Cerratti, D.; Fioravanti, M. Attention, repetitive works, fatigue and stress. Ann. Ig. 2006, 18, 417–429.

10. Roos, L.E.; Giuliano, R.J.; Beauchamp, K.G.; Berkman, E.T.; Knight, E.L.; Fisher, P.A. Acute Stress Impairs Children’s Sustained Attention with Increased Vulnerability for Children of Mothers Reporting Higher Parenting Stress. Dev. Psychobiol. 2020, 62, 532–543.

11. Ochiai, Y.; Takahashi, M.; Matsuo, T.; Sasaki, T.; Sato, Y.; Fukasawa, K.; Araki, T.; Otsuka, Y. Characteristics of Long Working Hours and Subsequent Psychological and Physical Responses: JNIOSH Cohort Study. Occup. Environ. Med. 2023, 80, 304–311.

12. Katmah, R.; Al-Shargie, F.; Tariq, U.; Babiloni, F.; Al-Mughairbi, F.; Al-Nashash, H. A Review on Mental Stress Assessment Methods Using EEG Signals. Sensors 2021, 21, 5043.

13. Vanhollebeke, G.; De Smet, S.; De Raedt, R.; Baeken, C.; van Mierlo, P.; Vanderhasselt, M.-A. The Neural Correlates of Psychosocial Stress: A Systematic Review and Meta-Analysis of Spectral Analysis EEG Studies. Neurobiol. Stress 2022, 18, 100452.

14. Föhr, T.; Tolvanen, A.; Myllymäki, T.; Järvelä-Reijonen, E.; Rantala, S.; Korpela, R.; Peuhkuri, K.; Kolehmainen, M.; Puttonen, S.; Lappalainen, R.; et al. Subjective Stress, Objective Heart Rate Variability-Based Stress, and Recovery on Workdays among Overweight and Psychologically Distressed Individuals: A Cross-Sectional Study. J. Occup. Med. Toxicol. 2015, 10, 39.

15. Christensen, D.S.; Dich, N.; Flensborg-Madsen, T.; Garde, E.; Hansen, Å.M.; Mortensen, E.L. Objective and Subjective Stress, Personality, and Allostatic Load. Brain Behav. 2019, 9, e01386.

16. Shields, G.S.; Fassett-Carman, A.; Gray, Z.J.; Gonzales, J.E.; Snyder, H.R.; Slavich, G.M. Why Is Subjective Stress Severity a Stronger Predictor of Health Than Stressor Exposure? A Preregistered Two-Study Test of Two Hypotheses. Stress Health 2023, 39, 87–102.

17. Rashid, M.; Sulaiman, N.; Abdul Majeed, A.P.P.; Musa, R.M.; Ab. Nasir, A.F.; Bari, B.S.; Khatun, S. Current Status, Challenges, and Possible Solutions of EEG-Based Brain-Computer Interface: A Comprehensive Review. Front. Neurorobot. 2020, 14, 25.

18. Yadav, H.; Maini, S. Electroencephalogram Based Brain-Computer Interface: Applications, Challenges, and Opportunities. Multimed. Tools Appl. 2023, 82, 47003–47047.

19. Lim, R.Y.; Lew, W.-C.L.; Ang, K.K. Review of EEG Affective Recognition with a Neuroscience Perspective. Brain Sci. 2024, 14, 364.

20. Lopez-Gordo, M.A.; Sanchez-Morillo, D.; Valle, F.P. Dry EEG Electrodes. Sensors 2014, 14, 12847–12870.

21. Pope, L. Papers, Please. 2013. Available online: https://papersplea.se/ (accessed on 4 September 2024).

22. McKernan, B. Digital Texts and Moral Questions About Immigration: Papers, Please and the Capacity for a Video Game to Stimulate Sociopolitical Discussion. Games Cult. 2021, 16, 383–406.

23. Sievers, J.M. Papers, Please as Philosophy. In The Palgrave Handbook of Popular Culture as Philosophy; Springer International Publishing: Cham, Switzerland, 2020; pp. 1–14. ISBN 978-3-319-97134-6.

24. Sussman, R.F.; Sekuler, R. Feeling Rushed? Perceived Time Pressure Impacts Executive Function and Stress. Acta Psychol. 2022, 229, 103702.

25. Szalma, J.L.; Teo, G.W.L. Spatial and Temporal Task Characteristics as Stress: A Test of the Dynamic Adaptability Theory of Stress, Workload, and Performance. Acta Psychol. 2012, 139, 471–485.

26. Claypoole, V.L.; Dever, D.A.; Denues, K.L.; Szalma, J.L. The Effects of Event Rate on a Cognitive Vigilance Task. Hum. Factors 2019, 61, 440–450.

27. Attar, E.T.; Balasubramanian, V.; Subasi, E.; Kaya, M. Stress Analysis Based on Simultaneous Heart Rate Variability and EEG Monitoring. IEEE J. Transl. Eng. Health Med. 2021, 9, 1–7.

28. Perez-Valero, E.; Vaquero-Blasco, M.A.; Lopez-Gordo, M.A.; Morillas, C. Quantitative Assessment of Stress Through EEG During a Virtual Reality Stress-Relax Session. Front. Comput. Neurosci. 2021, 15, 684423.

29. Badr, Y.; Al-Shargie, F.; Tariq, U.; Babiloni, F.; Al Mughairbi, F.; Al-Nashash, H. Classification of Mental Stress Using Dry EEG Electrodes and Machine Learning. In Proceedings of the 2023 Advances in Science and Engineering Technology International Conferences (ASET), Dubai, United Arab Emirates, 20–23 February 2023; pp. 1–5.

30. Bali, A.; Jaggi, A.S. Clinical Experimental Stress Studies: Methods and Assessment. Rev. Neurosci. 2015, 26, 555–579.

31. Kirschbaum, C.; Pirke, K.-M.; Hellhammer, D.H. The “Trier Social Stress Test”: A Tool for Investigating Psychobiological Stress Responses in a Laboratory Setting. Neuropsychobiology 1993, 28, 76–81.

32. Allen, A.P.; Kennedy, P.J.; Dockray, S.; Cryan, J.F.; Dinan, T.G.; Clarke, G. The Trier Social Stress Test: Principles and Practice. Neurobiol. Stress 2016, 6, 113–126.

33. Brouwer, A.-M.; Hogervorst, M.A. A New Paradigm to Induce Mental Stress: The Sing-a-Song Stress Test (SSST). Front. Neurosci. 2014, 8, 224.

34. van der Mee, D.J.; Duivestein, Q.; Gevonden, M.J.; Westerink, J.H.D.M.; de Geus, E.J.C. The Short Sing-a-Song Stress Test: A Practical and Valid Test of Autonomic Responses Induced by Social-Evaluative Stress. Auton. Neurosci. 2020, 224, 102612.

35. Hines, E.A.; Brown, G.E. The Cold Pressor Test for Measuring the Reactibility of the Blood Pressure: Data Concerning 571 Normal and Hypertensive Subjects. Am. Heart J. 1936, 11, 1–9.

36. Lamotte, G.; Boes, C.J.; Low, P.A.; Coon, E.A. The Expanding Role of the Cold Pressor Test: A Brief History. Clin. Auton. Res. 2021, 31, 153–155.

37. Fyer, M.R.; Uy, J.; Martinez, J.; Goetz, R.; Klein, D.F.; Fyer, A.; Liebowitz, M.R.; Gorman, J. CO2 Challenge of Patients with Panic Disorder. Am. J. Psychiatry 1987, 144, 1080–1082.

38. Vickers, K.; Jafarpour, S.; Mofidi, A.; Rafat, B.; Woznica, A. The 35% Carbon Dioxide Test in Stress and Panic Research: Overview of Effects and Integration of Findings. Clin. Psychol. Rev. 2012, 32, 153–164.

39. Minkley, N.; Schröder, T.P.; Wolf, O.T.; Kirchner, W.H. The Socially Evaluated Cold-Pressor Test (SECPT) for Groups: Effects of Repeated Administration of a Combined Physiological and Psychological Stressor. Psychoneuroendocrinology 2014, 45, 119–127.

40. Kolotylova, T.; Koschke, M.; Bär, K.-J.; Ebner-Priemer, U.; Kleindienst, N.; Bohus, M.; Schmahl, C. [Development of the “Mannheim Multicomponent Stress Test” (MMST)]. Psychother. Psychosom. Med. Psychol. 2010, 60, 64–72.

41. Scarpina, F.; Tagini, S. The Stroop Color and Word Test. Front. Psychol. 2017, 8, 557.

42. Warm, J. Sustained Attention in Human Performance; Wiley: New York, NY, USA, 1984; ISBN 978-0-471-10322-6.

43. Craw, O.A.; Smith, M.A.; Wetherell, M.A. Manipulating Levels of Socially Evaluative Threat and the Impact on Anticipatory Stress Reactivity. Front. Psychol. 2021, 12, 622030.

44. Von Baeyer, C.L.; Piira, T.; Chambers, C.T.; Trapanotto, M.; Zeltzer, L.K. Guidelines for the Cold Pressor Task as an Experimental Pain Stimulus for Use with Children. J. Pain 2005, 6, 218–227.

45. Warm, J.S.; Parasuraman, R.; Matthews, G. Vigilance Requires Hard Mental Work and Is Stressful. Hum. Factors 2008, 50, 433–441.

46. Dillard, M.B.; Warm, J.S.; Funke, G.J.; Nelson, W.T.; Finomore, V.S.; McClernon, C.K.; Eggemeier, F.T.; Tripp, L.D.; Funke, M.E. Vigilance Tasks: Unpleasant, Mentally Demanding, and Stressful Even When Time Flies. Hum. Factors 2019, 61, 225–242.

47. Meule, A. Reporting and Interpreting Task Performance in Go/No-Go Affective Shifting Tasks. Front. Psychol. 2017, 8, 701.

48. Matthews, G.; Campbell, S.E.; Falconer, S.; Joyner, L.A.; Huggins, J.; Gilliland, K.; Grier, R.; Warm, J.S. Fundamental Dimensions of Subjective State in Performance Settings: Task Engagement, Distress, and Worry. Emotion 2002, 2, 315–340.

49. Matthews, G.; Szalma, J.; Panganiban, A.R.; Neubauer, C.; Warm, J.S. Profiling Task Stress With The Dundee State Questionnaire. In Psychology of Stress: New Research; Nova Science Pub Inc.: New York, NY, USA, 2013; pp. 49–91. ISBN 978-1-62417-109-3.

50. Vaessen, T.; Rintala, A.; Otsabryk, N.; Viechtbauer, W.; Wampers, M.; Claes, S.; Myin-Germeys, I. The Association between Self-Reported Stress and Cardiovascular Measures in Daily Life: A Systematic Review. PLoS ONE 2021, 16, e0259557.

51. Jhangiani, R.S.; Cuttler, C.; Leighton, D.C. Research Methods in Psychology, 4th ed.; University of Minnesota Libraries Publishing: Minneapolis, MN, USA, 2019; ISBN 978-1-946135-22-3.

52. Dalmeida, K.M.; Masala, G.L. HRV Features as Viable Physiological Markers for Stress Detection Using Wearable Devices. Sensors 2021, 21, 2873.

53. Bagliani, G.; Della Rocca, D.G.; De Ponti, R.; Capucci, A.; Padeletti, M.; Natale, A. Ectopic Beats: Insights from Timing and Morphology. Card. Electrophysiol. Clin. 2018, 10, 257–275.

54. Acar, B.; Savelieva, I.; Hemingway, H.; Malik, M. Automatic Ectopic Beat Elimination in Short-Term Heart Rate Variability Measurement. Comput. Methods Programs Biomed. 2000, 63, 123–131.

55. Aura-Healthcare/Hrv-Analysis. Package for Heart Rate Variability Analysis in Python. Available online: https://github.com/Aura-healthcare/hrv-analysis (accessed on 9 April 2024).

56. Shaffer, F.; Ginsberg, J.P. An Overview of Heart Rate Variability Metrics and Norms. Front. Public Health 2017, 5, 258.

57. Richman, J.S.; Moorman, J.R. Physiological Time-Series Analysis Using Approximate Entropy and Sample Entropy. Am. J. Physiol. Heart Circ. Physiol. 2000, 278, H2039–H2049.

58. Higuchi, T. Approach to an Irregular Time Series on the Basis of the Fractal Theory. Phys. D Nonlinear Phenom. 1988, 31, 277–283.

59. Hjorth, B. EEG Analysis Based on Time Domain Properties. Electroencephalogr. Clin. Neurophysiol. 1970, 29, 306–310.

60. Stam, C.J. Nonlinear Dynamical Analysis of EEG and MEG: Review of an Emerging Field. Clin. Neurophysiol. 2005, 116, 2266–2301.

61. Veeranki, Y.R.; Diaz, L.R.M.; Swaminathan, R.; Posada-Quintero, H.F. Nonlinear Signal Processing Methods for Automatic Emotion Recognition Using Electrodermal Activity. IEEE Sens. J. 2024, 24, 8079–8093.

62. Liang, Z.; Wang, Y.; Sun, X.; Li, D.; Voss, L.J.; Sleigh, J.W.; Hagihira, S.; Li, X. EEG Entropy Measures in Anesthesia. Front. Comput. Neurosci. 2015, 9, 16.

63. Akar, S.A.; Kara, S.; Agambayev, S.; Bilgic, V. Nonlinear Analysis of EEG in Major Depression with Fractal Dimensions. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–28 August 2015; Volume 2015, pp. 7410–7413.

64. Ruiz-Padial, E.; Ibáñez-Molina, A.J. Fractal Dimension of EEG Signals and Heart Dynamics in Discrete Emotional States. Biol. Psychol. 2018, 137, 42–48.

65. Vicchietti, M.L.; Ramos, F.M.; Betting, L.E.; Campanharo, A.S.L.O. Computational Methods of EEG Signals Analysis for Alzheimer’s Disease Classification. Sci. Rep. 2023, 13, 8184.

66. Veeranki, Y.R.; Ganapathy, N.; Swaminathan, R. Non-Parametric Classifiers Based Emotion Classification Using Electrodermal Activity and Modified Hjorth Features. Stud. Health Technol. Inform. 2021, 281, 163–167.

67. Hag, A.; Al-Shargie, F.; Handayani, D.; Asadi, H. Mental Stress Classification Based on Selected Electroencephalography Channels Using Correlation Coefficient of Hjorth Parameters. Brain Sci. 2023, 13, 1340.

68. Foong, R.; Ang, K.K.; Zhang, Z.; Quek, C. An Iterative Cross-Subject Negative-Unlabeled Learning Algorithm for Quantifying Passive Fatigue. J. Neural Eng. 2019, 16, 056013.

69. Wong, K.; Chan, A.H.S.; Ngan, S.C. The Effect of Long Working Hours and Overtime on Occupational Health: A Meta-Analysis of Evidence from 1998 to 2018. Int. J. Environ. Res. Public Health 2019, 16, 2102.

70. Vidaurre, C.; Blankertz, B. Towards a Cure for BCI Illiteracy. Brain Topogr. 2010, 23, 194–198.

71. Bennett, M.M.; Tomas, C.W.; Fitzgerald, J.M. Relationship between Heart Rate Variability and Differential Patterns of Cortisol Response to Acute Stressors in Mid-life Adults: A Data-driven Investigation. Stress Health 2024, 40, e3327.

72. Stephens, M.A.C.; Wand, G. Stress and the HPA Axis. Alcohol. Res. 2012, 34, 468–483.

73. Meaney, M.J.; Szyf, M. Environmental Programming of Stress Responses through DNA Methylation: Life at the Interface between a Dynamic Environment and a Fixed Genome. Dialogues Clin. Neurosci. 2005, 7, 103–123.

74. Henrich, J.; Heine, S.J.; Norenzayan, A. The Weirdest People in the World? Behav. Brain Sci. 2010, 33, 61–83, discussion 83–135.

75. Immanuel, S.; Teferra, M.N.; Baumert, M.; Bidargaddi, N. Heart Rate Variability for Evaluating Psychological Stress Changes in Healthy Adults: A Scoping Review. Neuropsychobiology 2023, 82, 187–202.

76. Hemakom, A.; Atiwiwat, D.; Israsena, P. ECG and EEG Based Detection and Multilevel Classification of Stress Using Machine Learning for Specified Genders: A Preliminary Study. PLoS ONE 2023, 18, e0291070.

77. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In Human Mental Workload; Advances in Psychology, 52; North-Holland: Oxford, UK, 1988; pp. 139–183. ISBN 978-0-444-70388-0.

78. Chan, S.F.; La Greca, A.M. Perceived Stress Scale (PSS). In Encyclopedia of Behavioral Medicine; Gellman, M.D., Turner, J.R., Eds.; Springer: New York, NY, USA, 2013; pp. 1454–1455. ISBN 978-1-4419-1005-9.

79. Rho, G.; Callara, A.L.; Bernardi, G.; Scilingo, E.P.; Greco, A. EEG Cortical Activity and Connectivity Correlates of Early Sympathetic Response during Cold Pressor Test. Sci. Rep. 2023, 13, 1338.

80. Chou, P.-H.; Lin, W.-H.; Hung, C.-A.; Chang, C.-C.; Li, W.-R.; Lan, T.-H.; Huang, M.-W. Perceived Occupational Stress Is Associated with Decreased Cortical Activity of the Prefrontal Cortex: A Multichannel Near-Infrared Spectroscopy Study. Sci. Rep. 2016, 6, 39089.

81. Martínez-Cañada, P.; Ness, T.V.; Einevoll, G.T.; Fellin, T.; Panzeri, S. Computation of the Electroencephalogram (EEG) from Network Models of Point Neurons. PLoS Comput. Biol. 2021, 17, e1008893.

82. Daubechies, I. Ten Lectures on Wavelets: 61; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1992; ISBN 978-0-89871-274-2.

83. Ang, K.K.; Chin, Z.Y.; Zhang, H.; Guan, C. Filter Bank Common Spatial Pattern (FBCSP) in Brain-Computer Interface. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 2390–2397.

84. Singh, A.K.; Krishnan, S. Trends in EEG Signal Feature Extraction Applications. Front. Artif. Intell. 2023, 5, 1072801.

| Participants | S1 | S2 | S3 | S4 | S5 | S6 | S7 | S8 |
|---|---|---|---|---|---|---|---|---|
| 50% | Preparation | Questionnaire Block 1 | Baseline (calm video) | Task 1—CVT | Questionnaire Block 2 | Rest | Task 2—MMIT | Questionnaire Block 4 |
| 50% | Preparation | Questionnaire Block 1 | Baseline (calm video) | Task 1—MMIT | Questionnaire Block 2 | Rest | Task 2—CVT | Questionnaire Block 4 |
| Duration | 5 min | 10 min | 10 min | 40 min | 10 min | 15 min | 40 min | 10 min |
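
Because both task orders share the same stage durations, the onset of each stage (useful when cutting continuous EEG and ECG recordings into task segments) follows from a running sum of the durations in the table. The sketch below assumes the stages ran back to back with no gaps, which the table does not state; the helper name `build_schedule` is hypothetical. Under that assumption a session lasts 140 min, with Task 1 spanning minutes 25 to 65 and Task 2 minutes 90 to 130.

```python
from itertools import accumulate

# Stage durations in minutes for S1..S8, copied from the session table.
DURATIONS_MIN = [5, 10, 10, 40, 10, 15, 40, 10]


def build_schedule(first_task, second_task):
    """Return (stage, start_min, end_min) tuples for one task order.

    Assumes the stages run back to back with no gaps between them.
    """
    stages = [
        "Preparation",
        "Questionnaire Block 1",
        "Baseline (calm video)",
        first_task,
        "Questionnaire Block 2",
        "Rest",
        second_task,
        "Questionnaire Block 4",
    ]
    ends = list(accumulate(DURATIONS_MIN))
    starts = [0] + ends[:-1]
    return list(zip(stages, starts, ends))


# Counterbalanced orders: half of the participants start with each task.
SCHEDULES = {
    "CVT first": build_schedule("CVT", "MMIT"),
    "MMIT first": build_schedule("MMIT", "CVT"),
}

if __name__ == "__main__":
    for order, schedule in SCHEDULES.items():
        print(order)
        for stage, start, end in schedule:
            print(f"  {start:>3}-{end:>3} min  {stage}")
```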

| Feature | Description |
|---|---|
| mean_nni | Mean NN interval |
| sdnn | Standard deviation of NN intervals |
| sdsd | Standard deviation of successive NN interval differences |
| nni_50 | Number of successive NN intervals that differ by more than 50 ms |
| pnni_50 | Percentage of successive NN intervals that differ by more than 50 ms |
| nni_20 | Number of successive NN intervals that differ by more than 20 ms |
| pnni_20 | Percentage of successive NN intervals that differ by more than 20 ms |
| rmssd | Root mean square of successive NN interval differences |
| median_nni | Median NN interval |
| range_nni | Range of NN intervals |
| cvsd | Root mean square of successive differences (RMSSD) divided by the mean NN interval (mean_nni) |
| cvnni | Standard deviation of NN intervals (SDNN) divided by the mean NN interval (mean_nni) |
| mean_hr | Mean heart rate |
| max_hr | Maximum heart rate |
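
The features in the table follow the conventional time-domain HRV definitions, and their names match the keys used by the hrv-analysis package in the reference list. The NumPy sketch below computes them from a preprocessed NN series in milliseconds. It is not the package itself: the degrees of freedom (ddof=1 here) and the denominator of pnni_50 and pnni_20 (the number of successive differences here) are assumptions that may differ from the package's implementation.

```python
import numpy as np


def time_domain_features(nn_ms):
    """Time-domain HRV features from NN intervals given in milliseconds."""
    nn = np.asarray(nn_ms, dtype=float)
    if nn.size < 3:
        raise ValueError("need at least three NN intervals")
    diff = np.diff(nn)                 # successive NN differences (ms)
    hr = 60_000.0 / nn                 # instantaneous heart rate (beats/min)
    mean_nni = float(np.mean(nn))
    sdnn = float(np.std(nn, ddof=1))   # sample SD (ddof=1 is a choice here)
    rmssd = float(np.sqrt(np.mean(diff ** 2)))
    nni_50 = int(np.sum(np.abs(diff) > 50))
    nni_20 = int(np.sum(np.abs(diff) > 20))
    return {
        "mean_nni": mean_nni,
        "sdnn": sdnn,
        "sdsd": float(np.std(diff, ddof=1)),
        "nni_50": nni_50,
        # Percentages use the number of successive differences (assumed).
        "pnni_50": 100.0 * nni_50 / diff.size,
        "nni_20": nni_20,
        "pnni_20": 100.0 * nni_20 / diff.size,
        "rmssd": rmssd,
        "median_nni": float(np.median(nn)),
        "range_nni": float(np.max(nn) - np.min(nn)),
        "cvsd": rmssd / mean_nni,
        "cvnni": sdnn / mean_nni,
        "mean_hr": float(np.mean(hr)),
        "max_hr": float(np.max(hr)),
    }
```

For example, the series 800, 860, 820, 900, 880 ms has successive differences of 60, -40, 80 and -20 ms, so nni_50 = 2 (pnni_50 = 50%), nni_20 = 3 (pnni_20 = 75%), rmssd = sqrt(3000) ≈ 54.8 ms and max_hr = 75 beats/min.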

| Task | EEG Decoding Accuracy | HRV Decoding Accuracy |
|---|---|---|
| MMIT | 81.0 ± 3.4% | 56.0 ± 4.3% |
| CVT | 77.2 ± 5.2% | 62.1 ± 6.0% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Premchand, B.; Liang, L.; Phua, K.S.; Zhang, Z.; Wang, C.; Guo, L.; Ang, J.; Koh, J.; Yong, X.; Ang, K.K. Wearable EEG-Based Brain–Computer Interface for Stress Monitoring. NeuroSci 2024, 5, 407-428. https://doi.org/10.3390/neurosci5040031
