The Morphospace of Consciousness: Three Kinds of Complexity for Minds and Machines

Abstract

1. Introduction

- Behave flexibly as a function of the environment

- Exhibit adaptive (rational, goal-oriented) behavior

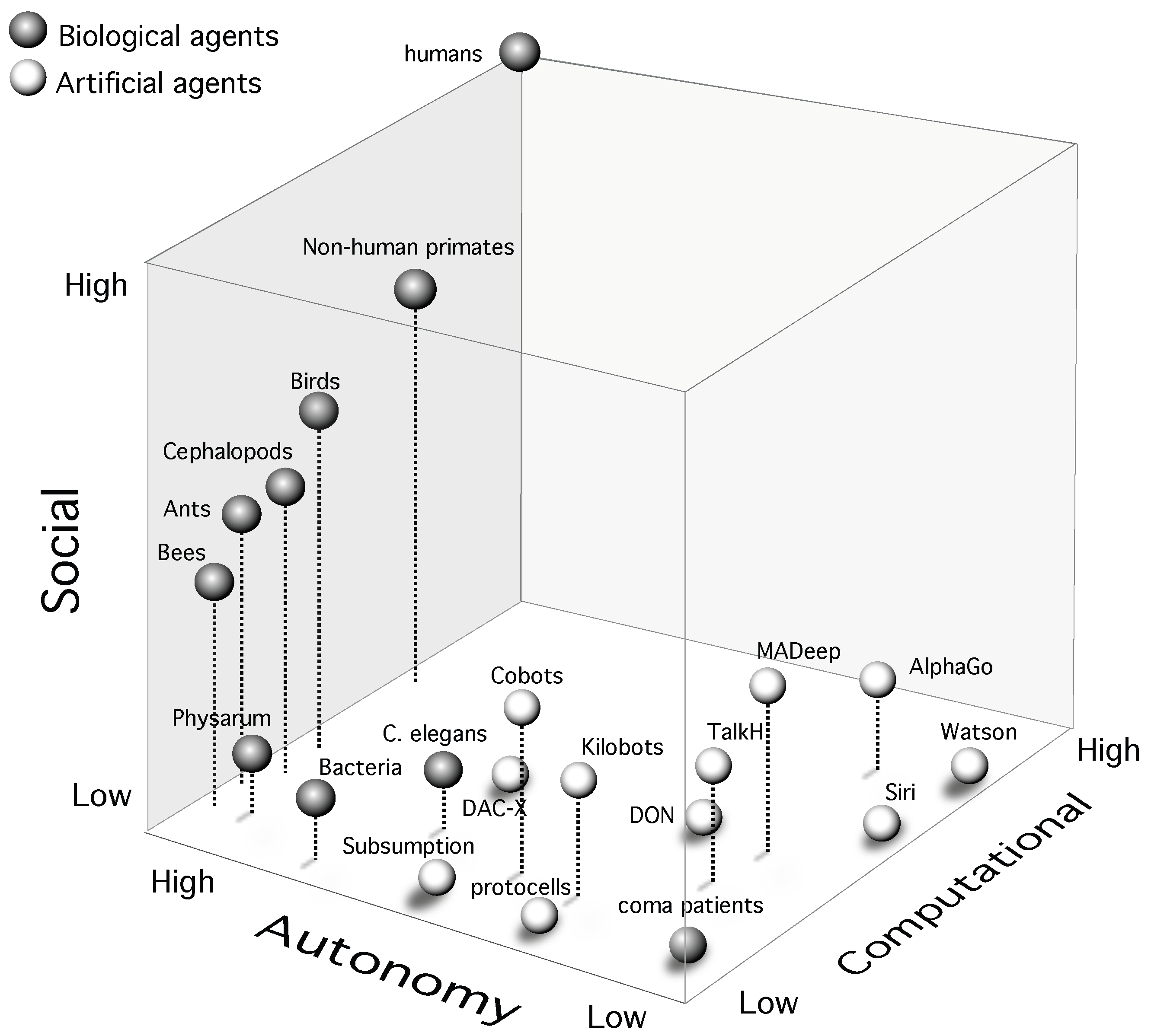

- Operate in real-time

- Operate in a rich, complex, detailed environment (that is, perceive an immense amount of changing detail, use vast amounts of knowledge, and control a motor system of many degrees of freedom)

- Use symbols and abstractions

- Use language, both natural and artificial

- Learn from the environment and from experience

- Acquire capabilities through development

- Operate autonomously, but within a social community

- Be self-aware and have a sense of self

- Be realizable as a neural system

- Be constructible by an embryological growth process

- Arise through evolution

2. Biological Consciousness: Insights from Clinical Neuroscience

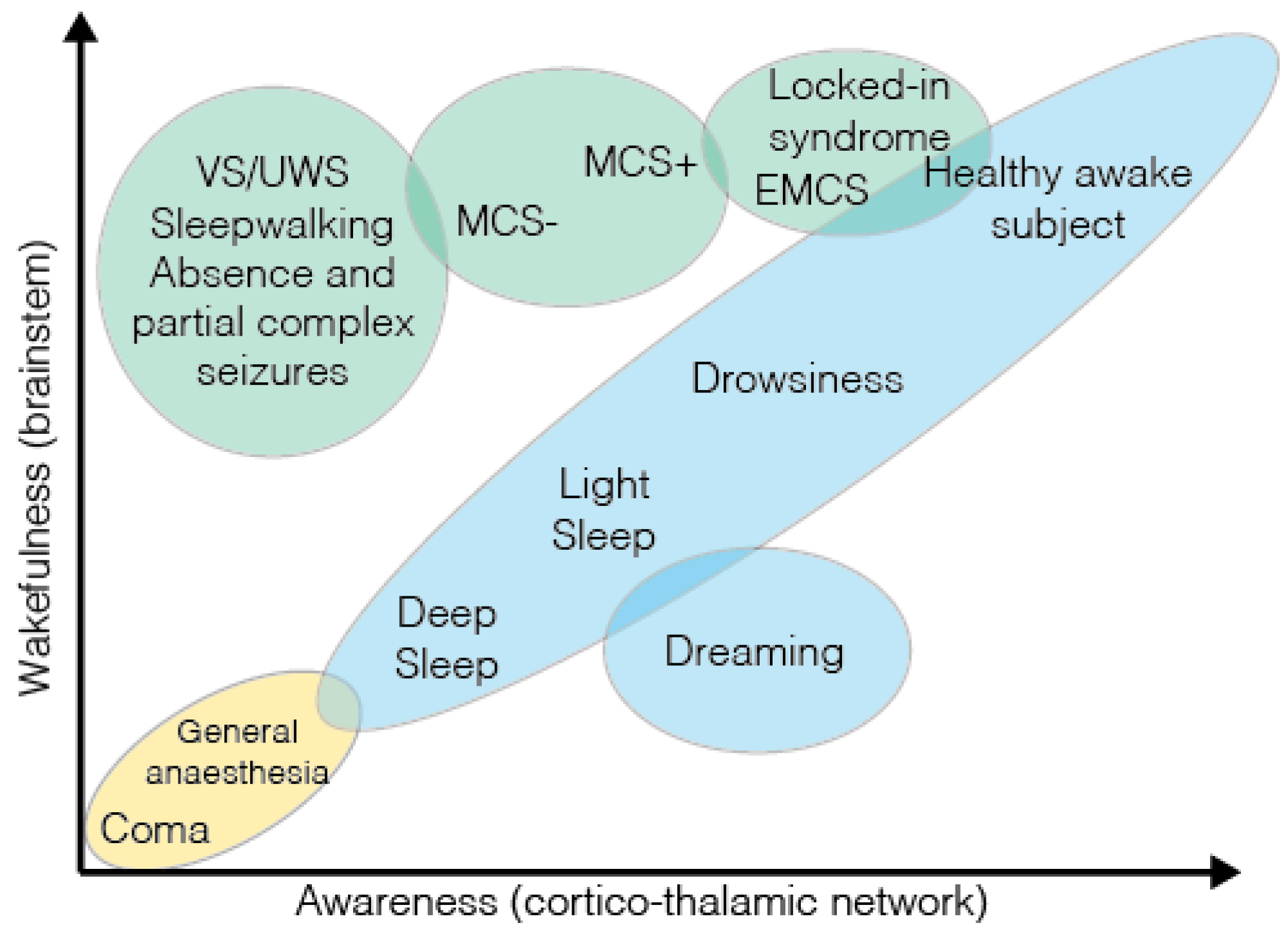

2.1. Clinical Consciousness and its Disorders

2.2. Candidate Measures in Brain and Behavioral Studies

3. Synthetic Consciousness? Insights from Synthetic Biology and Artificial Intelligence

4. The Function of Consciousness: Insights from Evolutionary Game Theory and Cognitive Robotics

4.1. The H5W Problem

4.2. Evolutionary Game Theory

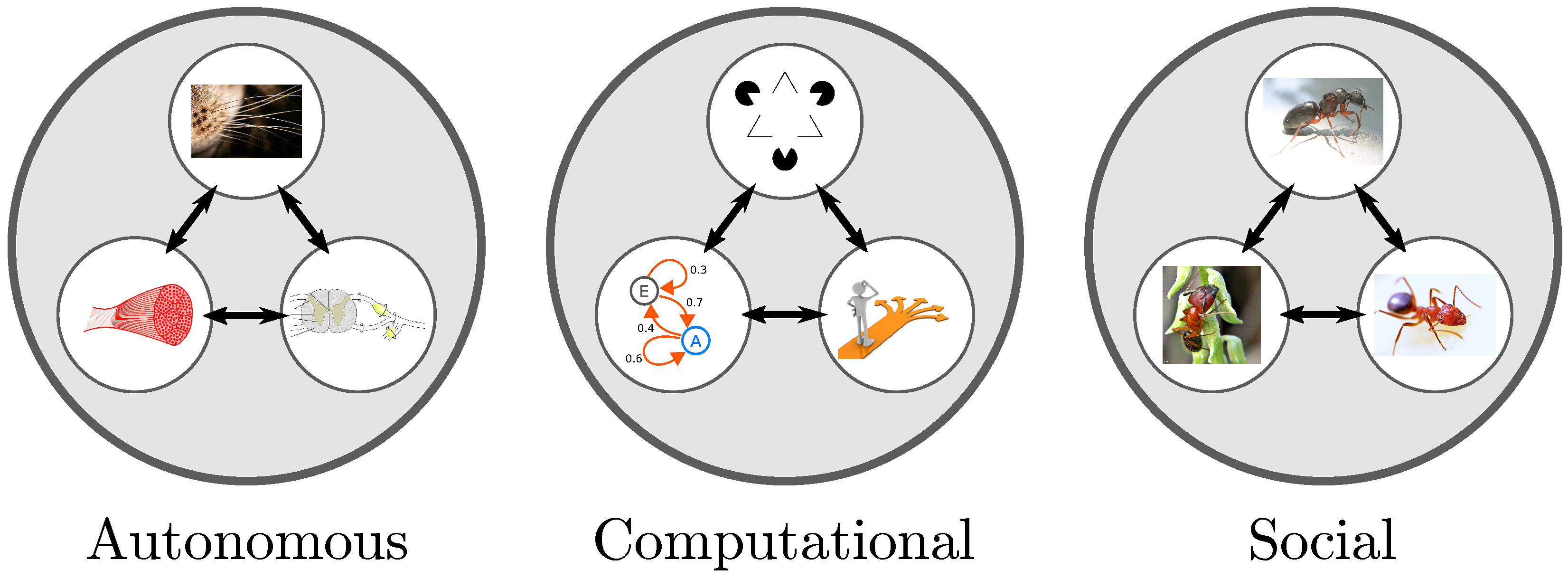

5. Three Kinds of Complexity to Characterize Consciousness

5.1. Why Distinguish Between Complexity?

5.2. Candidate Complexity Measures

5.3. Constructing the Morphospace

5.4. Relation to General Intelligence

5.5. Other Embodiments of Consciousness in the Morphospace

5.5.1. Synthetic Consciousness

5.5.2. Group Consciousness

5.5.3. Simulated Consciousness

6. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | | | |
|---|---|---|---|
| Building Blocks | Sensors, Actuators | Neurons, Transistors | Individual Agents |
| Systems-Level Realizations | Prokaryotes, Autonomic Nervous System, Bots | Cognitive Systems, Brains, Microprocessors | Population of Agents, Social Organizations |
| Emergent Phenomena | Self-Regulated Real-Time Behavior | Problem Solving Capabilities | Signaling Conventions, Language, Social Norms, Arts, Science, Culture |

| Complexity Kind | Complexity Measures |
|---|---|
| | Index of Autonomous Functioning |
| | Synergistic Information |
| | Morphological Computation |
| | Integrated Information (v1, v2, v3, geometric) |
| | Stochastic Information/Total Information Flow |
| | Mutual & Specific Information |
| | Redundant & Unique Information |
| | Synergistic Information |
| | Transfer Entropy & Information Transfer |
| | Collective Intelligence Factor |
| | Integrated Information v2 |
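As an illustration of the simplest entry in the table above, mutual information between two discrete variables can be computed directly from their joint distribution. The sketch below is only a minimal example under stated assumptions: the function name `mutual_information` and the two toy distributions are hypothetical and are not taken from the article, and the joint table is assumed to be normalized.

```python
import math


def mutual_information(joint):
    """Return the mutual information I(X;Y) in bits.

    `joint[i][j]` is assumed to hold P(X=i, Y=j), with all entries
    summing to 1. This is an illustrative helper, not the authors' code.
    """
    # Marginal distributions of X (rows) and Y (columns).
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]

    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # zero-probability cells contribute nothing
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi


# Toy case 1: two perfectly correlated binary variables.
correlated = [[0.5, 0.0], [0.0, 0.5]]
# Toy case 2: two independent fair coins.
independent = [[0.25, 0.25], [0.25, 0.25]]

print(mutual_information(correlated))
print(mutual_information(independent))
```

The perfectly correlated pair shares one bit, while the independent pair shares none. The other measures in the table (for example transfer entropy or integrated information) build on the same kind of probability bookkeeping but need time-series data or a partition of the system, which this sketch does not attempt.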


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Arsiwalla, X.D.; Solé, R.; Moulin-Frier, C.; Herreros, I.; Sánchez-Fibla, M.; Verschure, P. The Morphospace of Consciousness: Three Kinds of Complexity for Minds and Machines. NeuroSci 2023, 4, 79-102. https://doi.org/10.3390/neurosci4020009
