Cognition as a Mechanical Process

Abstract

1. Introduction

1.1. The Many Definitions of Cognition

1.2. Mechanical Perspective of Cognition

1.3. Purpose of This Review

2. Mechanisms of Visual Cognition

2.1. Stochastic Processes in Biology

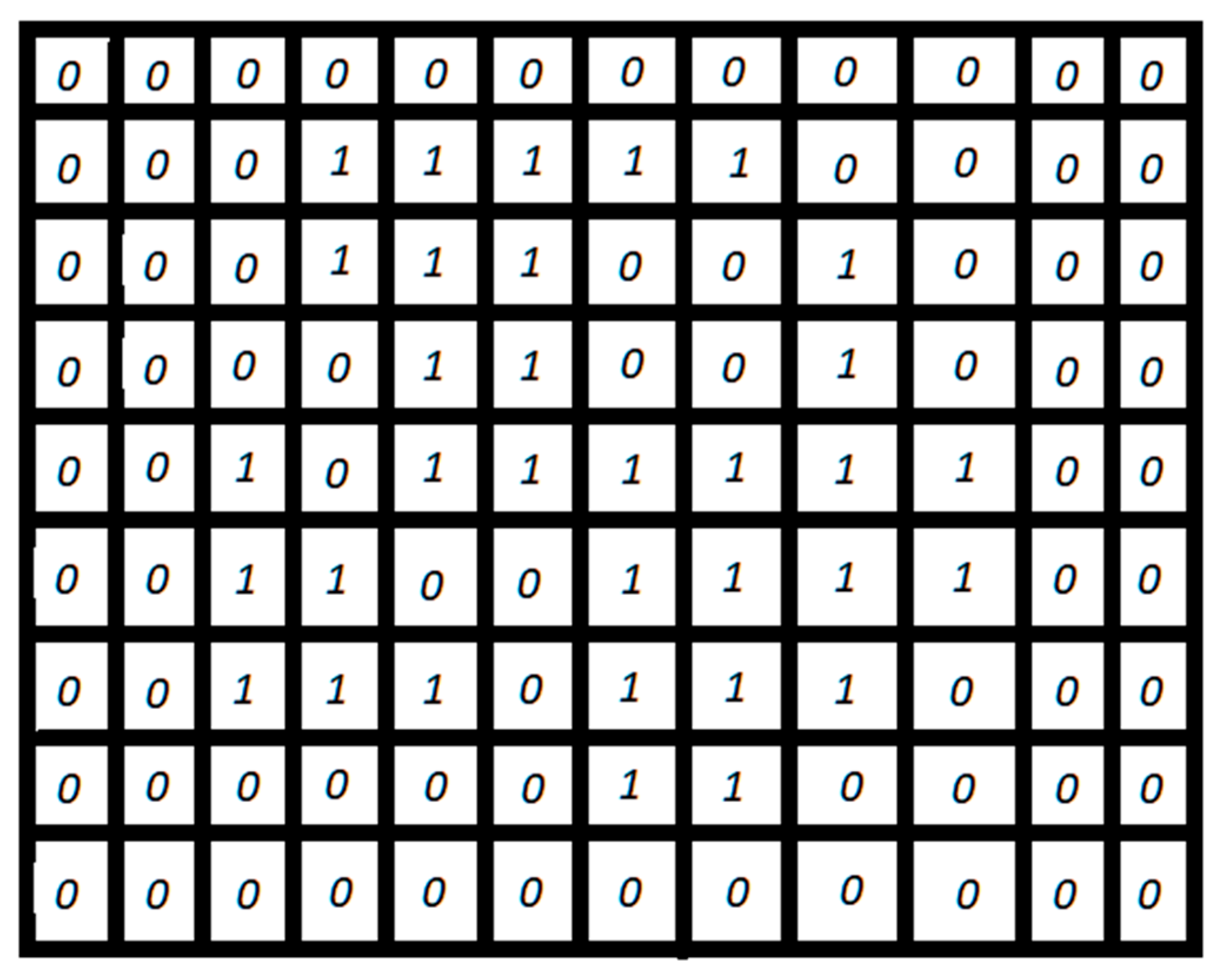

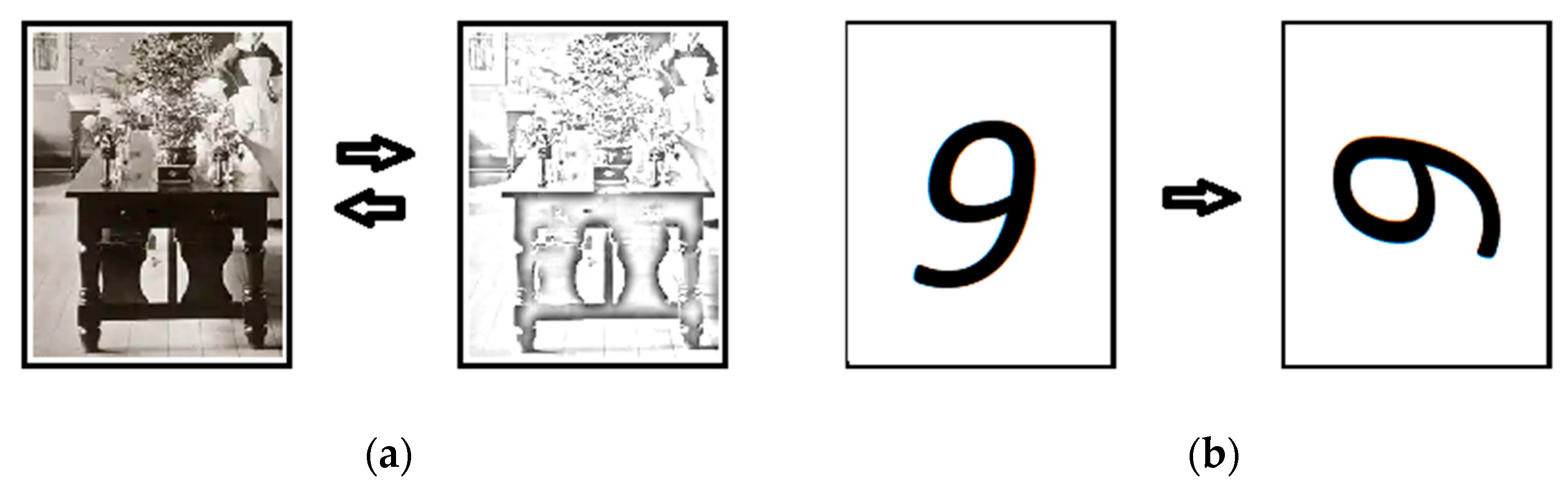

2.2. Abstract Encoding of Sensory Input

2.3. Perception as a Mechanical Process

2.4. Cognition as a Pattern Matching Process

3. General Cognition in Animals

3.1. Cognition and Essential Animal Behavior

3.2. Cognition and Large-Scale Neuroanatomical Changes

3.3. Cognition as a Physiological Process

4. Suggestions for the Natural and Computer Sciences

Funding

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Friedman, R. Cognition as a Mechanical Process. NeuroSci 2021, 2, 141-150. https://doi.org/10.3390/neurosci2020010
