Social Impact Measurement: A Systematic Literature Review and Future Research Directions

Abstract

1. Introduction

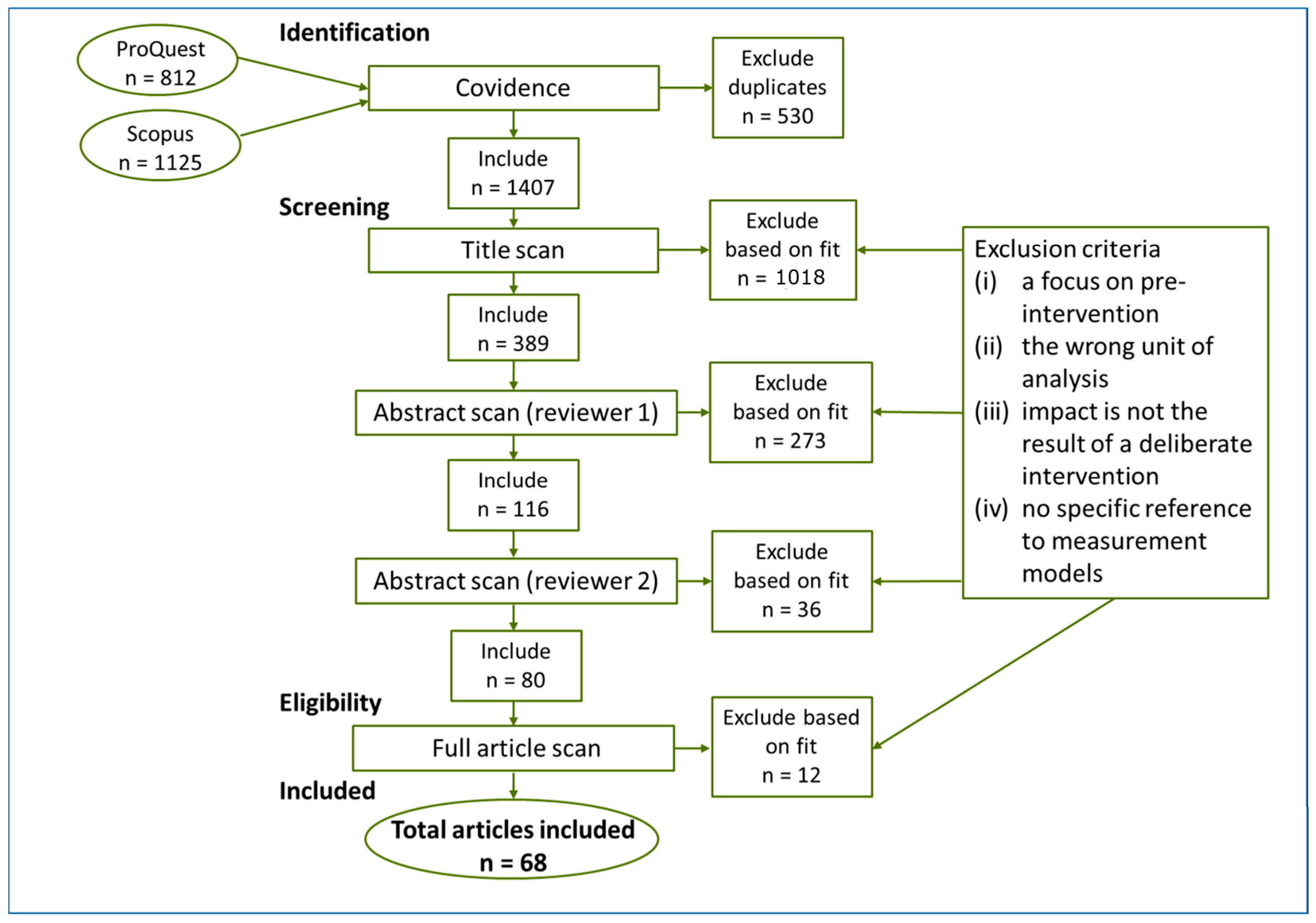

2. Materials and Methods

2.1. Document Sources

2.2. Search Criteria

2.3. Keyword Search and Screening Results

2.4. Data Analysis

- What definitions are provided for social, environmental, sustainable, and community impact?

- What common measurement methods are discussed and applied?

- What are the indicators used for measurement?

- What are the measurement drivers?

- What are the challenges to measurement?

- What are the strategies for improved measurement?

3. Results

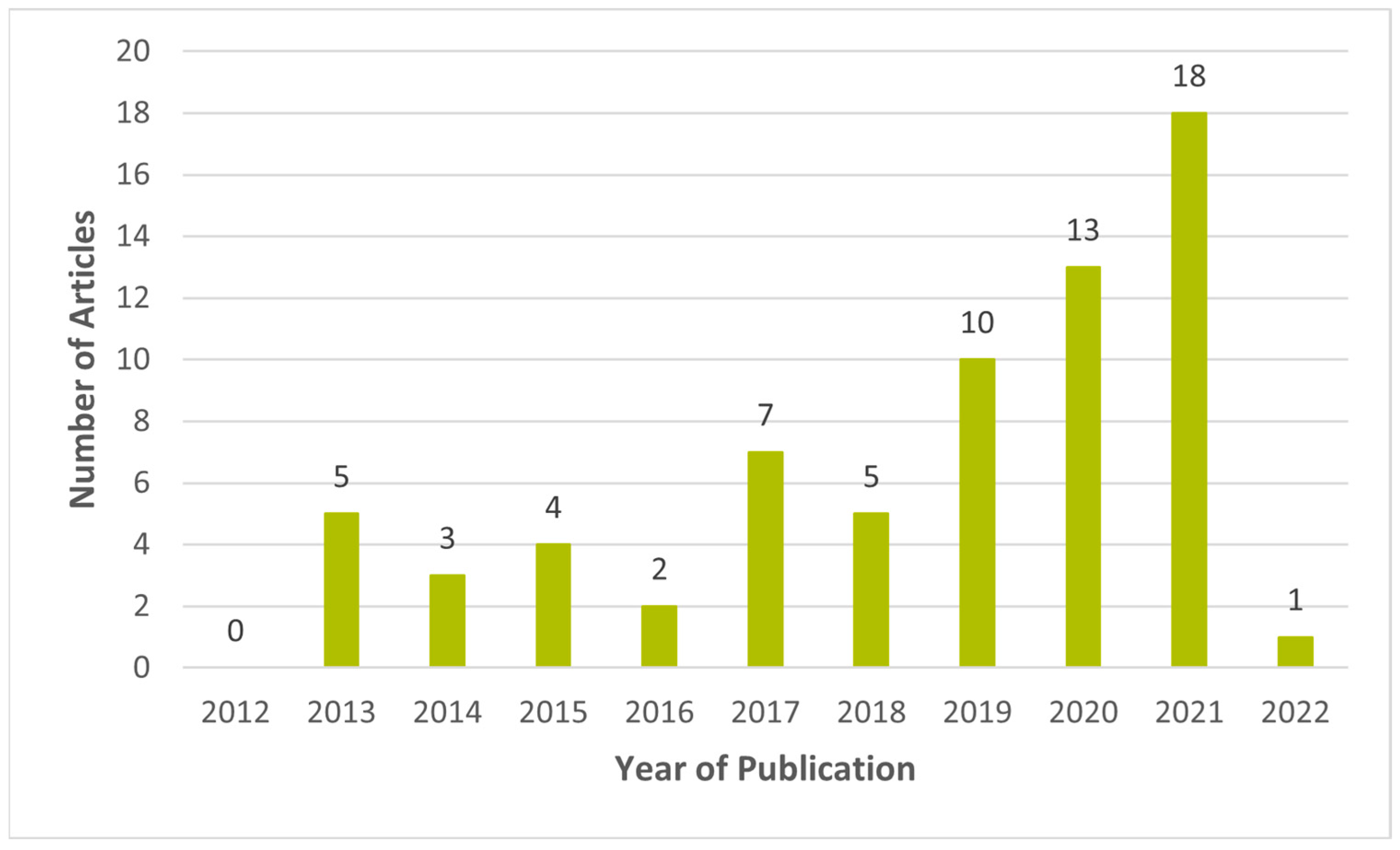

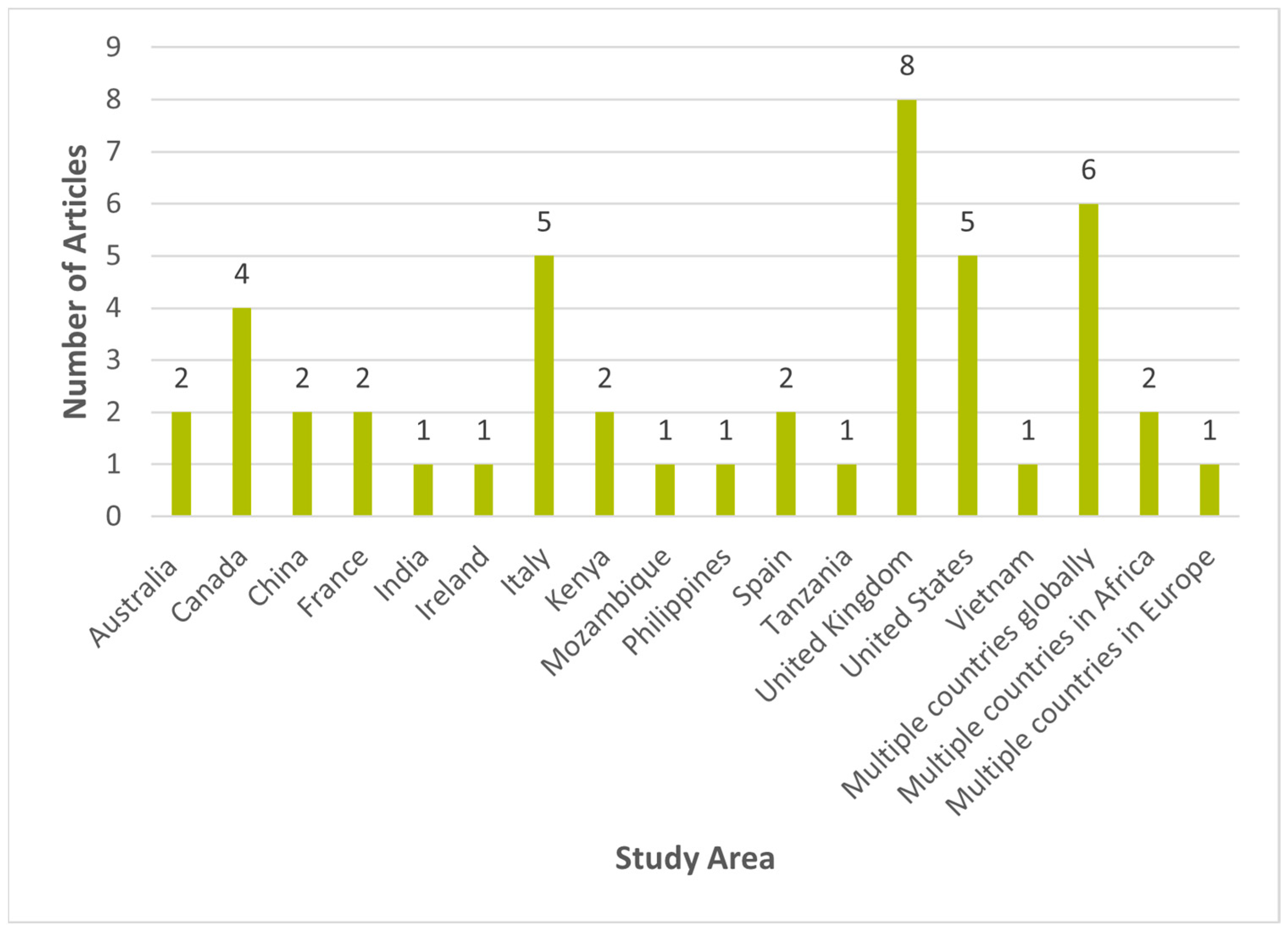

3.1. Descriptive Results

3.2. Thematic Analysis

3.2.1. Definitions of Impact

- “Social impacts include all social and cultural consequences to human populations of any public or private actions that alter the ways in which people live, work, play, relate to one another, organize to meet their needs, and generally cope as members of society” [63] (p. 59) as cited in [21,64,65].

3.2.2. Measurement Models and Tools

- Social Return on Investment (SROI)

- Impact Reporting and Investment Standards (IRIS+)

- Global Reporting Initiative (GRI)

- Social Accounting and Audit (SAA)

3.2.3. Indicators

3.2.4. Measurement Drivers

3.2.5. Challenges to Measurement

3.2.6. Strategies to Improve Measurement Practices

4. Discussion and Conclusions

4.1. Implications for Practitioners

- Engaging stakeholders at every stage of the SIM process, most importantly during planning and particularly when identifying outcome indicators.

- Gaining an understanding of the data that are available, developing a plan for using these data to measure post-intervention social impact, and identifying any data gaps that exist.

- Increasing organizational capacity in the competencies needed to gather, analyze, and manage useful data, or engaging third-party experts.

- Collecting both quantitative and qualitative data, given the relevance of both types when measuring social impact.

- Exploring SROI as an SIM model, or adapting elements of it. A comprehensive guide has been published for practitioners seeking to use the SROI measurement model [80]. As there may not be a “one-size-fits-all” measurement model, practitioners may choose to adapt the SROI model to suit their needs or seek out an alternative model to measure social impact.

- Using the SDGs indicators as they stand or as a starting point for developing their own customized indicators. While comparability and the ability to aggregate data are important, practitioners and stakeholders may require flexibility to customize indicators that represent unique contexts when measuring social impact.

- Ensuring that the indicators selected or developed include both output and outcome indicators.

- The presence of ToC across the literature suggests its relevance in the SIM field. ToC is especially important to practitioners applying the SROI model as it is applied in the early stages of the measurement process.

- Funders and policymakers often have significant influence over whether organizations have the capacity to spend the time and resources needed to design and implement their chosen SIM model effectively. Funders and policymakers who hope to see social impact measured effectively should therefore invest in the capacity of organizations to implement the above recommendations.
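For practitioners exploring SROI, the arithmetic behind the ratio can be sketched in a few lines. The sketch below assumes the general shape of the calculation described in the SROI guide [80]: each outcome is valued with a financial proxy, reduced for deadweight and attribution, tapered by drop-off in later years, discounted to present value, and divided by the investment. The class and function names, and every number in the example, are hypothetical and chosen for illustration only; displacement and sensitivity analysis are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """One valued outcome; all figures are hypothetical inputs."""
    quantity: float        # stakeholders experiencing the outcome
    proxy_value: float     # financial proxy per stakeholder per year
    deadweight: float      # share that would have happened anyway (0 to 1)
    attribution: float     # share attributable to other actors (0 to 1)
    drop_off: float        # yearly decline in the outcome after year 1 (0 to 1)
    duration_years: int    # number of years the outcome lasts


def yearly_impact(outcome: Outcome) -> list[float]:
    """Impact per year after deadweight, attribution, and drop-off."""
    first_year = (
        outcome.quantity
        * outcome.proxy_value
        * (1 - outcome.deadweight)
        * (1 - outcome.attribution)
    )
    # Year 1 carries the full adjusted value; later years decline by drop_off.
    return [first_year * (1 - outcome.drop_off) ** t
            for t in range(outcome.duration_years)]


def sroi_ratio(outcomes: list[Outcome], investment: float,
               discount_rate: float) -> float:
    """Present value of all outcome impacts divided by the investment."""
    present_value = 0.0
    for outcome in outcomes:
        for year, value in enumerate(yearly_impact(outcome), start=1):
            present_value += value / (1 + discount_rate) ** year
    return present_value / investment


if __name__ == "__main__":
    # Hypothetical training programme: 100 participants, proxy of 50 per year.
    training = Outcome(quantity=100, proxy_value=50.0, deadweight=0.2,
                       attribution=0.25, drop_off=0.5, duration_years=2)
    print(sroi_ratio([training], investment=1500.0, discount_rate=0.035))
```

A ratio above 1 would indicate that the estimated social value exceeds the investment. The result is only as reliable as the proxies and adjustment shares, which is where stakeholder engagement and a theory of change inform the inputs.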

4.2. Limitations and Future Research

- The search criteria used in this SLR resulted in the absence of study areas from certain countries and continents. For example, while the articles used in this SLR included study areas from across the globe, countries in Central America and South America were not represented, despite no geographic exclusions being applied to the search criteria. Future research can help to address this gap by including non-English sources in the original article search or by including specific geographic regions as keywords in the string search.

- To source a wide variety of literature from multiple disciplines and geographic locations, the articles included in the screening process for this SLR were sourced from ProQuest and Scopus. While the databases used in this study are comparable to WoS with respect to the number of journals indexed and categories of research covered, future SLRs on SIM should consider increasing the number of document sources to include additional databases like WoS. By including multiple databases in the initial search, future research may capture additional research disciplines or countries, adding to the diversity of the study.

- The keywords searched when sourcing documents and the use of exclusion criteria during the article selection process posed limitations to the breadth of the literature reviewed in this study. For example, this SLR focused on the broader impact of a project or program intervention on society and the environment. Thus, specific areas such as health and well-being impact, which are relevant to social impact, were not included within the keyword search of this study. Impact measurement plays an important role in determining the success of healthcare projects, programs, and organizations [90]. As such, studies in the field of health offer valuable contributions to the academic discussion on SIM and merit being explored through future research.

- By using sub-questions for our thematic analysis, we did not capture certain topics. For example, we did not analyze content related to empirical evidence on the shortcomings of the measurement models used in practice. Further, the thematic analysis of measurement models was based on the models that appeared with the greatest frequency, leading to the less common measurement models not being explored in this study. Future research could address these gaps.

- Despite a large variety of measurement models identified in this study, the literature reviewed did not present a wide selection of indicators commonly used in practice to measure social impact. Which indicators are most applied in practice? Are outcome indicators adequately included in practice? Do organizations select from existing open-source indicators or are indicators developed internally? Are stakeholders engaged in the indicator selection process? To address the gap related to indicators and support those seeking standardization, future research can be carried out to inventory the indicators used in practice.

- While this study identified many different measurement models across the literature, empirical evidence on the level of social impact achieved through different projects and programs was lacking. Given that SROI is the most common model used to measure social impact, is there evidence that it effectively measures the level of progress and achievement of the intervention that is designed to lead to social impact? How have deliberate interventions created social impact? Does social impact at the individual, organization, or community level lead to systemic change [91]? Roy et al. [92] raised similar points when calling for an increase in empirical research to examine the causal relationship between social enterprise activities and improved health and well-being.

- Although theory was not thematically analyzed as part of this study, the descriptive analysis provided some insights into the theoretical framings used across the literature reviewed. Organizational theories were most common, often used to examine the adoption of SIM practices by organization types. Theories that appeared with less frequency warrant further investigation. In particular, the general theory of social impact accounting proposed by Nicholls [93] and cited in Kim Man Lee et al. [55] merits attention, as it incorporates themes important to SIM such as stakeholders, types of data, and materiality. Which theories can be used to better understand SIM? How does SIM differ from financial measurement and organization-centric non-financial measurement?

- The harmonization of sustainability reporting standards is a contemporary topic that can be informed by a maturing SIM field. While this study established the importance of engaging stakeholders throughout the measurement process, the development of future sustainability reporting standards appears to be focused more narrowly on investors’ needs [17]. The emergence of ESG disclosures is an example of investor-focused reporting that may not take a double materiality approach to capture both the risk of sustainability issues on the organization and the organization’s impact on the economy, society, and environment [17,94]. How can the strategies to improve measurement practices identified in this study contribute to the academic discourse on sustainability reporting standards? Are sustainability reporting standards capturing the risk and impact of projects and programs that are material to the organization and stakeholders? How can theory of change and logic models, concepts inherent to SIM, contribute to the integration of double materiality in sustainability reporting standards [15]?

- The terms “evaluation”, “assessment”, and “measurement” are used interchangeably in social impact literature; however, they do not hold equal meaning [3]. Naturally, the scoping of this SLR may have led to the omission of articles on complementary topics from multiple disciplines. How does semantics impact our understanding of a field of research? How can the use of certain terminology hinder a multidisciplinary approach to research? How can studies on carbon accounting [95] and accounting for sustainable development [96] help advance the quality of SIM in practice?

- Given the sheer volume of measurement models that exist and the debate on the possibility of an ideal way to measure social impact [21,97], research gaps remain in the SIM field. While there may not be a “one-size-fits-all” approach to SIM, standardization of measurement models and indicators could provide the ability to calculate collective impact towards transformational goals like the SDGs. Is it possible to develop a deeper understanding of which measurement model is most suitable for an industry, organization, or program by examining the characteristics of organizations that use a specific measurement model? Answering this question will require empirical studies across sectors and organization types, making such studies an important direction for future SIM research.

4.3. Contributions and Concluding Thoughts

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Journal Name | # of Articles | Journal Name | # of Articles |

|---|---|---|---|

| American Journal of Evaluation | 1 | Journal of Rural Studies | 1 |

| Annals for Istrian and Mediterranean Studies | 1 | Journal of Small Business and Enterprise Development | 1 |

| Annals of Global Health | 1 | Journal of Social Entrepreneurship | 2 |

| Annual Review of Environment and Resources | 1 | Journal of Sport Management | 1 |

| Australian Journal of Public Administration | 1 | Journal of the International Society for Southeast Asian Agricultural Sciences | 1 |

| Canadian Journal of Administrative Sciences | 1 | Journal of Urban Affairs | 1 |

| Canadian Journal of Nonprofit and Social Economy Research | 1 | Land | 1 |

| Cities | 1 | Management and Marketing | 1 |

| Community Psychology in Global Perspective | 1 | Marketing Intelligence and Planning | 1 |

| Conservation Biology | 1 | Media and Communication | 1 |

| Cultural Trends | 1 | MethodsX | 1 |

| Development Engineering | 1 | Michigan Journal of Community Service Learning | 1 |

| Development Southern Africa | 1 | Nonprofit and Voluntary Sector Quarterly | 1 |

| Entrepreneurship Theory and Practice | 1 | Public Management Review | 1 |

| Environmental Impact Assessment Review | 2 | Qualitative Research in Accounting and Management | 1 |

| Evaluation and Program Planning | 1 | Quality—Access to Success | 1 |

| Evidence and Policy | 1 | RAUSP Management Journal | 1 |

| Globalization and Health | 1 | Renewable Agriculture and Food Systems | 1 |

| Habitat International | 1 | Research Evaluation | 1 |

| Historical Social Research | 1 | Research in International Business and Finance | 1 |

| IPE Journal of Management | 1 | Science, Technology, and Human Values | 1 |

| Irish Journal of Sociology | 1 | Social and Environmental Accountability Journal | 1 |

| Journal of Business Ethics | 1 | Social Enterprise Journal | 1 |

| Journal of Cleaner Production | 1 | Social Indicators Research | 1 |

| Journal of Cultural Heritage Management and Sustainable Development | 1 | Sustainability | 5 |

| Journal of Environmental Planning and Management | 1 | Sustainability Accounting, Management, and Policy Journal | 1 |

| Journal of Extension | 1 | The Foundation Review | 1 |

| Journal of International Agricultural and Extension Education | 1 | The Journal of Arts Management, Law, and Society | 1 |

| Journal of Librarianship and Information Science | 1 | Total Quality Management and Business Excellence | 1 |

| Journal of Management | 1 | Voluntas | 1 |

| Journal of Public Budgeting, Accounting, and Financial Management | 1 | World Development | 1 |

| # | Measurement Model | # | Measurement Model |

|---|---|---|---|

| 1 | Adaptive social impact management | 35 | Multi-dimensional controlling model |

| 2 | Added value and scalability model | 36 | Multiple-constituencies approach |

| 3 | ASIRPA | 37 | Ongoing assessment of social impacts (OASIS) |

| 4 | Balanced scorecard | 38 | Outcome evaluation approach |

| 5 | Best available charitable option (BACO) | 39 | Payback framework |

| 6 | Business Impact Assessment (BIA) | 40 | Post-project reflection |

| 7 | CFICE—Accounting for impact | 41 | Programme evaluation |

| 8 | Charity analysis framework | 42 | Public action value chain (SGMAP) |

| 9 | Co-axial matrix | 43 | Public value mapping (PVM) |

| 10 | Community capitals framework | 44 | Public value scorecard |

| 11 | Community Impact (CI) Evaluation | 45 | Quality evaluation of creative placemaking |

| 12 | Community impact evaluation (CIE) | 46 | Question-and-evidence matrix |

| 13 | Community score card | 47 | RAPID Outcome Assessment |

| 14 | Composite measure for the social values of sport (CMSVS) | 48 | RCT-based evaluation |

| 15 | Conceptual assessment framework | 49 | REF (H2020) |

| 16 | Contribution analysis | 50 | Ripple effect mapping |

| 17 | Cost benefit analysis (CBA) | 51 | SAFA—Sustainability Assessment of Food and Agriculture Systems |

| 18 | Data-collection tool development | 52 | SHARE IT |

| 19 | Kirkpatrick model | 53 | SIAMPI |

| 20 | Ecological footprint | 54 | SIMPLE |

| 21 | Economic survival framework | 55 | Social accounting and audit (SAA) |

| 22 | European Foundation for Quality Management (EFQM) | 56 | Social enterprise balanced scorecards |

| 23 | Eco-Management and Audit Scheme (EMAS) | 57 | Social footprint measurement |

| 24 | Evaluation grids | 58 | Social impact assessment (SIA) |

| 25 | Evaluative framework | 59 | Social impact measurement model |

| 26 | Framework for participatory impact assessment | 60 | Social performance framework |

| 27 | Fuzzy comprehensive evaluation (FCE) | 61 | Soft outcome universal learning (SOUL) |

| 28 | Global impact investing rating system (GIIRS) | 62 | Social return on investment (SROI) |

| 29 | GPS for social impact | 63 | Structural equation modelling |

| 30 | Global Reporting Initiative (GRI) | 64 | Sustainability quick-check model |

| 31 | Impact reporting and investment standards (IRIS) | 65 | Sustainable balanced scorecard |

| 32 | Impact value chain | 66 | TBL accounting |

| 33 | Logic model development | 67 | The B impact rating system |

| 34 | Measuring impact framework | 68 | VIS |

References

- Arvidson, M.; Lyon, F. Social Impact Measurement and Non-Profit Organisations: Compliance, Resistance, and Promotion. Volunt. Int. J. Volunt. Nonprofit Organ. 2014, 25, 869–886. [Google Scholar] [CrossRef]

- Frantzen, L.; Solomon, J.; Hollod, L. Partner-Centered Evaluation Capacity Building: Findings from a Corporate Social Impact Initiative. Found. Rev. 2018, 10, 7–25. [Google Scholar] [CrossRef]

- Vermeulen, M.; Maas, K. Building Legitimacy and Learning Lessons: A Framework for Cultural Organizations to Manage and Measure the Social Impact of Their Activities. J. Arts Manag. Law Soc. 2020, 51, 97–112. [Google Scholar] [CrossRef]

- Alomoto, W.; Niñerola, A.; Pié, L. Social Impact Assessment: A Systematic Review of Literature. Soc. Indic. Res. 2021, 161, 225–250. [Google Scholar] [CrossRef]

- Sachs, J.; Kroll, C.; Lafortune, G.; Fuller, G.; Woelm, F. Sustainable Development Report 2022; Cambridge University Press: Cambridge, UK, 2022; ISBN 978-1-00-921008-9. [Google Scholar]

- Elauria, M.; Manilay, A.; Abrigo, G.N.A.; Medina, S.M.; Reyes, R.B. Socio-Economic and Environmental Impacts of the Conservation Farming Village Project in Upland Communities of La Libertad, Negros Oriental, Philippines. J. Int. Soc. Southeast Asian Agric. Sci. 2017, 23, 45–56. [Google Scholar]

- Aiello, E.; Donovan, C.; Duque, E.; Fabrizio, S.; Flecha, R.; Holm, P.; Molina, S.; Oliver, E. Effective Strategies That Enhance the Social Impact of Social Sciences and Humanities Research. Evid. Policy A J. Res. Debate Pract. 2021, 17, 131–146. [Google Scholar] [CrossRef]

- Nguyen, L.; Szkudlarek, B.; Seymour, R.G. Social impact measurement in social enterprises: An interdependence perspective. Can. J. Adm. Sci. Rev. Can. Des Sci. De L’administration 2015, 32, 224–237. [Google Scholar] [CrossRef]

- Trautwein, C. Sustainability Impact Assessment of Start-Ups–Key Insights on Relevant Assessment Challenges and Approaches Based on an Inclusive, Systematic Literature Review. J. Clean. Prod. 2021, 281, 125330. [Google Scholar] [CrossRef]

- Fiandrino, S.; Scarpa, F.; Torelli, R. Fostering Social Impact Through Corporate Implementation of the SDGs: Transformative Mechanisms Towards Interconnectedness and Inclusiveness. J. Bus. Ethics 2022, 180, 959–973. [Google Scholar] [CrossRef]

- Phillips, F.; Libby, R.; Libby, P.A.; Mackintosh, B. Fundamentals of Financial Accounting, 5th Canadian ed.; McGra-Hill Ryerson Limited: Whitby, ON, Canada, 2018. [Google Scholar]

- Uyar, A. Evolution of Corporate Reporting and Emerging Trends. J. Corp. Account. Financ. 2016, 27, 27–30. [Google Scholar] [CrossRef]

- Elkington, J. Cannibals with Forks: The Triple Bottom Line of 21st Century Business; Conscientious Commerce; New Society Publishers: Gabriola Island, BC, Canada; Stony Creek, CT, USA, 1999; ISBN 978-0-86571-392-5. [Google Scholar]

- Laine, M.; Tregidga, H.; Unerman, J. Sustainability Accounting and Accountability, 3rd ed.; Routledge: New York, NY, USA, 2021; ISBN 978-1-03-202880-4. [Google Scholar]

- Ng, A.; Yorke, S.; Nathwani, J. Enforcing Double Materiality in Global Sustainability Reporting for Developing Economies: Reflection on Ghana’s Oil Exploration and Mining Sectors. Sustainability 2022, 14, 9988. [Google Scholar] [CrossRef]

- Rawhouser, H.; Cummings, M.; Newbert, S.L. Social Impact Measurement: Current Approaches and Future Directions for Social Entrepreneurship Research. Entrep. Theory Pract. 2019, 43, 82–115. [Google Scholar] [CrossRef]

- Adams, C.A.; Abhayawansa, S. Connecting the COVID-19 Pandemic, Environmental, Social and Governance (ESG) Investing and Calls for ‘Harmonisation’ of Sustainability Reporting. Crit. Perspect. Account. 2022, 82, 102309. [Google Scholar] [CrossRef]

- Hadad, S.; Gauca, O. (Drumea) Social Impact Measurement in Social Entrepreneurial Organizations. Manag. Mark. 2014, 9, 119–136. [Google Scholar]

- Maas, K.; Liket, K. Social Impact Measurement: Classification of Methods. In Environmental Management Accounting and Supply Chain Management; Burritt, R., Schaltegger, S., Bennett, M., Pohjola, T., Csutora, M., Eds.; Eco-Efficiency in Industry and Science; Springer Netherlands: Dordrecht, The Netherlands, 2011; pp. 171–202. ISBN 978-94-007-1390-1. [Google Scholar]

- Costa, E.; Pesci, C. Social Impact Measurement: Why Do Stakeholders Matter? Sustain. Account. Manag. Policy J. 2016, 7, 99–124. [Google Scholar] [CrossRef]

- Kah, S.; Akenroye, T. Evaluation of Social Impact Measurement Tools and Techniques: A Systematic Review of the Literature. Soc. Enterp. J. 2020, 16, 381–402. [Google Scholar] [CrossRef]

- McLoughlin, J.; Kaminski, J.; Sodagar, B.; Khan, S.; Harris, R.; Arnaudo, G.; Brearty, S.M. A Strategic Approach to Social Impact Measurement of Social Enterprises: The SIMPLE Methodology. Soc. Enterp. J. 2009, 5, 154–178. [Google Scholar] [CrossRef]

- Gallou, E.; Fouseki, K. Applying Social Impact Assessment (SIA) Principles in Assessing Contribution of Cultural Heritage to Social Sustainability in Rural Landscapes. J. Cult. Herit. Manag. Sustain. Dev. 2019, 9, 352–375. [Google Scholar] [CrossRef]

- Grieco, C.; Michelini, L.; Iasevoli, G. Measuring Value Creation in Social Enterprises: A Cluster Analysis of Social Impact Assessment Models. Nonprofit Volunt. Sect. Q. 2015, 44, 1173–1193. [Google Scholar] [CrossRef]

- Taylor, A.; Taylor, M. Factors Influencing Effective Implementation of Performance Measurement Systems in Small and Medium-Sized Enterprises and Large Firms: A Perspective from Contingency Theory. Int. J. Prod. Res. 2014, 52, 847–866. [Google Scholar] [CrossRef]

- Mura, M.; Longo, M.; Micheli, P.; Bolzani, D. The Evolution of Sustainability Measurement Research. Int. J. Manag. Rev. 2018, 20, 661–695. [Google Scholar] [CrossRef]

- Vo, A.T.; Christie, C.A. Where Impact Measurement Meets Evaluation: Tensions, Challenges, and Opportunities. Am. J. Eval. 2018, 39, 383–388. [Google Scholar] [CrossRef]

- Corvo, L.; Pastore, L.; Manti, A.; Iannaci, D. Mapping Social Impact Assessment Models: A Literature Overview for a Future Research Agenda. Sustainability 2021, 13, 4750. [Google Scholar] [CrossRef]

- Jackson, A.; McManus, R. SROI in the Art Gallery; Valuing Social Impact. Cult. Trends 2019, 28, 2–29. [Google Scholar] [CrossRef]

- Lombardo, G.; Mazzocchetti, A.; Rapallo, I.; Tayser, N.; Cincotti, S. Assessment of the Economic and Social Impact Using SROI: An Application to Sport Companies. Sustainability 2019, 11, 3612. [Google Scholar] [CrossRef]

- Joly, P.-B.; Gaunand, A.; Colinet, L.; Larédo, P.; Lemarié, S.; Matt, M. ASIRPA: A Comprehensive Theory-Based Approach to Assessing the Societal Impacts of a Research Organization. Res. Eval. 2015, 24, 440–453. [Google Scholar] [CrossRef]

- Ruff, K.; Olsen, S. The Need for Analysts in Social Impact Measurement: How Evaluators Can Help. Am. J. Eval. 2018, 39, 402–407. [Google Scholar] [CrossRef]

- Nigri, G.; Michelini, L. A Systematic Literature Review on Social Impact Assessment: Outlining Main Dimensions and Future Research Lines. In International Dimensions of Sustainable Management: Latest Perspectives from Corporate Governance, Responsible Finance and CSR; Schmidpeter, R., Capaldi, N., Idowu, S.O., Stürenberg Herrera, A., Eds.; CSR, Sustainability, Ethics & Governance; Springer International Publishing: Cham, Switzerland, 2019; pp. 53–67. ISBN 978-3-030-04819-8. [Google Scholar]

- Fink, A. Conducting Research Literature Reviews: From the Internet to Paper, 2nd ed.; Sage Publications: Thousand Oaks, CA, USA, 2005; ISBN 978-1-4129-0904-4. [Google Scholar]

- Parris, D.L.; Peachey, J.W. A Systematic Literature Review of Servant Leadership Theory in Organizational Contexts. J. Bus. Ethics 2013, 113, 377–393. [Google Scholar] [CrossRef]

- Popay, J.; Roberts, H.; Sowden, A.; Petticrew, M.; Arai, L.; Rodgers, M.; Britten, N.; Roen, K.; Duffy, S. Guidance on the Conduct of Narrative Synthesis in Systematic Reviews. ESRC Methods Programme 2006, 15, 47–71. [Google Scholar]

- Elsevier Search-How Scopus Works. Available online: http://www.elsevier.com/solutions/scopus/how-scopus-works/search (accessed on 19 April 2022).

- ProQuest Advanced Search-ProQuest. Available online: http://www.proquest.com/advanced?accountid=14906 (accessed on 19 April 2022).

- Elsevier Content-How Scopus Works. Available online: http://www.elsevier.com/solutions/scopus/how-scopus-works/content (accessed on 19 April 2022).

- Gusenbauer, M.; Haddaway, N. Which Academic Search Systems Are Suitable for Systematic Seviews or Meta-analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed and 26 Other Resources. Res. Synth. Methods 2020, 11, 181–217. [Google Scholar] [CrossRef]

- Searcy, C. Corporate Sustainability Performance Measurement Systems: A Review and Research Agenda. J. Bus. Ethics 2012, 107, 239–253. [Google Scholar] [CrossRef]

- Covidence Covidence—Better Systematic Review Management. Available online: https://www.covidence.org/ (accessed on 19 April 2022).

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; Group, T.P. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, e1000097. [Google Scholar] [CrossRef] [PubMed]

- Xiao, Y.; Watson, M. Guidance on Conducting a Systematic Literature Review. J. Plan. Educ. Res. 2017, 39, 93–112. [Google Scholar] [CrossRef]

- Gioia, D.A.; Corley, K.G.; Hamilton, A.L. Seeking Qualitative Rigor in Inductive Research: Notes on the Gioia Methodology. Organ. Res. Methods 2013, 16, 15–31. [Google Scholar] [CrossRef]

- Barraket, J.; Yousefpour, N. Evaluation and Social Impact Measurement amongst Small to Medium Social Enterprises: Process, Purpose and Value. Aust. J. Public Adm. 2013, 72, 447–458. [Google Scholar] [CrossRef]

- Costa, E.; Andreaus, M. Social Impact and Performance Measurement Systems in an Italian Social Enterprise: A Participatory Action Research Project. J. Public Budg. Account. Financ. Manag. 2020, 33, 289–313. [Google Scholar] [CrossRef]

- Liston-Heyes, C.; Liu, G. To Measure or Not to Measure? An Empirical Investigation of Social Impact Measurement in UK Social Enterprises. Public Manag. Rev. 2021, 23, 687–709. [Google Scholar] [CrossRef]

- Caselli, D. Did You Say “Social Impact”? Welfare Transformations, Networks of Expertise, and the Financialization of Italian Welfare. Hist. Soc. Res. Hist. Sozialforschung 2020, 45, 140–160. [Google Scholar]

- Phillips, S.D.; Johnson, B. Inching to Impact: The Demand Side of Social Impact Investing. J. Bus. Ethics 2021, 168, 615–629. [Google Scholar] [CrossRef]

- Tsotsotso, K. Is Programme Evaluation the Same as Social Impact Measurement? J. Soc. Entrep. 2021, 12, 155–174. [Google Scholar] [CrossRef]

- Tse, A.E.; Warner, M.E. The Razor’s Edge: Social Impact Bonds and the Financialization of Early Childhood Services. J. Urban Aff. 2020, 42, 816–832. [Google Scholar] [CrossRef]

- Williams, J.W. “Let’s Not Have the Perfect Be the Enemy of the Good”: Social Impact Bonds, Randomized Controlled Trials, and the Valuation of Social Programs. Sci. Technol. Hum. Values 2021, 48, 91–114. [Google Scholar] [CrossRef] [PubMed]

- Belcher, B.M.; Davel, R.; Claus, R. A Refined Method for Theory-Based Evaluation of the Societal Impacts of Research. MethodsX 2020, 7, 100788. [Google Scholar] [CrossRef] [PubMed]

- Kim Man Lee, E.; Ho, L.; Kee, C.H.; Kwan, C.H.; Ng, C.H. Social Impact Measurement in Incremental Social Innovation. J. Soc. Entrep. 2021, 12, 69–86. [Google Scholar] [CrossRef]

- Hughes, K.; Morgan, S.; Baylis, K.; Oduol, J.; Smith-Dumont, E.; Vågen, T.-G.; Kegode, H. Assessing the Downstream Socioeconomic Impacts of Agroforestry in Kenya. World Dev. 2020, 128, 104835. [Google Scholar] [CrossRef]

- Bottero, M.; Bragaglia, F.; Caruso, N.; Datola, G.; Dell’Anna, F. Experimenting Community Impact Evaluation (CIE) for Assessing Urban Regeneration Programmes: The Case Study of the Area 22@ Barcelona. Cities 2020, 99, 102464. [Google Scholar] [CrossRef]

- Wilson, J.; Tyedmers, P.; Grant, J. Measuring Environmental Impact at the Neighbourhood Level. J. Environ. Plan. Manag. 2013, 56, 42–60. [Google Scholar] [CrossRef]

- Njah, J.; Hansoti, B.; Adeyami, A.; Bruce, K.; O’Malley, G.; Gugerty, M.K.; Chi, B.H.; Lubimbi, N.; Steen, E.; Stampfly, S.; et al. Measuring for Success: Evaluating Leadership Training Programs for Sustainable Impact. Ann. Glob. Health 2021, 87, 63. [Google Scholar] [CrossRef]

- Ricciuti, E.; Bufali, M.V. The Health and Social Impact of Blood Donors Associations: A Social Return on Investment (SROI) Analysis. Eval. Program Plan. 2019, 73, 204–213. [Google Scholar] [CrossRef]

- Lee, S.; Cornwell, T.; Babiak, K. Developing an Instrument to Measure the Social Impact of Sport: Social Capital, Collective Identities, Health Literacy, Well-Being and Human Capital. J. Sport Manag. 2013, 27, 24–42. [Google Scholar] [CrossRef]

- Kanter, R.M.; Brinkerhoff, D. Organizational Performance: Recent Developments in Measurement. Annu. Rev. Sociol. 1981, 7, 321–349. [Google Scholar] [CrossRef]

- Burdge, R.J.; Vanclay, F. Social Impact Assessment: A Contribution to the State of the Art Series. Impact Assess. 1996, 14, 59–86. [Google Scholar] [CrossRef]

- Dufour, B. Social Impact Measurement: What Can Impact Investment Practices and the Policy Evaluation Paradigm Learn from Each Other? Res. Int. Bus. Financ. 2019, 47, 18–30. [Google Scholar] [CrossRef]

- Pawluczuk, A.; Hall, H.; Webster, G.; Smith, C. Youth Digital Participation: Measuring Social Impact. J. Librariansh. Inf. Sci. 2020, 52, 3–15. [Google Scholar] [CrossRef]

- Clark, C.; Rosenzweig, W.; Long, D.; Olsen, S. Double Bottom Line Project Report: Assessing Social Impact in Double Bottom Line Ventures; University of California: Berkeley, CA, USA, 2004; p. 70. [Google Scholar]

- Cerioni, E.; Marasca, S. The Methods of Social Impact Assessment: The State of the Art and Limits of Application. Calitatea 2021, 22, 29–36. [Google Scholar]

- Ebrahim, A.; Rangan, V. The Limits of Nonprofit Impact: A Contingency Framework for Measuring Social Performance. Harv. Bus. Sch. Harv. Bus. Sch. Work. Pap. 2010, 1–53. [Google Scholar] [CrossRef]

- World Commission on Environment and Development. Our Common Future; Oxford Paperbacks; Oxford University Press: Oxford, UK; New York, NY, USA, 1987; ISBN 978-0-19-282080-8. [Google Scholar]

- Ferraro, P.J.; Hanauer, M.M. Advances in Measuring the Environmental and Social Impacts of Environmental Programs. Annu. Rev. Environ. Resour. 2014, 39, 495–517. [Google Scholar] [CrossRef]

- Jones, A.; Valero-Silva, N. Social Impact Measurement in Social Housing: A Theory-Based Investigation into the Context, Mechanisms and Outcomes of Implementation. Qual. Res. Account. Manag. 2021, 18, 361–389. [Google Scholar] [CrossRef]

- Stiglitz, J.; Sen, A.; Fitoussi, J. Report of the Commission on the Measurement of Economic Performance and Social Progress (CMEPSP); Government of France: Paris, France, 2009. [Google Scholar]

- Armstrong, A.G.; Mattson, C.A.; Lewis, R.S. Factors Leading to Sustainable Social Impact on the Affected Communities of Engineering Service Learning Projects. Dev. Eng. 2021, 6, 100066. [Google Scholar] [CrossRef]

- Meringolo, P.; Volpi, C.; Chiodini, M. Community Impact Evaluation. Telling a Stronger Story. Community Psychol. Glob. Perspect. 2019, 5, 85–106. [Google Scholar] [CrossRef]

- Social Value UK SROI Guide-Guidance, Standards and Framework. Available online: https://socialvalueuk.org/resources/sroi-guide-impact-map-worked-example/ (accessed on 31 May 2022).

- Global Impact Investing Network (GIIN) Welcome to IRIS+ System | the Generally Accepted System for Impact Investors to Measure, Manage, and Optimize Their Impact. Available online: https://iris.thegiin.org/ (accessed on 31 May 2022).

- Global Reporting Initiative (GRI) GRI–Home. Available online: https://www.globalreporting.org/ (accessed on 31 May 2022).

- Dey, C.; Gibbon, J. Moving on from Scaling up: Further Progress in Developing Social Impact Measurement in the Third Sector. Soc. Environ. Account. J. 2017, 37, 66–72.

- Mulloth, B.; Rumi, S. Challenges to Measuring Social Value Creation through Social Impact Assessments: The Case of RVA Works. J. Small Bus. Enterp. Dev. 2021, 29, 528–549.

- Nicholls, J.; Lawlor, E.; Neitzert, E.; Goodspeed, T. A Guide to Social Return on Investment; Cabinet Office: London, UK, 2012.

- Lazzarini, S.G. The Measurement of Social Impact and Opportunities for Research in Business Administration. RAUSP Manag. J. 2018, 53, 134–137.

- Calvo-Mora, A.; Domínguez-CC, M.; Criado, F. Assessment and Improvement of Organisational Social Impact through the EFQM Excellence Model. Total Qual. Manag. Bus. Excell. 2018, 29, 1259–1278.

- Solórzano-García, M.; Navío-Marco, J.; Ruiz-Gómez, L.M. Ambiguity in the Attribution of Social Impact: A Study of the Difficulties of Calculating Filter Coefficients in the SROI Method. Sustainability 2019, 11, 386.

- O’Byrne, D.; Mechiche-Alami, A.; Tengberg, A.; Olsson, L. The Social Impacts of Sustainable Land Management in Great Green Wall Countries: An Evaluative Framework Based on the Capability Approach. Land 2022, 11, 352.

- Barnett, M.L.; Henriques, I.; Husted, B.W. Beyond Good Intentions: Designing CSR Initiatives for Greater Social Impact. J. Manag. 2020, 46, 937–964.

- Harrington, R.; Good, T.; O’Neil, K.; Grant, S.; Maass, S.; Vettern, R.; McGlaughlin, P. Value of Assessing Personal, Organizational, and Community Impacts of Extension Volunteer Programs. J. Ext. 2021, 59, 6.

- Mackenzie, S.G.; Davies, A.R. SHARE IT: Co-Designing a Sustainability Impact Assessment Framework for Urban Food Sharing Initiatives. Environ. Impact Assess. Rev. 2019, 79, 106300.

- Pawluczuk, A.; Webster, G.; Smith, C.; Hall, H. The Social Impact of Digital Youth Work: What Are We Looking For? Media Commun. 2019, 7, 59–68.

- Polonsky, M.J.; Landreth Grau, S.; McDonald, S. Perspectives on Social Impact Measurement and Non-Profit Organisations. Mark. Intell. Plan. 2016, 34, 80–98.

- Caló, F.; Teasdale, S.; Donaldson, C.; Roy, M.J.; Baglioni, S. Collaborator or Competitor: Assessing the Evidence Supporting the Role of Social Enterprise in Health and Social Care. Public Manag. Rev. 2018, 20, 1790–1814.

- Clarke, A.; Crane, A. Cross-Sector Partnerships for Systemic Change: Systematized Literature Review and Agenda for Further Research. J. Bus. Ethics 2018, 150, 303–313.

- Roy, M.J.; Donaldson, C.; Baker, R.; Kerr, S. The Potential of Social Enterprise to Enhance Health and Well-Being: A Model and Systematic Review. Soc. Sci. Med. 2014, 123, 182–193.

- Nicholls, A. A General Theory of Social Impact Accounting: Materiality, Uncertainty and Empowerment. J. Soc. Entrep. 2018, 9, 132–153.

- Cho, C.H.; Bohr, K.; Choi, T.J.; Partridge, K.; Shah, J.M.; Swierszcz, A. Advancing Sustainability Reporting in Canada: 2019 Report on Progress. Account. Perspect. 2020, 19, 181–204.

- Marlowe, J.; Clarke, A. Carbon Accounting: A Systematic Literature Review and Directions for Future Research. Green Financ. 2022, 4, 71–87.

- Bebbington, J.; Unerman, J. Achieving the United Nations Sustainable Development Goals: An Enabling Role for Accounting Research. Account. Audit. Account. J. 2018, 31, 2–24.

- Caló, F.; Roy, M.J.; Donaldson, C.; Teasdale, S.; Baglioni, S. Evidencing the Contribution of Social Enterprise to Health and Social Care: Approaches and Considerations. Soc. Enterp. J. 2021, 17, 140–155.

| Measurement Model | Data Collection Method | Measurement Tools | Sector | Application Scale | Grieco et al. [24] Cluster | Corvo et al. [28] Cluster | Number of Articles Mentioning the Model |
|---|---|---|---|---|---|---|---|
| Social return on investment (SROI) [75] | Interviews, questionnaires | Outcome indicators are developed by the assessor and informed by stakeholders | Multiple sectors | Project, program, organization | Simple Social Quantitative | Monetization | 24 |
| Impact Reporting and Investment Standards (IRIS+) [76] | Not specified | A catalog of output and outcome metrics is provided by IRIS+ | Impact investing focus; available to multiple sectors | Organization | Holistic Complex | Performance/management and sustainability | 7 |
| Global Reporting Initiative (GRI) [77] | Not specified | Disclosures are provided by GRI. Output indicators are included within some of the disclosures. | Multiple sectors | Organization | Holistic Complex | Performance/management and sustainability | 6 |
| Social accounting and audit (SAA) [18] | Interviews, questionnaires, focus groups | Output and outcome indicators are developed by the organization and informed by stakeholders | Multiple sectors | Project, program, organization | Holistic Complex | Auditing/quality | 5 |

| Drivers | SIM Supports the Practitioner in the Following |
|---|---|
| Internal: Improve organizational performance/continuous improvement | Achieving the sustainable development goals (SDGs) [4] and other social impact objectives [83]. |
| | Improving understanding of how to allocate resources [24]. |
| | Developing internal learning tools [8] that can inform future projects [84] and support decision making [6]. |
| | Identifying upscaling opportunities and improving cost-effectiveness of inputs and activities [3]. |
| | Contributing to an organization’s strategy [67]. |
| | Increasing staff morale [1]. |
| External: Demand from funders and investors | Supporting policymakers seeking to invest in social impact [49]. |
| | Promoting evidence-based reporting to demonstrate the value created for communities [8]. |
| | Assessing sustainability performance to invest purposefully [9]. |
| | Generating social impact data for grant applications and providing evidence of impact [1], including to meet compliance demands [46]. |
| | Having competitive advantage when seeking contracts [1] and funding [20]. |
| | Demonstrating the impact of corporate social responsibility (CSR) initiatives funded by an organization [85]. |
| External: Pressure from other stakeholders | Supporting decision making [4] with relevant data [86]. |
| | Challenging traditional economic thinking and political decision making [9]. |
| | Legitimizing activities by gaining insights into organizational performance [3]. |
| | Demonstrating the societal impact of activities [7]. |
| | Demonstrating the results of strategic efforts [1]. |
| | Increasing transparency, accountability, and legitimacy [8,21]. |
| | Enhancing promotional efforts [1]. |
| | Complying with legislation [67]. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Feor, L.; Clarke, A.; Dougherty, I. Social Impact Measurement: A Systematic Literature Review and Future Research Directions. World 2023, 4, 816–837. https://doi.org/10.3390/world4040051
