Mask Inflation Encoder and Quasi-Dynamic Thresholding Outlier Detection in Cellular Networks

Abstract

1. Introduction

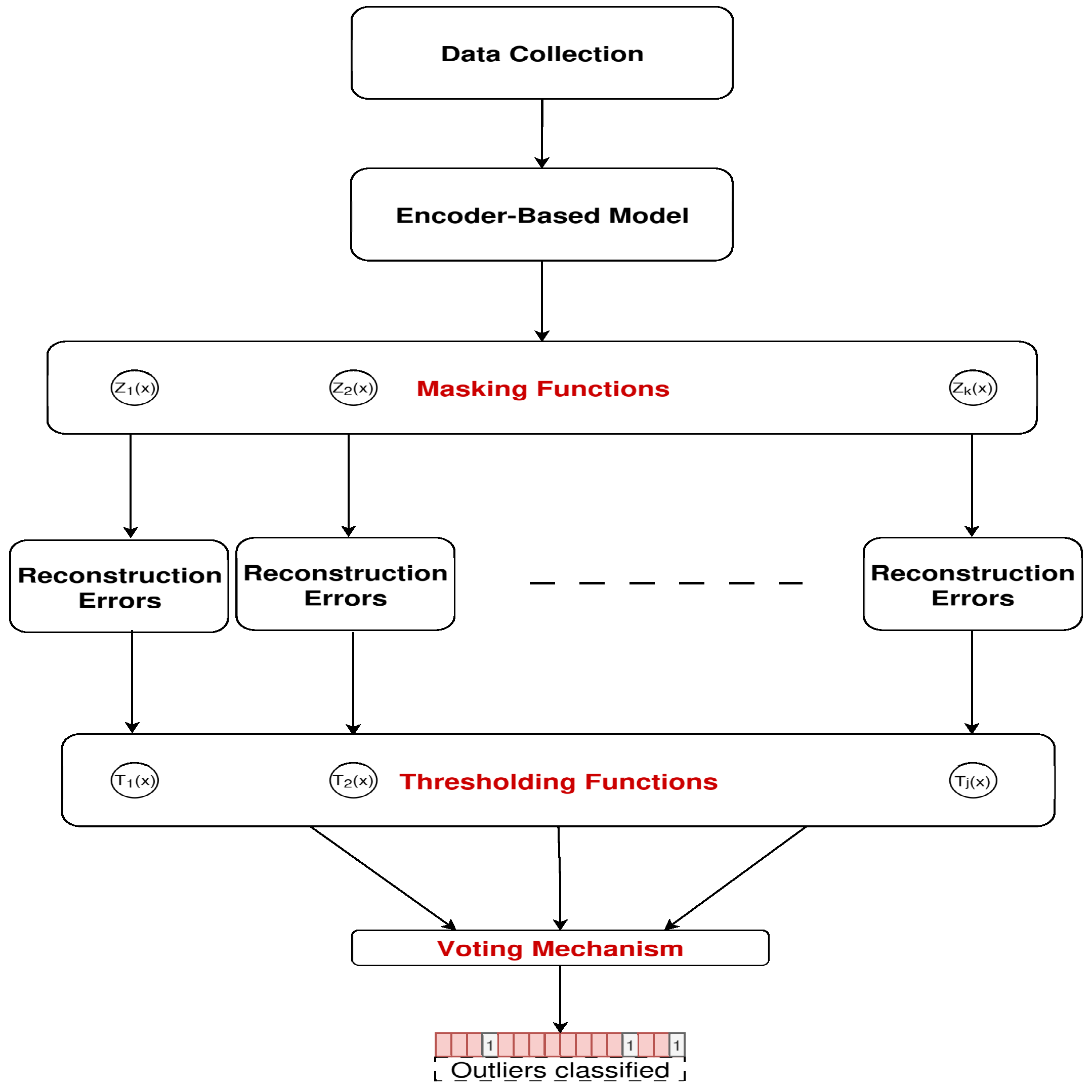

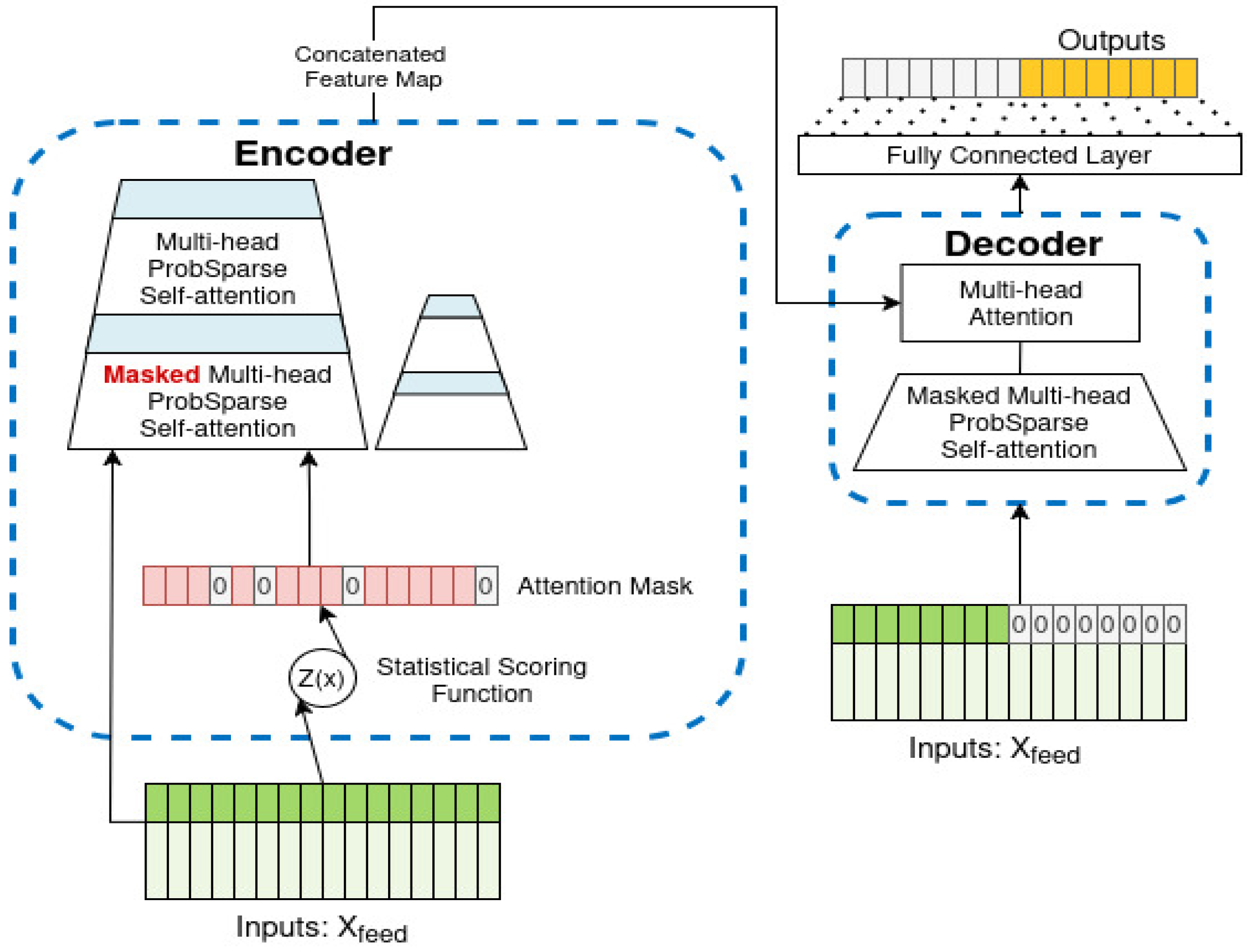

- An encoder mask inflation mechanism for Informer, an AE model, which biases the encoder’s attention towards likely abnormal observations by generating an early anomaly score based on statistical normality. This mechanism is combined with quasi-dynamic thresholding based on well-established statistical techniques (Z-score, Chebyshev’s inequality, and MAD) for outlier detection (OD) in mobile networks. The approach assumes that anomalies produce higher reconstruction errors and that anomalies are rarer than normal observations, which results in a right-skewed error distribution.

- The proposed model uses the Z-score, Chebyshev, and MAD methods to define the outlier thresholds and introduces a voting scheme that flags anomalies based on agreement between the best combinations of encoder-masking and thresholding approaches. This novel solution is applied to the Milano HTA dataset and achieves a 31% improvement in the area under the receiver operating characteristic curve (AUROC), with lower memory requirements and similar computational complexity, compared to relevant OD methods.
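The thresholding and voting ideas in the two contributions above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the multiplier `k = 3`, the tail probability `p = 0.05`, the consistency constant 1.4826, and the simple majority rule (`min_votes = 2`) are all assumptions chosen for illustration. Each function derives an upper threshold from the reconstruction errors themselves, and a point is flagged when enough thresholds agree.

```python
import math
import statistics


def zscore_threshold(errors, k=3.0):
    """Mean plus k population standard deviations (k is an assumed value)."""
    mu = statistics.fmean(errors)
    sigma = statistics.pstdev(errors)
    return mu + k * sigma


def chebyshev_threshold(errors, p=0.05):
    """Threshold from Chebyshev's inequality, P(|X - mu| >= k*sigma) <= 1/k**2.

    Setting k = 1/sqrt(p) bounds the tail probability by p without
    assuming any particular distribution (p is an assumed value).
    """
    mu = statistics.fmean(errors)
    sigma = statistics.pstdev(errors)
    k = 1.0 / math.sqrt(p)
    return mu + k * sigma


def mad_threshold(errors, k=3.0):
    """Median plus k scaled MADs.

    The factor 1.4826 makes the MAD comparable to a standard deviation
    for Gaussian data; both it and k are assumptions of this sketch.
    """
    med = statistics.median(errors)
    mad = statistics.median(abs(e - med) for e in errors)
    return med + k * 1.4826 * mad


def vote(errors, min_votes=2):
    """Flag a point when at least min_votes thresholds are exceeded."""
    thresholds = [
        zscore_threshold(errors),
        chebyshev_threshold(errors),
        mad_threshold(errors),
    ]
    return [sum(e > t for t in thresholds) >= min_votes for e in errors]


# Hypothetical reconstruction errors: 40 small values and one large one.
errors = [0.10, 0.12, 0.11, 0.09] * 10 + [5.0]
flags = vote(errors)
print(sum(flags), flags[-1])
```

In this toy example only the last, much larger error is flagged. Because the mean and standard deviation are themselves inflated by large errors, the Z-score and Chebyshev thresholds tend to be less sensitive than the MAD threshold on contaminated data, which is one reason a vote across methods can be useful.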

2. Related Works

| Article | Scope | Advantages | Limitations | Contribution |
|---|---|---|---|---|
| [3,9] | NN with clustering/GMM with DBSCAN for CDR. | Interpretable statistical models. | Manual removal of anomalies; static thresholding. | Fully unsupervised and adaptive pipeline. |
| [6,7] | CNN/CNN-AE for OD. | High accuracy; works with unlabeled data. | No temporal modeling; static threshold. | Temporal modeling and adaptive thresholding are added. |
| [8] | GenAD for WBS time series. | Scalable; dual-level thresholds; light fine-tuning. | Relies only on masking; lacks structural variation. | Introduces parametric and structure-aware masking. |
| [10] | LSTM-based OD in IoT. | Models local/global temporal context. | High computational complexity; static threshold. | Encoder masking for lighter models and adaptive thresholding. |
| [11,12] | Transformer AE with masking. | Captures complex patterns; temporal masking. | Static thresholds; info loss risk. | Inflated masking and quasi-dynamic thresholding. |
| [13] | GScore for out-of-distribution (OOD) detection. | Unsupervised scoring. | Low efficiency for multi-modal data. | Uses a distribution-free model and statistical thresholding. |
| [14,15] | ARIMA forecasting for CDR/data from smart homes. | Simple and interpretable method. | Requires retraining to account for concept drift. | Adaptive thresholding. |

3. Encoder Attention Mask Inflation and Quasi-Dynamic Thresholding

3.1. Chebyshev Inequality Theorem

3.2. Standard Score (Z-Score)

3.3. Median Absolute Deviation

3.4. Encoder Inflated Masking

3.5. The Quasi-Dynamic Threshold Mechanism

3.6. Outlier Voting Mechanism

3.7. Computational Complexity

4. Data Source and Preprocessing

- For each of these interactions, a unique identifier is created by the system to trace the end-to-end activity. The communication is initiated by the UE and sent to a base transceiver station (BTS), which connects to the core network.

- For voice calls and text messages, the traffic is handled by the mobile switching center (MSC).

- For Internet usage, data packets are processed through packet-switched gateways, which assign an Internet Protocol (IP) address to the UE to help track activity and location (region, country), establish a session ID, and connect the UE to external data networks. Internet usage is recorded as the volume of data used during a session.

4.1. Data Preprocessing

- The GridID variable was treated as a categorical variable, although its values are numerical.

- Timestamp contains the time blocks in which the data were recorded. A total of 8920 time blocks of 10 min each are formed.

- The Destination variable is categorical with two values, Local and International.

- SmsIn, SmsOut, CallIn, CallOut, and Internet are the variables of interest used to detect outliers; all of them contain continuous values.

4.2. Dataset Distribution

5. Challenges for Algorithm Implementation

6. Experimental Results and Discussions

- Masking indicates the masking mechanism applied to the Informer model, with “None” denoting that no masking was used and therefore representing the base model. Both “MAD” and “Chebyshev” denote the proposed masking methods.

- Threshold indicates the thresholding mechanism, where “Standard” denotes the commonly used 95% threshold, which is compared with the Chebyshev, Box–Cox, and MAD thresholds.

- Precision indicates how many of the data points flagged as outliers are actually outliers.

- Accuracy indicates how often the model correctly classifies normal data and outliers.

- Recall represents the model’s sensitivity in detecting outliers, i.e., how many of the outliers were detected among all known outliers.

- F1-score is the harmonic mean of precision and recall, which is a measure of how balanced the model is at classifying both normal data and outliers.

- AUROC measures the ability of the model to discriminate between normal data and outliers.
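The metrics listed above can be made concrete with a short Python sketch that treats outliers as the positive class. The function names and the toy labels and scores are hypothetical and only illustrate the definitions; the AUROC is computed here by its rank interpretation (the probability that a randomly chosen outlier receives a higher score than a randomly chosen normal point), which is one of several equivalent ways to obtain it.

```python
def classification_metrics(y_true, y_pred):
    """Precision, accuracy, recall, and F1 with outliers (label 1) as positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    accuracy = (tp + tn) / len(y_true)
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"precision": precision, "accuracy": accuracy,
            "recall": recall, "f1": f1}


def auroc(y_true, scores):
    """Fraction of (outlier, normal) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical example: 2 known outliers among 8 observations.
y_true = [1, 0, 0, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 0]          # labels after thresholding
scores = [0.9, 0.8, 0.1, 0.3, 0.2, 0.1, 0.05, 0.4]  # e.g. reconstruction errors

print(classification_metrics(y_true, y_pred))
print(auroc(y_true, scores))
```

Because outliers are rare, accuracy can remain high even when precision and recall are low, which is why the threshold-independent AUROC is reported alongside the other metrics.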

6.1. Computational Complexity Comparison

6.2. Comparison of Classification Performance for OD

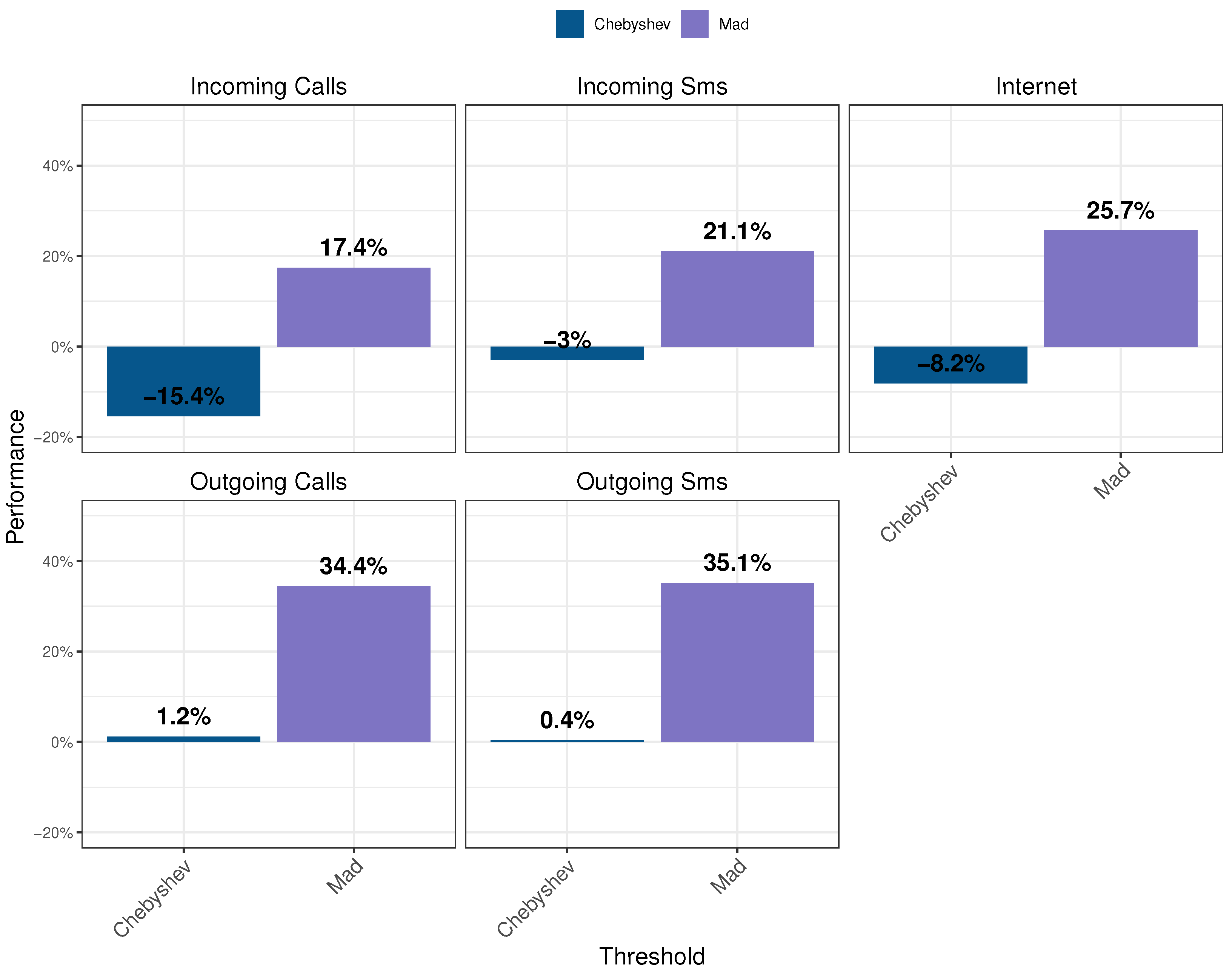

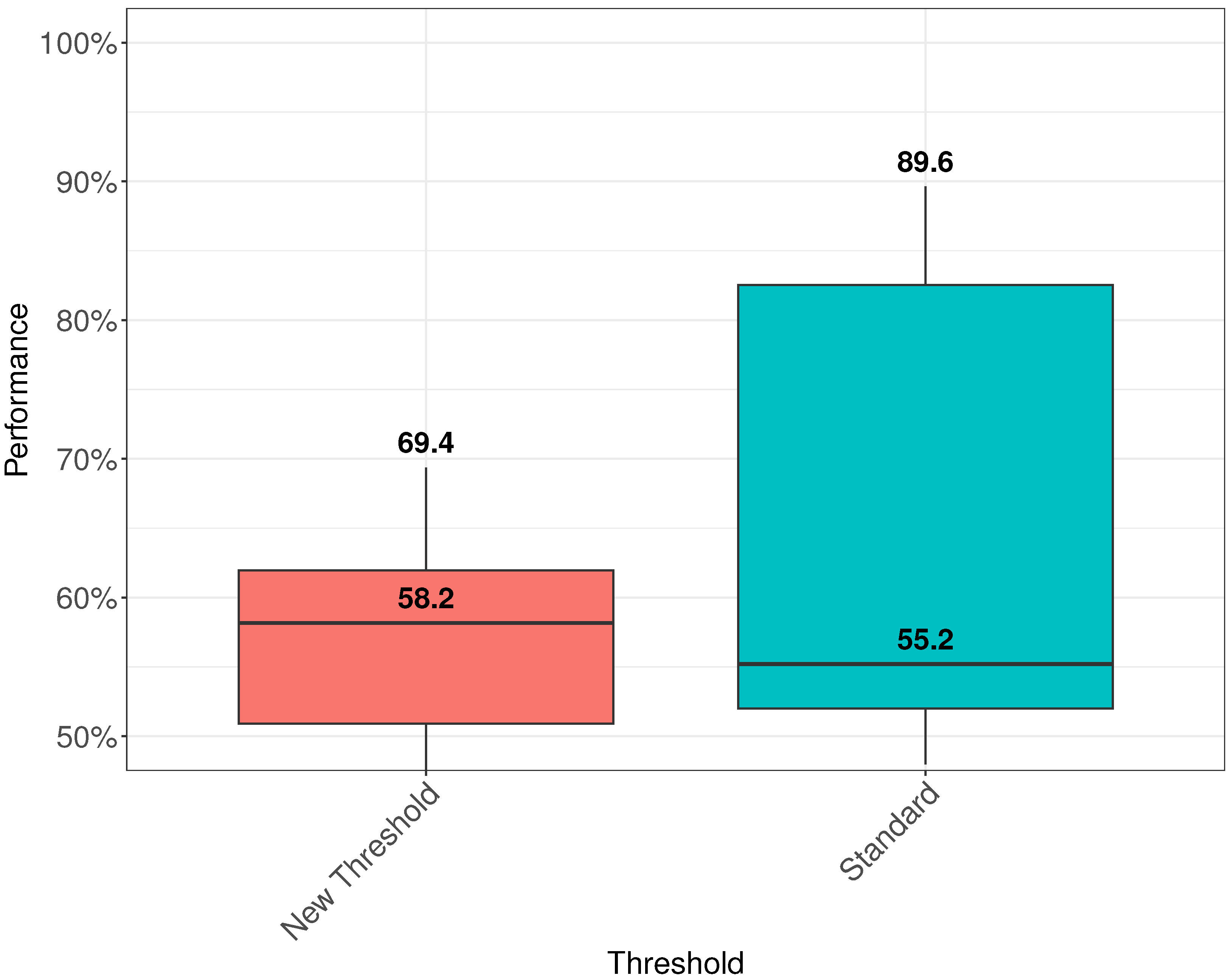

6.3. Influence of Masking and Thresholding on the Performance

Regression Analysis of the Influence of Masking and Thresholding

7. Conclusions

- Encoder masking improves the detection accuracy of the baseline Informer without degrading the model’s computational complexity or memory requirements. The training data processing speed of the modified Informer is comparable to that of the LSTM-AE and SARIMA models, while it incorporates the knowledge provided by the larger number of data features and offers significantly higher memory efficiency. Consequently, the modified Informer is suitable for implementing OD functionality in the near-real-time RIC. As observed by the authors of [20], such an implementation is viable in cases where OD in under 10 ms is required, which is also the case for the proposed model. The OD can be implemented via an xApp running on the RIC to detect malicious traffic and prevent it from exploiting security vulnerabilities in other xApps that could result in denial of service. Future work will further investigate the OD through real-time experiments in O-RAN.

- Statistical thresholding separates the classes of data more effectively and may be used together with the output of the DL model’s loss function. It achieves better classification of unlabeled data than a fixed threshold, e.g., the 95th percentile, which is a conservative value. The proposed thresholding approach adapts this value according to the reconstruction error produced by the model. The combination of MAD thresholding and masking has been shown to yield the most significant improvement in the AUROC.
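The difference between a fixed percentile and a threshold derived from the error distribution can be seen in a small Python sketch. This is an illustration under stated assumptions, not the paper's quasi-dynamic mechanism: the helper names, the nearest-rank percentile, the multiplier `k = 3`, the constant 1.4826, and the synthetic errors are all hypothetical.

```python
import math
import statistics


def percentile_threshold(errors, q=95):
    """Nearest-rank q-th percentile of the errors (fixed-rate threshold)."""
    ordered = sorted(errors)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def mad_threshold(errors, k=3.0):
    """Median plus k scaled MADs (k and 1.4826 are assumed values)."""
    med = statistics.median(errors)
    mad = statistics.median(abs(e - med) for e in errors)
    return med + k * 1.4826 * mad


def count_flags(errors, threshold):
    """Number of errors strictly above the threshold."""
    return sum(e > threshold for e in errors)


# Synthetic reconstruction errors: 100 normal points, then 10 added outliers.
clean = [0.10 + 0.0001 * i for i in range(100)]
contaminated = clean + [1.0 + 0.1 * i for i in range(10)]

for name, data in (("clean", clean), ("contaminated", contaminated)):
    print(name,
          count_flags(data, percentile_threshold(data)),
          count_flags(data, mad_threshold(data)))
```

In this example the percentile rule flags the same number of points whether or not outliers are present, so it raises false alarms on the clean data and misses half of the outliers in the contaminated data. The MAD-based threshold follows the bulk of the error distribution instead, flagging nothing in the clean data and all ten added outliers in the contaminated data.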

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 5G | Fifth Generation |
| AE | Autoencoder |
| ARIMA | Auto-Regressive Integrated Moving Average |
| AUC | Area Under the Curve |
| AUROC | Area Under the Receiver Operating Characteristic |
| CDR | Call Detail Records |
| CN | Core Network |
| CNN | Convolutional Neural Network |
| CNN-AE | Convolutional Neural Network Autoencoder |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |
| DL | Deep Learning |
| GAN | Generative Adversarial Network |
| GMM | Gaussian Mixture Model |
| HTA | Human Telecommunications Activities |
| LSTS | Long Sequence Time Series |
| LSTM | Long Short-Term Memory |
| LSTM-AE | Long Short-Term Memory Autoencoder |
| MAD | Median Absolute Deviation |
| MSE | Mean Square Error |
| NN | Neural Network |
| O-RAN | Open Radio Access Network |
| OD | Outlier Detection |
| OOD | Out-of-Distribution |
| PDF | Probability Density Function |
| RAN | Radio Access Network |
| RIC | RAN Intelligent Controller |
| SARIMA | Seasonal Auto-Regressive Integrated Moving Average |
| UE | User Equipment |
| WBS | Wireless Base Stations |

References

1. Giordani, M.; Polese, M.; Mezzavilla, M.; Rangan, S.; Zorzi, M. Toward 6G networks: Use cases and technologies. IEEE Commun. Mag. 2020, 58, 55–61.

2. Edozie, E.; Shuaibu, A.N.; Sadiq, B.O.; John, U.K. Artificial intelligence advances in anomaly detection for telecom networks. Artif. Intell. Rev. 2025, 58, 100.

3. Aziz, Z.; Bestak, R. Modeling Voice Traffic Patterns for Anomaly Detection and Prediction in Cellular Networks based on CDR Data. IEEE Trans. Mob. Comput. 2024, 23, 13131–13143.

4. Mahrez, Z.; Driss, M.B.; Sabir, E.; Saad, W.; Driouch, E. Benchmarking of anomaly detection techniques in o-ran for handover optimization. In Proceedings of the 2023 International Wireless Communications and Mobile Computing (IWCMC), Marrakesh, Morocco, 19–23 June 2023; pp. 119–125.

5. Mfondoum, R.; Ivanov, A.; Koleva, P.; Poulkov, V.; Manolova, A. Outlier Detection in Streaming Data for Telecommunications and Industrial Applications: A Survey. Electronics 2024, 13, 3339.

6. Rassam, M.A. Autoencoder-Based Neural Network Model for Anomaly Detection in Wireless Body Area Networks. IoT 2024, 5, 852–870.

7. Owoh, N.; Riley, J.; Ashawa, M.; Hosseinzadeh, S.; Philip, A.; Osamor, J. An Adaptive Temporal Convolutional Network Autoencoder for Malicious Data Detection in Mobile Crowd Sensing. Sensors 2024, 24, 2353.

8. Hua, X.; Zhu, L.; Zhang, S.; Li, Z.; Wang, S.; Deng, C.; Feng, J.; Zhang, Z.; Wu, W. GenAD: General unsupervised anomaly detection using multivariate time series for large-scale wireless base stations. Electron. Lett. 2023, 59, e12683.

9. Aziz, Z.; Bestak, R. Insight into Anomaly Detection and Prediction and Mobile Network Security Enhancement Leveraging K-Means Clustering on Call Detail Records. Sensors 2024, 24, 1716.

10. Rafique, S.H.; Abdallah, A.; Musa, N.S.; Murugan, T. Machine learning and deep learning techniques for internet of things network anomaly detection—Current research trends. Sensors 2024, 24, 1968.

11. Kim, J.; Kang, H.; Kang, P. Time-series anomaly detection with stacked Transformer representations and 1D convolutional network. Eng. Appl. Artif. Intell. 2023, 120, 105964.

12. Mo, Y.; Fu, H.; Bai, S.; Deng, C.; Tang, T.; Lang, J.; Zhou, F. InfoFlow: A Transformer-based Time Series Anomaly Detection Model with Information Bottleneck and Normalizing Flows. arXiv 2024, arXiv:2402.00000.

13. Zhang, Y.; Hu, J.; Wen, D.; Deng, W. Unsupervised evaluation for out-of-distribution detection. Pattern Recognition 2024, 160, 111212.

14. Sultan, K.; Ali, H.; Zhang, Z. Call detail records driven anomaly detection and traffic prediction in mobile cellular networks. IEEE Access 2018, 6, 41728–41737.

15. Priyadarshini, I.; Alkhayyat, A.; Gehlot, A.; Kumar, R. Time series analysis and anomaly detection for trustworthy smart homes. Comput. Electr. Eng. 2022, 102, 108193.

16. Chebyshev, P. Des valeurs moyennes. J. Math. Pures Appl. 1867, 12, 177–184.

17. Stellato, B.; Van Parys, B.; Goulart, P. Multivariate Chebyshev inequality with estimated mean and variance. Am. Stat. 2017, 71, 123–127.

18. Zhou, H.; Li, J.; Zhang, S.; Zhang, S.; Yan, M.; Xiong, H. Expanding the prediction capacity in long sequence time-series forecasting. Artif. Intell. 2023, 318, 103886.

19. Barlacchi, G.; De Nadai, M.; Larcher, R.; Casella, A.; Chitic, C.; Torrisi, G.; Antonelli, F.; Vespignani, A.; Pentland, A.; Lepri, B. A multi-source dataset of urban life in the city of Milan and the Province of Trentino. Sci. Data 2015, 2, 150055.

20. Hung, C.F.; Tseng, C.H.; Cheng, S.M. Anomaly Detection for Mitigating xApp and E2 Interface Threats in O-RAN Near-RT RIC. IEEE Open J. Commun. Soc. 2025, 6, 1682–1694.

| Model | Time Complexity | Memory Requirements |
|---|---|---|
| LSTM Autoencoder | | |
| SARIMA | | |
| Baseline Informer | | |
| Modified Informer | | |

| Metric | Baseline Informer | Modified Informer | LSTM-AE | SARIMA |
|---|---|---|---|---|
| Training Time Per Sample Per Feature (ms) | 0.11 | 0.11 | 2.99 | 23.74 |
| Testing Time Per Sample Per Feature (ms) | 0.055 | 0.055 | 0.047 | 0.074 |
| Peak Memory during Training (MB) | 718.50 | 718.50 | 1293.79 | 12,026.81 |
| Peak Memory during Testing (MB) | 719 | 719 | 1198.19 | 12,029.56 |
| Training Size | 57,600 | 57,600 | 28,224 | 4608 |
| Test Size | 13,824 | 13,824 | 25,344 | 4608 |
| Number of Features | 5 | 5 | 3 | 1 |
| Training Execution Time (s) | 31.68 | 31.68 | 253.17 | 109.39 |
| Testing Execution Time (s) | 3.8 | 3.8 | 3.57 | 0.34 |

| Model | Masking | Threshold | Precision | Accuracy | Recall | F1-Score | AUROC |
|---|---|---|---|---|---|---|---|
| LSTM-AE | None | Standard | 15.0% | 60.5% | 86.0% | 24.3% | 82.9% |
| SARIMA | None | Standard | 40.5% | 94.2% | 42.2% | 41.3% | 83.1% |
| Baseline Informer | - | Standard | 19.0% | 93.4% | 25.6% | 21.8% | 60.8% |
| Baseline Informer | - | Chebyshev | 9.1% | 94.3% | 6.4% | 7.5% | 52.0% |
| Baseline Informer | - | MAD | 12.7% | 75.1% | 99.8% | 22.5% | 87.0% |
| Modified Informer | MAD | Standard | 14.3% | 92.9% | 19.2% | 16.4% | 57.4% |
| Modified Informer | MAD | Chebyshev | 16.7% | 95.5% | 6.4% | 9.6% | 52.6% |
| Modified Informer | MAD | MAD | 12.7% | 75.5% | 98.2% | 22.5% | 86.4% |
| Modified Informer | Chebyshev | Standard | 22.5% | 93.7% | 30.2% | 25.8% | 63.1% |
| Modified Informer | Chebyshev | Chebyshev | 21.4% | 93.5% | 30.2% | 25.0% | 63.0% |
| Modified Informer | Chebyshev | MAD | 9.1% | 63.8% | 100.0% | 16.7% | 81.2% |

| Model | Masking | Threshold | Precision (Incoming Calls) | Accuracy (Incoming Calls) | Recall (Incoming Calls) | F1-Score (Incoming Calls) | AUROC (Incoming Calls) | Precision (Outgoing Calls) | Accuracy (Outgoing Calls) | Recall (Outgoing Calls) | F1-Score (Outgoing Calls) | AUROC (Outgoing Calls) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LSTM-AE | None | Standard | 13.0% | 60.0% | 85.9% | 21.7% | 81.8% | 15.7% | 60.6% | 85.4% | 25.2% | 75.7% |
| SARIMA | None | Standard | 46.6% | 94.8% | 48.7% | 47.6% | 78.5% | 35.3% | 93.7% | 36.7% | 36.0% | 78.9% |
| Baseline Informer | None | Standard | 15.3% | 93.0% | 20.6% | 17.6% | 58.2% | 4.8% | 92.0% | 6.4% | 5.5% | 50.8% |
| Baseline Informer | None | Chebyshev | 0.0% | 93.8% | 0.0% | 0.0% | 48.7% | 9.1% | 94.3% | 6.4% | 7.5% | 52.0% |
| Baseline Informer | None | MAD | 11.0% | 70.7% | 100.0% | 19.8% | 84.8% | 12.8% | 76.8% | 93.6% | 22.6% | 86.8% |
| Modified Informer | MAD | Standard | 31.4% | 94.6% | 42.2% | 36.0% | 69.4% | 6.4% | 92.1% | 8.6% | 7.3% | 51.9% |
| Modified Informer | MAD | Chebyshev | 9.1% | 94.3% | 6.4% | 7.5% | 52.0% | 3.1% | 94.3% | 2.2% | 2.6% | 49.8% |
| Modified Informer | MAD | MAD | 11.0% | 70.7% | 100.0% | 19.8% | 84.8% | 12.2% | 74.0% | 100.0% | 21.8% | 86.8% |
| Modified Informer | Chebyshev | Standard | 28.6% | 94.3% | 38.4% | 32.8% | 67.4% | 4.8% | 92.0% | 6.4% | 5.5% | 50.8% |
| Modified Informer | Chebyshev | Chebyshev | 9.1% | 94.3% | 6.4% | 7.5% | 52.0% | 9.1% | 94.3% | 6.4% | 7.5% | 52.0% |
| Modified Informer | Chebyshev | MAD | 15.3% | 80.0% | 100.0% | 26.6% | 89.6% | 11.3% | 71.7% | 99.8% | 20.3% | 85.2% |

| Model | Masking | Threshold | Precision (Incoming SMS) | Accuracy (Incoming SMS) | Recall (Incoming SMS) | F1-Score (Incoming SMS) | AUROC (Incoming SMS) | Precision (Outgoing SMS) | Accuracy (Outgoing SMS) | Recall (Outgoing SMS) | F1-Score (Outgoing SMS) | AUROC (Outgoing SMS) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LSTM-AE | - | Standard | 18.0% | 70.3% | 86.0% | 28.9% | 91.5% | 15.2% | 62.0% | 87.0% | 24.7% | 87.1% |
| SARIMA | None | Standard | 47.4% | 94.9% | 49.3% | 48.3% | 76.1% | 45.3% | 94.7% | 47.5% | 46.3% | 80.6% |
| Baseline Informer | - | Standard | 22.5% | 93.7% | 30.2% | 25.8% | 63.1% | 5.1% | 92.0% | 6.8% | 5.8% | 51.0% |
| Baseline Informer | - | Chebyshev | 21.4% | 93.5% | 30.2% | 25.1% | 63.0% | 5.9% | 92.7% | 6.8% | 6.3% | 51.4% |
| Baseline Informer | - | MAD | 9.1% | 63.8% | 100.0% | 16.7% | 81.8% | 11.9% | 73.3% | 100.0% | 21.3% | 86.1% |
| Modified Informer | MAD | Standard | 4.8% | 92.0% | 6.4% | 5.5% | 50.8% | 0.0% | 91.5% | 0.0% | 0.0% | 47.5% |
| Modified Informer | MAD | Chebyshev | 5.3% | 92.4% | 6.4% | 5.8% | 51.0% | 0.0% | 92.7% | 0.0% | 0.0% | 48.1% |
| Modified Informer | MAD | MAD | 11.5% | 72.1% | 100.0% | 20.6% | 85.5% | 12.3% | 74.2% | 100.0% | 21.9% | 86.6% |
| Modified Informer | Chebyshev | Standard | 19.0% | 93.4% | 25.6% | 21.8% | 60.8% | 19.0% | 93.4% | 25.6% | 21.8% | 60.8% |
| Modified Informer | Chebyshev | Chebyshev | 16.7% | 93.6% | 19.2% | 17.8% | 57.8% | 25.0% | 95.0% | 25.6% | 22.7% | 58.5% |
| Modified Informer | Chebyshev | MAD | 9.4% | 65.0% | 100.0% | 17.1% | 81.8% | 9.8% | 66.8% | 9.8% | 17.9% | 82.8% |

| Method | Estimate | Standard Error | Lower Estimate | Upper Estimate | p-Value | Significance |
|---|---|---|---|---|---|---|
| Intercept Case | 0.5313 | 0.0564 | 0.4386 | 0.6241 | 0.0000 | Very strong |
| MAD Masking | −0.0596 | 0.0301 | −0.1092 | −0.0100 | 0.0538 | Strong |
| Baseline (No Masking) | −0.0325 | 0.0306 | −0.0921 | 0.0170 | 0.2858 | Weak |
| Chebyshev Threshold | 0.0353 | 0.0603 | −0.0838 | 0.1545 | 0.5666 | None |
| MAD Threshold | 0.3100 | 0.0603 | 0.1914 | 0.4286 | 0.0000 | Very strong |
| Standard (95%) Threshold | 0.0744 | 0.0603 | −0.0430 | 0.1736 | 0.2234 | Weak |
| Z-score Threshold | 0.0351 | 0.0522 | −0.0688 | 0.1210 | 0.5047 | None |
| MAD Masking + Chebyshev Threshold | −0.0036 | 0.0604 | −0.1219 | 0.1147 | 0.9544 | None |
| No Masking + Chebyshev Threshold | 0.0024 | 0.0604 | −0.1171 | 0.1219 | 1.0000 | None |
| MAD Masking + MAD Threshold | 0.1064 | 0.0604 | −0.0120 | 0.2248 | 0.0954 | Strong |
| No Masking + MAD Threshold | 0.0394 | 0.0426 | −0.0451 | 0.1238 | 0.3545 | Very weak |
| MAD Masking + Standard (95%) Threshold | 0.0112 | 0.0604 | −0.1069 | 0.1292 | 0.8507 | None |
| No Masking + Standard (95%) Threshold | −0.0054 | 0.0426 | −0.0756 | 0.0647 | 0.8992 | None |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mfondoum, R.N.; Gotseva, N.; Vlahov, A.; Ivanov, A.; Koleva, P.; Poulkov, V.; Manolova, A. Mask Inflation Encoder and Quasi-Dynamic Thresholding Outlier Detection in Cellular Networks. Telecom 2025, 6, 84. https://doi.org/10.3390/telecom6040084
