Abstract

RSA’s modular exponentiation is the basic operation in public key infrastructure and is naturally a target of side-channel attacks. In this work, we propose two algorithms that defeat side-channel attacks: Paired Permutation Exponentiation (PPE) and Permute, Split, and Accumulate (PSA). We compare these two algorithms with the classic right-to-left (RtL) technique. All three implementations are evaluated using Intel® Performance Counter Monitor (PCM) at an effective 0.25 ms sampling interval. We use fixed 2048-bit inputs, pin the Python 3.9.13 process to a single core of an Intel® Core™ i5-10210U, and repeat each experiment 100 and 1000 times to characterize behavior and ensemble statistics. Our proposed technique, PSA, shows the lowest runtime and the strongest hardening against per-bit correlation relative to the standard RtL. Residual leakage related to the Hamming weight of the exponent may remain observable, but the only information gathered is the Hamming weight of the secret key; the exact locations of the secret key bits are completely obscured.

1. Introduction

RSA is a widely used cryptosystem for encryption and digital signatures whose security relies on the computational difficulty of factoring the product of two very large primes. Although RSA is computationally secure, implementing it with bit-serial modular exponentiation is slow and can introduce vulnerabilities to side-channel attacks such as timing or power analysis. As a result, even though the RSA algorithm itself is secure, vulnerable implementations can undermine cryptographic security if adequate countermeasures are not adopted.

Prior work on accelerating the RSA cryptosystem has largely focused on offloading the processor workload to crypto accelerators [1,2] and on reducing the cost of modular multiplication [3], the workhorse inside modular exponentiation. In particular, hardware-efficient variants of Montgomery modular multiplication and low-power accelerators for Internet of Things (IoT) devices have demonstrated reduced latency and energy consumption, which indirectly benefit RSA performance [4,5]. However, these advances leave the algorithmic structure of binary modular exponentiation, namely the control and sequencing of its many multiplications, mostly unchanged.

Most prior defences against RSA side-channel leakage have focused on constant-time algorithms, such as the Montgomery Ladder and Joye’s Ladder, or on efficiency-oriented improvements, such as windowing. These methods reduce leakage or improve performance but do not alter the sequencing of the exponentiation process itself. The idea of introducing randomness at the schedule level has received comparatively little attention, creating a gap that this work addresses.

In contrast, this paper targets the exponentiation schedule itself. We introduce two implementation strategies that preserve RSA’s correctness but randomize the order of work: (i) a randomized traversal of the bit-conditioned products (PPE), and (ii) a split method that multiplies only the terms corresponding to 1-bits, in a randomized order (PSA). By decoupling the computation order from the secret bit positions, these strategies can both improve performance and reduce the exploitable side-channel signal.

The main contributions of this paper are as follows: (i) Schedule-level randomization techniques: we introduce two strategies, PPE and PSA, that preserve RSA correctness while randomizing the order of modular exponentiation operations. (ii) Security and performance evaluation: we demonstrate that these schedule-level changes reduce exploitable side-channel leakage and can improve runtime compared to conventional right-to-left (RtL) square-and-multiply implementations. (iii) Practical implementation: we provide both software and hardware realizations and evaluate them on a controlled platform using Intel® Performance Counter Monitor (PCM) to quantify performance and leakage improvements.

This work is organized as follows. Section 2 gives a brief summary of the modular exponentiation operation and the right-to-left implementation algorithm. Section 3 reviews the literature on reducing vulnerability to side-channel attacks. Section 4 introduces the two proposed techniques for securing modular exponentiation: PPE and PSA. Section 5 summarizes the software and hardware implementations of the three algorithms: RtL, PPE, and PSA. Section 6 shows the Python implementations of the three algorithms. Section 7 presents the implementation results obtained with Intel’s PCM performance tool. Section 8 provides a leakage analysis of the proposed algorithms using the Test Vector Leakage Assessment (TVLA). Section 9 summarizes the two proposed algorithms and concludes the paper.

2. Background

2.1. Modular Exponentiation

The core computational operation in RSA is modular exponentiation, expressed as follows:

$$C = A^{x} \bmod p \qquad (1)$$

where A is the message, x is the key, and p is the RSA modulus.

This operation allows RSA to transform data securely while keeping intermediate values within a manageable range. Algorithms such as square-and-multiply perform this operation without directly calculating the potentially enormous power $A^{x}$. Because RSA uses large exponents and moduli (often 2048 bits or more), efficient and secure modular exponentiation is essential for both performance and resistance to side-channel attacks.

Binary exponentiation is commonly used to implement modular exponentiation efficiently. It decomposes the exponent into its binary representation and uses repeated squaring, which reduces the number of multiplications from $x - 1$ (repeated multiplication by A) to $\mathcal{O}(m)$, where m is the number of bits of the modulus p [6].

There are three basic methods for implementing the exponentiation operation, as explained in the following subsections.

2.1.1. Right-to-Left (RtL) Modular Exponentiation

This method expresses the exponent x in binary form:

$$x = \sum_{i=0}^{m-1} x_i \, 2^{i}, \qquad x_i \in \{0, 1\} \qquad (2)$$

where m is the number of bits.

Algorithm 1 is the pseudocode for the right-to-left (RtL) algorithm using multiply-then-square operations. Line 5 is a conditional multiply that depends on the value of the exponent bit $x_i$. Line 9 is an unconditional squaring operation. It is simple to reverse the order and develop an RtL algorithm using square-then-multiply.

Algorithm 1 Right-to-Left (RtL) Binary Exponentiation

The conditional multiply at a given iteration results in a varying iteration delay or power consumption. This makes the algorithm vulnerable to side-channel attacks such as timing or power analysis.

Similar observations also apply to the left-to-right (LtR) modular exponentiation algorithm. Further, using the non-adjacent form (NAF) representation of x produces two further versions: NAF-based LtR and RtL algorithms. All of these variants are vulnerable to side-channel attacks (SCAs).

2.1.2. RtL Non-Adjacent Form “NAF” Method

The Non-Adjacent Form (NAF) method reduces the number of multiplications required. This method reduces the number of adjacent ones using the geometric series identity:

$$\sum_{i=j}^{k} 2^{i} = 2^{k+1} - 2^{j} \qquad (3)$$

Therefore, we can express x in the redundant form:

$$x = \sum_{i=0}^{m} d_i \, 2^{i}, \qquad d_i \in \{-1, 0, 1\}$$

where all runs of adjacent ones are replaced using the expression in Equation (3).

The NAF method represents the exponent x as a sum of powers of 2 with coefficients −1, 0, or 1, where no two consecutive digits are non-zero. This representation is unique and has the minimum number of non-zero digits among all signed-digit representations. This is summarized in Algorithm 2.

The NAF representation guarantees that at most half of the digits are non-zero, reducing the number of multiplications compared to the binary method. On average, only about one-third of the digits are non-zero, making this method approximately 11% more efficient than the standard binary method [7]. The main advantage of NAF is that it reduces the average number of multiplications while requiring only minimal additional precomputation. This makes it particularly useful in environments with limited memory.
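A minimal Python sketch of NAF recoding and a right-to-left exponentiation driven by it follows. It is an illustration under our own assumptions, not a reproduction of Algorithm 2: `naf_digits` and `rtl_naf_modexp` are hypothetical names, and instead of tracking $A^{-2^i}$ alongside $A^{2^i}$, the sketch accumulates the +1 and −1 products separately and applies a single modular inversion at the end, which assumes $\gcd(A, p) = 1$.

```python
from typing import List


def naf_digits(x: int) -> List[int]:
    """Return the NAF digits of x >= 0, least significant first (each is -1, 0 or 1)."""
    digits = []
    while x > 0:
        if x & 1:
            d = 2 - (x % 4)   # +1 if x = 1 (mod 4), -1 if x = 3 (mod 4)
            x -= d            # now x = 0 (mod 4), so the next digit is 0
        else:
            d = 0
        digits.append(d)
        x >>= 1
    return digits


def rtl_naf_modexp(a: int, x: int, p: int) -> int:
    """RtL exponentiation over the NAF digits of x (assumes gcd(a, p) == 1)."""
    base = a % p              # holds a^(2^i) mod p at step i
    pos, neg = 1, 1           # products of a^(2^i) for digits +1 and -1
    for d in naf_digits(x):
        if d == 1:
            pos = pos * base % p
        elif d == -1:
            neg = neg * base % p
        base = base * base % p    # one squaring per digit
    # a^x = pos / neg (mod p); one inversion (Python 3.8+ pow) replaces negative powers
    return pos * pow(neg, -1, p) % p
```

For example, `naf_digits(7)` returns `[-1, 0, 0, 1]`, i.e., $7 = 2^3 - 2^0$: the run of three ones becomes two non-zero digits, as Equation (3) suggests.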

Algorithm 2 RtL Non-Adjacent Form (NAF) Method

2.2. Side-Channel Attacks

Side-channel attacks (SCAs) exploit physical observables such as execution time, power draw, and cache behaviour to infer secret key bits. The RtL and LtR algorithms suffer from bit-position leakage, in which the timing or delay of the conditional multiply operations correlates with the positions of the 1-bits in x. Timing attacks analyze variations in the run time of cryptographic routines, especially modular exponentiation; even small, systematic differences can leak key bits if the implementation is not constant-time [8].
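Bit-position leakage can be illustrated with an idealized simple-power-analysis model. The sketch below assumes, hypothetically, that an attacker can tell a multiply ("M") from a square ("S") in a single trace; under that assumption, the recorded operation sequence of RtL spells out the exponent bit by bit. Real traces are noisy, so this models the best case for the attacker rather than a measured attack, and both function names are ours.

```python
from typing import Tuple


def rtl_with_trace(a: int, x: int, p: int) -> Tuple[int, str]:
    """RtL multiply-then-square that also records which operation ran."""
    c, base = 1 % p, a % p
    ops = []
    while x > 0:
        if x & 1:                  # key-dependent branch: extra multiply for a 1-bit
            c = c * base % p
            ops.append("M")
        base = base * base % p     # unconditional squaring
        ops.append("S")
        x >>= 1
    return c, "".join(ops)


def recover_exponent(trace: str) -> int:
    """Rebuild x from an idealized trace: each 'S' closes one bit; an 'M' before it means 1."""
    segments = trace.split("S")[:-1]   # one segment per processed bit, LSB first
    return sum(1 << i for i, seg in enumerate(segments) if seg == "M")
```

For instance, `rtl_with_trace(3, 0b1011, 1000003)` yields the trace `"MSMSSMS"`, from which `recover_exponent` returns `11`.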

Power-analysis attacks observe instantaneous or aggregated power consumption during computation. Simple Power Analysis (SPA) and Differential Power Analysis (DPA) correlate power traces with data-dependent operations to extract secret material [9].

Cache attacks exploit the timing behavior of CPU caches to infer memory access patterns. By measuring cache hits and misses, techniques such as Prime + Probe and Flush + Reload can reveal which cache lines are accessed during encryption or decryption, enabling key recovery, especially in shared hardware environments [9].

These side-channel attacks pose a significant threat to RSA, especially when implementations do not employ proper countermeasures such as constant-time execution, masking, or hardware isolation. Understanding these vulnerabilities is crucial for designing secure cryptographic systems that remain robust under real-world conditions.

3. Related Work

Prior research on securing modular exponentiation against side-channel attacks can be grouped into three categories: (i) efficiency-driven methods such as windowing and Montgomery multiplication, (ii) constant-time implementations such as Montgomery Ladder and Joye’s Ladder, and (iii) randomization-based strategies, including the schedule-level approaches we propose in this work.

3.1. Modular Exponentiation Using Windowing Techniques

Several publications in the literature have discussed using windows to resist power analysis [10,11,12,13,14]. Windowed exponentiation reduces the number of multiplications in the modular exponentiation algorithm by processing multiple bits at once [15,16]. As a side benefit, windowing greatly reduces the vulnerability to SCAs, since the bits in a window are processed together: an SCA reveals neither the number of set bits in the window nor their actual locations.

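Windowing expresses x in Equation (2) in radix $2^{w}$:

$$x = \sum_{i=0}^{n-1} X_i \, 2^{wi}$$

where w is the window size, $X_i$ is in the range $0 \le X_i \le 2^{w} - 1$, and $n = \lceil m/w \rceil$. We see that $X_0$ is composed of the least significant w bits, $X_1$ is the next w bits, and so on.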

The pre-computations in the windowing technique essentially do two series of multiplications:

- Compute all squares of for :This is squaring operations.

- Compute all multiplicative powers of A:This is multiplication operations.

- Any power of can be expressed in the following form:One multiplication is required between two terms from and , respectively. This is multiplications.

For classic RtL or LtR algorithms, the number of squaring operations is $m$ and the number of multiplication operations is $m/2$ on average for a well-designed secret key.

Table 1 compares the number of multiplication and squaring operations of classic RtL and windowing technique.

Table 1.

Comparing the number of multiplication and squaring operations of classic RtL and windowing technique.

3.2. Montgomery Ladder

The Montgomery Ladder performs the same number of squaring and multiplication operations regardless of the exponent bit values. Therefore, it provides resistance against certain side-channel attacks while maintaining computational efficiency. Algorithm 3 shows the Montgomery Ladder, which maintains a pair of values $(R_0, R_1)$ throughout the computation with the invariant $R_1 = R_0 \cdot A \bmod p$. Initially, $R_0 = 1$ and $R_1 = A$, satisfying the invariant.

The Montgomery Ladder is resistant to simple power analysis (SPA) attacks. When implemented carefully, the algorithm can run in constant time, enhancing its security against timing attacks.
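The ladder invariant can be checked with a short Python sketch (the function name is ours). It follows the standard left-to-right Montgomery ladder. Note that the Python branch on the key bit and Python's variable-time big-integer arithmetic mean this sketch shows the regular operation pattern but is not itself constant-time.

```python
def montgomery_ladder(a: int, x: int, p: int) -> int:
    """Left-to-right Montgomery ladder; keeps r1 == r0 * a (mod p) after every step."""
    r0, r1 = 1 % p, a % p
    for i in reversed(range(x.bit_length())):   # most significant bit first
        if (x >> i) & 1:
            r0 = r0 * r1 % p      # r0 <- r0 * r1
            r1 = r1 * r1 % p      # r1 <- r1^2
        else:
            r1 = r0 * r1 % p      # r1 <- r0 * r1
            r0 = r0 * r0 % p      # r0 <- r0^2
    return r0
```

Both branches perform exactly one multiplication and one squaring, which is the property that makes the operation count independent of the bit values.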

Algorithm 3 Montgomery Ladder

3.3. Joye’s Ladder

Joye’s Ladder [17] is an extension of the Montgomery Ladder. The approach repeats the same operations in the same order at each iteration without inserting dummy operations. This provides resistance to side-channel attacks while maintaining computational efficiency.

The algorithm is not restricted to the binary case; it can be generalized to any radix and can be used with right-to-left or left-to-right scanning. In the binary right-to-left form, it maintains two registers $R_0$ and $R_1$ with the invariant $R_0 \equiv A^{v}$ and $R_0 R_1 \equiv A^{2^{i}} \pmod{p}$, where v is the value of the exponent bits processed so far (the i least significant bits). For each bit of the exponent x, from least significant to most significant, let b be the current bit value (0 or 1), update $R_{1-b} \leftarrow R_{1-b}^{2} \cdot R_b \bmod p$, and update $v \leftarrow v + b \cdot 2^{i}$. After processing all bits, $R_0$ contains $A^{x} \bmod p$, which is the desired result.

The key advantage of Joye’s Ladder is side-channel resistance, since it performs the same sequence of operations of multiplication and squaring regardless of the bit value. When implemented carefully, it can run in constant time.
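A Python sketch of the binary right-to-left form of Joye’s Ladder follows (the name `joye_ladder` is hypothetical). Each iteration executes exactly one update of the form $R_{1-b} \leftarrow R_{1-b}^2 \cdot R_b$. As with any Python sketch, selecting the register by the secret bit and using variable-time big integers means the code illustrates the operation sequence rather than a hardened implementation.

```python
def joye_ladder(a: int, x: int, p: int) -> int:
    """Joye's binary right-to-left ladder: R[1-b] <- R[1-b]^2 * R[b] for each bit b."""
    r = [1 % p, a % p]        # invariant: r[0] = a^v and r[0] * r[1] = a^(2^i) (mod p)
    for i in range(x.bit_length()):             # least significant bit first
        b = (x >> i) & 1
        r[1 - b] = r[1 - b] * r[1 - b] % p * r[b] % p
    return r[0]
```

Every bit costs one squaring and one multiplication, and no dummy operation is ever inserted, which matches the regularity argument above.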

More recent studies emphasize that implementation flaws, rather than cryptographic design, often represent the greatest security risks. For example, Astriratma shows that vulnerabilities in blockchain smart contracts commonly arise from execution-level weaknesses rather than theoretical cryptography [18]. Similarly, Mendoza and Tubice highlight the value of time-series analysis in detecting subtle temporal patterns, a perspective directly relevant to identifying side-channel leakage [19]. These findings reinforce the need for countermeasures, such as PPE and PSA, that target execution-level risks and temporal alignment.

4. Proposed Techniques

In this section, we explain how we modified the modular exponentiation in RSA to effectively defeat side-channel attacks by making use of the commutative and associative properties of modular multiplication. We propose two designs.

4.1. Proposed Design 1: Paired–Permutation Exponentiation (PPE)

In this design, we break the FOR loop of the standard RtL Algorithm 1 into three stages, as shown in Algorithm 4.

Algorithm 4 PPE: Paired–Permutation Exponentiation

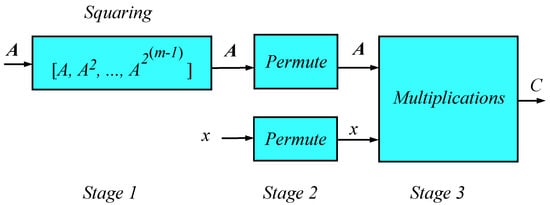

The order of execution of the three stages is shown in Figure 1.

Figure 1.

Block diagram of proposed design PPE.

Stage 1 of Algorithm 4 precomputes the powers $A_i = A^{2^{i}} \bmod p$ for $0 \le i \le m-1$ in Line 5. Each result is appended to the vector A in Line 6. This stage is purely sequential and is typically performed by one core of an embedded multicore system. This stage reveals no information about the secret key bits x.

Stage 2 of Algorithm 4 performs an identical random permutation on the vectors A and x. This randomly rearranges the vector elements while preserving the correspondence between $A_i$ and $x_i$. The permutation does not affect the result C because modular multiplication is associative and commutative. This stage executes sequentially and cannot be parallelized. Again, Stage 2 reveals no information about the secret key bits.

Stage 3 calculates the output result C using conditional multiply statements. This, however, does not reveal any information about the secret key bits for two reasons:

- Detecting whether a bit is 1 or 0 does not reveal the actual location of that bit in the original secret key x due to the permutations in Stage 2.

- The conditional multiply in Line 15 adds comparatively little delay or power, since the unconditional squaring step of the original algorithm has already been performed in Stage 1.
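The three PPE stages can be sketched in Python as follows. This is an illustrative reconstruction from the stage descriptions, not the authors’ Algorithm 4 or their listings: the function name `ppe_modexp` is hypothetical, and drawing the permutation from `secrets.SystemRandom().shuffle` (an OS-backed generator) is our assumption about how the random permutation might be produced.

```python
import secrets
from typing import List

_rng = secrets.SystemRandom()   # OS-backed randomness for the permutation (our assumption)


def ppe_modexp(a: int, x: int, p: int) -> int:
    """Paired-permutation exponentiation sketch: precompute, co-permute, accumulate."""
    m = x.bit_length()
    # Stage 1: powers A_i = a^(2^i) mod p and the matching key bits x_i
    powers: List[int] = []
    base = a % p
    for _ in range(m):
        powers.append(base)
        base = base * base % p
    bits = [(x >> i) & 1 for i in range(m)]
    # Stage 2: one random permutation applied to both vectors keeps each (A_i, x_i) pair together
    order = list(range(m))
    _rng.shuffle(order)
    powers = [powers[j] for j in order]
    bits = [bits[j] for j in order]
    # Stage 3: conditional multiply, visited in permuted order
    c = 1 % p
    for a_i, x_i in zip(powers, bits):
        if x_i:
            c = c * a_i % p
    return c
```

Because the permutation is redrawn on every call, the position in time at which a given key bit is consumed changes from run to run, while the product, and hence the result, stays the same.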

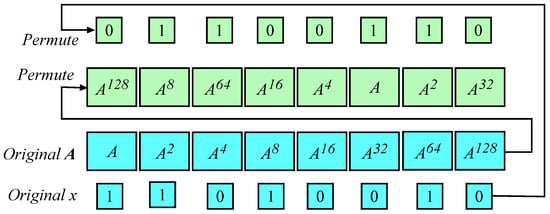

Figure 2 shows the operations of Stages 1 to 3. Case when . At the bottom of the figure is the result of Stage 1, where the vector A storing the powers of A is created. The vectors x and A are shown as the blue boxes. The identical random permutations on x and A are indicated by the arrows, and the result is shown as the green boxes. Note that the values of $A_i$ and the corresponding key bit $x_i$ are maintained at the same location within the vectors.

Figure 2.

Proposed PPE design. Summarizing stages 1 and 2. Case when .

4.2. Proposed Design 2: Permute, Split and Accumulate (PSA)

The second proposed design breaks the FOR loop in the standard RtL Algorithm 1 into four stages and three separate loops. This is shown in Algorithm 5.

Algorithm 5 PSA: Permute, split, and accumulate modular exponentiation algorithm

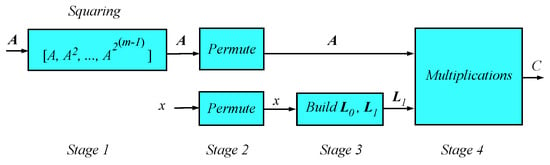

The order of execution of the four stages is shown in Figure 3.

Figure 3.

Block diagram of proposed design PSA.

Stage 1 builds the vector A, which is composed of the powers $A_i = A^{2^{i}} \bmod p$.

Stage 2 of Algorithm 5 applies the same random permutation to both A and x so that the corresponding elements of the two vectors remain aligned. The permutation does not affect the final result because modular multiplication is associative and commutative. None of the calculations in this stage reveals any information about the secret key bits.

Stage 3 creates two index vectors: one stores the locations of the zero bits in the permuted vector x, and the other stores the locations of the one bits. At this stage, the attacker might be able to determine the number of bits with value 0 or 1. This information is not useful for locating key bits, because the permutation has dissociated the bit values from their actual locations in the secret key.

Stage 4 is the aggregation step: it uses the contents of the one-bit index vector to select the corresponding elements of the vector A and multiplies them to calculate C.
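A Python sketch of the four PSA stages follows, again under our own assumptions (the name `psa_modexp` is hypothetical, and the permutation comes from `secrets.SystemRandom`). Unlike the conditional accumulation of PPE, the Stage 4 loop here iterates only over the one-bit index list and contains no key-dependent branch; the split in Stage 3 is where the bit values are inspected.

```python
import secrets
from typing import List

_rng = secrets.SystemRandom()   # OS-backed randomness for the permutation (our assumption)


def psa_modexp(a: int, x: int, p: int) -> int:
    """Permute, split, and accumulate sketch."""
    m = x.bit_length()
    # Stage 1: powers A_i = a^(2^i) mod p and the key bits x_i
    powers: List[int] = []
    base = a % p
    for _ in range(m):
        powers.append(base)
        base = base * base % p
    bits = [(x >> i) & 1 for i in range(m)]
    # Stage 2: identical random permutation of both vectors
    order = list(range(m))
    _rng.shuffle(order)
    powers = [powers[j] for j in order]
    bits = [bits[j] for j in order]
    # Stage 3: split the permuted positions by bit value
    zero_idx = [k for k, b in enumerate(bits) if b == 0]   # mirrors the stage; not needed for C
    one_idx = [k for k, b in enumerate(bits) if b == 1]
    # Stage 4: branch-free accumulation over the h one-bit positions
    c = 1 % p
    for k in one_idx:
        c = c * powers[k] % p
    return c
```

The accumulation loop runs exactly h times, so its total length reflects the Hamming weight of x, which is the residual leakage discussed in the text, but not the positions of the 1-bits.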

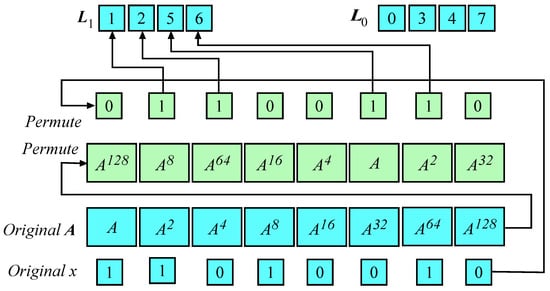

Figure 4 shows the operations of Stages 1 to 4. Case when . At the bottom of the figure is the result of Stage 1, where the vector A storing the powers of A is created. The vectors x and A are shown as the blue boxes. The identical random permutations on x and A are indicated by the arrows, and the result is shown as the green boxes. Note that the values of $A_i$ and the corresponding key bit $x_i$ are maintained at the same location within the vectors.

Figure 4.

Proposed PSA design. Summarizing Stages 1, 2, and 3. Case when .

4.3. Comparative Summary

PSA is expected to provide superior performance because it avoids conditional branching and restricts multiplications to the positions of 1-bits, resulting in higher instruction-per-cycle efficiency. In addition, PSA’s straight-line accumulation loop reduces temporal alignment and produces smoother traces compared to PPE.

From Table 2, several insights emerge regarding efficiency and side-channel resistance. PSA achieves the highest practical efficiency because it performs multiplications only for 1-bits in the exponent and uses a branch-free, randomized schedule. This reduces the alignment between secret key bits and observable events, leaving only the overall Hamming weight as residual leakage. PPE introduces schedule randomization but still requires branch handling for each permuted item, which slightly increases runtime overhead compared to PSA. RtL (right-to-left square-and-multiply) is the baseline implementation, with strong bit-position leakage and standard performance. The Montgomery Ladder and Joye’s Ladder are strictly constant-time. Both perform m squarings and m multiplications, independent of the exponent bit values. This provides theoretical immunity to timing attacks but comes at a performance cost, because the operations are always executed, even when the exponent bit is 0.

Table 2.

Comparative summary of computational complexity and leakage characteristics for modular exponentiation algorithms.

Overall, PSA offers a practical middle ground, achieving strong leakage reduction and higher efficiency, while Montgomery and Joye’s ladders provide maximum theoretical security at a higher computational cost.

5. Methodology

We summarize, in this section, the hardware and software implementation details of the standard RtL and the two proposed algorithms PPE and PSA.

Simulation Environment Configuration

The hardware and software platforms used for all experiments, including the computing device, operating system, programming environment, and measurement stack are summarized in Table 3.

Table 3.

Simulation environment configuration for the Dell Latitude 3510.

The experiments were performed on a single pinned CPU core to minimize scheduling noise and create a worst-case environment for leakage detection. This configuration offers the attacker the clearest observation conditions; therefore, if leakage is not evident under these controlled circumstances, it is expected to be even less observable in realistic multi-core, multitasking systems.

Programming environment: Experiments were implemented in Python using the PyCharm Integrated Development Environment (IDE). The libraries used are gmpy2, numpy, pandas, matplotlib, and psutil.

Performance measurement: Hardware performance was measured with Intel® Performance Counter Monitor (PCM), compiled from the official GitHub repository [20] using Microsoft Visual Studio 2022 Community (Desktop Development with C++ workload) and the .NET Framework Developer Pack 4.7.2. PCM was invoked from Python via the subprocess module and configured to export CSV files.

To reduce operating-system (OS) scheduling jitter, we pin the Python process to a single CPU core (core 0) and elevate its priority. With the workload confined to core 0, the recorded package- and core-level counters primarily reflect the pinned Python activity while minimizing background noise.

The monitored metrics included Instructions Retired (INST), Active CPU Cycles (ACYC), L3 Cache Misses (L3MISS), Core and Package Energy (Joules), Core Temperature (°C), and Core Frequency. These metrics are not only indicators of computational performance but also potential leakage sources as variations in instructions retired, cache behavior, or energy draw can reveal patterns correlated with secret key bits. To evaluate both instantaneous leakage and statistical performance metrics, each implementation was repeated 100 and 1000 times to analyze average and variance-based results. This dual perspective ensures that both single-execution leakage and long-term statistical leakage are addressed.
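As an illustration of how IPC can be derived from two of these counters, the following sketch computes per-sample IPC as instructions retired divided by active cycles and summarizes it by its mean and standard deviation. The input lists are assumed to hold per-sample INST and ACYC values already parsed from the PCM CSV export (parsing and column names are not shown), and the use of the population standard deviation is our assumption.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def ipc_summary(inst: List[float], acyc: List[float]) -> Tuple[float, float]:
    """Per-sample IPC = INST / ACYC; returns (mean, population standard deviation)."""
    ipc = [i / c for i, c in zip(inst, acyc) if c > 0]   # skip samples with no active cycles
    if not ipc:
        raise ValueError("no samples with active cycles")
    return mean(ipc), pstdev(ipc)
```

For example, samples with INST = [100, 200, 50] and ACYC = [200, 200, 0] give per-sample IPC values of 0.5 and 1.0 (the idle sample is skipped), so the mean is 0.75 and the standard deviation is 0.25.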

The same inputs A, x, and p were used across all three implementations (RtL, PPE, and PSA). This eliminates input-driven variance, so that any differences in the traces arise from the algorithmic design only. As shown in Listing 1, we choose a 2048-bit odd modulus p and a full-length 2048-bit exponent x with a near-balanced Hamming weight, representative of real-world private exponents. The message A is sampled uniformly at random and reduced modulo p. We also keep the sampling interval (0.25 ms), guard times, core pinning, and repeat counts (100 and 1000 runs) identical across designs. The classic RtL square-and-multiply serves as the baseline. It exhibits bit-position-aligned control flow and thus provides a natural reference for both performance and leakage. Paired Permutation Exponentiation (PPE) and Permute, Split, and Accumulate (PSA) are evaluated relative to RtL under the same conditions.
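Inputs with the properties described above can be produced with a sketch like the following. It is hypothetical: the fixed values actually used are those in Listing 1, the helper name `make_inputs` and the 5% Hamming-weight tolerance are our choices, and p is only a random odd 2048-bit integer (all the exponentiation routines require) rather than a product of two primes as in a real RSA key.

```python
import secrets
from typing import Tuple


def make_inputs(bits: int = 2048, tolerance: float = 0.05) -> Tuple[int, int, int]:
    """Hypothetical generator for (A, x, p) with the properties listed in the text."""
    top = 1 << (bits - 1)
    p = secrets.randbits(bits) | top | 1            # full-length, odd modulus
    while True:
        x = secrets.randbits(bits) | top            # full-length exponent
        weight = bin(x).count("1")                  # Hamming weight (int.bit_count needs 3.10+)
        if abs(weight - bits / 2) <= tolerance * bits:
            break                                   # near-balanced: accept
    a = secrets.randbits(bits) % p                  # message reduced modulo p
    return a, x, p
```

Generating the values once and storing them, as Listing 1 does, keeps the inputs identical across all designs and repetitions.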

Listing 1 shows the message, key, and modulus values used in all the experiments conducted in this work.

Listing 1. Message, Key and Modulus values used in all experiments.

Python’s built-in function pow(A, x, p) is used to verify the output results. It performs modular exponentiation efficiently, even for very large numbers, but it is not constant-time: its execution path depends on the exponent’s bits, so it can leak information.

6. Implementations

We show in this section the implementations of the standard RtL and the two proposed algorithms PPE and PSA.

6.1. RtL Implementation

Our baseline is the classic RtL modular exponentiation algorithm. The algorithm in Listing 2 scans the exponent from the least significant bit (LSB) toward the most significant bit (MSB). In every iteration, it performs a squaring of the current base and conditionally multiplies when the current bit of x equals 1, after which it shifts x right by one. Defining m as the bit length of x and h as its Hamming weight, the cost is m squarings and h modular multiplications, with one branch per bit. The conditional multiply introduces time-aligned work at specific bit positions, so traces of instructions retired, cycles, and sometimes energy show a structure that can correlate with the 1-bit locations. This makes RtL a natural baseline for both performance and side-channel analysis: it is simple and widely understood, and it exposes the alignment effects that the randomized designs are designed to remove.

Listing 2 shows the Python code for the classic RtL square-and-multiply algorithm.

Listing 2. Classic RtL square-and-multiply.
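A minimal Python sketch consistent with the RtL description above is given here; it is not a reproduction of Listing 2. The name `rtl_modexp` and the operation counters are our additions, and the counters confirm the cost model: one squaring per bit (m in total) and one multiplication per 1-bit (h in total).

```python
from typing import Tuple


def rtl_modexp(a: int, x: int, p: int) -> Tuple[int, int, int]:
    """Classic RtL square-and-multiply; returns (C, squarings, multiplications)."""
    c, base = 1 % p, a % p
    squarings = multiplications = 0
    while x > 0:
        if x & 1:                      # branch on the current key bit (the leaky step)
            c = c * base % p
            multiplications += 1
        base = base * base % p         # unconditional squaring
        squarings += 1
        x >>= 1                        # move to the next bit
    return c, squarings, multiplications
```

For a full-length 2048-bit exponent with a near-balanced Hamming weight, this amounts to 2048 squarings and roughly 1024 multiplications.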

6.2. Proposed Design: Paired Permutation Exponentiation (PPE)

PPE first precomputes the squaring vector for all bit positions (Listing 3), organized MSB-first so that its length equals the exponent bit length m. It also constructs an MSB-first bit list of x of the same length. A fresh random permutation of the indices is drawn for each run, and the algorithm processes the (power, bit) pairs in that randomized order, multiplying the accumulator only if the permuted bit is 1. Because modular multiplication is both associative and commutative, changing the order of operations does not alter the result; correctness is preserved while the relation between time alignment and the actual locations of the secret key bits is obscured. The cost is m squarings; the accumulation pass still visits m items and performs exactly h modular multiplications, with one branch per item. The extra overhead is the permutation of an index array of size m. From a side-channel perspective, the randomized processing order removes consistent bit-position alignment between runs, so spikes cannot be averaged back to specific positions. However, the loop still evaluates every bit, so with very fine-grained probes, a weak dependence on the Hamming weight could in principle remain. In our PCM-resolution experiments, the counters did not exhibit a stable Hamming-weight distinguisher, and performance was typically close to RtL with slightly smoother aggregate behavior.

Listing 3 shows the construction of the squaring vector, shown as Stage 1 in Figure 1 and Figure 3.

Listing 3. Squaring all bit positions.

6.3. Proposed Design: Permute, Split, and Accumulate (PSA)

PSA keeps the same squaring vector but reduces the workload by skipping all zero-bit positions. Figure 3 shows that the next step after computing the squaring vector A is to permute both A and x. This does not affect the final exponentiation result C, since modular multiplication is both commutative and associative. The next step is to scan the bits of x and generate an index list of the non-zero bits of x. The resulting index list contains exactly h positions. The computation still requires m squarings, but the accumulation loop now has length h with no branching. This improves instructions per cycle (IPC) and reduces micro-noise relative to PPE. As with PPE, alignment is destroyed by the shuffle. The total amount of multiplication work scales with h, so under a threat model with high-resolution timing of a single run, additional blinding or a constant-time ladder would be prudent. At our PCM sampling rate, we did not observe a reliable correlation with h, and across 100 randomized runs the traces remained statistically similar.

6.4. Comparative Discussion

All three designs compute $C = A^{x} \bmod p$ but differ in how the work is organized in time. RtL is straightforward and serves as our baseline. It performs m squarings and h multiplications with a branch condition per bit, and its conditional multiply creates a time-aligned structure at bit positions that is visible in coarse counters.

The proposed PPE design retains the same amount of computations but randomizes the order of bit processing. This breaks positional alignment between runs at the cost of shuffling an m-sized index list and keeping a per-item branch.

The proposed PSA design goes further: it skips zero bits entirely and removes the hot-loop branch, so the loop length equals h and the inner body is a straight multiply-and-reduce. In practice, this tends to yield the best IPC and the smoothest traces while providing the same temporal hiding as PPE.

In our measurements at 0.25 ms sampling, neither PPE nor PSA exhibited recoverable bit-position patterns or a robust Hamming-weight signal, whereas RtL showed the most distinct structure and therefore remains a useful baseline for demonstrating the benefits of randomization. All outputs were verified to match Python’s pow(A, x, p).

7. Results

This section presents our evaluation of three designs (RtL, PPE, and PSA) using two batch sizes (100-run and 1000-run). We report a qualitative analysis of performance, energy, temperature, resistance to side-channel attacks, and cache behavior. Figures referenced in each subsection illustrate the traces; the narrative contrasts the 100-run and 1000-run outcomes and highlights which effects persist with longer execution.

7.1. CPU Frequency

The frequency metric refers to the CPU core’s dynamic clock speed; it is reported as a unitless ratio of the average core frequency to the nominal (base) frequency. Figure 5 shows the core CPU clock speed.

Figure 5.

CPU frequency for (a) 100 runs and (b) 1000 runs.

Figure 5a shows the frequency for the 100-run case. Across the 100-run experiments, the frequency traces cluster around a common baseline with brief excursions: RtL shows occasional spikes, PPE shows a mix of shallow dips and short boosts, and PSA maintains a consistent frequency with a few isolated outliers early in the run. For the 1000-run experiment in Figure 5b, all designs operate around a similar baseline frequency with short excursions. RtL shows occasional tall spikes, PPE exhibits a few deeper dips alongside brief boosts, and PSA remains tightly clustered with a few outliers.

The average CPU frequencies were stable across all three implementations: RtL 0.423 (), PPE 0.425 (), and PSA 0.422 (). These small differences indicate that performance variations are not due to dynamic frequency scaling.

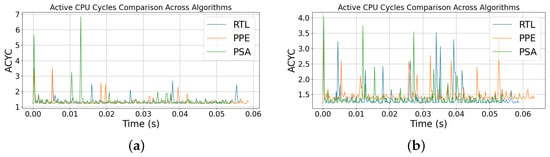

7.2. Active Cycle

Active cycle (ACYC) captures the number of active core cycles per sample. Figure 6 shows the per-sample active cycle. Figure 6a is for the 100-run case and Figure 6b is for the 1000-run case. In both figures and for the three algorithms used, the performance follows a narrow baseline with irregular, needle-like bursts. In the 100-run traces, RtL shows occasional mid-to-tall spikes, PPE exhibits more frequent low-to-mid spikes with a few shallow dips, and PSA remains tightly clustered near baseline with a few isolated high-amplitude bursts. In the 1000-run traces, the bursts are more dispersed and average out, reducing obvious run-to-run alignment.

Figure 6.

Active cycles for (a) 100 run and (b) 1000 run.

The IPC results show a clear distinction: RtL achieved a mean IPC of 0.726 (σ = 0.057), PPE averaged 0.681 (σ = 0.025), and PSA averaged 0.710 (σ = 0.051). The higher IPC of PSA compared to PPE is consistent with its branch-free structure and reduced instruction overhead.

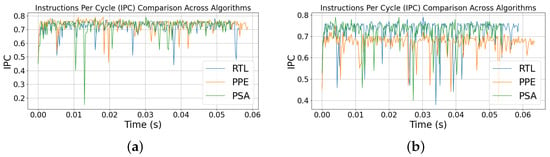

7.3. Instructions per Cycle (IPC)

Figure 7 shows the IPC for the 100 and 1000 runs of the three designs. In the 100-run case, IPC is high for all designs: PPE trends marginally higher and appears the most stable, RtL exhibits a handful of deeper troughs, and PSA closely tracks RtL. Considered together with core CPU frequency, these traces show that the randomized permutation strategies do not degrade short-horizon efficiency; PPE even benefits slightly at this scale. ACYC traces reflect the density of active core cycles per sample: brief peaks mark short compute bursts, while the baseline shows steady progress. In the 100-run experiment, all designs share a narrow baseline with scattered bursts. RtL and PPE exhibit occasional moderate spikes, whereas PSA produces a small number of tall, isolated bursts early and is smoother thereafter. Overall, the randomized schedules smear activity in time relative to RtL, reducing repeatable burst positions.

Figure 7.

IPC for (a) 100 runs and (b) 1000 runs.

For the 1000-run setting in Figure 7b, IPC remains high and stable for RtL and PSA, indicating consistently efficient cycle use, while PPE shows more frequent downward excursions that reflect brief stalls or less favourable scheduling windows. Taken together, PSA maintains RtL-class efficiency, whereas PPE trades some sustained efficiency for randomized ordering.

Over the longer window, the ACYC pattern described above persists and becomes more apparent. PPE shows dispersed bursts across the run, RtL presents more periodic moderate spikes, and PSA settles into a relatively uniform baseline after a brief early section with isolated peaks. The burst distribution in PPE and PSA is less periodic than in RtL, consistent with reduced time alignment under randomized ordering.

IPC values also highlight PSA’s balanced performance: RtL averaged 0.726 (σ = 0.057), PPE averaged 0.681 (σ = 0.025), and PSA averaged 0.710 (σ = 0.051). PSA’s higher IPC relative to PPE is consistent with its branch-free structure, which avoids branch mispredictions and the associated pipeline stalls.

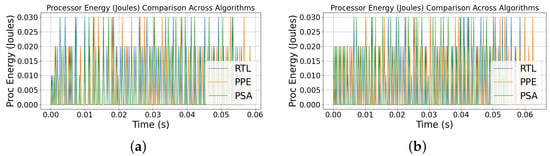

7.4. Energy

Figure 8 shows the processor energy while executing the algorithms. In Figure 8a,b, the System trace mostly sits above the Processor trace because it includes non-CPU components, but both follow the same trend. The staircase shape reflects cumulative energy, so we compare the final accumulated energy over the run rather than interpreting the height or timing of individual steps. In the 100-run experiments, PSA shows the sparsest staircase and the lowest final total, RtL is intermediate, and PPE is slightly higher, with denser increments around the middle of the run. In the 1000-run experiments, the same ordering persists and separates more clearly: PSA remains the most frugal, RtL stays in the middle, and PPE accumulates more toward the tail. Short vertical pulses reflect counter updates, not instantaneous bursts.

Figure 8.

Processor energy during the operation of the three algorithms. (a) 100-run case. (b) 1000-run case.

The mean energy values favour PSA: RtL consumed 0.0240 J (σ = 0.0050 J), PPE consumed 0.0229 J (σ = 0.0061 J), and PSA consumed 0.0218 J (σ = 0.0072 J). On average, PSA therefore uses 9.2% less energy than RtL, although the run-to-run standard deviations are larger than the differences between the means.
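The 9.2% figure follows directly from the reported means. The short Python check below recomputes it; the dictionary and the helper name `reduction_pct` are illustrative only, and the calculation uses the means alone, ignoring the reported spread.

```python
# Mean energy per run as reported above, in joules
mean_energy_j = {"RtL": 0.0240, "PPE": 0.0229, "PSA": 0.0218}


def reduction_pct(baseline, variant, table=mean_energy_j):
    """Relative energy reduction of `variant` versus `baseline`, in percent."""
    return 100.0 * (table[baseline] - table[variant]) / table[baseline]


print(f"PSA vs RtL: {reduction_pct('RtL', 'PSA'):.1f}%")  # PSA vs RtL: 9.2%
print(f"PPE vs RtL: {reduction_pct('RtL', 'PPE'):.1f}%")  # PPE vs RtL: 4.6%
```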

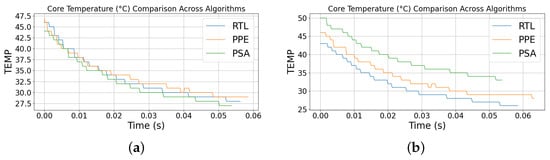

7.5. Temperature

Figure 9 shows the core temperature during the runs. Figure 9a shows the 100-run experiment, in which all traces show rapid, stair-stepped cooling. Starting temperatures differ slightly (PPE starts warmer), so absolute endpoints are not comparable; the consistent downward trend across designs is the reliable indicator. At this brief timescale, the thermal curves mainly serve as a stability check and are consistent with the energy and power observations.

Figure 9.

CPU temperature for (a) 100 runs and (b) 1000 runs.

Figure 9b shows the 1000-run experiment. All curves descend in a stair-step pattern that reflects sensor update granularity and net cooling over the window. PSA begins warmer and therefore remains at a higher absolute temperature despite a strong downward trend; PPE and RtL start cooler and finish cooler. Because the starting points differ, the reliable indicators are the direction and steepness of the decline (net drop and slope), which align with the energy and power rankings.

7.6. Resistance to Side-Channel Attacks

We analyze the leakage-relevant structure apparent across the preceding traces, focusing on temporal alignment. For the shorter traces (100 runs), PPE and PSA de-correlate peak positions relative to RtL and reduce the occurrence of prominent bursts. The effect is visible but naturally less pronounced than in the extended run, because fewer windows are available to expose drift. Even so, the randomized schedules already disrupt position-dependent structure at this scale.

For the 1000-run experiment, overlays of the activity counters indicate that both randomized designs break the stable time alignment visible in RtL. PPE shows frequent local de-synchronization and suppresses large, repeatable bursts; PSA likewise de-aligns peak positions while maintaining steady throughput. In this setting, timing-based cues are weakened without introducing memory-system side effects, which makes temporal alignment the primary channel of interest; at the PCM sampling resolution used here, this channel appears to be effectively mitigated.

7.7. Cache Behavior

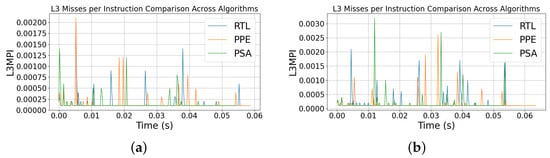

We quantify L3MPI (L3 misses per instruction) and L3MISS (raw L3 misses) per PCM sample, using a 0.25 ms sampling interval. The y-axis for L3MPI is misses per instruction (unitless), while for L3MISS it is the number of last-level cache misses per sample in millions, so a fractional value such as 0.02 means 0.02 M = 20,000 misses in that interval. Figure 10 shows the L3 misses per instruction for both the 100-run and 1000-run experiments.

Figure 10.

L3 misses per instruction for (a) 100 runs and (b) 1000 runs.

Both panels of Figure 10 maintain near-flat baselines with only sparse, short-lived spikes that do not align across RtL, PPE, and PSA. This indicates a compute-bound workload, whose throughput is limited by core execution rather than by memory activity.

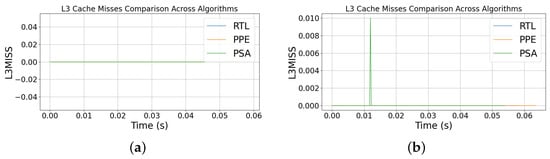

Figure 11 shows the L3 cache misses for the three algorithms: Figure 11a for the 100-run case and Figure 11b for the 1000-run case. In both panels, the red and blue traces coincide with the green trace and are therefore hidden. There are also occasional narrow blips that neither persist nor synchronize across designs.

Figure 11.

L3 misses for (a) 100 runs and (b) 1000 runs.

Cache behavior was flat across all three algorithms. The mean L3 miss rates were effectively zero for RtL and PPE and negligible for PSA (0.0001 ± 0.0008). This indicates that, at the sampling granularity used here, cache activity is not a dominant leakage source in our experiments. Instead, side-channel risk is driven primarily by temporal alignment, which underscores the value of PSA’s schedule-level randomization.

8. Leakage Assessment Using TVLA

To evaluate whether the implementations leak exploitable information, we adopt the Test Vector Leakage Assessment (TVLA) methodology. TVLA employs Welch’s t-test to statistically compare two groups of side-channel traces that differ only in secret-dependent behavior. The t-statistic is defined as follows:

$$ t = \frac{\mu_0 - \mu_1}{\sqrt{\dfrac{\sigma_0^2}{N_0} + \dfrac{\sigma_1^2}{N_1}}} $$

where $\mu_0$ and $\mu_1$ are the mean values of the two groups, $\sigma_0^2$ and $\sigma_1^2$ are their variances, and $N_0$ and $N_1$ are the numbers of traces in each group. If $|t|$ exceeds the threshold of 4.5, the two distributions are deemed statistically distinguishable and leakage is considered present; otherwise, no significant leakage is detected. TVLA therefore provides a rigorous, implementation-independent means of determining whether side-channel leakage is observable.
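Welch’s statistic and the 4.5 decision rule are straightforward to compute. The following minimal Python sketch uses only the standard library; the names `welch_t` and `tvla_leaks` are illustrative and not part of any TVLA tool, and the two sample groups are made-up numbers chosen only to exercise the code.

```python
import math
from statistics import mean, variance

TVLA_THRESHOLD = 4.5  # conventional pass/fail bound on |t|


def welch_t(group0, group1):
    """Welch's t-statistic for two trace groups with possibly unequal variances."""
    n0, n1 = len(group0), len(group1)
    if n0 < 2 or n1 < 2:
        raise ValueError("each group needs at least two samples")
    # statistics.variance is the unbiased sample variance (divides by n - 1)
    std_err = math.sqrt(variance(group0) / n0 + variance(group1) / n1)
    if std_err == 0.0:
        raise ValueError("both groups are constant; t is undefined")
    return (mean(group0) - mean(group1)) / std_err


def tvla_leaks(group0, group1, threshold=TVLA_THRESHOLD):
    """True if the two groups are distinguishable at the TVLA threshold."""
    return abs(welch_t(group0, group1)) > threshold


# Synthetic per-trace values (e.g. cycle counts in arbitrary units)
fast = [10.0, 10.2, 9.9, 10.1]
slow = [12.0, 12.1, 11.9, 12.2]
print(round(welch_t(fast, slow), 1))  # -21.9
print(tvla_leaks(fast, slow))         # True
print(tvla_leaks(fast, fast))         # False
```

In practice each group would hold thousands of measured traces, and the test is applied sample by sample along the trace.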

8.1. Right-to-Left (RtL)

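Applying TVLA to the baseline right-to-left (RtL) modular exponentiation reveals strong leakage. In each iteration, the algorithm always performs a squaring, whereas the multiplication is conditional on the exponent bit being 1. This leads to systematic differences between the traces associated with 0-bits and 1-bits. For iteration $i$ with exponent bit $b_i$, the leakage model can be written as follows:

$$ L_i = C_S + b_i\,C_M + \varepsilon_i $$

where $C_S$ is the mean cost of a squaring, $C_M$ is the additional mean cost of a multiplication, and $\varepsilon_i$ represents measurement noise with variance $\sigma_\varepsilon^2$. The 0-bit group therefore has mean $C_S$ and the 1-bit group has mean $C_S + C_M$. Substituting these into the t-statistic gives the following:

$$ t = \frac{C_S - (C_S + C_M)}{\sqrt{\dfrac{\sigma_\varepsilon^2}{N_0} + \dfrac{\sigma_\varepsilon^2}{N_1}}} = \frac{-C_M}{\sigma_\varepsilon\sqrt{\dfrac{1}{N_0} + \dfrac{1}{N_1}}} $$

Since $C_M$ is nonzero, the numerator does not vanish and $|t|$ grows with the number of traces, so $|t| > 4.5$ once enough traces are collected. Hence, the RtL algorithm fails TVLA: its execution traces reveal per-bit information exploitable by timing and power-based attacks.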
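To make the per-bit leakage concrete, the Python sketch below implements textbook right-to-left square-and-multiply and records, for each exponent bit, which operations ran. The operation log (and the function name `rtl_modexp_with_trace`) is a hypothetical stand-in for what a timing or power trace can distinguish per iteration; it is not the authors’ instrumentation.

```python
def rtl_modexp_with_trace(base, exponent, modulus):
    """Right-to-left binary modular exponentiation.

    Besides the result, returns an operation log with one entry per exponent
    bit (least significant first): "MS" when a multiply and a square occur,
    "S" when only the square occurs.
    """
    if exponent < 0 or modulus < 1:
        raise ValueError("expects exponent >= 0 and modulus >= 1")
    result = 1 % modulus
    power = base % modulus  # holds base^(2^i) mod modulus in iteration i
    ops = []
    e = exponent
    while e:
        if e & 1:  # secret-dependent branch: multiply only on a 1-bit
            result = (result * power) % modulus
            ops.append("MS")
        else:
            ops.append("S")
        power = (power * power) % modulus  # squaring happens every iteration
        e >>= 1
    return result, ops


result, ops = rtl_modexp_with_trace(7, 0b101101, 1009)
print(result == pow(7, 0b101101, 1009))  # True
print(ops)  # ['MS', 'S', 'MS', 'MS', 'S', 'MS']
# An observer who can tell "MS" from "S" recovers every exponent bit:
recovered = sum((op == "MS") << i for i, op in enumerate(ops))
print(recovered == 0b101101)  # True
```

The conditional multiply is exactly the term $b_i C_M$ in the leakage model: its presence or absence at a fixed iteration index identifies the bit at that position.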

8.2. Paired–Permutation Exponentiation (PPE)

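The Paired–Permutation Exponentiation (PPE) algorithm modifies the computation schedule by first precomputing all squaring operations and then applying a random permutation, drawn afresh before each run, jointly to the key bits and their corresponding precomputed values. This randomized ordering ensures that multiplications are no longer aligned with specific key-bit positions.

Let $\pi$ denote the permutation used in a given run, $\ell$ the bit length of the exponent $e$, and $\mathrm{HW}(e)$ its Hamming weight. At step $j$ of the multiplication phase, the processed bit is $b_{\pi(j)}$, so the leakage model becomes the following:

$$ L_j = b_{\pi(j)}\,C_M + \varepsilon_j $$

where $C_M$ is the mean cost of a multiplication and $\varepsilon_j$ represents measurement noise. Because $\pi$ is drawn uniformly at random, $\Pr[b_{\pi(j)} = 1] = \mathrm{HW}(e)/\ell$ at every step $j$, regardless of which bit position the assessor targets. The two TVLA groups therefore have overlapping distributions with equal means:

$$ \mu_0 = \mu_1 = \frac{\mathrm{HW}(e)}{\ell}\,C_M \quad\Longrightarrow\quad t \approx 0 $$

Thus, PPE passes TVLA for positional leakage. However, because this common mean depends on $\mathrm{HW}(e)$, a weak dependency on the total number of 1-bits (the Hamming weight) can remain observable in aggregate runtime or performance-counter measurements.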
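A minimal Python sketch of the PPE schedule, based on our reading of the description above, follows. It assumes plain Python integers for the arithmetic and `secrets.SystemRandom` as the permutation source; the name `ppe_modexp` is hypothetical, this is not the authors’ code, and interpreted Python is not itself hardened against timing attacks. It shows that permuting the (bit, power) pairs jointly preserves the result, because modular multiplication is commutative.

```python
import secrets


def ppe_modexp(base, exponent, modulus):
    """Sketch of Paired Permutation Exponentiation (PPE).

    1. Precompute the key-independent squaring chain base^(2^i) mod modulus.
    2. Pair each exponent bit with its precomputed power and shuffle the
       pairs with a fresh random permutation on every call.
    3. Multiply together the powers whose paired bit is 1.
    """
    if exponent < 0 or modulus < 1:
        raise ValueError("expects exponent >= 0 and modulus >= 1")
    nbits = exponent.bit_length()
    powers = []
    p = base % modulus
    for _ in range(nbits):
        powers.append(p)
        p = (p * p) % modulus
    bits = [(exponent >> i) & 1 for i in range(nbits)]

    pairs = list(zip(bits, powers))  # bits and powers are permuted together
    secrets.SystemRandom().shuffle(pairs)

    result = 1 % modulus
    for bit, power in pairs:
        if bit:  # the branch remains, but its position in time is randomized
            result = (result * power) % modulus
    return result


m = 2**127 - 1  # a Mersenne prime, used here only as a test modulus
for b, e in [(7, 45), (3, 2**100 + 12345), (123456789, 987654321)]:
    assert ppe_modexp(b, e, m) == pow(b, e, m)
```

The loop still visits all $\ell$ pairs and still branches on each bit, so the total work and the number of multiplications, $\mathrm{HW}(e)$, are unchanged; only their positions in time are randomized.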

8.3. Permute, Split, and Accumulate (PSA)

The Permute, Split, and Accumulate (PSA) design extends this concept further by not only permuting the order of operations but also eliminating branching in the accumulation stage. After permutation, the exponent bits are split into two lists: one containing indices of 0-bits and another containing indices of 1-bits. Only the 1-bit list is processed in the accumulation loop, which is fully branchless.

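To assess the security of Algorithm 5 (PSA), we note that each trace consists of two distinct segments. The samples covering time instances 0 to $m-1$ all perform squaring operations only, and the samples from time instance $m$ onward all perform multiplication operations only. Writing $C_S$ and $C_M$ for the mean costs of a squaring and a multiplication, the statistical averages of the two TVLA groups at time instance $\tau$ are therefore as follows:

$$ \mu_0(\tau) = \mu_1(\tau) = \begin{cases} C_S, & 0 \le \tau < m \\ C_M, & \tau \ge m \end{cases} $$

The t-score of each sample is therefore estimated as follows:

$$ t(\tau) = \frac{\mu_0(\tau) - \mu_1(\tau)}{\sqrt{\dfrac{\sigma_0^2}{N_0} + \dfrac{\sigma_1^2}{N_1}}} \approx 0 $$

This score is below any threshold that the algorithm assessor might choose.

For the trace as a whole, the numbers of squarings $N_S$ and multiplications $N_M$ are given by the following, where $\mathrm{HW}(e)$ is the Hamming weight of the exponent $e$:

$$ N_S = m, \qquad N_M = \mathrm{HW}(e) $$

For two groups of keys with the same Hamming weight, both groups perform the same numbers of operations, and the t-score for the whole trace is as follows:

$$ t \approx 0 $$

This score is likewise below any threshold that the algorithm assessor might choose, which supports the security of the proposed Algorithm 5 (PSA) against positional leakage.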
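The two-segment structure analysed above can be sketched in Python as follows. This is an illustration under our own assumptions, not the authors’ Algorithm 5: the name `psa_modexp` is hypothetical, `secrets.SystemRandom` supplies the permutation, and selecting the destination list by indexing with the bit value is branch-free only at the source level (CPython list operations are not constant-time).

```python
import secrets


def psa_modexp(base, exponent, modulus):
    """Sketch of Permute, Split, and Accumulate (PSA).

    The squaring segment is key-independent; the accumulation segment is a
    multiply-only loop whose length equals the Hamming weight of the exponent.
    """
    if exponent < 0 or modulus < 1:
        raise ValueError("expects exponent >= 0 and modulus >= 1")
    nbits = exponent.bit_length()

    # Squaring segment: base^(2^i) mod modulus for every bit position i
    powers = []
    p = base % modulus
    for _ in range(nbits):
        powers.append(p)
        p = (p * p) % modulus

    # Permute: a fresh random processing order of the bit indices
    order = list(range(nbits))
    secrets.SystemRandom().shuffle(order)

    # Split: the bit value selects the destination list by indexing
    # (0 -> zeros, 1 -> ones) instead of an if/else
    zeros, ones = [], []
    lists = (zeros, ones)
    for i in order:
        lists[(exponent >> i) & 1].append(i)

    # Accumulate: no branch inside the loop; only 1-bit indices are visited
    result = 1 % modulus
    for i in ones:
        result = (result * powers[i]) % modulus
    return result


m = 2**127 - 1  # test modulus only
for b, e in [(7, 45), (3, 2**100 + 12345), (123456789, 987654321)]:
    assert psa_modexp(b, e, m) == pow(b, e, m)
```

The accumulation loop runs exactly $\mathrm{HW}(e)$ times, which is why the whole-trace argument holds only between keys of equal Hamming weight.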

Table 4 summarizes the results of applying TVLA to the three RSA exponentiation algorithms. RtL fails decisively due to per-bit conditional leakage. PPE removes positional leakage but may reveal the aggregate Hamming weight. PSA additionally removes the inner-loop branch and yields per-sample means that are independent of key-bit positions, offering the most robust protection of the three, although its multiplication count still equals the Hamming weight of the exponent. In conclusion, the TVLA analysis indicates that schedule randomization and branch elimination are effective design strategies for preventing positional side-channel leakage in RSA modular exponentiation.

Table 4.

TVLA outcomes for RSA algorithms.
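The positional argument of Section 8 can be illustrated with a toy Monte Carlo experiment. The sketch below is not a measurement and does not reproduce Table 4: it assumes unit operation costs, Gaussian noise, 64-bit keys of fixed Hamming weight, and a single targeted time step, and all function and parameter names are hypothetical. Under these assumptions RtL should exceed the 4.5 threshold by a wide margin, whereas the PPE and PSA samples should stay near $t \approx 0$. Fixing the Hamming weight is deliberate, since it isolates positional leakage from the aggregate Hamming-weight dependency discussed above.

```python
import math
import random
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t-statistic (unequal variances)."""
    return (mean(a) - mean(b)) / math.sqrt(variance(a) / len(a) + variance(b) / len(b))


def simulate_t(schedule, n_traces=2000, nbits=64, weight=32, target=5,
               c_s=1.0, c_m=1.0, noise=0.5, seed=1):
    """Toy TVLA at time step `target`, grouping traces by key bit `target`.

    Keys are random with a fixed Hamming weight `weight`, so only positional
    leakage is tested. All costs and noise levels are synthetic.
    """
    rng = random.Random(seed)
    groups = ([], [])
    for _ in range(n_traces):
        ones = set(rng.sample(range(nbits), weight))  # random key, fixed HW
        bit = 1 if target in ones else 0
        if schedule == "rtl":
            # step `target` processes key bit `target`: square, plus multiply if 1
            sample = c_s + bit * c_m
        elif schedule == "ppe":
            # multiply phase: under a uniformly random permutation, the key bit
            # handled at step `target` comes from a uniformly random position
            sample = c_m if rng.randrange(nbits) in ones else 0.0
        elif schedule == "psa":
            # step `target` < nbits lies in the key-independent squaring segment
            sample = c_s
        else:
            raise ValueError(f"unknown schedule: {schedule}")
        groups[bit].append(sample + rng.gauss(0.0, noise))
    return welch_t(groups[0], groups[1])


for name in ("rtl", "ppe", "psa"):
    t = simulate_t(name)
    print(f"{name}: t = {t:7.2f}  leaks = {abs(t) > 4.5}")
```

Letting `weight` vary across keys would reintroduce a Hamming-weight-dependent mean for PPE and a variable trace length for PSA, which is the residual channel acknowledged in the text.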

9. Conclusions

We presented two schedule-level variants of RSA modular exponentiation that preserve arithmetic correctness while eliminating the time alignment between observable activity and secret bit positions. PPE randomizes the processing order of the bit-conditioned products, breaking positional correlation without changing the total work; PSA further removes the inner-loop branch and skips zero bits, yielding a shorter, branch-free multiply-only loop whose length equals the Hamming weight of the exponent. On our pinned-core platform with PCM sampling at 0.25 ms, both approaches eliminated the stable bit-position structure visible in RtL traces, and PSA delivered the best efficiency and the smoothest counter profiles of the three. We verified functional correctness against Python’s pow on fixed 2048-bit inputs and evaluated the designs over 100 and 1000 randomized runs. Randomizing the processing order hides bit positions but may reveal the total number of 1-bits; while this alone is unlikely to enable key recovery, constant-work padding or ladder execution can eliminate it where the threat model requires. Future work includes integrating Montgomery reduction and CRT recombination, exploring parallel accumulation in PSA, and applying formal leakage metrics such as Welch’s t-test to measured traces across broader hardware and sampling designs.

Theoretical implications: The results demonstrate that schedule-level randomization effectively disrupts the temporal alignment between key bits and execution traces, thereby reducing the attacker’s ability to correlate operations with secret data. However, residual Hamming-weight leakage may remain observable under high-resolution conditions.

Practical implications: Due to its low computational overhead and branch-free structure, PSA provides a lightweight countermeasure suitable for deployment in embedded or resource-constrained environments. Unlike constant-time methods that double the number of operations, PSA balances efficiency and leakage resistance, offering a practical alternative for performance-critical systems.

In real-world contexts, the proposed PSA and PPE approaches can be applied to strengthen the security of cryptographic operations in smart cards, IoT devices, and hardware security modules where timing and power analysis pose practical risks. Their lightweight nature also makes them suitable for cloud-based cryptographic services, secure boot mechanisms, and embedded processors in automotive and medical systems, where maintaining both performance and resistance to side-channel leakage is critical.

Author Contributions

F.G.: theory, modelling, writing, numerical simulations, and editing; A.M.: writing, modelling, simulation, implementation, Python programming, and results. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ACYC | Active CPU Cycles |
| ALU | Arithmetic Logic Unit |
| CPA | Correlation Power Analysis (side-channel) |
| CPU | Central Processing Unit |
| CSV | Comma-Separated Values |
| DPA | Differential Power Analysis (side-channel) |
| ECC | Elliptic Curve Cryptography |
| GPU | Graphics Processing Unit |
| HW | Hamming Weight |
| IDE | Integrated Development Environment |
| INST | Instructions Retired (used in Intel’s PCM) |
| IoT | Internet of Things |
| IPC | Instructions per Cycle |
| LSB | Least Significant Bit |
| LtR | Left-to-Right algorithm |
| L3MPI | Level 3 Cache Misses per Instruction |
| L3MISS | Raw Level 3 Cache Misses |
| L3 Cache | Level 3 Cache |
| MSB | Most Significant Bit |
| NAF | Non-Adjacent Form |
| NIST | National Institute of Standards and Technology |
| OS | Operating System |
| PCM | Performance Counter Monitor |
| PKI | Public Key Infrastructure |
| RAPL | Running Average Power Limit (used in Intel’s PCM) |
| RSA | Rivest-Shamir-Adleman |
| RtL | Right-to-Left algorithm |
| SCA | Side-Channel Attack |
| SPA | Simple Power Analysis (side-channel) |
| TVLA | Test Vector Leakage Assessment |

References

1. Qiang, L. Research on Performance Optimization and Resource Allocation Strategy of Network Node Encryption Based on RSA Algorithm. J. Cyber Secur. Mobil. 2025, 14, 101–125.

2. Kolagatla, V.R.; Raveendran, A.; Desalphine, V. A Novel and Efficient SPI enabled RSA Crypto Accelerator for Real-Time applications. In Proceedings of the 2024 28th International Symposium on VLSI Design and Test (VDAT), Vellore, India, 1–3 September 2024.

3. Navarro-Torrero, P.; Camacho-Ruiz, E.; Martinez-Rodriguez, M.C.; Brox, P. Design of a Karatsuba Multiplier to Accelerate Digital Signature Schemes on Embedded Systems. In Proceedings of the 2024 IEEE Nordic Circuits and Systems Conference (NorCAS), Lund, Sweden, 29–30 October 2024.

4. Ibrahim, A.; Gebali, F. Symmetry-enabled resource-efficient systolic array design for Montgomery multiplication in resource-constrained MIoT endpoints. Symmetry 2024, 16, 715.

5. Ibrahim, A.; Gebali, F. Enhancing Security and Efficiency in IoT Assistive Technologies: A Novel Hybrid Systolic Array Multiplier for Cryptographic Algorithms. Appl. Sci. 2025, 15, 2660.

6. Cormen, T.H.; Leiserson, C.E.; Rivest, R.L.; Stein, C. Introduction to Algorithms, 3rd ed.; MIT Press and McGraw-Hill: Cambridge, MA, USA, 2009.

7. Menezes, A.J.; Van Oorschot, P.C.; Vanstone, S.A. Handbook of Applied Cryptography; CRC Press: Boca Raton, FL, USA, 2018.

8. Kocher, P.C. Timing attacks on implementations of Diffie-Hellman, RSA, DSS, and other systems. In Proceedings of the Advances in Cryptology: CRYPTO’96: 16th Annual International Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 1996; Proceedings 16. Springer: Berlin/Heidelberg, Germany, 1996; pp. 104–113.

9. Lou, X.; Zhang, T.; Jiang, J.; Zhang, Y. A survey of microarchitectural side-channel vulnerabilities, attacks, and defenses in cryptography. ACM Comput. Surv. (CSUR) 2021, 54, 1–37.

10. Liang, Y.; Bai, G. A randomized window-scanning RSA scheme resistant to power analysis. In Proceedings of the 2014 IEEE/ACIS 13th International Conference on Computer and Information Science (ICIS), Taiyuan, China, 4–6 June 2014.

11. Yin, X.; Wu, K.; Li, H.; Xu, G. A randomized binary modular exponentiation based RSA algorithm against the comparative power analysis. In Proceedings of the 2012 IEEE International Conference on Intelligent Control, Automatic Detection and High-End Equipment, Beijing, China, 27–29 July 2012.

12. Luo, C.; Fei, Y.; Kaeli, D. GPU Acceleration of RSA is Vulnerable to Side-channel Timing Attacks. In Proceedings of the 2018 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Diego, CA, USA, 5–8 November 2018.

13. Ors, K.A.B.B. Differential Power Analysis resistant hardware implementation of the RSA cryptosystem. In Proceedings of the 2008 IEEE International Symposium on Circuits and Systems (ISCAS), Seattle, WA, USA, 18–21 May 2008.

14. Gulen, U.; Baktir, S. Side-Channel Resistant 2048-Bit RSA Implementation for Wireless Sensor Networks and Internet of Things. IEEE Access 2023, 11, 39531–39543.

15. Möller, B. Improved techniques for fast exponentiation. In Proceedings of the International Conference on Information Security and Cryptology, Seoul, Republic of Korea, 28–29 November 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 298–312.

16. Möller, B. Sliding Window Exponentiation. In Encyclopedia of Cryptography, Security and Privacy; Jajodia, S., Samarati, P., Yung, M., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2025; pp. 2443–2445.

17. Joye, M. Highly regular m-ary powering ladders. In Proceedings of the Selected Areas in Cryptography: 16th Annual International Workshop, SAC 2009, Calgary, AB, Canada, 13–14 August 2009; Revised Selected Papers 16. Springer: Berlin/Heidelberg, Germany, 2009; pp. 350–363.

18. Astriratma, R. A Study of Known Vulnerabilities and Exploit Patterns in Blockchain Smart Contracts. J. Curr. Res. Blockchain 2025, 2, 169–179.

19. Mendoza, C.P.T.; Tubice, N.G. Analyzing Historical Trends and Predicting Market Sentiment in Digital Currency Using Time Series Decomposition and ARIMA Models on Crypto Fear and Greed Index Data. J. Digit. Mark. Digit. Curr. 2025, 2, 270–297.

20. Intel Corporation. Intel/PCM. Available online: https://github.com/intel/pcm (accessed on 16 October 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).