DDoS Attacks Detection in SDN Through Network Traffic Feature Selection and Machine Learning Models

Abstract

1. Introduction

2. Methodology

2.1. Phase 1: Background

2.1.1. Theoretical Framework—SDNs and DDoS

2.1.2. Literature Review

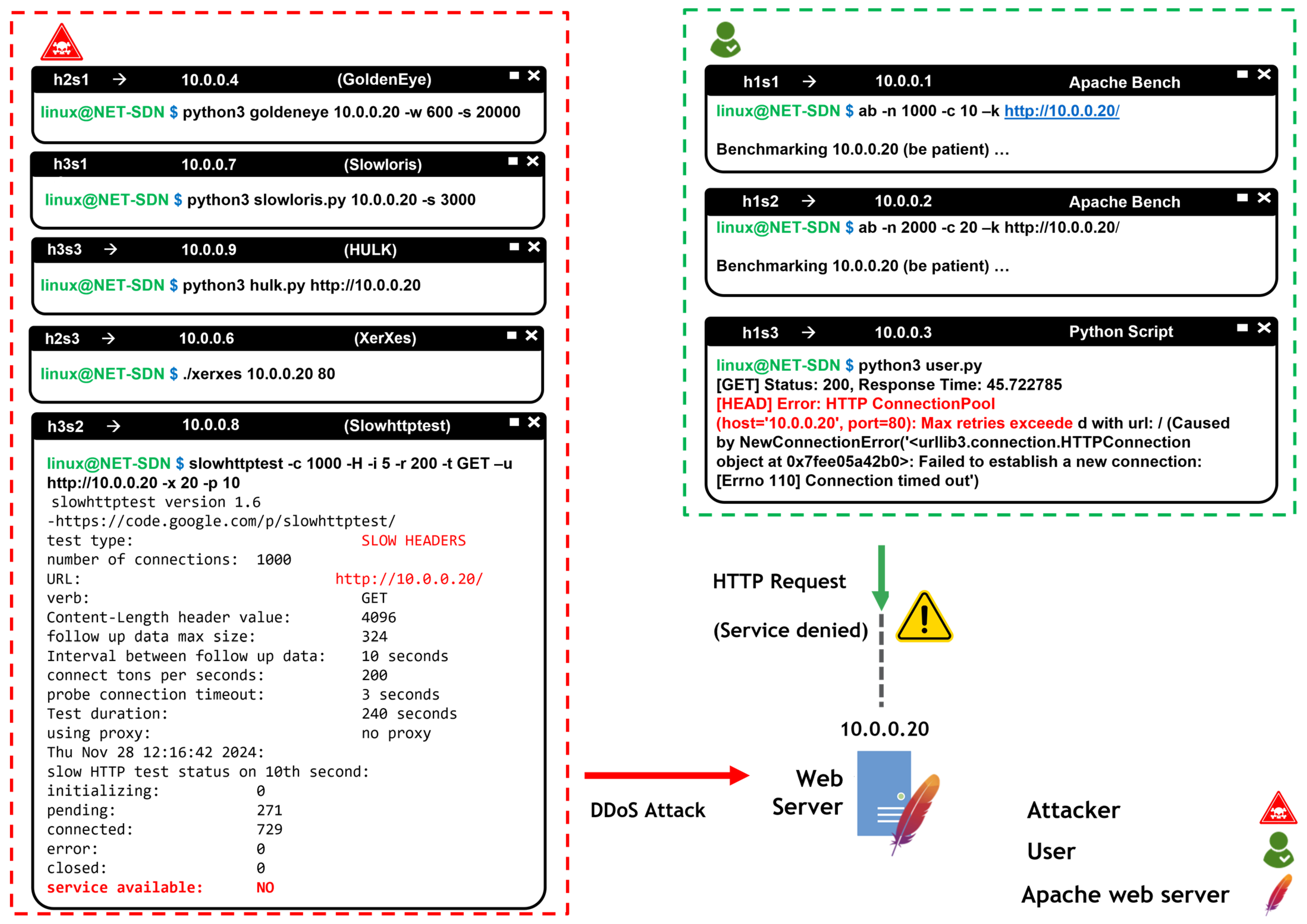

2.2. Phase 2: Scenario Design

Attack Execution

- -c 500: Specifies the number of concurrent connections.

- -H: Sets the test mode to slow headers.

- -i 10: Interval time in seconds between HTTP headers sent.

- -r 100: Number of connections attempted per second.

- -t GET: Type of HTTP request.

- -u: URL or IP address of the target server.

- -x 10: Maximum length in bytes of the follow-up data sent on each interval.

- -p 5: Timeout in seconds to wait for an HTTP response on the probe connection before the server is considered unavailable.
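The options listed above can be assembled into a single argument vector. The following is a minimal sketch for an isolated lab testbed only; the function name and its keyword names are hypothetical, and the target URL is the lab address that appears in the scenario table. The sketch only builds and prints the command and does not execute it.

```python
import shlex


def build_slowhttptest_argv(url, connections=500, interval_s=10, rate=100,
                            follow_up_max_len=10, probe_timeout_s=5):
    """Assemble (but do not run) a slow-headers slowhttptest command line."""
    return [
        "slowhttptest",
        "-c", str(connections),        # number of connections
        "-H",                          # slow headers mode
        "-i", str(interval_s),         # seconds between follow-up headers
        "-r", str(rate),               # connections attempted per second
        "-t", "GET",                   # HTTP verb
        "-u", url,                     # target URL (lab address, assumption)
        "-x", str(follow_up_max_len),  # value passed to -x
        "-p", str(probe_timeout_s),    # value passed to -p
    ]


argv = build_slowhttptest_argv("http://10.0.0.20")
print(shlex.join(argv))
```

Keeping the command as a list rather than a formatted string avoids shell-quoting mistakes if the list is later handed to a process launcher.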

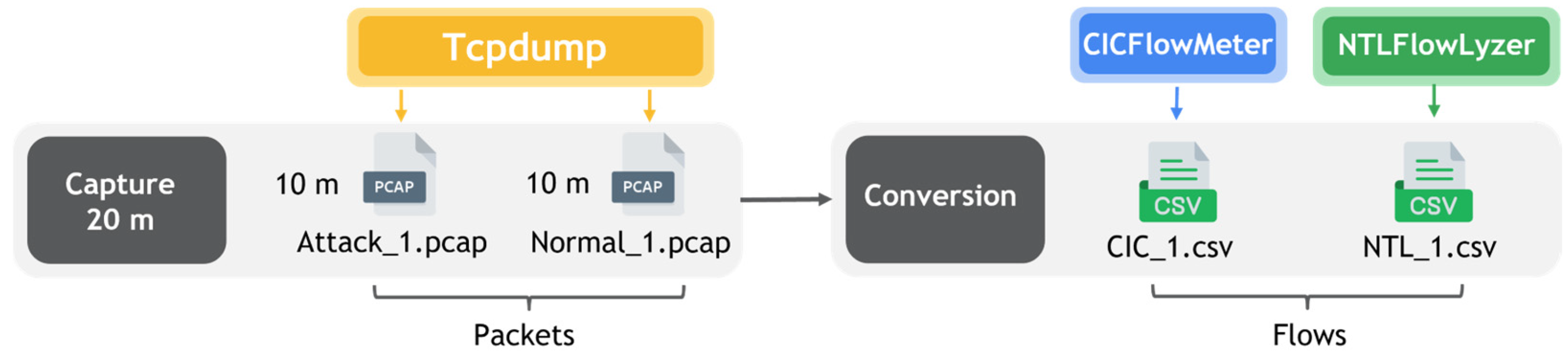

2.3. Phase 3: Dataset Construction

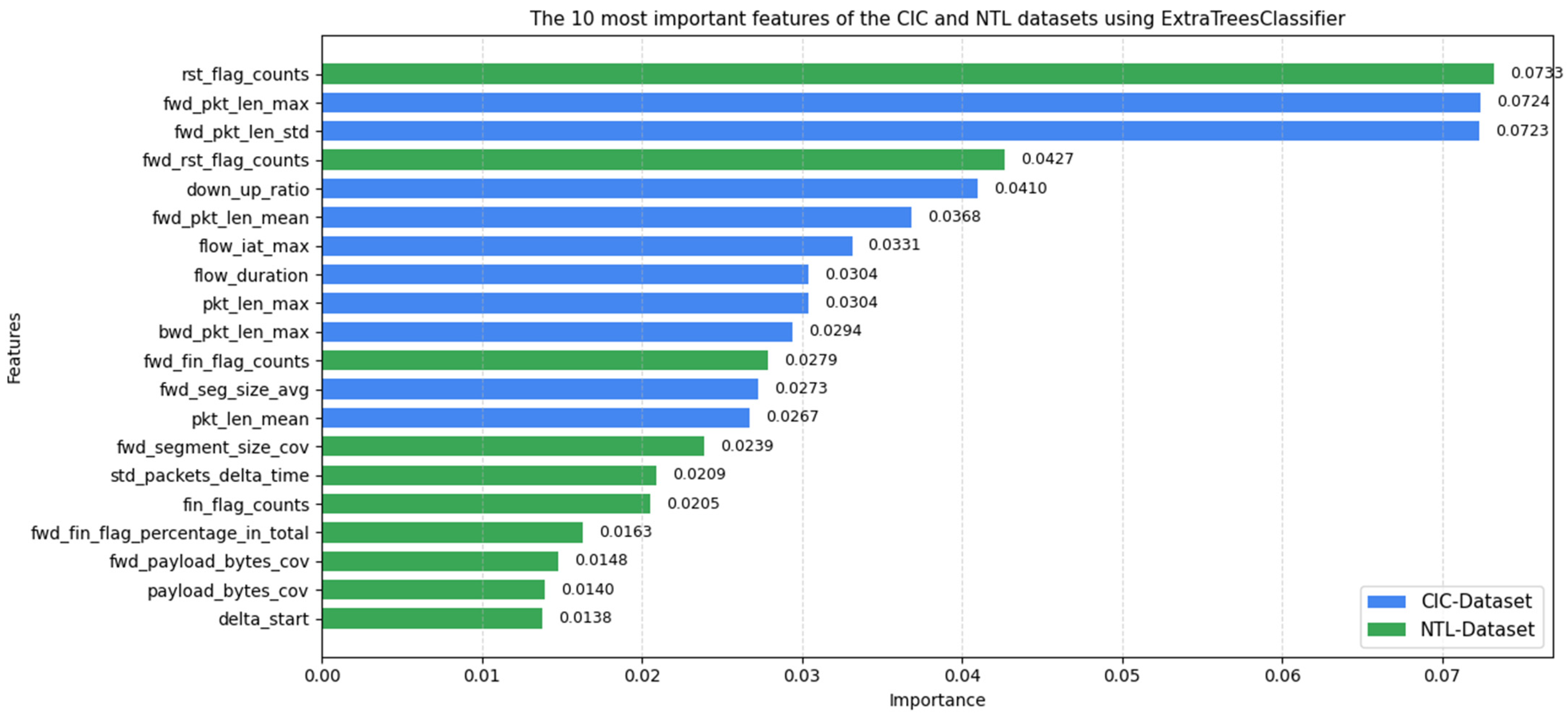

Model Selection and Training

3. Results

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Mageswari, R.U.; N, Z.A.K.; M, G.A.M.; S, J.N.K. Addressing Security Challenges in Industry 4.0: AVA-MA Approach for Strengthening SDN-IoT Network Security. Comput. Secur. 2024, 144, 103907.
2. Eghbali, Z.; Lighvan, M.Z. A Hierarchical Approach for Accelerating IoT Data Management Process Based on SDN Principles. J. Netw. Comput. Appl. 2021, 181, 103027.
3. Islam, M.T.; Islam, N.; Refat, M. Al. Node to Node Performance Evaluation through RYU SDN Controller. Wirel. Pers. Commun. 2020, 112, 555–570.
4. Khorsandroo, S.; Sánchez, A.G.; Tosun, A.S.; Arco, J.M.; Doriguzzi-Corin, R. Hybrid SDN Evolution: A Comprehensive Survey of the State-of-the-Art. Comput. Netw. 2021, 192, 107981.
5. Ali, O.M.A.; Hamaamin, R.A.; Youns, B.J.; Kareem, S.W. Innovative Machine Learning Strategies for DDoS Detection: A Review. UHD J. Sci. Technol. 2024, 8, 38–49.
6. Inchara, S.; Keerthana, D.; Babu, K.N.R.M.; Mabel, J.P. Detection and Mitigation of Slow DoS Attacks Using Machine Learning. In Recent Advances in Industry 4.0 Technologies, Proceedings of the AIP Conference Proceedings, Karaikal, India, 14–16 September 2022; American Institute of Physics Inc.: College Park, MD, USA, 2023; Volume 2917.
7. Alashhab, A.A.; Zahid, M.S.; Isyaku, B.; Elnour, A.A.; Nagmeldin, W.; Abdelmaboud, A.; Abdullah, T.A.A.; Maiwada, U.D. Enhancing DDoS Attack Detection and Mitigation in SDN Using an Ensemble Online Machine Learning Model. IEEE Access 2024, 12, 51630–51649.
8. Ahuja, N.; Mukhopadhyay, D.; Singal, G. DDoS Attack Traffic Classification in SDN Using Deep Learning. Pers. Ubiquitous Comput. 2024, 28, 417–429.
9. Alghoson, E.S.; Abbass, O. Detecting Distributed Denial of Service Attacks Using Machine Learning Models. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 0121277.
10. Al-Dunainawi, Y.; Al-Kaseem, B.R.; Al-Raweshidy, H.S. Optimized Artificial Intelligence Model for DDoS Detection in SDN Environment. IEEE Access 2023, 11, 106733–106748.
11. Akhtar, M.S.; Feng, T. Deep Learning-Based Framework for the Detection of Cyberattack Using Feature Engineering. Secur. Commun. Netw. 2021, 2021, 6129210.
12. Srinivasa Rao, G.; Santosh Kumar Patra, P.; Narayana, V.A.; Raji Reddy, A.; Vibhav Reddy, G.N.V.; Eshwar, D. DDoSNet: Detection and Prediction of DDoS Attacks from Realistic Multidimensional Dataset in IoT Network Environment. Egypt. Inform. J. 2024, 27, 100526.
13. Alabdulatif, A.; Thilakarathne, N.N.; Aashiq, M. Machine Learning Enabled Novel Real-Time IoT Targeted DoS/DDoS Cyber Attack Detection System. Comput. Mater. Contin. 2024, 80, 3655–3683.
14. Halladay, J.; Cullen, D.; Briner, N.; Warren, J.; Fye, K.; Basnet, R.; Bergen, J.; Doleck, T. Detection and Characterization of DDoS Attacks Using Time-Based Features. IEEE Access 2022, 10, 49794–49807.
15. Gebrye, H.; Wang, Y.; Li, F. Traffic Data Extraction and Labeling for Machine Learning Based Attack Detection in IoT Networks. Int. J. Mach. Learn. Cybern. 2023, 14, 2317–2332.
16. Hossain, M.A.; Islam, M.S. Enhancing DDoS Attack Detection with Hybrid Feature Selection and Ensemble-Based Classifier: A Promising Solution for Robust Cybersecurity. Meas. Sens. 2024, 32, 101037.
17. Aslam, N.; Srivastava, S.; Gore, M.M. DDoS SourceTracer: An Intelligent Application for DDoS Attack Mitigation in SDN. Comput. Electr. Eng. 2024, 117, 109282.
18. Wabi, A.A.; Idris, I.; Olaniyi, O.M.; Ojeniyi, J.A. DDOS Attack Detection in SDN: Method of Attacks, Detection Techniques, Challenges and Research Gaps. Comput. Secur. 2024, 139, 103652.
19. Sharafaldin, I.; Lashkari, A.H.; Hakak, S.; Ghorbani, A.A. Developing Realistic Distributed Denial of Service (DDoS) Attack Dataset and Taxonomy. In Proceedings of the International Carnahan Conference on Security Technology, Chennai, India, 1–3 October 2019.
20. Shafi, M.M.; Lashkari, A.H.; Roudsari, A.H. NTLFlowLyzer: Towards Generating an Intrusion Detection Dataset and Intruders Behavior Profiling through Network and Transport Layers Traffic Analysis and Pattern Extraction. Comput. Secur. 2025, 148, 104160.
21. Gupta, N.; Maashi, M.S.; Tanwar, S.; Badotra, S.; Aljebreen, M.; Bharany, S. A Comparative Study of Software Defined Networking Controllers Using Mininet. Electronics 2022, 11, 2715.
22. Correa Chica, J.C.; Imbachi, J.C.; Botero Vega, J.F. Security in SDN: A Comprehensive Survey. J. Netw. Comput. Appl. 2020, 159, 102595.
23. Hirsi, A.; Audah, L.; Salh, A.; Alhartomi, M.A.; Ahmed, S. Detecting DDoS Threats Using Supervised Machine Learning for Traffic Classification in Software Defined Networking. IEEE Access 2024, 12, 166675–166702.
24. Kabdjou, J.; Shinomiya, N. Improving Quality of Service and HTTPS DDoS Detection in MEC Environment with a Cyber Deception-Based Architecture. IEEE Access 2024, 12, 23490–23503.
25. Dhahir, Z.S. A Hybrid Approach for Efficient DDoS Detection in Network Traffic Using CBLOF-Based Feature Engineering and XGBoost. J. Future Artif. Intell. Technol. 2024, 1, 174–190.
26. Coscia, A.; Dentamaro, V.; Galantucci, S.; Maci, A.; Pirlo, G. Automatic Decision Tree-Based NIDPS Ruleset Generation for DoS/DDoS Attacks. J. Inf. Secur. Appl. 2024, 82, 103736.
27. Butt, H.A.; Al Harthy, K.S.; Shah, M.A.; Hussain, M.; Amin, R.; Rehman, M.U. Enhanced DDoS Detection Using Advanced Machine Learning and Ensemble Techniques in Software Defined Networking. Comput. Mater. Contin. 2024, 81, 3003–3031.
28. Perez-Diaz, J.A.; Valdovinos, I.A.; Choo, K.K.R.; Zhu, D. A Flexible SDN-Based Architecture for Identifying and Mitigating Low-Rate DDoS Attacks Using Machine Learning. IEEE Access 2020, 8, 155859–155872.
29. Yungaicela-Naula, N.M.; Vargas-Rosales, C.; Perez-Diaz, J.A. SDN-Based Architecture for Transport and Application Layer DDoS Attack Detection by Using Machine and Deep Learning. IEEE Access 2021, 9, 108495–108512.
30. Aslam, N.; Srivastava, S.; Gore, M.M. ONOS DDoS Defender: A Comparative Analysis of Existing DDoS Attack Datasets Using Ensemble Approach. Wirel. Pers. Commun. 2023, 133, 1805–1827.
31. Liu, Z.; Wang, Y.; Feng, F.; Liu, Y.; Li, Z.; Shan, Y. A DDoS Detection Method Based on Feature Engineering and Machine Learning in Software-Defined Networks. Sensors 2023, 23, 6176.
32. Elubeyd, H.; Yiltas-Kaplan, D. Hybrid Deep Learning Approach for Automatic DoS/DDoS Attacks Detection in Software-Defined Networks. Appl. Sci. 2023, 13, 3828.
33. Najar, A.A.; Manohar Naik, S. Cyber-Secure SDN: A CNN-Based Approach for Efficient Detection and Mitigation of DDoS Attacks. Comput. Secur. 2024, 139, 103716.
34. Garba, U.H.; Toosi, A.N.; Pasha, M.F.; Khan, S. SDN-Based Detection and Mitigation of DDoS Attacks on Smart Homes. Comput. Commun. 2024, 221, 29–41.
35. Singh, A.; Kaur, H.; Kaur, N. A Novel DDoS Detection and Mitigation Technique Using Hybrid Machine Learning Model and Redirect Illegitimate Traffic in SDN Network. Clust. Comput. 2024, 27, 3537–3557.
36. Han, D.; Li, H.; Fu, X.; Zhou, S. Traffic Feature Selection and Distributed Denial of Service Attack Detection in Software-Defined Networks Based on Machine Learning. Sensors 2024, 24, 4344.
37. Ali, U. Performance Comparison of SDN Controllers in Different Network Topologies. Res. Sq. 2024.
38. Sheikh, M.N.A.; Hwang, I.S.; Raza, M.S.; Ab-Rahman, M.S. A Qualitative and Comparative Performance Assessment of Logically Centralized SDN Controllers via Mininet Emulator. Computers 2024, 13, 85.
39. Wazirali, R.; Ahmad, R.; Alhiyari, S. SDN-OpenFlow Topology Discovery: An Overview of Performance Issues. Appl. Sci. 2021, 11, 6999.
40. Dini, P.; Elhanashi, A.; Begni, A.; Saponara, S.; Zheng, Q.; Gasmi, K. Overview on Intrusion Detection Systems Design Exploiting Machine Learning for Networking Cybersecurity. Appl. Sci. 2023, 13, 7507.
41. Paramaputra, A.P.; Suranegara, G.M.; Setyowati, E. Mitigation of Multi Target Denial of Service (DoS) Attacks Using Wazuh Active Response. J. Comput. Netw. Archit. High. Perform. Comput. 2025, 7, 483–493.

| Ref. | Year | Controller | Attack Tools | Machine Learning/Deep Learning Model | Dataset Used | No. of Features |
|---|---|---|---|---|---|---|
| [28] | 2020 | ONOS | Slowloris, SlowHTTP, RUDY, HULK | J48, RF, REP Tree, MLP, SVM | CICDDoS2017 | 44 |
| [29] | 2021 | ONOS | Hping3, SlowHTTP, DrDNS | LSTM, GRU, MLP, RF, KNN | CICDDoS2017, CICDDoS2019 | 50 |
| [30] | 2023 | ONOS | Hping3, Mausezahn, HULK | MLP, RF, XGBoost, AdaBoost | CICDDoS2017, CICDDoS2018, CICDDoS2019 | 48 |
| [31] | 2023 | RYU | Hping3 | RF, SVM, XGBoost, KNN, Decision Tree | CICDDoS2018 | 26 |
| [10] | 2023 | RYU | Hping3, Iperf | 1D-CNN | RYU Monitoring (Generated) | 14 |
| [32] | 2024 | RYU | Iperf, Hping3, Scapy | CNN, GRU, DNN | CICDDoS2017, CICDDoS2019 | 88 |
| [17] | 2024 | ONOS | Hping3, Mausezahn, HULK, Torshammer | MLP, RF, KNN, SVM, XGBoost | DDoS SourceTracer app. | 22 |
| [33] | 2024 | POX | Hping3, Scapy | LSTM, DNN, GRU, BRS+CNN | CICDDoS2019 | 66 |
| [34] | 2024 | RYU | Hping3, XerXes | KNN, SVM, Decision Tree | UNSW-NB15, CICDDoS2018 | 29 |
| [35] | 2024 | ONOS | Hping3, XerXes | SVM-RF | Snort, Wireshark | 12 |
| [36] | 2024 | RYU | Hping3 | DT, SVM, RF, LR | InSDN, CICDDoS2017, CICDDoS2018 | 77 |
| [7] | 2024 | RYU | Hping3, Scapy, Iperf | BernoulliNB, PA, SGD, MLP, Ensemble | CICDDoS2019 | 22 |

| Ref. | Controller Used | Application-Layer Attack | Uses Extraction Tool | Own Dataset | Independent Test Dataset | No. of Features | Highest Accuracy Obtained (%) |
|---|---|---|---|---|---|---|---|
| [28] | ONOS | ✓ | x | x | x | 44 | 95.0 |
| [29] | ONOS | ✓ | x | x | x | 50 | 99.8 |
| [30] | ONOS | ✓ | x | x | x | 48 | 99.9 |
| [31] | RYU | x | x | x | ✓ | 26 | 99.1 |
| [10] | RYU | x | x | ✓ | x | 14 | 99.9 |
| [32] | RYU | ✓ | x | x | x | 88 | 99.8 |
| [17] | ONOS | ✓ | x | ✓ | x | 22 | 99.2 |
| [33] | POX | x | x | x | x | 66 | 99.9 |
| [34] | RYU | ✓ | x | x | x | 29 | 99.5 |
| [35] | ONOS | ✓ | x | ✓ | x | 12 | 99.1 |
| [36] | RYU | x | x | x | x | 77 | 99.9 |
| [7] | RYU | ✓ | x | x | x | 22 | 99.2 |

| Hardware Specifications Used (Virtual Machine) | | |
|---|---|---|
| Component | Technical Features | |
| RAM | 8 GB | |
| Storage | 80 GB | |
| Processors | 6 | |
| Software Tools | | |
| Tool | Version | Simulation Objective |
| Operating System | Ubuntu 20.04 LTS | Base environment for simulation |
| Mininet | 2.3.1 | SDN simulation |
| OpenDaylight Controller | 0.3.0 Lithium | Centralized controller in SDN |
| Web Server | Apache 2.4.41 | Provides legitimate HTTP service |
| Slowloris | 0.2.6 | Malicious traffic generation using partial HTTP connections |
| Slowhttptest | 1.6 | Persistent attack generation |
| GoldenEye | 2.1 | Multiple HTTP connection attack generation |
| HULK | 5.0 | Flood HTTP traffic generation |
| XerXes | 1.0 | Flood type attack generation |
| Tcpdump | 4.9.3 | Network traffic monitoring and capture |

| Scenario | Tool | Parameters | Description |
|---|---|---|---|
| Low Intensity | Slowhttptest | slowhttptest -c 500 -H -i 10 -r 100 -t GET -u http://10.0.0.20 -x 10 -p 5 | Sends 500 connections with 10 s intervals and GET requests. |
| Low Intensity | Slowhttptest | slowhttptest -c 1000 -H -i 15 -r 200 -t GET -u http://10.0.0.20 -x 20 -p 10 | Sends 1000 connections with 15 s intervals and GET requests. |
| Low Intensity | Slowloris | python3 slowloris.py 10.0.0.20 -s 9000 | Launches an attack that opens 9000 partial HTTP connections and keeps them open. |
| Medium Intensity | Slowloris | python3 slowloris.py 10.0.0.20 -s 80000 | Launches an attack that opens 80,000 partial HTTP connections and keeps them open. |
| Medium Intensity | HULK | python3 hulk.py http://10.0.0.20 | Launches an attack involving the massive sending of dynamic HTTP requests. |
| High Intensity | XerXes | ./xerxes 10.0.0.20 80 | Network layer attack sending massive traffic to port 80. |
| High Intensity | GoldenEye | python3 goldeneye 10.0.0.20 -w 600 -s 3000 | HTTP attack with 600 threads and 2000 open sockets. |

| Day | Normal Traffic Capture (s) | Attack Traffic Capture (s) |
|---|---|---|
| 1–7 (per day) | 600 | 600 |
| Total | 4200 | 4200 |

| Features | CICFlowMeter | NTLFlowLyzer |
|---|---|---|
| Characteristics | | |
| Developer | Canadian Institute for Cybersecurity (CIC), University of New Brunswick, Canada | Behavioral Cybersecurity Center (BCCC), York University, Canada |
| Base Language | Java | Python |
| Input Format | pcap | pcap |
| Output Format | csv | csv |
| Label Selection | x | ✓ |
| Customizable | x | ✓ |
| Maximum Number of Columns | 82 | 346 |
| Configuration | | |
| Configuration file | Constants.py | Config.json |
| Activity timeout (s) | 240 | 300 |
| Expiration sweeps (garbage collection) | 1000 | 10,000 |
| Concurrency (number of threads) | 1 | 8 |
| Directionality | Unidirectional | Bidirectional |

| Day | CICFlowMeter: Attack | CICFlowMeter: Normal | NTLFlowLyzer: Attack | NTLFlowLyzer: Normal | Attack Tool | Parameter Variation |
|---|---|---|---|---|---|---|
| 1 | 9381 | 27,917 | 9512 | 60,126 | Slowloris | Parameter -s (number of sockets varied between 5000 and 80,000), -t (without timeout). |
| 2 | 65,592 | 22,282 | 85,667 | 45,962 | HULK | Parameter –requests (1000–5000 requests per connection). |
| 3 | 16,282 | 22,054 | 24,297 | 46,134 | SlowHTTP | Parameter -p (attack profile: 0–2), -i (interval between data packets: 1–5 s), and -r (connections per second: 500–8000). |
| 4 | 68,034 | 17,390 | 110,948 | 36,388 | GoldenEye | Parameter -w (workers varied between 200 and 600) and -s (number of sockets varied between 100 and 20,000). |
| 5 | 8846 | 30,221 | 8861 | 64,401 | XerXes | Parameter -c (concurrent connections varied between 100 and 400) and -p (packets per second varied between 500 and 1500). |
| 6 | 8801 | 27,390 | 8827 | 55,888 | Slowloris | Parameter -s (number of sockets varied between 3000 and 10,000). |
| 7 | 76,566 | 24,164 | 120,616 | 55,962 | HULK | Parameter –requests (5000–20,000 requests per connection). |
| Total | 253,502 | 171,418 | 368,728 | 362,861 | | |

| Day | CICFlowMeter: Attack Flows (s) | CICFlowMeter: Normal Flows (s) | NTLFlowLyzer: Attack Flows (s) | NTLFlowLyzer: Normal Flows (s) | Attack Tool |
|---|---|---|---|---|---|
| 1 | 174 | 890 | 396 | 1432 | Slowloris |
| 2 | 991 | 884 | 2403 | 1565 | HULK |
| 3 | 250 | 693 | 691 | 1244 | SlowHTTP |
| 4 | 1535 | 392 | 2672 | 1249 | GoldenEye |
| 5 | 957 | 1068 | 731 | 1156 | XerXes |
| 6 | 128 | 1230 | 233 | 1217 | Slowloris |
| 7 | 949 | 880 | 3108 | 804 | HULK |
| Average | 712.00 | 862.43 | 1462.00 | 1238.14 | |
| Total | 4984 | 6037 | 10,234 | 8667 | |
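The Average and Total rows can be rechecked directly from the seven per-day values. The sketch below assumes only the numbers in the table (times in seconds); the dictionary keys are hypothetical labels chosen for illustration.

```python
from statistics import mean

# Per-day times in seconds, days 1 to 7, copied from the table above.
times_s = {
    "cic_attack": [174, 991, 250, 1535, 957, 128, 949],
    "cic_normal": [890, 884, 693, 392, 1068, 1230, 880],
    "ntl_attack": [396, 2403, 691, 2672, 731, 233, 3108],
    "ntl_normal": [1432, 1565, 1244, 1249, 1156, 1217, 804],
}

# Total and average per column, rounded to two decimals as in the table.
summary = {
    name: {"total": sum(values), "average": round(mean(values), 2)}
    for name, values in times_s.items()
}

for name, stats in summary.items():
    print(f"{name}: total={stats['total']} s, average={stats['average']:.2f} s")
```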

| Parameters | Random Forest | SVM | KNN | XGBoost |
|---|---|---|---|---|
| Training time (s) | 0.8365 | 0.0093 | 0.0145 | 0.5838 |
| Prediction time (s) | 0.0147 | 0.0147 | 0.0378 | 0.0080 |
| Size (KB) | 160.2 | 4.7 | 9.9 | 230.5 |
| Good generalization | ✓ | ✓ | x | ✓ |
| Anomaly detection | ✓ | ✓ | x | ✓ |
| Scalability with large datasets | ✓ | x | x | ✓ |

| Parameter | XGBoost Values | Random Forest Values |
|---|---|---|
| n_estimators | 100 | 100 |
| learning_rate | 0.05 | N/A |
| max_depth | 6 | 6 |
| subsample | 0.8 | N/A |
| colsample_bytree | 0.8 | N/A |
| gamma | 1 | N/A |
| reg_alpha | 0.1 | N/A |
| reg_lambda | 1 | N/A |
| tree_method | "hist" | N/A |
| booster | "gbtree" | N/A |
| random_state | 42 | 42 |
| n_jobs | -1 | -1 |
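The hyperparameters in the table map onto keyword arguments of the two classifiers. The following is a minimal sketch, assuming the scikit-learn style estimators `xgboost.XGBClassifier` and `sklearn.ensemble.RandomForestClassifier` are the ones intended (the section does not name the exact classes); the dictionary and function names are hypothetical.

```python
# Values copied from the table above. The XGBoost API spells the column
# subsampling parameter "colsample_bytree".
XGB_PARAMS = {
    "n_estimators": 100,
    "learning_rate": 0.05,
    "max_depth": 6,
    "subsample": 0.8,
    "colsample_bytree": 0.8,
    "gamma": 1,
    "reg_alpha": 0.1,
    "reg_lambda": 1,
    "tree_method": "hist",
    "booster": "gbtree",
    "random_state": 42,
    "n_jobs": -1,
}

# Only the rows that are not marked N/A for Random Forest.
RF_PARAMS = {
    "n_estimators": 100,
    "max_depth": 6,
    "random_state": 42,
    "n_jobs": -1,
}


def build_models():
    """Return (xgboost_model, random_forest_model), both untrained."""
    # Imported lazily so the configuration can be inspected without
    # either library installed.
    from sklearn.ensemble import RandomForestClassifier
    from xgboost import XGBClassifier

    return XGBClassifier(**XGB_PARAMS), RandomForestClassifier(**RF_PARAMS)
```

With both libraries installed, `xgb_model, rf_model = build_models()` yields estimators that can be trained with `fit(X_train, y_train)`, where `X_train` and `y_train` stand for the extracted flow features and their labels.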

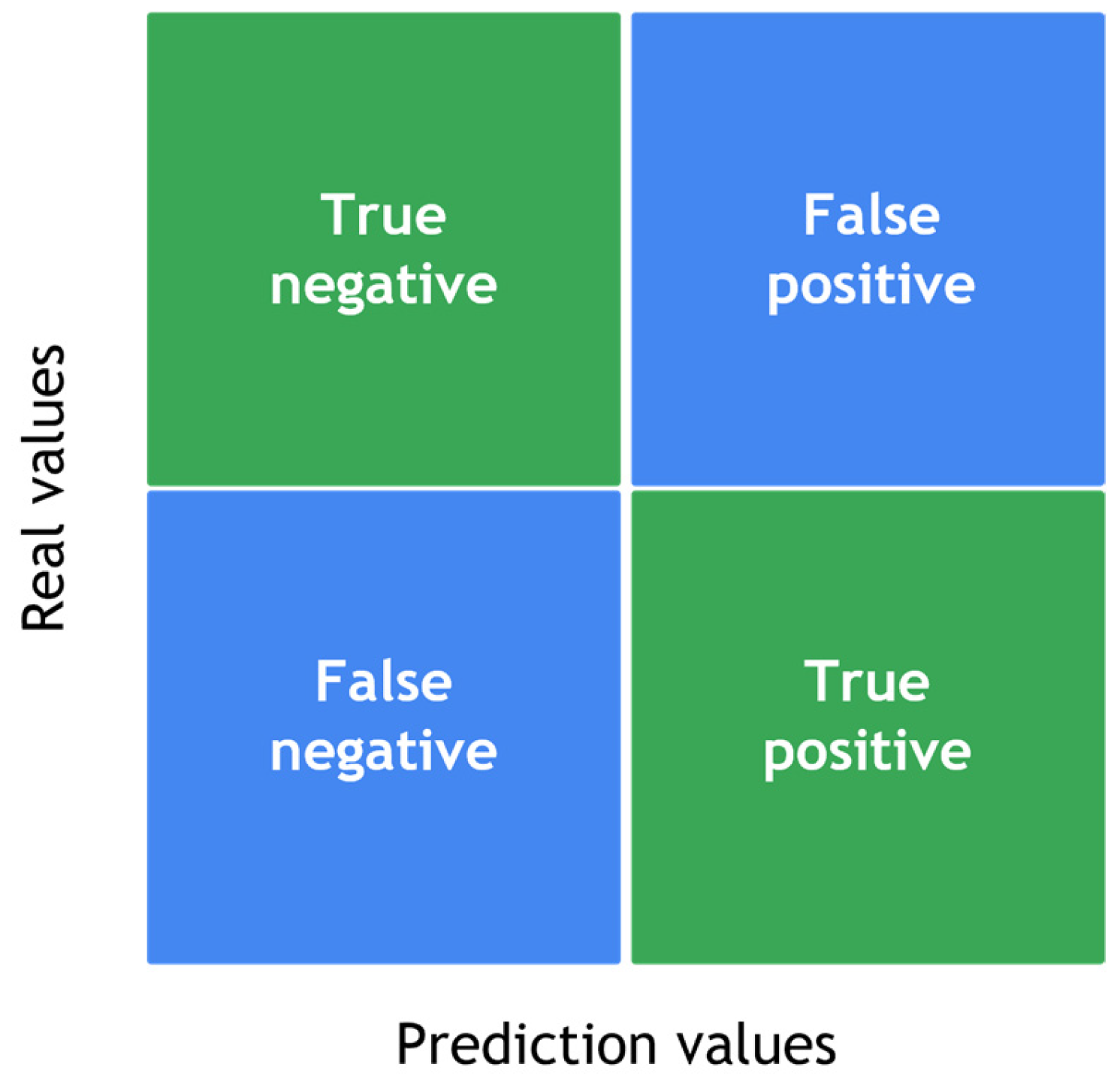

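| Metric | Formula | Eq. |
|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | (1) |
| Precision | $\frac{TP}{TP + FP}$ | (2) |
| Recall | $\frac{TP}{TP + FN}$ | (3) |
| F1-score | $2 \cdot \frac{Precision \cdot Recall}{Precision + Recall}$ | (4) |
| False negative rate | $\frac{FN}{FN + TP}$ | (5) |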
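The five metrics in the table can be computed from the four confusion-matrix counts (TP, TN, FP, FN). The sketch below assumes the standard definitions of each metric; the function name is hypothetical, and the example counts are the Slowloris column of the first CIC-Dataset results table.

```python
def confusion_metrics(tp, tn, fp, fn):
    """Compute the five evaluation metrics from confusion-matrix counts.

    All values are returned as fractions in [0, 1]; a zero denominator
    yields 0.0 instead of raising.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    fnr = fn / (fn + tp) if (fn + tp) else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "fnr": fnr,
    }


# Slowloris on the CIC dataset: TP = 4005, TN = 46,082, FP = 364, FN = 0.
m = confusion_metrics(tp=4005, tn=46082, fp=364, fn=0)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

For these counts the results agree with the reported percentages to within rounding in the last digit.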

| Metric | CIC: Slowloris | CIC: HULK | CIC: Slowhttptest | CIC: GoldenEye | CIC: XerXes | NTL: Slowloris | NTL: HULK | NTL: Slowhttptest | NTL: GoldenEye | NTL: XerXes |
|---|---|---|---|---|---|---|---|---|---|---|
| TP | 4005 | 50,021 | 49,725 | 15,435 | 3944 | 4009 | 74,700 | 48,364 | 27,192 | 3897 |
| TN | 46,082 | 30,185 | 55,093 | 22,023 | 17,390 | 99,908 | 59,690 | 62,590 | 23,596 | 36,388 |
| FP | 364 | 36 | 214 | 31 | 2 | 15 | 4711 | 1811 | 22,537 | 0 |
| FN | 0 | 2 | 147 | 64 | 2 | 8 | 15 | 136 | 0 | 0 |
| Accuracy (%) | 99.27 | 99.95 | 99.65 | 99.74 | 99.98 | 99.97 | 96.60 | 98.27 | 69.26 | 100 |
| Precision (%) | 91.66 | 99.92 | 99.57 | 99.79 | 99.94 | 99.62 | 94.06 | 96.39 | 54.68 | 100 |
| Recall (%) | 100 | 99.99 | 99.70 | 99.58 | 99.94 | 99.80 | 99.97 | 99.71 | 100 | 100 |
| F1-Score (%) | 95.65 | 99.96 | 99.73 | 99.69 | 99.94 | 99.71 | 96.93 | 98.02 | 70.70 | 100 |
| FNR | 0 | 0.00004 | 0.0029 | 0.0041 | 0.0005 | 0.0019 | 0.0002 | 0.0028 | 0 | 0 |

| Metric | CIC: Slowloris | CIC: HULK | CIC: Slowhttptest | CIC: GoldenEye | CIC: XerXes | NTL: Slowloris | NTL: HULK | NTL: Slowhttptest | NTL: GoldenEye | NTL: XerXes |
|---|---|---|---|---|---|---|---|---|---|---|
| TP | 4005 | 50,022 | 49,623 | 15,496 | 3893 | 4016 | 74,700 | 48,423 | 27,192 | 3808 |
| TN | 46,083 | 29,859 | 55,092 | 22,027 | 17,385 | 99,920 | 64,396 | 63,390 | 46,129 | 36,388 |
| FP | 363 | 362 | 215 | 27 | 7 | 3 | 5 | 1011 | 4 | 0 |
| FN | 0 | 1 | 249 | 3 | 53 | 1 | 15 | 77 | 0 | 89 |
| Accuracy (%) | 99.28 | 99.54 | 99.55 | 99.92 | 99.71 | 99.99 | 99.98 | 99.03 | 99.99 | 99.77 |
| Precision (%) | 91.68 | 99.28 | 99.96 | 99.82 | 99.82 | 99.92 | 99.99 | 97.95 | 99.98 | 100 |
| Recall (%) | 100 | 99.99 | 99.50 | 99.98 | 98.65 | 99.97 | 99.97 | 99.84 | 100 | 97.77 |
| F1-Score (%) | 95.66 | 99.63 | 99.53 | 99.90 | 99.23 | 99.95 | 99.98 | 98.88 | 99.99 | 98.84 |
| FNR | 0 | 0.00002 | 0.0049 | 0.0001 | 0.0134 | 0.0024 | 0.0002 | 0.0015 | 0 | 0.0228 |

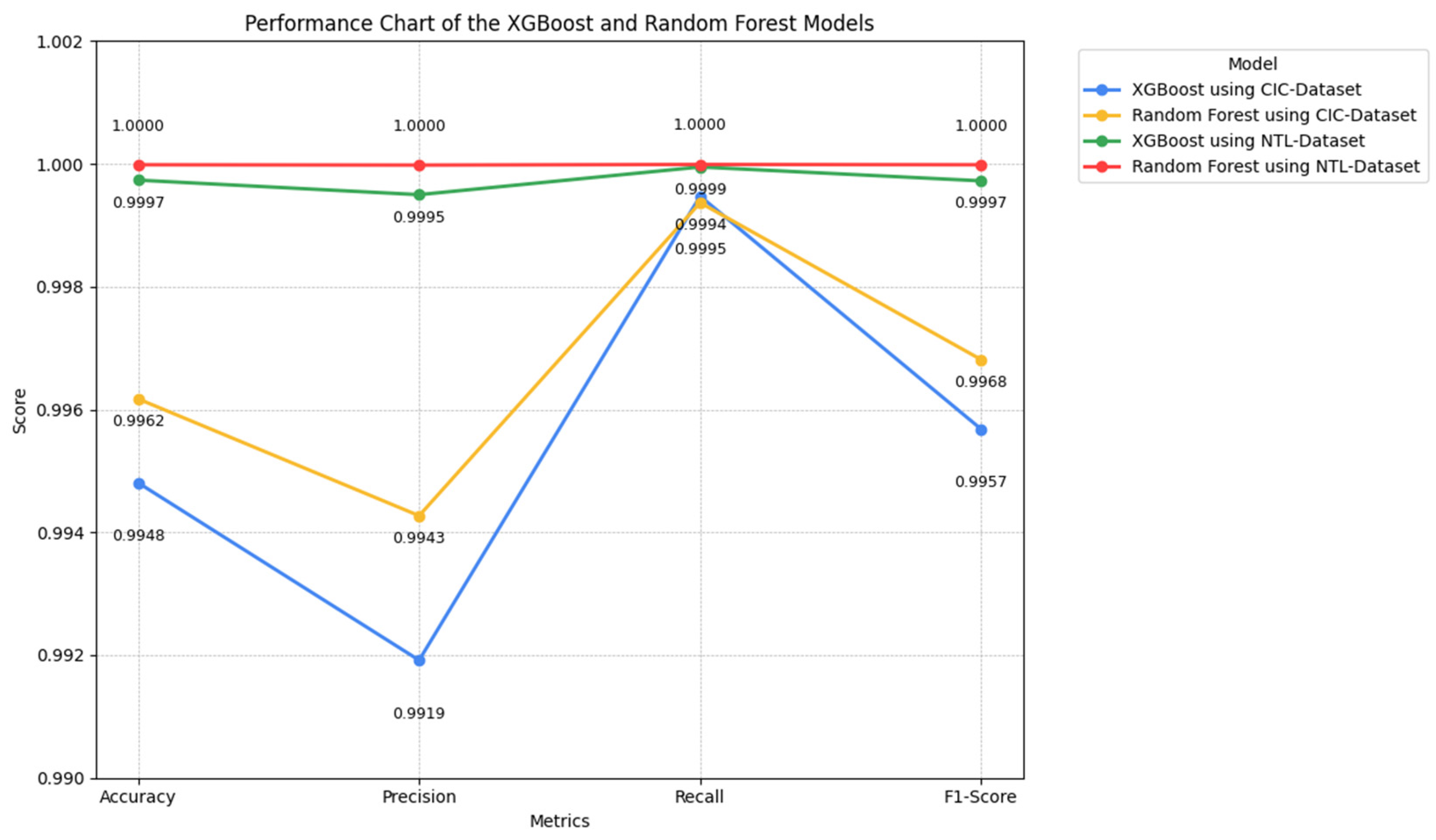

| Metric | XGBoost: CIC | XGBoost: NTL | Random Forest: CIC | Random Forest: NTL |
|---|---|---|---|---|
| Accuracy (%) | 99.48 | 99.97 | 99.61 | 99.99 |
| Precision (%) | 99.19 | 99.94 | 99.42 | 99.99 |
| Recall (%) | 99.94 | 99.99 | 99.93 | 99.99 |
| F1-score (%) | 99.56 | 99.97 | 99.68 | 99.99 |
| FNR | 0.0005 | 0.00005 | 0.0006 | 0.00001 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Estupiñán Cuesta, E.P.; Martínez Quintero, J.C.; Avilés Palma, J.D. DDoS Attacks Detection in SDN Through Network Traffic Feature Selection and Machine Learning Models. Telecom 2025, 6, 69. https://doi.org/10.3390/telecom6030069
