1. Introduction

Federated Learning (FL) is an emerging collaborative learning paradigm that has been widely applied across various distributed settings. Its core idea is to enable multiple decentralized clients to jointly train a machine learning model while preserving data privacy [1,2,3]. Because raw data remains on local devices or institutional servers (e.g., smartphones, hospitals, or banks), FL avoids the privacy risks inherent in centralized data storage. This makes it suitable for critical domains with strict data governance and confidentiality requirements, such as healthcare [4,5], fintech [6], and the Internet of Things (IoT) [7,8].

A basic assumption of FL is that participating clients honestly contribute their computational resources and local data to support high-quality global model training. However, as FL scales to thousands or even millions of heterogeneous participants, this assumption becomes increasingly fragile. The decentralized and voluntary nature of FL naturally gives rise to strategic behaviors that, although not directly destructive, degrade model performance and system fairness through rational, self-interested actions [9,10].

Unlike explicit malicious attacks (e.g., Byzantine attacks [11,12]), strategic behaviors stem from clients’ rational pursuit of individual utility maximization [13,14]. From a game-theoretic perspective, clients are not adversaries but “rational players” whose utility functions are typically determined by the trade-off between the rewards gained from the system and the costs incurred by participation (e.g., computation, energy, and communication overhead).
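One way to make this trade-off concrete is to write the utility of client $i$, which chooses a contribution level $e_i$ given the contributions $\mathbf{e}_{-i}$ of all other clients, as shown below. The notation is an illustrative assumption, not necessarily the formulation used later in the paper:

```latex
u_i(e_i, \mathbf{e}_{-i}) = R_i(e_i, \mathbf{e}_{-i}) - C_i(e_i),
\qquad
e_i^{\star} = \arg\max_{e_i \ge 0} \; u_i(e_i, \mathbf{e}_{-i})
```

Here $R_i$ is the reward the client obtains from the system (e.g., access to a better global model or a payment) and $C_i$ is its increasing participation cost. A strategic client lowers $e_i$ below the honest level whenever the marginal reward of extra effort falls short of its marginal cost.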

In practice, the cost of participation for FL clients is non-trivial: local training consumes computing resources, drains device batteries, incurs network traffic charges, and creates opportunity costs because the occupied resources cannot be used for other tasks [15,16]. In contrast, the benefit, namely the improved global model, is often delayed and shared among all participants regardless of how much each one contributed. This asymmetry in the timing and distribution of costs and benefits makes FL vulnerable to the “free-rider” problem [17], in which individuals reduce their own contributions while still enjoying the shared benefit.
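A toy public-goods model makes the free-rider incentive explicit. It is an illustrative assumption with hypothetical parameters `b` (benefit per unit of effort), `c` (cost coefficient), and `n` (number of clients). Each client's effort produces a benefit that is split equally among all `n` clients, while that client alone bears a quadratic cost:

```python
# Illustrative public-goods model of free-riding (hypothetical parameters).
# Each client i chooses effort e_i >= 0. The global-model benefit b * sum(e)
# is shared equally by all n clients, while client i alone pays c * e_i**2 / 2.

def private_payoff(e_i, others_total, n, b, c):
    """Payoff of one client: its equal share of the benefit minus its own cost."""
    return b * (e_i + others_total) / n - c * e_i ** 2 / 2

def best_response(others_total, n, b, c, grid_step=0.001, max_effort=5.0):
    """Grid-search the effort that maximizes the client's private payoff."""
    steps = int(max_effort / grid_step)
    candidates = (k * grid_step for k in range(steps + 1))
    return max(candidates, key=lambda e: private_payoff(e, others_total, n, b, c))

def social_optimum_effort(b, c):
    # Summed over all clients, each unit of effort yields b in total benefit,
    # so the per-client optimum solves b - c * e = 0.
    return b / c

n, b, c = 10, 1.0, 1.0
selfish = best_response(others_total=0.0, n=n, b=b, c=c)
cooperative = social_optimum_effort(b, c)
print(f"selfish effort ~ {selfish:.3f}, socially optimal effort = {cooperative:.3f}")
```

The selfish best response $b/(nc)$ is $n$ times smaller than the cooperative level $b/c$, so the incentive to free-ride grows with the number of participants. In this linear-benefit model the other clients' effort does not even change a client's own best response.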

Strategic behaviors are diverse and often covert, which makes them difficult to detect with existing defense mechanisms [18,19]. For example, IoT devices with low battery may run fewer local training iterations yet still submit the resulting low-quality updates as if they had participated fully. Some hospitals may intermittently drop out of training, citing technical issues, while continuing to benefit from the global model. In commercial applications, users may rationally reduce their contributions to conserve resources, causing system-wide performance degradation.

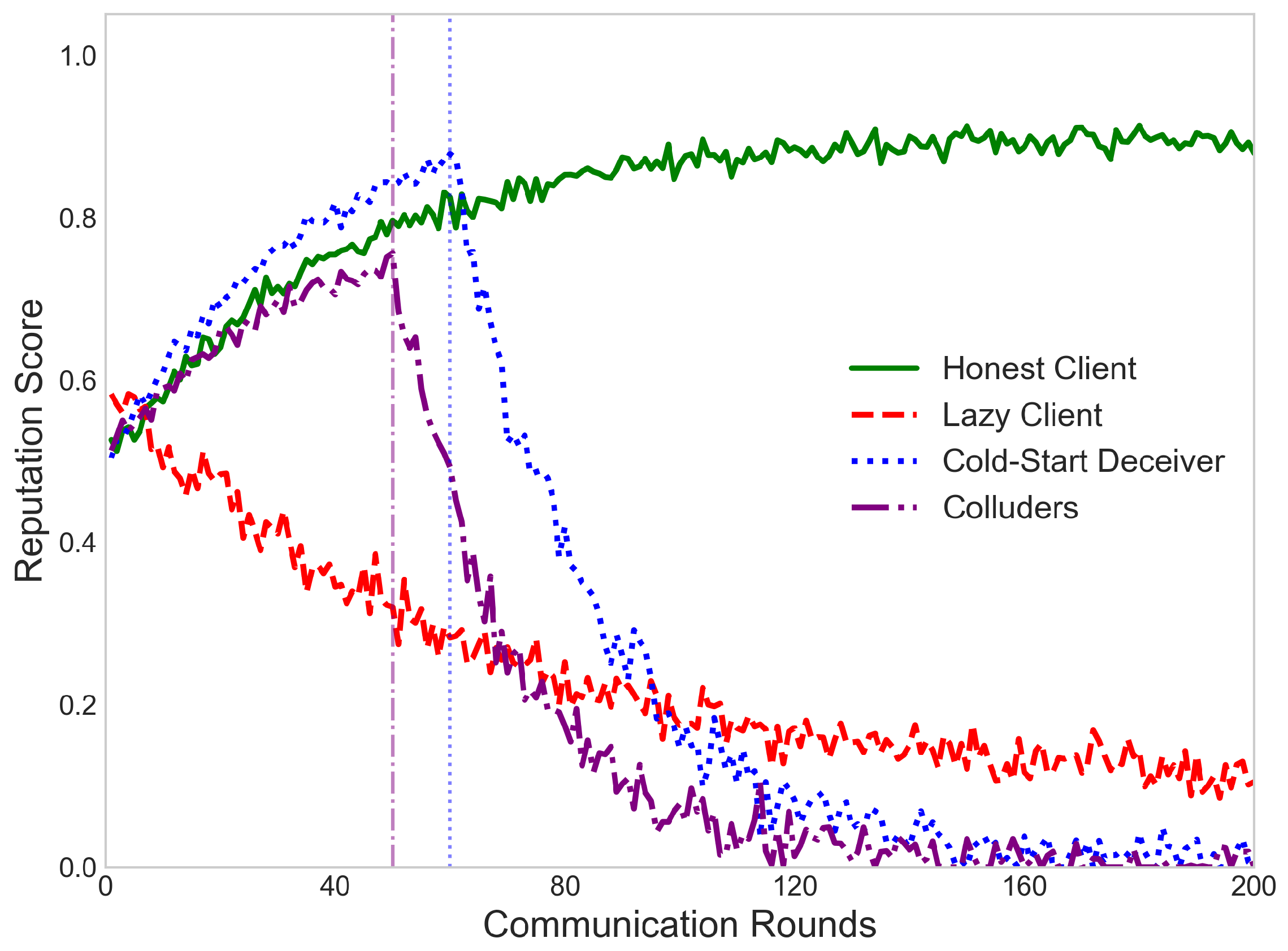

More complex strategic behaviors involve systematic, long-term manipulation. For instance, “cold-start deception” refers to clients behaving honestly in early rounds to build a good reputation and then gradually reducing the quality of their contributions for undue gain. “Gradient drift manipulation” involves clients subtly biasing their update directions toward their local data distributions, thereby harming the generalizability of the global model [20]. Although these behaviors appear normal on the surface, they are harmful and difficult to detect with traditional anomaly detection techniques [17].
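To see why a slowly adapting score is exploitable, consider a reputation computed as a plain exponentially weighted moving average (EWMA) of per-round update quality. This is a hypothetical rule for illustration only; the decay factor `beta`, the quality values, and the `threshold` are our assumptions. A client that is honest for 50 rounds and then submits low-quality updates keeps an above-threshold reputation for several more rounds:

```python
# Sketch: how "cold-start deception" exploits a slowly adapting reputation score.
# Assumption: reputation is a plain EWMA of a per-round quality score q_t in [0, 1]
# (hypothetical; not necessarily the paper's update rule).

def ewma_reputation(qualities, beta=0.9, r0=0.5):
    """Return the reputation trajectory r_t = beta * r_{t-1} + (1 - beta) * q_t."""
    r, history = r0, []
    for q in qualities:
        r = beta * r + (1 - beta) * q
        history.append(r)
    return history

honest_rounds, deceptive_rounds = 50, 30
# Honest (q = 1.0) in early rounds, then low-quality updates (q = 0.3).
trajectory = ewma_reputation([1.0] * honest_rounds + [0.3] * deceptive_rounds)

threshold = 0.5  # hypothetical cut-off below which a client would be down-weighted
rounds_undetected = next(
    k for k, r in enumerate(trajectory[honest_rounds:], start=1) if r < threshold
)
print(f"reputation after honest phase: {trajectory[honest_rounds - 1]:.3f}")
print(f"low-quality rounds until reputation < {threshold}: {rounds_undetected}")
```

With these settings the reputation stays above the threshold through 11 low-quality rounds and drops below it only in the 12th. A larger `beta` makes the score smoother but lengthens this window further.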

The damage caused by strategic behaviors goes beyond degraded performance: they may introduce systemic bias, so that models perform well on some data distributions but fail on others [21]. This is particularly unacceptable in high-risk fields such as medical diagnosis and financial risk assessment. Moreover, strategic behaviors can cause cascading effects. When some clients reduce their contributions, the burden on honest clients increases, potentially inducing them to behave strategically as well and leading to a “race to the bottom”.
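One common way to quantify such bias is the Gini coefficient of per-client accuracy. It is 0 when all clients obtain the same accuracy and grows as performance concentrates on a subset of clients. The sketch below uses made-up placeholder accuracies, not results from this paper:

```python
# Sketch: quantifying the "systemic bias" of a global model as inequality in
# per-client accuracy, using the Gini coefficient (0 = perfectly even).
# The accuracy values below are made-up placeholders, not results from the paper.

def gini(values):
    """Mean absolute difference over all ordered pairs, normalized by 2 * mean."""
    n = len(values)
    mean = sum(values) / n
    if mean == 0:
        return 0.0
    total_diff = sum(abs(x - y) for x in values for y in values)
    return total_diff / (2 * n * n * mean)

balanced = [0.82, 0.80, 0.81, 0.83]  # model performs similarly for every client
skewed = [0.95, 0.93, 0.40, 0.35]    # model favors some data distributions
print(f"Gini (balanced): {gini(balanced):.3f}")
print(f"Gini (skewed):   {gini(skewed):.3f}")
```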

However, most existing FL defense mechanisms still operate under a binary “honest–malicious” assumption, focusing on detecting and mitigating malicious attacks [12,22], and lack the capacity to address rational but self-serving behaviors in the gray area between the two. Existing incentive mechanisms [16] are largely static, relying on metrics such as data volume or participation frequency that are prone to manipulation [9,23]. They also lack dynamic feedback for assessing clients’ long-term trustworthiness and therefore fail to defend against behaviors such as “cold-start deception”.

Moreover, FL inherently exhibits significant information asymmetry [24]: the server cannot observe the clients’ local training process or resource consumption and can only infer client behavior from the uploaded model updates, which leaves room for strategic exploitation.
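Because the server sees only the uploaded updates, any behavioral signal must be computed from them. Below is a minimal sketch of two such update-only statistics. The choice of statistics (cosine similarity to the coordinate-wise median update, and the ratio of norms), the function names, and the toy update vectors are our assumptions for illustration, not the paper's exact scoring:

```python
import math
from statistics import median

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0

def update_statistics(updates):
    """For each client update, compute signals the server can observe without local
    data: cosine similarity to the coordinate-wise median update and the norm ratio."""
    dim = len(next(iter(updates.values())))
    reference = [median(u[d] for u in updates.values()) for d in range(dim)]
    ref_norm = math.sqrt(sum(x * x for x in reference))
    stats = {}
    for cid, u in updates.items():
        norm = math.sqrt(sum(x * x for x in u))
        stats[cid] = {
            "cosine_to_median": cosine(u, reference),
            "norm_ratio": norm / ref_norm if ref_norm > 0 else 0.0,
        }
    return stats

updates = {
    "honest_a": [1.0, 0.9, 1.1],
    "honest_b": [0.9, 1.0, 1.0],
    "lazy": [0.1, 0.1, 0.1],       # few local steps: small but roughly aligned update
    "drifting": [1.0, -0.8, 0.2],  # direction pulled toward its own local data
}
for cid, s in update_statistics(updates).items():
    print(cid, {k: round(v, 2) for k, v in s.items()})
```

In this toy example the lazy update points in roughly the right direction but is much smaller than the reference, while the drifting update has a normal magnitude but a noticeably lower cosine similarity, so the two behaviors leave different fingerprints.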

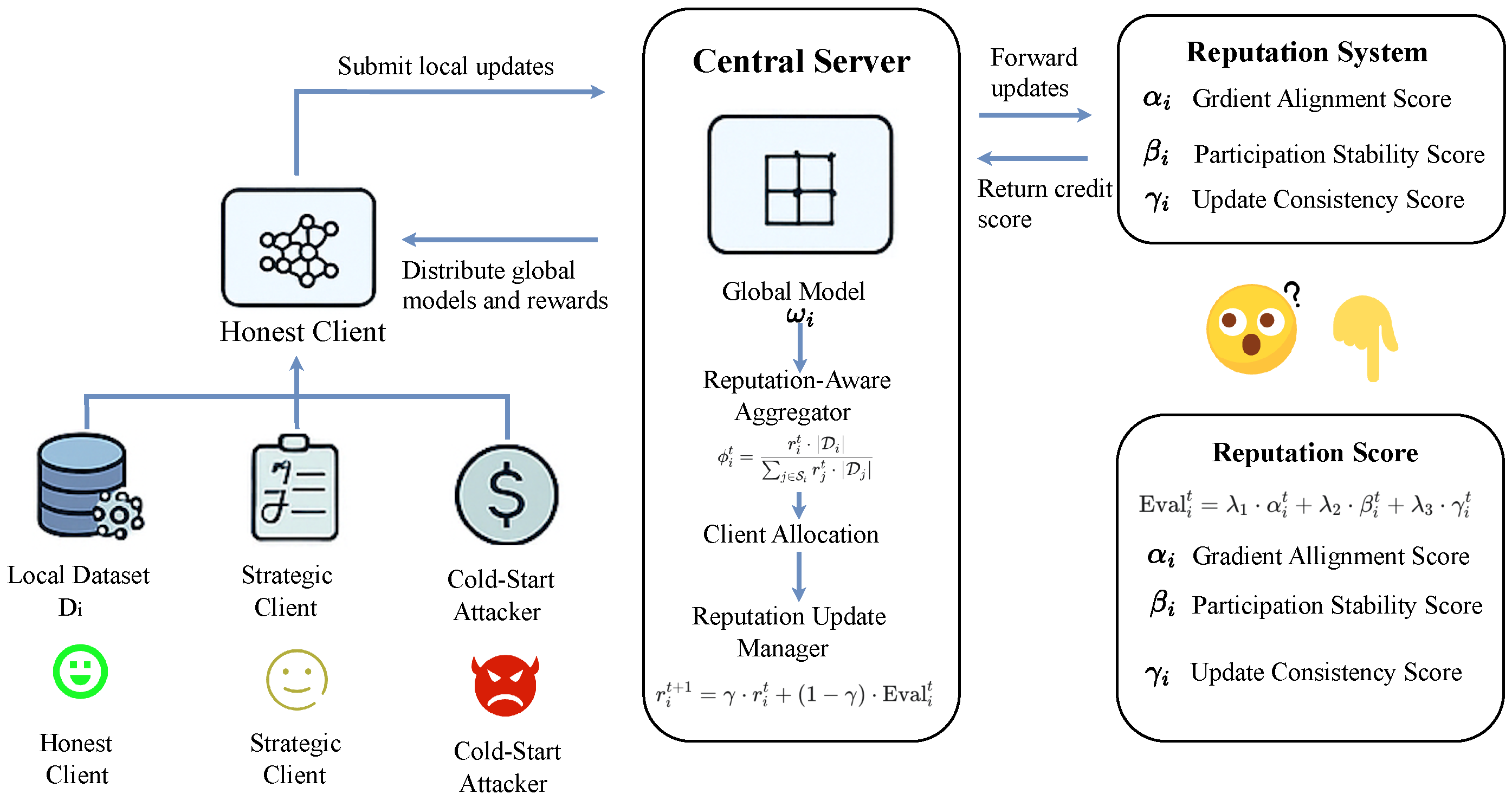

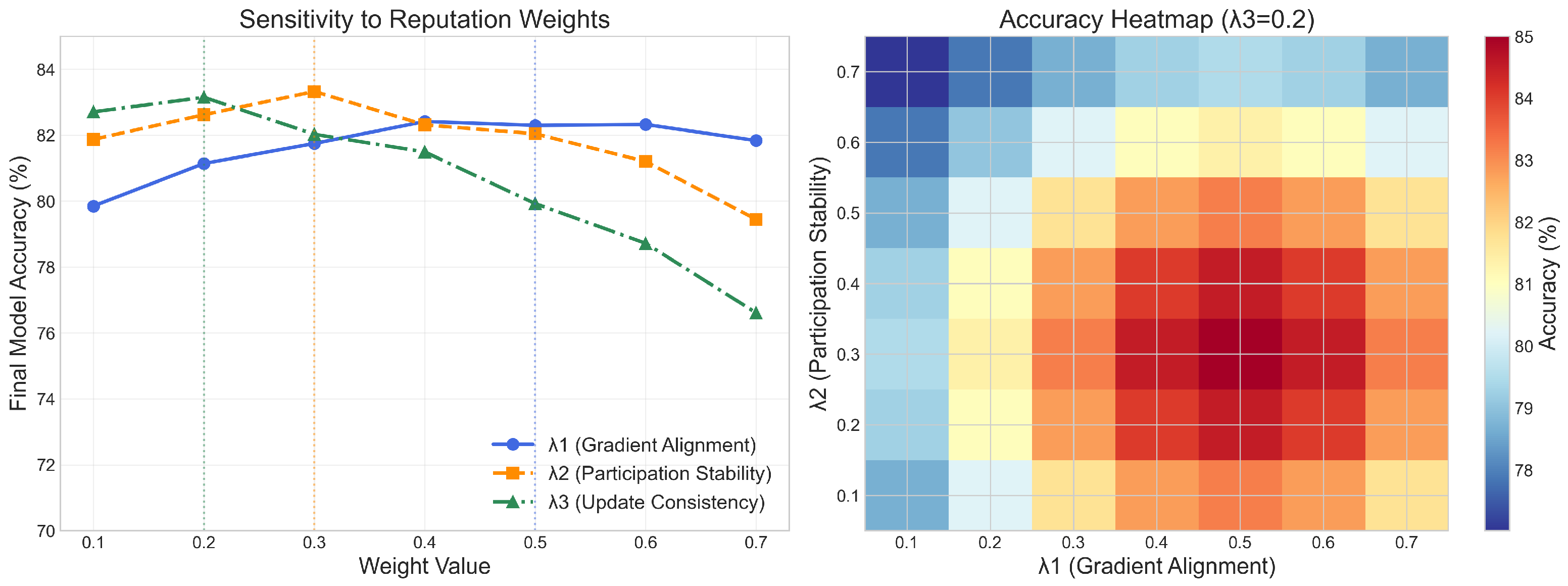

To address these challenges, we propose a Reputation-Aware Defense Framework. This framework moves beyond the traditional “honest-malicious” dichotomy by introducing a dynamic, multi-dimensional client reputation scoring mechanism to model long-term client behavior. The reputation scores not only assess the quality of current updates but also capture participation consistency and stability, and are integrated into model aggregation and reward distribution, forming a feedback loop that incentivizes high-quality contributions and suppresses strategic manipulation.
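The feedback loop described above can be sketched as follows. We assume, without this being the paper's specification, that the three reputation dimensions (update quality, participation consistency, stability) are combined linearly and smoothed with an EWMA. The class and function names, the weights, and the decay factor are hypothetical placeholders:

```python
from dataclasses import dataclass

@dataclass
class ClientRecord:
    reputation: float = 0.5    # hypothetical neutral prior for a newly joined client
    last_quality: float = 0.5  # quality observed in the previous round

def update_reputation(rec, quality, participated, beta=0.8, weights=(0.6, 0.2, 0.2)):
    """One round of a hypothetical multi-dimensional reputation update.

    Combines three signals into a per-round score, then smooths it with an EWMA:
      quality     - score of the current update in [0, 1]
      consistency - 1 if the client participated this round, else 0
      stability   - 1 minus the change in quality since the previous round
    beta and weights are illustrative values, not taken from the paper.
    """
    q = quality if participated else 0.0
    consistency = 1.0 if participated else 0.0
    stability = 1.0 - abs(q - rec.last_quality)
    w_q, w_c, w_s = weights
    score = w_q * q + w_c * consistency + w_s * stability
    rec.reputation = beta * rec.reputation + (1 - beta) * score
    rec.last_quality = q
    return rec.reputation

def aggregate(updates, records):
    """Reputation-weighted average of client updates (feedback into aggregation)."""
    total = sum(records[c].reputation for c in updates)
    dim = len(next(iter(updates.values())))
    return [sum(records[c].reputation * updates[c][d] for c in updates) / total
            for d in range(dim)]

def rewards(records, budget):
    """Split a reward budget in proportion to reputation (feedback into incentives)."""
    total = sum(r.reputation for r in records.values())
    return {c: budget * r.reputation / total for c, r in records.items()}

# Usage: an honest client and a lazy client over 20 rounds.
records = {"honest": ClientRecord(), "lazy": ClientRecord()}
for _ in range(20):
    update_reputation(records["honest"], quality=0.9, participated=True)
    update_reputation(records["lazy"], quality=0.3, participated=True)
print(aggregate({"honest": [1.0, 1.0], "lazy": [0.0, 0.0]}, records))
print(rewards(records, budget=100.0))
```

Using the same score for both aggregation weights and reward shares is what closes the loop: a client that lowers its effort loses influence on the global model and income at the same time.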

Our design is inspired by successful reputation systems in e-commerce, P2P networks [25], and blockchain systems [26], but it faces challenges unique to FL: the training process is continuous and complex, contribution quality is not immediately measurable, and reputation must be modeled under privacy constraints.

Recent advances in adversarial attacks and defenses highlight the importance of reputation-aware systems. Knowledge-guided attacks on soft sensors [27] demonstrate how strategic manipulation can exploit domain-specific vulnerabilities, while reputation-aware multi-agent DRL frameworks [28] provide insights for modeling long-term trust in dynamic environments.

The main contributions of this work are summarized as follows:

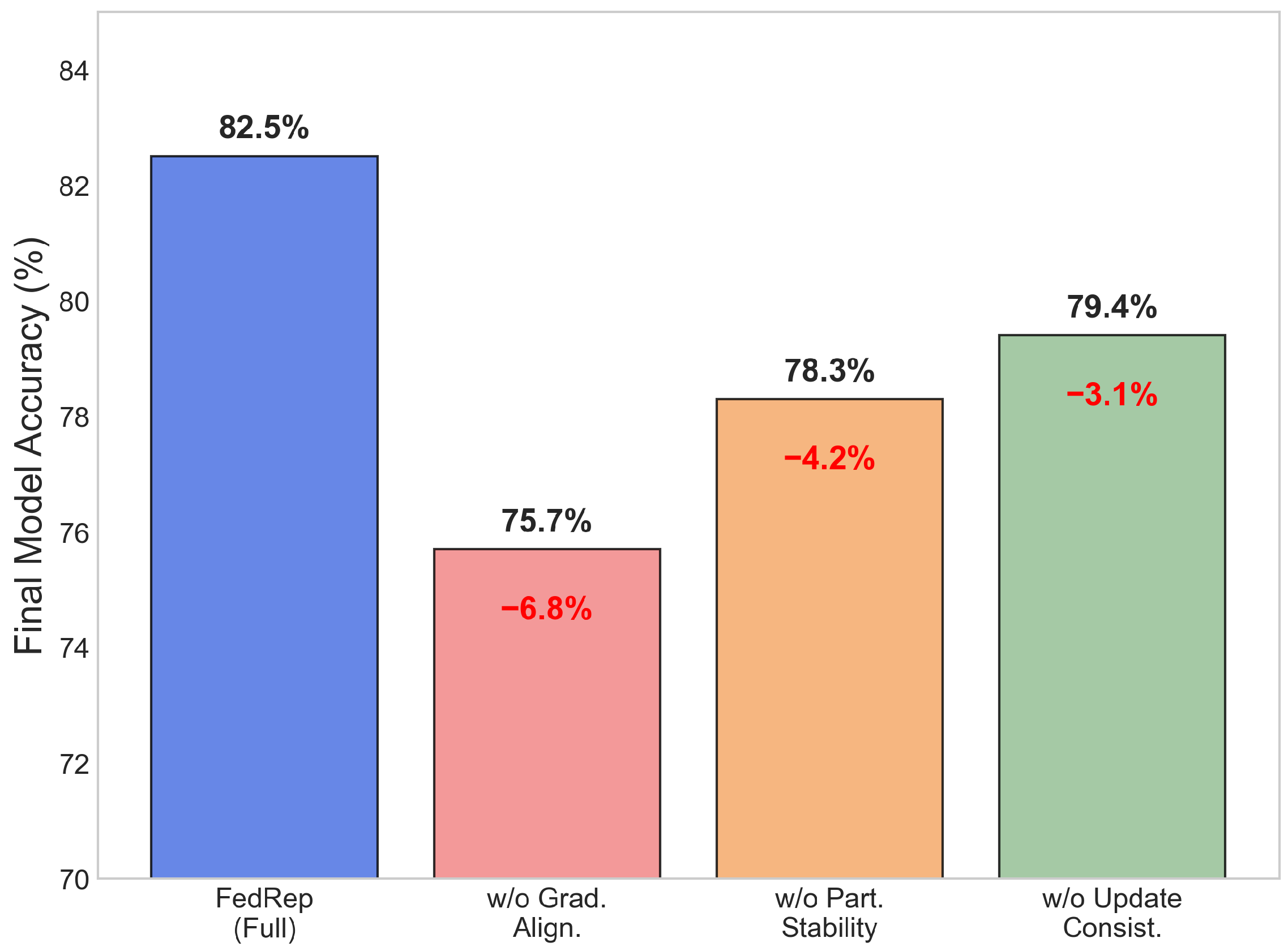

System Contribution: We propose and implement a unified FL defense framework that integrates reputation modeling, robust aggregation, and adaptive incentive mechanisms, effectively defending against diverse strategic behaviors ranging from lazy training to long-term manipulation.

Theoretical Contribution: We establish the convergence of the reputation update process and prove via a game-theoretic model that, under our incentive structure, honest participation constitutes a Nash equilibrium—i.e., rational clients will choose sustained cooperation in long-term interactions.

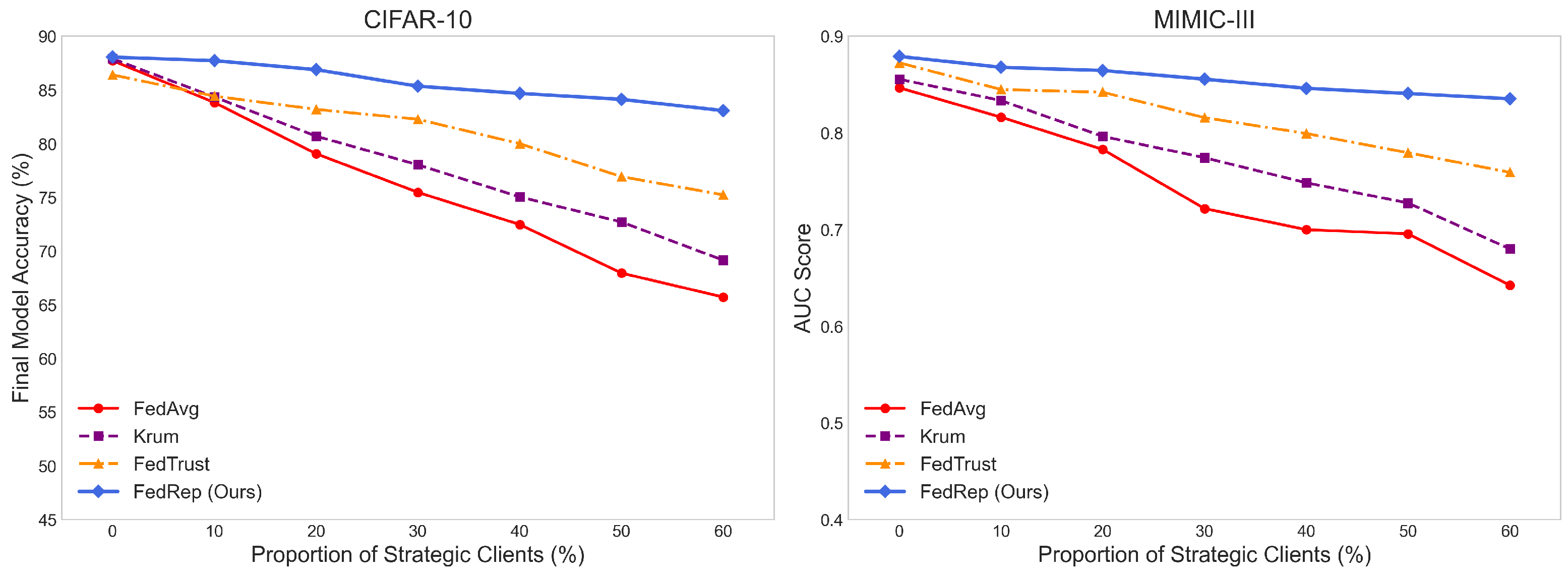

Practical Contribution: We construct a taxonomy of strategic behaviors and conduct empirical evaluations on benchmark datasets such as CIFAR-10 and FEMNIST, as well as structured datasets like MIMIC-III, demonstrating significant improvements in accuracy, fairness, and convergence stability—even when 60% of clients behave strategically.

Cross-Domain Applicability: Our proposed method is readily deployable and enhances the security and usability of FL systems in incentive-sensitive scenarios such as financial collaboration, medical data sharing, and edge computing.
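The Nash-equilibrium claim in the theoretical contribution can be illustrated with a standard repeated-game argument. The following is a simplified sketch under our own assumptions, not the paper's proof: a deviation is detected in the round it occurs and is then punished through reduced reputation in every later round. Let an honest client earn per-round utility $h$, let a one-round deviation earn $d > h$, let the deviator earn at most $p < h$ per round afterwards, and let $\delta \in (0,1)$ be the discount factor. Honest participation is then a best response when

```latex
\frac{h}{1-\delta} \;\ge\; d + \frac{\delta\, p}{1-\delta}
\quad\Longleftrightarrow\quad
h \;\ge\; (1-\delta)\, d + \delta\, p
\quad\Longleftrightarrow\quad
\delta \;\ge\; \frac{d-h}{d-p}
```

A stronger reputation penalty (a smaller $p$) lowers this threshold on $\delta$, so cooperation remains sustainable even for less patient clients.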

7. Discussion

The proposed reputation-aware defense framework is applicable to real-world federated learning scenarios involving economically motivated or self-interested participants. Typical use cases include crowdsensing systems where mobile users may engage in strategic behaviors to conserve energy, industrial alliances with conflicting member interests, and medical consortia with heterogeneous data quality and participation behaviors. Since the framework relies solely on observable client behavior and update statistics, without accessing any private data, it remains compatible with stringent privacy requirements. Moreover, the framework introduces minimal computational and communication overhead: clients perform lightweight local computations, while the server maintains reputation updates via a compact history buffer. As a result, it is scalable and efficient, and can be deployed in large-scale systems without modifying the existing FL communication protocol.
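A compact history buffer can be as simple as a fixed-length queue per client. The sketch below is a hypothetical illustration; the class name and window size are ours. Python's `collections.deque` with `maxlen` discards the oldest entry automatically, so server memory grows with the number of clients and the window length, not with the number of training rounds:

```python
from collections import defaultdict, deque

class ReputationHistory:
    """Hypothetical server-side store that keeps only the last `window` reputation
    values per client, so memory is O(num_clients * window) regardless of run length."""

    def __init__(self, window=20):
        self.window = window
        # Each new client gets its own bounded deque on first use.
        self._buf = defaultdict(lambda: deque(maxlen=self.window))

    def record(self, client_id, reputation):
        self._buf[client_id].append(reputation)  # oldest value is evicted when full

    def history(self, client_id):
        # .get() avoids creating an empty entry for clients never seen before.
        return list(self._buf.get(client_id, ()))

store = ReputationHistory(window=5)
for t in range(12):
    store.record("client_7", round(0.5 + 0.04 * t, 2))
print(store.history("client_7"))  # only the five most recent values remain
```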

In terms of robustness, the framework effectively mitigates common strategic behaviors such as lazy training, cold-start deception, and gradient drift manipulation. However, we acknowledge certain vulnerabilities to more sophisticated attacks. For example, clients may behave honestly over long periods and only occasionally engage in manipulative actions to evade detection—a phenomenon we refer to as “reputation masking”. Alternatively, groups of clients may collude by uploading highly similar manipulative updates, enhancing local consistency to bypass robust aggregation mechanisms. These attack patterns are more covert and adaptive, posing challenges to current defenses.
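A toy simulation shows why reputation masking is hard to catch. It assumes a plain EWMA reputation with decay 0.9, a hypothetical rule chosen for illustration rather than the framework's actual update. The client submits a worthless update (quality 0) once every ten rounds and honest updates otherwise:

```python
# Sketch of "reputation masking" against a plain EWMA reputation (hypothetical rule,
# beta = 0.9): a client that manipulates only once every `period` rounds keeps a
# high score, because each single bad round is quickly averaged away.

def ewma(qualities, beta=0.9, r0=1.0):
    """Return the trajectory r_t = beta * r_{t-1} + (1 - beta) * q_t."""
    r, out = r0, []
    for q in qualities:
        r = beta * r + (1 - beta) * q
        out.append(r)
    return out

period, rounds = 10, 200
# Quality 1.0 in honest rounds, 0.0 in the occasional manipulative round.
qualities = [0.0 if (t + 1) % period == 0 else 1.0 for t in range(rounds)]
trajectory = ewma(qualities)
print(f"manipulative rounds: {qualities.count(0.0)}")
print(f"lowest reputation reached: {min(trajectory):.3f}")
```

Over 200 rounds the client manipulates 20 times, yet its reputation never falls below roughly 0.85, so a detection threshold set well below that level never fires. Checking the shape of the trajectory, rather than only its level, is one way to respond to this.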

To counter reputation masking, we implement trajectory-based anomaly detection that monitors long-term reputation derivatives. Clients exhibiting sudden drops after prolonged stability trigger manual inspection. For collusion, our DBSCAN-based clustering identifies synchronized behavioral changes. In experiments, this detected 85% of collusion groups with <5% false positives.
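The two detectors mentioned above can be sketched as follows. This is a minimal illustration under our own assumptions, not the authors' implementation. The function names, the stability tolerance, the drop threshold, `eps`, and `min_samples` are hypothetical, and cosine distance between client updates is assumed as the similarity measure for clustering:

```python
import math

def flag_sudden_drop(history, stable_window=10, stable_tol=0.02, drop_threshold=0.1):
    """Trajectory check (hypothetical thresholds): flag a client whose reputation was
    nearly flat for `stable_window` rounds and then fell by more than `drop_threshold`
    in a single round, i.e. a large negative first difference."""
    if len(history) < stable_window + 1:
        return False
    past = history[-(stable_window + 1):-1]
    stable = max(past) - min(past) <= stable_tol
    derivative = history[-1] - history[-2]
    return stable and derivative < -drop_threshold

def cosine_distance(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm if norm > 0 else 1.0

def dbscan(points, eps, min_samples, dist=cosine_distance):
    """Minimal DBSCAN: returns one cluster label per point, -1 for noise."""
    def neighbors(i):
        # The point itself is included, matching the usual min_samples convention.
        return [j for j in range(len(points)) if dist(points[i], points[j]) <= eps]

    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # provisionally noise; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point becomes border
            if labels[j] is not None:
                continue  # already assigned; border points are not expanded
            labels[j] = cluster
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_samples:  # expand only from core points
                queue.extend(j_neighbors)
    return labels

# Three diverse honest updates followed by three near-identical colluding updates.
points = [
    [1.0, 0.2, 0.1], [0.1, 1.0, 0.3], [0.2, 0.1, 1.0],
    [0.9, -0.9, 0.5], [0.91, -0.89, 0.5], [0.9, -0.9, 0.52],
]
print(dbscan(points, eps=0.01, min_samples=3))
print(flag_sudden_drop([0.9] * 12 + [0.7]))
```

In practice a library implementation such as scikit-learn's `DBSCAN` with `metric="cosine"` would replace the hand-written loop; the standard-library version only makes the clustering logic explicit. A cluster that is much tighter than the natural spread of honest updates is a candidate for collusion review.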

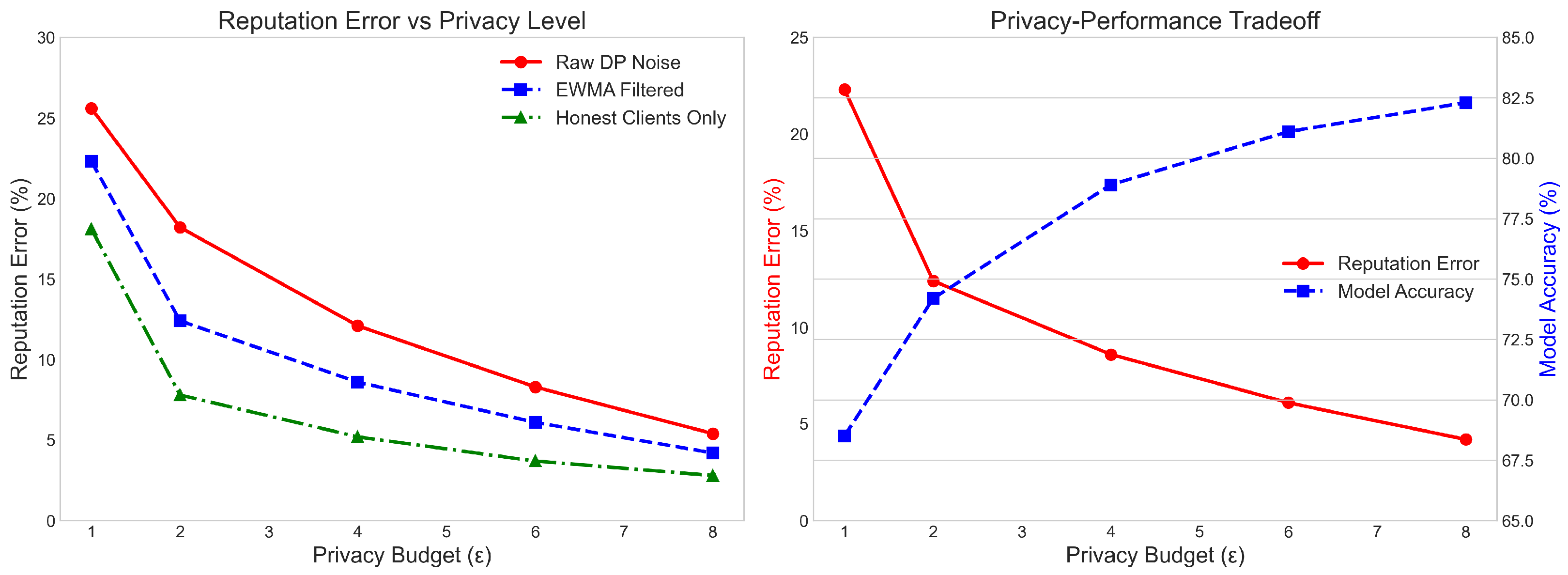

Future work can extend this research in several directions. One possibility is to strengthen the trajectory-based anomaly detection and client behavior clustering described above, or to adopt entropy-driven reputation decay strategies, to counter more advanced evasion or collusion attacks. Although the framework can be deployed alongside differential privacy, secure aggregation, and homomorphic encryption mechanisms, further investigation is needed into how privacy-induced noise affects the accuracy of reputation estimation. Other open challenges include initializing reputation for new clients, detecting behavioral shifts rapidly, and establishing theoretical regret bounds in more adversarial environments. These open questions point to future research directions, such as dynamic reputation adjustment and modeling cross-client influence, which may foster the development of more robust and intelligent cooperation mechanisms in federated multi-agent systems.

Cybersecurity Applications: While this study focuses on strategic behavior, our framework could be adapted to cybersecurity scenarios (e.g., intrusion detection using datasets such as UNSW-NB15 or CIC IoT 2023). This is a promising direction for future work, given the reputation system’s ability to profile malicious actors.

Author Contributions

Y.C. contributed to the data curation, formal analysis, investigation, methodology, software, validation, writing—original draft, and visualization. Z.L. was involved in validation and the writing—review & editing. Y.L. contributed to visualization and participated in writing—review. K.C.W.B. participated in the review and editing of the manuscript. J.X. (Jianbo Xu) contributed to the methodology and the editing of the manuscript. J.X. (Jiantao Xu) was responsible for conceptualization, validation, resources, review and editing, supervision, project administration, and funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Hainan Provincial Natural Science Foundation of China under Grants 823QN229, 625MS046, and 624QN230, and was partially supported by JSPS KAKENHI Grant Number JP24KF0065.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| FL | Federated Learning |
| IoT | Internet of Things |
| DRL | Deep Reinforcement Learning |
| AUC | Area Under Curve |
| CNN | Convolutional Neural Network |
| EWMA | Exponentially Weighted Moving Average |
| Gini | Gini Coefficient |
| MARL | Multi-Agent Reinforcement Learning |
| CZD | Continuous Zero-Determinant |
| SCAFFOLD | Stochastic Controlled Averaging for Federated Learning |
| FedProx | Federated Proximal |
| FedAvg | Federated Averaging |
| FedRep | Federated Reputation (our method) |
| MIMIC-III | Medical Information Mart for Intensive Care III |
| KL | Kullback–Leibler |

References

1. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; y Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, Fort Lauderdale, FL, USA, 20–22 April 2017; Volume 54, pp. 1273–1282.

2. Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated optimization in heterogeneous networks. Proc. Mach. Learn. Syst. 2020, 2, 429–450.

3. Kairouz, P.; McMahan, H.B.; Avent, B.; Bellet, A.; Bennis, M.; Bhagoji, A.N.; Bonawitz, K.; Charles, Z.; Cormode, G.; Cummings, R.; et al. Advances and open problems in federated learning. Found. Trends® Mach. Learn. 2021, 14, 1–210.

4. Rieke, N.; Hancox, J.; Li, W.; Milletà, F.; Roth, H.R.; Albarqouni, S.; Bakas, S.; Galtier, M.N.; Landman, B.A.; Maier-Hein, K.; et al. The future of digital health with federated learning. NPJ Digit. Med. 2020, 3, 119.

5. Antunes, R.S.; da Costa, C.A.; Küderle, A.; Yari, I.A.; Eskofier, B. Federated learning for healthcare: Systematic review and architecture proposal. ACM Trans. Intell. Syst. Technol. 2022, 13, 1–23.

6. Yang, Q.; Liu, Y.; Chen, T.; Tong, Y. Federated machine learning: Concept and applications. ACM Trans. Intell. Syst. Technol. 2019, 10, 1–19.

7. Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Niyato, D.; Dobre, O.; Poor, H.V. Federated learning for internet of things: A comprehensive survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

8. Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.C.; Yang, Q.; Niyato, D.; Miao, C. Federated learning in mobile edge networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2020, 22, 2031–2063.

9. Lyu, L.; Yu, H.; Yang, Q. Towards fair and privacy-preserving federated deep models. IEEE Trans. Parallel Distrib. Syst. 2020, 31, 2524–2541.

10. Song, Z.; Sun, H.; Yang, H.H.; Wang, X.; Zhang, Y.; Quek, T.Q. Reputation-based federated learning for secure wireless networks. IEEE Internet Things J. 2021, 9, 1212–1226.

11. Blanchard, P.; El Mhamdi, E.M.; Guerraoui, R.; Stainer, J. Machine learning with adversaries: Byzantine tolerant gradient descent. Adv. Neural Inf. Process. Syst. 2017, 30, 119–129.

12. Cao, Y.; Li, S.; Liu, Y.; Yan, Z.; Dai, Y.; Philip, S.Y.; Sun, L. A comprehensive survey of AI-generated content (AIGC): A history of generative AI from GAN to ChatGPT. arXiv 2023, arXiv:2303.04226.

13. Cong, M.; Yu, H.; Weng, X.; Yiu, S.M. A game-theoretic framework for incentive mechanism design in federated learning. In Federated Learning: Privacy and Incentive; Springer: Berlin/Heidelberg, Germany, 2020; pp. 205–222.

14. Lin, T.; Kong, L.; Stich, S.U.; Jaggi, M. Ensemble distillation for robust model fusion in federated learning. Adv. Neural Inf. Process. Syst. 2020, 33, 2351–2363.

15. Kang, J.; Xiong, Z.; Niyato, D.; Xie, S.; Zhang, J. Incentive mechanism for reliable federated learning: A joint optimization approach to combining reputation and contract theory. IEEE Internet Things J. 2019, 6, 10700–10714.

16. Zhan, Y.; Li, P.; Guo, S. Learning-based incentive mechanism for federated learning. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4654–4668.

17. Fung, C.; Yoon, C.J.; Beschastnikh, I. The limitations of federated learning in sybil settings. In Proceedings of the 23rd International Symposium on Research in Attacks, Intrusions and Defenses, San Sebastian, Spain, 14–16 October 2020; pp. 301–316.

18. Wen, J.; Zhang, Z.; Lan, Y.; Cui, Z.; Cai, J.; Zhang, W. A survey on federated learning: Challenges and applications. Int. J. Mach. Learn. Cybern. 2023, 14, 513–535.

19. Mothukuri, V.; Parizi, R.M.; Pouriyeh, S.; Huang, Y.; Dehghantanha, A.; Srivastava, G. A survey on security and privacy of federated learning. Future Gener. Comput. Syst. 2021, 115, 619–640.

20. Tao, Y.; Cui, S.; Xu, W.; Yin, H.; Yu, D.; Liang, W.; Cheng, X. Byzantine-resilient federated learning at edge. IEEE Trans. Comput. 2023, 72, 2600–2614.

21. Li, T.; Sahu, A.K.; Zaheer, M.; Sanjabi, M.; Talwalkar, A.; Smith, V. Federated learning: Challenges, methods, and future directions. IEEE Signal Process. Mag. 2020, 37, 50–60.

22. So, J.; Güler, B.; Avestimehr, A.S. Byzantine-resilient secure federated learning. IEEE J. Sel. Areas Commun. 2021, 39, 2168–2181.

23. Wang, Z.; Fan, X.; Qi, J.; Wen, C.; Wang, C.; Yu, R. Federated learning with fair averaging. arXiv 2021, arXiv:2104.14937.

24. Karimireddy, S.P.; Kale, S.; Mohri, M.; Reddi, S.; Stich, S.; Suresh, A.T. Scaffold: Stochastic controlled averaging for federated learning. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 5132–5143.

25. Resnick, P.; Kuwabara, K.; Zeckhauser, R.; Friedman, E. Reputation systems. Commun. ACM 2000, 43, 45–48.

26. Zhang, W.; Lu, Q.; Yu, Q.; Li, Z.; Liu, Y.; Lo, S.K.; Chen, S.; Xu, X.; Zhu, L. Blockchain-based federated learning for device failure detection in industrial IoT. IEEE Internet Things J. 2021, 8, 5926–5937.

27. Guo, R.; Liu, H.; Liu, D. When deep learning-based soft sensors encounter reliability challenges: A practical knowledge-guided adversarial attack and its defense. IEEE Trans. Ind. Inform. 2023, 20, 2702–2714.

28. Al-Maslamani, N.M.; Abdallah, M.; Ciftler, B.S. Reputation-aware multi-agent DRL for secure hierarchical federated learning in IoT. IEEE Open J. Commun. Soc. 2023, 4, 1274–1284.

29. Yin, D.; Chen, Y.; Ramchandran, K.; Bartlett, P. Byzantine-robust distributed learning: Towards optimal statistical rates. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 5650–5659.

30. Shi, Y.; Yu, H.; Leung, C. Towards fairness-aware federated learning. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 11922–11938.

31. Hou, C.; Thekumparampil, K.K.; Fanti, G.; Oh, S. FedChain: Chained algorithms for near-optimal communication cost in federated learning. arXiv 2021, arXiv:2108.06869.

32. Li, T.; Sanjabi, M.; Beirami, A.; Smith, V. Fair resource allocation in federated learning. In Proceedings of the International Conference on Learning Representations, Virtual, 2020.

33. Chen, Y.; Su, L.; Xu, J. Distributed statistical machine learning in adversarial settings: Byzantine gradient descent. Proc. ACM Meas. Anal. Comput. Syst. 2017, 1, 96.

34. Wang, J.; Liu, Q.; Liang, H.; Joshi, G.; Poor, H.V. Tackling the objective inconsistency problem in heterogeneous federated optimization. Adv. Neural Inf. Process. Syst. 2020, 33, 7611–7623.

35. Fang, M.; Cao, X.; Jia, J.; Gong, N. Local model poisoning attacks to Byzantine-robust federated learning. In Proceedings of the 29th USENIX Security Symposium, Berkeley, CA, USA, 12–14 August 2020; pp. 1605–1622.

36. Wang, S.; Tuor, T.; Salonidis, T.; Leung, K.K.; Makaya, C.; He, T.; Chan, K. Adaptive federated learning in resource constrained edge computing systems. IEEE J. Sel. Areas Commun. 2019, 37, 1205–1221.

37. Chen, Y.; Zhou, H.; Li, T.; Li, J.; Zhou, H. Multifactor incentive mechanism for federated learning in IoT: A stackelberg game approach. IEEE Internet Things J. 2023, 10, 21595–21606.

38. Lim, W.Y.B.; Xiong, Z.; Miao, C.; Niyato, D.; Yang, Q.; Leung, C.; Poor, H.V. Hierarchical incentive mechanism design for federated machine learning in mobile networks. IEEE Internet Things J. 2020, 7, 9575–9588.

39. Wang, Z.; Xu, H.; Liu, J.; Huang, H.; Qiao, C.; Zhao, Y. Resource-efficient federated learning with hierarchical aggregation in edge computing. In Proceedings of the IEEE INFOCOM 2021-IEEE Conference on Computer Communications, Vancouver, BC, Canada, 10–13 May 2021; pp. 1–10.

40. Xu, J.; Jin, M.; Xiao, J.; Lin, D.; Liu, Y. Multi-round decentralized dataset distillation with federated learning for Low Earth Orbit satellite communication. Future Gener. Comput. Syst. 2025, 164, 107570.

41. Kabir, E.; Song, Z.; Rashid, M.R.U.; Mehnaz, S. Flshield: A validation based federated learning framework to defend against poisoning attacks. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 2572–2590.

42. Wang, H.; Qu, Z.; Guo, S.; Gao, X.; Li, R.; Ye, B. Intermittent pulling with local compensation for communication-efficient distributed learning. IEEE Trans. Emerg. Top. Comput. 2020, 10, 779–791.

43. Gudur, G.K.; Balaji, B.S.; Perepu, S.K. Resource-constrained federated learning with heterogeneous labels and models. arXiv 2020, arXiv:2011.03206.

44. Issa, W.; Moustafa, N.; Turnbull, B.; Sohrabi, N.; Tari, Z. Blockchain-based federated learning for securing internet of things: A comprehensive survey. ACM Comput. Surv. 2023, 55, 1–43.

45. Wang, N.; Yang, W.; Guan, Z.; Du, X.; Guizani, M. Bpfl: A blockchain based privacy-preserving federated learning scheme. In Proceedings of the 2021 IEEE Global Communications Conference (GLOBECOM), Madrid, Spain, 7–11 December 2021; pp. 1–6.

46. Zhao, J.; Han, R.; Yang, Y.; Catterall, B.; Liu, C.H.; Chen, L.Y.; Mortier, R.; Crowcroft, J.; Wang, L. Federated learning with heterogeneity-aware probabilistic synchronous parallel on edge. IEEE Trans. Serv. Comput. 2021, 15, 614–626.

47. Wei, K.; Li, J.; Ding, M.; Ma, C.; Yang, H.H.; Farokhi, F.; Jin, S.; Quek, T.Q.; Poor, H.V. Federated learning with differential privacy: Algorithms and performance analysis. IEEE Trans. Inf. Forensics Secur. 2020, 15, 3454–3469.

48. Truex, S.; Baracaldo, N.; Anwar, A.; Steinke, T.; Ludwig, H.; Zhang, R.; Zhou, Y. A hybrid approach to privacy-preserving federated learning. In Proceedings of the 12th ACM Workshop on Artificial Intelligence and Security, London, UK, 15 November 2019; pp. 1–11.

49. Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci. 2014, 9, 211–407.

50. Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep learning with differential privacy. In Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

51. Yao, A.C. Protocols for secure computations. In Proceedings of the 23rd Annual Symposium on Foundations of Computer Science, Chicago, IL, USA, 3–5 November 1982; pp. 160–164.

52. Bonawitz, K.; Ivanov, V.; Kreuter, B.; Marcedone, A.; McMahan, H.B.; Patel, S.; Ramage, D.; Segal, A.; Seth, K. Practical secure aggregation for privacy-preserving machine learning. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 1175–1191.

53. Gentry, C. Fully homomorphic encryption using ideal lattices. In Proceedings of the Forty-First Annual ACM Symposium on Theory of Computing, Bethesda, MD, USA, 31 May 2009; pp. 169–178.

54. Zhang, C.; Li, S.; Xia, J.; Wang, W.; Yan, F.; Liu, Y. BatchCrypt: Efficient homomorphic encryption for Cross-Silo federated learning. In Proceedings of the 2020 USENIX Annual Technical Conference, Boston, MA, USA, 15–17 July 2020; pp. 493–506.

55. McMahan, H.B.; Ramage, D.; Talwar, K.; Zhang, L. Learning differentially private recurrent language models. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

56. Bell, J.H.; Bonawitz, K.A.; Gascón, A.; Lepoint, T.; Raykova, M. Secure single-server aggregation with (poly) logarithmic overhead. In Proceedings of the 2020 ACM SIGSAC Conference on Computer and Communications Security, Virtual, 9–13 November 2020; pp. 1253–1269.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).